Change and chance

Nuffield Advanced Science Physics

Students' book and Teachers' guide

Unit 9

Change and chance

1972

Contents

- Nuffield Advanced Physics team and special advisers

- Foreword

- Introduction

- 1. One-way processes

- 2. The fuel resources of the Earth

- 3. Chance and diffusion

- The purpose of the discussion about chance and diffusion

- Diffusion and mixing as one-way processes

- A collection of many two-way processes can look one-way

- Large numbers and small numbers

- Chance is enough to work out what happens on average

- Counting numbers of ways

- Processes that happen inexorably, but quite by chance

- Summary

- 4. Thermal equilibrium, temperature, and chance

- Thermal equilibrium

- The zeroth law

- An analogy: getting on with someone

- Scales of temperature

- The large scale and the small scale

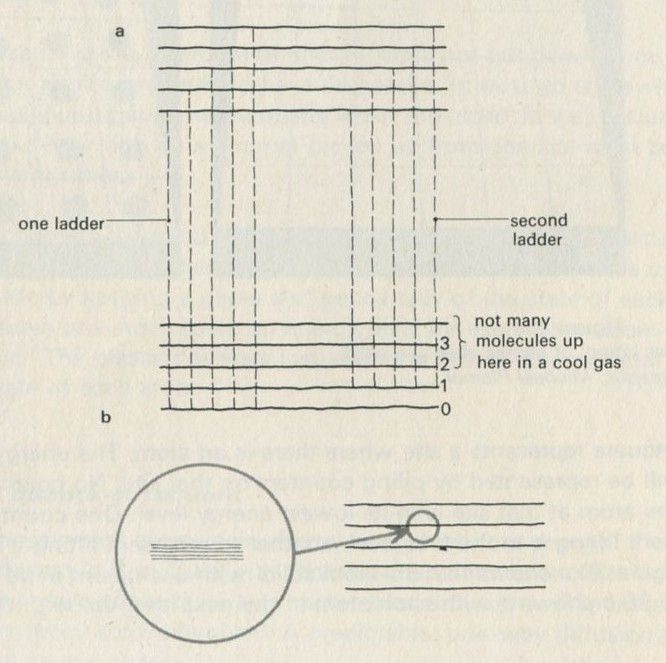

- A game of chess with quanta for chessmen

- What happens in the game?

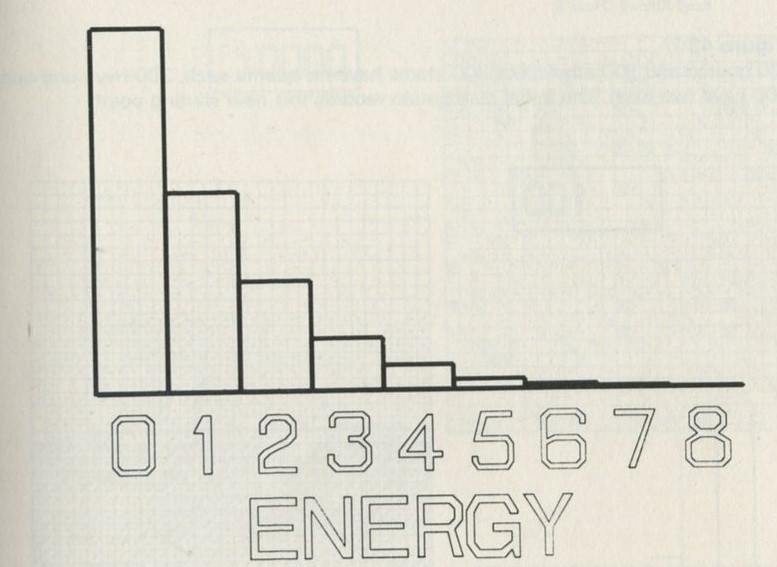

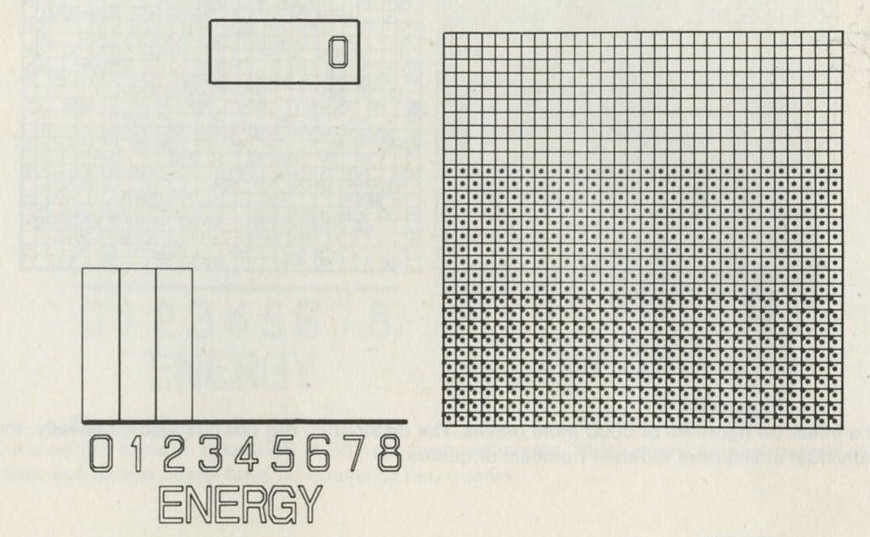

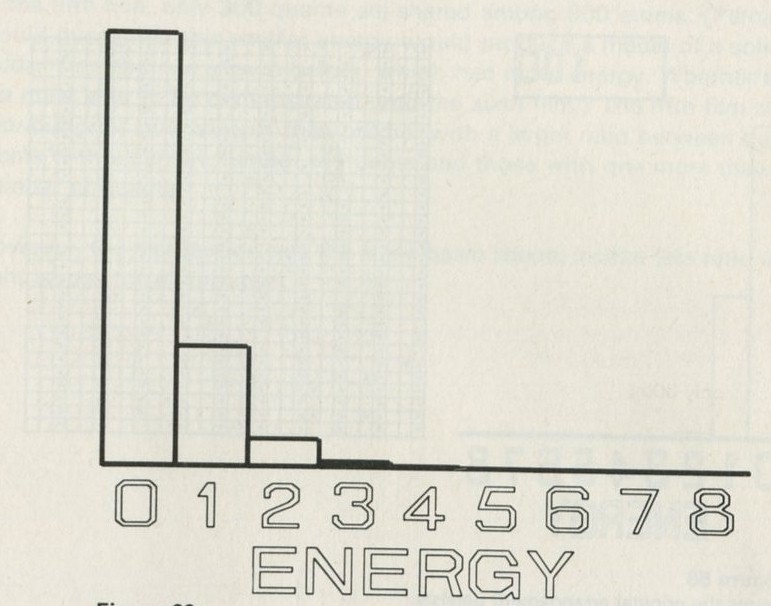

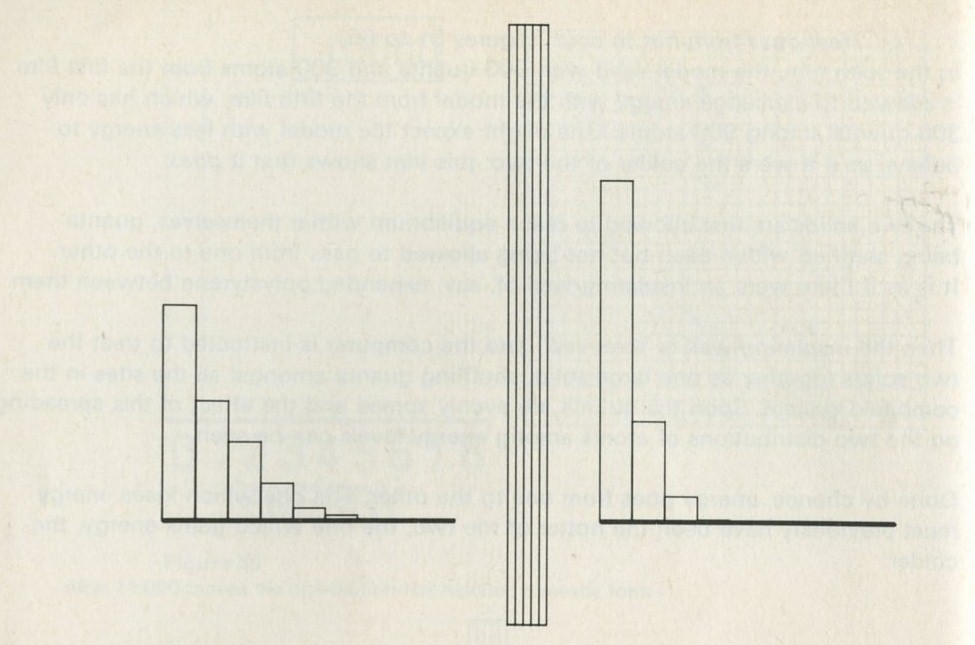

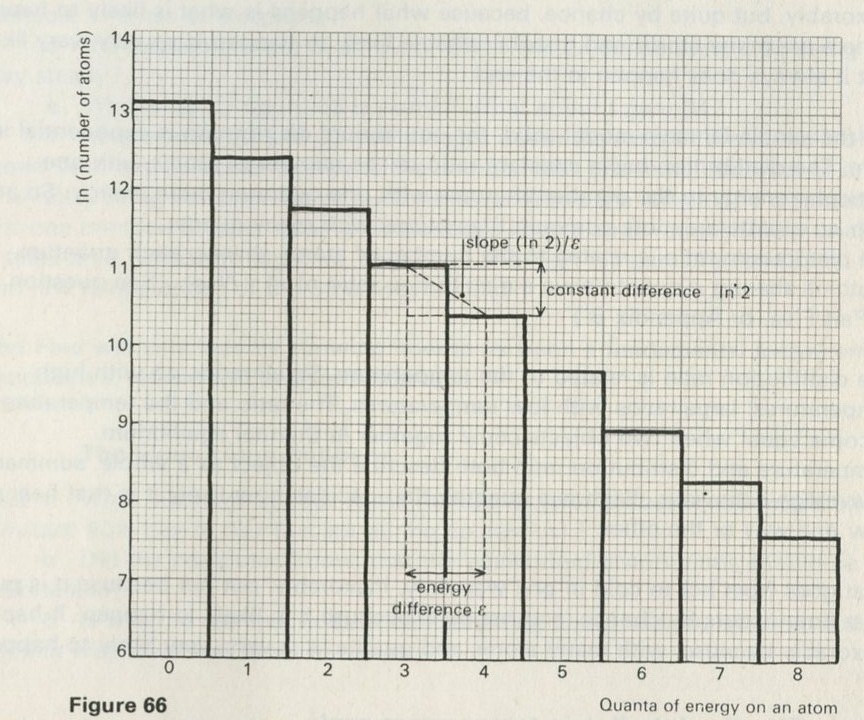

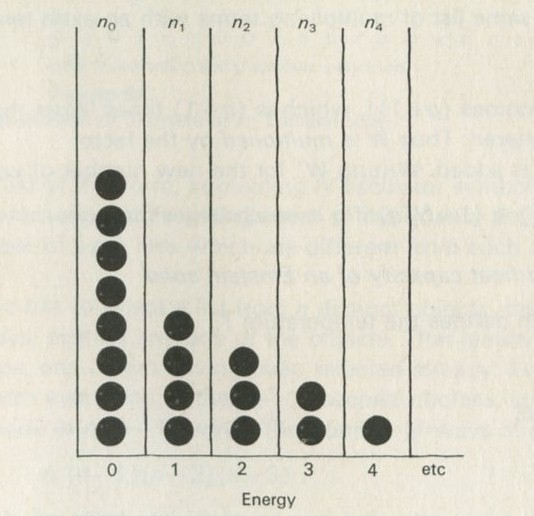

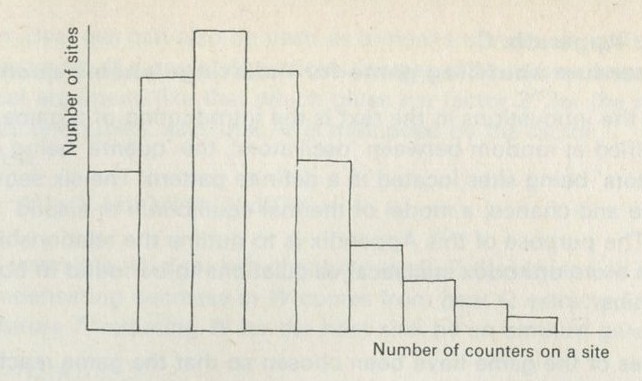

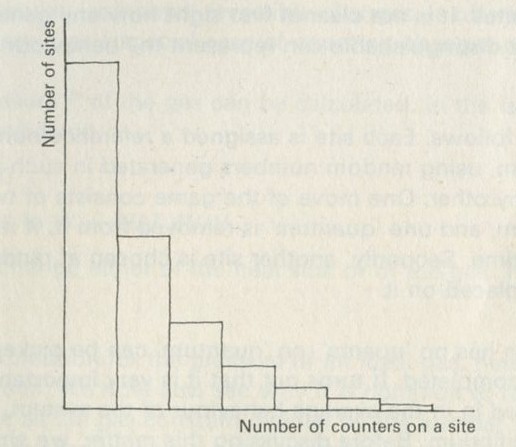

- The distribution of quanta among atoms

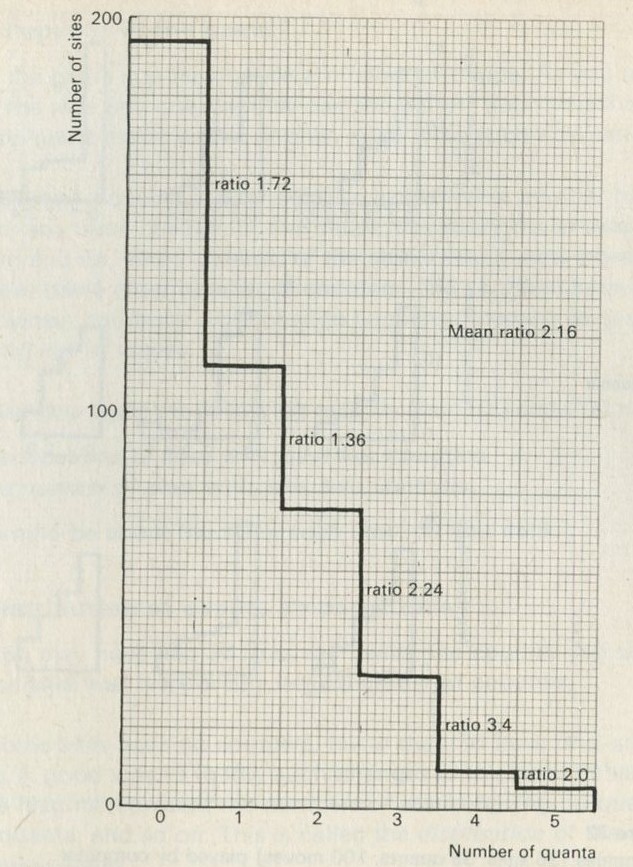

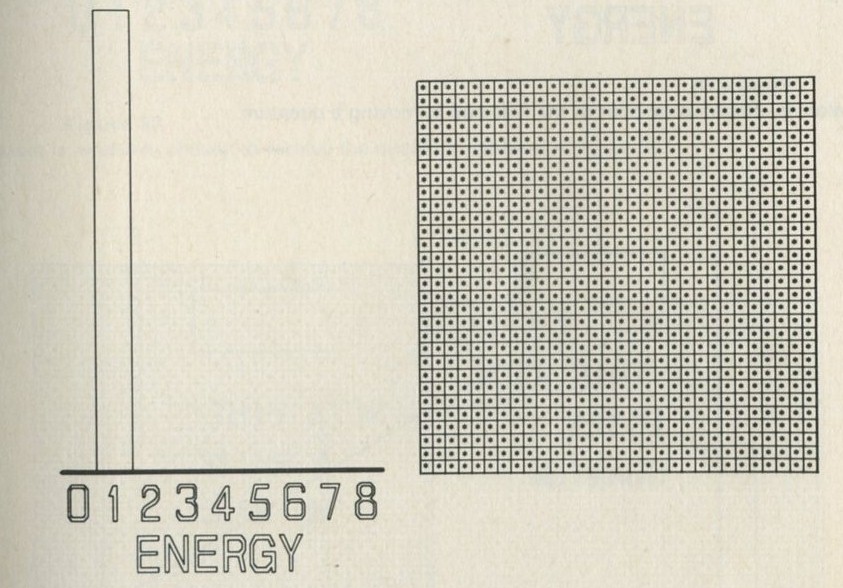

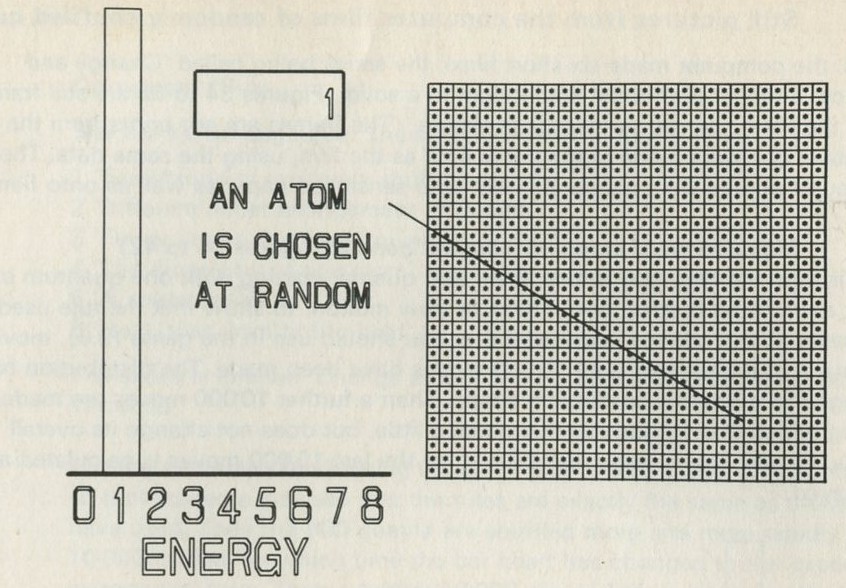

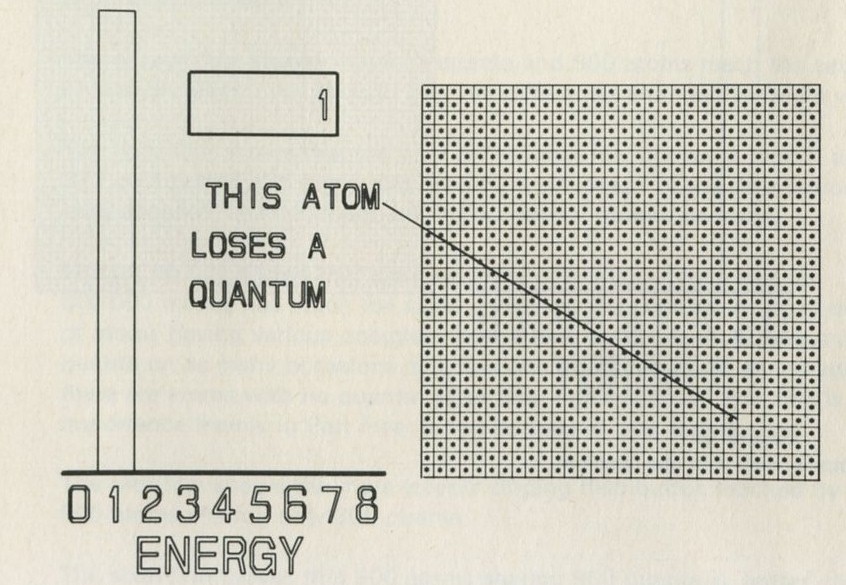

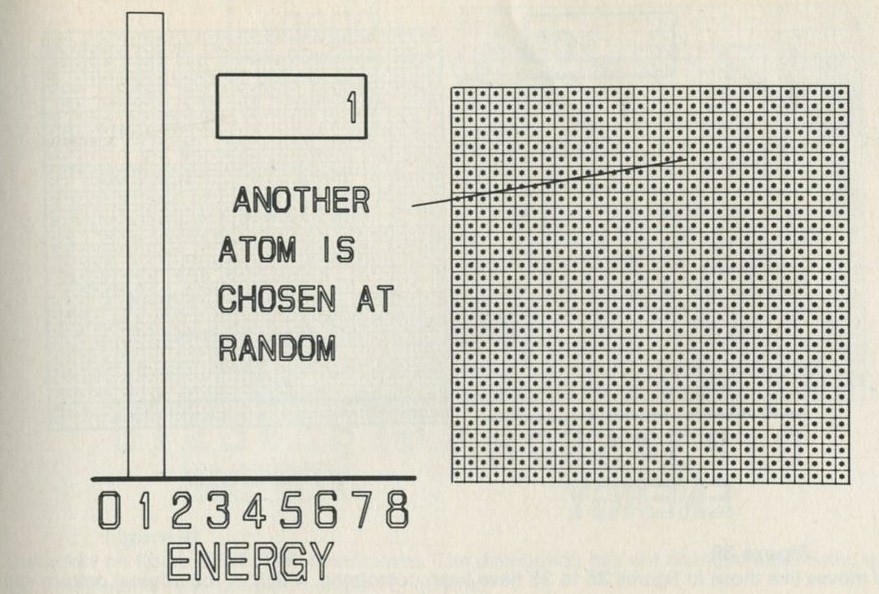

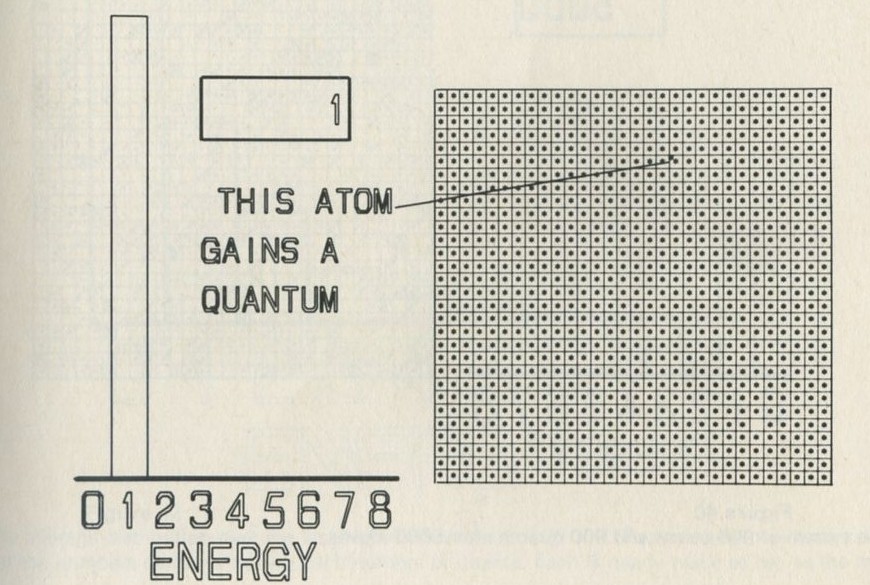

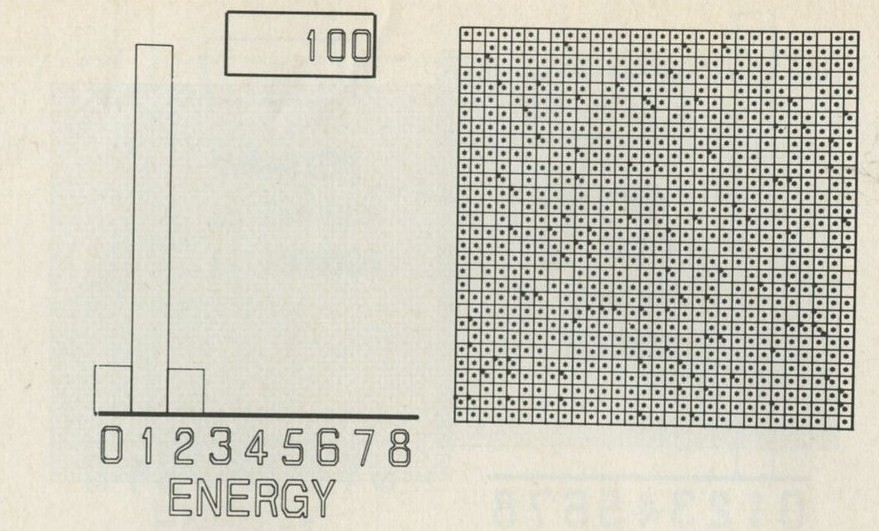

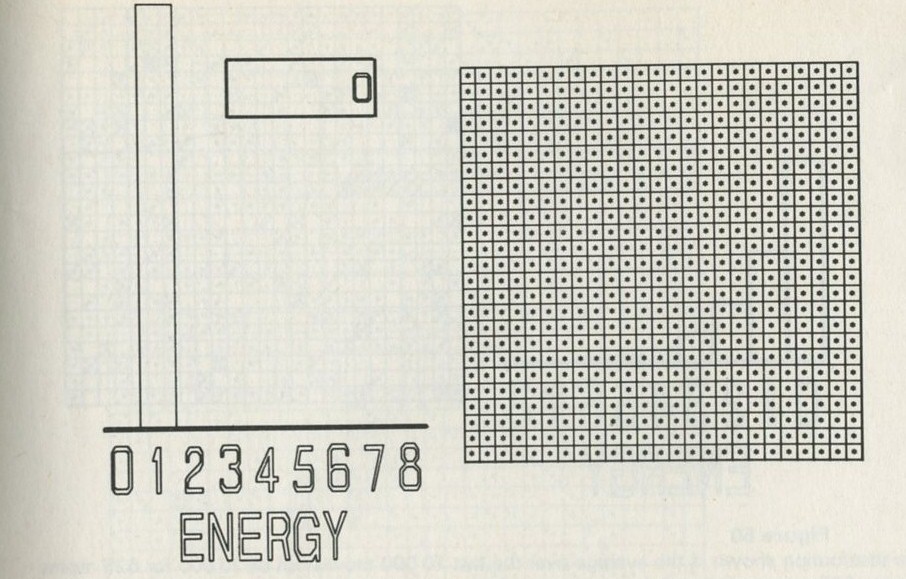

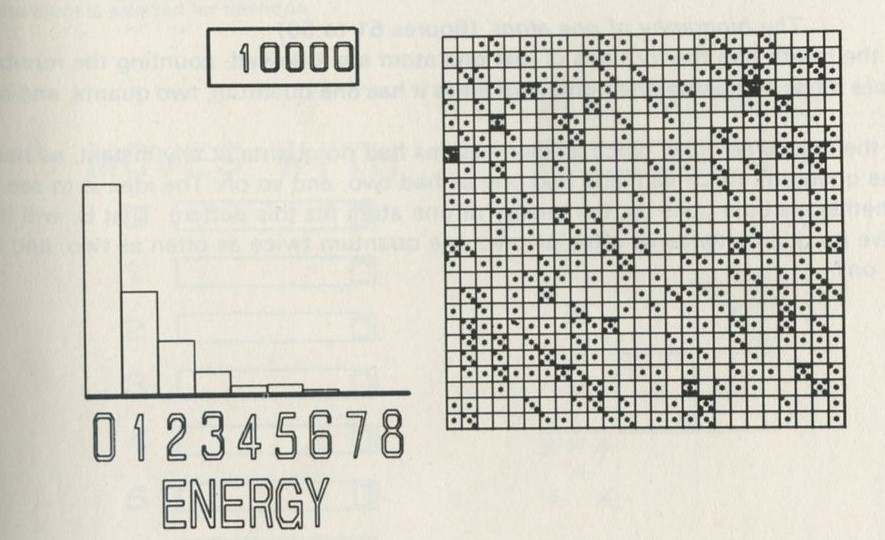

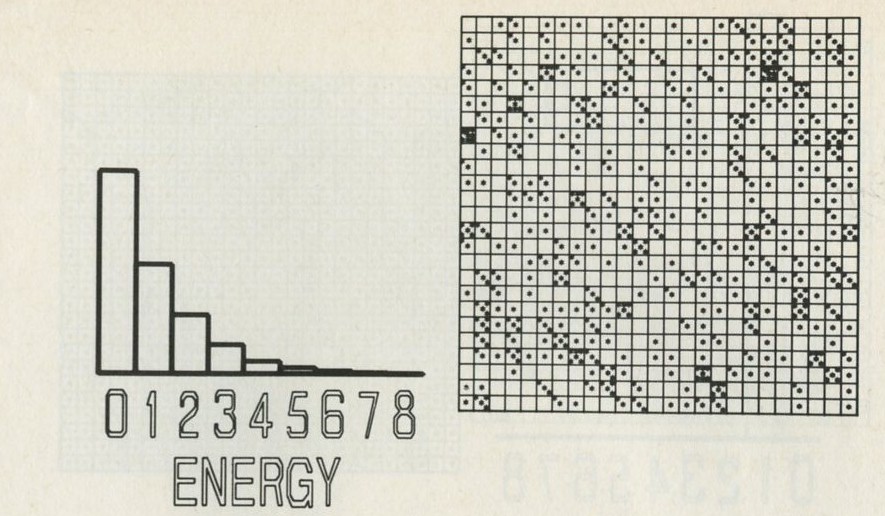

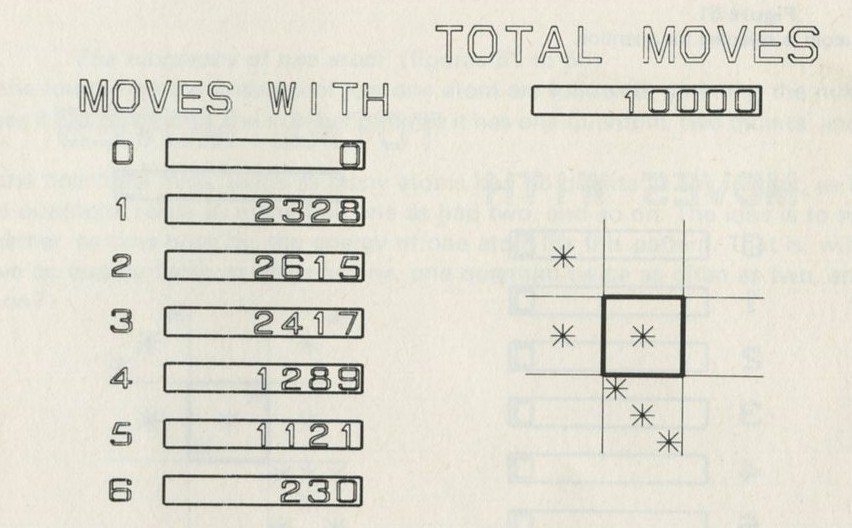

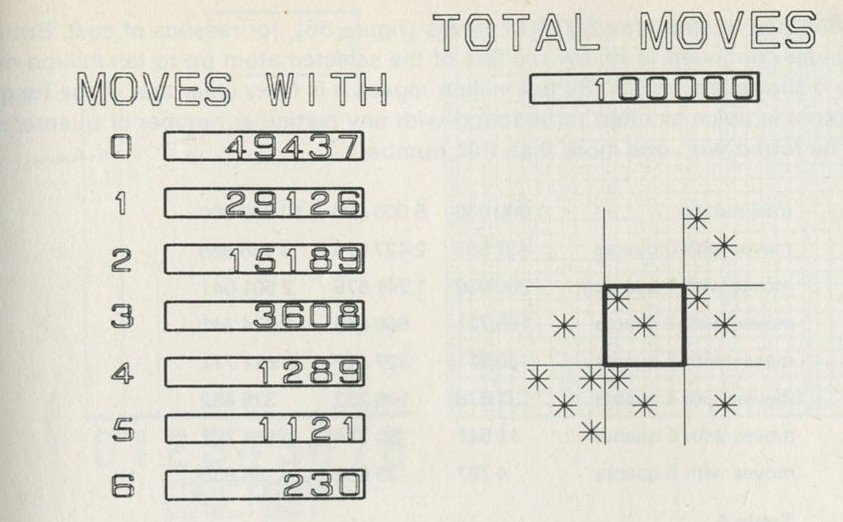

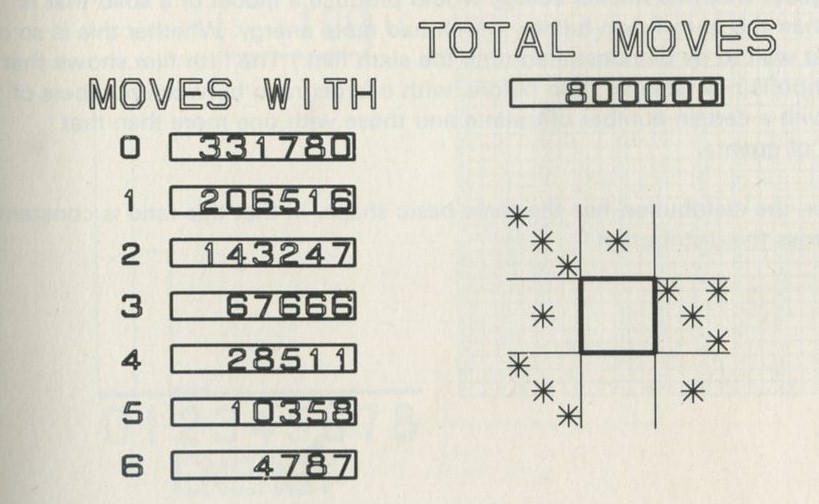

- The quantum shuffling game played with large numbers of atoms by a computer

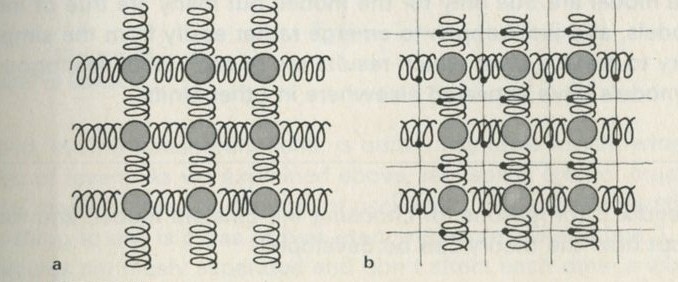

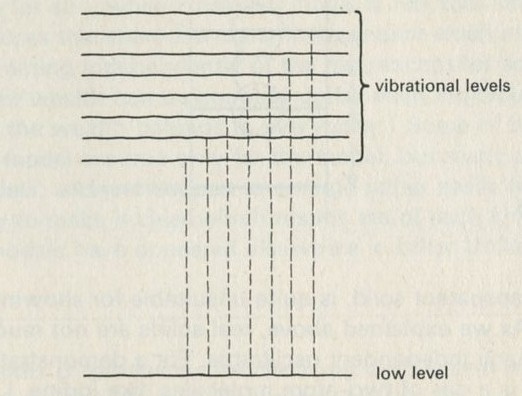

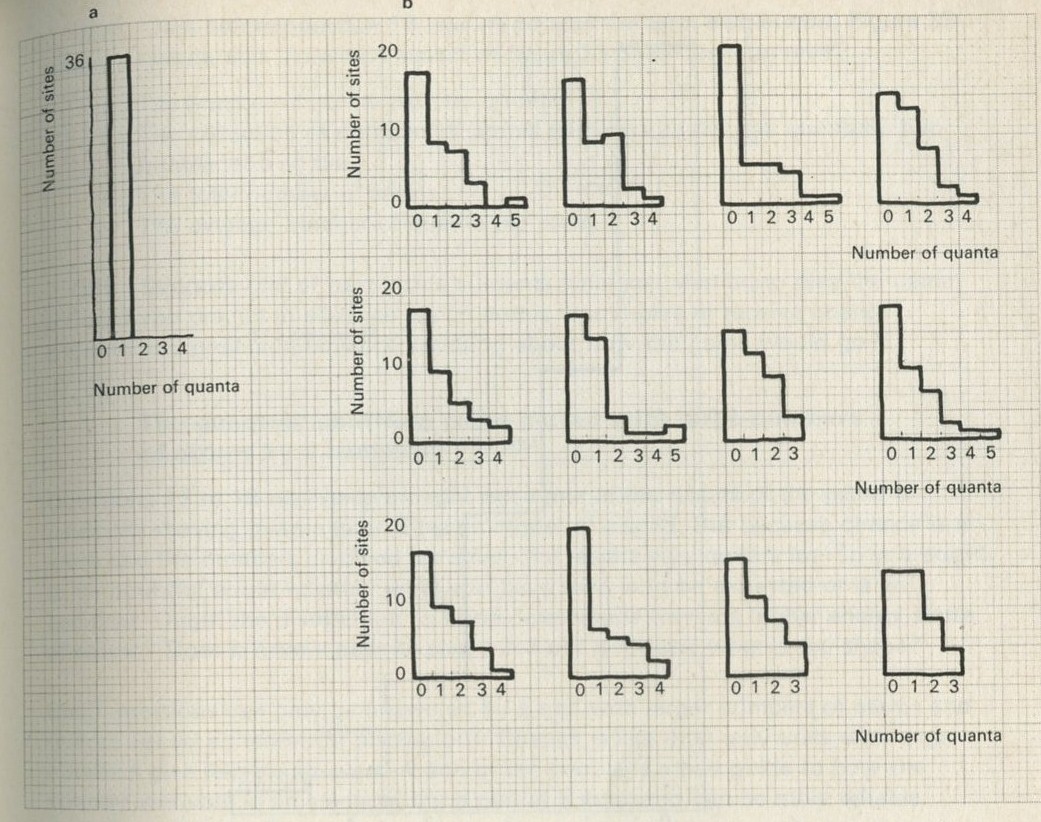

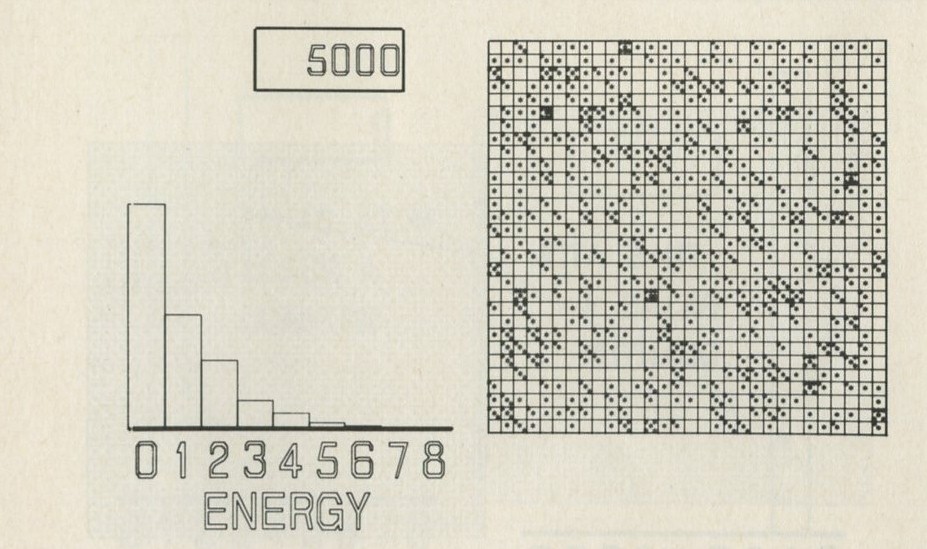

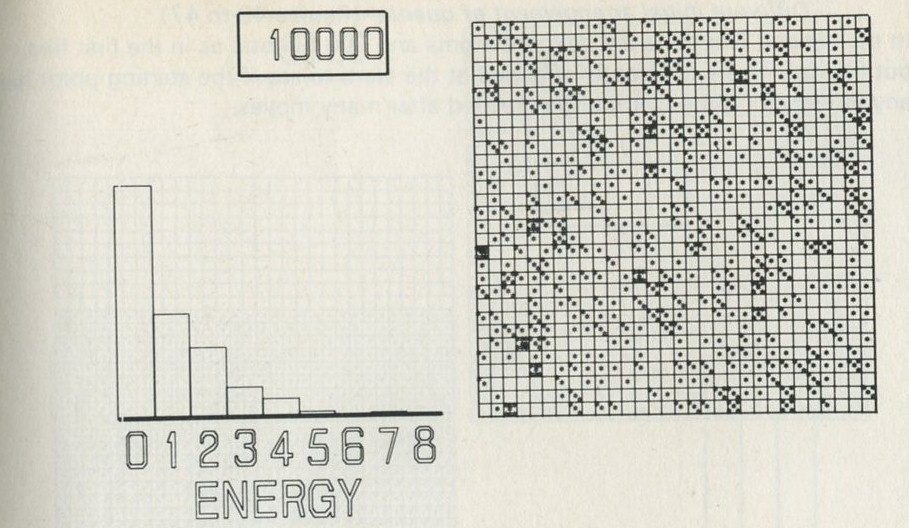

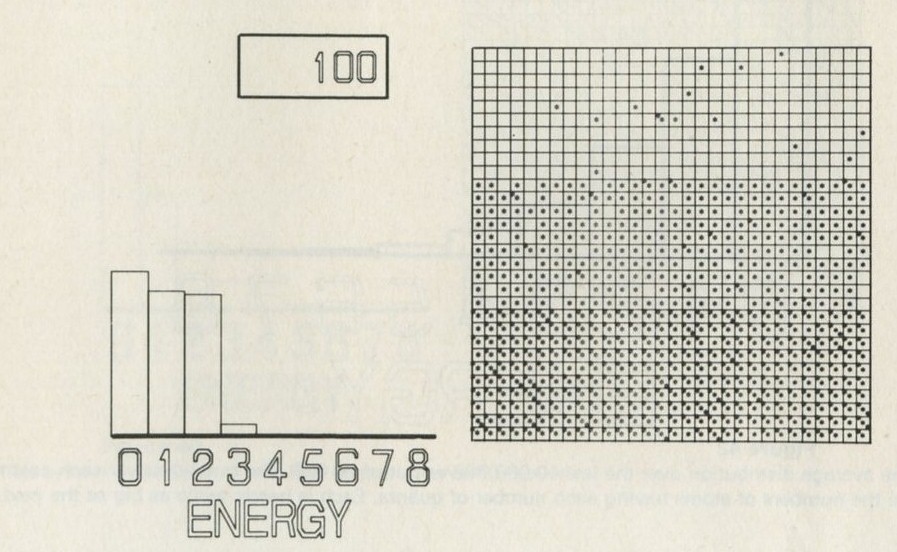

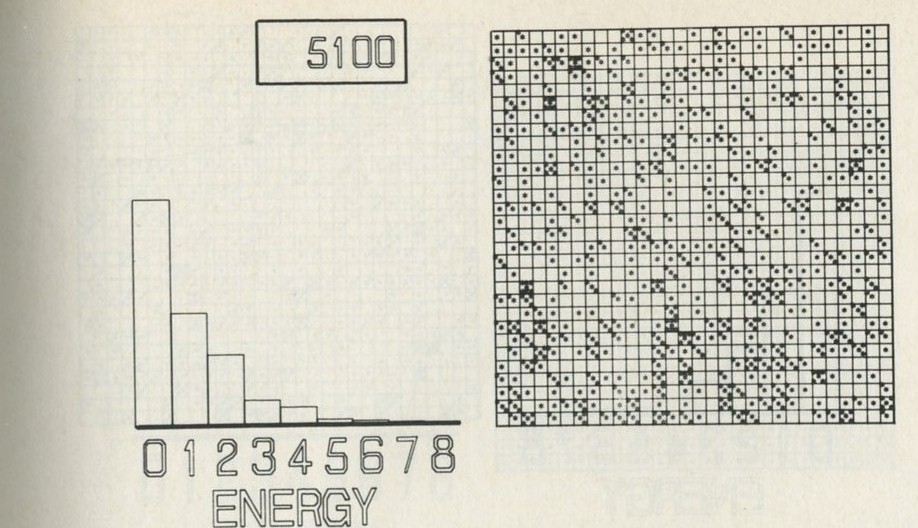

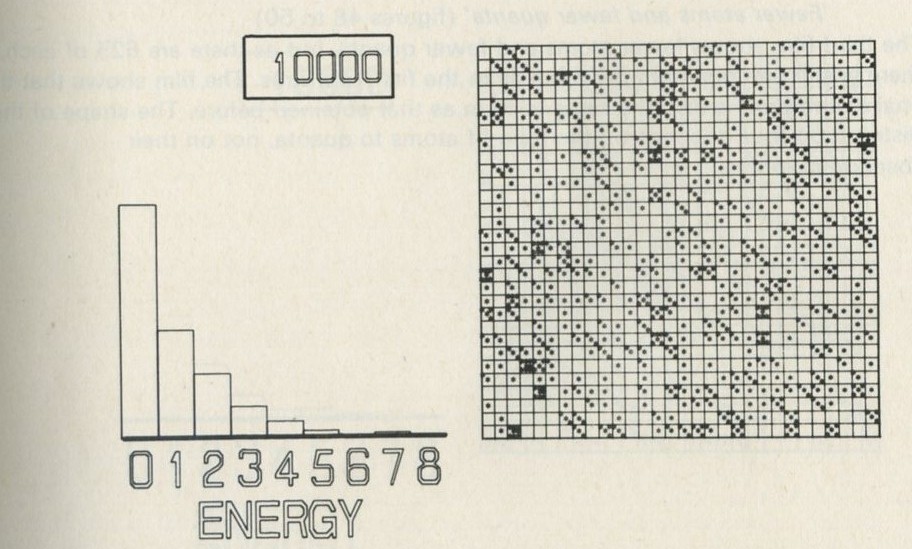

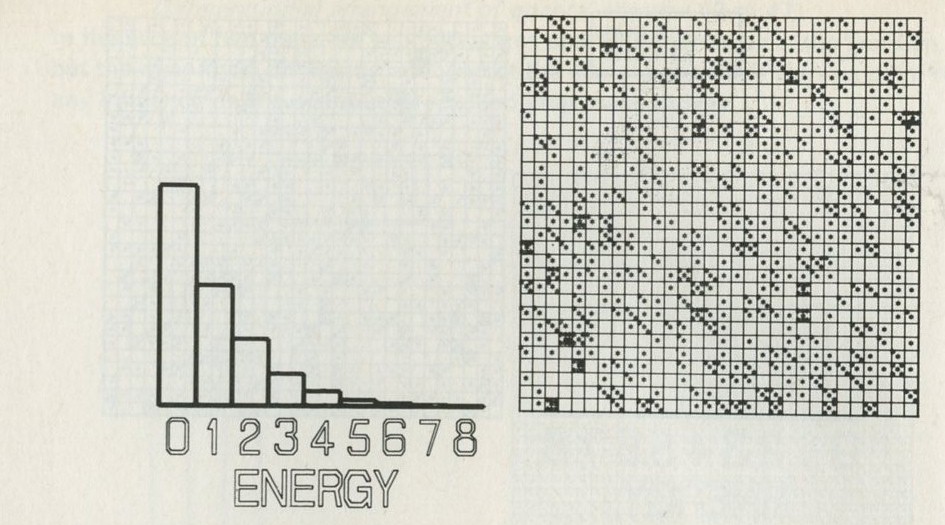

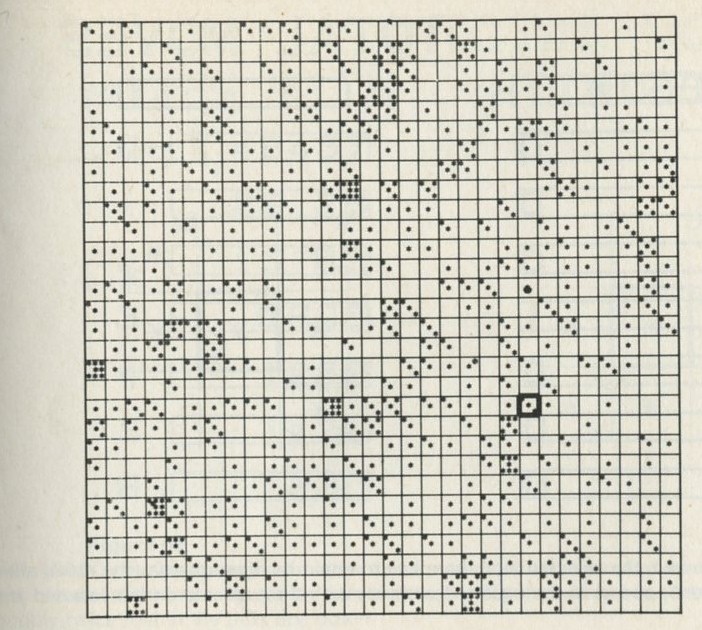

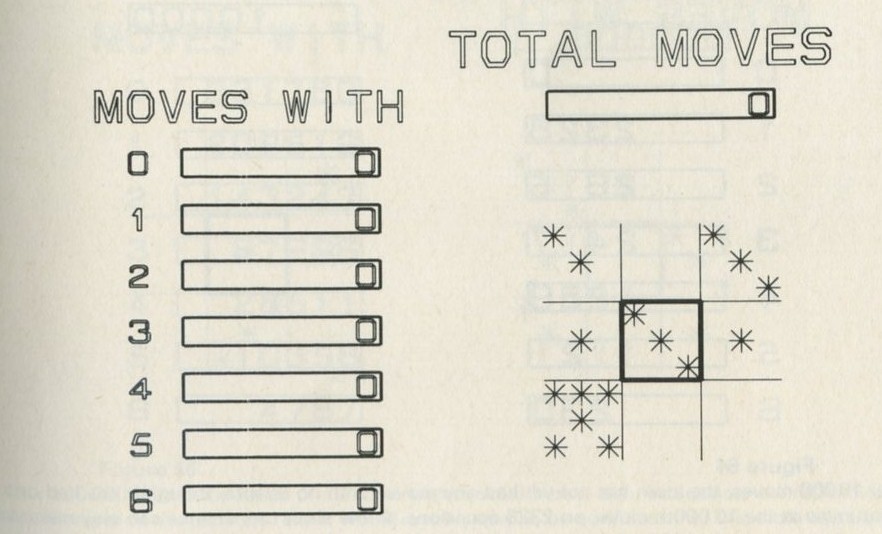

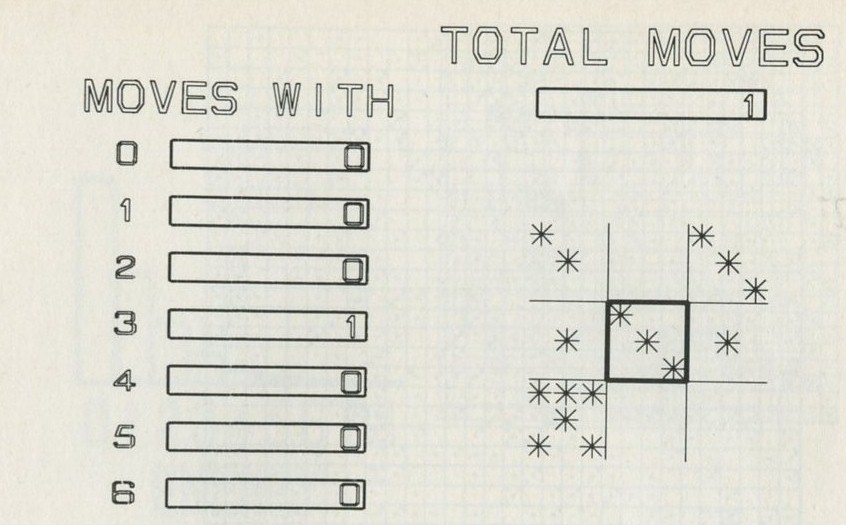

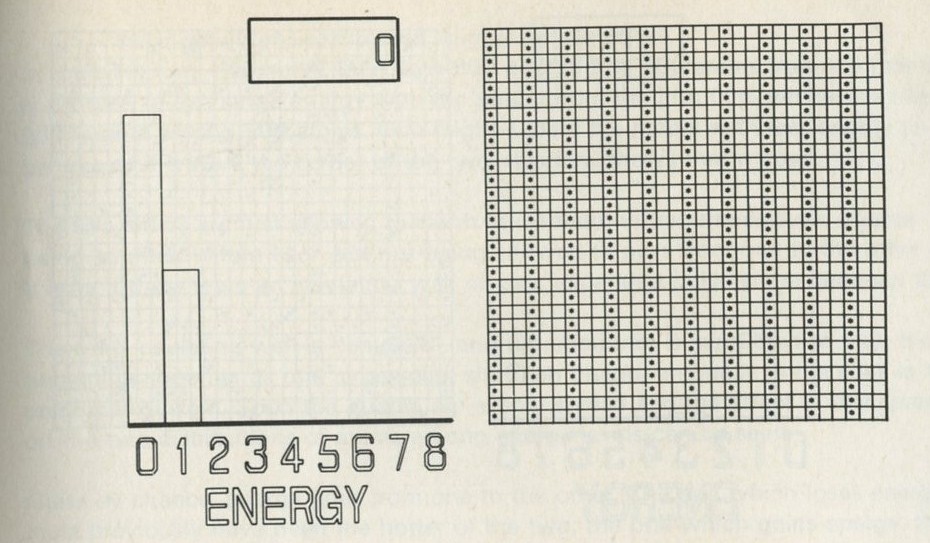

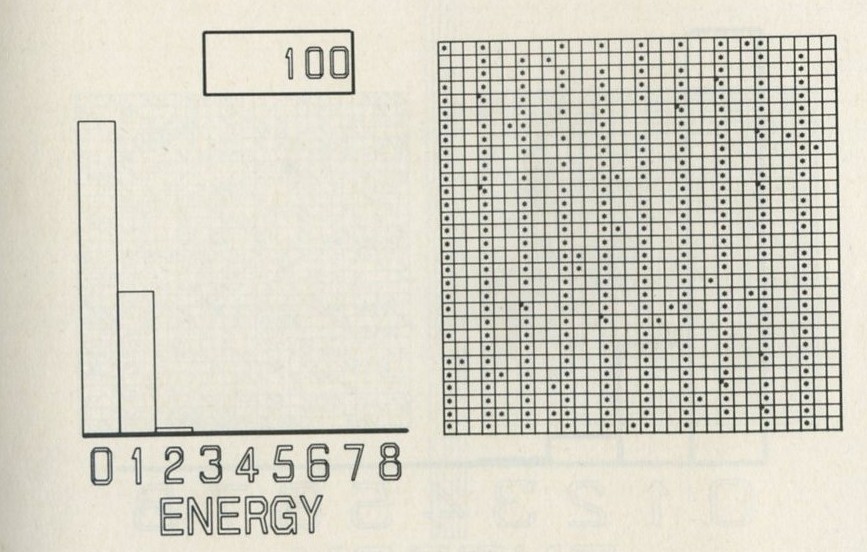

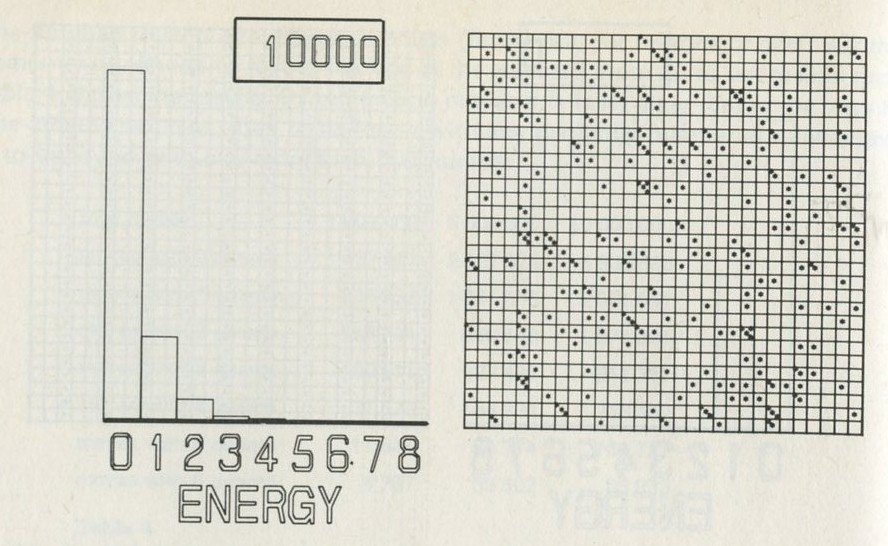

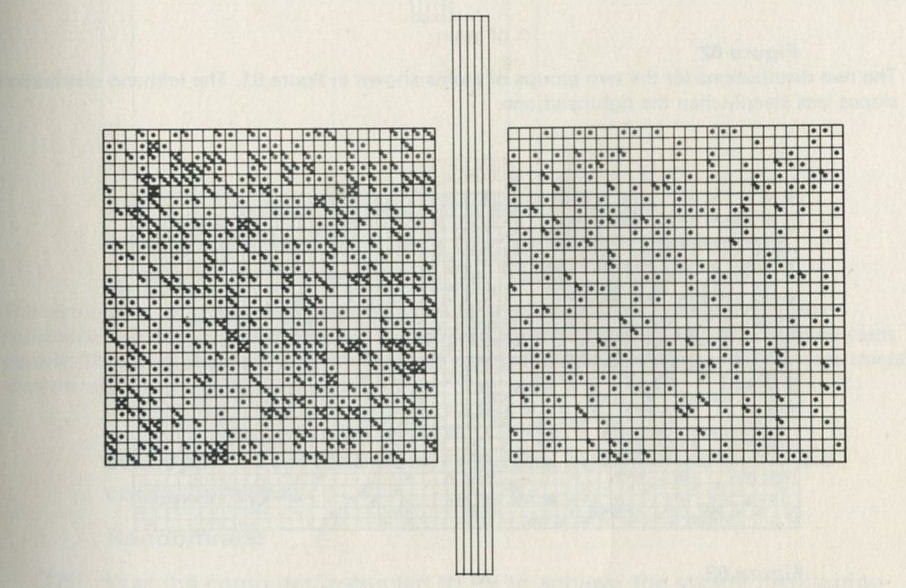

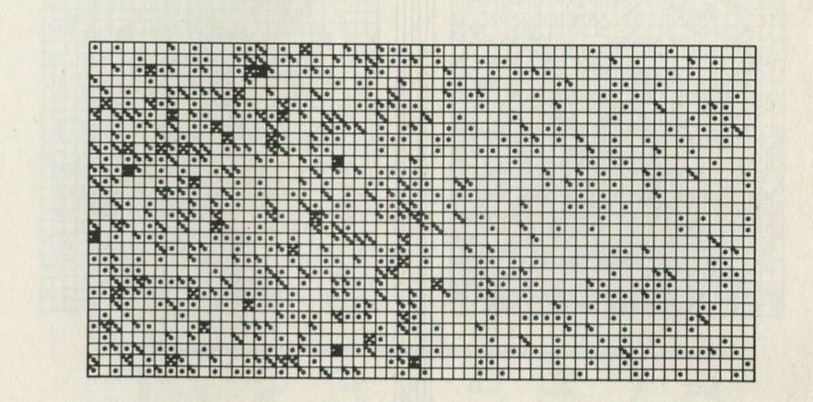

- Still pictures from the computer films of randomly shuffled quanta

- What can be learned about thermal equilibrium from the computer films?

- Summary: chance and the flow of heat

- The absolute Kelvin temperature scale

- The heat capacity of an Einstein solid

- Constant thermal capacity for many elements

- Dulong, Petit, Einstein, and heat capacities

- Value of the Boltzmann constant k and mean energy per atom

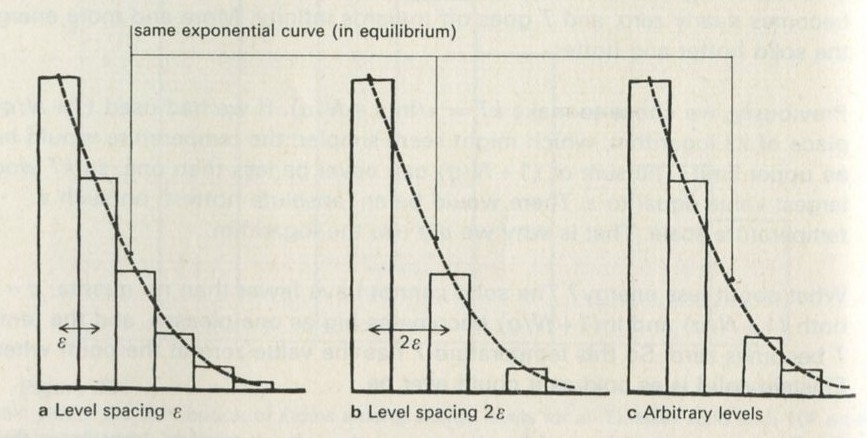

- The Boltzmann factor

- Summary of Part Four

- A place to stop

- 5. Counting ways

- An overall view of the argument

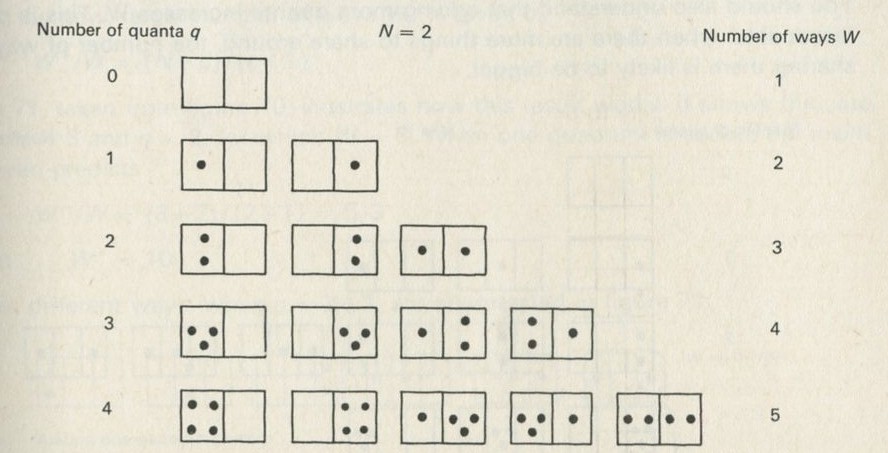

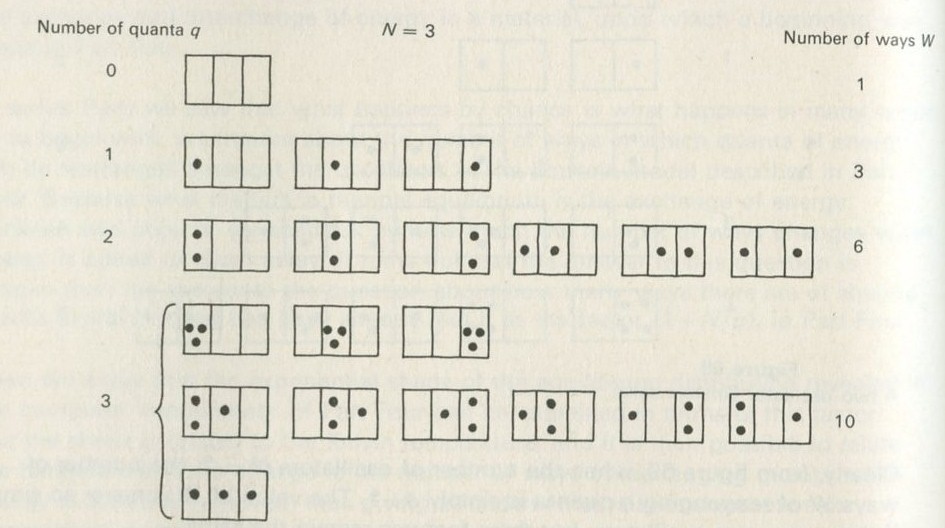

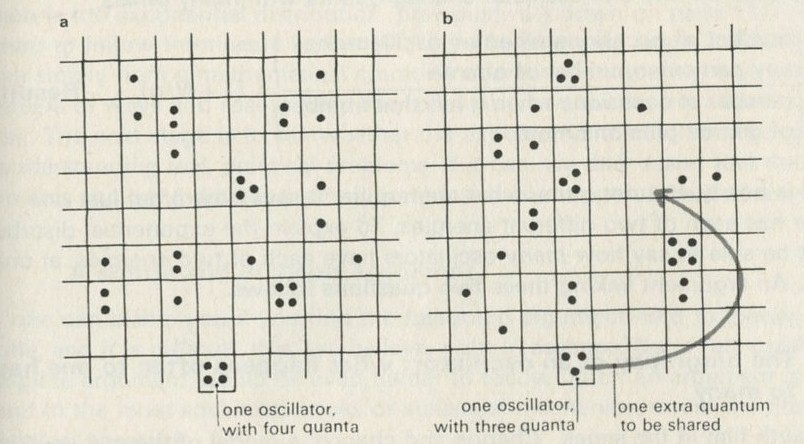

- Counting ways of sharing quanta in an Einstein solid

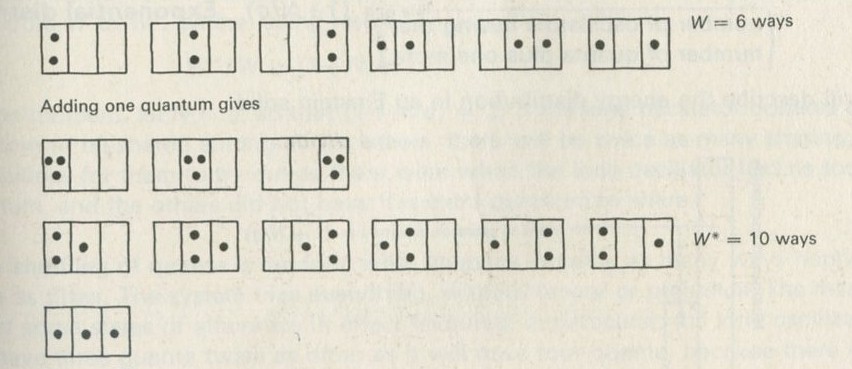

- Adding energy leads to more ways of sharing it

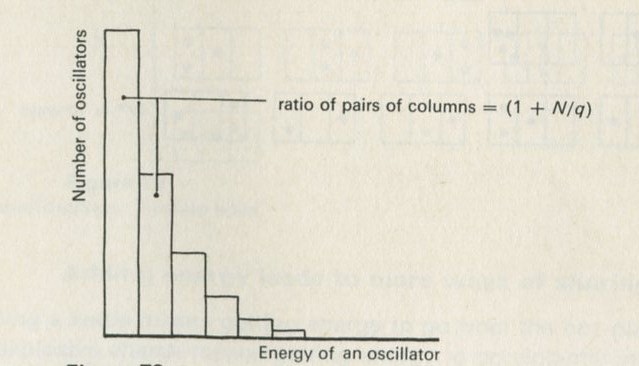

- The factor (1 + N/q)

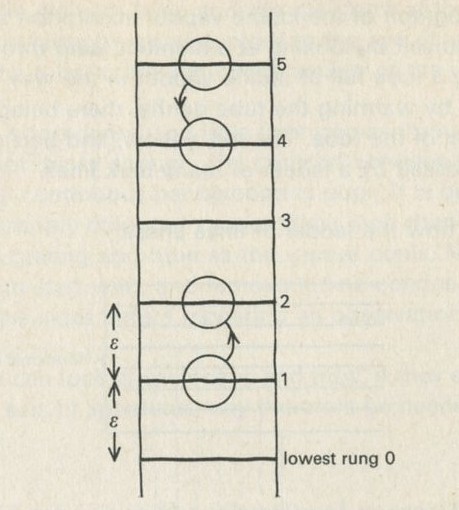

- One oscillator competing with many others for energy

- The biography of an oscillator: what happens often to one happens to many

- A subtle and difficult argument

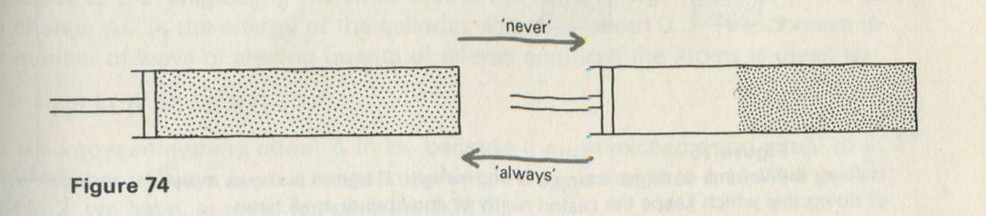

- The flow of heat from hot to cold

- The general meaning of hot and cold

- Temperature and changes to number of ways

- Kelvin temperature and the Boltzmann constant k

- Measurement of k: the Einstein solid as a means of counting ways

- The Boltzmann factor

- Counting ways with heaters and thermometers: entropy

-

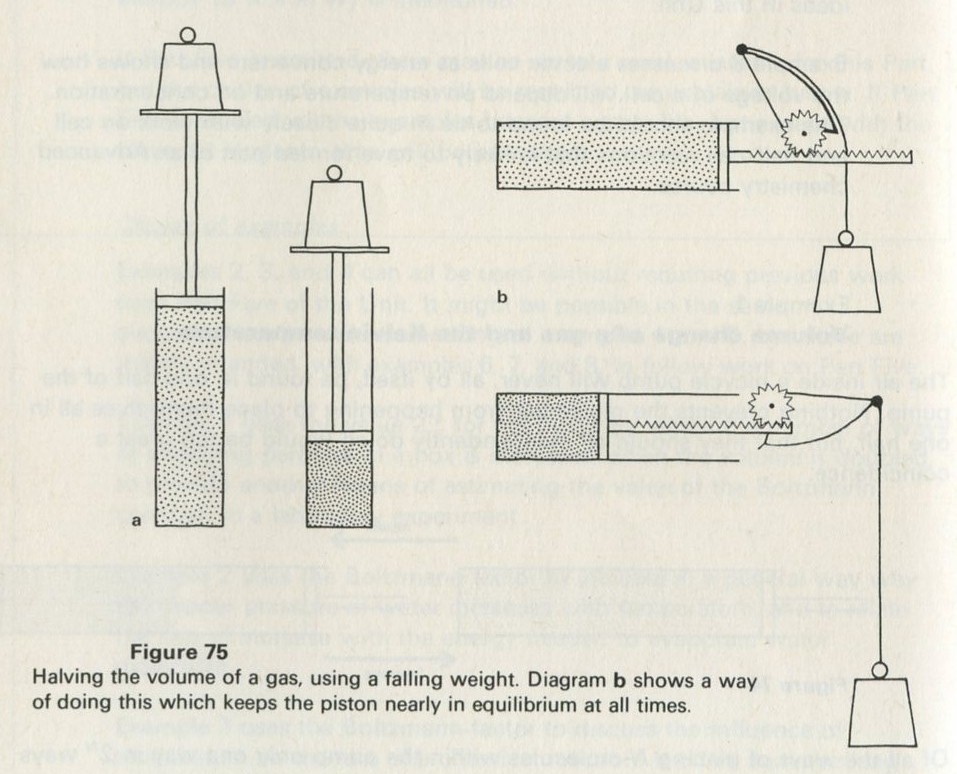

6. Uses of thermodynamic ideas

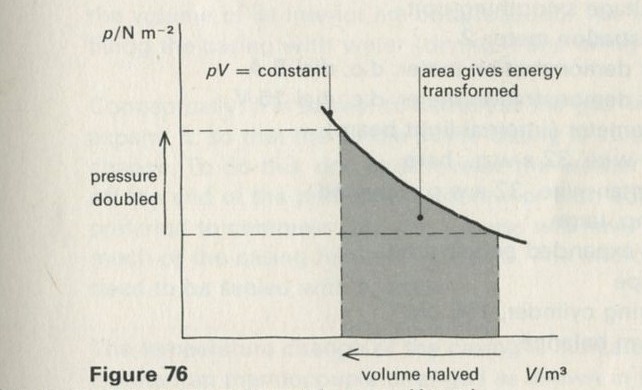

- Example 1: Volume change of a gas and the Kelvin temperature

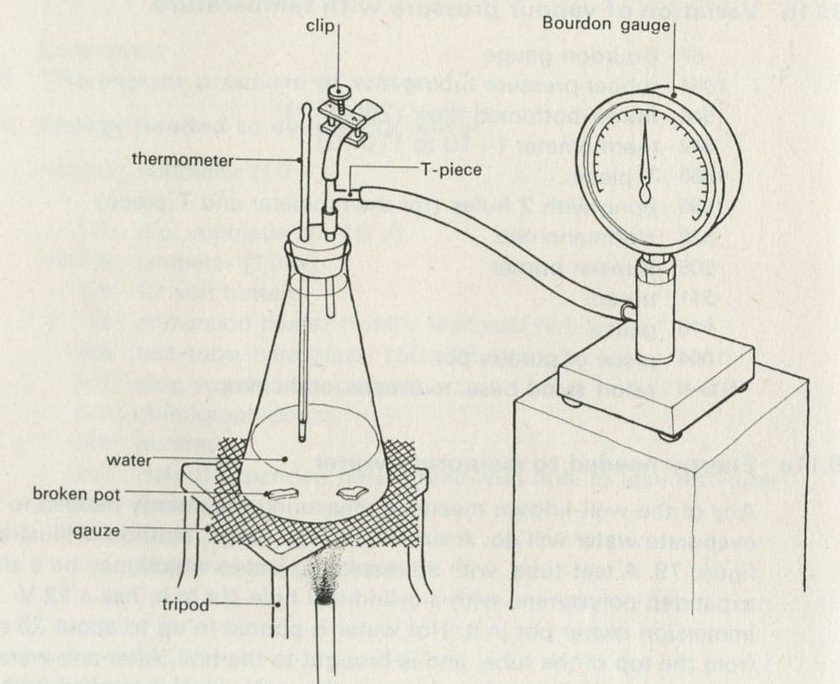

- Example 2: The vapour pressure of water

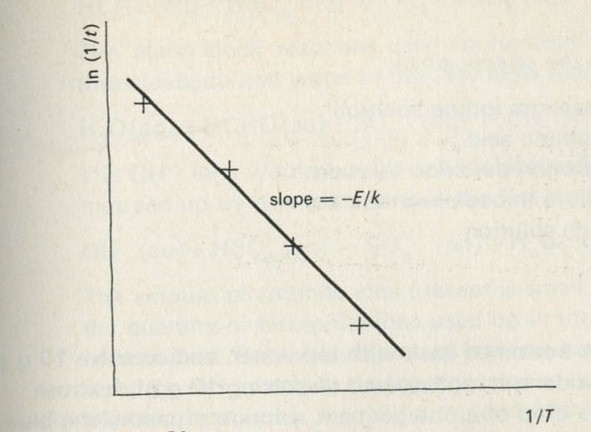

- Example 3: Rate of reaction

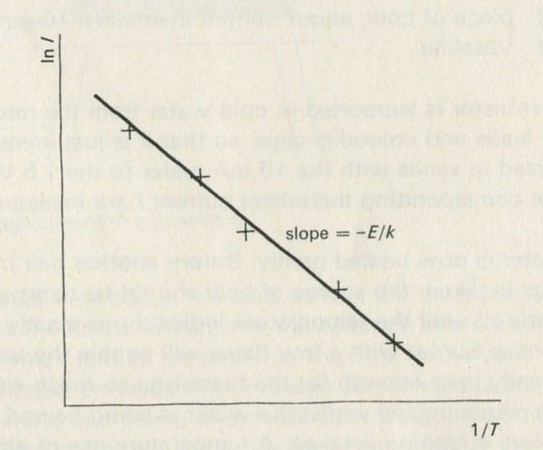

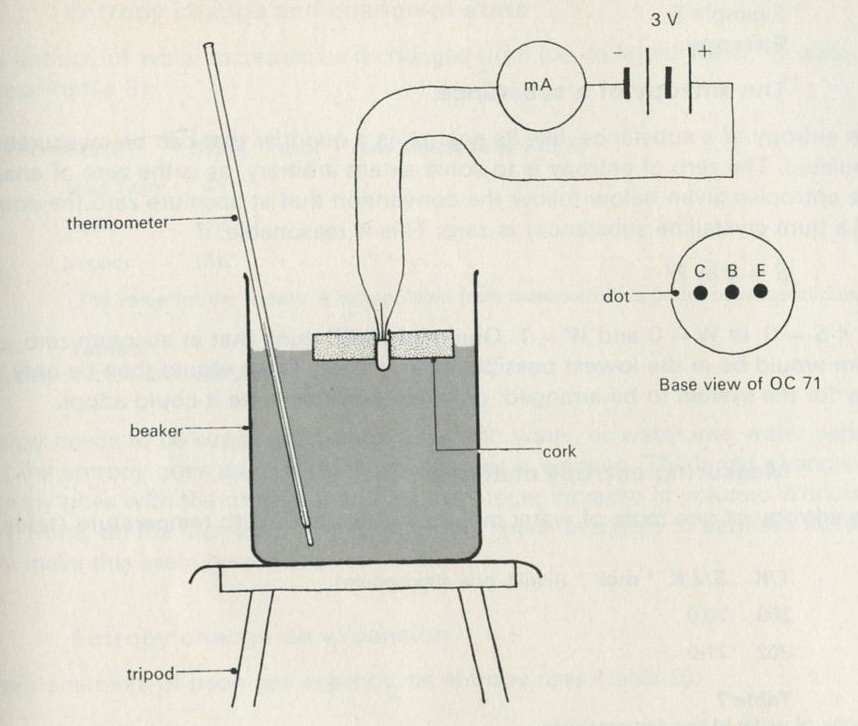

- Example 4: Conduction in a semiconductor

- Example 5: The entropy of a substance

- Example 6: Using entropy values

- Example 7: Inefficient engines

- Engines which cannot help being inefficient

- Entropy and engines

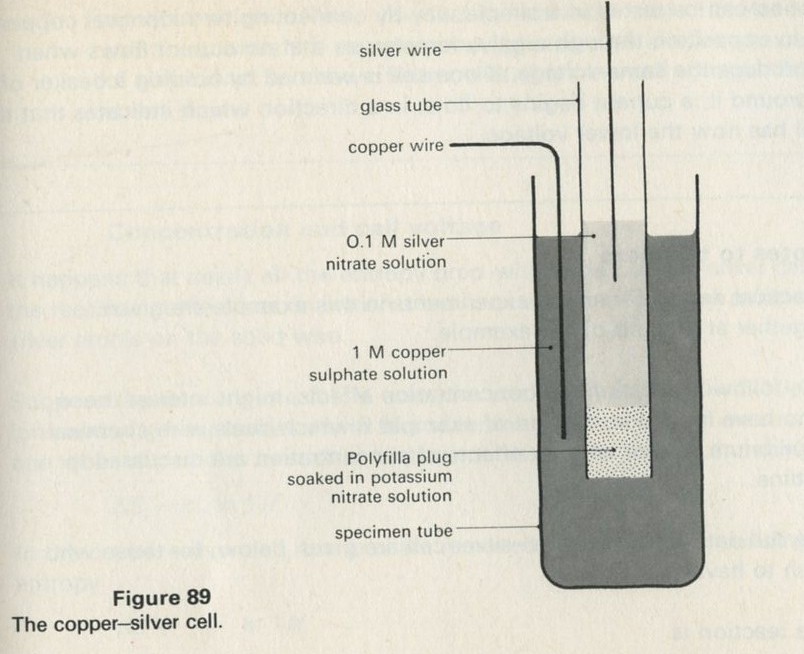

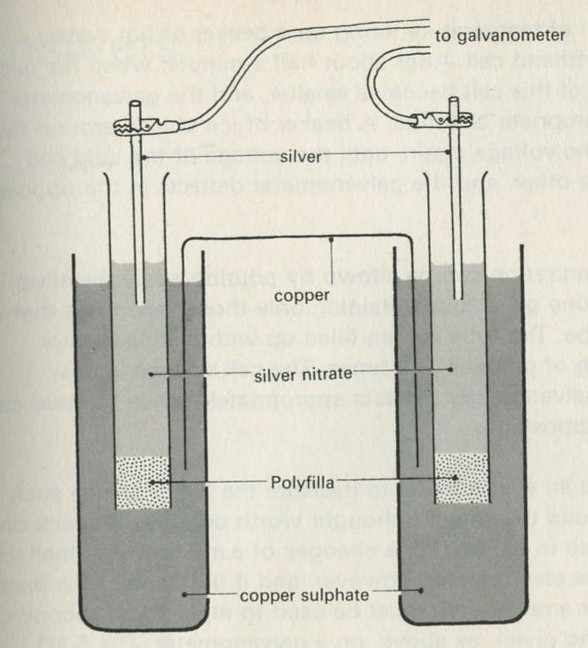

- Example 8: Cells as energy converting devices

- Entropy effects on a cell voltage

- Concentration and cell voltage

- Answers

Appendices

- A: Heat, work, the conservation of energy, and the First Law of Thermodynamics

- B: The Einstein model and the Kelvin temperature

- C: The quantum shuffling game for indistinguishable quanta

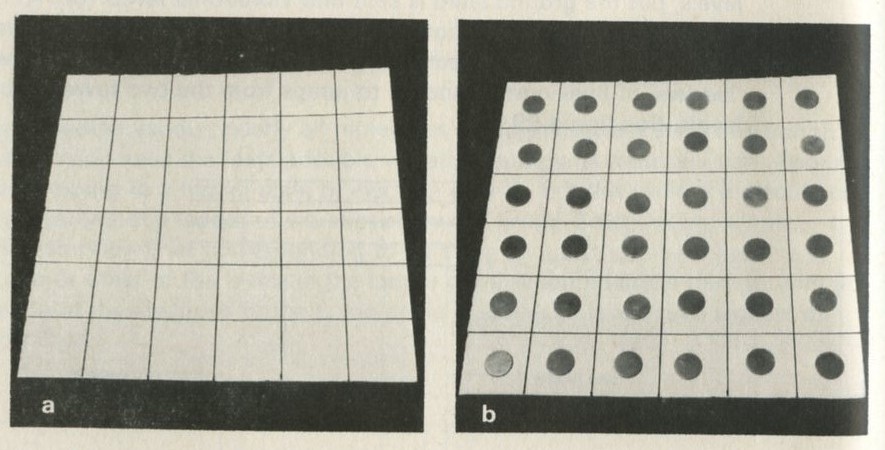

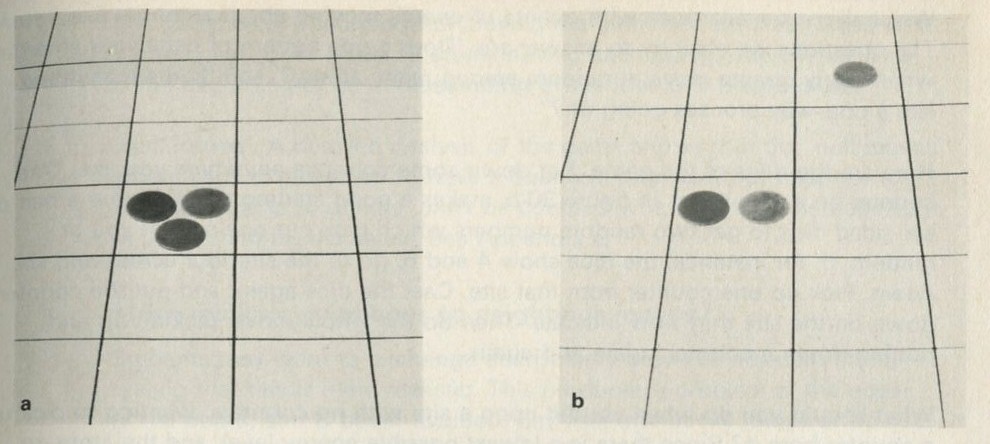

- D: Photographs of pucks distributed between two halves of a surface

List of books, films and film loops, slides and apparatus

Index

Nuffield Advanced Physics team and special advisers

Joint organizers

Dr P. J. Black, Reader in Crystal Physics, University of Birmingham

Jon Ogborn, Worcester College of Education; formerly of Roan School, London SE3

Team members

W. Bolton, formerly of High Wycombe College of Technology and Art

R. W. Fairbrother, Centre for Science Education, Chelsea College; formerly of Hinckley Grammar School

G. E. Foxcroft, Rugby School

Martin Harrap, formerly of Whitgift School, Croydon

Dr John Harris, Centre for Science Education, Chelsea College; formerly of Harvard Project Physics

Dr A. L. Mansell, Centre for Science Education, Chelsea College; formerly of Hatfield College of Technology

A. W. Trotter, North London Science Centre; formerly of Elliott School, Putney

Evaluation

P. R. Lawton, Garnett College, London

Acknowledgements

The Organizers wish to express their gratitude to the many people who gave help with the development of this Unit.

Professor R. G. Chambers, Professor P. T. Landsberg, Professor P. T. Matthews, Professor D. J. Millen, Professor M. Woolfson, and Dr J. W. Warren formed a working party which met to discuss the proposals for the Unit.

The development of the computer-constructed films which play a large part in this Unit would have been impossible without the willing help of Dr F. R. A. Hopgood of the Atlas Computer Laboratory, Chilton, Berkshire, who was responsible for the actual production of the films. We are also grateful to Dr P. Davies for help with mathematical statistics, and to Dr G. G. S. Miller for help at an earlier stage with computer programming.

We wish also to acknowledge our debt to the Nuffield Advanced Chemistry and Physical Science Projects.

We have also been influenced by H. A. Bent's valuable book, The Second Law.

Consultative Committee

- Professor Sir Nevill Mott, F.R.S. (Chairman)

- Professor Sir Ronald Nyholm, F.R.S. (Vice-Chairman)

- Professor J. T. Allanson

- Dr P. J. Black

- N. Booth, H.M.I.

- Dr C. C. Butler, F.R.S.

- Professor E. H. Coulson

- D. C. Firth

- Dr J. R. Garrood

- Dr A. D. C. Grassie

- Professor H. F. Halliwell

- Miss S. J. Hill

- Miss D. M. Kett

- Professor K. W. Keohane

- Professor J. Lewis

- J. L. Lewis

- A. J. Mee

- Professor D. J. Millen

- J. M. Ogborn

- E. Shire

- Dr J. E. Spice

- Dr P. Sykes

- E. W. Tapper

- C. L. Williams, H.M.I.

Foreword

It is almost a decade since the Trustees of the Nuffield Foundation decided to sponsor curriculum development programmes in science. Over the past few years a succession of materials and aids appropriate to teaching and learning over a wide variety of age and ability ranges has been published. We hope that they may have made a small contribution to the renewal of the science curriculum which is currently so evident in the schools.

The strength of the development has unquestionably lain in the most valuable part that has been played in the work by practising teachers and the guidance and help that have been received from the consultative committees to each Project.

The stage has now been reached for the publication of materials suitable for Advanced courses in the sciences. In many ways the task has been a more difficult one to accomplish. The sixth form has received more than its fair share of study in recent years and there is now an increasing acceptance that an attempt should be made to preserve breadth in studies in the 16-19 year age range. This is no easy task in a system which by virtue of its pattern of tertiary education requires standards for the sixth form which in many other countries might well be found in first year university courses.

Advanced courses are therefore at once both a difficult and an interesting venture. They have been designed to be of value to teacher and student, be they in sixth forms or other forms of education in a similar age range. Furthermore, it is expected that teachers in universities, polytechnics, and colleges of education may find some of the ideas of value in their own work.

If this Advanced Physics course meets with the success and appreciation I believe it deserves, it will be in no small measure due to a very large number of people, in the team so ably led by Jon Ogborn and Dr Paul Black, in the consultative committee, and in the schools in which trials have been held. The programme could not have been brought to a successful conclusion without their help and that of the examination boards, local authorities, the universities, and the professional associations of science teachers.

Finally, the Project materials could not have reached successful publication without the expert assistance that has been received from William Anderson and his editorial staff in the Nuffield Science Publications Unit and from the editorial and production teams of Penguin Education.

K. W. Keohane

Co-ordinator of the Nuffield Foundation Science Teaching Project

Introduction

It seems to us to be worth a good deal of effort to make the ideas behind the Second Law of Thermodynamics intelligible to students at school. The approach in this book is through the statistics of molecular chaos, not only because we feel that this is the best way to gain a first insight into what entropy is, but also because the growing importance of statistical thinking in physics, chemistry, and biology, not to mention sociology, economics, and education, makes it especially desirable to give students opportunities to think about the difficult concepts involved.

In addition, much that is new and powerful here emerges from a very few fundamental ideas: given quanta and atoms, one emerges with the Boltzmann factor, Kelvin temperature, entropy, and the Second Law.

The Unit contains a good deal more than an average class will wish to or will be able to follow. The first three Parts are simple introductory material. Part One is a brief introduction to the notion of an irreversible process, largely employing films shown forwards and in reverse. Part Two, helping to signal the practical importance of the ideas being developed, considers whether or not it matters that the fossil fuel reserves of the Earth are being used up. Part Three starts the statistical thinking off in the simplest of contexts: that of diffusion. Its purpose is to introduce a way of thinking, especially the use of dice-throwing games, so as to ease later and harder work on similar lines.
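The dice-throwing games of Part Three can be imitated by a short program. The sketch below is only an illustration under an assumed rule (at each throw one particle, chosen at random, hops to the other half of a box); the function name `diffusion_game` is hypothetical and not part of the Unit's materials. Every single hop is two-way, yet starting from an arrangement with all the particles on one side, the count drifts towards an even split and then stays near it.

```python
import random


def diffusion_game(n_particles, n_throws, seed=None):
    """Toy dice model of diffusion between two halves of a box (assumed rule).

    All particles start in the left half. At each throw one particle is
    chosen at random and moved to the other half. Returns the number of
    particles in the left half after each throw, starting with the initial count.
    """
    rng = random.Random(seed)
    in_left = [True] * n_particles      # every particle starts on the left
    left_count = n_particles
    history = [left_count]
    for _ in range(n_throws):
        i = rng.randrange(n_particles)  # the 'die' picks one particle
        in_left[i] = not in_left[i]     # it hops to the other half
        left_count += 1 if in_left[i] else -1
        history.append(left_count)
    return history


if __name__ == "__main__":
    for n in (4, 400):
        h = diffusion_game(n, 5000, seed=1)
        # How often were all the particles back on the left, well after the start?
        returns = sum(1 for c in h[200:] if c == n)
        print(f"{n} particles: final left count {h[-1]}, returns to all-left {returns}")
```

With 4 particles the unmixed state typically recurs many times; with 400 it does not recur in any run of practical length. This is the sense in which a collection of many two-way steps looks one-way.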

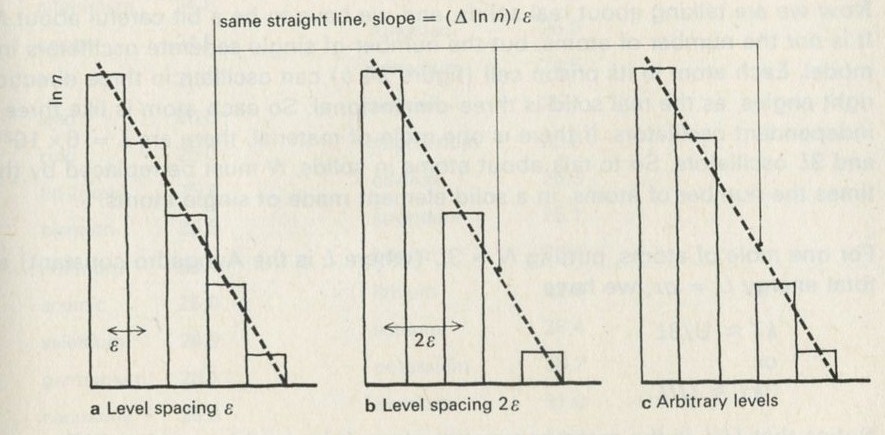

Part Four will complete the Unit for many students, apart from a brief glance at one or two applications from Part Six. It contains a study of thermal equilibrium in an Einstein solid. This problem is studied by means of random simulation, both with dice on the bench and with films constructed by computer. By this means, detailed statistical arguments can be avoided: in particular no knowledge of factorials or of permutations and combinations need be assumed. The outcome is an exponential (Boltzmann) distribution. After seeing that the direction of heat flow is governed by the steepness of this distribution, the Kelvin temperature is given a definition in terms of the slope of the distribution. This definition is less fundamental than one produced later, in Part Five, but we feel that it still represents a worthwhile achievement. The molar heat capacity of an Einstein solid at high temperatures follows at once, being 3Lk, so that the Boltzmann constant k can be measured, using observations of the molar heat capacities of a variety of solid elements. (L is the Avogadro constant.) The Boltzmann constant k is introduced in a fundamental way, as a scale constant which fixes the size of the Kelvin degree.
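The random shuffling of quanta in an Einstein solid, played with dice on the bench, can also be sketched in a few lines. The rule used here is an assumption for illustration: at each move a randomly chosen atom, if it holds at least one quantum, passes one quantum to another randomly chosen atom, so the total energy never changes. The function names are hypothetical. Starting from an even share, the number of atoms holding n quanta settles into a distribution in which each count is roughly a constant fraction of the one before, which is the exponential (Boltzmann) shape described above.

```python
import random
from collections import Counter


def shuffle_quanta(n_atoms, n_quanta, n_moves, seed=None):
    """Randomly shuffle quanta among atoms in a toy Einstein solid (assumed rule)."""
    rng = random.Random(seed)
    # Start with the quanta shared out as evenly as possible.
    quanta = [n_quanta // n_atoms] * n_atoms
    for i in range(n_quanta % n_atoms):
        quanta[i] += 1
    for _ in range(n_moves):
        giver = rng.randrange(n_atoms)
        if quanta[giver] == 0:
            continue                     # nothing to give: this move changes nothing
        taker = rng.randrange(n_atoms - 1)
        if taker >= giver:
            taker += 1                   # pick a taker different from the giver
        quanta[giver] -= 1
        quanta[taker] += 1
    return quanta


def distribution(quanta):
    """Return how many atoms hold 0, 1, 2, ... quanta."""
    counts = Counter(quanta)
    return [counts[n] for n in range(max(quanta) + 1)]


if __name__ == "__main__":
    atoms = shuffle_quanta(1000, 1000, 200_000, seed=2)
    dist = distribution(atoms)
    print("atoms holding 0, 1, 2, ... quanta:", dist[:6])
    # For an exponential distribution these ratios are roughly constant.
    print("successive ratios:", [round(dist[n + 1] / dist[n], 2) for n in range(4)])
```

Giving the same solid more quanta per atom makes the fall-off from one count to the next less steep; in the Unit's terms, that solid is hotter. The high-temperature result quoted above can also be checked by hand: taking the Dulong and Petit value of about 25 J mol⁻¹ K⁻¹ for the molar heat capacity and L ≈ 6.02 × 10²³ mol⁻¹, k = C/3L ≈ 25/(1.81 × 10²⁴) ≈ 1.4 × 10⁻²³ J K⁻¹.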

Students who pursue the theoretical arguments no further will still be able to appreciate some of the examples, given in Part Six, of the uses of the ideas, particularly those examples which use the Boltzmann factor to discuss the temperature dependence of rate of reaction, vapour pressure of water, or current in a semiconductor. The work described so far is to be regarded as the normal amount for an ordinary class taking Nuffield Advanced Physics, even though it occupies little more than half the whole book.
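Each of the Part Six examples mentioned here turns on how the Boltzmann factor exp(-E/kT) changes with temperature. The sketch below simply evaluates that factor. The energy step of 0.5 eV used in the example is a hypothetical value chosen for illustration, not one taken from this Unit, and the function names are likewise hypothetical.

```python
import math

K_B = 1.380649e-23        # Boltzmann constant in J/K (present exact SI value)
EV = 1.602176634e-19      # one electronvolt in joules


def boltzmann_factor(energy_j, temperature_k):
    """Return exp(-E/kT) for an energy step E (joules) at temperature T (kelvin)."""
    return math.exp(-energy_j / (K_B * temperature_k))


def factor_ratio(energy_j, t_low, t_high):
    """How many times larger exp(-E/kT) is at t_high than at t_low.

    Computed as exp[(E/k)(1/t_low - 1/t_high)] to avoid dividing two tiny numbers.
    """
    return math.exp(energy_j / K_B * (1.0 / t_low - 1.0 / t_high))


if __name__ == "__main__":
    e = 0.5 * EV   # hypothetical energy step, for illustration only
    print("factor at 300 K:", boltzmann_factor(e, 300.0))
    print("increase from 300 K to 310 K:", factor_ratio(e, 300.0, 310.0))
```

For this assumed energy step a rise of only 10 K nearly doubles the factor, which is why rates of reaction, vapour pressures, and semiconductor currents are so sensitive to temperature.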

The further work in Parts Five and Six has been included for several reasons.

Part Five contains theoretical arguments, of no great complexity, which nevertheless take the ideas a large step forward. The Boltzmann distribution met in Part Four is seen to arise from changes to the number of ways W of arranging quanta among atoms, when quanta enter or leave the system. Heat flow is seen as taking the direction which increases W; the Kelvin temperature is given a fundamental statistical definition and entropy S = k ln W is introduced. This Part should not be too difficult for a proportion of students: those who can appreciate it will gain a great deal.
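The counting that Part Five relies on can be sketched with the standard formula for sharing q identical quanta among N oscillators, W = (q + N - 1)!/(q!(N - 1)!). This formula is the usual textbook count rather than a quotation from this Unit, and the function names are hypothetical. The last function shows where the factor (1 + N/q) listed in the contents comes from: adding one quantum multiplies W by (q + N)/(q + 1), which is close to 1 + N/q when N and q are large.

```python
import math


def ways(n_oscillators, q_quanta):
    """Number of ways W of sharing q identical quanta among N oscillators."""
    return math.comb(q_quanta + n_oscillators - 1, q_quanta)


def entropy_over_k(n_oscillators, q_quanta):
    """S/k = ln W; math.log accepts Python's arbitrarily large integers."""
    return math.log(ways(n_oscillators, q_quanta))


def growth_for_one_more_quantum(n_oscillators, q_quanta):
    """W(q + 1)/W(q), which equals (q + N)/(q + 1) exactly."""
    return ways(n_oscillators, q_quanta + 1) / ways(n_oscillators, q_quanta)


if __name__ == "__main__":
    print(ways(3, 3))                  # 10 ways for 3 quanta among 3 oscillators
    print(entropy_over_k(300, 300))    # S/k for a small 'solid'
    n, q = 100, 1000
    print(growth_for_one_more_quantum(n, q), 1 + n / q)
```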

We have also included the work of Part Five because students in other subjects and at other levels need a simple, intelligible, but basically truthful introduction to entropy and the Boltzmann factor, and we think our material has something novel to offer for this purpose.

The ideas suggested in Part Six are a conscious attempt to bridge the gap between physics and chemistry. It has been a matter of regret among the members of the Advanced Physics, Chemistry, and Physical Science Projects that the development of teaching proposals in this difficult area was so slow and arduous that the three Projects had too little time to integrate their various proposals before publication. For this reason, we have included in Part Six some preliminary attempts at bringing together ideas from physics and chemistry, even though these extend the work in this Unit beyond what is practicable in the short teaching time available to a physics course. However, we hope that this material may be of particular value where ideas from this Unit are used or adapted for courses which go beyond the limits of a sixth form physics course.

The kinetic theory of gases does not appear in this work to any great extent. It ought not to be missed out of a student's school course; with students whose O-level course did not include the theory, a discussion of it should replace part of the work offered here.

Aims of the teaching

We think it better to succeed in achieving modest aims rather than to fail to achieve grandiose ones. This Unit is not likely to make a student a competent thermodynamicist. Those who stop at Part Four will not even explicitly meet the concept of entropy. But we do think the Unit can achieve some things of value. We hope students will recognize - albeit informally - the distinction between reversible and irreversible processes. We hope they will understand that the key to the problem of the natural direction of a process is statistical, and in particular, that heat flows from hot to cold by chance. We hope that they will appreciate what a statistical equilibrium is like, through becoming acquainted with it in various forms. We hope that they will understand that temperature - the clue to the direction of heat flow - is related to the chances for or against flow in a particular direction. We hope also that the work in Part Two and Part Six will show them that these ideas are of the greatest practical importance.

Difficulties

Many will feel this Unit to be a daunting prospect. Thermodynamics is widely acknowledged to be difficult, and statistical mechanics has no reputation for being an easy subject. Not a few expert physicists acknowledge their own inadequacy in this field. Yet in a sense it is all very easy: the statement 'What happens is what happens in many ways' expresses just about the sum total of the basic ideas underlying the subject.

Experience suggests that the problem is mainly one of confidence. Once one has begun to use the ideas, confidence comes quite quickly, because every problem is essentially the same problem: a process will occur only if the number of ways W, or equivalently the entropy S = k ln W, does not decrease as a result. We ourselves have found Bent's The Second Law a particularly helpful book, and we urge all who are concerned about their ability to cope with the material in this Unit to obtain a copy. Its particular merit lies in the large number of simple but penetrating questions, with answers, which it contains. Because one of the teacher's worries is the need to be able to answer students' questions, this is a very valuable feature of the book.
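The rule that a process goes only if W does not decrease can be tried on the flow of heat. The sketch below assumes two Einstein solids, A and B, in thermal contact, with the combined number of ways equal to W_A × W_B, and asks how ln(W_A W_B) changes when one quantum passes from one solid to the other. The sizes and energies of the solids in the example are invented for illustration, and the helper names are hypothetical.

```python
import math


def ways(n_oscillators, q_quanta):
    """Ways of sharing q identical quanta among N oscillators."""
    return math.comb(q_quanta + n_oscillators - 1, q_quanta)


def ln_ways(n_oscillators, q_quanta):
    return math.log(ways(n_oscillators, q_quanta))


def change_in_ln_w(n_giver, q_giver, n_taker, q_taker):
    """Change in ln(W_giver * W_taker) when one quantum moves from giver to taker."""
    before = ln_ways(n_giver, q_giver) + ln_ways(n_taker, q_taker)
    after = ln_ways(n_giver, q_giver - 1) + ln_ways(n_taker, q_taker + 1)
    return after - before


if __name__ == "__main__":
    # A: 50 oscillators with 200 quanta (4 per oscillator, 'hot')
    # B: 50 oscillators with 20 quanta (0.4 per oscillator, 'cold')
    print("A to B:", change_in_ln_w(50, 200, 50, 20))
    print("B to A:", change_in_ln_w(50, 20, 50, 200))
```

In this example moving a quantum from the hot solid to the cold one increases ln W, while the reverse move decreases it; with equal shares per oscillator the change is very small. Multiplying these changes by k gives the corresponding entropy changes, since S = k ln W.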

We hope also that the material in Part Six will help teachers, even if it is not used with students. Examples 5, 6, and 8 may be particularly valuable for this purpose. Example 5 makes play with entropy values for various materials. (It is a peculiar deficiency of many courses in thermodynamics for physicists that one can emerge from them without having encountered a single numerical value for an entropy or an entropy change, and without having any idea of what order of magnitude to expect.) Example 6 deals with physical and chemical equilibrium, the area in which thermodynamics finds its largest practical applications. Example 8 discusses the special case of electrochemical cells, a natural link between physics and chemistry.

A book for teachers and students

In the absence of suitable textbooks, we have written this book so that it can be used by both teachers and students. Material intended particularly for teachers is printed in ruled boxes. Much use is made of questions incorporated into the text; each question needs to be answered before proceeding. Answers are given in the Answers part.

In the list at the end, details are given of books referred to in the text.

Time

In the Advanced Physics Course, four weeks have been allowed for work on this Unit. As suggested above, this will confine the work done with many classes to that offered in Parts One to Four, with a little from Part Six. However, we hope that work in this area in physics lessons will not be seen as divorced from similar work in chemistry. It may well provide an opportunity to join forces and this might make it a good deal easier to find time for the work of Parts Five and Six.

1. One-way processes

I am the undertow
Washing tides of power
Battering the pillars
Under your things of high law.

I am a sleepless
Slowfaring eater,
Maker of rust and rot
In your bastioned fastenings,
Caissons deep.

I am the Law
Older than you
And your builders proud.

I am deaf
In all days
Whether you
Say 'Yes' or 'No'.

I am the crumbler: tomorrow.

Carl Sandburg, Under (1916).

In Collected poems (1950) Harcourt Brace Jovanovich, Inc.

Timing

It would be best if this Part could be kept within two periods, even at the price of omitting some examples. The purpose is to start the thinking off, not to complete it, and the answers that emerge are too indefinite to carry the weight of several periods of teaching. If more than three periods are taken, some other Part, probably Part Two, will have to be taken more quickly.

On getting older

Every man or woman is born, grows older, and dies. Cars, bicycles, and washing machines wear out and have to be thrown away. Bridges and ships rust and corrode. Houses wear out; timber rots or cracks, bricks lose their sharp new edges, the mortar crumbles. Sand is what the sea, in time, makes of rocks and pebbles. A cup of tea becomes cold if forgotten - but not freezing cold. An abandoned ice cream melts away.

These may be some of the reasons why we all have such a strong sense of time going inexorably onward. People have always tried to understand what the flow of time means, but few would claim to have a full grasp even of the problem, let alone the answer. Philosophers continue to puzzle over it. Here all we want to point out is the simple fact that all by themselves things tend to get old and worn out; they don't spontaneously become new, clean, and tidy.

Time

In a sense, time is irrelevant here. The important thing is that these things happen inevitably. How quickly or slowly they happen is beside the main point. The discussion is not one about time machines, or the fourth dimension, and to present it as such would be irrelevant or misleading. The intention is simply to give a general point of view from which to think about the films that follow, which show events going forwards and backwards.

Scientists have been able to reach a little understanding of some aspects of these problems. They have found ways of explaining why change is such a one-way journey from new to old, fresh to rotten, clean and tidy to dirty and muddled, very hot or very cold to lukewarm. And the answers have thrown new light on some very practical problems - how to make blast furnaces yield more steel, for example, by finding out how to encourage the reaction to go the way the steelmaker wants. A good way to start thinking about such problems is to ask what several processes would look like if they went the wrong way.

Questions

There follows the first of many sets of questions incorporated into the text. Discussion of these questions and thinking about them are intended to be integral parts of the reading of this book. Answers are provided in the Answers part. It may sometimes be best to devote the discussion to arguments about the adequacy of these answers.

Q1 If a film of a tall chimney falling over and smashing to pieces were to be taken and projected, but in reverse, so that you saw the film backwards, what would it look like? Why would it be obvious that the film was being run backwards?

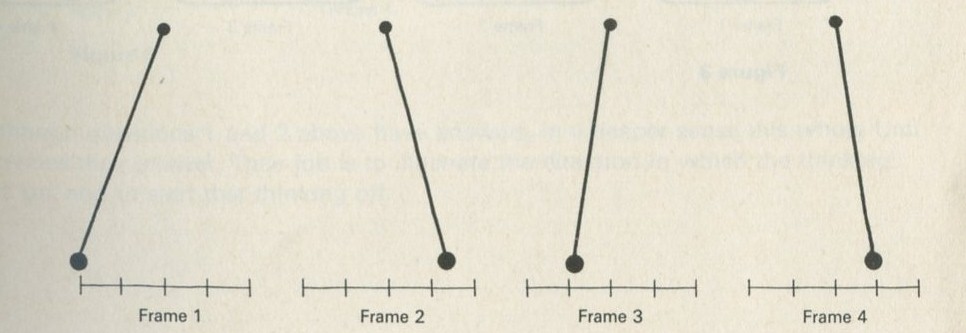

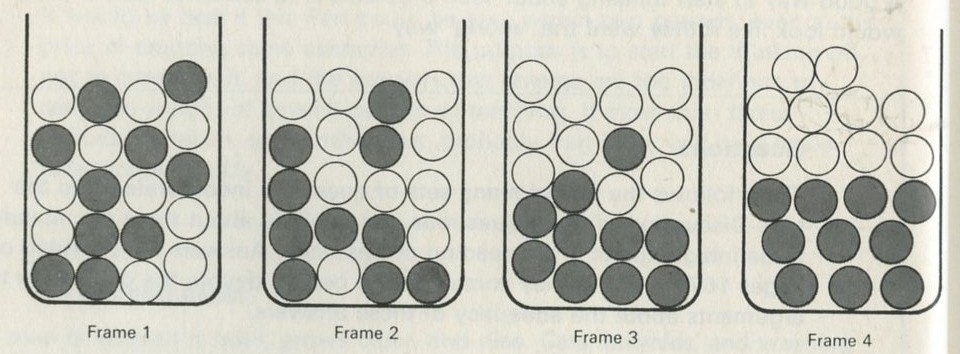

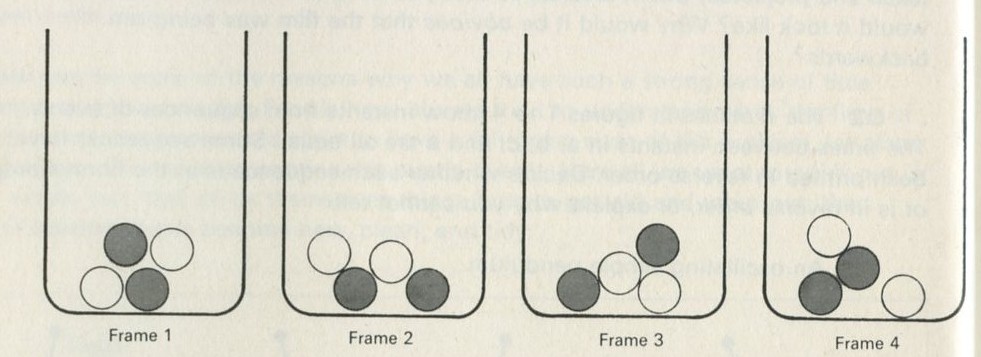

Q2 The sketches in figures 1 to 5 show instants from sequences of events. The times between instants in a, b, c, and e are all equal. Some sequences have been printed in reverse order. Decide whether each sequence is in the normal order or in reverse order, or explain why you cannot tell.

a An oscillating simple pendulum.

Figure 1

b Marbles being shaken in a container. The marbles are of two different colours, but they are otherwise identical.

Figure 2

c Same as b but there are only two of each sort of marble.

Figure 3

d Two beakers of water in thermal contact.

Figure 4

e A ball rolling on a horizontal table.

Figure 5

Although questions 1 and 2 above have answers, in a deeper sense this whole Unit provides their answer. Their job is to illustrate the direction in which the thinking will go, and to start that thinking off.

Film loops, Forwards or backwards?

Three 8 mm loop films, specially made for this Unit, are available. See the film list for details.

Forwards or backwards? Loop 1

- Event A: A brick falling to the floor, shown first forwards and then in reverse.

- Event B: A piece of red hot steel being dipped into water, shown first forwards, and then in reverse.

- Event C: A piece of paper burning, shown first in reverse, and then forwards.

Forwards or backwards? Loop 2

- Event A: A ball bouncing on a flat, horizontal surface, first shown forwards and then in reverse.

- Event B: A vehicle travelling to and fro along an air track, shown only in reverse.

- Event C: A collision between a pair of nearly frictionless pucks, shown first forwards and then in reverse.

Forwards or backwards? Loop 3

- Event A: Ink mixing with water, shown only in reverse.

- Event B: Shaking a box of coloured balls, starting with them sorted into two layers. Initially, there are many balls. The shaking is shown only in reverse. Then the shaking is repeated with just four balls, also shown only in reverse.

- Event C: The film shows a row of heavy pendula, which are not coupled but have various lengths. First, just one pendulum is shown swinging on its own, in reverse only. Then uncoupled pendula of various lengths, in a row, are shown swinging together, both forwards and in reverse.

The purpose of these films is to focus attention, through discussion, on the problem of why some events look absurd in reverse while others look sensible. In Part Two the implications of the special case of the one-way burning of fuels are considered.

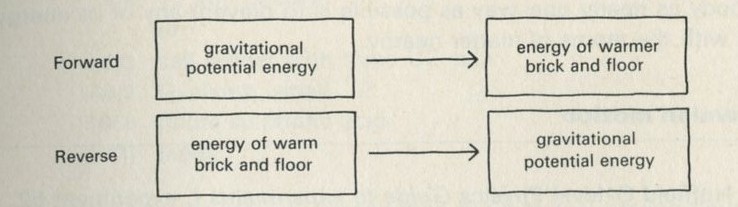

Energy changes

What energy transformations occur when a brick falls from a height onto the floor? Initially, the brick has gravitational potential energy. As it falls, this energy is transformed to kinetic energy of the brick. When the brick hits the floor, this energy becomes extra energy shared among the molecules of the brick and the floor - the temperatures of the brick and of part of the floor increase. The transfer of gravitational potential energy to energy shared among molecules is quite sensible - bricks often fall. The reverse event, with the brick jumping up off the floor, does not seem sensible.
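A rough calculation shows how thinly the brick's energy is spread once it has been shared among molecules. The numbers below (a 2 kg brick falling 1.5 m, and a specific heat capacity of about 840 J kg⁻¹ K⁻¹ for brick) are assumed illustrative values, not data from this Unit, and the function name is hypothetical. The estimate also pretends that all the energy stays in the brick, so it is an upper limit on the brick's temperature rise.

```python
G = 9.8  # gravitational field strength near the Earth's surface, N/kg


def brick_fall(mass_kg, height_m, specific_heat_j_per_kg_k):
    """Energy released by a falling brick, and its temperature rise if it kept all of it."""
    energy_j = mass_kg * G * height_m                                  # mgh
    temperature_rise_k = energy_j / (mass_kg * specific_heat_j_per_kg_k)
    return energy_j, temperature_rise_k


if __name__ == "__main__":
    energy, rise = brick_fall(2.0, 1.5, 840.0)   # assumed illustrative values
    print(f"energy released: {energy:.1f} J, temperature rise at most {rise:.4f} K")
```

A rise of a couple of hundredths of a kelvin, spread over brick and floor, is all that remains of an orderly fall; reversing the event would need that scattered energy to be gathered back into one coordinated upward push.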

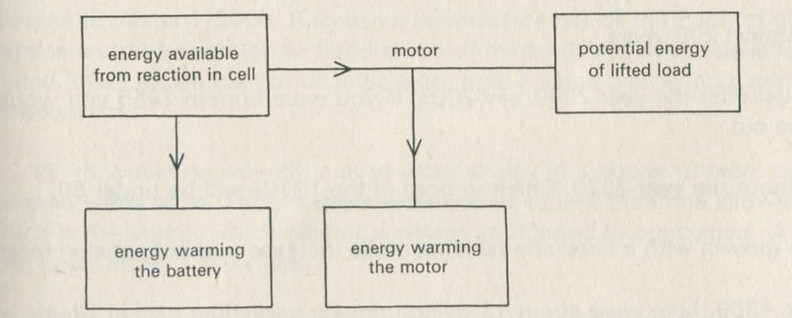

Figure 6: Energy boxes showing energy transfers

Figure 6 suggests a way of drawing a diagram to represent energy transformations. Energy is conserved, so the energy in one form at one stage will appear in another form at another stage. This is represented by putting the name of the form of the energy at each stage into a box. Only the first and last stages are shown.

Figure 6 shows how the energy boxes could be drawn for the apparently impossible event of the brick jumping upwards all by itself. So far as energy is concerned, this reverse event is not impossible: energy can still be conserved, and the reverse process does not break the law of energy conservation.

The First Law of Thermodynamics

Appendix A discusses the difference between the First Law of Thermodynamics and the Law of Conservation of Energy.

The Law of Conservation of Energy is thus no help in determining the direction of an event. The law would allow a hot brick and the floor to cool, and so to provide the energy for the brick to leap into the air. For this to happen the molecules would have to move in unison, so that they all hit the brick at the right moment, and lose energy as they push it into the air. As bricks do not generally leap off floors, such organized movement must be very rare. It does not appear likely that the molecules will move in just the right way to hit the brick upwards.

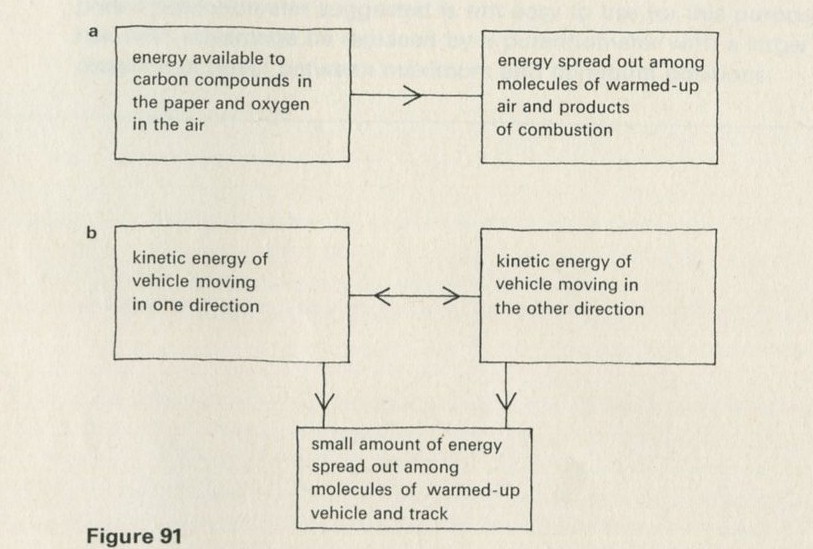

Q3 Draw energy boxes for a a piece of paper burning, b an air track vehicle travelling forwards and backwards along a horizontal track.

Q4 The piece of paper burning does not look sensible when reversed; the vehicle moving along the air track does look sensible. Do your answers to question 3 give any clue to why one event looks sensible when reversed and the other does not?

If there is one word which is the key to the difference between one-way and two-way processes, it is spreading. Whenever motion or energy becomes spread out among many particles, something one-way has happened. The trick for making the motion of a body as nearly two-way as possible is to prevent any of its energy from being shared with the atoms of matter nearby.

Brownian motion

See Nuffield O-level Physics Guide to experiments I, experiment 52. Any student who has not seen Brownian motion should see it now.

In the Brownian motion experiment smoke particles are seen being knocked around as a result of gas molecules hitting them. Some of the kinetic energy of the gas molecules has been given to the smoke particles. This is similar to the hot brick and floor getting cooler and the brick jumping up from the floor; a sufficient number of gas molecules have moved together to hit the smoke particle (in one direction) at the right moment, lose energy, and give the particle a push. There is, however, one significant difference between the smoke particle and the brick - the smoke particle is considerably smaller. Not so many molecules have, by chance, to hit it in unison in order to produce an observable motion.

Q5 Which is more likely, that everyone in a class of only three students might choose by chance to go to the cinema at the same time, or that everyone in a class of forty students might choose to do so, again by chance?

Q6 Would a film of Brownian motion look sensible if run in reverse?

A process in which energy is shared out among many molecules looks implausible in reverse. The sharing must be among only a few molecules for the reverse to be plausible. One-way processes usually involve large numbers of objects.
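The contrast between a smoke particle and a brick can be put into rough numbers with a deliberately crude model, which is an assumption of this sketch and not the Unit's own: suppose each of n molecules striking an object is independently equally likely to push it up or down, and ask for the chance that all n push upwards. That chance is (1/2) to the power n, so the code works with its base-10 logarithm to avoid numbers too small for the computer to hold. The function name is hypothetical.

```python
import math


def log10_chance_all_push_up(n_molecules, p_up=0.5):
    """log10 of the chance that n independent molecules all push upwards.

    Each molecule is assumed to push upwards with probability p_up,
    independently of the others (a drastic simplification).
    """
    return n_molecules * math.log10(p_up)


if __name__ == "__main__":
    for n in (3, 40, 1000, 6e23):
        print(f"n = {n:g}: chance about 10^{log10_chance_all_push_up(n):.3g}")
```

A few molecules acting together by chance is quite likely; the enormous numbers involved with anything the size of a brick make the chance so small that it never happens in practice. This is why Brownian motion is seen with smoke particles but not with bricks.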

9.1 Demonstration: Energy conversions

9A motor/generator unit

9C switch unit

9D lamp unit

9E flywheel unit

9L storage battery unit

9M driving belt

176 12 volt battery

or

1033 cell holder with three U2 cells

44/2 G-clamp, small 2

1056 dilute sulphuric acid

1000 leads

a A motor driving a flywheel; then the flywheel driving the motor as a dynamo.

See Nuffield O-level Physics Guide to experiments II, experiment 61 (21).

b A pair of lead plates dipping in sulphuric acid charged from a battery, then used to light a lamp.

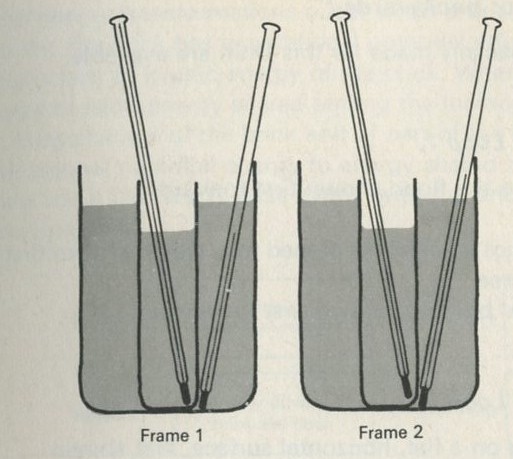

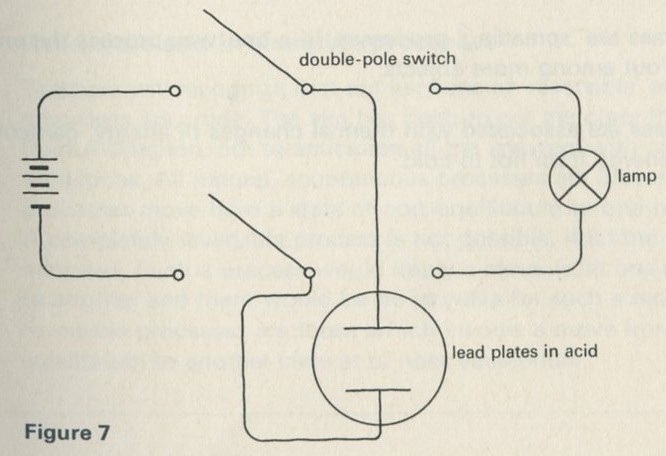

Figure 7

The storage battery unit, filled with dilute sulphuric acid, is connected via the switch to the battery (4 or 4.5 V) and to the lamp unit (using one lamp only). When the switch is thrown over to connect the lead plates to the lamp, the lamp will light for a few seconds.

One-way and two-way machines

Some machines are used to change energy from one form to another. The transformation could be of electrical energy to mechanical energy, or of mechanical energy to electrical energy, for example. Films of some machines would look sensible in reverse.

Q7 Suggest a machine which, if shown running in reverse on a film, would still look reasonably sensible.

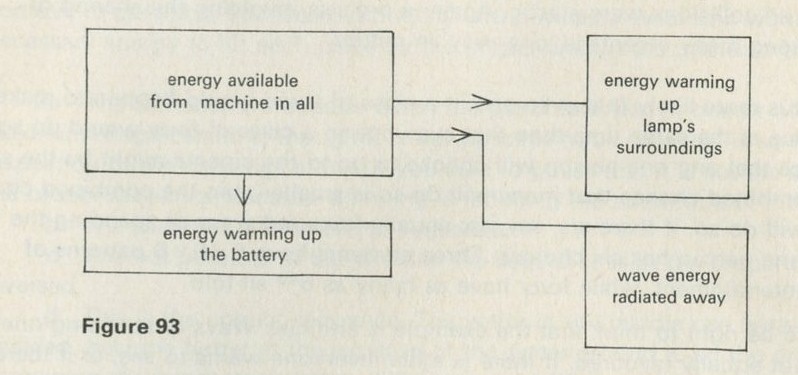

Q8 Draw energy boxes for a lead-acid battery driving a motor which lifts a load. Could this process work backwards?

Q9 Draw energy boxes for a lead-acid battery lighting a lamp. Could this process work backwards?

Clearly, the distinction between one-way and two-way processes is a matter of practical importance, and is not merely an intellectual puzzle.

Summary: What kinds of events are one-way?

The Law of Conservation of Energy is no help - every change it allows one way it allows the other.

One-way processes are spreading processes. In a one-way process the energy becomes spread out among more objects.

One-way processes are associated with thermal changes of energy, particularly the thermal flow of energy from hot to cold.

Mixing processes

In Part Three, we look at mixing, jumbling, and diffusion as other examples of one-way processes. If students raise such ideas now, the teacher may want to use material which occurs at the beginning of Part Three.

Difficulty over the meaning of one-way processes

There may be a difficulty over the meaning of one-way processes. As they have been described, the melting of a lump of ice is one-way, but students may object that the water can, after all, be frozen again. To freeze it, a cooling device is needed, driven by some source of energy. Perhaps the energy is electrical, from a hydro-electric power station; then some water ends up at the bottom of a hill instead of being at the top. How might it get back? The Sun could evaporate it, and it could then fall as rain, having spread the energy liberated on condensation out into the atmosphere. So the water can be frozen again, but not without other one-way processes going on. It is not possible to go back to the starting point with everything in the world exactly as it was before. Bent's The Second Law, Chapter 4, discusses this problem.

Reversible and irreversible processes

Teachers will recognize that our versions of reversible and irreversible processes are crude. The aim has been to get the class thinking in a fruitful direction, not to anticipate all the answers with careful definitions. All natural, spontaneous processes are irreversible. Such processes move from a state of non-equilibrium to one of equilibrium. A completely reversible process is not possible, if all the side effects are included. Such a process would imply a move from one equilibrium state to another and there would be no impetus for such a move. Nearly reversible processes are those which involve a move from a state near to equilibrium to another state at or near equilibrium.

2. The fuel resources of the Earth

That which burns never returns

(A slogan seen on the vans of a fire appliance company)

Timing

No more than a double period can be afforded for this Part, unless sacrifices are made elsewhere.

World consumption of energy

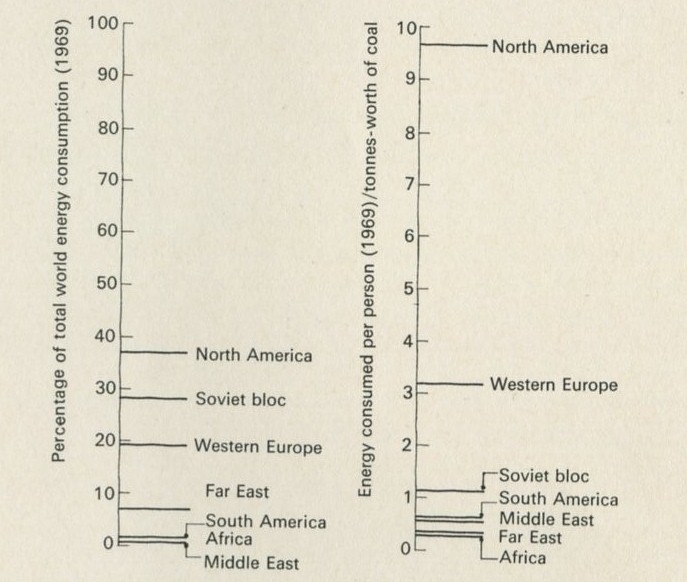

The United Nations statistical yearbook (1965) says that in 1964 the world as a whole consumed energy from fuels equivalent to the energy obtainable by burning nearly 5000 million tonnes of coal. (1 tonne is 1000 kg, very nearly 1 ton.) As there were about 3000 million of us, each person's share was the equivalent of about 1500 kg of coal; that is, about 1.5 tonnes-worth each. But of course the shares were not very even. North Americans consumed about 8.6 tonnes-worth each, and the United States with 6 per cent of the world's population consumed over 30 per cent of the total energy. Western Europeans consumed nearly 3 tonnes-worth each, while the inhabitants of the Far East and Africa consumed one-tenth as much each, equivalent to 0.3 tonne.

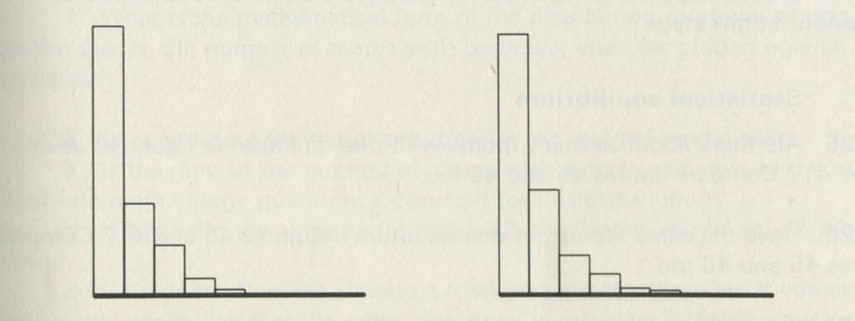

Figures 8 and 9


From Statistical Office (1969) Statistical yearbook. United Nations.

This uneven distribution of energy use among areas of the world is not simply a matter of unfair shares. A country cannot use energy if it has few power stations or industries to make fuel-using devices, and the Middle East offers a clear example of the difference between having and using energy. It produced 568 million tonnes-worth, but used only 53 million, so that each person consumed about the same as anyone else in Africa and Asia, that is, the equivalent of about 0.34 tonne each.

The richer countries do help the poorer ones with aid, the total flow of international aid from all sources being estimated for 1960 at about 2.9 thousand million dollars. In 1952, the United Nations report, Measures for the economic development of underdeveloped countries, estimated the need for aid to be about 20 thousand million dollars a year. The largest single contribution to economic aid comes from the United States. The survey (1964) of the U.S. economy, by the Organization for Economic Cooperation and Development, quotes 725 million dollars for government grants and capital in the form of dollar payments abroad for 1964.

The energy figures should suggest at least two questions. First, how is energy consumption connected with the degree of industrialization, or advancement, of a society, and what prospects can we see ahead as more and more people want, for example, to have cars which use up petrol and are made of steel, which is costly in fuel to extract and manufacture? Secondly, how big a dent was made in 1964 in the world's stock of coal, oil, gas, and other energy sources as yet untapped?

We shall now consider the second question in detail. The equivalent of a million tonnes of coal represents a large amount of energy. It comes to 2.8 × 10¹⁶ joules. As was mentioned earlier, in 1964 the world as a whole consumed energy from fuels equivalent to nearly 5000 million tonnes of coal. This is 0.14 × 10²¹ joules or 0.14 Q, where Q ≈ 10²¹ joules and is a unit that has been used in calculations of world energy resources.
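The conversion into units of Q can be checked in a few lines. This is a minimal Python sketch that uses only the figures given in the paragraph above (2.8 × 10¹⁶ J per million tonnes of coal, and Q = 10²¹ J); the names are illustrative, not part of the course materials.

```python
# Energy equivalents quoted in the text.
J_PER_MILLION_TONNES_COAL = 2.8e16   # joules per million tonnes of coal
Q = 1e21                             # joules

def coal_to_q(million_tonnes):
    """Convert a quantity of coal (in millions of tonnes) into units of Q."""
    return million_tonnes * J_PER_MILLION_TONNES_COAL / Q

# Nearly 5000 million tonnes-worth of fuel was used in 1964.
world_1964 = coal_to_q(5000)
print(f"1964 world consumption: {world_1964:.2f} Q")
```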

So in 1964, mankind used 0.14 Q. Table 1 gives some figures for the total reserves of fossil fuels left in the earth at the mid-twentieth century.

| Fuel | Reserves known to exist, which can be extracted using present methods under current economic conditions | Additional resources whose extraction may or may not be technically and economically feasible |
|---|---|---|
| coal and peat | 24.7 Q | 53.6 Q |
| oils | 2.0 Q | 27.7 Q |
| natural gas | 0.7 Q | 7.6 Q |
| oil shale and tar sands | 0 | 13.1 Q |
| Total | 27.4 Q | 102.0 Q |

(Q = 10²¹ J)

Table 1: Reserves of fossil fuels

Data from Statistical Office (1965) Statistical yearbook. United Nations

Q1 If the world were to go on using energy at 0.14 Q per year, how long would the reserves that we know can be extracted last (assuming that only fossil fuels are used)?

As the reserves that we know can be extracted run out, no doubt men will find ways of tapping some of the 102 units of additional resources. But will the world go on consuming energy at the 1964 rate?

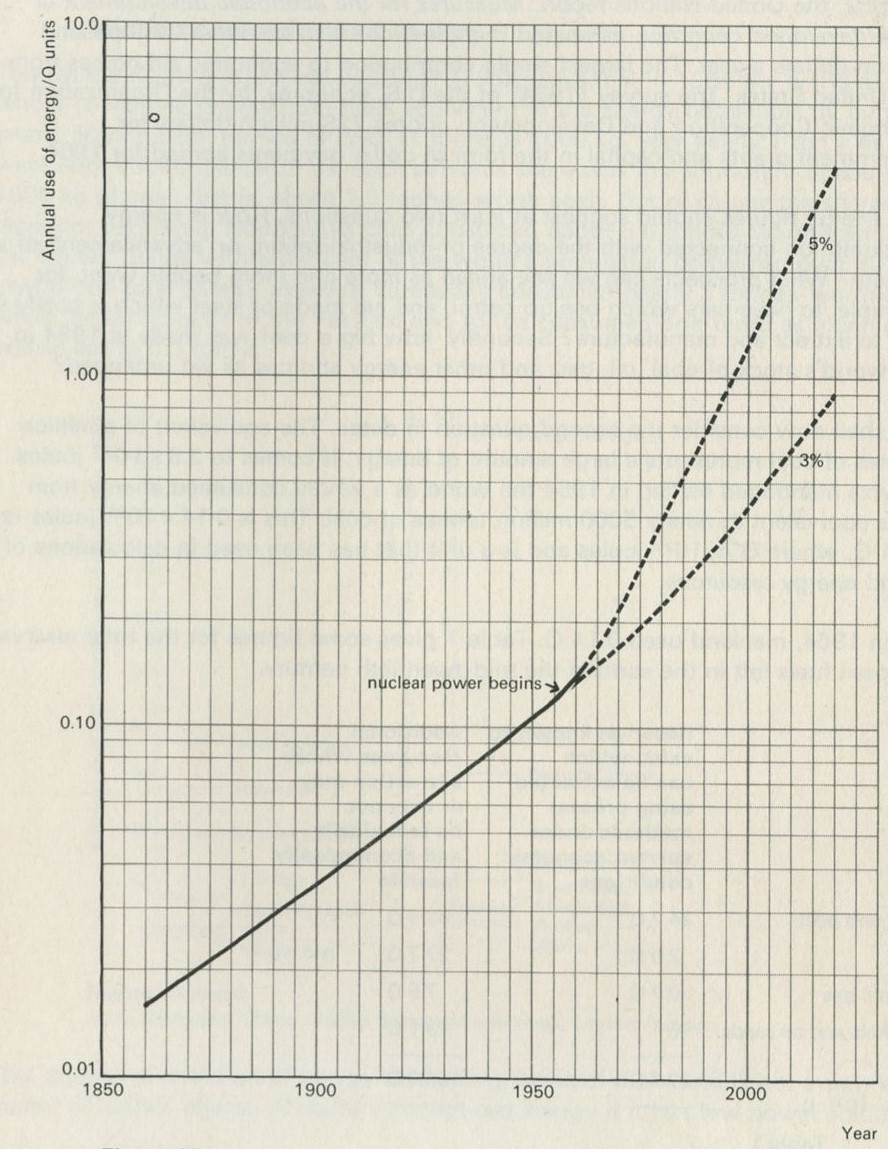

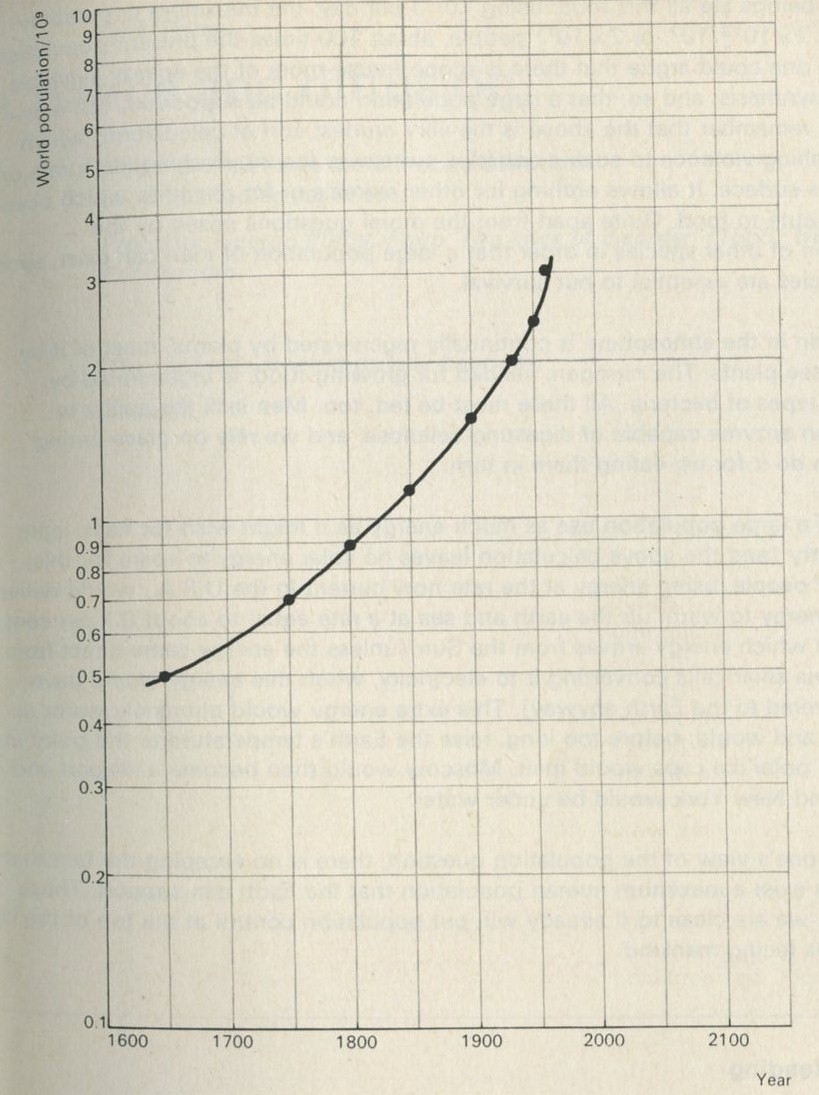

Figures 10


From Putnam, P. C. (1954) Energy in the future, Van Nostrand Reinhold Co.

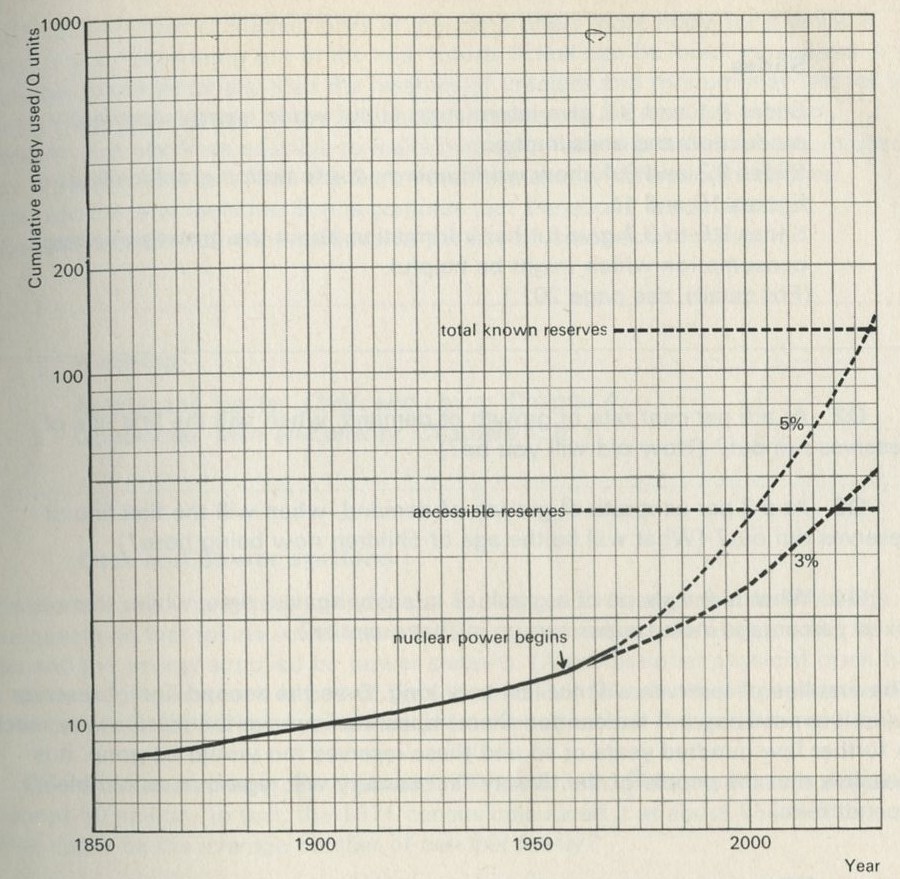

Figures 11


From Putnam, P. C. (1954) Energy in the future, Van Nostrand Reinhold Co.

Figure 10 shows what has happened in the past, with some guesses about the future, based on the growth of population and the rise in the energy demands of each individual. Back in 1850, the world was spending about 0.01 Q per year; by 1950, this was about 0.1 Q (as we have just seen). Figure 11 shows the cumulative use of energy. In the 2000 years up to 1850, man is thought to have burned between 6 and 9 Q. By 1950, the world had burned nearly twice as much, about 13 Q, so that the century from 1850 to 1950 was nearly as expensive as the previous twenty centuries. Both graphs show what will happen if energy consumption per person increases at 3 per cent and also at 5 per cent per year. These two rates are the limits, set by the likely increase of population and industrialization, between which the actual increase is expected to lie. Over the period 1931 to 1950 the rate of increase was 3 per cent, higher than in the previous period. The rate of increase was, however, far greater for industrialized nations.

Slides

Slides 9.1 and 9.2 give information about world energy reserves, production and consumption.

Slides 9.3 and 9.4 show world energy consumption graphically as in figures 10 and 11.

Slides 9.5 to 9.7 give further information about the growth of energy consumption which might be helpful.

(For details, see the slide list.)

Q2 At a 5 per cent rate of growth of demand, when will the first line of reserves run out? (How old will you be?)

Q3 At a 3 per cent rate of growth of demand, when will the first line of reserves run out? (What will be the age of children now being born?)

Q4 What is the shape of a graph of quantity against time, where there is a fixed percentage increase per annum in that quantity?
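Questions 1 to 3 can be explored with a short calculation. This Python sketch assumes that total world demand starts at the 1964 figure of 0.14 Q per year and then grows by a fixed percentage every year, so that each year's consumption follows an exponential curve; the cumulative total is then a geometric series, which can be solved for the number of years. The reserve figure is the first-column total of Table 1. It is a rough model under those stated assumptions, not a forecast.

```python
import math

FIRST_LINE_RESERVES_Q = 27.4   # Table 1, first column total
USE_1964_Q = 0.14              # world consumption in 1964, Q per year

def years_to_exhaust(reserve, first_year_use, growth):
    """Years until cumulative consumption equals the reserve.

    Assumes consumption in year k (k = 0, 1, 2, ...) is
    first_year_use * (1 + growth)**k, so the total after T years is the
    geometric series first_year_use * ((1 + growth)**T - 1) / growth.
    Setting that equal to the reserve and solving for T gives the formula below.
    """
    if growth == 0:
        return reserve / first_year_use
    return math.log(1 + reserve * growth / first_year_use) / math.log(1 + growth)

for growth in (0.0, 0.03, 0.05):
    years = years_to_exhaust(FIRST_LINE_RESERVES_Q, USE_1964_Q, growth)
    print(f"{growth:.0%} growth: about {years:.0f} years, i.e. around {1964 + years:.0f}")
```

With these assumptions the first line of reserves lasts roughly 196 years at a constant rate, but only about 65 years at 3 per cent growth and about 49 years at 5 per cent growth.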

The first line of reserves will not last very long. Even the second line of reserves (and it is not known if we can tap them) does not improve the picture very much. A further few hundred years or so and these reserves too would be gone. It is possible that the people of the twenty-first century will regard us as terrible spendthrifts.

Why worry - energy is conserved?

Why should we worry about running out of fuels? Energy cannot be lost; it has merely been transferred from natural gas, coal, or oil, and oxygen, to warm the atmosphere. Why can we not use this energy, assuming we could trap it? There is a link here with Part One: burning was definitely one of the one-way processes. When fuel is burned, something irreversible has been done. Having done it, one can never go back.

Energy resources other than fossil fuels

The Sun delivers about 3000 Q every year to the surface of the Earth, but the energy is very much spread out, being roughly a few hundred watts to each square metre.
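A quick check shows how thinly this energy is spread. The sketch below takes the 3000 Q per year quoted above and divides it by the number of seconds in a year and by the area of the whole Earth's surface; the Earth's mean radius of 6.37 × 10⁶ m is an assumed standard value, not a figure from the text. Averaged over the whole globe and over day and night, it comes out at a little under 200 W per square metre; a sunlit surface near midday receives considerably more.

```python
import math

Q = 1e21                              # joules
SUN_AT_SURFACE_PER_YEAR = 3000 * Q    # figure quoted in the text
SECONDS_PER_YEAR = 365.25 * 24 * 3600
EARTH_RADIUS = 6.37e6                 # metres (assumed mean radius)

area = 4 * math.pi * EARTH_RADIUS ** 2              # whole surface of the Earth
mean_power = SUN_AT_SURFACE_PER_YEAR / SECONDS_PER_YEAR
flux = mean_power / area                            # W per m², day and night averaged

print(f"mean solar power at the surface: {mean_power:.1e} W")
print(f"averaged over the whole surface: {flux:.0f} W per square metre")
print(f"ratio to 1964 world fuel use (0.14 Q): {3000 / 0.14:.0f}")
```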

This flow of energy can be tapped without using up the store of fuel. The main way of using it is by hydro-electric power generation, but at present this source provides only about 1 per cent of world consumption. It is hard to imagine hydro-electric power being expanded enough to take over from other sources.

The available stock of nuclear fuels in the Earth offers more hope, for a while at least, of our not running out in the near future. If fuel can be bred in reactors from low-grade materials, then the reserves of uranium and thorium may last for a long time. The disposal of the radioactive wastes will be a severe problem, however. If it becomes possible to make hydrogen fuse into helium in a controlled way, as in the reaction that fuels the Sun, then the energy future looks brighter. (The reaction that fuels the Sun is complex, but the above is its net effect. Proposed earthbound reactions involve the use of isotopes of hydrogen.)

Reading

Angrist and Hepler, Order and chaos, Chapter 4.

Ubbelohde, Man and energy, Chapters 4, 5, 7.

Cars and power stations

The motor car is one symbol of industrial society, and it is a great user of energy. The questions that follow invite you to make guesses about the energy used by cars and the energy supplied by power stations. (A professional physicist often has to make rough guesses. It is part of his scientific skill to make those guesses as well as he can, and also to have some idea of how good or bad the guesses may be.)

Q5 Guess how many cars there are in Great Britain. Assume that the population is about 50 million (in fact, the 1971 census calculated it at about 55½ million). What might be the average number of cars per family?

Q6 Guess how much total power there is installed in these cars. (One horsepower is three-quarters of a kilowatt.)

Q7 Guess how much power is installed in power stations in Great Britain. Will it be more or less than the car-power?

Q8 Guess how much petrol the cars would use in a year. How much energy is involved?

(One gallon of petrol is about 4 kg. One tonne of petrol is equivalent to 1.7 tonnes of coal. One tonne of coal is equivalent to 2.8 × 10¹⁰ joules.)
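The conversions above can be chained together for questions 6 and 8. In this Python sketch the conversion factors are the ones given in the text; the number of cars, the average horsepower and the petrol used per car are hypothetical placeholder guesses, there only to be replaced by your own estimates.

```python
# Conversion factors given in the text.
KG_PER_GALLON_PETROL = 4.0
COAL_TONNES_PER_PETROL_TONNE = 1.7
J_PER_TONNE_COAL = 2.8e10
KW_PER_HORSEPOWER = 0.75

def petrol_energy_j(gallons):
    """Energy equivalent of a volume of petrol, via its coal equivalent."""
    tonnes_petrol = gallons * KG_PER_GALLON_PETROL / 1000
    return tonnes_petrol * COAL_TONNES_PER_PETROL_TONNE * J_PER_TONNE_COAL

def installed_power_w(cars, horsepower_each):
    """Total installed engine power, in watts."""
    return cars * horsepower_each * KW_PER_HORSEPOWER * 1000

# Hypothetical guesses, not data: replace with the class's own estimates.
cars = 12e6              # number of cars in Great Britain
horsepower_each = 40     # average engine power per car
gallons_per_car = 300    # petrol used per car per year

print(f"installed car power: {installed_power_w(cars, horsepower_each):.1e} W")
print(f"petrol energy per year: {petrol_energy_j(cars * gallons_per_car):.1e} J")
```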

How well was this energy used? The energy accounts for cars suggest that they are rather wasteful. Typical figures are given in table 2 below.

| Process using energy | Percentage used | Subtotal |
|---|---|---|
| wind resistance | 10 | 20 |
| road friction | 10 | |
| transmission, generator, fan, pumps, etc. | 5 | 5 |
| radiator and engine heat losses | 35 | 75 |
| exhaust, oil, and other losses | 40 | |

Table 2: Use of energy by a car driven at a steady speed, say 80 kilometres per hour.

From Burke et al. (1957) Trans. SAE, 65, page 715.

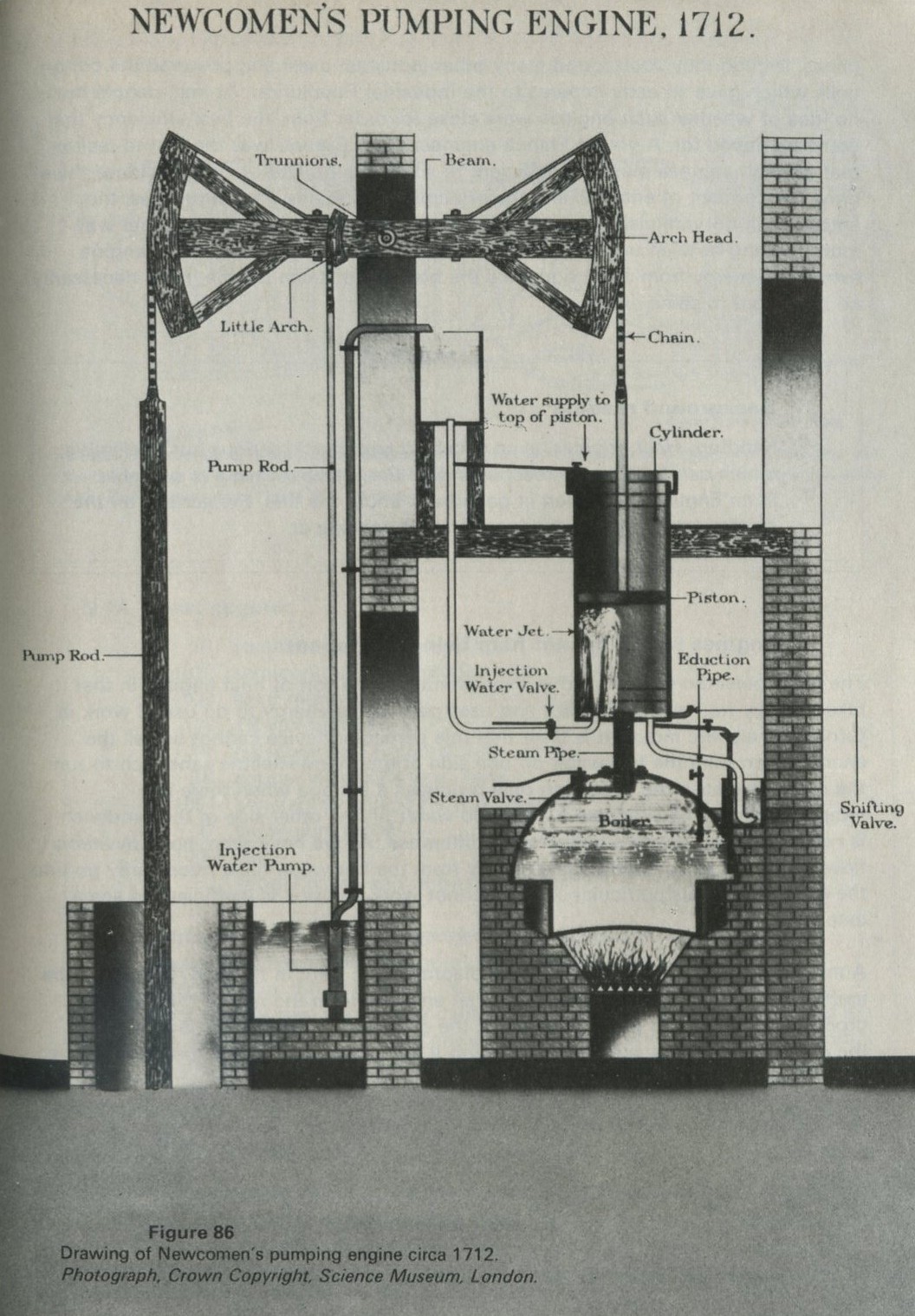

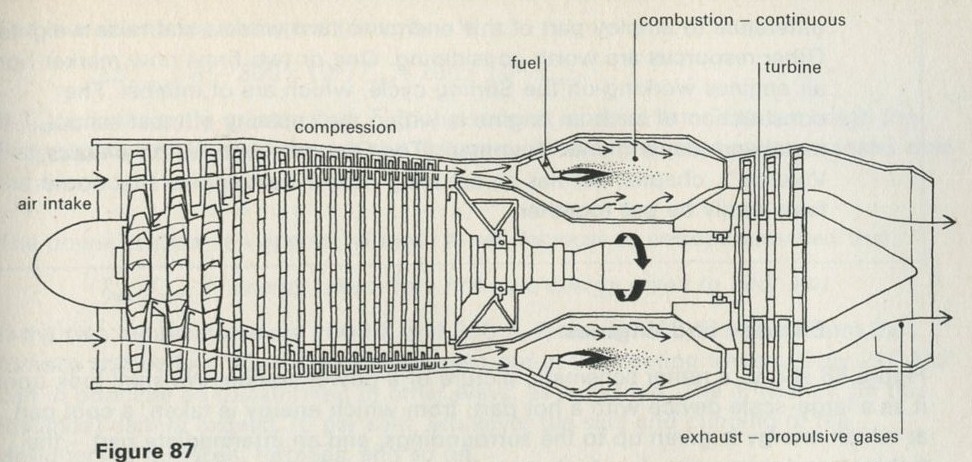

Only about 20 per cent of the power of a car's engine is used to move the car along. Three-quarters of the fuel energy goes directly to heat up the atmosphere. The study of heat and molecules will show, remarkably enough, that this regrettable state of affairs is not due to incompetence on the part of designers of engines. We shall see that it is inevitable that some heat is thrown away. See Inefficient engines.

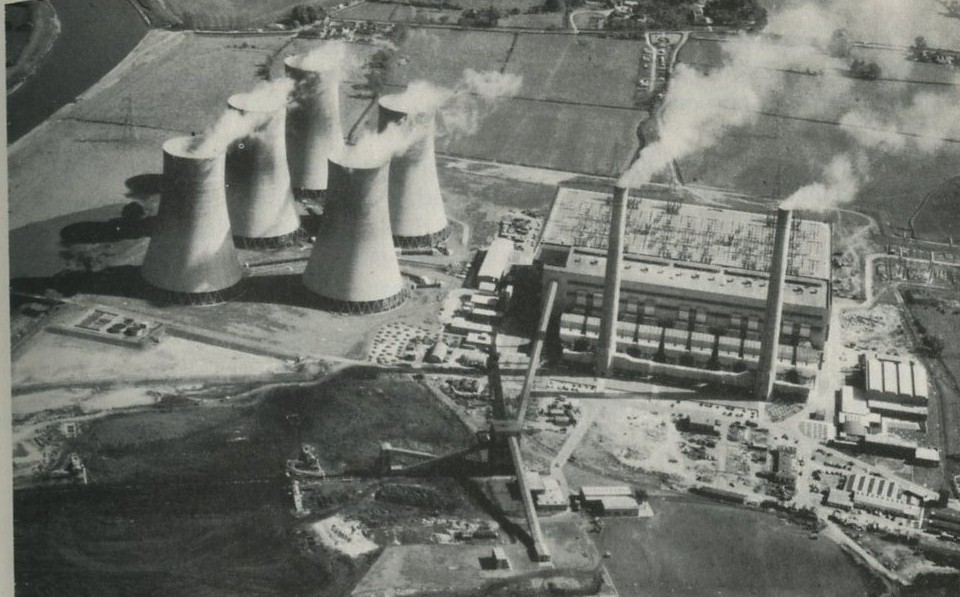

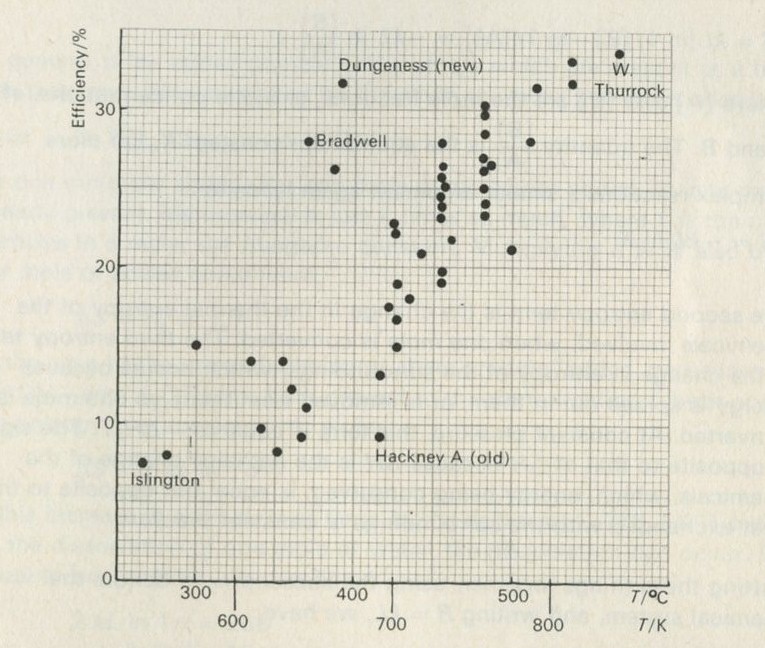

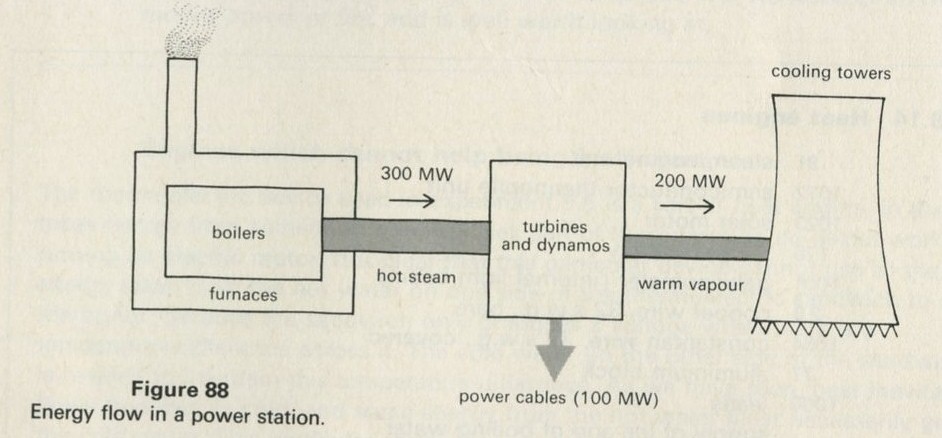

Power stations also throw heat away; indeed most people recognize power stations by their vast cooling towers. (Figure 12.)

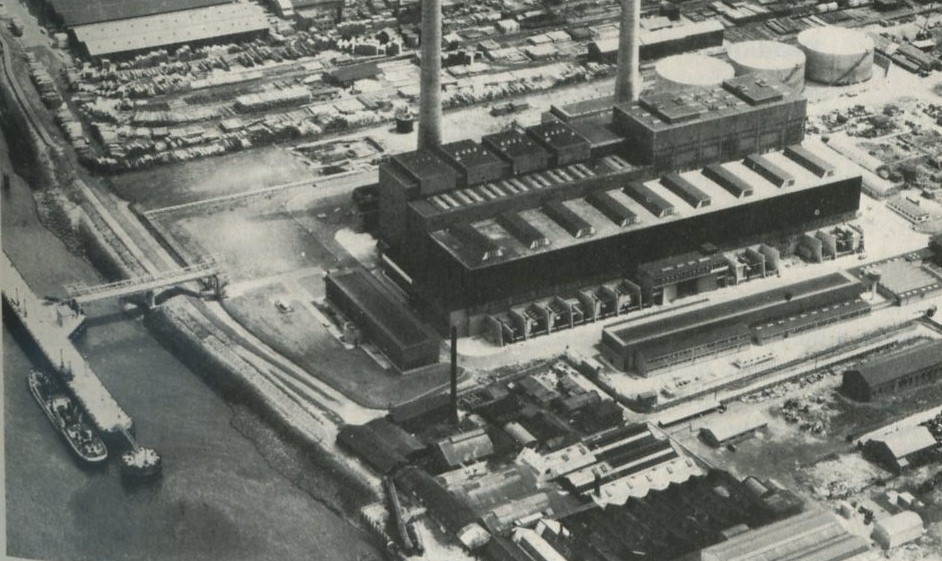

Q9 Figure 13 shows a power station with no cooling towers. How does this power station get rid of its exhaust heat?

Fortunately, power stations are more efficient than cars, and the best of them convert nearly 40 per cent of the fuel energy to electrical energy. In 1925, the figure for an average power station was nearer 20 per cent. Possible further improvement in the efficiency of power generation is one important reason why people are interested in thermodynamics.

Q10 A question to think about. What might be some of the effects on the environment, and on the world as a whole, of the exhaust heat of the power stations needed to cope with the energy demand?

Slides

Slide 9.9 shows the growth in the number of cars in Britain.

Slide 9.10 presents the data on the energy account for a car.

Slides 9.11 and 9.12 show the power stations also shown in figures 12 and 13.

Supplementary material

This concludes the work we suggest for this Part. The extra suggestions that follow are intended for those who can find extra time and who wish to follow up an interest.

Figures 12: A power station, with cooling towers (High Marnham). Photograph, Central Electricity Generating Board.


Figures 13: A power station, without cooling towers (Belvedere). Photograph, Central Electricity Generating Board.


How men create energy

In primitive societies, most men can just grow enough food to feed themselves, and gather enough fuel to warm themselves. There is little left over from their basic needs for other purposes.

The beginning of coal mining made an important change in this situation. The energy available from burning the coal mined by one man was some 500 times larger than the food-energy needed to keep that miner healthy and working. The coal could then be used in engines to do more work; each man-day of a miner's effort created the equivalent of several more man-days of work which could not otherwise have been obtained.

This may sound desirable, but it has some social consequences that are being felt more and more. All that unskilled labour can offer is energy, and alternative sources of cheap energy leave the unskilled with little to offer in competition.

Q11 Guess how much work-energy a man can produce in a working day.

Q12 How much would an Electricity Board charge for supplying as much energy as the work-energy produced by a man in a day?

Q13 What would be some of the social consequences of always using the cheapest source of energy?
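Questions 11 and 12 reduce to two multiplications once a guess has been made. This Python sketch assumes a sustained mechanical output of about 75 W over an 8-hour working day and a hypothetical tariff of 1 (penny) per kilowatt-hour; both numbers are illustrative assumptions, not figures from the text, and should be replaced by your own.

```python
J_PER_KWH = 3.6e6   # one kilowatt-hour ("unit") in joules

def work_energy_j(power_w, hours):
    """Energy delivered at a steady power for a number of hours."""
    return power_w * hours * 3600

def electricity_cost(energy_j, price_per_kwh):
    """Cost of buying the same energy from the mains at a given price per unit."""
    return energy_j / J_PER_KWH * price_per_kwh

# Assumptions: about 75 W sustained for 8 hours; hypothetical 1p per unit.
day = work_energy_j(75, 8)
print(f"work in a day: {day:.2e} J = {day / J_PER_KWH:.2f} kWh")
print(f"cost at 1p per unit: {electricity_cost(day, 1.0):.2f}p")
```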

Reading

Angrist and Hepler, Order and chaos, pages 79-83.

Ubbelohde, Man and energy, pages 88-93.

Feeding the world

The conservation of energy makes no exception for men. Just to stay alive and warm, a person needs perhaps 5 × 10⁶ J of food-fuel every day. More is needed if he is to be able to do any work, or even just to be able to move around; say 10⁷ J every day. This food-fuel has to be grown, and its energy comes ultimately from the Sun, which delivers about 6 × 10²² J, or 60 Q, to the top of the atmosphere every day. Not all the energy reaching the top of the atmosphere reaches the Earth's surface: the amount that does so is about 30 Q every day. Less than half of this energy, say 10 Q, is available for photosynthesis. The present plant populations on land and at sea use about 1/5000 of this energy in photosynthesis, because they cannot possibly catch and use it all. So the Earth can produce roughly 2 × 10¹⁸ edible joules (2 × 10⁻³ Q) per day.

Figures 14


Data from Thompson, W. S., 5th revised edition (1965) Population problems, McGraw-Hill.

If human beings ate all this food, each using 10⁷ J per day, the maximum population would be 2 × 10¹⁸/10⁷, or 2 × 10¹¹ people, about 100 times the present population. Certainly, one could argue that there is scope to use more of the energy available for photosynthesis, so that a larger population could be supported. But one must also remember that the above is the very crudest sort of calculation, which does appalling violence to such a complex system as the interlocking life forms on the Earth's surface. It allows nothing for other animals or for plant life which does not contribute to food. Quite apart from the moral questions raised by the destruction of other species in order that a large population of men can exist, such other species are essential to our survival.

The oxygen in the atmosphere is continually regenerated by plants, most of it by primitive sea plants. The nitrogen, needed for growing food, is regenerated by chains of different types of bacteria. All these must be fed, too. Men lack the ability to produce an enzyme capable of digesting cellulose, and we rely on grass-eating animals to do it for us, eating them in turn.

Nor could a large population use as much energy as it might wish for heat, light, and industry (and the above calculation leaves no solar energy to spare for this). Only 10¹⁰ people, using energy at the rate now current in the U.S.A., would deliver enough energy to warm up the Earth and sea at a rate equal to about 0.1 per cent of the rate at which energy arrives from the Sun (unless the energy came direct from the Sun, via solar cells converting it to electricity, in which case this energy would have been delivered to the Earth anyway). This extra energy would ultimately warm up the Earth and would, before too long, raise the Earth's temperature to the point at which the polar ice caps would melt; Moscow would then become a seaport, and London and New York would be under water.
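The 0.1 per cent figure can be checked from numbers already given in this Part. This Python sketch uses the North American consumption of 8.6 tonnes of coal-equivalent per person (standing in for the U.S.A. rate), the conversion 1 tonne of coal = 2.8 × 10¹⁰ J, and the 3000 Q per year reaching the Earth's surface; treating the North American figure as the U.S.A. rate is an assumption.

```python
Q = 1e21                          # joules
J_PER_TONNE_COAL = 2.8e10         # conversion given with the car questions
TONNES_PER_PERSON_PER_YEAR = 8.6  # North American figure for 1964 (assumed ~ U.S.A. rate)
SUN_AT_SURFACE_Q_PER_YEAR = 3000  # from the section on energy resources
people = 1e10

human_q_per_year = people * TONNES_PER_PERSON_PER_YEAR * J_PER_TONNE_COAL / Q
fraction = human_q_per_year / SUN_AT_SURFACE_Q_PER_YEAR

print(f"energy released by people: {human_q_per_year:.1f} Q per year")
print(f"fraction of solar input: {fraction:.2%}")
```

The result is about 2.4 Q per year, or roughly 0.08 per cent of the solar input, in line with the text's "about 0.1 per cent".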

Whatever one's view of the population question, there is no escaping the fact that there does exist a maximum human population that the Earth can support. Those who think we are close to it already will put population control at the top of the list of priorities facing mankind.

Reading

Angrist and Hepler, Order and chaos, Chapter 6.

3. Chance and diffusion

'There are few laws more precise than those of perfect molecular chaos.'

Professor George Porter. From 'The laws of disorder' (1965) BBC Publications.

'The probable is what usually happens.'

Aristotle.

Timing

In this Part, simple quantitative ideas about counting numbers of ways are introduced. With experts, the matter could be disposed of in half an hour. The difficulty for a student is not that the calculations are hard, but that what is going on and why it is being done are not obvious, and that time is needed to learn a new style of thinking.

Some students, especially those who have met the ideas elsewhere, will need little time. Others will need more, but the substance of Part Three is too slight to support more than about half a dozen lessons. It should be remembered that this Part serves to introduce ideas which will be used, and so revised, in later Parts.

The purpose of the discussion about chance and diffusion

This Part is, to some extent, a digression from our main theme, which is an understanding of the flow of heat and thermal equilibrium. Its purpose is to prepare the way for that understanding, which will come out of questions about the random sharing of energy amongst atoms. These matters, discussed in Parts Four and Five, are quite subtle, though they are far-reaching in their consequences.

In Part Three, therefore, we look at how similar ideas can be applied to a simpler, if less important, problem: the mixing of one substance with another. The results of Part Three are not, however, all trivial. Most chemical reactions involve the mixing of substances, and this fact has direct consequences for the way the progress of a reaction depends upon the proportions in which each substance is present. Some of these consequences are drawn out in Part Six, which deals with applications of the ideas developed in the rest of the Unit.

In Part Three, we also introduce one other idea we shall use later. This is the notion of using dice-throwing games to imitate some features of the behaviour of systems which are subject to chance. This tactic makes it possible to find out how a system behaves by a mathematical experiment, so reducing the need for mathematical calculations.

Diffusion and mixing as one-way processes

As in Part One, there is something to be learned about the one-way nature of some processes by watching films of events, run backwards. While studying Part One, you may have seen the filmed episodes which we shall now discuss in some detail.

Film loop

The loop appropriate here is 'Forwards or backwards? 3'. The three events it shows are summarized in Film Loops.

Most mornings, the writer, while helping to get breakfast ready, pours hot milk into a jug which has been heated by having had hot water in it. On occasional bad days, he forgets to pour away the hot water before putting the milk in the jug. No doubt the feeling of tragic helplessness this always produces in him is partly the consequence of his half-awake early-morning state of mind, but it also owes something to the impossibility of undoing what has been done, in any simple way. The mixing of milk and water happens all on its own, without any assistance or encouragement, but unmixing them is a different matter altogether.

A film of the mixing of ink and water, shown in reverse, reinforces the point by the very absurdity of what one seems to see. The question is why such spontaneous unmixing looks absurd. Why is it one of those things which never happen? Although it is not at all hard to say more or less why spontaneous unmixing of this kind does not happen, or is at least most unlikely, it turns out that a good, clear account of this rather trivial matter can be used to help in the discussion of much harder matters, like the flow of heat. So it is worth pursuing a little further.

Spontaneous unmixing which does happen

Silt does separate from muddy water, and cream does rise to the top of a milk bottle. The unmixing of the two lots of balls in Event B in the film loop described above would not be absurd if one lot of balls were denser than the other. Such issues may need to be dealt with if they arise. Notice first that none of them would happen in a spacecraft, free of gravitational fields (or the imitation field produced by centrifuging). The falling silt particles, or the rising (low density) fat globules in cream, both transfer energy from gravitational potential energy to (ultimately) energy shared among all the atoms and molecules in the system. The water or milk becomes a shade warmer as the separation occurs. Separation does occur if the increase in the number of ways of sharing, among many molecules, the extra internal energy (no longer stored as gravitational potential energy) outweighs, as a factor, the decrease in the number of ways of arranging the silt or fat particles that unmixing brings about.

A collection of many two-way processes can look one-way

A swinging pendulum with a massive bob, hung from a stout beam, is about as near as one can easily get in a school laboratory to a process which looks just as good if it is shown as a backwards-running film, as it does if the film goes forwards. Apart from a little damping, the pendulum's energy is not spread around amongst the molecules of nearby material, and its motion is nearly two-way.

A row of such pendula can be hung in a line. If they are not connected together, and the beam from which they hang is rigid, no one pendulum affects any other, and each one continues its undisturbed two-way motion if it is set swinging. Now suppose one saw such a row made of pendula of different lengths, swinging in no special relationship to one another. Then suppose that as one watched, all the pendula gradually began to come together, and all at one moment rose exactly together to their greatest height on one side. If one wasn't dreaming, the only way it could have been achieved deliberately would be for someone to have worked it all out in advance, and then set each pendulum swinging with just the right motion so that, in the end, as all the swings changed their relationships to one another, the pendula swung together for a moment.

To achieve all this would have been a considerable feat of ingenuity. As you may have seen, another way to make it seem to happen is to start the pendula off together, film their motion, and then show the film backwards. To an excellent degree of accuracy, no one pendulum motion seen on the reversed film is impossible: the motion of a pendulum which is not damped is just as possible either way round. But the collection of many motions, every one of which is two-way by itself, looks decidedly one-way. The effect depends solely on large numbers.

The effect is not one to puzzle over unduly, though it is worth seeing, if only for its visual charm. If one wants to, one can find some quite deep puzzles in it, which have concerned a number of physicists and mathematicians. For example, wouldn't the pendula come together if one waited long enough? Can a large number of truly reversible processes really add up to something irreversible, or is this just an effect of what one expects to see, not of what one ought to expect? But none of these are puzzles you need to follow up now, unless you want to.
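The filmed pendula can be imitated numerically. This Python sketch assumes a hypothetical row of 50 undamped, small-amplitude pendula, 0.50 m to 0.99 m long, all released together from the same side at t = 0, so that each angle is proportional to cos(ωt) with ω = √(g/L). The "alignment" used here is an illustrative measure of our own, the average of cos(ωt) over the row: it is 1 only when every pendulum is at full swing on the starting side at once. Each pendulum's motion is exactly reversible, yet the forward "film" starts aligned and quickly becomes muddled, while the reversed film ends with everything swinging together.

```python
import math

G = 9.81  # gravitational field strength, m/s^2

def alignment(lengths, t):
    """Average of cos(omega * t) over the pendula, omega = sqrt(G / L).

    Equals 1 only when every pendulum is at full swing on the starting side.
    """
    return sum(math.cos(math.sqrt(G / L) * t) for L in lengths) / len(lengths)

def film(lengths, duration, dt):
    """Alignment at each 'frame' from t = 0 to t = duration."""
    steps = int(duration / dt)
    return [alignment(lengths, k * dt) for k in range(steps + 1)]

# Hypothetical row of 50 pendula, 0.50 m to 0.99 m long.
lengths = [0.50 + 0.01 * k for k in range(50)]

forwards = film(lengths, duration=120.0, dt=0.5)
backwards = forwards[::-1]   # the same frames, shown in reverse

print("forwards, first frames:", [round(a, 2) for a in forwards[:5]])
print("backwards, last frames:", [round(a, 2) for a in backwards[-5:]])
```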

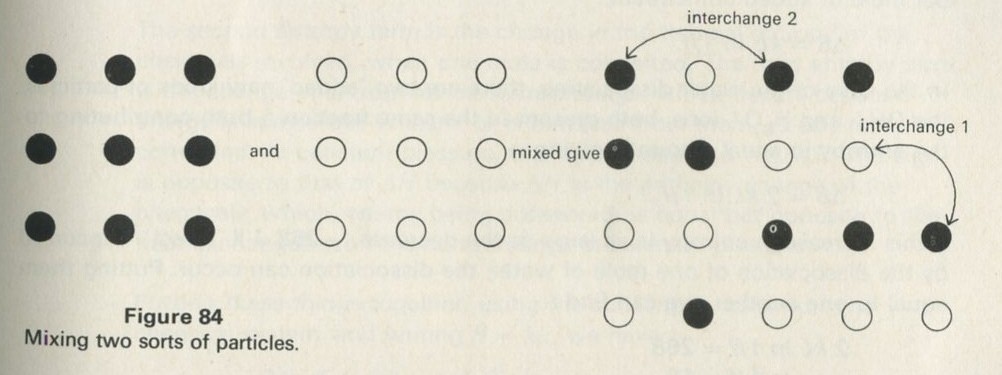

Large numbers and small numbers

The particular aspect of mixing which we shall follow up is that aspect which does not involve energy in any way, but simply the muddling together or sorting out of particles among one another, or the spreading out of particles into unoccupied spaces. A simple system which illustrates this aspect is a box containing two sorts of coloured balls. The balls differ only in having one colour or the other, and in no other way. If the box is shaken after the balls have been sorted so that those of each colour are together, everyone knows what to expect. The balls become mixed, and the process seen in reverse looks very strange; no less strange than the act of a conjurer who shuffles a pack of cards and ends up with them in perfect sequence.

What is interesting and illuminating is to see the same shaking process when there are only a few balls in the box, say two of each colour. The constant interchange amongst the balls now looks just as reasonable in reverse as it does in the real-life direction. The mixing of many balls is a one-way process, but the mixing of just a few is not. The number of objects involved is an important factor in deciding whether a process is one-way or not.

Q1 When a box containing many marbles of two colours is shaken, is the reason why they do not sort themselves out that they cannot do so?

Q2 Shaking the box containing many marbles rearranges the marbles among each other, going from one way of arranging them to another, and to another. Would you say that there is just one way, or that there are a few ways, or that there are many ways of rearranging them, such that you would say that the marbles were well mixed?

Q3 There is at least one and perhaps there are many possible ways of arranging the marbles so that anyone would agree that they were pretty well sorted out into the two colours. Would you say there were more than, fewer than, or the same number of ways of doing this as of achieving arrangements which one would naturally call muddled or well mixed?
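How strongly the number of balls matters can be seen by counting. This Python sketch uses a simplified, hypothetical model: equal numbers of dark and light balls sit in a single row of places, interchanges of like-coloured balls are not counted as new arrangements, and "sorted" means all the dark balls are on one side. It also assumes each shake gives an independent arrangement, all arrangements being equally likely.

```python
from math import comb

def sorted_fraction(n_each):
    """Fraction of arrangements that are fully sorted.

    n_each dark and n_each light balls occupy 2 * n_each places in a row,
    giving comb(2 * n_each, n_each) distinct arrangements. Exactly two are
    fully sorted: all dark on the left, or all dark on the right.
    """
    return 2 / comb(2 * n_each, n_each)

for n in (2, 5, 10, 50):
    print(f"{n} of each colour: sorted about once in {1 / sorted_fraction(n):.3g} shakes")
```

With two of each colour the balls are sorted about one shake in three; with fifty of each, practically never.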

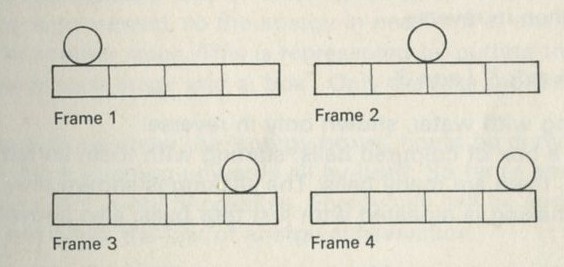

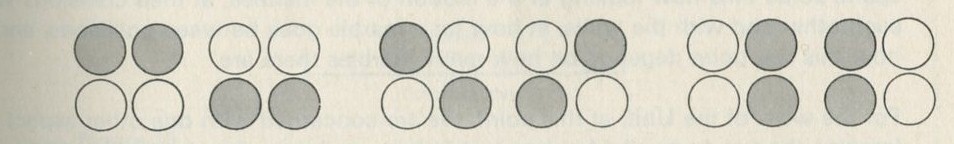

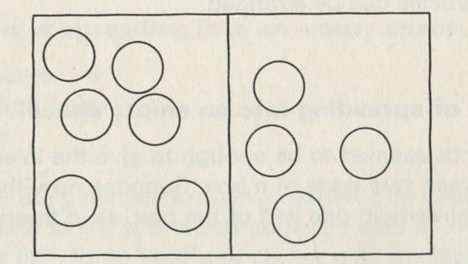

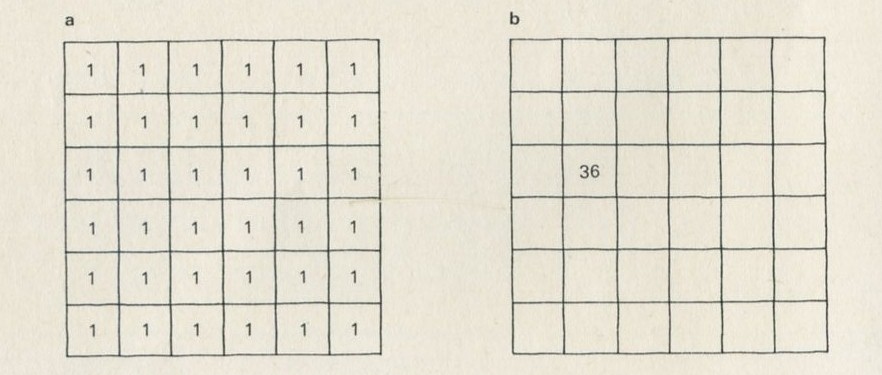

If just four marbles are shaken so as to stay in a two-by-two array, but so as to interchange places, all the possible ways of arranging them can be set down, as in figure 15 (ignoring interchanges of similarly coloured marbles).

Figure 15: Patterns of arrangement of four marbles, having two colours.


One could say, of the two ways shown on the left in figure 15, and of only these two, that the marbles are vertically sorted. If you shook the marbles and then inspected them, they would have to be arranged in one of the ways shown. Suppose that the marbles are smooth and round, and that nothing favours one way of arranging them over another.

Q4 If they start off in one of the vertically sorted ways, and are shaken, could they ever, by chance, end up again in one of the vertically sorted ways?

Q5 If you shook them, and inspected them a great many times, about how often might you expect to see them vertically sorted?

Q6 Why, in question 5, would you not expect to see them vertically sorted quite as often as in some other pattern?
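The patterns of figure 15 can be listed by a program rather than by hand. This Python sketch describes each pattern by the two places, in the two-by-two array, occupied by the dark marbles; swapping like-coloured marbles gives nothing new, so choosing 2 of the 4 places is enough. We assume here that "vertically sorted" means each column holds a single colour; with the other reading (each row a single colour) the count is the same.

```python
from collections import Counter
from itertools import combinations

POSITIONS = ("top-left", "top-right", "bottom-left", "bottom-right")
LEFT = {"top-left", "bottom-left"}
RIGHT = {"top-right", "bottom-right"}
TOP = {"top-left", "top-right"}
BOTTOM = {"bottom-left", "bottom-right"}

def patterns():
    """Every distinct pattern, given by where the two dark marbles sit."""
    return [set(pair) for pair in combinations(POSITIONS, 2)]

def classify(dark):
    if dark in (LEFT, RIGHT):
        return "vertically sorted"      # each column a single colour (assumed meaning)
    if dark in (TOP, BOTTOM):
        return "horizontally sorted"
    return "diagonal"

counts = Counter(classify(p) for p in patterns())
total = sum(counts.values())
for kind, n in counts.items():
    print(f"{kind}: {n} of {total} patterns")
```

If nothing favours one pattern over another, a vertically sorted pattern should turn up in about one inspection in three.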

Chance is enough to work out what happens on average

In discussing the interchanges of four marbles (questions 4 to 6) we almost casually introduced the idea that one might reasonably suppose that nothing would favour any one of the ways of arranging the marbles (shown in figure 15) over any other way. We now propose to take this idea more seriously: indeed it is the key to understanding the work of the whole Unit.

Optional experiment: 9.2 Random motion of marbles in a tray

Much can be learned from watching marbles rolling about at random in a shallow tray. If for any reason you have not done so before, you would be well advised to spend some time now looking at the motion of the marbles, at their collisions with each other and with the walls, at how far a marble goes between collisions, and at how this last point depends on how many marbles there are.

For the work of the Unit, at this point, we are concerned with one other aspect. Imagine the tray to be divided into two halves, or draw a line across its middle, and look to see what proportion of the marbles is, on average, to be found in any one half. Look also to see how far the number in one half seems likely to deviate from the average number.

Optional experiment: 9.2 Random motion of marbles in a tray

12 two-dimensional kinetic model kit

optional extras:

133 camera

171 photographic accessories kit

1054 film, monobath combined developer-fixer

slide projector

Shake the tray with about ten marbles in it, keeping the tray level. There should be a line drawn across the middle of the tray, dividing it in half, drawn on a sheet of paper laid in the tray if it is desired not to mark the cork mat.

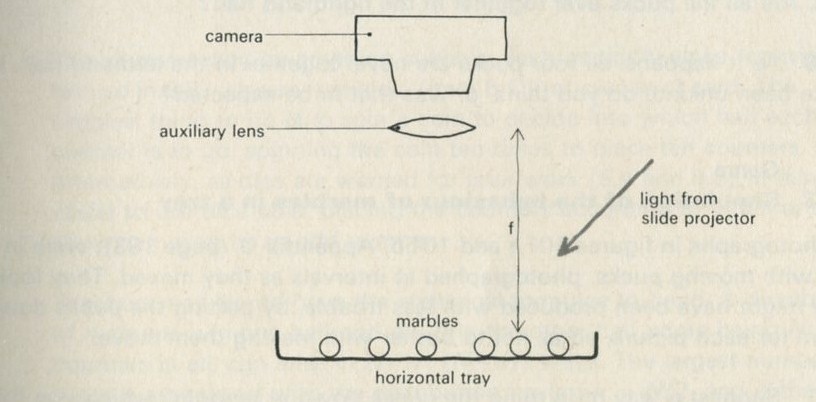

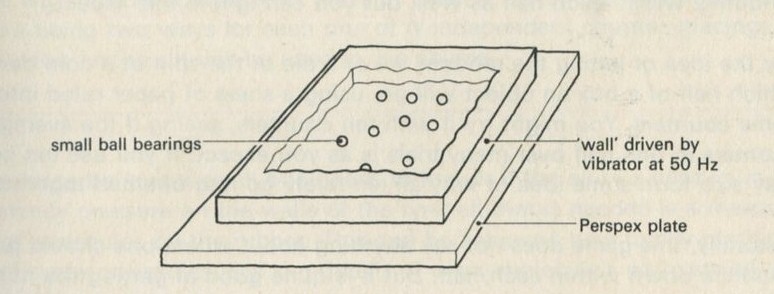

If it will be of interest, photographs like those in figures 101a and 101b, Appendix D, can be taken. The camera may need an auxiliary lens (figure 16), the lens being held with the tray at its principal focus and the camera being focused at infinity. The tray can be shaken by hand or, with more trouble, by a crank driven from a motor. Good results have also been obtained with a Perspex plate, and 1 to 2 mm ball bearings contained within a wall made of a second sheet of Perspex with a rectangular hole cut in it, as in figure 17. The wall is driven at 50 Hz by a vibrator. This device can be fitted on an overhead projector.

Figure 16: Photographing balls rolling in a tray.


Figure 17: Alternative ball-rolling apparatus.


You may, however, feel that you have rolled marbles in trays often enough before. If so, you may prefer to look at the photographs of pucks moving on an almost frictionless surface, reproduced in figures 101 a and b, Appendix D.

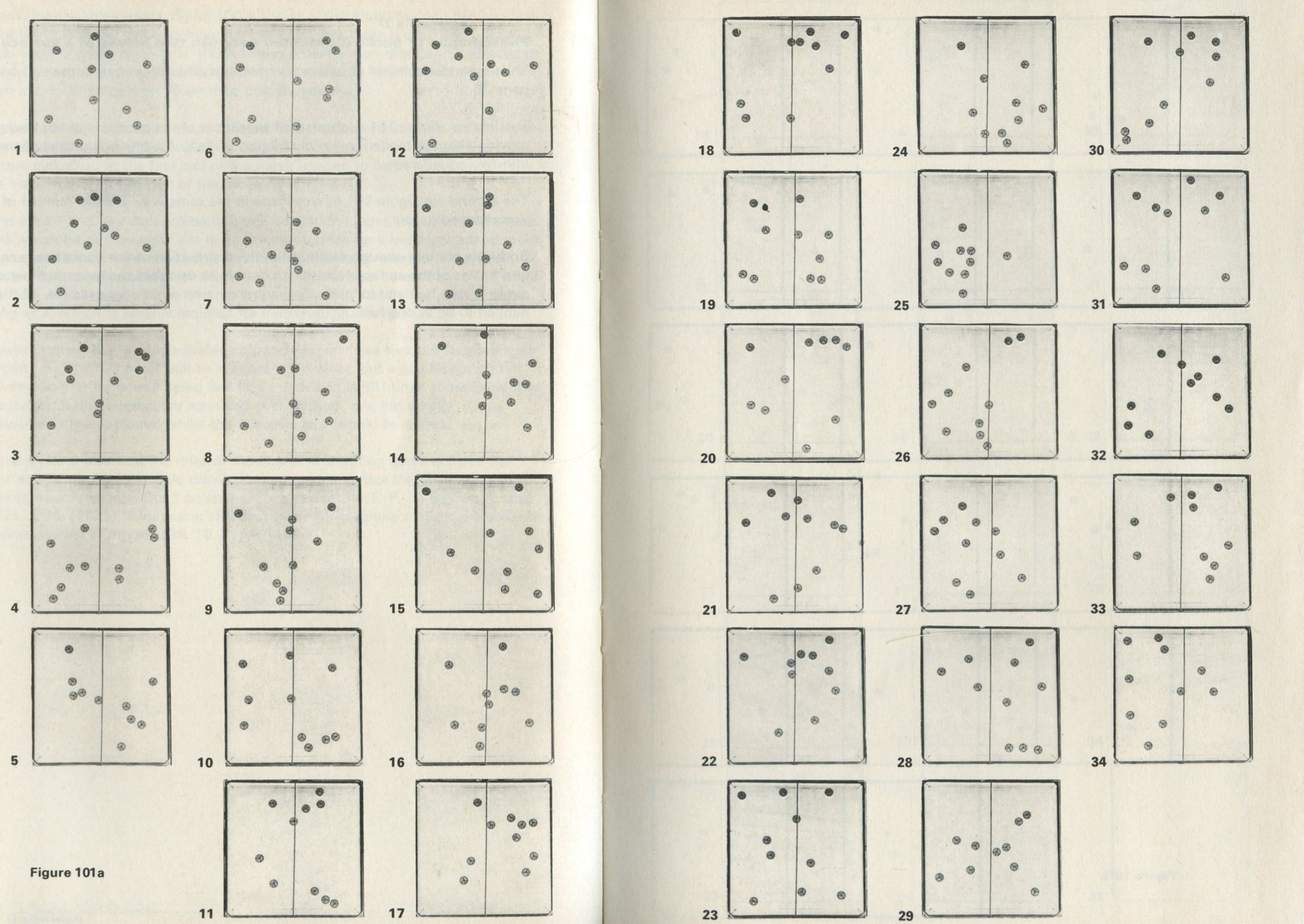

Q7 In figure 101 a, a series of snapshots of ten pucks moving about on a horizontal surface is seen. Are all the pucks ever together in the righthand half?

Q8 What is the average number of pucks on the righthand side? Is it what you expect? (There is a quick way to find the average. Guess the answer, then score 0, + 1, + 2, -1, etc. for each frame, according to whether the number in one half is different from your guess. The average is found from the total of these scores, many of which cancel as you go along, divided by the number of frames, and added to your guessed answer. The better your guess, the easier it is.)

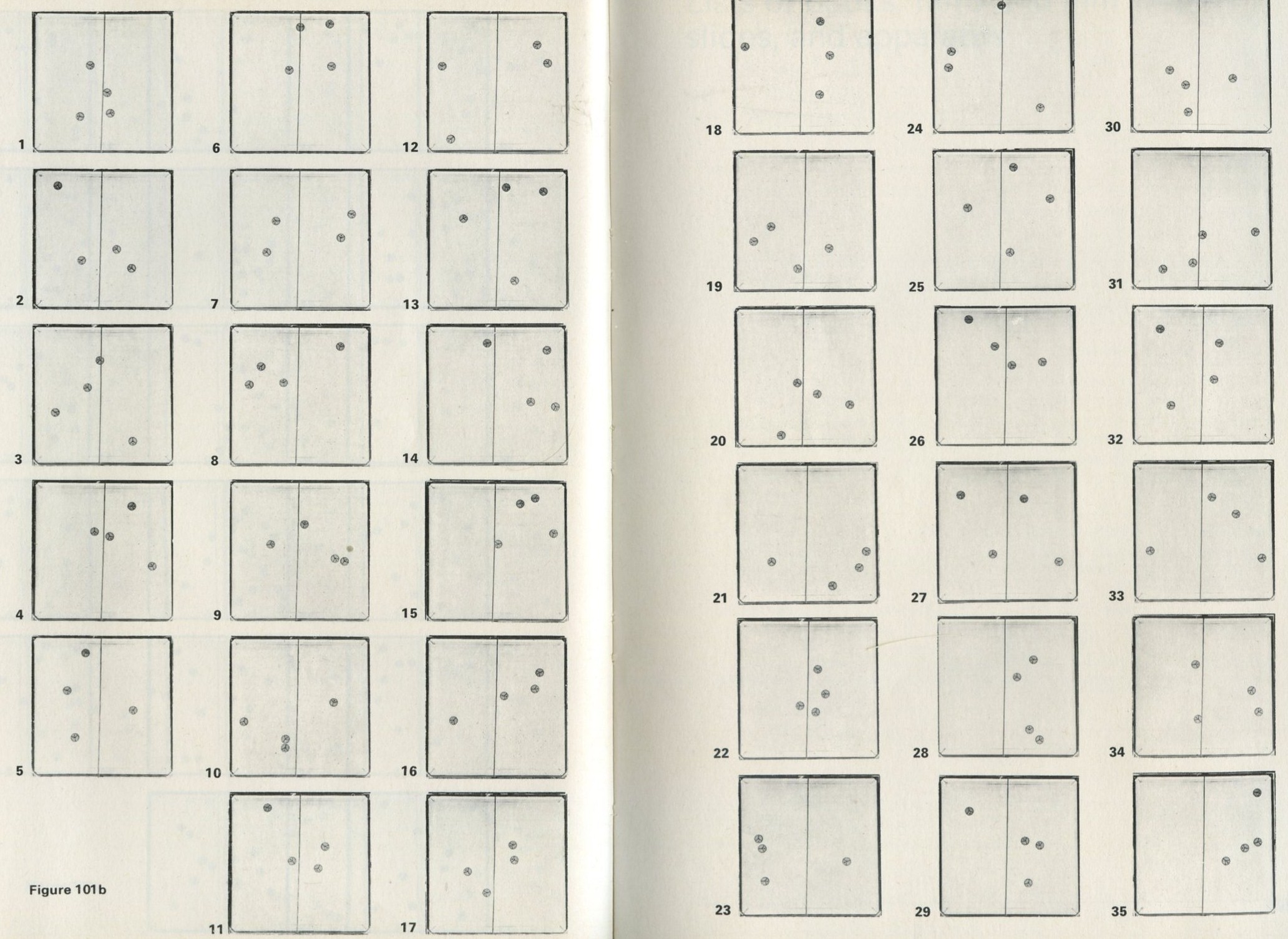

Q9 In figure 101 b, there is a similar set of photographs, but with only four pucks. Are all the pucks ever together in the righthand half?

Q10 As it happens, all four pucks are never together in the lefthand half. Has chance been unkind, do you think, or was that to be expected?
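The quick averaging method of question 8 is easy to state as a procedure. In this Python sketch the per-frame counts are hypothetical, illustrative numbers, not read from figure 101a; the point is that the guessed value cancels out, so any guess gives the true average, and a good guess just keeps the scores small.

```python
def assumed_mean(counts, guess):
    """Question 8's shortcut: average the scores (count - guess), then add the guess back."""
    scores = [c - guess for c in counts]   # 0, +1, +2, -1, ... for each frame
    return guess + sum(scores) / len(counts)

# Hypothetical counts of pucks in the right-hand half, one per frame
# (illustrative numbers only, not taken from figure 101a).
frames = [5, 6, 4, 5, 7, 3, 5, 4, 6, 5]

print(assumed_mean(frames, guess=5))
print(assumed_mean(frames, guess=4))   # a poorer guess: same answer, bigger scores
print(sum(frames) / len(frames))       # ordinary average, for comparison
```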

Game: 9.3 Simulation of the behaviour of marbles in a tray

The photographs in figures 101 a and 101 b, Appendix D, were in fact taken with moving pucks, photographed at intervals as they moved. They look as if they might have been produced with less trouble, by putting the pucks down at random for each picture, so as not to bother with making them move.

Q11 Suggest a way of putting the pucks down at random, which you think ought to divide them between the two halves in the same kind of way as they are divided in the pictures. (To imitate the pictures one would have to place the pucks randomly within each half as well, but you can ignore this aspect.)

Try the idea of letting the random fall of a die or the spin of a coin decide into which half of a box an object will go, using a sheet of paper ruled into two, and some counters. You might try it with ten counters, seeing if the average number of counters in one half over many trials is as you expect. If you use ten counters, you may also form some idea of why all ten rarely go into one half together.

Naturally, this game does not say anything about where one should put the counters down within each half. But it is quite good at getting the numbers in each half right. This is typical of random simulation methods: they often predict average behaviour well, without representing or predicting the detailed behaviour of a system at all.
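Game 9.3 can also be played by a computer, with a pseudo-random number generator standing in for the spinning coin. The sketch below assumes Python's standard `random` module; the function name `play_game_9_3`, the seed and the number of trials are our own choices, not part of the game as described. It records the average number of counters in the left half and how often all ten land there together.

```python
import random

def play_game_9_3(n_counters=10, trials=10_000, seed=1):
    """Place counters at random in two halves of a box, many times over.

    Returns the average number in the left half and the number of
    trials in which every counter landed in the left half.
    """
    rng = random.Random(seed)        # seeded so that a run can be repeated
    left_counts = []
    for _ in range(trials):
        # "Spin a coin" for each counter: True puts it in the left half.
        left = sum(rng.random() < 0.5 for _ in range(n_counters))
        left_counts.append(left)
    average = sum(left_counts) / trials
    all_in_left = left_counts.count(n_counters)
    return average, all_in_left

average, all_in_left = play_game_9_3()
print(f"average number in the left half: {average:.2f}")
print(f"trials with all ten in the left half: {all_in_left} out of 10000")
```

The average comes out close to five. With ten counters there are 1024 equally likely placings, only one of which puts all ten in the left half, so only around ten trials in 10 000 should do so.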

Game: 9.3 Simulation of the behaviour of marbles in a tray

1053 counter 10

1054 graph paper

1055 die or coin

Figure 18

The counters may be anything suitable, such as tiddlywinks (convenient for use in 9.8), square pennies (item 5 C), or pieces of card. The simplest thing to do is to spin a coin to decide into which half each counter is to go, spinning the coin ten times to place ten counters. Alternatively, as dice are wanted for later work (9.4 and 9.8), it may be easier to use dice now, placing the counters according to whether the die shows an even or an odd number.

Teachers may like to have the statistical formulae to hand. With N counters in all, a distribution of n counters in one half and N - n in the other half can arise in $N!/[n!\,(N-n)!]$ ways. The distribution with n = N/2 (for even N) arises in the largest number of ways, and so is the most probable distribution. The two distributions which have all the counters in one half are less likely than any other, each arising in only one way. The total number of ways of placing the counters is simply $2^N$, there being two ways for each one of N independent counter-placings. Only this last result will be used in the course.
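The formula can be tabulated directly. This sketch uses Python's `math.comb`, which computes $N!/[n!\,(N-n)!]$; the helper name `ways` is our own. For ten counters it confirms the three statements above: the largest count at n = N/2, one way each for the two extreme distributions, and a total of $2^N$.

```python
from math import comb

def ways(n, N):
    """Number of ways of having n of N counters in one half: N!/(n!(N-n)!)."""
    return comb(N, n)

N = 10
table = {n: ways(n, N) for n in range(N + 1)}
print(table)
# {0: 1, 1: 10, 2: 45, 3: 120, 4: 210, 5: 252, 6: 210, 7: 120, 8: 45, 9: 10, 10: 1}

assert max(table, key=table.get) == N // 2   # most probable: half in each side
assert table[0] == table[N] == 1             # all in one half: one way each
assert sum(table.values()) == 2 ** N         # total number of ways is 2**N
```

For odd N the two middle distributions tie for the largest number of ways.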

Often, the average behaviour is all one needs to know. If the air in a car tyre is to maintain a steady pressure on the walls of the tyre, all that is needed is a reasonably steady hail of molecules on the rubber. This can be achieved by a totally random movement of many molecules, and the details of every molecule's path, speed, and collisions are of no importance.

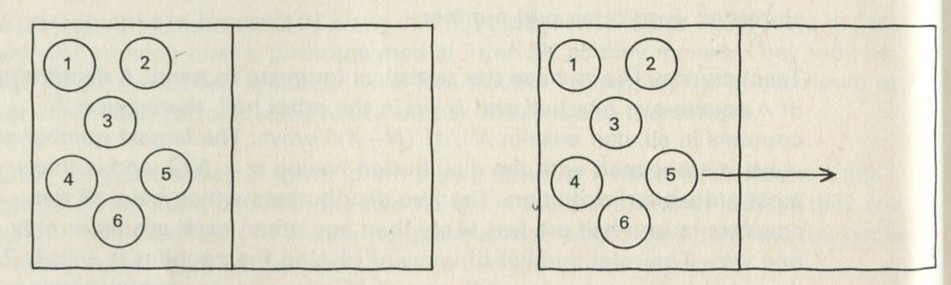

The first game, 9.3, suggests what the average division of pucks or molecules between two halves of a space might be. The next game, 9.4, suggests how one might simulate some aspects of the way a system reaches that more or less steady average behaviour when it starts a long way from it. It is introduced mainly because a later game, 9.8, which is crucial to the whole Unit, uses a similar idea; it will also help in working out a detailed analysis of how the spreading of particles in a mixing or diffusing process depends on the numbers of ways in which the particles can be arranged.

Game: 9.4 Simulation of spreading into an empty space

In the game 9.3, chance seemed to be enough to give the average behaviour of particles divided between two parts of a box. Suppose now that we agree to start with all the counters already in one half of the box, as in figure 19.

Q12 Is the distribution which has all counters in one half near to the average behaviour if the counters can go in either half?

The question is, how might one now use a die to move the counters randomly between the halves of the box? If there are six counters, as in figure 19, and each is numbered, a six-sided die can be thrown and the counter whose number comes up can be moved to the half it is not now in.

Figure 19

Q13 What must happen on the first throw?

Q14 On the second throw, out of the six possible moves, how many will move a second counter to the initially unoccupied half?

Q15 On the second throw, how many of the possible moves will take the first counter moved over (counter number five in figure 19) back again to the full half?

Q16 If everyone in a large class makes two moves, what proportion might end up with all the counters back in the full half?

It is worth going on to make several more moves. Although the die does not know or care when all the counters are in one half, you should find that that distribution arises rather rarely. You should be able to say why it arises infrequently.
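The die-throwing rule of game 9.4 is easy to simulate. In this sketch, Python's `random` module replaces the die, and the function name, seed and number of moves are arbitrary choices of ours. Each throw picks one of the six numbered counters and moves it to the other half; the list records how many counters are in the initially empty half after each move.

```python
import random

def play_game_9_4(moves=200, seed=2):
    """Simulate game 9.4: six numbered counters, all starting in one half.

    Returns, after each move, how many counters are in the half
    that was empty at the start.
    """
    rng = random.Random(seed)                         # stands in for the die
    in_empty_half = {k: False for k in range(1, 7)}   # counters 1 to 6
    history = []
    for _ in range(moves):
        k = rng.randint(1, 6)                         # throw the die (1 to 6 inclusive)
        in_empty_half[k] = not in_empty_half[k]       # move counter k across
        history.append(sum(in_empty_half.values()))
    return history

history = play_game_9_4()
print("moves after which all six were back in the full half:", history.count(0))
print("average number in the initially empty half:", sum(history) / len(history))
```

Because every move changes that number by exactly one, all six counters can be back in the full half only after an even number of moves, and even then the die finds that arrangement only occasionally.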

Game: 9.4 Simulation of spreading into an empty space

1053 counter 6

1054 graph paper

1055 die

The rules of the game are explained above. The counters need to be numbered one to six. It is worth going on until all the counters happen to go into one half, for at least one player, after the first few moves have spread them between the two halves. After the second move, it is good to take a poll of those whose first-moved counter has gone back into the full half.

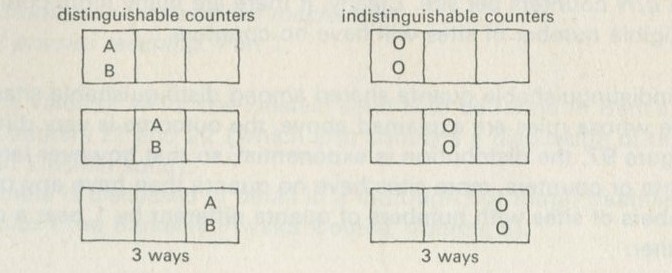

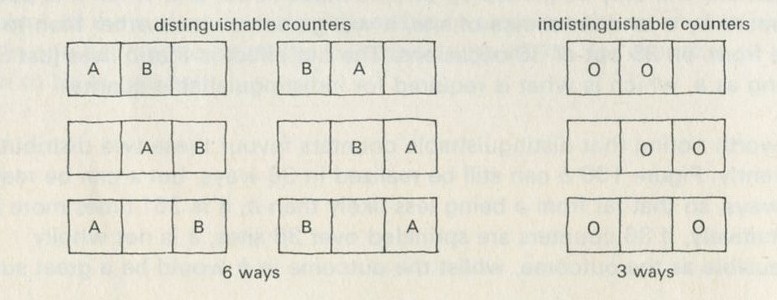

Counting numbers of ways

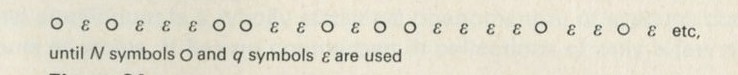

In several discussions in this Part, it has been helpful to think about the number of ways in which a state of affairs can arise. This idea is a crucial one. We shall now use it to calculate one aspect of the behaviour of particles spreading into an unoccupied space. This calculation is the basis of a chemist's thinking about the mixing of reacting molecules. It also serves as an example of a method which we shall put to more important uses in Part Five, in discussing why heat goes from hot to cold, and what hot and cold mean at the level of molecules sharing energy amongst themselves.

One way six counters can be divided between two halves of a box is to have them all in one half. But there are many other ways of arranging them. Suppose there are altogether W ways of arranging the counters between the two halves (including the one way with all in the left and the other one with all in the right).

Q17 Out of many observations, in what fraction of them might you reasonably expect to see all the counters in, say, the lefthand half, in terms of W?

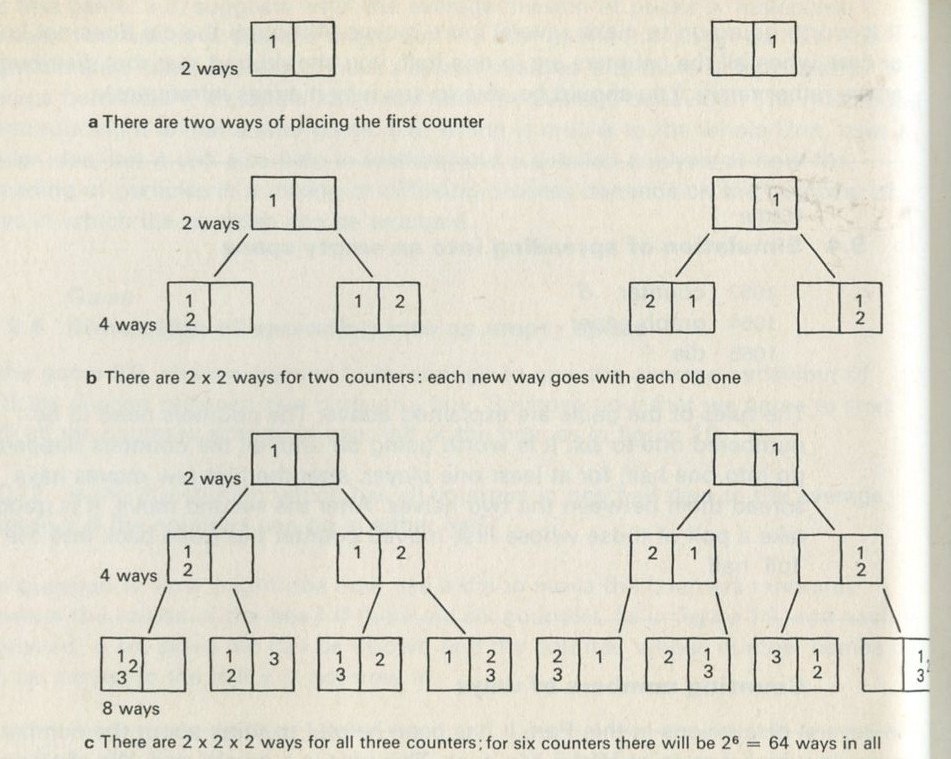

The total number of ways W is easy enough to calculate. Figure 20 shows how to do it. The first counter can be put down in two ways, as in figure 20 a. The second counter can also be put down in two ways, each of which can go with either of the two ways for the first counter, as in figure 20 b. The third counter can again be put down in two ways, as in figure 20 c.

Figure 20: Counting numbers of ways for particles in two halves of a box.

Each of the two ways for the third counter can go with any one of the previous four ways, giving now 4 × 2 = 8 ways (not 4+2). This last point is of the greatest importance: numbers of ways multiply when they are independent of one another.

If there are four counters, there will be 2 × 2 × 2 × 2 = $2^4$ = 16 ways in all. If there are six, there will be $2^6$ = 64 ways. If there are N counters, there will be a total number of ways given by $W = 2^N$.
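The multiplying rule can be checked by brute force: list every arrangement and count them. This Python sketch uses `itertools.product` from the standard library to generate every sequence of 'L' and 'R' (left or right half), one letter per counter; the helper name `all_arrangements` is hypothetical.

```python
from itertools import product

def all_arrangements(N):
    """Every way of putting N numbered counters into halves 'L' and 'R'."""
    return list(product("LR", repeat=N))

for N in (1, 2, 3, 4, 6):
    W = len(all_arrangements(N))
    print(N, W)                # 1 2, 2 4, 3 8, 4 16, 6 64
    assert W == 2 ** N         # independent choices multiply: 2 x 2 x ... x 2

print(all_arrangements(3))     # the eight ways for three counters
```

For three counters the list runs from ('L', 'L', 'L') to ('R', 'R', 'R'), exactly one of each being the arrangement with all counters in one half.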

Note for teachers

The result $W = 2^N$ will be used in Part Six, in a discussion of the entropy change of an expanding gas and, less directly, in a discussion of the effect of concentration on the e.m.f. of a cell. Here, however, its main purpose is to provide an example of the calculation of numbers of ways.

The calculation makes a definite prediction. If N pucks move about at random on a horizontal surface, or if N gas molecules move about in a container, on one observation out of $2^N$, on average, all N particles may be expected to be in one particular half.

We tried it out using a computer, which was asked to play the game in 9.3 in its head, using four imaginary counters. Instead of spinning a coin, the computer calculated random numbers to decide the fate of each counter. Using N = 4, we expected $1/2^4$ = 1/16 of a great many trials to end up with all four in one particular half. In 1 minute 11 seconds, the computer made 16 000 trials, and 1/16 of these is just 1000. The outcome is shown in table 3.

| Number of counters in the 'lefthand half' | 0 | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|
| Number of occasions | 1086 | 3823 | 6188 | 3900 | 1003 |

Table 3: Results of a computer trial, distributing four counters in two halves of a box.
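A trial like the one in table 3 can be repeated in a few lines of Python. This is a sketch, not the program actually used for table 3: each counter's half is decided by a random bit from the standard `random` module (the seed is arbitrary), and the expected count for n counters in the left half is $16\,000 \times N!/[n!\,(N-n)!]/2^N$, that is 1000, 4000, 6000, 4000 and 1000. Like table 3, any single run scatters around these values.

```python
import random
from collections import Counter
from math import comb

N, TRIALS = 4, 16_000
rng = random.Random(3)   # any seed will do; each seed gives a different run

# One trial: a random bit (0 or 1) for each counter, 1 meaning "left half";
# the sum is the number of counters in the left half.
results = Counter(
    sum(rng.getrandbits(1) for _ in range(N)) for _ in range(TRIALS)
)

for n in range(N + 1):
    expected = TRIALS * comb(N, n) / 2 ** N
    print(f"{n} in left half: observed {results[n]:5d}, expected {expected:6.0f}")
```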

Optional game: 9.5 Test of calculation of number of ways of arranging four counters

1053 counter 4

1054 graph paper

1055 die or coin

Unless students would think it tedious and unnecessary, there may be value in repeating the game in 9.3 but with four counters, trying it a good many times. This is not too lengthy a business with a large class. The aim would be to see whether, out of many trials, about 1/16 put the four counters all in one half.

An interested student could try to reproduce photographs like those shown in figure 101, though the test may reduce to a statistical method of discovering if the table was level.

If the school has access to computing facilities, the game could also be tried out on a computer, should a student be able to write a programme to do it.

Processes that happen inexorably, but quite by chance

In the game 9.4, with six counters, the counters were more likely to spread into both halves of the box, starting all in one half, than to stay or go back into one half. The larger the number of particles, the smaller is the fraction $1/2^N$, which indicates the proportion of occasions on which all the particles may concentrate by chance in one half.

Think, for example, of an ordinary gas jar full of air. There will be perhaps $10^{22}$ molecules in the jar. (One mole of molecules, $N = 6 \times 10^{23}$, occupies about 24 dm³ at room temperature. A gas jar might contain 1/50 of a mole.) On less than one occasion in $2^{10^{22}}$, all the molecules might by chance be in the bottom half of the jar. The number $2^{10^{22}}$ is unimaginably large. Its logarithm to base 10 is given by

$$\lg 2^{10^{22}} = 10^{22} \lg 2 \approx 0.3 \times 10^{22} = 3 \times 10^{21}$$

The number whose logarithm is 3 is 1000, that is, 1 followed by three zeroes.

The number $2^{10^{22}}$ is 1 followed by about $3 \times 10^{21}$ zeroes. If it were written out, with each zero only 1 mm across, it would stretch a distance of $3 \times 10^{21}$ mm, or $3 \times 10^{18}$ m. In a year, a flash of light travels nearly $10^{16}$ m (a year is just over $3 \times 10^{7}$ s, and the speed of light is $3 \times 10^{8}$ m s⁻¹). So the number $2^{10^{22}}$ would stretch out for 300 light-years, nearly as far as the Pole Star, and more than thirty times as far as the star Sirius.
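The arithmetic of the last two paragraphs can be checked in Python. The number $2^{10^{22}}$ is far too large to compute directly, so the sketch works only with its logarithm, as the text does. The molecule count, the speed of light and the 1 mm per zero come from the text; the value $3.15 \times 10^7$ s for a year is our own rounding of "just over $3 \times 10^7$ s".

```python
from math import log10

N_MOLECULES = 1e22                  # molecules in a gas jar (from the text)
digits = N_MOLECULES * log10(2)     # lg of 2**N_MOLECULES, about 3e21

length_m = digits * 1e-3            # each zero written 1 mm across
year_s = 3.15e7                     # seconds in a year ("just over 3e7")
c = 3e8                             # speed of light in m/s
light_year_m = c * year_s           # distance light travels in a year, ~1e16 m

print(f"lg 2^N          ~ {digits:.1e}")
print(f"length of zeros ~ {length_m:.1e} m")
print(f"in light-years  ~ {length_m / light_year_m:.0f}")
```

It prints a distance of a little over 300 light-years, in line with the estimate above.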

It follows that the chance of seeing all the molecules in one half is so small as to be negligible, even if one only allows as little as a microsecond for the time needed for molecules to rearrange themselves. (This is not generous, since a molecule takes over a tenth of a millisecond to cross a jar.) See questions 18 to 20. The chance is there, but it is smaller than the chance that all the houses in a country will burn down by accident on the same day, or that all the people in a country will just happen to catch measles. Insurance companies do well enough despite the chance of such disasters, while physicists, chemists, and engineers can rely on chance working the way they expect, so huge are the numbers of molecules involved. It follows that if all the molecules are in one half, but are allowed to pass into the other half, they will do so with all the appearance of inevitability. Diffusion is a one-way process because it is so very, very likely that in effect it always happens.

Nor should you suppose that very large numbers of particles have to be involved for the chance of reverse diffusion to be negligible.

Q18 Suppose there are only 100 molecules in a jar. Write down the logarithm of the number $2^{100}$ as a power of ten.

Q19 Now suppose you look at the jar every microsecond. For how many seconds might you have to wait on average before, by chance, all the 100 molecules were in one half?

Q20 People think the Universe is about $10^{10}$ years old, which is about $3 \times 10^{17}$ s. How many Universe-lifetimes does the answer to question 19 represent?

Everyone is disturbed by these large numbers when they are first encountered. Their very vastness, which so defeats the imagination, is what lies behind the paradox in the previous heading, "Processes that happen inexorably, but quite by chance".

Summary

Chance is blind: it tries out everything impartially. Things which can happen in many ways therefore happen often. Molecules which can spread into a larger space will do so. This need not be because they are pushed into the empty space, but can simply be the result of chance. There are more ways of arranging the molecules when they are spread than when they are not, and chance will try out all the ways open to the molecules. When there are many molecules, there are many more ways of being arranged when they can spread, and so they do spread. They are so busy trying out all these ways that only rarely will chance take them back to the original unspread condition. The larger the number of molecules, the less likely is it that chance will soon produce a spontaneous reversal of diffusion.

If chance tries out all ways of arranging molecules impartially, an event which arises in twice as many ways as another will be observed twice as often.

Numbers of ways multiply if the choices are separate ones that do not influence each other.

For teachers: further discussion of numbers of ways

Concepts having to do with randomness and probability are not easy to form, and some students may need a good deal of further discussion of examples to help them. The examples should be simple ones, where the answer to the question "How many ways?" is easy to obtain. The more complicated examples, such as runs of coin tossing or the paradoxes involved in, say, the gambler's ruin problem, are likely to confuse more students than they assist. A few suggestions follow, and others are to be found in the Teachers' guide Supplementary mathematics.

How often will a die show a four? (One time in six.) Why? (Because there are five other possibilities or ways for it to come up.)

If you don't know which of three books to do your homework in, what fraction of the class will choose any one book? (One in three.) If each person can also write in red or black ink, how many choices has each now? (Six: three multiplied by two.)

Why is your desk (or room) more often untidy than tidy? Tidy means everything in its place; untidy means some things in other places. If it is left to chance, only rarely will chance hit on the tidy arrangement, just because there are more untidy ones. So chance or forgetfulness will nearly always make a desk (room) untidy and hardly ever make it tidy. The argument that is often used, that "it is no more effort to put things in their proper place than in the wrong place", may (or may not) be true, but it is not entirely relevant. It may even be easier to put things away tidily. But there are so many more untidy ways than tidy ones that chance, given the smallest opportunity, will untidy them again. A moment's forgetfulness, or a breeze through a window, and some things will be in the wrong place. If nothing is done about it, there will soon be a lot of things in wrong places.