Owing to shortage of space in FORUM 30, not all the meeting notes from the Representatives' meeting could be published. The notes which were omitted are now presented here.

For a variety of reasons this has proceeded more slowly than hoped.

Current plans are to convert the following sites: RLGB, APPLETON, READING, SOTON, DURHAM and DARSBRY. This will be followed by further GEC4000 and Daresbury PDF machines during January and February. A schedule will be published which will also include plans for other workstations.

We are in the process of implementing multiple connections between both packet switching exchanges (PSE-1 and PSE-2) at RAL and the IBM systems (MVT and VM). Currently, a simple 'wide-band' connection is used between RAL PSE-1 and each of the IBM mainframes. The existing and the new connections all run with the less efficient 'Binary Synchronous' link protocol. Consideration is currently being given to the use of an intermediate processor to act as a High-level Data Link Control (HDLC) converter, interfacing to a block multiplexor channel. If this project is successful, an even bigger improvement in data throughput and network response would come about.

Approval has been given for the establishment of a gateway between SERCNET and the network based at ULCC in London. This will eventually lead to a much improved service for SERC users within London University and improved access to the current 'Metronet' machines. The mechanisms for using this gateway have not yet been defined and the machine to be used for this function has to be provided. The new service is expected to become available during the first quarter of 1983.

Soon appearing on the scene will be new devices called JNT PADs (made by Camtec Ltd). JNT PAD stands for 'Joint Network Team Packet Assembler/Disassembler'. The primary function of these devices is to assemble input from terminals into Network Packets for transmission to a computer, or to receive output packets from the computer and disassemble them for presentation to a terminal, or eventually to a printer. The device provides a very economical means of supporting up to 16 terminals on a simple network connection. Currently, software is loaded from cassette recorders although a down-line loading option is being worked on for the near future. It is envisaged that these devices will become available with the GEC2050 replacement programme and provide networking terminal support for certain local areas, eg in buildings or on sites. In particular, these will replace the Rutherford PACX service.

There is a widely held belief that many of the intermittent faults, and quite a few of the solid faults, on leased private wires are due to equipment associated with both speech and signalling facilities. An early requirement for fault diagnosis in telecommunication circuits was that speech on a private circuit would make diagnosis quicker and more effective. It was for this reason that all RAL circuits were provided with these facilities.

Practical experience shows that the use of these in-built facilities during diagnosis is rather cumbersome. The co-ordination of transfer between speech and data profiles is not always as easy as would appear. In practice, therefore, there is a tendency to use a second channel (ie a call over the public telephone network) to co-ordinate such tests. It therefore seems to be in the general interest that the speech and signalling equipment should be removed, and a programme for its removal is to begin. Ideally, this would require that a telephone with access to the public network should be available within a reasonable distance of communications equipment. In general, the use of such telephones would be for calls originated from RAL, though it may occasionally be necessary to initiate a diagnosis by a short call into RAL. Where such a telephone does not exist we would like arrangements to be made for its provision. This activity will be co-ordinated from RAL and is expected to take place during the first half of 1983. There will be some planned interruptions to services while the work is carried out. The final result should be an improved service at marginally lower cost.

A Networked Job Entry (NJE) link has now been established between the RSCS (VNET) machine at RAL and the IBM complex at CERN. This link provides the following facilities:

Further facilities are planned but they will require more testing/development.

The CERN IBM system is known as node GEN to VNET. It also has an alias RM102. The status of this link may be found by typing:

VNET Q GEN (from CMS)

Q GEN (from a VNET workstation)

as for any other VNET link. The RAL VM system is node RLVM370 also known as node N4 to CERN.

The EXEC SUBCERN on the U-Disk may be used to submit CMS files to the CERN IBM system for execution.

The ELECTRIC obey file JB=B2B.OSUBCERN(NJ) can be used to submit jobs to the CERN IBM System for execution.

A job executing on the CERN IBM system can send output to the virtual reader of a CMS machine at RAL by a JES2 ROUTE card in the CERN job.

eg

/*ROUTE PRINT RLVM370.<CMSID>

or

/*ROUTE PRINT N4.<CMSID>

or, for punched output,

/*ROUTE PUNCH N1.<CMSID>

For a job submitted from Wylbur:

RUN DEST N4.<CMSID>

The exec CERN on the U-Disk may be used to send a command to JES2 on the CERN IBM computers and return the reply to the user's terminal.

The calling sequence is:

CERN <JES2 command>

In the ICF area a small number of sites are being given larger machines and the redundant machines will be reconfigured to provide a larger and improved workstation facility at certain GEC2050 sites. The displaced GEC2050 hardware will be used to enlarge non-networked GEC2050s to enable them to be connected to the SERC network. At other sites existing ICF facilities will be modified where necessary to accommodate an existing population of GEC2050 users and the GEC2050 removed.

The following table indicates those sites for which changes have been agreed:

| Site | Current equipment | Upgrade | Comments |
|---|---|---|---|
| Bangor | | DEC10-Gateway | Replaced GEC2050: was RM90 |
| Durham | | GEC4070 | Done July 82 |
| Westfield | GEC2050 | GEC4080 | Jan 83 |
| Edinburgh (Univ-Phys) | GEC2050 | GEC4080 | Jan 83 |
| Leics (Univ) | | GEC4090 | New M/C at Leics Poly with links |
| DESY | GEC2050 | GEC4065 | Mar 83 |
| CERN | GEC2050 | GEC4065 | Mar 83 |

A new version of the PACX software was introduced on Monday 2 August. The important change was that services may now be selected by alphanumeric names eg CMS, RLGB. Speed selection will be done automatically. However, if a terminal set at say 4800 baud finds that there is a queue for the service, the user will have to change the terminal speed before attempting the same service at another speed. PACX can recognise SERC network names plus CMS, ELEC, and CERN. The existing numerical system will no longer be appropriate in most cases.

The MVT version of the MVT1D routine has been rewritten so that it now returns the hardware machine identification (currently 3032 or 3081) instead of the software identification which can be unreliable.

The writeups for the RHELIB routines can now be accessed on CMS via the help system. 'HELP RHELIB MENU' will give a full list of routines, while 'HELP RHELIB x' will access the writeup for routine x.

A number of bugs have been fixed in the library. In particular, the routine INTRAC in GENLIB now works correctly and an overwriting bug in TIMEX has been cured.

As mentioned in an earlier FORUM (26), the routines UZERO, etc in SYS1.CERNLIB and KERNLIB TXTLIB R (but not CR.PUB.PRO.GENLIB4) were replaced by the versions previously in RHELIB. These have since been modified so that they issue a warning message if they are not called with the correct number of arguments.

The MINUIT package has been installed on MVT and CMS as part of the standard CERN library. Its usage differs slightly from the previous versions available at RAL. See 'NEWS MINUIT' for details.

The CERNLIB short writeups can now be accessed on CMS via the help system. They have been modified so that they are reasonably presentable when output on a terminal but they do contain non-printing characters such as Greek characters and subscripts which will normally appear as percent signs. They are accessed using 'HELP CERNLIB MENU' for the menu, and 'HELP CERNLIB name', where 'name' is the catalogue name, eg B102.

RLR31 - The RLR31 workstation has been removed from service by NERC whose computing service no longer has an office at RAL.

Recent extensions to the HRESE exec, and associated processing by JOBSTAT, now permit output files queued for VNET to have their destinations changed. Since job output may be controlled by various machines on its passage through the system there are inevitably some limitations. The aim here is to define not only how such resetting of output destination can be done but also when and why it may not be done.

The command format for resetting output destination is as follows:

HRESE jno ( <ACCT acct> <ID id> <ROUTE destination>

jno - specifies the HASP job number

acct - gives the account number under which the job was run

id - gives the user identifier under which the job was run

destination - specifies the output destination and is composed as follows:

primary<.secondary><(qualifier)>

This is a single parameter and no embedded spaces are permitted.

primary specifies either:

A remote workstation, given by either its full name or its alias, eg RLR26 or REMOTE19 or RM19. When the output is under the control of HASP no secondary value is permitted. When under the control of VNET the secondary field defines the category associated with the output file.

or RLVM370. This is valid only when the output is under the control of VNET. It is a request to send the output to the virtual machine named as the secondary. If secondary is omitted then output will be produced on the VM system peripherals.

The (qualifier) field specifies the output type which is affected by the HRESE command. By default only print output will be reset. If the qualifier is given as (PUN) then only punch output is reset. If given as (ALL) both printer and punch output will be reset.
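The destination syntax described above can be sketched as a small parser. This is only an illustration in Python, not the HRESE implementation: the function name `parse_destination`, the restriction of names to letters and digits, the label `PRINT` for the default print-only case, and the machine name `FRED` are all assumptions made for the example.

```python
import re
from typing import NamedTuple, Optional


class Destination(NamedTuple):
    primary: str              # workstation name/alias, or RLVM370
    secondary: Optional[str]  # category or virtual machine name, if given
    qualifier: str            # "PRINT" (assumed label for the default), "PUN" or "ALL"


# primary<.secondary><(qualifier)> as a single parameter.
# Assumption: names consist of letters and digits only.
_DEST = re.compile(
    r"^(?P<primary>[A-Za-z0-9]+)"
    r"(?:\.(?P<secondary>[A-Za-z0-9]+))?"
    r"(?:\((?P<qualifier>PUN|ALL)\))?$"
)


def parse_destination(text: str) -> Destination:
    """Split a destination string into its three fields."""
    if " " in text:
        # The text states that no embedded spaces are permitted.
        raise ValueError("embedded spaces are not permitted")
    match = _DEST.match(text)
    if match is None:
        raise ValueError(f"not a valid destination: {text!r}")
    return Destination(
        primary=match.group("primary"),
        secondary=match.group("secondary"),
        qualifier=match.group("qualifier") or "PRINT",
    )


if __name__ == "__main__":
    for example in ("RLR26", "RM19(PUN)", "RLVM370.FRED(ALL)"):
        print(example, "->", parse_destination(example))
```

The sketch checks only the shape of the parameter. It does not check whether a secondary value is permitted, since that depends on whether the output is currently under the control of HASP or VNET.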

The following limitations are imposed on the HRESE command.

Routine Maintenance on the 3032 IBM computer is currently undertaken once a month on Thursdays between 18.00 and 22.00 hours. The probable dates for the remainder of 1982 and 1983 are undecided, but adequate notice will be given.

The date of the next shutdown of all computer systems (except network equipment) for the maintenance of air-conditioning plant has now been fixed. It has been scheduled as follows:

1600 hrs on Fri 8 April till 0745 hrs Mon 11 April

The duration of the shutdowns has now been agreed with Engineering Division. There will be 2 shutdowns each year, one in the spring, the date of which is given above, and the other in November. The autumn date will be published later.

The User Interface Group intends to run a number of courses for users of the IBM and Prime Computers, at the Atlas Centre, during 1983.

4 × IBM New Users Courses

The course is designed for those people who have been using the IBM systems for a few months and are ready to learn more about the facilities, including both Batch and 'Front-End' (simple CMS).

Dates are: 21 - 24 February, 25 - 28 April, 4 - 7 July, 24 - 27 October.

3 × ELECTRIC/CMS Conversion Courses

This course will introduce those who are currently using the ELECTRIC system to the facilities of CMS. Most candidates should have attended the 'IBM New Users' course.

Dates are: 6/7 April, 29/30 June, 19/20 October.

3 × Advanced CMS Courses

This is to introduce those who are regular CMS users to the more advanced facilities and RAL enhancements to the system. All candidates should have attended the 'ELECTRIC/CMS Conversion Course'.

Dates are: 16/17 March, 20/21 July, 16/17 November.

2 × Prime New User Courses

This is to introduce users to the facilities of the Prime Computer.

Dates are: 23/24 May, 21/22 November.

For further information and enrolment, please contact the Program Advisory Office (0235 446111 or ext 6111) or R C G Williams (ext 6104).

This is a summary of the information to be found in an RAL Computing Division internal paper CCTN/P43/82 which is available from the secretary of Systems Group.

Initially some raw statistics were presented on the current usage of all types of data. This was done in order to give a feeling for the magnitude of the problem.

| Disk statistic | Value |
|---|---|
| User disk contents (files) | 5000 approx. |
| Most popular file size (Kbytes) | 20 |

85% of all files were created in the last 7 months and 90% of all files were used in the last 6 months.

| Tape statistic | Value |
|---|---|
| Different tapes used per day | approx 200 |
| Tape mounts per day | 600-800 |
| Local library size | 6000> |
| Total library size | 55000 |
| Library growth rate (tapes/week) | 100 |
| Average no. of files per tape | 7 |
| Average data/tape (Mbytes) | 62.5 |
| Mean file size (Mbytes) | 8.5 |
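To give a feeling for what the tape figures imply when combined, the short calculation below derives a few totals from them. It is a rough sketch only: it assumes that the average of 62.5 Mbytes per tape applies to the whole library and to newly added tapes, which the statistics do not state, and the function name `tape_library_estimates` is invented for the example.

```python
def tape_library_estimates(
    total_tapes: int = 55000,
    data_per_tape_mb: float = 62.5,
    files_per_tape: int = 7,
    growth_tapes_per_week: int = 100,
) -> dict:
    """Derive rough totals from the quoted tape statistics."""
    return {
        # Total data held, if every tape holds the average amount (assumption).
        "total_data_mb": total_tapes * data_per_tape_mb,
        # File size implied by data/tape and files/tape, for comparison
        # with the quoted mean file size of 8.5 Mbytes.
        "implied_file_size_mb": data_per_tape_mb / files_per_tape,
        # Weekly growth in data, if new tapes hold the average amount (assumption).
        "weekly_growth_mb": growth_tapes_per_week * data_per_tape_mb,
    }


if __name__ == "__main__":
    for name, value in tape_library_estimates().items():
        print(f"{name}: {value:,.1f}")
```

On these assumptions the library holds roughly 3.4 million Mbytes and grows by about 6250 Mbytes a week. The implied file size of about 8.9 Mbytes is close to the quoted mean of 8.5 Mbytes.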

This was followed by a description of a model which will enable an understanding of the implications of various actions.

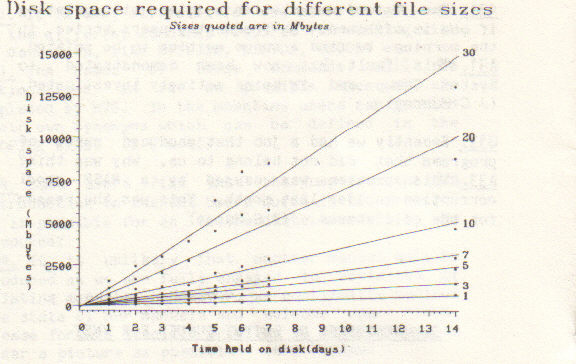

Figure 1 shows the disk space required for a system which keeps files of a certain size or less on disk for a period of time without them being used and then archives them to some alternative medium. It is a plot of the disk space (in Mbytes) required against the length of time a file is kept on disk (in days). This plot is repeated for various file sizes (in Mbytes).
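The quantity plotted in Figure 1 can be illustrated with a few lines of Python. This is not the model from the internal paper, only a sketch of the idea under simple assumptions: each file is described by its size and the number of days since it was last used, and a file stays on disk if it is no larger than the size limit and has been used within the retention period. The function name and the sample file list are invented for the example.

```python
from typing import Iterable, Tuple

# (size in Mbytes, days since last use); hypothetical sample data
FileRecord = Tuple[float, int]


def disk_space_required(
    files: Iterable[FileRecord],
    max_size_mb: float,
    retention_days: int,
) -> float:
    """Total Mbytes of files that would remain on disk.

    A file remains on disk if it is no larger than max_size_mb and was
    last used no more than retention_days ago. All other files are
    taken to be archived to the alternative medium.
    """
    return sum(
        size
        for size, idle_days in files
        if size <= max_size_mb and idle_days <= retention_days
    )


if __name__ == "__main__":
    sample = [(0.02, 3), (0.5, 40), (8.5, 10), (20.0, 2), (1.0, 200)]
    for days in (30, 90, 365):
        space = disk_space_required(sample, max_size_mb=10.0, retention_days=days)
        print(f"retention {days:3d} days: {space:.2f} Mbytes")
```

Evaluating the function over a range of retention periods, and repeating for several size limits, gives one curve per file size in the manner of the figure.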

Figure 2 shows the number of tapes in the library plotted against the number of days since that tape was last used and represents a measure of the tape use pattern. If it is assumed that the pattern of tape use can be applied to tape files which were transferred to disk, and that this is equally applicable to all size subsets of these files, then we may estimate the data transfer rate (Mbytes/day) between the primary (disk) and secondary (tape or MSS) storage. This is done in figure 3 for different sizes of tape dataset.

Figure 4 shows the tape mounts which would still be required if tape datasets of various sizes were kept on disk instead of tape and enables some estimate of the saving in operator time which could be achieved.

The Perq is a powerful single-user minicomputer, capable of approximately one million high-level assembler operations per second. At least as important as the cpu power available are the high-quality A4 graphics display (resolution approx 100 pixels per inch) and the high interaction rate. These features mean that the Perq is well-suited to the role of a personal scientific workstation.

The recommended Perq configuration is: cpu, 1 Mbyte memory, 24 Mbyte Winchester disc, display, tablet and puck. I/O ports are provided by one each of RS232 and IEEE 488 (GPIB) interfaces. At present the POS operating system, together with Pascal and Fortran 77, is available. Over the next few months UNIX Version 7, with new Pascal and Fortran 77 compilers, will be released. This version of UNIX will offer virtual memory and a full 32-bit address space and the compilers will also offer 32-bit addressing.

At present communications to the Perq are limited to the Chatter system, which enables a relatively low speed connection to other machines via the RS232 interface. Developments are in hand to provide both Cambridge Ring connections and X25 (SERCNET) access. Hardcopy output devices are yet to be announced by ICL although it is known that the Versatec V80 electrostatic printer/plotter will be available in a few months.

It was originally proposed that Perqs be supplied on loan for the grant period to grant-holders whose requests for them had been approved by the appropriate SERC committee. This policy has now been changed (retrospectively where necessary). Perqs are now treated in much the same way as other equipment purchased for a grant, the exception being that they are purchased and maintained for the grant period, centrally by SERC. At the end of the grant period the Perq is owned by the grant-holder, in exactly the same way as other equipment. Note that this means that maintenance costs become the responsibility of the grant-holder's institution unless a further SERC grant has been obtained to cover such costs.

There are some exceptions to the above paragraph. Some Engineering Board committees have organised small loan pools of Perqs for specific (usually short-term) tasks. The areas concerned, with suitable RAL contacts and telephone extensions are:

Perqs are applied for in the same way as other equipment on SERC grants - via section 20 on the RG2 application form. The only difference is that costs should not be inserted. This will be done by Central Office staff, with advice from RAL if necessary. In this way SERC can take advantage of bulk purchase and maintenance discounts and any price reductions (costs in general are falling rather than rising). A typical section 20 entry might read:

| Item | Cost |
|---|---|
| (1) ICL Perq with 1 Mbyte memory | ... |
| (2) Maintenance cost for grant period, COSTS TO BE SUPPLIED BY SERC | ... |
| (3) Any other equipment | £ cost |
| ... | ... |
| ... | ... |

Note that the cost of the Perq is part of your grant cost. In no way are Perqs 'free'!

It is obviously necessary to be aware of approximate costs. Currently the cost (including VAT) of the recommended 1 Mbyte memory Perq is £18700 (this includes software costs). The maintenance charge is £115 per calendar month. Both these prices are subject to change, so do not put them on the RG2. Other useful costs to know (these should be quoted) are:

| Item | Cost |
|---|---|
| Cambridge Ring connection | £1.5k |
| X25 connection | £2.0k + cost of line to PSE + cost of port |
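For planning purposes only, the approximate cost of a Perq over a grant period can be worked out from the figures quoted above. The sketch below is illustrative: the 36-month grant period is a made-up example, the function name is invented, and the prices are the current ones, which are subject to change and should not be entered on the RG2. The X25 connection is left out because part of its cost (line and port) is not a fixed figure.

```python
PERQ_PRICE_GBP = 18700             # quoted price including VAT and software
MAINTENANCE_PER_MONTH_GBP = 115    # quoted maintenance charge
CAMBRIDGE_RING_GBP = 1500          # quoted as £1.5k


def approximate_perq_cost(grant_months: int, cambridge_ring: bool = False) -> int:
    """Purchase price plus maintenance for the grant period, in pounds."""
    total = PERQ_PRICE_GBP + MAINTENANCE_PER_MONTH_GBP * grant_months
    if cambridge_ring:
        total += CAMBRIDGE_RING_GBP
    return total


if __name__ == "__main__":
    months = 36  # hypothetical grant period
    print("Perq only:", approximate_perq_cost(months))
    print("With Cambridge Ring:", approximate_perq_cost(months, cambridge_ring=True))
```

For a 36-month period this gives £22840 for the Perq and its maintenance, or £24340 with a Cambridge Ring connection.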

There may well be occasions when extra advice is necessary. In such cases it is best to contact me, preferably well before the closing date for grant applications, on ext 6491 or by direct dialling 0235 44 6491.

System development is currently scheduled on Wednesday mornings from 08.30 to 10.30 and Thursday evenings from 17.30 to 19.30. It should be noted that these times are under consideration and may be changed.