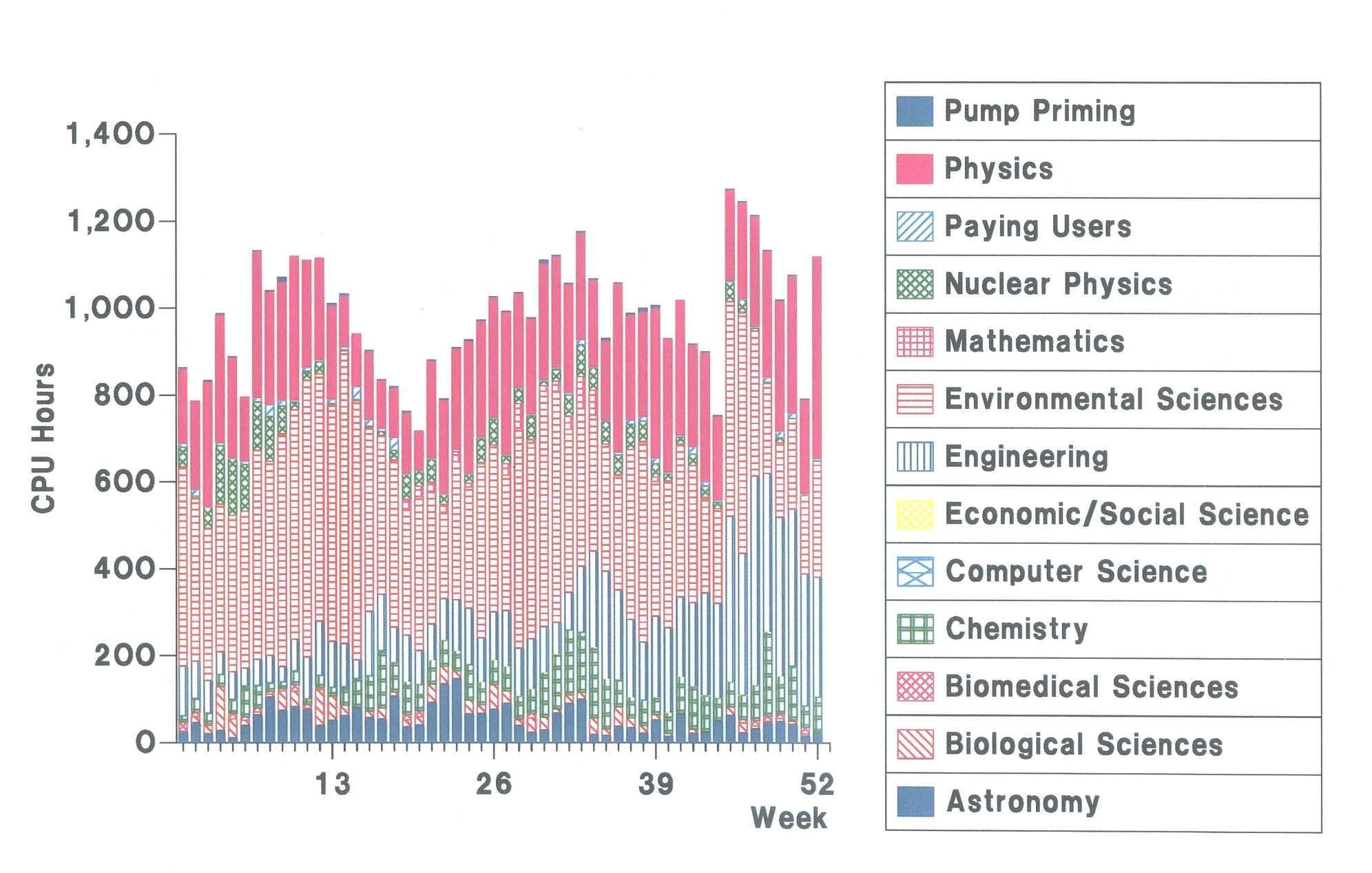

The aim of this report is to indicate the range and quality of the work carried out on the Cray X-MP and Cray Y-MP supercomputers at the Rutherford Appleton Laboratory over the period 1991 to 1993. The material was obtained in response to a letter sent to over 160 users in May 1993 requesting contributions by October 1993.

Although the response from the different user communities was variable, we hope that the contributions give some indication of the scope of the current work on the Cray vector supercomputers. We are grateful to all who contributed to this report and look forward to future reports which will highlight the growing diversity of the field.

Professor C R A Catlow

Chairman, Atlas Cray Users' Committee

January 1994

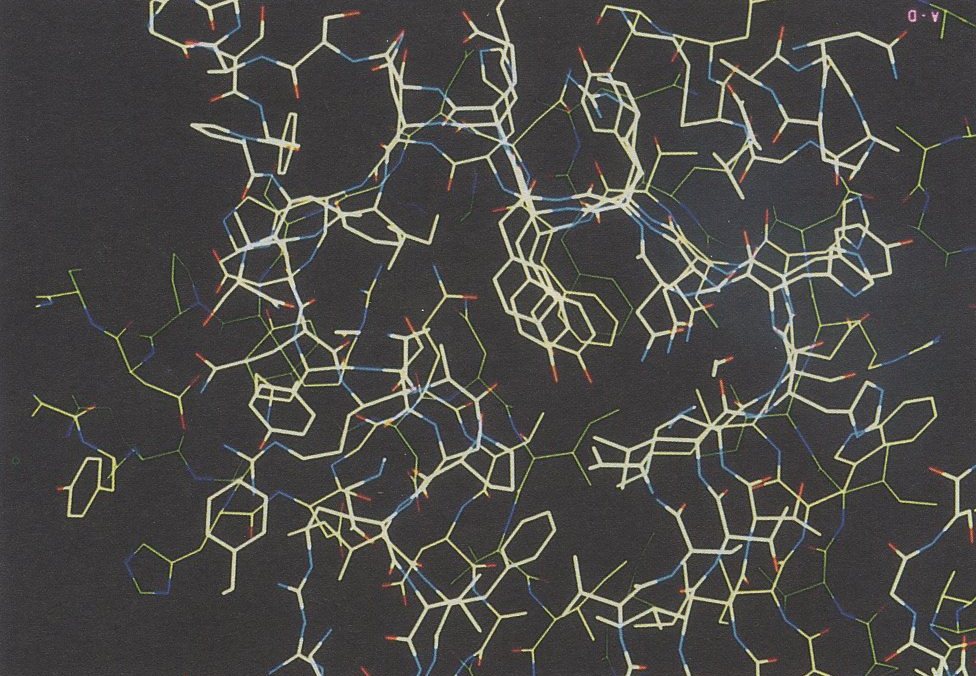

Computational methods can now make detailed and accurate predictions of the structures and properties of inorganic materials. Electronic-structure calculations are being performed on increasingly large systems, and simulation methods, based on "effective interatomic potentials", may now be used to model complex materials. One of the most exciting fields for the application of computer modelling is mineralogy. Mineralogy poses major challenges to the predictive capacity of contemporary computational techniques. But, as a discipline, mineralogy can also benefit immeasurably from the capability of computer methods (a) to simulate the inaccessible atomistic or microscopic processes that underlie macroscopic phenomena, and (b) to simulate pressure and temperature conditions normally beyond the range of experiment. Hence, as a subject, computational mineral physics has grown rapidly in recent years, attracting solid state physicists and chemists interested in testing and establishing their theories and methodologies on real, structurally complex materials, and mineralogists, petrologists and geophysicists who require data (currently unavailable by direct experiment) to constrain models for, and to obtain insights into, the processes that determine and underpin planetary evolution. Brief reference to the many papers now appearing in Earth Science journals shows how the subject as a whole is benefitting from this synergism. Below, we outline some of the topics which have been studied in the past few years, using the supercomputer facilities at RAL.

DEFECTS AND DIFFUSION IN PEROVSKITES: We are carrying out a series of calculations on perovskite materials, with the aim of understanding the rheology of the lower mantle. We are using the defects code CASCADE to predict the energies of defect formation and migration in perovskites. So far we have excellent quantitative agreement with known data for titanates. Recently, we have also used our atomistic computer simulation techniques to investigate the site partitioning of iron in (Mg,Fe)SiO3 perovskites. Our calculations predict that the most energetically favourable reaction for iron substitution will be a direct exchange of Fe2+ for Mg2+. Substitution of Fe into the octahedral site and Si into the 8-12 fold coordinated site, as recently proposed by Jackson and co-workers, is predicted to be extremely unlikely. This conclusion has just been confirmed by MAS NMR (Kirkpatrick et al. (1991) Amer Mineral, 76, p 673).

DEFECT FORMATION VOLUMES IN MgO: The variation of the activation volume and free energy of formation of Schottky defects in MgO has been investigated with PARAPOCS. The approach used is based on the construction of a charge-neutral supercell of atoms containing the defect, which is equilibrated (within the limits of the quasi-harmonic approximation) at the required P and T. The results demonstrate that the supercell method works well. We predict that both the activation volume and the free energy of formation of Schottky defects in MgO are highly dependent on pressure, but only weakly affected by temperature. The activation volume is predicted to decrease by more than 50% as the pressure increases to that in the lower mantle. Moreover, the assumption used by many previous workers that the activation volume has the same pressure dependence as the atomic volume has been shown to be incorrect. We have recently extended this work to include the calculation of diffusion coefficients in MgO using Vineyard theory.
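As an illustration of how a defect volume follows from free energies computed at several pressures, the thermodynamic definition V = (∂G/∂P) at constant T can be approximated by finite differences. The sketch below is not taken from PARAPOCS; the function name and the pressure and free-energy values are hypothetical placeholders chosen only to show the arithmetic.

```python
# Illustrative only: the free energies below are made-up placeholders,
# not results from PARAPOCS or from this report.

EV_PER_GPA_IN_A3 = 160.21766  # 1 eV/GPa expressed in cubic angstroms


def formation_volume(pressures_gpa, free_energies_ev):
    """Estimate V = (dG/dP)_T at interior points by central differences.

    Returns a list of (pressure in GPa, volume in cubic angstroms) pairs.
    """
    if len(pressures_gpa) != len(free_energies_ev):
        raise ValueError("pressure and free-energy lists must match in length")
    volumes = []
    for i in range(1, len(pressures_gpa) - 1):
        dg = free_energies_ev[i + 1] - free_energies_ev[i - 1]
        dp = pressures_gpa[i + 1] - pressures_gpa[i - 1]
        volumes.append((pressures_gpa[i], dg / dp * EV_PER_GPA_IN_A3))
    return volumes


pressures = [0.0, 25.0, 50.0, 75.0, 100.0]      # GPa (hypothetical grid)
free_energies = [7.5, 10.0, 12.0, 13.6, 14.9]   # eV (hypothetical values)

for p, v in formation_volume(pressures, free_energies):
    print(f"P = {p:5.1f} GPa   V = {v:6.2f} A^3")
```

A flattening free-energy curve, as in the placeholder data, corresponds to a defect volume that shrinks with pressure, which is the qualitative behaviour described in the text.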

MOLECULAR DYNAMIC & THERMODYNAMIC MODELS OF MELTING: Work has begun to extend the phenomenological dislocation model of melting, successfully used by Poirier to model Fe, to other metallic systems. The model assumes that a melt is isostructural with the crystal saturated with dislocation cores. By considering the volumetric dilation introduced by dislocations in a metal, estimates of the entropy and volume of melting can be obtained. So far excellent agreement has been obtained for over 12 metals between the observed melting temperature and that predicted by our model. This finding underpins the validity of Poirier's work on the melting of Fe and its geophysical implications. We propose to extend our study to consider the melting of silicates.

Molecular dynamics offers the only approach to understanding melting at an atomic level. We are using both constant T and constant P molecular dynamics to investigate the details of melting and pre-melting processes in MgO and MgSiO3-perovskite. Our calculations (based on empirical potentials) predict reasonable volumes of melting, but the absolute temperature of melting seems to be overestimated by 500 to 1000K. We are particularly interested in investigating the effects of defects and surfaces on the predicted melting behaviour of materials, as these will destabilize the crystal and hence encourage melting at lower temperatures than predicted for the perfect bulk. We are also studying the effect of pressure on the structures of the melts produced in these systems. We have again confirmed the pre-melting enhanced oxygen mobility in the perovskite lattice, and will carry out further investigations into the nature of superionic conduction in perovskites. Work on fluoride perovskites is also in hand to widen the basis of our analysis of the pre-melting behaviour of perovskite-structure phases.

THE LIMITS OF THE QUASI-HARMONIC APPROXIMATION: Our code PARAPOCS performs free energy minimization using interatomic potentials, and so enables the thermodynamic properties of a silicate to be calculated from its predicted lattice dynamical characteristics. The approach depends upon the validity of the quasi-harmonic approximation, which is known to hold only at temperatures below the Debye temperature of the crystal. Above this temperature, phonon-phonon interactions become significant, and in the past quantitative simulations in this regime have required the use of molecular dynamics (MD) techniques. MD, however, cannot usually be used with the more sophisticated potentials used in lattice dynamical calculations. We are carrying out a series of parallel calculations using both methods to establish firmly the point at which the quasi-harmonic approximation collapses for mantle materials. We are particularly interested in the effect of pressure on this, since it is known that pressure suppresses intrinsic anharmonic processes, and so at depth in the mantle, the quasi-harmonic approximation may become valid over a wide temperature range. Our preliminary conclusions, from calculations at simulated geothermal temperatures, are that for geophysical systems the quasi-harmonic approximation will only become valid at pressures greater than 100 GPa.
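To make the idea of quasi-harmonic free energy minimization concrete, the sketch below sums the standard harmonic free energy of a set of vibrational modes and then minimises the total free energy over volume on a grid. It is a toy model and not the PARAPOCS algorithm: the parabolic static energy, the three mode frequencies, the single Gruneisen-type exponent `GAMMA` and all numerical values are assumptions made purely for illustration.

```python
import math

H_EV_S = 4.135667696e-15   # Planck constant in eV s
KB_EV_K = 8.617333262e-5   # Boltzmann constant in eV/K


def vibrational_free_energy(frequencies_thz, temperature_k):
    """Harmonic free energy (eV): sum of h*nu/2 + kT*ln(1 - exp(-h*nu/kT))."""
    total = 0.0
    for nu in frequencies_thz:
        quantum = H_EV_S * nu * 1.0e12      # h*nu in eV
        total += 0.5 * quantum              # zero-point term
        if temperature_k > 0.0:
            x = quantum / (KB_EV_K * temperature_k)
            total += KB_EV_K * temperature_k * math.log1p(-math.exp(-x))
    return total


# Hypothetical toy crystal: all parameters below are assumptions.
V0 = 20.0                      # static-lattice equilibrium volume, A^3
STIFFNESS = 0.5                # curvature of the static energy, eV/A^6
GAMMA = 1.5                    # frequencies scale as (V/V0)**(-GAMMA)
FREQS_AT_V0 = (5.0, 10.0, 15.0)  # mode frequencies at V0, THz


def free_energy(volume, temperature_k):
    """Static energy plus vibrational free energy at zero pressure."""
    static = 0.5 * STIFFNESS * (volume - V0) ** 2
    freqs = [nu * (volume / V0) ** (-GAMMA) for nu in FREQS_AT_V0]
    return static + vibrational_free_energy(freqs, temperature_k)


def equilibrium_volume(temperature_k):
    """Grid search for the volume that minimises the free energy."""
    grid = [19.0 + 0.001 * i for i in range(2001)]
    return min(grid, key=lambda v: free_energy(v, temperature_k))


for t in (0.0, 300.0, 1000.0):
    print(f"T = {t:6.1f} K   V_eq = {equilibrium_volume(t):.3f} A^3")
```

Because the frequencies soften as the cell expands, the minimum moves to larger volume as temperature rises; this is how thermal expansion emerges within the quasi-harmonic picture, and it is also why the picture fails once explicit phonon-phonon interactions matter.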

THE EFFECT OF P ON THERMAL EXPANSION: Recent experimental work has shown that the pressure dependence of the thermal expansion coefficient can be expressed as $\alpha/\alpha_0 = (V_0/V)^{-\delta_T}$, where $\delta_T$, the Anderson-Gruneisen parameter, is approximately independent of pressure, and for the materials studied has a value that lies between 4 and 6. Calculation of $\delta_T$ from seismic data, however, appears to suggest a contradictory value of between 2 and 3 for mantle-forming phases. Using an atomistic model based on our previously successful many-body interatomic potential set (THB1), we have performed calculations to obtain values of $\delta_T$ for four major mantle-forming minerals. Our model results are in excellent agreement with experimental data, yielding values of between 4 and 6 for forsterite and MgO, and values in the same range for MgSiO3-perovskite and Mg2SiO4-spinel. The apparent conflict between the values of $\delta_T$ predicted from seismic data and those obtained from experiment, and now from theory, must be due to invalid approximations in the complex inversion of the seismic data.
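The practical difference between the two ranges of the Anderson-Gruneisen parameter can be seen by evaluating the scaling relation, written equivalently as α/α0 = (V/V0)^δT, at a fixed compression. The short sketch below does this; the compression V/V0 = 0.8 is an assumed illustrative value, not a figure from this report.

```python
def thermal_expansion_ratio(compression, delta_t):
    """Return alpha/alpha0 = (V/V0)**delta_T for compression = V/V0."""
    if compression <= 0.0:
        raise ValueError("compression V/V0 must be positive")
    return compression ** delta_t


ASSUMED_COMPRESSION = 0.8  # hypothetical V/V0, for illustration only

for delta_t in (2.0, 3.0, 4.0, 6.0):
    ratio = thermal_expansion_ratio(ASSUMED_COMPRESSION, delta_t)
    print(f"delta_T = {delta_t:.0f}   alpha/alpha0 = {ratio:.3f}")
```

The larger the parameter, the faster thermal expansion is suppressed on compression, so the choice between the seismic and experimental ranges has a direct effect on the thermal expansion inferred for deep-mantle conditions.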

THE VIBRATIONAL SPECTRA AND THERMODYNAMICS OF MINERALS: We have used the PARAPOCS code to model the vibrational properties of crystal lattices, which are subsequently used to interpret infra-red and Raman data obtained on perovskites, MgSiO3 and Mg2SiO4 polymorphs, etc. The use of such microscopic models is the only way to fully assign the spectra of such complex structures. We have also used this free energy code to predict the phase diagram for a number of ABO3 systems, and to calculate oxygen isotope equilibria. We have just started to use this lattice dynamical code to establish the microscopic basis for many of the approximations used in the variety of definitions of the Gruneisen parameter.

Al/Si DISORDER AND THE MULLITE PROBLEM: Modelling disorder is much more difficult than modelling an ordered system, and modelling an incommensurate phase is much more difficult than modelling a normal material. Hence simulating mullite, which involves both disorder and an incommensurate structure, represents a major challenge. We have now established a methodology for modelling disorder, and are approaching the problem of simulating what are considered to be the key defects in the mullite structure (namely the so-called T-T-T* cluster). We propose to pursue this work and extend it to modelling Al/Si disorder in other silicates, and particularly in feldspars.

AB INITIO CALCULATIONS ON MINERALS: Modelling of atomic interactions requires an accurate description of interatomic forces. It is now possible to perform quantum mechanical calculations on complex phases, from which insights into bonding, and hence accurate interatomic potential models, can be derived. We are investigating the suitability of the code CRYSTAL (C Pisani et al, Lecture Notes in Chemistry, vol 48, Springer-Verlag) as a way of performing such quantum mechanical calculations. CRYSTAL enables calculations (with periodic boundary conditions) to be carried out, within the limits of the Hartree-Fock approximation. In addition, in association with Dr Renata Wentzcovitch, we have been carrying out a series of quantum mechanical molecular dynamics calculations to predict the structure and stability of MgSiO3 polymorphs as a function of pressure.

G D Price, I G Wood and D Akporiaye

The prediction of zeolite structures

In: Modelling of structure and reactivity in zeolites (ed C R A Catlow) Academic Press, London (1992) 19

S Padlewski, V Heine and G D Price

Atomic ordering around oxygen vacancies in sillimanite: A model for the mullite structure

Phys Chem Minerals 18 (1992) 373

M Matsui and G D Price

Computer simulation of the MgSiO3 polymorphs

Phys Chem Minerals 18 (1992) 365

B Reynard, G D Price and P Gillet

Thermodynamic and anharmonic properties of forsterite: computer simulations vs high pressure and high temperature measurements

J Geophys Res 97 (1992) 19791

S Padlewski, V Heine and G D Price

The energetics of interaction between oxygen vacancies in sillimanite: A model for origin of the incommensurate structure of mullite

Phys Chem Minerals 19 (1992) 196

A Pavese, M Catti, G D Price and R A Jackson

Interatomic potentials for CaCO3 polymorphs (calcite and aragonite) fitted to elastic and vibrational data

Phys Chem Minerals 19 (1992) 80

P Chandley, R J H Clark, R J Angel and G D Price

An investigation of the site preference of Vanadium doped into ZrSiO4 and ZrGeO4

Dalton Proceedings of the Royal Chem Soc (1992) 1579

R A Jackson and G D Price

A transferable interatomic potential for calcium carbonate

Molecular Simulation 9 (1992) 175

S Padlewski, V Heine and G D Price

A microscopic model for a very stable incommensurate modulated structure: mullite

J Phys Con Matter 5 (1993) 3417

M Catti, A Pavese and G D Price

Thermodynamic properties of CaCO3 calcite and aragonite: a quasi-harmonic calculation

Phys Chem Minerals 19 (1993) 472

R Nada, J Stuart, G D Price, C R A Catlow and R Dovesi

Comparative study of all-electron and core pseudo-potential basis sets for periodic ab initio Hartree-Fock calculations: the case of MgSiO3-ilmenite

J Phys Chem Solids 54 (1993) 281

R M Wentzcovitch, J L Martins and G D Price

Ab initio molecular dynamics with variable cell shape: application to MgSiO3

Phys Rev Letts 70 (1993) 3947

P Gillet, F Guyot, G D Price, B Tounerie, and A LeCleach

Phase changes and thermodynamic properties of CaTiO3. Spectroscopic data, vibrational modelling and some insights on the properties of MgSiO3 perovskite

Phys Chem Minerals 20 (1993) 159

G D Price

Computer modelling of defects and diffusion in minerals

Terra Abstracts 4 (1992) 37

L Vocadlo and G D Price

Computer calculations for absolute ionic diffusion in MgO using the supercell method

Terra Abstracts 4 (1992) 45

J H Davies and G D Price

Are the lateral thermal variations constant with depth through the interior of the mantle

EOS 73 (1992) 61

G D Price

Molecular dynamic simulation of melting

Terra Abstracts 5 (1993) 522

An understanding of the interaction between magnetic fields and velocities in a conducting fluid is essential to our understanding of the dynamics of the Earth's core. Kinematic dynamo calculations are one important step in obtaining such an understanding; they assume that the fluid velocity is known, and solve the electromagnetic induction equation for the magnetic field produced. The numerical problem reduces to that of finding eigenvalues and eigenvectors of a large, sparse, real but non-symmetric matrix. Thus we can get some idea of the sort of fluid motions that must be occurring in the Earth to produce the observed magnetic field.
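To illustrate the eigenvalue formulation, suppose the discretised induction equation is written dB/dt = A B for a real, non-symmetric matrix A. A solution of the form B ∝ exp(λt) then requires A b = λ b, and the field grows when the eigenvalue with the largest real part is positive. The sketch below finds that eigenvalue for a tiny stand-in matrix by shifted power iteration. This is not the method used in the codes described here: the matrix, the shift and the function names are hypothetical, and the approach only works when the leading eigenvalue is real and the shift makes it dominant in modulus. Oscillatory (complex) modes and genuinely large sparse problems call for Arnoldi-type or inverse-iteration solvers.

```python
import math


def matvec(matrix, vector):
    """Dense matrix-vector product; a production code would exploit sparsity."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]


def leading_growth_rate(matrix, shift, iterations=500):
    """Power iteration on (A + shift*I); returns (growth rate, mode)."""
    n = len(matrix)
    shifted = [[matrix[i][j] + (shift if i == j else 0.0) for j in range(n)]
               for i in range(n)]
    vec = [1.0] * n
    estimate = 0.0
    for _ in range(iterations):
        new = matvec(shifted, vec)
        # Rayleigh-quotient estimate of the dominant eigenvalue of A + shift*I
        estimate = sum(a * b for a, b in zip(vec, new)) / sum(a * a for a in vec)
        norm = math.sqrt(sum(a * a for a in new))
        vec = [a / norm for a in new]
    return estimate - shift, vec


# Hypothetical 3x3 stand-in for the discretised induction operator.
# It is upper triangular, so its eigenvalues are the diagonal entries.
TOY_OPERATOR = [
    [-1.0, 2.0, 0.0],
    [0.0, -3.0, 1.0],
    [0.0, 0.0, 0.5],
]

rate, mode = leading_growth_rate(TOY_OPERATOR, shift=4.0)
print(f"leading growth rate: {rate:.6f}")
print("field grows" if rate > 0.0 else "field decays")
```

The shift moves all eigenvalues of the toy matrix onto the positive real axis so that the rightmost one is also the largest in modulus, which is the condition power iteration needs.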

Codes we have developed solve the induction equation in a spherical shell geometry, using a truncated spherical harmonic expansion, and either a finite difference radial grid representation or a parallel shooting method. We solve both the full 3-dimensional equations, and a simplified 2-d set obtained in the nearly-symmetric limit.

Our investigations to date have involved simple, large-scale velocity fields. Even so, the computational requirements of the work are fairly heavy; the low truncation limits enforced by the machine memory size and speed effectively limit the spatial resolution obtainable. Access to the Atlas Crays, and in particular the Y-MP service, has allowed us to investigate systems previously beyond our resolution, resulting in worthwhile new findings which will be published in the near future (one paper has been submitted to Nature and another is in preparation for the Journal of Fluid Mechanics).
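A rough count of unknowns shows why the truncation level is limited by memory. The sketch below assumes, as is common for solenoidal fields but not stated in this report, that the magnetic field is represented by two scalar functions (poloidal and toroidal), each expanded in spherical harmonics of degree 1 to L on a radial grid. The function names and the example truncations and grid sizes are hypothetical.

```python
def harmonic_count(l_max):
    """Number of (l, m) pairs with 1 <= l <= l_max and -l <= m <= l."""
    return sum(2 * l + 1 for l in range(1, l_max + 1))  # equals l_max*(l_max+2)


def unknowns(l_max, n_radial):
    """Two scalars (assumed poloidal and toroidal) per harmonic per grid point."""
    return 2 * harmonic_count(l_max) * n_radial


def dense_storage_mbytes(n_unknowns, bytes_per_real=8):
    """Storage for the full matrix if sparsity were ignored."""
    return n_unknowns ** 2 * bytes_per_real / 1.0e6


for l_max, n_radial in ((6, 50), (12, 100), (24, 200)):  # hypothetical cases
    n = unknowns(l_max, n_radial)
    print(f"L = {l_max:2d}, radial points = {n_radial:3d}: "
          f"{n:6d} unknowns, dense matrix ~ {dense_storage_mbytes(n):10.1f} Mbytes")
```

The number of unknowns grows roughly as the square of the truncation degree times the number of radial points, so storing the matrix densely quickly becomes impractical and sparse storage is essential.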

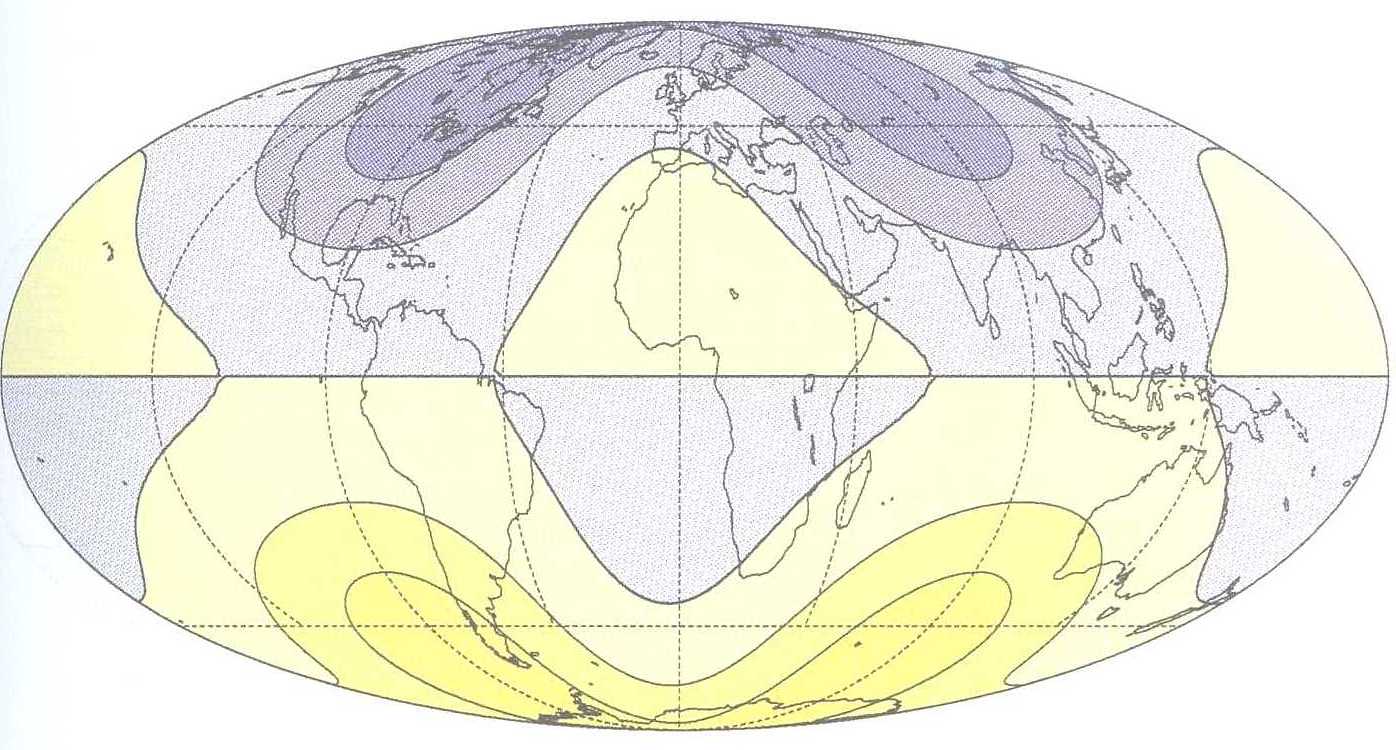

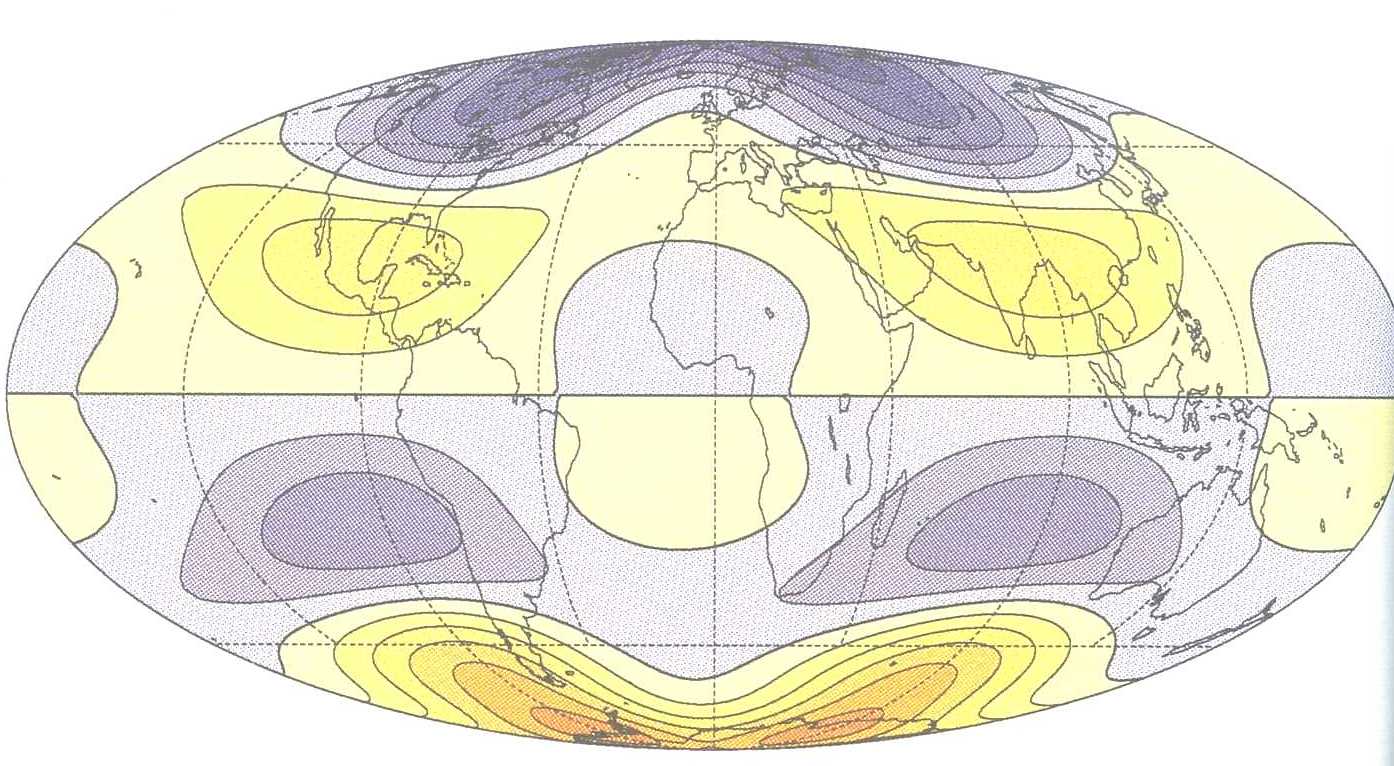

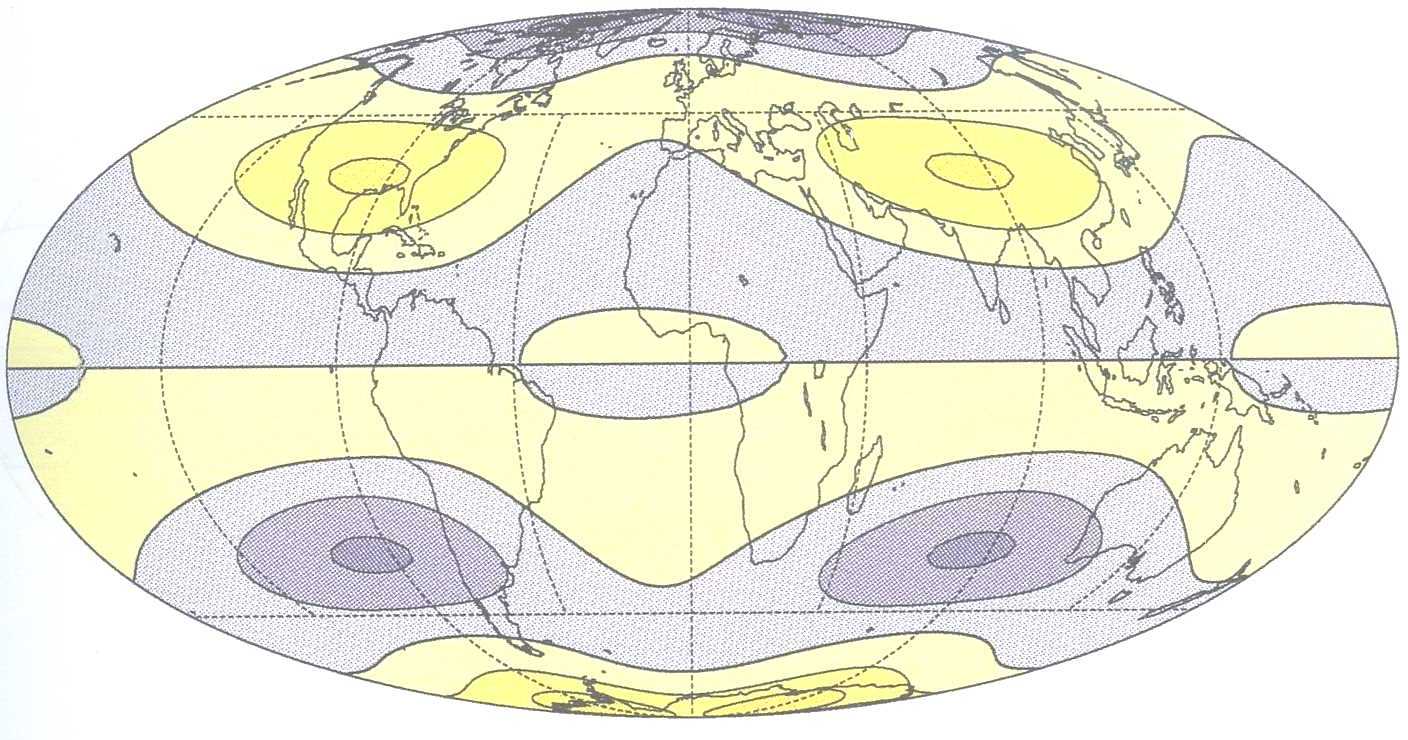

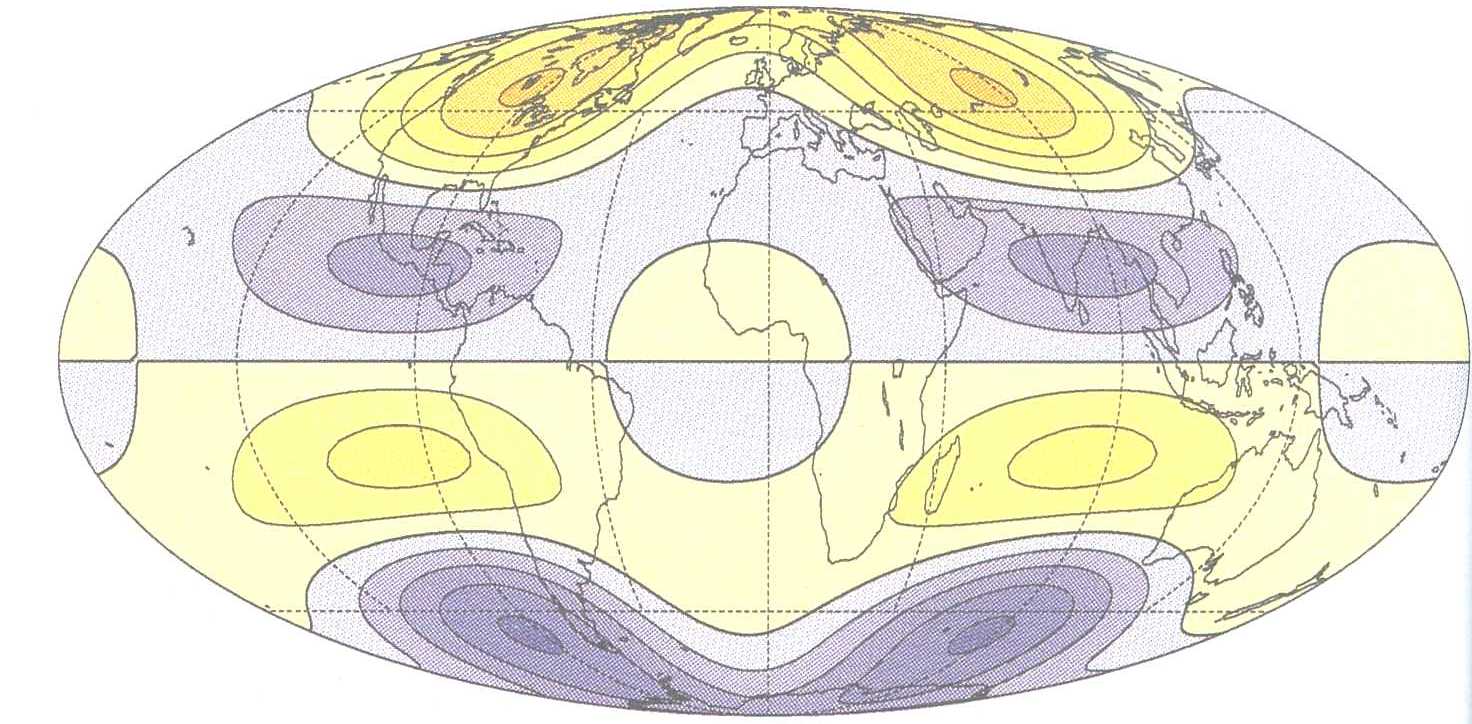

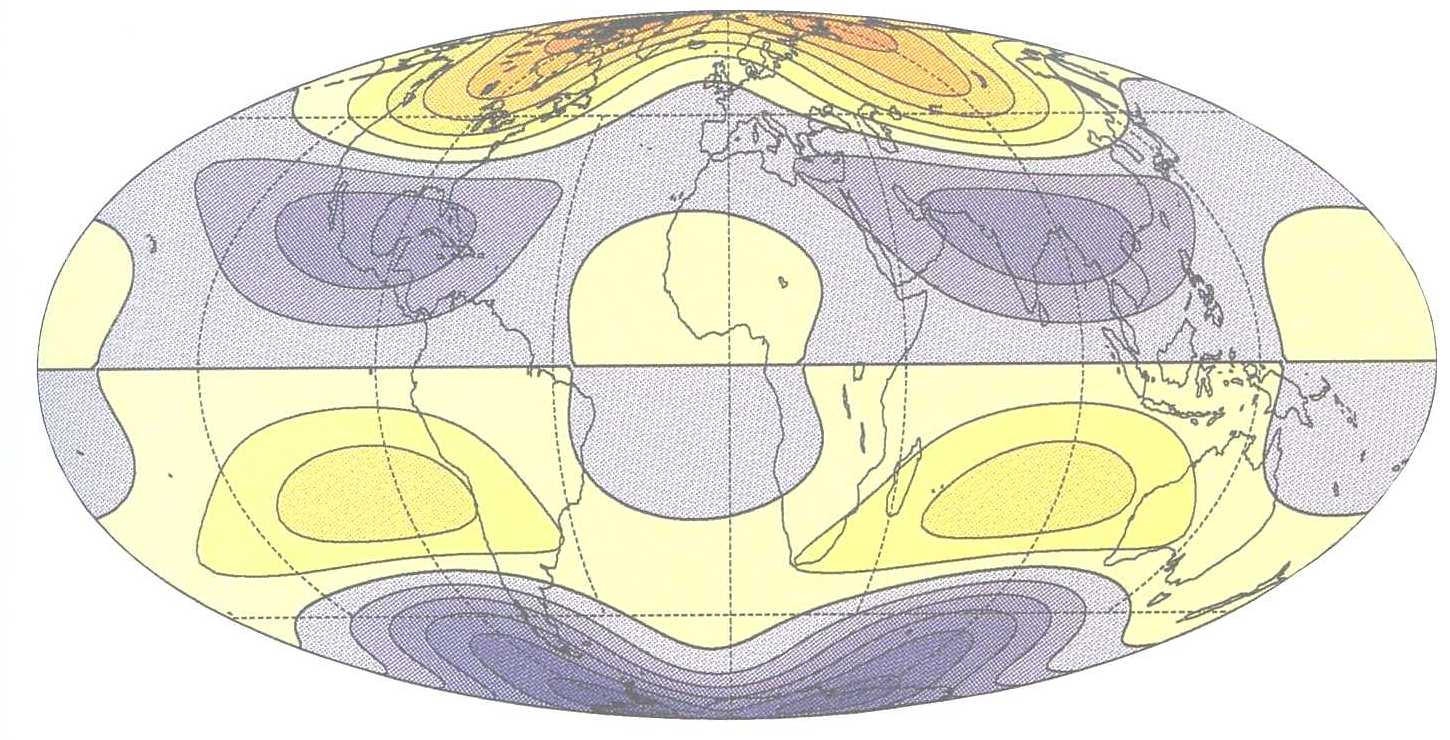

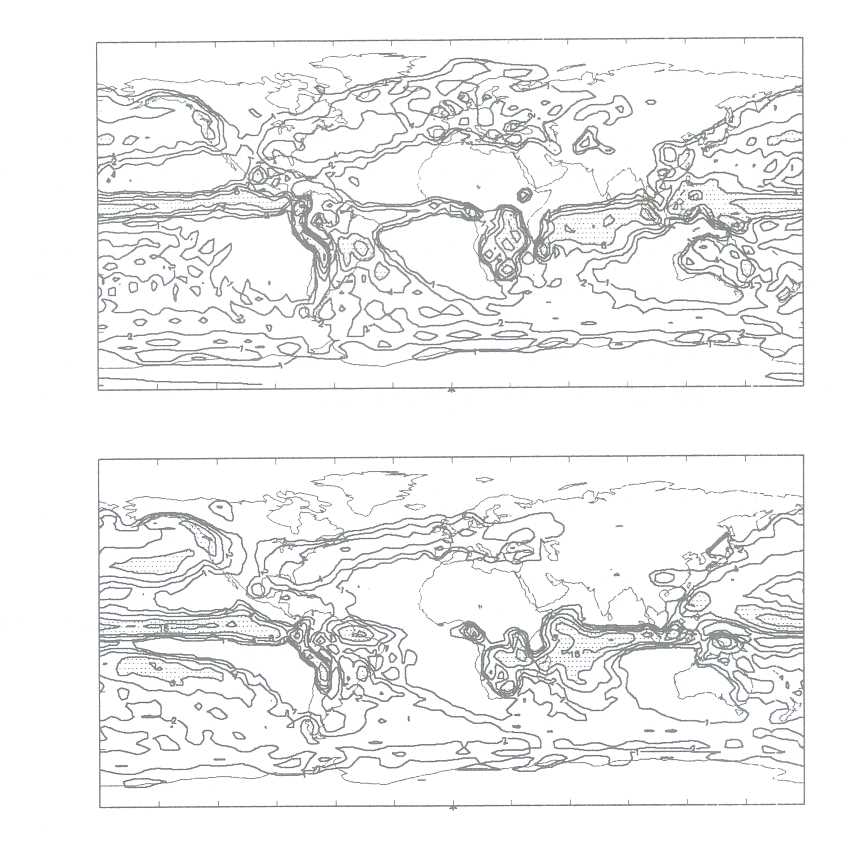

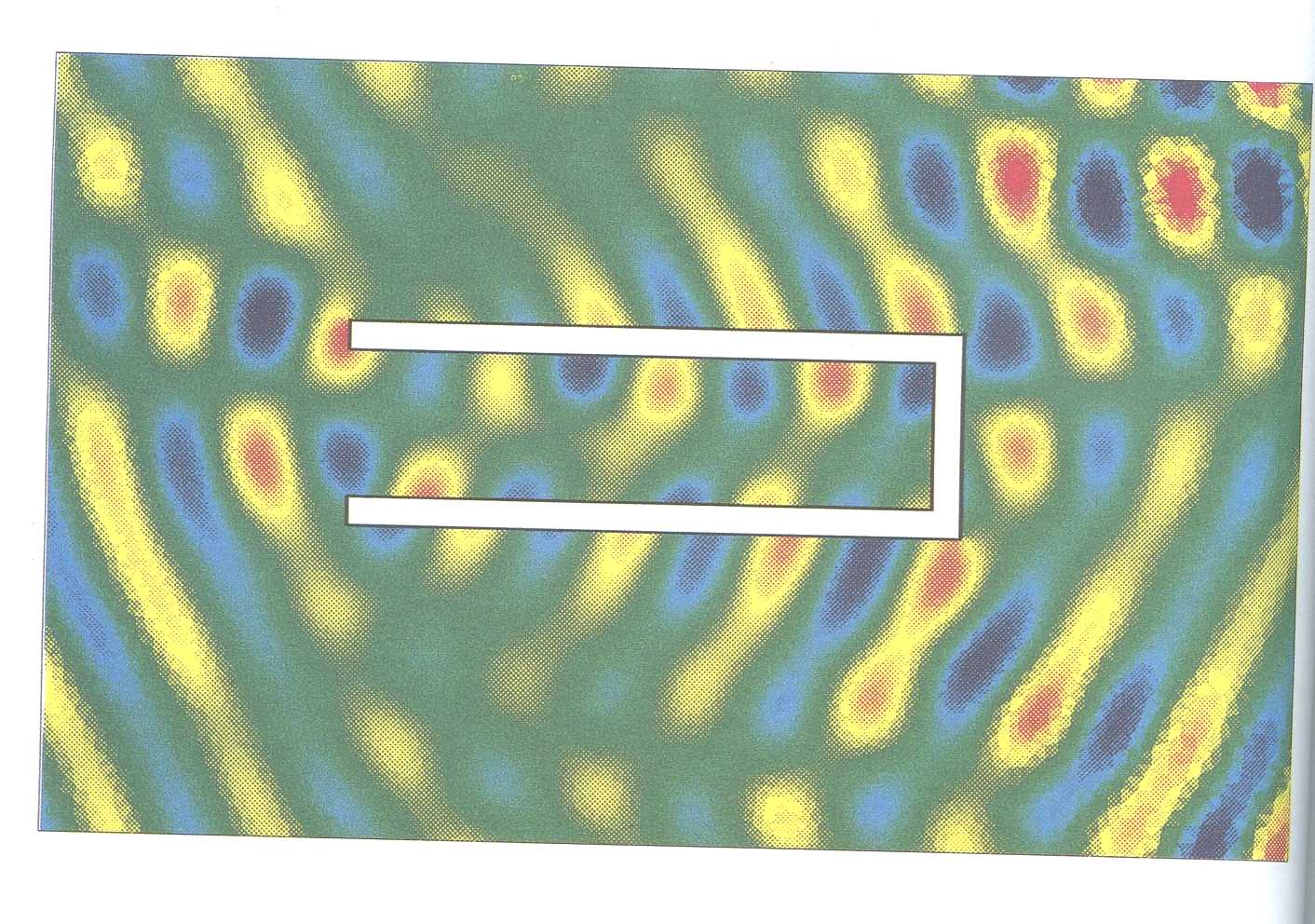

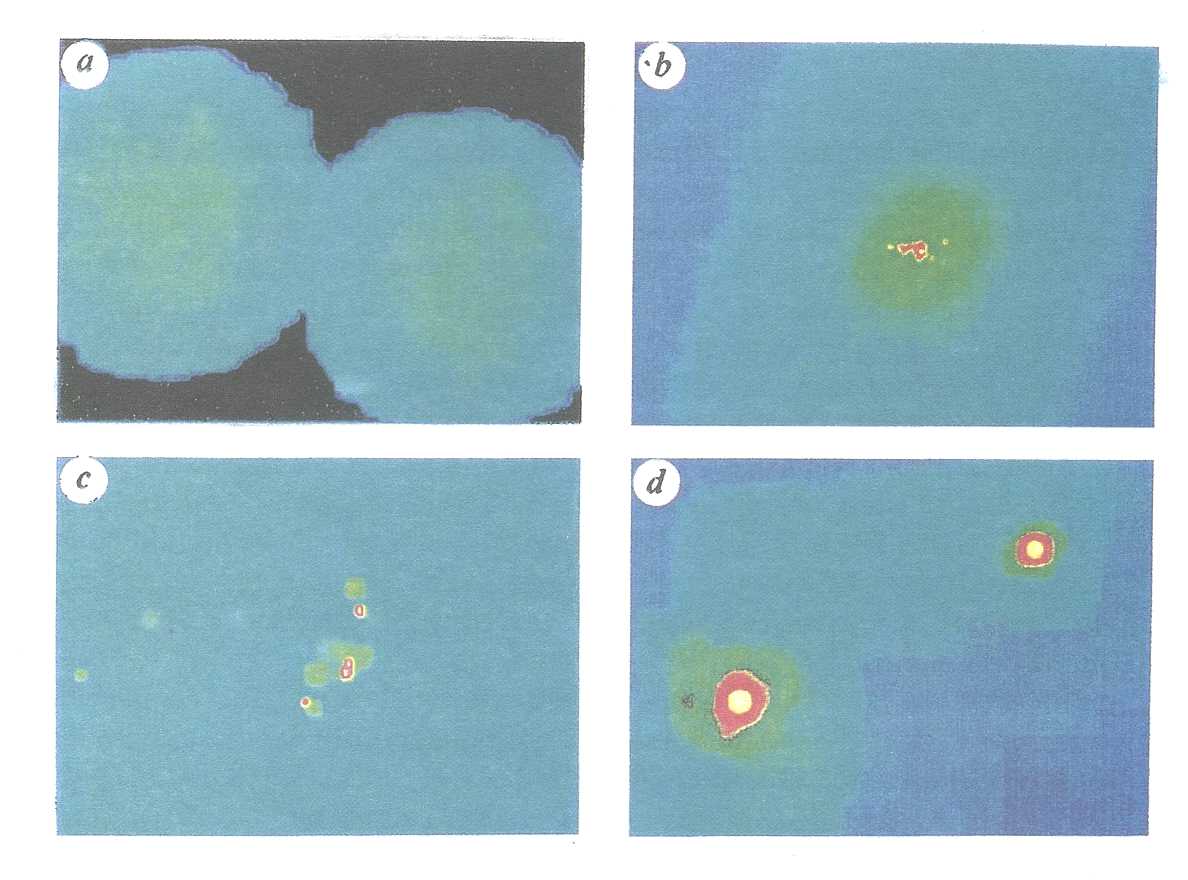

Recent work has focused on the role of meridional circulation in determining the time-dependence of the magnetic field: the presence of such an overturning circulation tends to stabilise an oscillatory magnetic field. The point at which the change-over in stability from stationary to oscillatory solutions occurs has been investigated in some detail, and we hope that it will shed new light on the reversal behaviour of the Earth's magnetic field. The figures enclosed show examples of the radial magnetic field at the outer surface of the dynamo region, for both stationary and oscillatory fields, from work carried out on the Y-MP Cray.

It is hoped that future work, using more realistic velocity fields, and incorporating dynamic effects, will lead to greater understanding of the interaction of planetary scale velocities and magnetic fields. Such work will require even more intensive calculations to be carried out.

The May 23, 1989 Macquarie Ridge earthquake (Mw = 8.2) was the largest to have occurred globally in the last fourteen years. In addition, it was the largest to have occurred on the plate boundary between the India/Australia tectonic plate and the Pacific plate, south of New Zealand, in more than seventy years. It is also the largest known submarine strike slip earthquake. (An earthquake is called "strike slip" if the net slip is practically in the direction of the fault strike.) At the time of the event, the newly installed worldwide network of seismometers was in operation. These instruments are "broad-band", that is, they have a flat response over a very broad frequency range (100 s period to 1 Hz frequency) and have a very large dynamic range, faithfully recording ground motions for magnitude 8 earthquakes at distances as close as 30°. This was therefore the largest well-recorded strike slip earthquake and offered a unique opportunity for its study in detail.

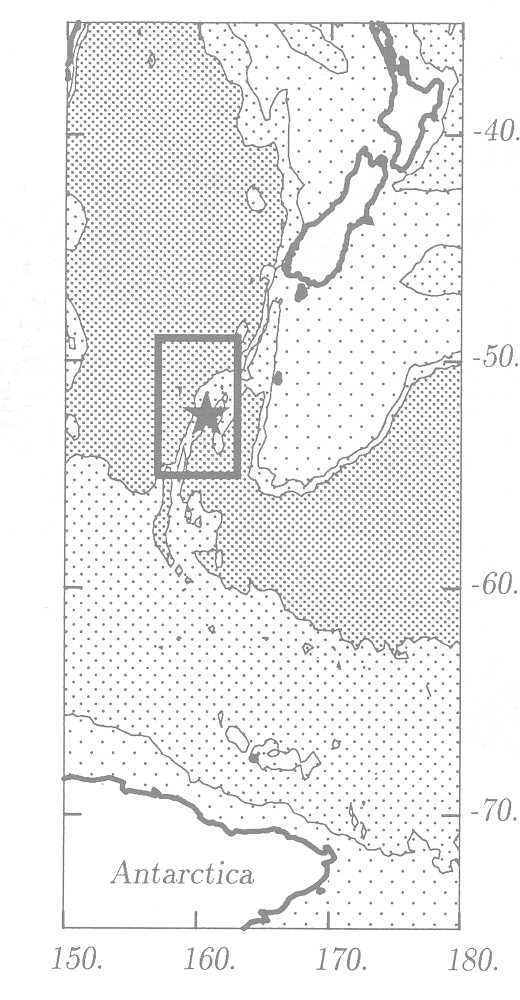

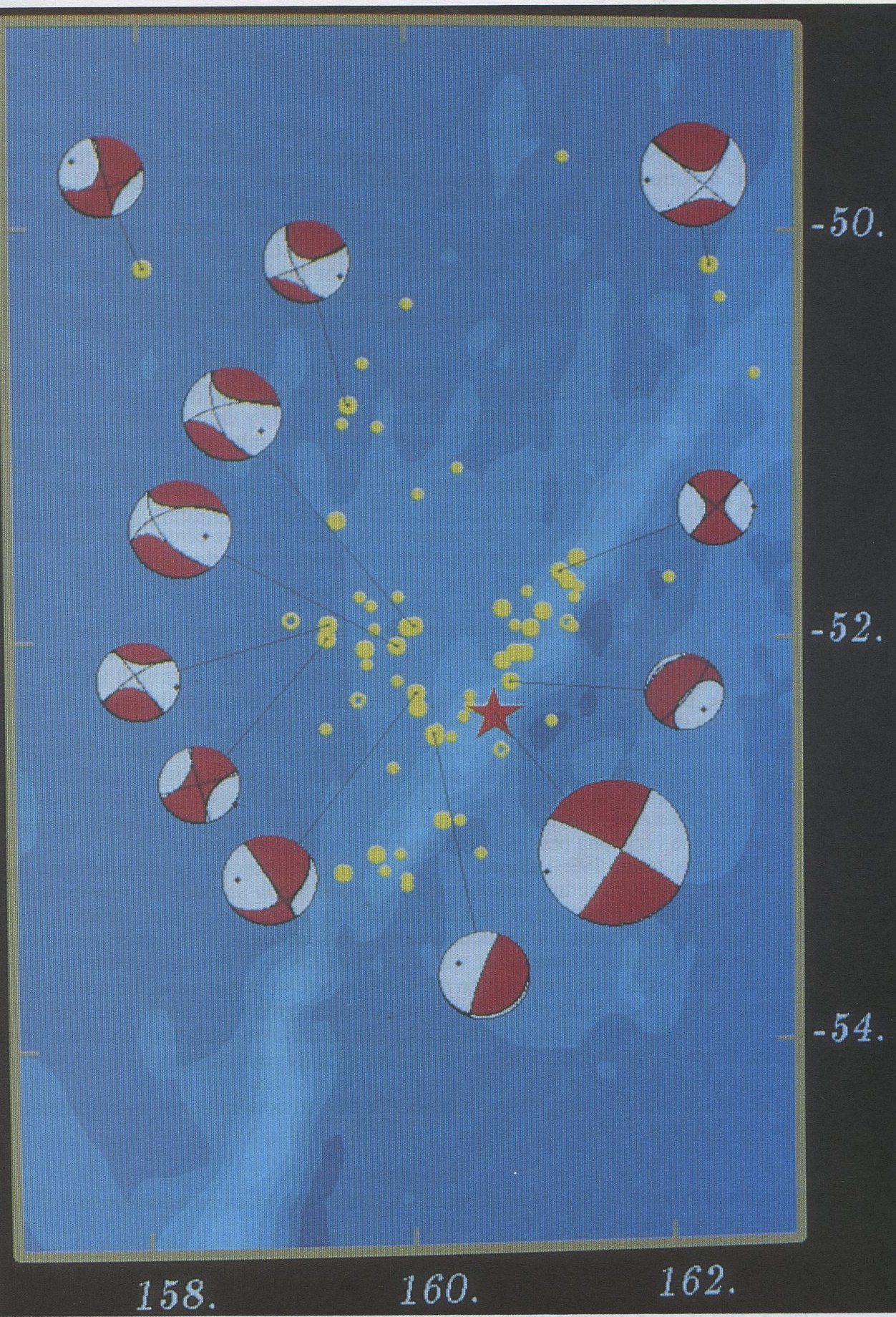

Figure 1 shows a map of the Macquarie Ridge (MR) area with bathymetric contours and the location of the Macquarie Ridge earthquake. The Macquarie Ridge is seen to extend south from South Island, New Zealand. The India/Australia plate is subducting beneath the Pacific plate in the northern and southern parts of the MR, but on the portion of the plate boundary where the 1989 event occurred the motion is primarily of strike-slip type.

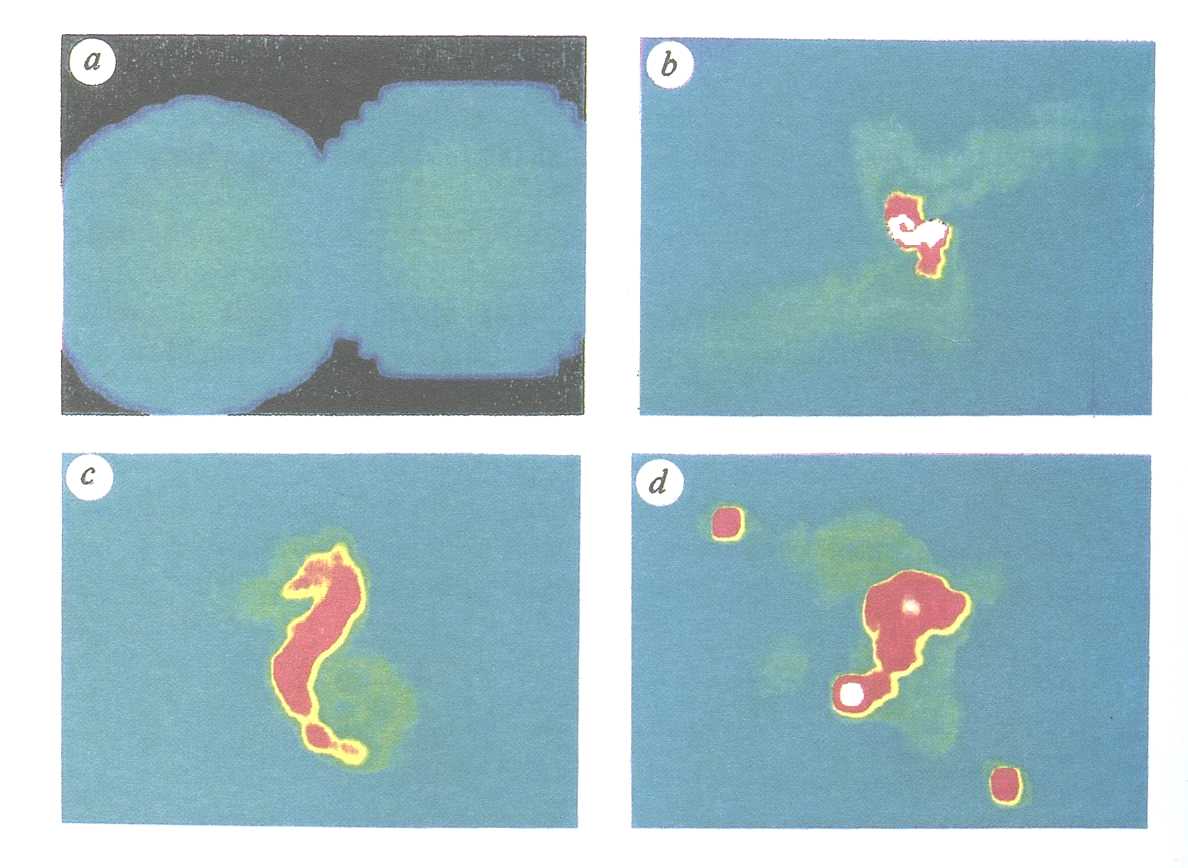

The smaller events which accompany great earthquakes ("aftershocks") generally give an indication of the ruptured area of the fault. Such an aftershock study was made and shows (Figure 2) that there were remarkably few and small aftershocks for an event of this size. The aftershocks were distributed along a 220 km portion of the India/Australia-Pacific plate boundary and indicate that the motion was bilateral on a vertical fault. In addition, the earthquake reactivated a 175 km section of a fault to its west which had been dormant at least since 1964 (as seen from the study of the seismicity on the central portion of the MR in the 25 year period before the earthquake), and 44% of the aftershocks occurred on this feature. Moreover, the largest aftershocks in the five month period following the event occurred not on the main fault plane but on the reactivated one. Based on available bathymetry, gravity and magnetic anomaly data from the region, this reactivated feature is interpreted as being an old oceanic fracture zone and hence a pre-existing zone of weakness. The aftershock distribution and the associated faulting mechanisms (Figure 2) are interpreted in terms of the tectonics of the region and suggest that a triangular piece of crust to the west of the Macquarie Ridge is being "squeezed" to the north and west between the India/Australia and Pacific plates (Das, 1992).

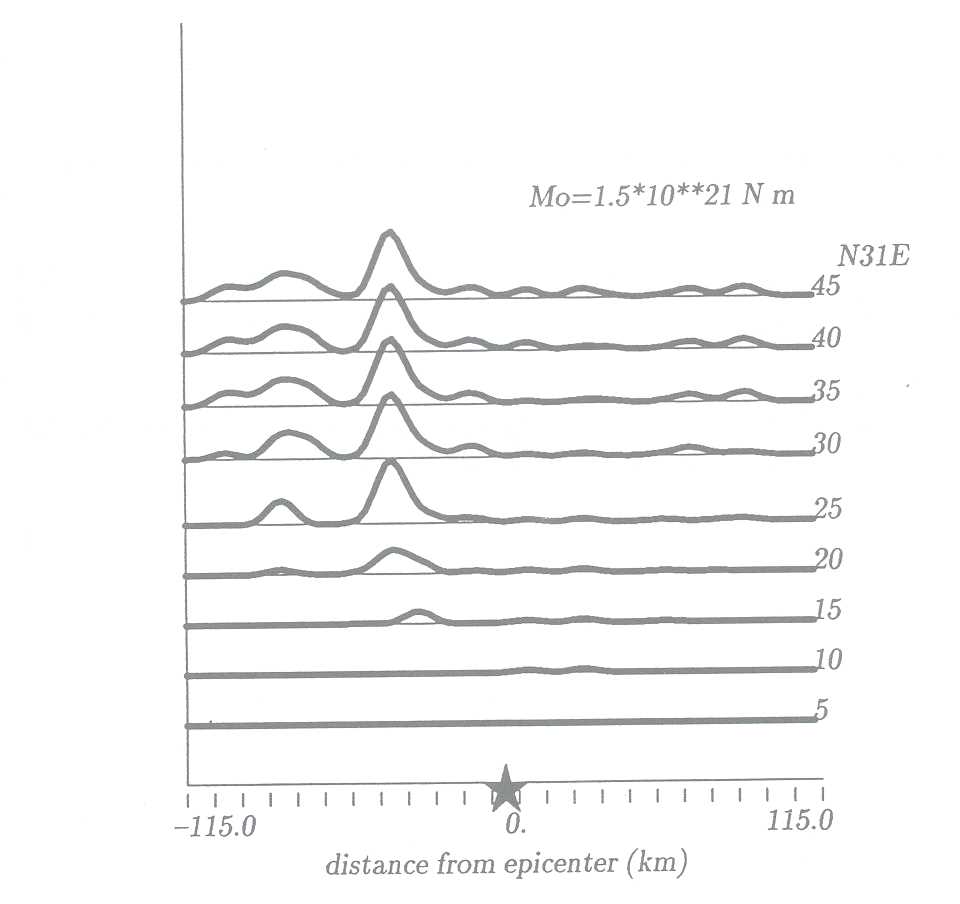

The evolution of slip rate with time as the fault ruptured during the earthquake was obtained by inversion of the amplitudes and shapes of compressional and shear waves that are transmitted through the earth and recorded by the seismometers located on the earth's surface. A rectangular section of the fault was divided into square cells and the source duration into discrete time steps. The method of linear programming, developed for this problem by Das and Kostrov (1990), was used for the inversion on the CRAY-YMP8 supercomputer at RAL. The method is an improvement of standard linear programming methods with proper protection against errors due to loss of accuracy that invariably occurs when working with very large matrices. The development and extensive tests that were required for this could not have been performed without the use of a supercomputer.

More than 100 inversions were performed in which material properties of the ruptured rock, the fault orientation, faulting duration and rupture area were varied. The weighting of data from different stations was also varied. It is well known that the inverse problem solved here is unstable and that additional constraints are necessary to stabilize the solution (Das and Kostrov, 1990). An implicitly imposed constraint is the size of the rupture area. Two explicitly imposed constraints are that the slip rate on the fault must be positive and that the seismic moment of the earthquake (defined as the average rigidity of the rock ruptured by the earthquake multiplied by the rupture area and the average slip on it) is equal to that obtained from the study of the very long period waves generated by the earthquake.
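The seismic moment constraint can be made concrete with a short calculation. Following the definition given above, the moment is M0 = μ A D (rigidity times rupture area times average slip), and the standard moment magnitude relation is Mw = (2/3)(log10 M0 − 9.1) with M0 in N m. The sketch below inverts that relation for the Mw = 8.2 quoted for this event and estimates the implied average slip. The 220 km length is taken from the aftershock extent reported here; the rigidity and the fault width are assumed values for illustration and are not results of the inversion.

```python
import math


def seismic_moment(rigidity_pa, area_m2, mean_slip_m):
    """M0 = rigidity * rupture area * average slip, in N m."""
    return rigidity_pa * area_m2 * mean_slip_m


def moment_magnitude(moment_nm):
    """Standard moment magnitude from the seismic moment in N m."""
    return (2.0 / 3.0) * (math.log10(moment_nm) - 9.1)


def moment_from_magnitude(mw):
    """Inverse of moment_magnitude."""
    return 10.0 ** (1.5 * mw + 9.1)


MW = 8.2                       # magnitude quoted in the text
FAULT_LENGTH_M = 220.0e3       # aftershock extent quoted in the text
ASSUMED_WIDTH_M = 30.0e3       # hypothetical down-dip width
ASSUMED_RIGIDITY_PA = 4.0e10   # hypothetical average rigidity

m0 = moment_from_magnitude(MW)
area = FAULT_LENGTH_M * ASSUMED_WIDTH_M
mean_slip = m0 / (ASSUMED_RIGIDITY_PA * area)

print(f"M0 = {m0:.2e} N m")
print(f"implied average slip = {mean_slip:.1f} m (for the assumed width and rigidity)")
```

In the inversion itself the moment acts as an equality constraint on the sum of the slip over all cells and time steps, which is one of the conditions that stabilise the otherwise non-unique solution.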

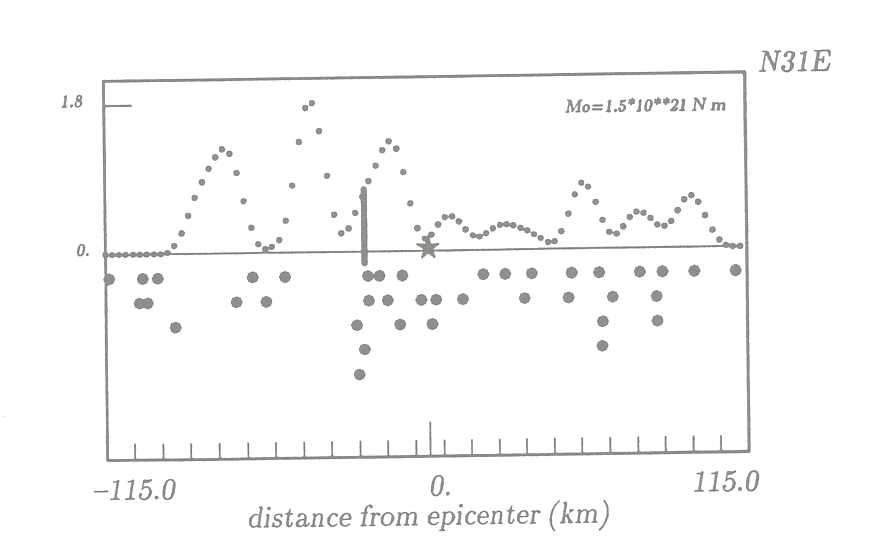

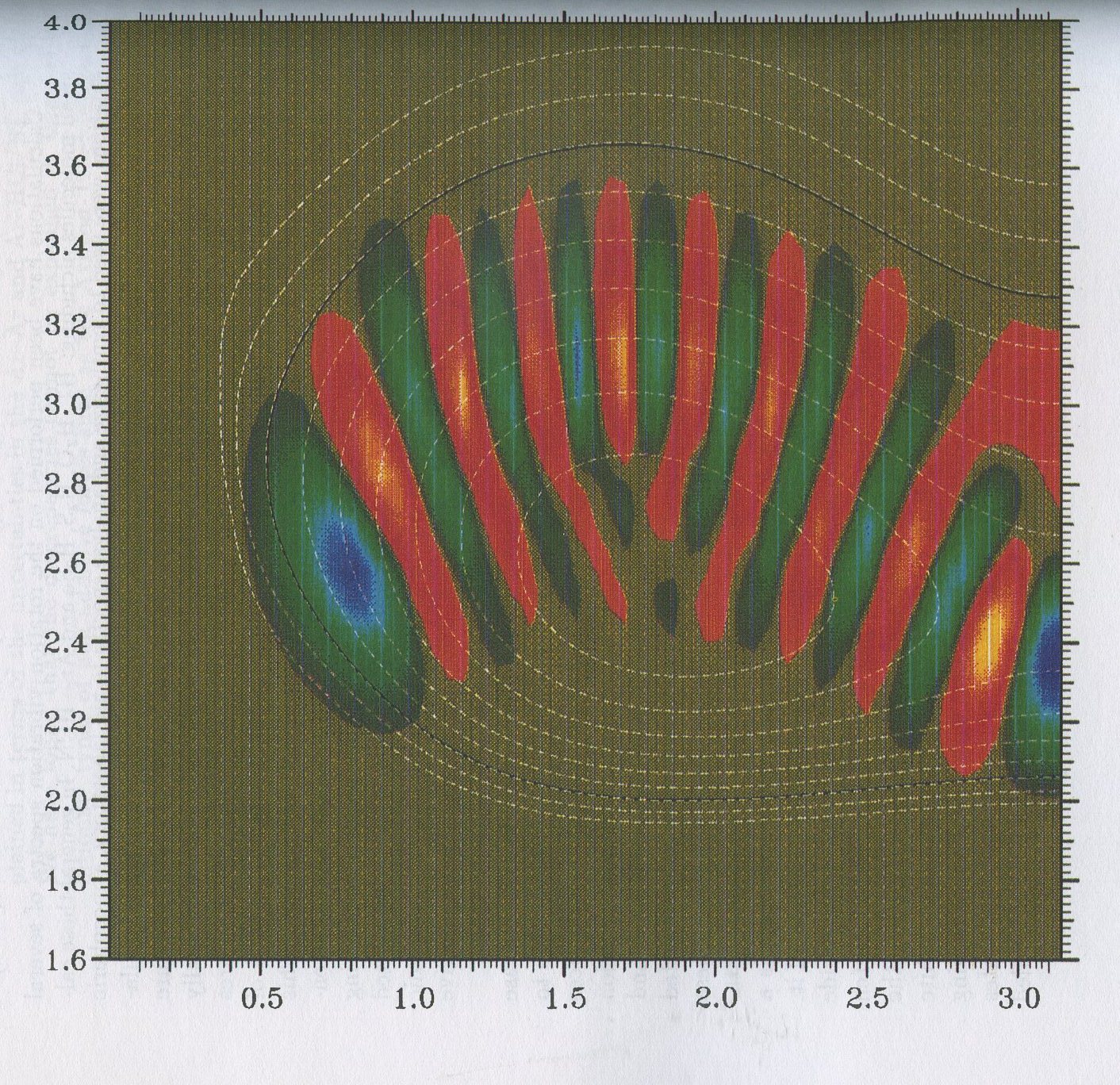

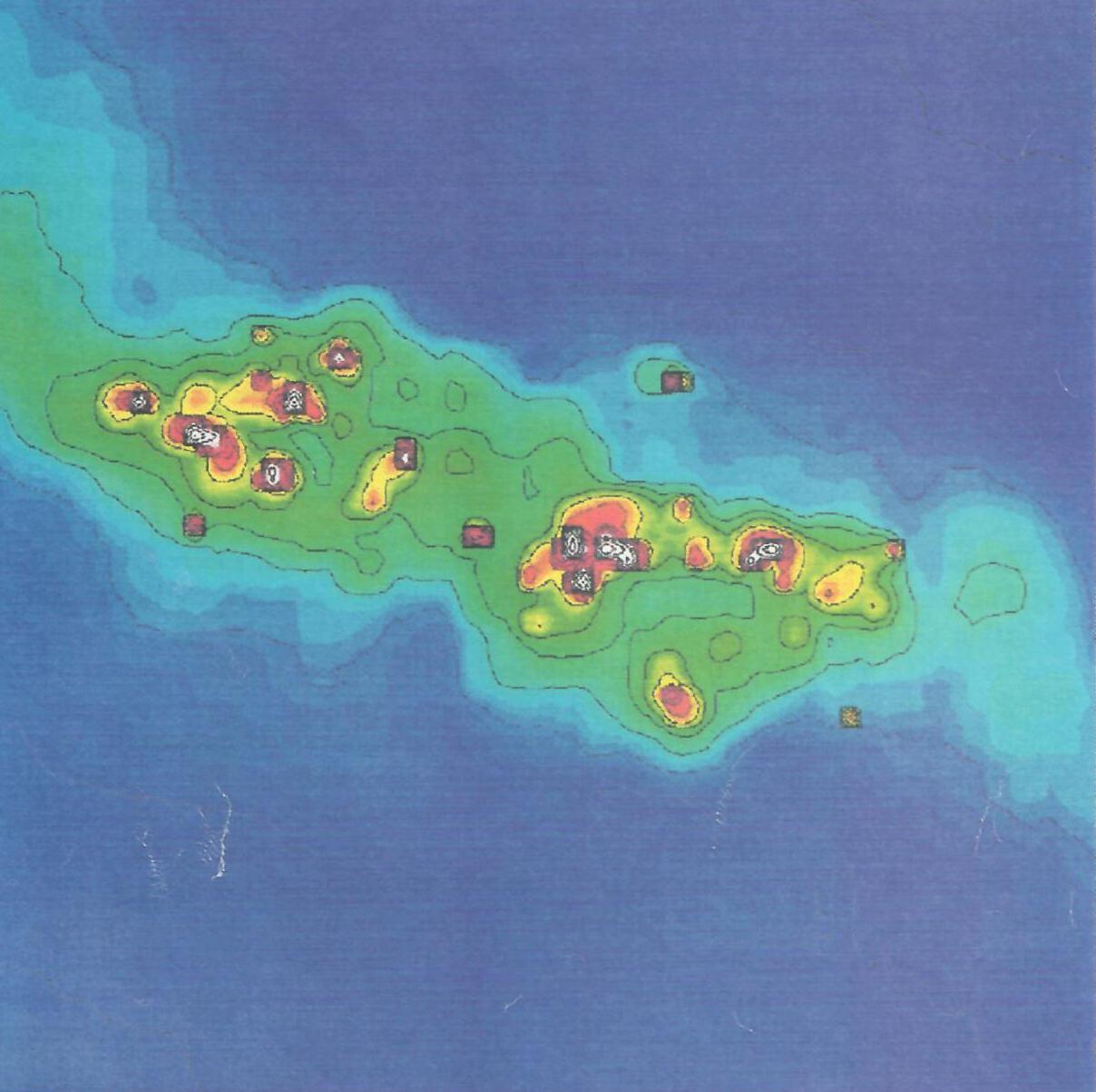

This study (Das, 1993) showed (Figure 3) that the rupture is bilateral, with the propagation first starting towards the north-east and a few seconds later towards the south-west. The moment release was low in the area where the rupture initiated, with the areas of highest moment release located towards the south-west. The average rupture speed was approximately the shear wave speed of the rock through which the fault ruptured. No simple relation between the moment release pattern and the distribution of aftershocks on the fault plane was found. Instead, the aftershock distribution is seen to be dominated by the intersection of the reactivated fault and the main fault plane, with most aftershocks occurring to the north of this intersection (Figure 4).

The study is still in progress with further additional constraints being considered (Das and Kostrov, 1993).

Das, S. (1992) Reactivation of an oceanic fracture by the Macquarie Ridge earthquake of 1989, Nature, 357, 150-153.

Das, S. and B. V. Kostrov (1990) Inversion for fault slip rate history and distribution using linear programming. The 1986 Andreanof Islands earthquake, J. Geophys. Res., 95, 6899-6913.

Das, S. and B. V. Kostrov (1993) Diversity of solutions of the problem of earthquake faulting inversion. Application to SH waves from the great 1989 Macquarie Ridge earthquake, Phys. Earth Planet Int., preprint.

Das, S. (1993) The Macquarie Ridge earthquake of 1989, Geophys. J. Int., in press.


The UK Universities Global Atmospheric Modelling Programme (UGAMP) is a community research project funded by NERC. UGAMP is a collaboration between six universities (Cambridge, East Anglia, Edinburgh, London (Imperial College), Oxford and Reading) and the Rutherford Appleton Laboratory.

UGAMP's aim is to improve our basic understanding of a variety of large scale atmospheric phenomena and processes that are important in predictions of our climatic-chemical environment, thereby advancing the ability to simulate them correctly in models of the climate system. UGAMP's approach is to use controlled experiments with a hierarchy of models of varying degrees of complexity as research tools to further basic understanding.

The UGAMP science plan is ambitious and broad, and it addresses the wealth of important atmospheric problems that need to be solved. It therefore covers a wide range of research areas, one of which is paleoclimate modelling. Models used to predict climate change have been developed in the context of our current climate. However, predictions are required for parameter values outside this range, and one very demanding test of such models is to apply them to past climates. The last glacial cycle (up to 120 kyr before present) should prove an especially good test for the models because there is relatively good data coverage. This is particularly true for the last glacial maximum, for which there is extensive land and sea coverage.

It is now widely accepted that the primary driving force of our Quaternary glacial/interglacial climate cycles is the change of seasonal distribution of insolation induced by orbital parameter changes. These changes are periodic, occurring at approximately 20, 40 and 100 kyr periods. However, the climatic response to these forcings is currently a maximum at the 100 kyr period, whereas the dominant forcing is at the 20 and 40 kyr periods. Thus feedback processes must be important, and the strongest of these is thought to be related to the growth of the ice sheets.

Of central importance to the growth of ice sheets is the mass balance, which strongly depends on the simulated temperature and precipitation patterns. These are a function of elevation and the general circulation. In particular, the mid-latitude depressions (especially the storm tracks) play a vital role in transporting heat, moisture and momentum towards the pole and onto the ice sheets.

In order to model mid-latitude depressions accurately, general circulation models (GCMs) have to be used. These types of models cannot be run for 100,000 years. Instead, a "snapshot" of the typical climate of a particular period is obtained by giving the model the appropriate boundary conditions. These include the ice sheet extent and elevation, the orbital parameters, the atmospheric CO2 concentration and, for an atmospheric GCM, the sea surface temperature. The model is then run for up to 10 simulated years and the results can be diagnosed. In the following section, we will describe preliminary results for a simulation of the last glacial maximum, 21 kyr BP.

The GCM that is used for this study is based on the UGAMP General Circulation Model (UGCM). The UGCM has been adapted for long-range climate simulations starting from the high resolution weather forecasting model developed and used by the European Centre for Medium-Range Weather Forecasts.

The model is modified to include the ice sheet and sea surface temperature reconstructions of CLIMAP (1981). The model is spectral and the spectral coefficients are truncated at total wavenumber 42 (corresponding to a horizontal grid of 128 × 64 longitudes/latitudes). This is the first time that such a high resolution model has been used for simulations of the last glacial maximum. The model also includes a seasonal cycle. Previous results from perpetual February and August simulations were reported in Valdes and Hall (1993).
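The correspondence between truncation at total wavenumber 42 and a 128 × 64 grid can be checked with the usual rule for spectral transform models: to evaluate quadratic terms without aliasing, the number of longitudes should be at least 3N + 1 for truncation N, with half as many Gaussian latitudes. The sketch below applies that rule and rounds the number of longitudes up to an even value with only the prime factors 2, 3 and 5. That rounding is a common FFT convention and is an assumption here, as are the function names; none of it is taken from the UGCM code.

```python
def is_fft_friendly(n):
    """True if n has no prime factors other than 2, 3 and 5."""
    for p in (2, 3, 5):
        while n % p == 0:
            n //= p
    return n == 1


def gaussian_grid(truncation):
    """Smallest (nlon, nlat) meeting nlon >= 3N + 1 with an FFT-friendly, even nlon."""
    nlon = 3 * truncation + 1
    while nlon % 2 or not is_fft_friendly(nlon):
        nlon += 1
    return nlon, nlon // 2


def spectral_coefficients(truncation):
    """Complex coefficients per field for triangular truncation (m >= 0 only)."""
    return (truncation + 1) * (truncation + 2) // 2


for n in (21, 42, 63):
    nlon, nlat = gaussian_grid(n)
    print(f"T{n}: {nlon} x {nlat} grid, "
          f"{spectral_coefficients(n)} spectral coefficients per field per level")
```

For truncation 42 the rule gives 3 × 42 + 1 = 127 longitudes, which rounds up to 128, and hence 64 latitudes, matching the grid quoted in the text.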

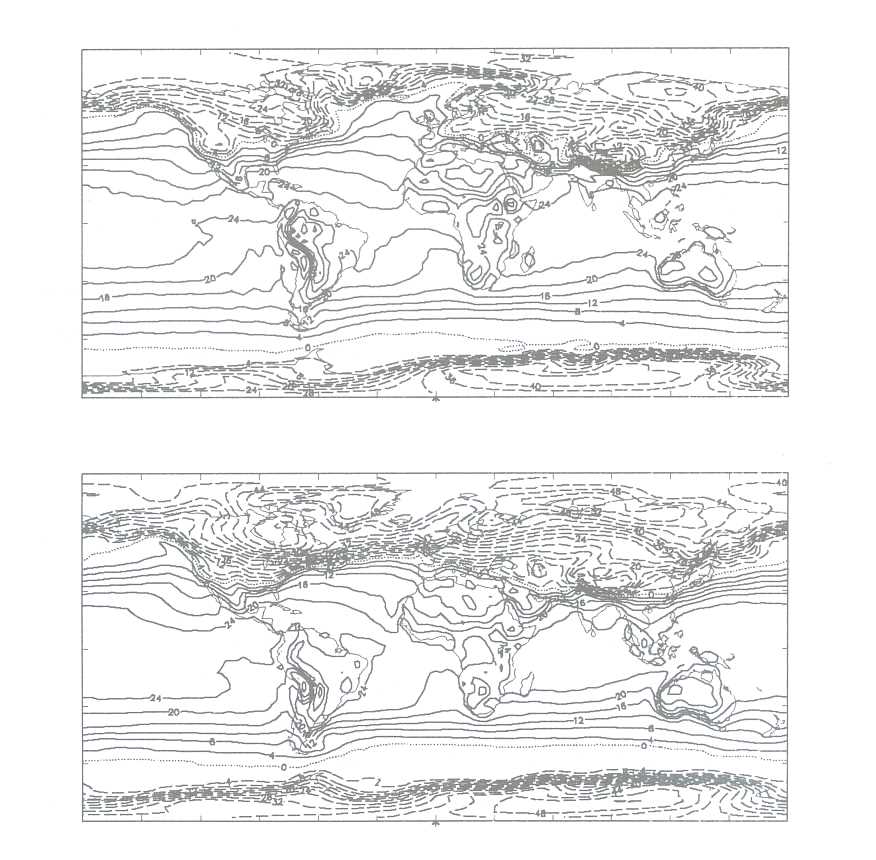

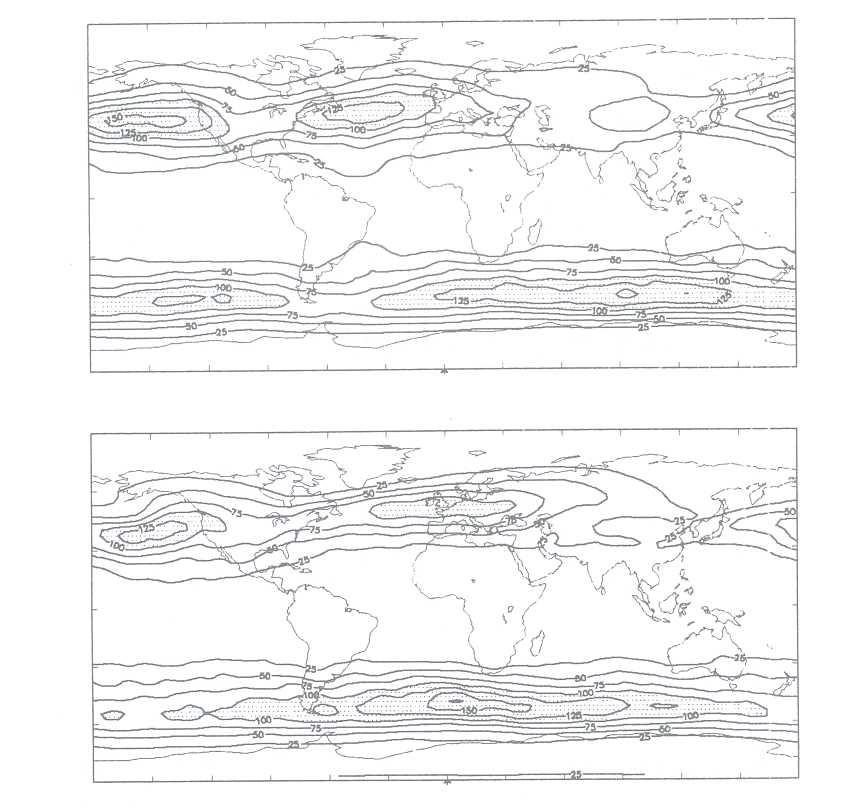

Figure 1 shows the model simulation of mean surface air temperature (at a height of 2m above surface) for the December - February season for (a) present day and (b) LGM conditions. The 0°C isotherm approximately shows the limit of the sea ice and is prescribed from the CLIMAP data set. The present day surface temperatures are remarkably good. This is particularly true for the Arctic region. Observations of January surface temperatures (Schutz and Gates, 1971), show Arctic regions dropping to -25 to -35°C, very similar to the model simulations. Similarly, land temperatures over the US and Eurasia are close to observations. Southern hemisphere temperatures are not quite so realistic. The model has Antarctic temperatures dropping as low as -50°C, whereas observations suggest temperatures nearer -35°C. However, this discrepancy occurs only in areas of high orography. In regions of low elevation, the model is closer to observations.

The simulation for the LGM shows enhanced temperature gradients in the Northern hemisphere, particularly over the Northern Atlantic. In these regions, the temperatures have dropped by up to 40°C. This is comparable to previous studies (e.g. Joussaume, 1992). Temperatures decrease rapidly with distance poleward of the ice edge and the enhanced temperature gradient extends across the entire ocean. The gradient is also strong over North America. This appears, in part, to be a product of the high elevations of the Laurentide ice sheet which cools to below -56°C. Similar low temperatures are found over the Greenland and Scandinavian ice sheets.

These changes are important for the mid-latitude depressions. The altered temperature gradients in the Northern Hemisphere result in modified activity in the storm track regions. Figure 2 shows the transient eddy kinetic energy per unit mass at approximately 250 mb for (a) present day and (b) LGM conditions. This has been temporally filtered, using the filter described in Hoskins et al. (1989), to include only the relatively fast moving mid-latitude depressions with time scales for growth and decay of the order of six days or less.
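As a minimal illustration of the diagnostic, the transient eddy kinetic energy per unit mass at a grid point is the time average of ½(u′² + v′²), where u′ and v′ are the transient parts of the horizontal wind components. The sketch below defines the transients simply as departures from the time mean. That is a simplification: the figures described here use the band-pass filter of Hoskins et al. (1989), which is not reproduced. The function name and the wind values are hypothetical.

```python
def transient_eke(u_series, v_series):
    """Time-mean of 0.5*(u'^2 + v'^2), with primes as departures from the time mean.

    Inputs are wind components (m/s) at one grid point; the result is in m^2/s^2.
    """
    if not u_series or len(u_series) != len(v_series):
        raise ValueError("u and v series must be non-empty and of equal length")
    n = len(u_series)
    u_mean = sum(u_series) / n
    v_mean = sum(v_series) / n
    return sum(0.5 * ((u - u_mean) ** 2 + (v - v_mean) ** 2)
               for u, v in zip(u_series, v_series)) / n


# Hypothetical daily winds at one 250 mb grid point (m/s)
u_wind = [32.0, 41.0, 25.0, 38.0, 29.0, 45.0]
v_wind = [-4.0, 9.0, -12.0, 6.0, 2.0, -7.0]

print(f"transient EKE = {transient_eke(u_wind, v_wind):.1f} m^2/s^2")
```

Applying the same calculation at every grid point, after filtering, gives maps of the kind used to locate the storm tracks.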

The maxima over the Pacific, Atlantic, and Southern hemisphere oceans are commonly used as locators of the 'storm tracks'. The present day simulation is in reasonable agreement with observations (Hoskins et al., 1989). The peaks in the Pacific and Atlantic are similar to observations, but both storm tracks are further south than observed, by about 5°.

For the LGM simulation, the region of maximum eddy kinetic energy is considerably more confined meridionally, but extends much further into Europe. Thus over the Atlantic, the eddy kinetic energy is considerably reduced, whereas over Europe there is a substantial increase. This increase extends well into the continent, to 90°E. These changes are generally consistent with the changed surface temperature gradient. The temperature gradients at the edge of the sea ice result in mid-latitude depressions closely following the edge of the ice. The sharpness of the storm track is a result of the relative efficiency with which the depressions can transport heat, and the relatively narrow meridional band over which heat needs to be transferred with such strong temperature gradients.

Finally, we show the total precipitation for the December-February season for (a) the present day and (b) the LGM. In mid-latitudes, and especially in the European region, there is a dramatic change in the pattern. The precipitation is in a much narrower band but, like the storm track itself, it extends considerably further into Europe. The band of precipitation closely follows the edge of the ice.

For both ice sheets, there is accumulation virtually everywhere, both in the interiors of the glaciers and at the ice edges. However, the largest changes occur at the ice edge. There is substantial accumulation over the northern Rockies, over the Eastern seaboard of the U.S.A. and over Southern Europe. This accumulation is closely related to the changes in storm tracks and precipitation already discussed. There is also net accumulation of snow over the Tibetan plateau and the edge of the Antarctic continent (not shown). The former result is of interest in that the existence of a Tibetan ice sheet is controversial (Kuhle, 1987).

This has been a very brief description of one area of research within UGAMP. This work was carried out by P.J. Valdes, N.M.J. Hall and D. Buwen at the University of Reading. It shows that a GCM can be used to understand better the atmospheric transport of moisture onto the ice sheets. The GCM used was run on the SERC YMP/8. The 10 year LGM simulation described here required approximately 450 hours of CPU time on the YMP/8, generating some 15 Gbytes of data that needed to be analysed subsequently.

CLIMAP, 1981, Seasonal reconstructions of the earth's surface at the Last Glacial Maximum, Geological Soc. America, Map Chart Ser., MC-36, Boulder, Colorado.

Hoskins, B.J., H.H. Hsu, I.N. James, M. Masutani, P.D. Sardeshmukh and G.H. White, 1989: Diagnostics of the global atmospheric circulation. WCRP report 27.

Joussaume, S., 1991: Paleoclimatic tracers: An investigation using an atmospheric general circulation model under ice age conditions. Part 1: Desert dust. J. Geophys. Res. In press.

Kuhle, M., 1987: Subtropical mountain and highland glaciation as ice age triggers and the waning of the glacial periods in the Pleistocene. GeoJournal 14, p393-421.

Valdes, P.J., and Hall, N.M.J., 1993, Mid-Latitude Depressions during the last ice-age. Submitted to Proceedings of Nato Advanced Summer School on Palaeoclimates.

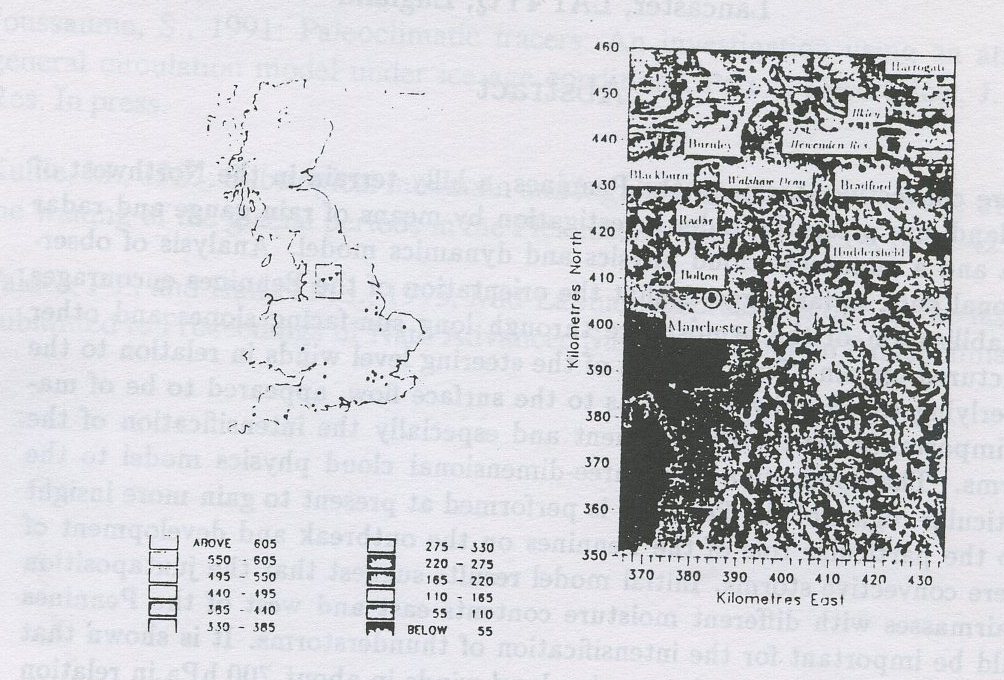

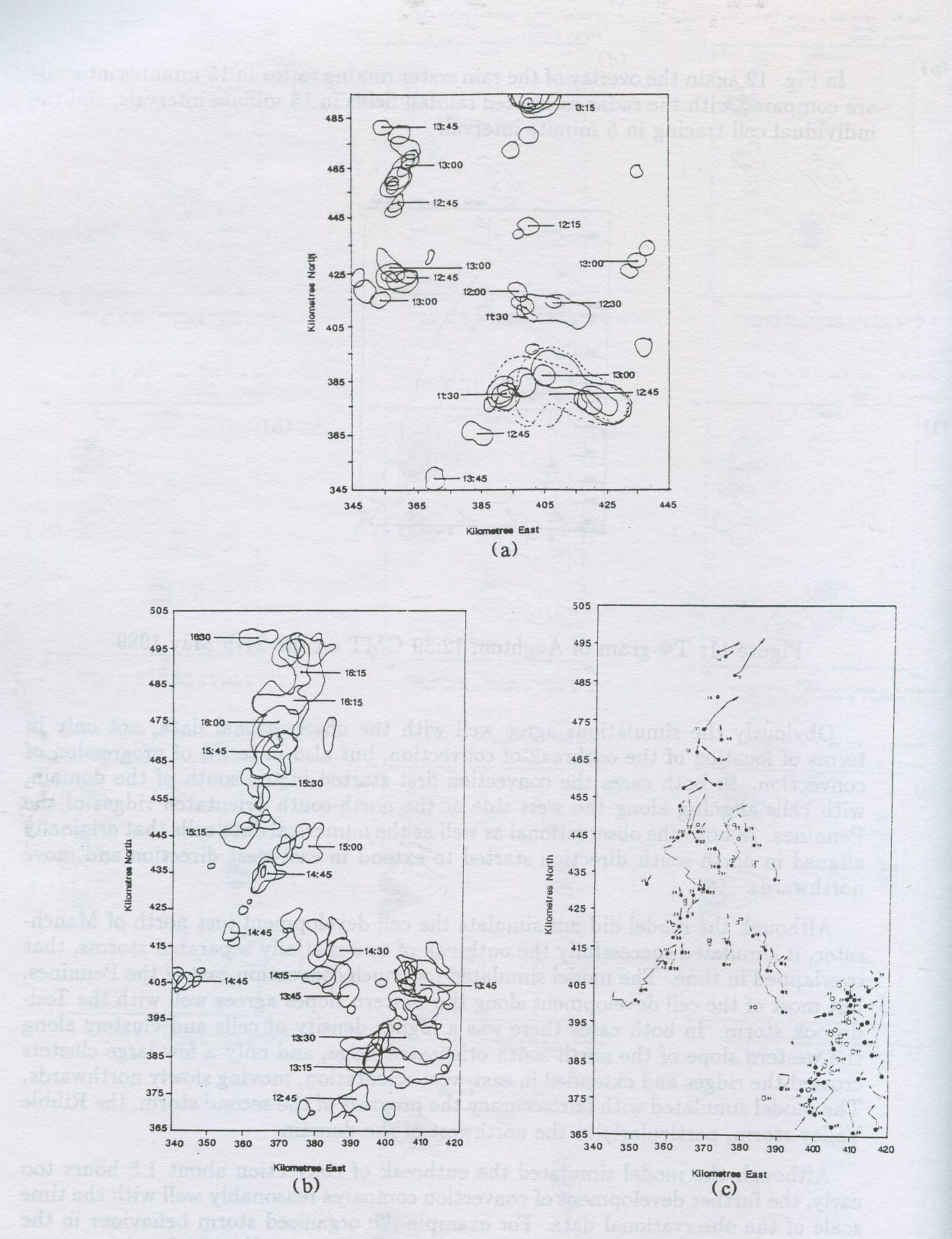

Severe convective storms in the Pennines, a hilly region in the northwest of England, are being investigated by means of rain gauge and radar data and a numerical cloud physics and dynamics model. Analysis of the observational data so far suggests that the orientation of the Pennines encourages destabilisation of the atmosphere through long sun-facing slopes and other structured terrain. The direction of the steering level winds in relation to the underlying topography, as well as to the surface flow, appears to be of major importance for the development and especially the intensification of the storms. A three-dimensional cloud physics model is currently being applied to the region to gain more insight into the role of the Pennines in the outbreak and development of severe convective storms. Initial model results suggest that the juxtaposition of air masses with different moisture contents east and west of the Pennines could be important for the intensification of thunderstorms. The wind direction from the surface up to the steering level at about 700 hPa, in relation to the underlying topography, is shown to have a major influence on the favoured locations of cell initiation. Similarly, urban heat island effects in the Bradford and Manchester areas have a strong impact on the outbreak of convection.

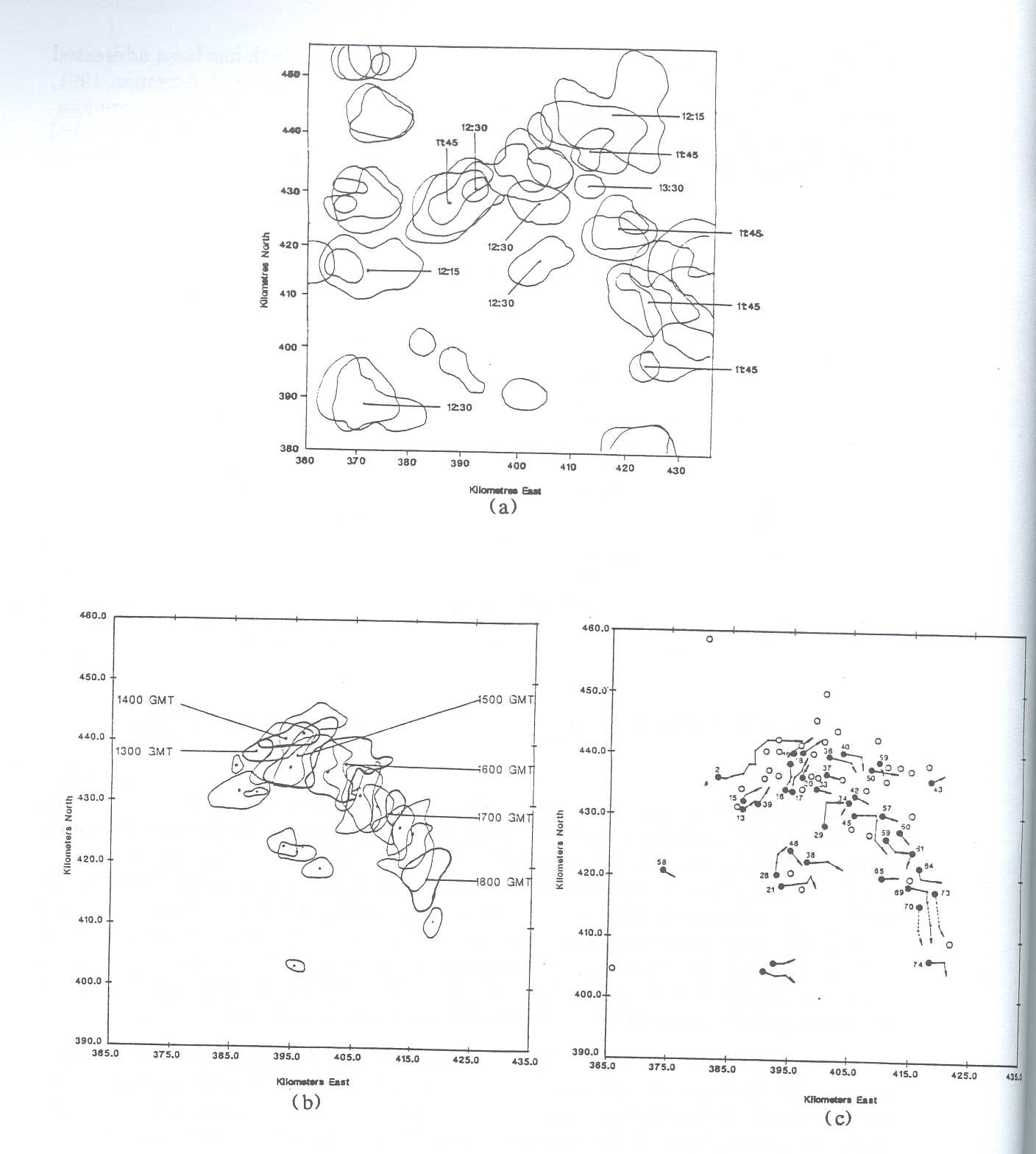

The outbreak of severe convective storms in Britain, with rainfall of more than 100 mm in 2 hours, is often considered to be concentrated in the lowlands of the southeast of England; only a few have been reported in the hilly terrain of Wales, northwest England or Scotland (Reynolds 1978). Of these few, three in northwest England occurred within a radius of 30 km in a particular part of the hilly Central Pennines, and they rank among the 10 highest short-period rainfalls in all Britain (Fig. 1).

Although reports of severe convective storms are biased towards areas with a dense gauge network, the scarcity of severe storms in hilly terrain on the one hand, and the spatial concentration of the few extreme rainfall events in the Central Pennines on the other, appear significant. The present study therefore aims to investigate the particular role of the Central Pennines in the initiation and further development of convective storms. At the present stage the emphasis is on the locations of cell outbreaks rather than on rainfall intensities.

The influence of the Pennines on the development of convection has been studied by means of gauge and conventional C-band weather radar data. However, the quality and resolution of both the gauge and the radar data were too poor to study the subject in sufficient detail. It was therefore decided to apply a numerical model to the area. Because of the highly three-dimensional character of thunderstorms, a three-dimensional model had to be chosen.

In the next section the model used for the present investigation is briefly described. In section 4 the set-up of the model and the results of three different simulations are presented, together with a comparison with observational data. In section 5 the results are summarised and conclusions are drawn.

The model used for the present study was developed by T.L. Clark and has since been improved and modified by, amongst others, Clark (1977, 1979), Clark and Farley (1984), Smolarkiewicz and Clark (1985) and Clark and Hall (1991). The model was chosen for this work because it has proved able to simulate convection successfully over structured terrain in past years.

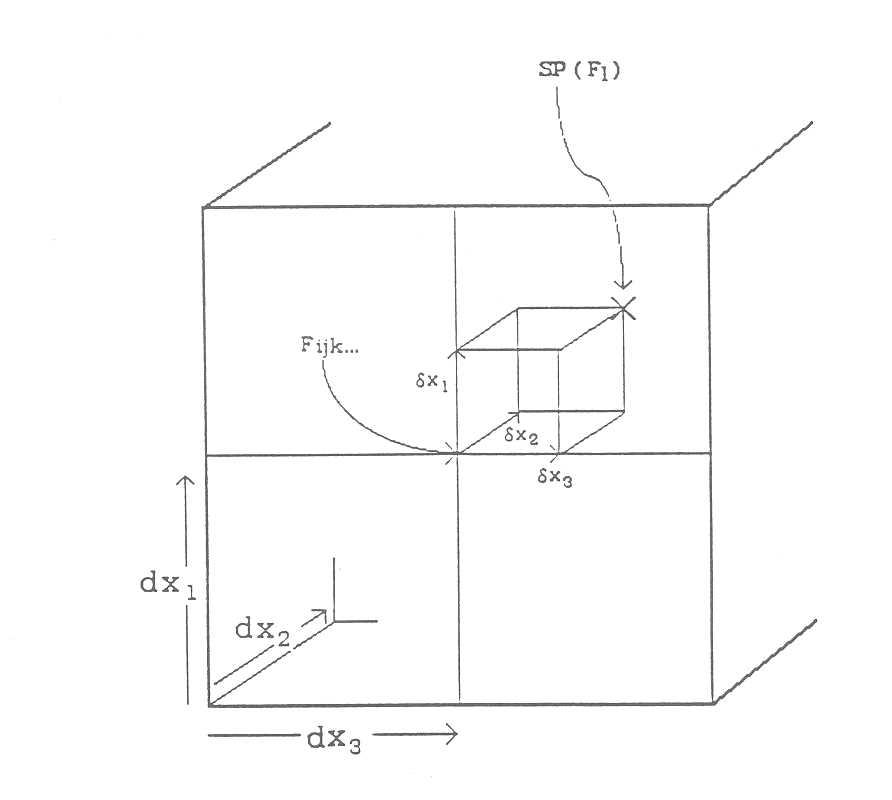

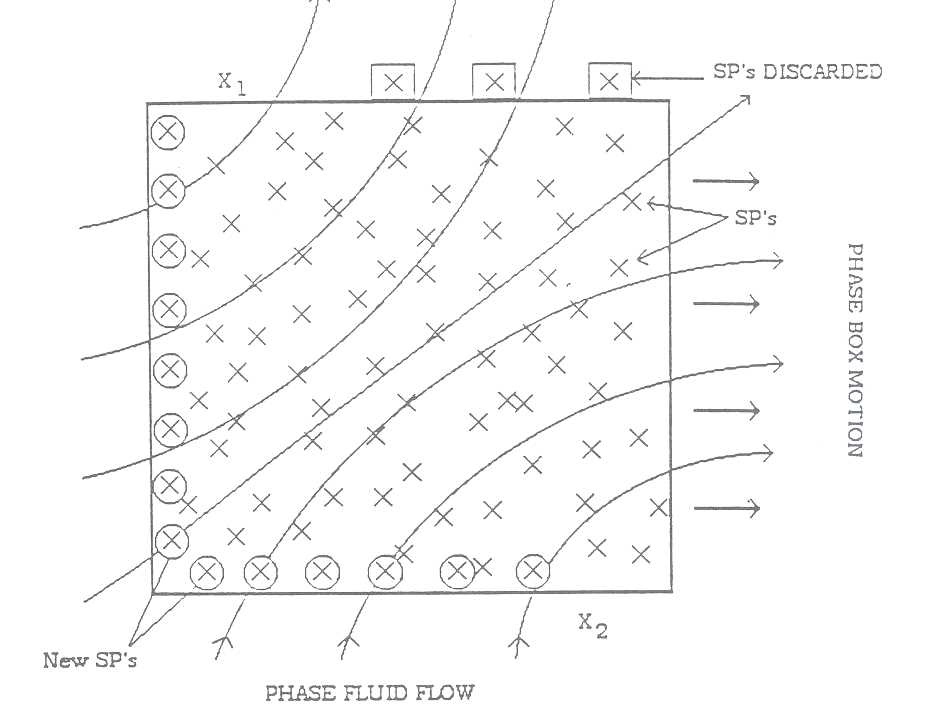

The model is a three-dimensional, second-order finite-difference storm model that is based on the equations of motion and the first law of thermodynamics.

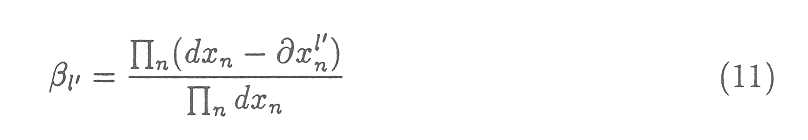

(The variables have been split into three components: the base state, x, which represents the atmospheric conditions for an idealized atmosphere of constant stability S; the difference between the constant stability atmosphere and a hydrostatically balanced atmosphere, x'(z); and the perturbations x''(x, t) that evolve with time and actually drive the motion.)

where ui are the components of the wind velocity, f is the Coriolis parameter, ρ is density, P is pressure, Θ is potential temperature, and x, y and z are Cartesian coordinates. δij is the Kronecker symbol, g is the acceleration due to gravity, ℇ = Rv/Rd - 1 is the ratio of the gas constants for water vapour (Rv) and dry air (Rd) minus 1, γ = cp/cv is the ratio of the specific heats, and qv,c,R,IA,IB are the mixing ratios of vapour, cloud water, rain water and the two ice types A and B. KH is the eddy mixing coefficient for heat, Cdk are evaporation, sublimation or nucleation rates, depending on the process described, and Sk is the transfer of cloud water to rain water. υij is the stress tensor, defined as

![]()

where Km is the eddy coefficient for momentum and Dij the deformation tensor.
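For orientation, the two thermodynamic constants defined above can be evaluated with commonly tabulated values. The numbers used here are textbook values assumed for illustration (Rd ≈ 287.0 and Rv ≈ 461.5 J kg-1 K-1, cp ≈ 1005 and cv ≈ 718 J kg-1 K-1); they are not taken from the model code, and Rv is taken to be the gas constant of water vapour.

$$\varepsilon = \frac{R_v}{R_d} - 1 \approx \frac{461.5}{287.0} - 1 \approx 0.61, \qquad \gamma = \frac{c_p}{c_v} \approx \frac{1005}{718} \approx 1.40$$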

The surface sensible heat flux, Ss, is specified dependent upon the incident solar radiation at z=0 as

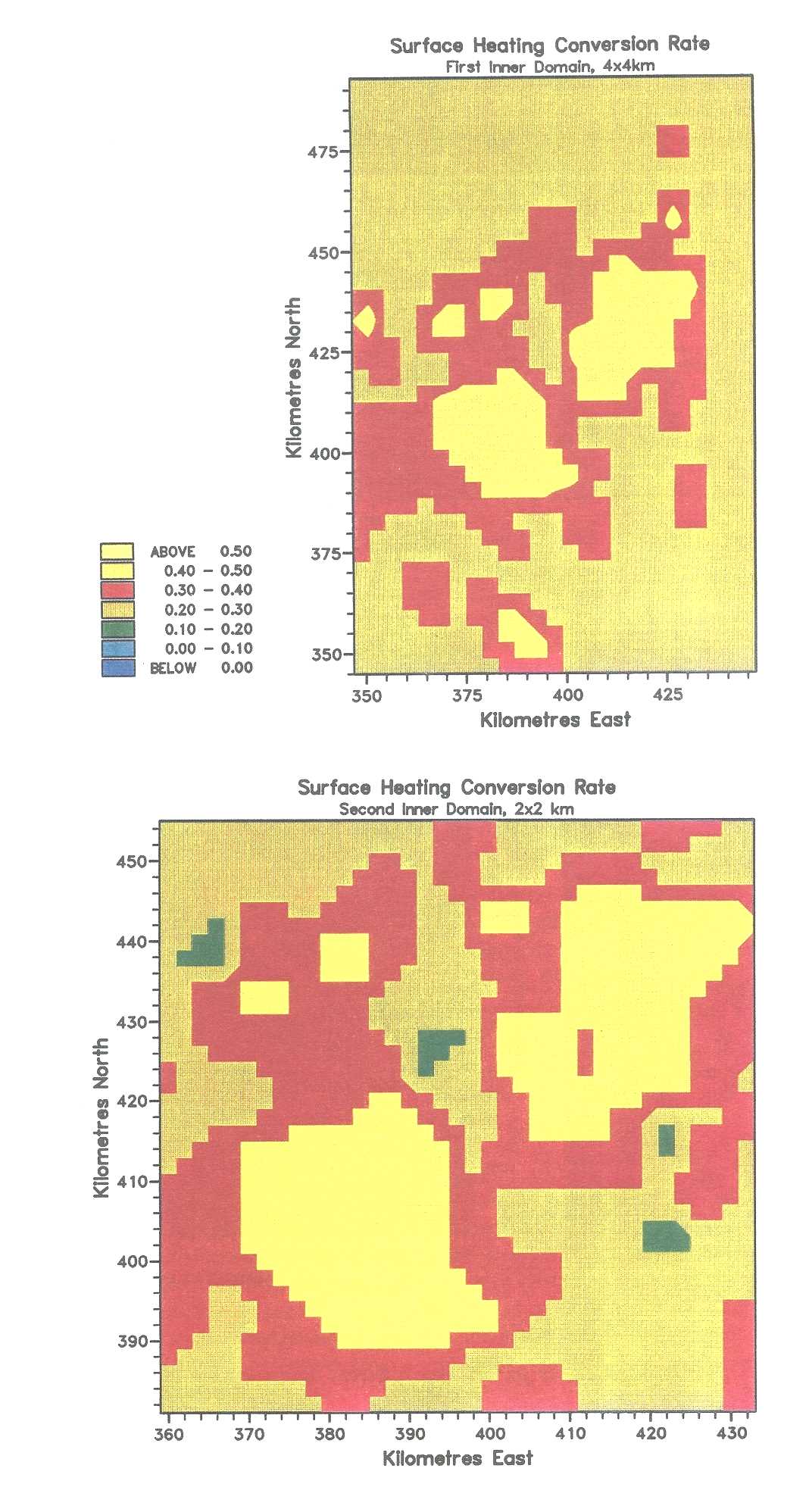

where μ(x, y) is a factor which determines the conversion rate from incoming solar radiation to sensible heat flux, So is the solar constant (1395 W m-2), Z is the zenith angle of the sun, φ is the latitude, δ the declination angle, H the hour angle, and hx,y are the gradients of the topography in the east and north directions respectively. In this study a latent heat flux, Si, has been added to the code in a similar way to the sensible heat flux, in order to account for a continuous inflow of moisture. The conversion factor μ(x, y) ranged between 30% (μ = 0.3) in urban areas, to account for the heat island effect, and very small values (μ ≈ 0.0) over the sea.
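The flat-terrain part of this heat flux specification can be sketched in a few lines of Python. This is a minimal illustration and not the model code: it assumes the flux takes the simple form μ So cos Z, uses the standard relation cos Z = sin φ sin δ + cos φ cos δ cos H, and leaves out the slope correction involving the topography gradients. The function names are hypothetical.

```python
import math

S0 = 1395.0  # solar constant in W m^-2, the value quoted in the text


def cos_zenith(lat_deg, decl_deg, hour_angle_deg):
    """Cosine of the solar zenith angle Z for a horizontal surface."""
    lat = math.radians(lat_deg)
    decl = math.radians(decl_deg)
    hour = math.radians(hour_angle_deg)
    return (math.sin(lat) * math.sin(decl)
            + math.cos(lat) * math.cos(decl) * math.cos(hour))


def sensible_heat_flux(mu, lat_deg, decl_deg, hour_angle_deg):
    """Assumed flat-terrain form mu * S0 * cos(Z); zero when the sun is below the horizon."""
    return mu * S0 * max(0.0, cos_zenith(lat_deg, decl_deg, hour_angle_deg))
```

For example, `sensible_heat_flux(0.3, 53.7, 20.0, 0.0)` would give the local-noon flux for a land point at roughly the latitude of the Central Pennines in late May; the latitude and declination in this call are illustrative values, not figures from the study.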

A simple method of cloud cover feedback to surface heating has also been added to the code. It was assumed that the shadow of clouds reduced the conversion of incoming solar radiation to sensible heat or latent heat by about 30% at the surface. The area shadowed by the clouds has been determined by simple geometry including the zenith angle and the azimuth angle of the sun and the dimensions and location of the cloud.
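The "simple geometry" of the cloud shadow can be illustrated as follows. This sketch assumes flat ground, a sun above the horizon, a solar azimuth measured clockwise from north, and a single cloud element at a known height; the model's own treatment of cloud dimensions is not reproduced, and all names are hypothetical.

```python
import math

SHADOW_REDUCTION = 0.30  # "about 30%" reduction quoted in the text


def shadow_position(cloud_x_km, cloud_y_km, cloud_height_km, zenith_deg, azimuth_deg):
    """Ground position (east, north) of the shadow of a cloud element.

    The shadow is displaced horizontally by height * tan(Z), in the
    direction pointing away from the sun.
    """
    zenith = math.radians(zenith_deg)
    azimuth = math.radians(azimuth_deg)
    offset = cloud_height_km * math.tan(zenith)
    return (cloud_x_km - offset * math.sin(azimuth),
            cloud_y_km - offset * math.cos(azimuth))


def shaded_conversion_factor(mu, in_shadow):
    """Reduce the conversion factor mu under cloud shadow, leave it unchanged elsewhere."""
    return mu * (1.0 - SHADOW_REDUCTION) if in_shadow else mu
```

With the sun due south at a zenith angle of 45°, a cloud element 2 km above the ground casts its shadow 2 km to the north of its own position, and a land point with μ = 0.3 inside that shadow would be heated with an effective factor of 0.21.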

The model equations are written in terrain-following coordinates following Gal-Chen and Somerville (1975), which simplify the lower boundary conditions over structured terrain. The cloud and ice parameterisation of the model is based on the ice parameterisation of Koenig and Murray (1976). The model has the option to nest several domains with different grid resolutions. The information from the outer domains is used to initialise the inner domains at the beginning of each time step. After completion of each time step the more detailed information gained by the inner domains is fed back into the outer domains. This saves computing time and allows larger areas to be simulated.

In the present study the newest version of the model available in June 1993 (G2TC36) has been applied. The model contains about 36 000 lines of FORTRAN code and was written for parallel processing on CRAY supercomputers; it is presently run on the YMP at the Rutherford Appleton Laboratory near Oxford. The model output is mainly graphical and uses NCAR Graphics, which has also been installed on the YMP. A simulation of 5 hours of real time consumes about 5000 s of user CPU time and 180 s of system CPU time.
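As a rough check on the cost quoted above, the ratio of simulated time to total CPU time (user plus system, assuming the two simply add) is

$$\frac{5 \times 3600\ \mathrm{s}}{5000\ \mathrm{s} + 180\ \mathrm{s}} = \frac{18000}{5180} \approx 3.5$$

so on these figures the model advances roughly three and a half times faster than real time in CPU terms.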

The model simulations aimed to investigate the role of the Pennines in the outbreak and development of convection under the influence of different wind directions and surface conditions. The internal fine-scale structure of individual cells was not investigated, because there were no observational data against which to verify the numerical results, and because it would have considerably increased the computing time.

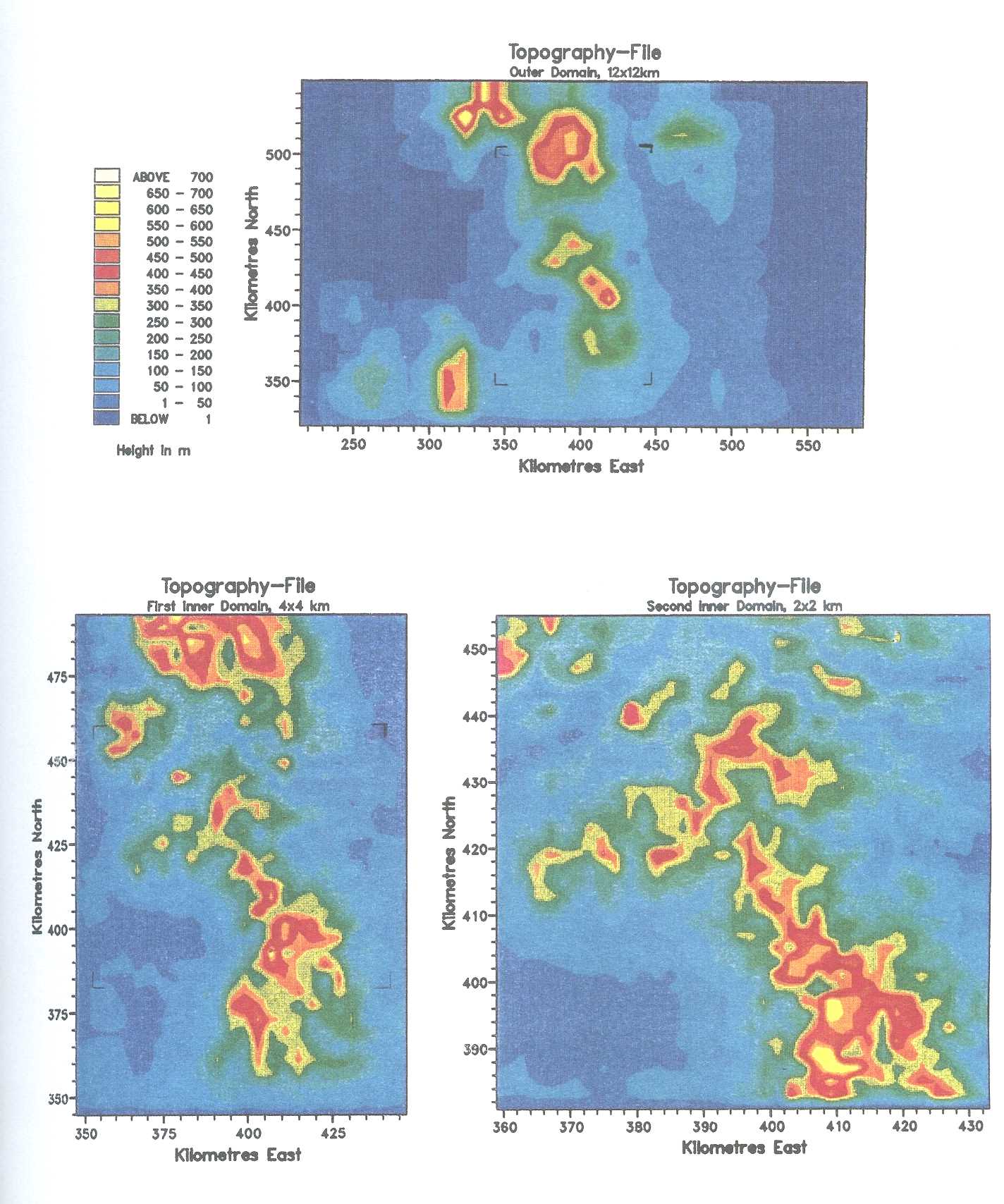

The model has been set up for this study with three nested domains. The first, outer domain extends from 215 km East and 321 km North to 599 km East and 561 km North in 12 × 12 km² grid steps (positions are given as National Grid references). At the southwest corner of the domain steep topography gradients are present due to the Welsh mountains. Test runs showed that the model copes with the elevated terrain at the boundaries, and that the topography data do not have to be smoothed in this particular area.

The second domain extends from 347 km East and 345 km North to 451 km East and 497 km North in 4 × 4 km² grid steps. In the east-west direction the second domain is not much larger than the third domain, because there are no major topographical features present that have to be resolved on a finer scale. In the north-south direction it extends further than the innermost grid in order to resolve the influence of the Lake District and the Yorkshire Dales, as well as of the structured terrain to the south.

The third domain extends from 359 km East and 381 km North to 435 km East and 457 km North in 2 × 2 km² grid steps. Although a grid size of 2 × 2 km² is still too coarse to resolve the fine-scale dynamic structures of convective cells, it represents a reasonable compromise between the resolution of convection and the consumption of computing time.
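The extents and grid steps of the three domains can be collected in a small Python sketch to check their sizes and nesting. The class and field names are hypothetical; only the corner coordinates and grid steps come from the text. The sketch counts grid intervals, since the number of grid points depends on how the model staggers its variables.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Domain:
    name: str
    west_km: int
    south_km: int
    east_km: int
    north_km: int
    spacing_km: int

    def intervals(self):
        """Number of grid intervals (east-west, north-south).

        The extents quoted in the text are exact multiples of the grid step.
        """
        return ((self.east_km - self.west_km) // self.spacing_km,
                (self.north_km - self.south_km) // self.spacing_km)

    def contains(self, other):
        """True if the other domain lies entirely inside this one."""
        return (self.west_km <= other.west_km and self.south_km <= other.south_km
                and self.east_km >= other.east_km and self.north_km >= other.north_km)


# Corner positions in km East / km North, as given in the text.
DOMAINS = [
    Domain("outer", 215, 321, 599, 561, 12),
    Domain("second", 347, 345, 451, 497, 4),
    Domain("inner", 359, 381, 435, 457, 2),
]

for domain in DOMAINS:
    print(domain.name, domain.intervals())
```

On these figures the outer domain spans 32 × 20 intervals, the second 26 × 38 and the innermost 38 × 38, and each domain lies entirely within the next coarser one.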

The topography for most parts of the two inner domains was provided by the Meteorological Office; the data for the outer domain were extracted from Ordnance Survey maps.

The conversion rate μ from incoming solar radiation to sensible heat flux was also estimated from Ordnance Survey maps. It was assumed that the average conversion rate over land is 30%, but that when urban heat island effects are included cities have a conversion rate of 40%, whereas rivers and lakes have only 20%. In runs without urban heat island effects the conversion factor was set to 30% throughout the inner domains. In order to simulate converging winds east and west of the Pennines, the conversion rate in the outer domain was set to zero over sea and to 30% over land. This produced a sea breeze effect, which in turn resulted in convergent winds in the two inner domains. If no converging winds were wanted, the conversion factor was set to the same value over sea and over land. The topography of each domain and the map of the conversion factor for the two inner domains are shown in Figs. 2 and 3.
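The rules for the conversion factor described above can be summarised in a short sketch. The surface category names and function names are hypothetical, and the sketch assumes that "set equal over sea and over land" means the land value of 30% is used everywhere, as in run FER3DL.

```python
LAND_MU = 0.30          # average conversion rate over land
CITY_MU = 0.40          # cities, when urban heat island effects are included
INLAND_WATER_MU = 0.20  # rivers and lakes, when urban heat island effects are included
SEA_MU = 0.0            # sea, when converging winds are to be generated


def inner_domain_mu(surface, urban_heat_island):
    """Conversion factor in the two inner domains; surface is 'land', 'city' or 'water'."""
    if not urban_heat_island:
        return LAND_MU  # uniform 30% when no heat island is simulated
    return {"land": LAND_MU, "city": CITY_MU, "water": INLAND_WATER_MU}[surface]


def outer_domain_mu(surface, converging_winds):
    """Conversion factor in the outer domain; surface is 'land' or 'sea'."""
    if surface == "sea" and converging_winds:
        return SEA_MU   # land-sea contrast drives the sea breeze
    return LAND_MU      # assumed: same value over sea and land otherwise
```

In this form, a run without convergence or heat island effects corresponds to `converging_winds=False` and `urban_heat_island=False`, which gives μ = 0.3 everywhere.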

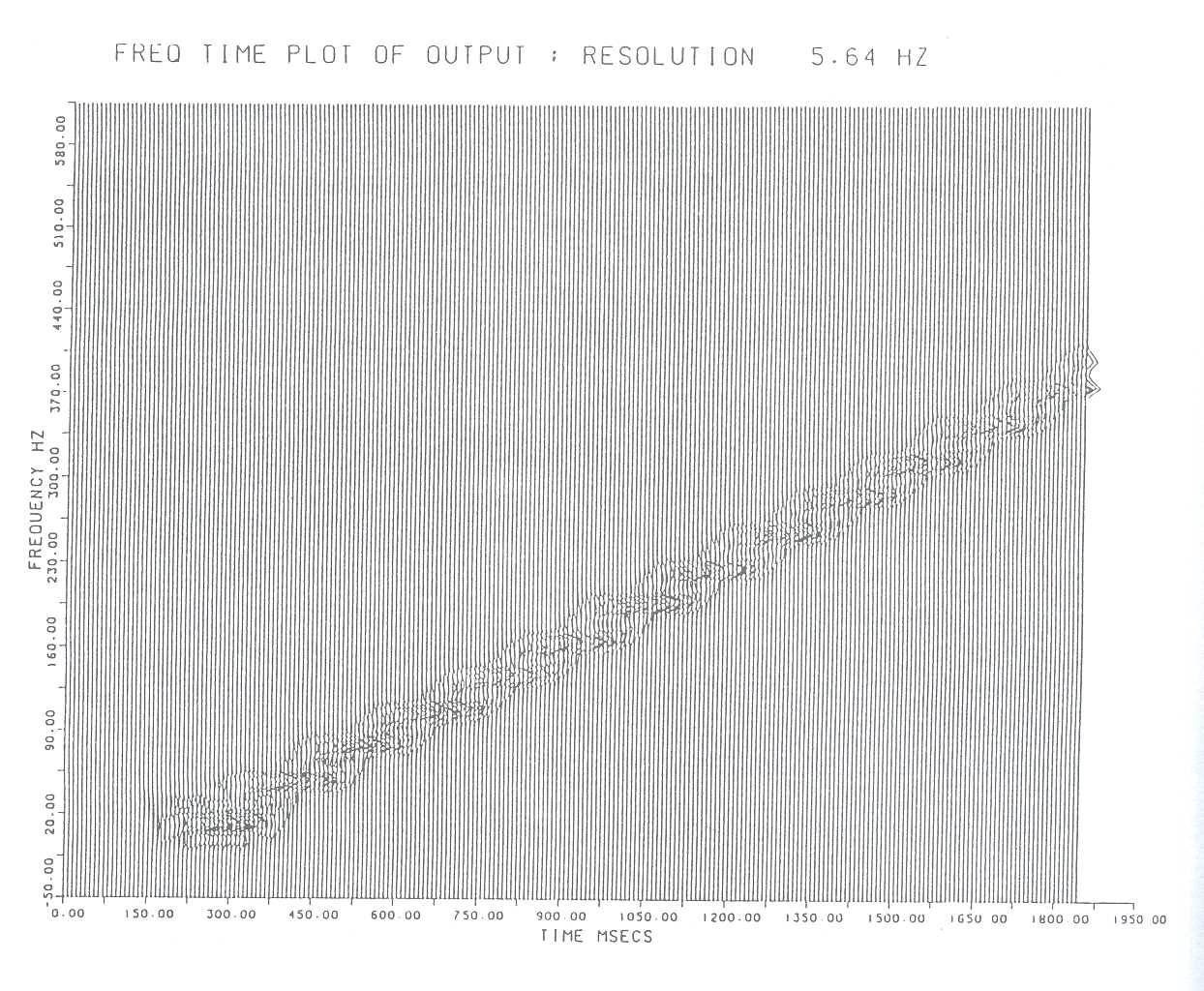

Three model runs are presented below. Run FER3DL simulates the outbreak of convection under westerly to southwesterly air flow, without the influence of surface wind convergence or urban heat island effects. The numerical results are then compared with radar data from a storm that developed on the 19th May 1989 (radar data provided by the Hameldon Hill radar, a conventional C-band weather radar, Plessey type 45C, operating at a wavelength of 5.6 cm). The other two model runs, FER3DK and FER3DJ, simulate convection with mostly southerly winds. Both runs are initiated with the same profiles, but run FER3DJ assumes the presence of urban heat island effects. The numerical results of both runs are compared with the convection that broke out on the 24th May 1989.

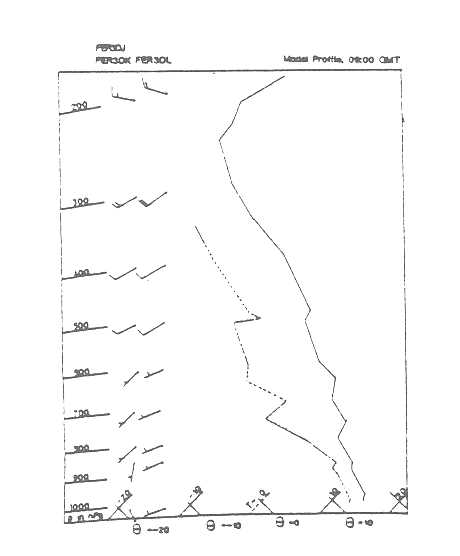

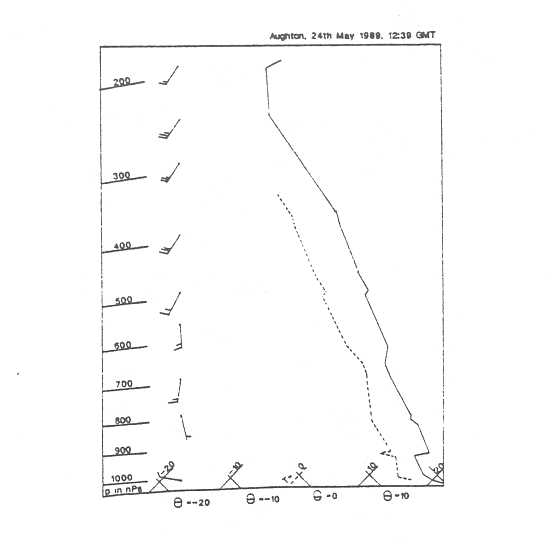

Run FER3DL was set up with μ = 0.3 throughout all domains, so that no surface wind convergence or urban heat island effects were initiated. The temperature and humidity profiles used for the simulation were based on the meteorological conditions on the 19th May 1989, because observational storm data were available for this period (Fig. 4). The wind direction was set to 250° from the surface up to 500 hPa, with northerly veering winds at higher levels. The simulations were started at 09:00 GMT in order to give the model time to build up the airflow.

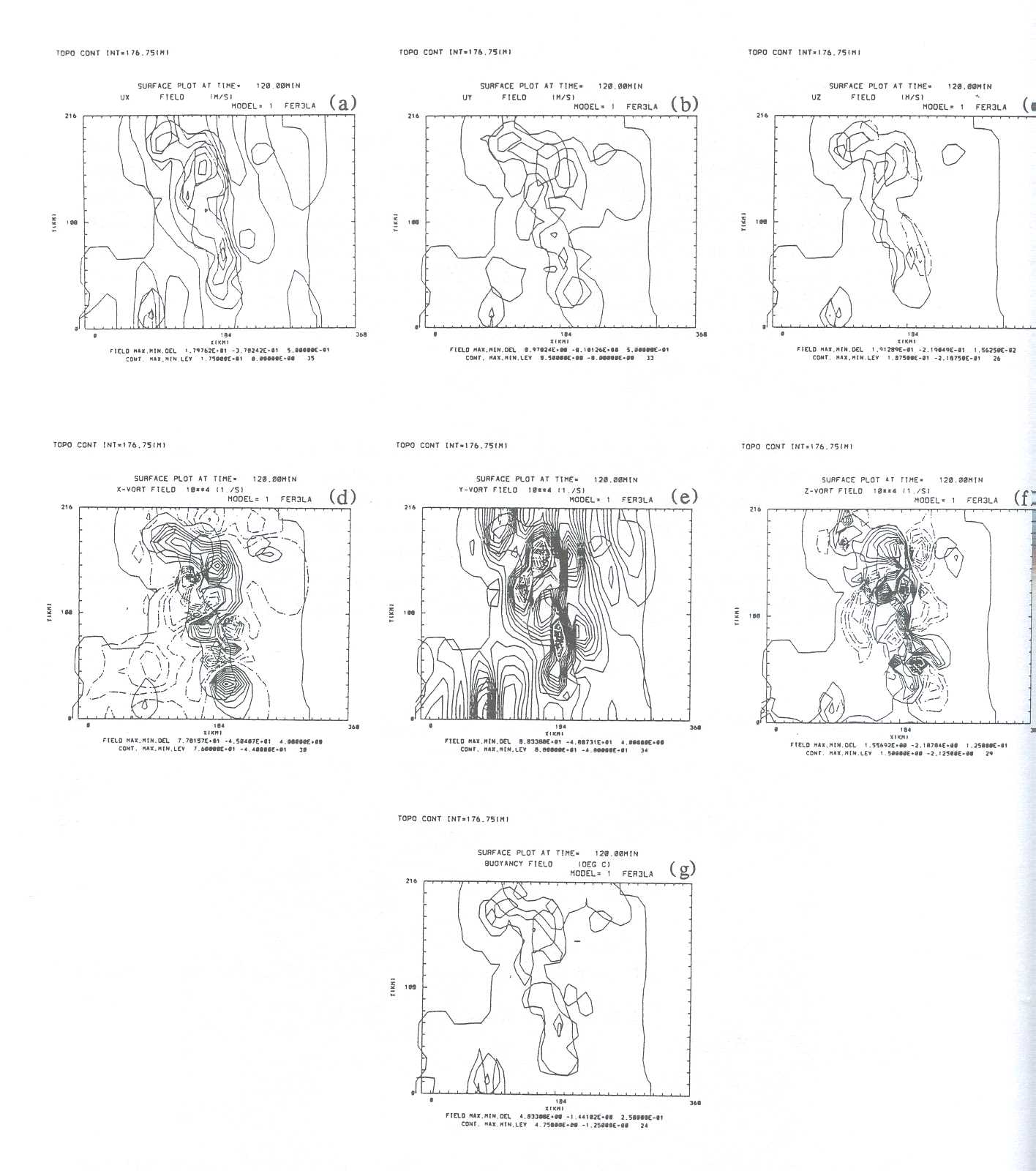

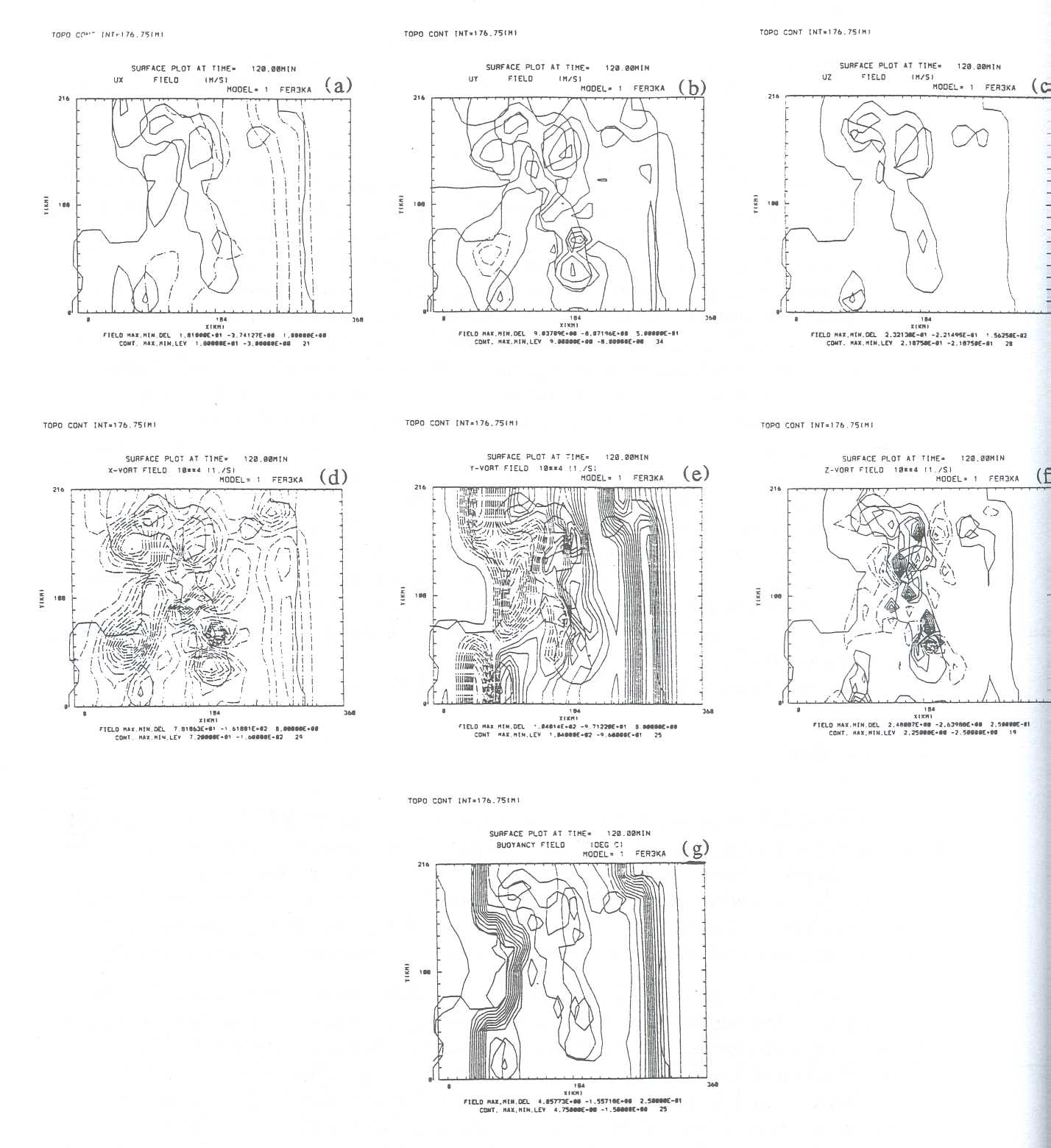

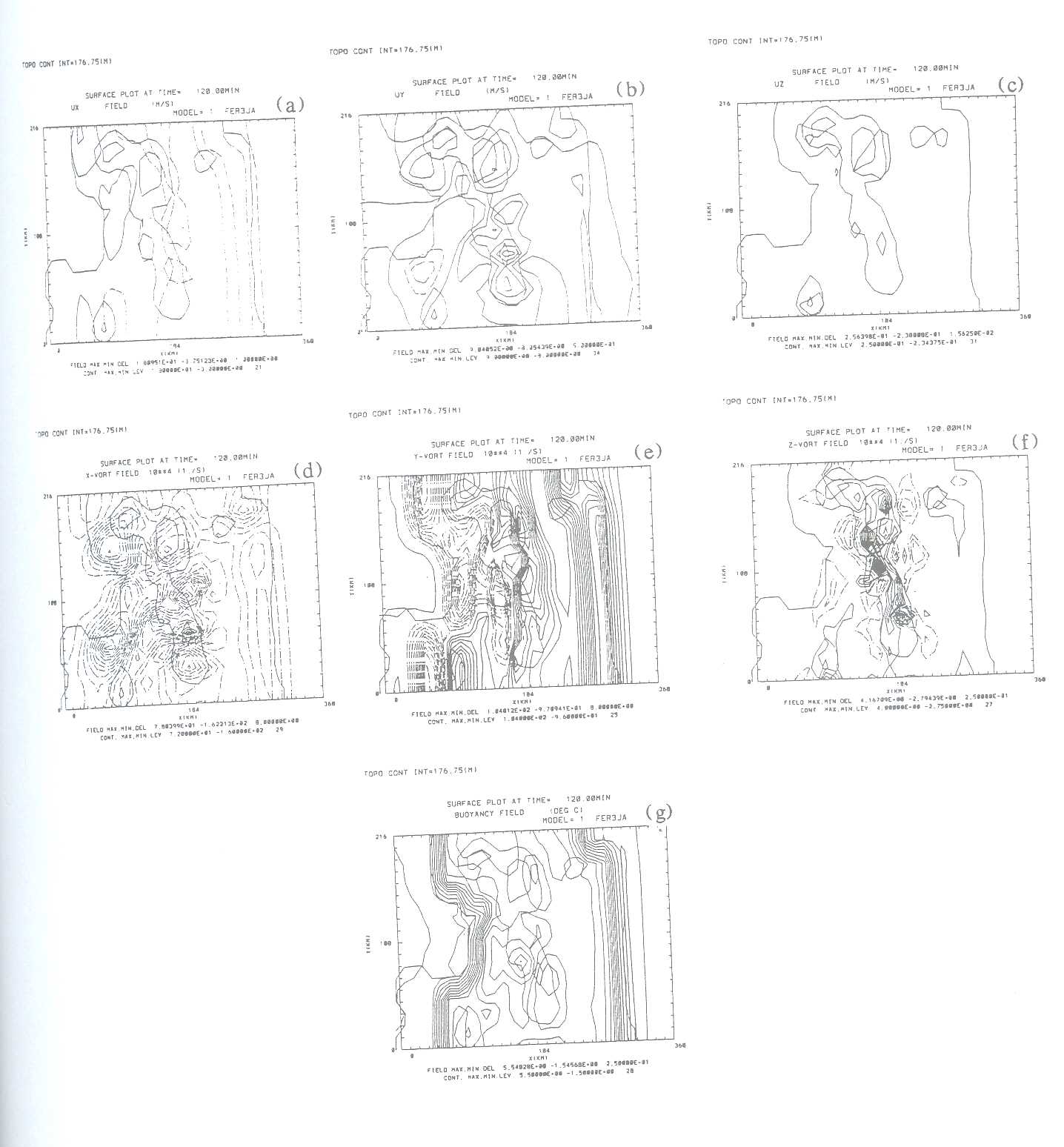

Fig. 5a-g presents the x, y and z components of the wind field (u) and of vorticity (ζ), as well as buoyancy after 120 minutes (=11:00 GMT) of simulation at the surface. Negative values are dashed.

Not surprisingly, all three vorticity fields are strongly influenced by the hilly terrain, and steep gradients developed particularly over the ridge of the Pennines. ζx is positive over the hilltops and negative in the valleys. ζy is positive throughout most of the domain, except for a few places where the inflowing air first encounters a valley before rising at the hill slopes. ζz appears to be positive and strongest at the hilltops, whereas in the lowlands east and west of the Pennines ζz is predominantly negative.

ux is positive, thus westerly, throughout the domain. It is strongest on the windward side of the ridges, where the air is forced to rise, and weaker on the leeward side. Because the flow is mainly westerly, uy is very weak. However, the hills in the north also force the air to accelerate northwards. uz clearly shows the up-draughts on the windward side of the ridges due to forced lifting, and the consequent down-draughts on the east side of the ridges. As expected, buoyancy is slightly stronger on the sun-facing eastern slopes than on the western side of the ridges.

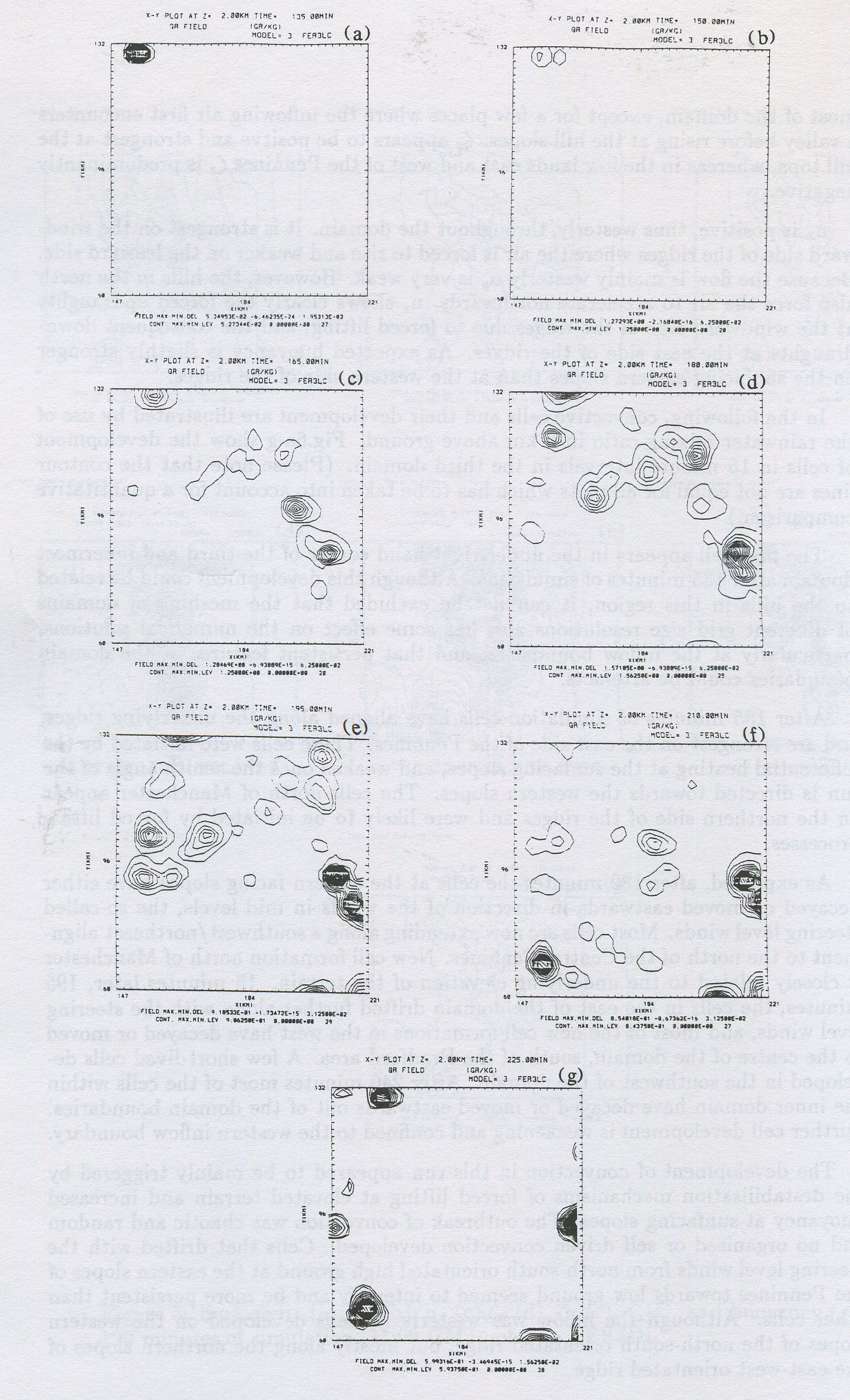

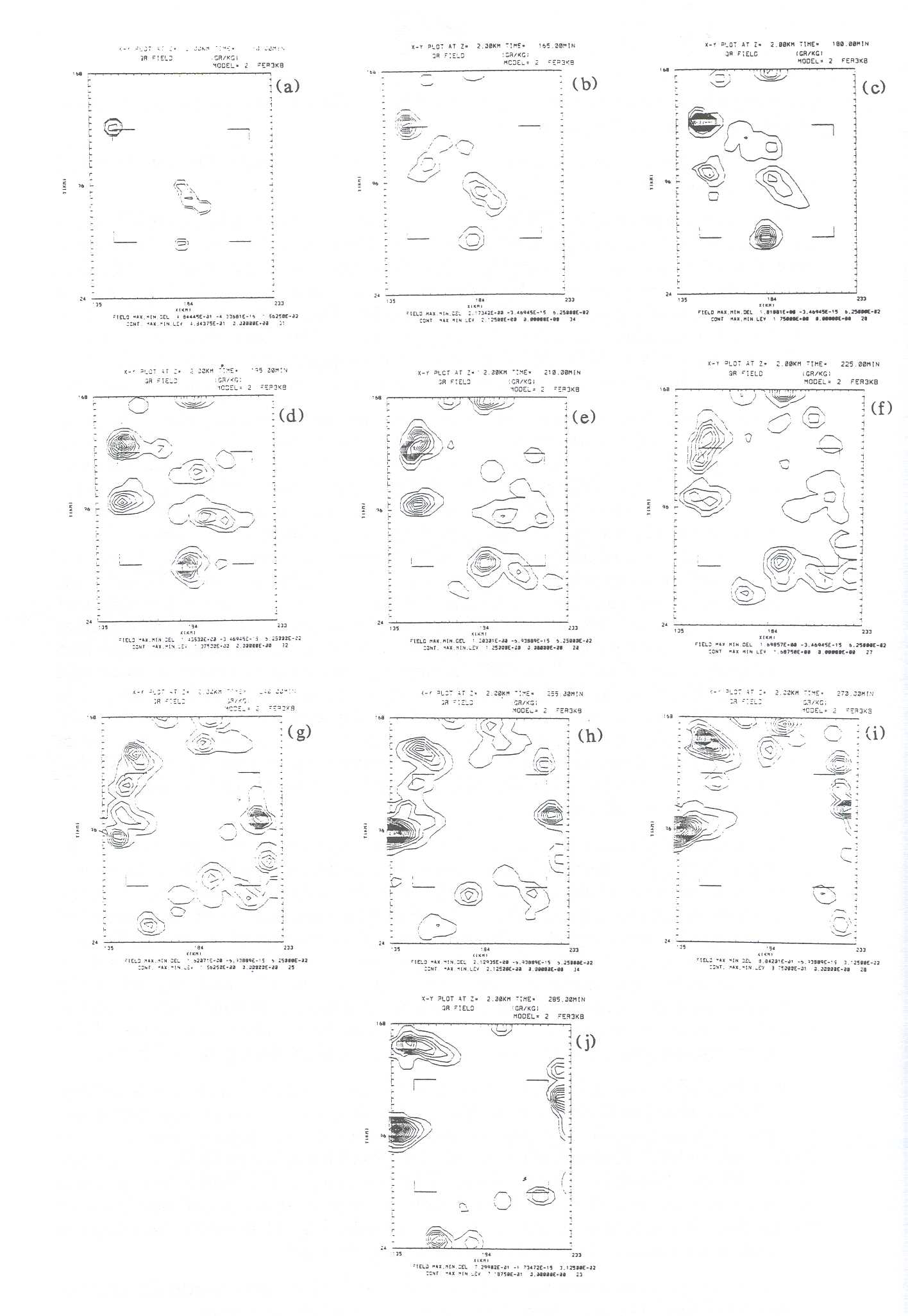

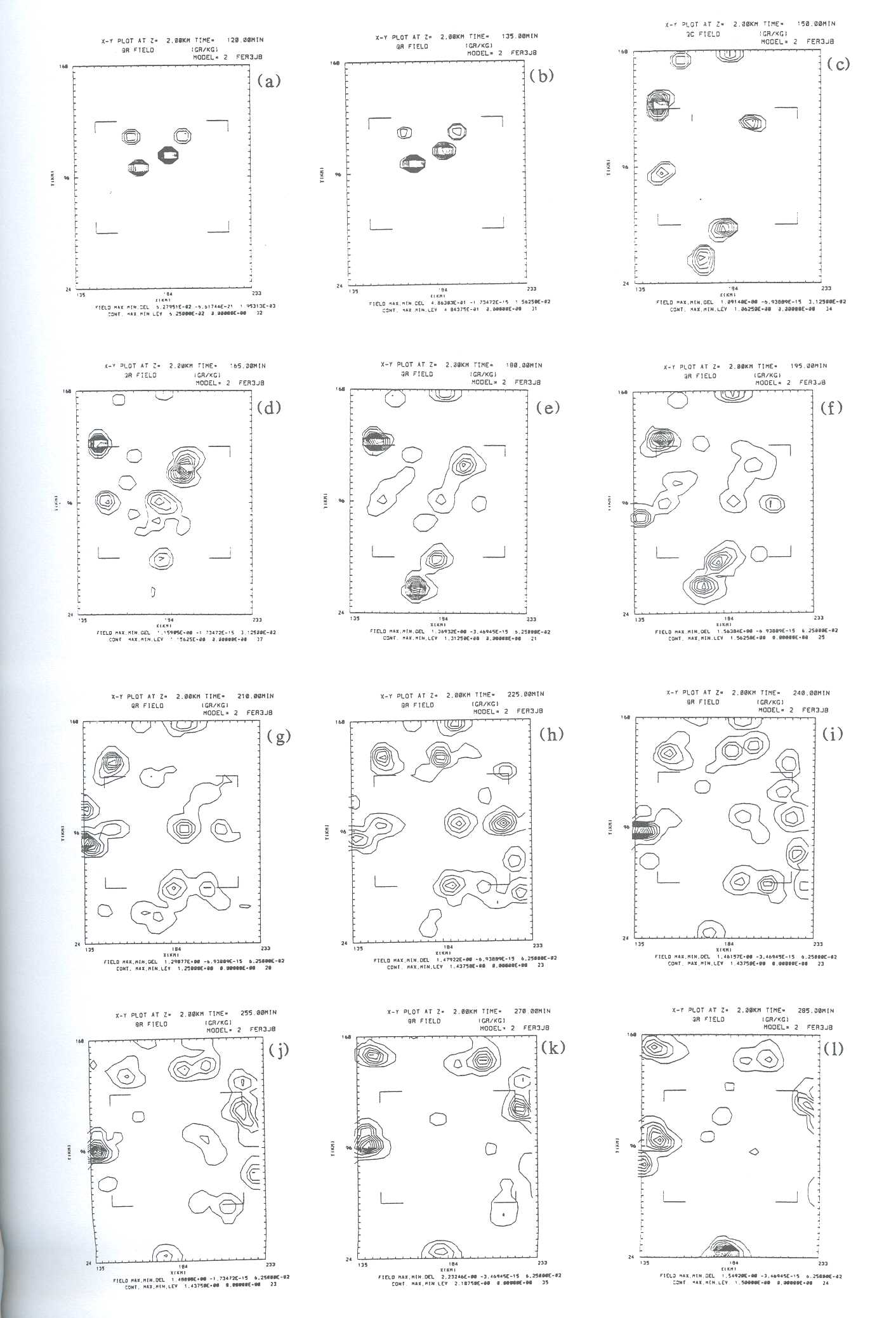

In the following, convective cells and their development are illustrated by the rainwater mixing ratio at 2 km above ground. Fig. 6a-g shows the development of cells at 15-minute intervals in the third domain. (Please note that the contour intervals are not the same for all plots, which has to be taken into account in any quantitative comparison.)

The first cell appears in the upper right-hand corner of the third, innermost domain after 135 minutes of simulation. Although this development could be related to the hills in this region, it cannot be excluded that the meshing of domains with different grid resolutions also has some effect on the numerical solutions, particularly at the inflow boundaries, and that persistent features at the domain boundaries are artefacts.

After 165 minutes of simulation, cells have aligned along the underlying ridges and are strongest on the east side of the Pennines. These cells were initiated by differential heating at the sun-facing slopes, and they weaken once the sun moves round towards the western slopes. The cells north of Manchester appear on the northern side of the ridges and were probably initiated by forced lifting.

As expected, after 180 minutes the cells on the east-facing slopes have either decayed or moved eastwards in the direction of the winds at mid levels, the so-called steering level winds. Most cells now extend along a southwest-northeast alignment to the north of the Central Pennines. New cell formation north of Manchester is closely related to the underlying elevation of the terrain. Fifteen minutes later, at 195 minutes, the cells in the east of the domain have drifted further with the steering level winds, and most of the new cells in the west have decayed or moved to the centre of the domain, south of the Bradford area. A few short-lived cells have developed in the southwest of the domain. After 240 minutes most of the cells within the inner domain have decayed or moved eastwards out of the domain. Further cell development is weakening and is confined to the western inflow boundary.

The development of convection in this run appeared to be triggered mainly by two destabilisation mechanisms: forced lifting at elevated terrain and increased buoyancy at sun-facing slopes. The outbreak of convection was chaotic and random, and no organised or self-driven convection developed. Cells that drifted with the steering level winds from the north-south orientated high ground at the eastern slopes of the Pennines towards low ground seemed to intensify and to be more persistent than other cells. Although the inflow was westerly, no cells developed on the western slopes of the north-south orientated ridge; most developed along the northern slopes of the east-west orientated ridge.

The results of the simulation were compared with a storm that developed on the 19th May 1989 in the Central Pennines, the Halifax storm, which has been addressed in several papers (Acreman 1989, Collinge et al. 1990, Collinge and Acreman 1991, Swann 1993). During the Halifax storm the winds at the steering level were predominantly westerly to southwesterly. The TΦ-gram of the storm and the wind profile from the closest radiosonde station upwind of the storm (Aughton) are given in Fig. 7.

The Halifax storm started with a chaotic and random outbreak of convection along the northern slopes of the east-west orientated ridge of the Central Pennines. After about 2 hours the storm became stationary for about 45 minutes west of Bradford, and then moved quickly southwards along the eastern slopes of the north-south orientated ridge while intensifying. In Fig. 8 the overlay of contour lines of the radar-estimated surface rainfall field at 15-minute intervals is compared with the overlay of the rain water mixing ratio at 2 km height, in order to compare the development of convection. For further insight into the observational data, the cell tracing of the Halifax storm at 5-minute intervals is also shown.

There are similarities between the simulation and the Halifax storm, but also some substantial differences. In both cases the development of convection seemed to be closely linked to the underlying topography. In both cases cells developed on the northern slopes of the east-west orientated ridge and on the eastern slopes of the north-south orientated ridge. No cells developed on the western slopes of the north-south orientated ridge. There was also some independent convection in the corner where the north-south orientated ridge and the east-west orientated ridge meet at right angles.

The cell tracing plot shows that cells during the Halifax storm tended to move with the steering level winds, as in the model. The cell tracing also shows that the storm seemed to split into two parts: in one the cells continued to move northeastwards, while in the other the cells moved southwards along the east side of the north-south orientated ridge. This 'splitting' of convection can also be seen in the model simulations. Further, analysis of the observational data suggested that cells moving from high ground towards low ground were likely to intensify (not shown), which also agrees well with the model results.

However, although the favoured locations of cell development seem to be similar in the model results and the observations, there are substantial differences between the development of the actual and the model storms. In both cases the first cells broke out around midday and developed in a more or less random order along the northern slope of the east-west orientated ridge, but there was no outbreak of convection along the eastern slopes of the north-south orientated ridge during the first stages of the Halifax storm. Only after the storm had become stationary around 15:00 GMT, which is indicated in Fig. 8b by the higher density of contour lines in that area, did it develop into an organised storm with continuous cell formation at its advancing flank. The storm then moved southwards along the ridge, with individual cells passing from its right advancing flank to the left flank, where they decayed. During this stage the individual cells intensified, which suggests that cells under the influence of westerly winds intensify when they are directed from the high ground of the eastern slopes towards the low ground to the east. The model failed to develop both the stationary phase of the storm and the organised convection along the eastern slopes during the afternoon. In fact, during the later stages the model did not simulate any convection at the eastern slopes. During the Halifax storm there were apparently trigger mechanisms for convection other than forced lifting and surface heating, which the model did not reproduce. This is also reflected in the much shorter duration of convection in the model: while the model simulated convection for only about 2 hours, the Halifax storm lasted for 7 hours.

Run FER3DK was set up with the same profiles as FER3DL, except for the wind directions (Fig. 4). It was assumed that the winds were southerly in the lower levels up to 800 hPa, but more westerly at the steering level. Surface wind convergence was imposed, but no urban heat island effects were considered. During this run the outbreak of convection was not confined to the third domain, and therefore the second domain is presented in the following.

The vorticity fields for FER3DK (Fig. 9d-f) differ significantly from those for FER3DL. ζx is negative throughout the domain, with the strongest negative ζx on the windward side just where the air is forced to rise at the ridges. Unlike in run FER3DL, the hills of Wales and the Lake District seem to have a great influence on ζx on the west coast. Both ζx and ζy are very homogeneous at the east coast. ζy is negative west of the Pennines and west of the Welsh mountains, but positive over the ridges and east of the Pennines. However, there is not much difference between the ζz fields of the two runs, with positive ζz at the hilltops and negative ζz in the lowlands.

The surface wind convergence is evident in Fig. 9a, which clearly shows the easterly winds east of the Pennines and the westerly winds west of the Pennines. Gradients of ux are weak just above the Pennines, probably due to the convergence zone. Unlike in FER3DL, where uy was strongest east of the Pennines, in FER3DK it is strongest west of and over the Pennines. The air accelerates northwards over the hilltops. The air seems to flow around the Welsh mountains, causing southerly components to the north of the hills. The up-draughts are greatest at the foot of the hills on the west side of the Pennines, but no down-draughts have yet developed on the leeward side. As expected, the buoyancy fields have steep gradients along the coasts, where the conversion rate μ steps up from 0% to 30%. Again, buoyancy is slightly stronger on the sun-facing eastern slopes.

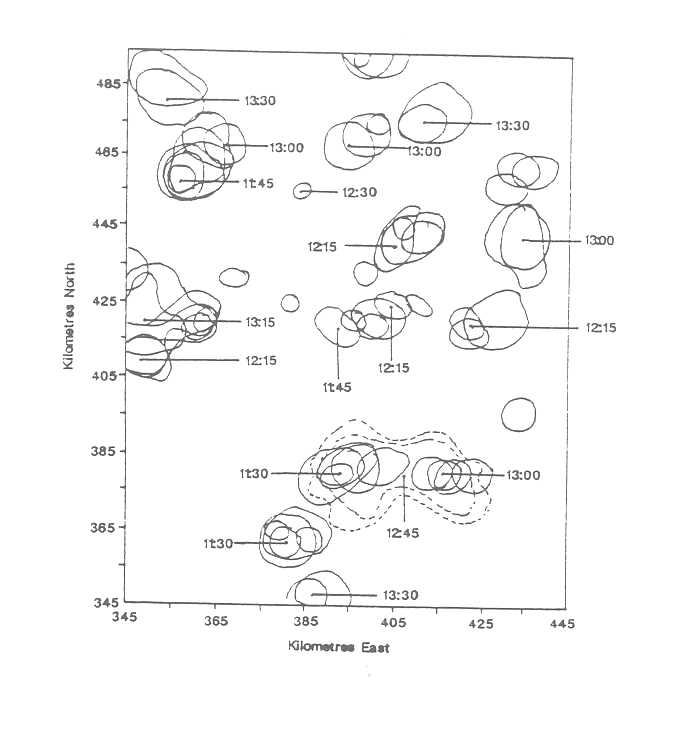

Fig. 10a-j presents the development of the rain water mixing ratio at 2 km height at 15-minute intervals.

Convection started after 135 minutes, with the first cells forming in the south of the innermost domain on the west side of the ridge. The amount of rainwater, however, is very small at this stage. It seems that these first cells are initiated by forced lifting at the ridge. Fifteen minutes later, at 150 minutes, convection continues to develop along the western side of the north-south orientated ridge. As in run FER3DL, convection breaks out in the upper left corner of the innermost domain; although this could again be due to the underlying topography, it seems possible that actual convection is embedded in artefacts due to the meshing of different domains.

After 180 minutes two bands of convection have developed, one along the ridge of the Pennines and one at the western boundary of the inner domain. New cells seem to be forming in the south of the domain. The cell clusters that were at first elongated in the north-south direction, following the shape of the underlying ridge, have now extended in the east-west direction across the Pennines. These cell clusters continue to move northwards over the next 15 minutes, while similar cell behaviour can be observed in the south of the domain. After 240 minutes of simulation the separation of the two cell developments becomes more evident. As in run FER3DL, cells intensified when moving from the high ground of the eastern slopes of the Pennines towards low ground. While the cells that were confined to the Central Pennines weakened and moved northwards or eastwards, the convection in the west develops into an elongated band of cells. After 255 minutes of simulation the convection around the Central Pennines has continued to decay, while some new cells have developed in the south of the two inner domains. The cells in the west have now intensified and moved northwards, with a slight component to the west, thus veering to the left of the steering level winds. Comparison with the underlying topography suggests that the cells in the northwest of the domain move into the valley, which could cause the sudden deviation to the left.

The main destabilisation process during this run seemed to be forced lifting at hill slopes in the predominant inflow region. Unlike in run FER3DL, there was no outbreak of convection in the morning along the sun-facing eastern slopes of the north-south orientated ridges. This is surprising, because one might have expected the easterly surface winds in this region to reinforce the destabilisation process. Convection moved northwards along the underlying ridges, thus with a component to the left of the steering level winds at 700 hPa, and only when the cells were not constrained by underlying elevated terrain did individual cells move in accordance with the winds at 700 hPa. Convection during the second stage of the storm simulation seemed to be less random and chaotic than during run FER3DL.

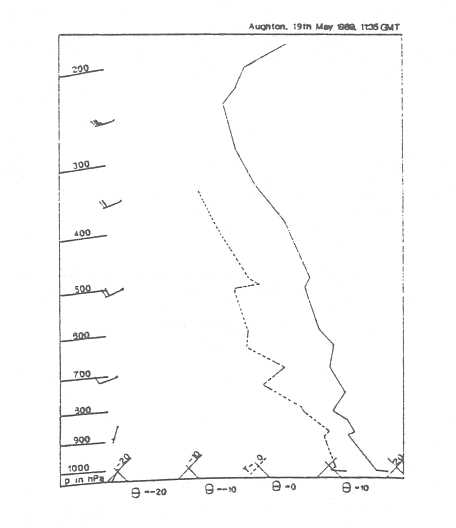

Run FER3DK was compared with the outbreak of convection on the 24th May 1989, 5 days after the Halifax storm. The outbreak of convection on that day was widespread all over Britain, and two storms developed in the area, the Toddbrook storm and the Ribble Valley storm. The Toddbrook storm broke out around 12:00 GMT and lasted for about 2 hours. The beginning of the Ribble Valley storm overlapped in time with the end of the Toddbrook storm. It developed northwest of the Toddbrook storm and moved quickly northwards. Fig. 11 presents the TΦ-gram and the upper air winds for Aughton on the 24th May 1989.

The winds in the lower levels up to the steering level were southerly, and the surface wind field was convergent towards each side of the Pennines.

In Fig. 12 the overlay of the rain water mixing ratios at 15-minute intervals is again compared with the radar-estimated rainfall fields at 15-minute intervals and with the individual cell tracing at 5-minute intervals.

The simulations agree well with the observational data, not only in terms of the location of the outbreak of convection, but also in terms of its progression. In both cases the convection first started in the south of the domain, with cells aligning along the west side of the north-south orientated ridges of the Pennines. In both the observational and the numerical data, cells that were originally aligned in the north-south direction started to extend in the east-west direction and to move northwards.

Although the model did not simulate the cell development just north of Manchester, it successfully simulated the outbreak of two spatially separated storms that overlapped in time. The model simulated too much convection east of the Pennines, but most of the cell development along the western slopes agrees well with the Toddbrook storm. In both cases there was a higher density of cells and clusters along the western slope of the north-south orientated ridge, and only a few large clusters crossed the ridges and extended in an east-west orientation, moving slowly northwards. The model simulated with fair accuracy the progress of the second storm, the Ribble Valley storm, particularly in the northwest of the domain.

Although the model simulated the outbreak of convection about 1.5 hours too early, the further development of convection compares reasonably well with the time scale of the observational data. For example, the organised storm behaviour in the northwest of the domain lasted about one hour in both the simulation and the observations.

In order to investigate the influence of possible urban heat island effects on the development of convective storms in the Pennines, the same run as FER3DK was performed, with the only difference that the conversion rate from incoming solar radiation to sensible heat flux over land was not a constant 30% but varied according to Fig. 3. After 120 minutes of simulation there are hardly any differences in the vorticity or wind fields (Fig. 13a-f). The easterly component of the wind at the eastern slopes of the Pennines is slightly higher than without the urban heat island effects, due to the increased buoyancy over the Manchester and Bradford areas (Fig. 13g).

Fig. 14a-l shows the development of cells at 15-minute intervals in the second domain. The urban heat island effects cause convection to develop about 30 minutes earlier than without them. The first cells appear along the northern slopes of the Central Pennines. After 165 minutes of simulation the results are similar to those of run FER3DK, except for strong cell convection in the area of Bradford and slightly stronger convection south of the Manchester area. Overall, convection seemed to align more in a southwest-northeast direction than in the FER3DK run. The development of convection east of the Pennines is stronger and more widespread than without urban heat islands. Comparison with the observational data shows that the inclusion of the urban heat island effect seems to worsen the results of the simulation during the morning, whereas it seems to improve them during the afternoon (Fig. 15). This is consistent with Oke (1978), who found that urban heat island effects only develop during the afternoon and are greatest during evenings and nights.

The model simulations and the investigation of the observational data suggest that the outbreak of convection is very closely related to the winds in the lower levels up to the steering level, but that the actual surface winds do not play an important role.

Westerly winds seem to favour the outbreak of convection along the northern slopes of the east-west orientated ridges, thus along an imaginary line from Blackburn to Harrogate. Under the influence of westerly winds in the lower levels, the outbreak of convection along the eastern slopes of the north-south orientated ridges is likely before noon, when the slopes are exposed to direct sunlight. Later in the day, however, an outbreak of convection along the eastern slopes is not likely unless the convection is organised by some self-driven mechanism. Most of the convection then seems to develop along the western slopes.

Southerly winds up to the steering level seem to favour the initiation of convection west of the Pennines. The outbreak and movement of cells seem to be very closely related to the underlying ridges. All cells tended to move northwards, and the valley north of Blackburn and Burnley in particular seems to be favourable for the outbreak of strong convection under the influence of southerly winds.

As could be expected from theory, urban heat island effects only seem to improve results during afternoon simulations. Under the influence of southerly winds, urban heat island effects seemed to favour the outbreak of convection in the area of Bradford and south of Manchester. It can therefore be assumed that storms that break out during the afternoon under the influence of southerly winds favour the development of fairly stationary convection in the Bradford area.

The results presented above have shown that the model used for this study is a powerful tool for investigating the influence of the Pennines on the development of storms in the area. The model reproduced the convective development on the 24th May 1989 in great detail in terms of the location of cell outbreaks, cell movement and cell development. Although the model failed to simulate the temporal progress of the Halifax storm on the 19th May, it succeeded in calculating the favoured locations of cell outbreaks.

Acreman M. (1989) Extreme rainfall at Calderdale, 19 May 1989; Weather, Vol. 44, pp 438-446

Clark T.L. (1977) A small-scale dynamic model using a terrain-following coordinate transformation; J.Comp.Phys., 24, pp 186-215

Clark T.L. (1979) Numerical simulations with a three-dimensional cloud model: lateral boundary condition experiments and multi-cellular severe storm calculations; J.Atmos.Sci., 34, pp 2191-2215

Clark T.L. and Hall W.D. (1991) Multi-Domain Simulations of the Time Dependent Navier-Stokes Equations: Benchmark Error Analysis of Some Nesting Procedures. J. Comp. Phys., 92, No. 2, pp 456-481.

Clark T. and Farley D. (1984) Severe Downslope Windstorm Calculations in two and three spatial dimensions using anelastic interactive grid nesting: a possible mechanism for gustiness; J.Atmos.Sci., 41, pp 329-350

Collinge V.K. and Acreman M. (1991) The Calderdale storm revisited: an assessment of the evidence; BHS 3rd National Hydrology Symposium, Southampton

Collinge V.K., Archibald E.J., Brown K.R. and Lord M.E. (1990) Radar observations of the Halifax storm, 19 May 1989; Weather, Vol. 45, No. 10

Koenig and Murray (1976) Ice-bearing cumulus cloud evolution: numerical simulation and general comparison against observation; J.Appl.Met., 15, pp 747-762

Ogura Y. and Phillips A. (1962) Scale analysis of deep and shallow convection in the atmosphere; J.Atm.Sci., 19, pp 173-179.

Oke T.R. (1978) Boundary Layer Climates; Methuen & Co. Ltd, London

Reynolds G. (1978) Maximum precipitation in Great Britain; Weather, May 1978, Vol. 33, No. 5, pp. 162-166

Smolarkiewicz P.K. and Clark T.L. (1985) Numerical simulation of the evolution of a three-dimensional field of cumulus clouds, Part I: Model description, comparison with observations and sensitivity studies; Journ. Atm. Sci., Vol. 42 (5), pp. 502-522

Swann H. (1993) Modeling convective systems on the large eddy simulation model; Internal Report, Joint Centre for Mesoscale Meteorology, Newsletter No. 4, Feb 1993

I would like to acknowledge the support of Dr. A. Gadian and R. Lord, who have helped me to understand the model and to overcome the numerous problems associated with the code. I would like to thank Dr. T. L. Clark and Dr. W. Hall for information, advice and help with the latest version of the code, and also Dr. J. F. R. McIlveen, who has spent valuable time discussing both observational and model results with me. Financial support for this study was given by the SERC (GR9/814) and the EC (CEC 900898). I would also like to thank the user support group of the RAL for helping to solve problems related to the use of the CRAY.

The Atlantic Ocean is important because of its influence on the world's climate. Warm near-surface waters flow northwards and release their heat to the atmosphere in the wintertime at high northern latitudes. This results in sinking and the production of deep cold return flows to the south. The whole process has been dubbed the "Atlantic Conveyor Belt" and is responsible for a large heat exchange to the atmosphere. It is therefore important to understand the processes which contribute to the heat transport and circulation in the Atlantic.

Isopycnic-coordinate models, consisting of a set of layers of constant density but varying thicknesses, are now emerging as a useful tool for the investigation of these ocean processes. These models are in contrast to the more usual gridpoint models which have a set of levels at fixed positions in the vertical at which the model variables are known, and which have been in use for many years. Isopycnic models may possess certain advantages over the gridpoint models, but so far most ocean modelling studies and climate prediction models have been based around the gridpoint models.
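The difference between the two vertical representations can be illustrated with a minimal sketch. It is not taken from the Miami code or from any gridpoint model: all names and numbers below are made up for illustration, and a real model carries many more variables per level or layer.

```python
from itertools import accumulate

# Gridpoint (level) model: the depths are fixed, the density at each depth evolves.
# All values here are invented for illustration only.
level_depths_m = [50.0, 200.0, 1000.0, 3000.0]       # fixed in time
level_density = [1025.5, 1026.8, 1027.5, 1027.8]     # model unknown (kg/m^3)

# Isopycnic model: the layer densities are fixed, the layer thicknesses evolve.
layer_density = [1025.5, 1026.8, 1027.5, 1027.8]     # fixed in time (kg/m^3)
layer_thickness_m = [100.0, 400.0, 1500.0, 2000.0]   # model unknown

def interface_depths(thicknesses):
    """Depth of the base of each layer, as the running sum of layer thicknesses."""
    return list(accumulate(thicknesses))

print(interface_depths(layer_thickness_m))
```

In the isopycnic case the depth of a given water mass is a diagnosed quantity (the running sum of thicknesses), whereas in the level case the depths are prescribed and the density at each depth is what the model predicts.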

It is therefore important to intercompare these two model types to assess their relative merits. With this in mind, the James Rennell Centre for Ocean Circulation has implemented the Miami isopycnic model to describe the Atlantic Ocean from about 15°S to 80°N, and a 30-year integration has been carried out at a relatively low resolution of, nominally, 1° horizontally (latitude-longitude). As a collaborative exercise, the Hadley Centre for Climate Prediction and Research (part of the UK Met. Office) has carried out a parallel integration with a gridpoint model, and significant differences are now emerging. In particular, the overall heat carried northwards differs by more than 50% between the two models, and there are significant differences in the outflows of deep water masses across the Greenland-Iceland-Faeroes rise, and in the path of the North Atlantic Current. These discrepancies are likely to cause large differences in climate models which may be integrated for long periods. It is still too early to say precisely what causes the differences, although further study is in progress. The low resolution isopycnic model is also giving significant insights into the interdecadal variability of the subtropical gyre, has been compared with observations to good effect, and is showing the significance of an accurate representation of the Gulf Stream.

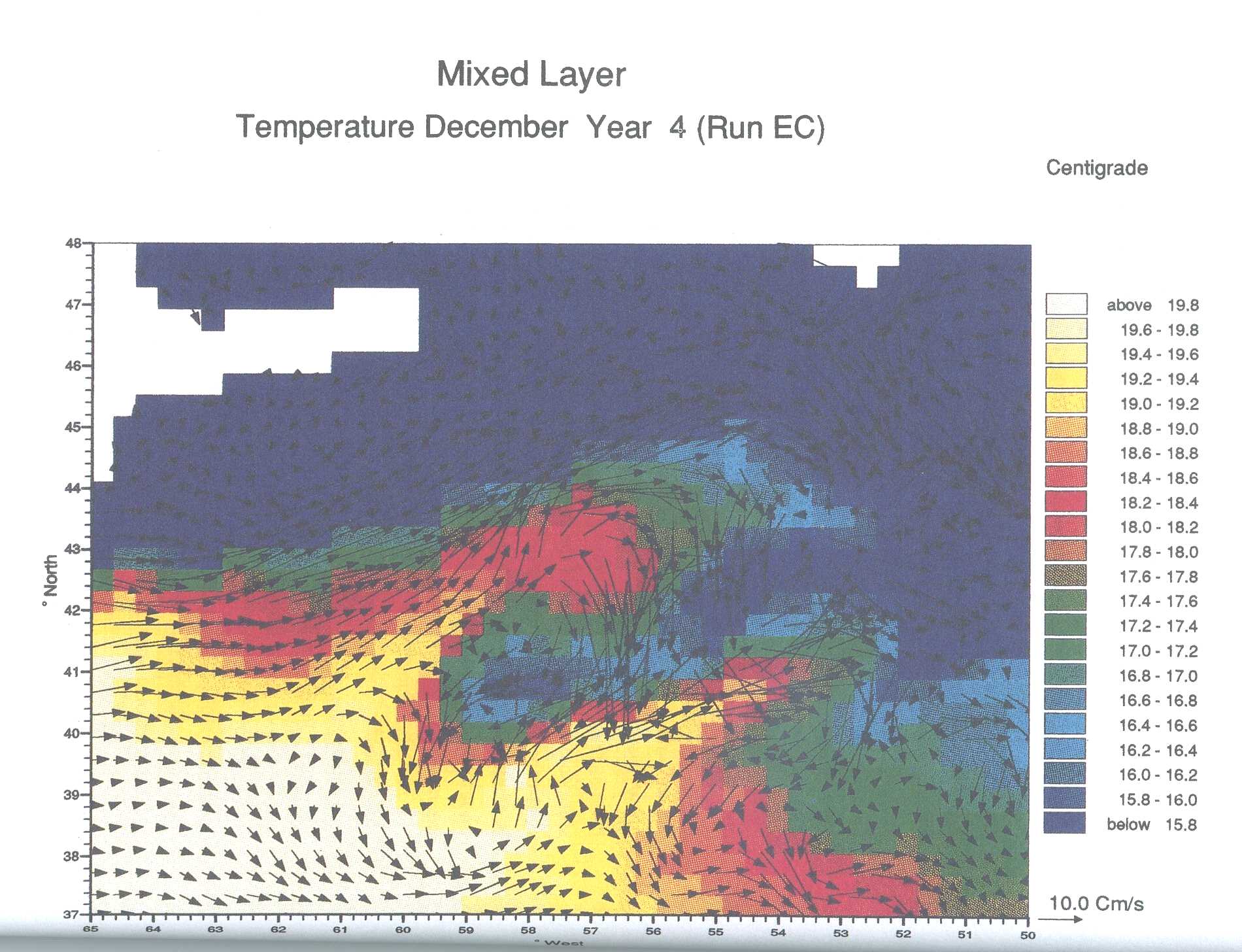

The model is now also being run at a higher horizontal resolution, about 1/3°, which is sufficient to allow eddies to form. Eddies are the weather systems of the ocean, and usually occur on scales of 20-200km or thereabouts. As such, they are too small to be adequately described by typical climate models, which for reasons of computer size and speed, usually have a resolution of 1° or 2° horizontally. Nevertheless, it may be that these eddies contribute significantly to the basin-averaged northward heat transport and also to the interactions and transfers between the ocean surface and its interior. It is therefore necessary to perform model integrations containing eddies so that these effects can be studied and, if found to be important, so that parameterisations can be developed for inclusion in the climate models. Several initial tests with the Miami code have now been undertaken on the RAL CRAY YMP. Work has so far concentrated on varying the available parameters to obtain a realistic eddy field. Although further tests are still required, it has already been possible to obtain realistic eddies in certain cases. As in the real world, a cyclonic (anti-clockwise) cold-core eddy has been observed south of the mean position of the core of the Gulf Stream, with a corresponding anticyclonic warm-core feature to the north. This is shown in the figure, which reveals the sea-surface temperature and current structure in one of the eddy-resolving runs just south of Nova Scotia (the cold-core eddy occurs at 58°W, 41°N, the warm eddy at 57°W, 43°N). The net effect of these eddies must be to increase the northward heat transport, at least locally across the Gulf Stream, since cold water from north of the current is being drawn southwards, to form the cold-core feature, and conversely for the warm feature.

Collaboration is now beginning with similar modelling groups in Germany and France to intercompare three different model types at eddy resolution, in order to assess their relative merits.
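To see why a nominal 1° grid cannot represent eddies of 20-200 km while a 1/3° grid begins to, it helps to convert the angular resolution into a distance. The following is a rough illustrative calculation, not part of the Miami code: it assumes a spherical Earth of radius 6371 km and a regular latitude-longitude grid, and the function name is hypothetical. It is evaluated at 41°N, the latitude quoted above for the cold-core eddy.

```python
import math

EARTH_RADIUS_KM = 6371.0  # assumed mean radius of a spherical Earth

def grid_spacing_km(resolution_deg, latitude_deg):
    """Return (east-west, north-south) spacing in km on a regular lat-lon grid."""
    north_south = math.radians(resolution_deg) * EARTH_RADIUS_KM
    # Meridians converge towards the poles, so east-west spacing shrinks with cos(latitude).
    east_west = north_south * math.cos(math.radians(latitude_deg))
    return east_west, north_south

for resolution in (1.0, 1.0 / 3.0):
    dx, dy = grid_spacing_km(resolution, 41.0)
    print(f"{resolution:.2f} deg: east-west {dx:.0f} km, north-south {dy:.0f} km")
```

Under these assumptions the 1° grid has a spacing of roughly 84 km by 111 km at 41°N, comparable to or larger than many eddies, whereas the 1/3° grid has a spacing of roughly 28 km by 37 km, so the larger eddies span several grid points.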

New, A. L. 1991a. Atlantic Isopycnic Model. p 5 in Sigma, The UK WOCE newsletter, number 4, May 1991.

New, A. L. 1991b. Atlantic Isopycnic Model (AIM) - current status. p 2 in Sigma, the UK WOCE newsletter, number 5, September 1991.

Barnard, S., Y. Jia and A. L. New, 1992. A study of Labrador Sea Water in the Atlantic Isopycnic Model. Proc. Challenger Soc. conference "UK Oceanography 1992", Liverpool 21-25 September 1992. (Abstract only.)

Marsh, R. and A. L. New, 1992. The Atlantic Isopycnic Model, AIM: progress and plans. Sigma, the UK WOCE newsletter, no. 7, 4-6.

New, A. L. 1992. The Atlantic Isopycnic Model: AIM. Proc. Environmental Sciences Seminar "The power in your predictions", Utrecht, Holland, 22 October, 1992, organised by CRAY Research B.V. Rijswijk, Holland. 14pp.

New, A. L., R. Bleck, Y. Jia, R. Marsh, S. Barnard and M. Huddleston, 1992. A simulation of the Atlantic Ocean with an isopycnic-coordinate circulation model. Proc. Challenger Soc. conference "UK Oceanography 1992", Liverpool 21-25 September 1992. (Abstract only.)

New, A. L., Y. Jia, R. Marsh, S. Barnard, M. Huddleston and R. Bleck, 1992. An isopycnic model of the North Atlantic Ocean. Annales Geophysicae, supplement II to volume 10, p 179 (abstract only). 248pp.

Nurser, G. and R. Marsh, 1992. Subduction and buoyancy forcing in the Atlantic Isopycnic Model. Sigma, the UK WOCE newsletter, no. 8, 10-11

Barnard, S., Y. Jia and A. L. New, 1993. Labrador Sea Water in the Atlantic Isopycnic Model (AIM). Sigma, the UK WOCE newsletter, no. 9, 8-9.

When the purchase of a Cray X-MP was announced by ABRC in 1986, the NERC Ocean modelling community put forward a proposal to make a significant advance in modelling the ocean. The resulting project, FRAM, produced the first high resolution model of the Southern Ocean. The Southern Ocean was chosen as an important, yet relatively unstudied region, whose physics is very different from that of other ocean basins, primarily because of the lack of eastern and western boundaries. The Antarctic Circumpolar Current (ACC), which dominates the Southern Ocean, provides an important connecting link transporting and mixing water masses between the other major oceans of the world. It also acts as a barrier to the transport of heat by the ocean between the tropics and Antarctica.

The main FRAM run started on the Cray X-MP at the Atlas Centre, Rutherford Appleton Laboratory, in the spring of 1989 and ended in April 1991. A total of 16 model years was completed. Following this, two-and-a-half model years were completed of a second run, which included a sea-ice model. This model was transferred to the CRAY Y-MP.

The model results were very realistic. They showed that the ACC had a large scale braided structure with localised, very strong barotropic flows associated with sharp topographic features. Some unexpected features, such as strong, narrow jets flowing through the fracture zones of the South Pacific, were also evident. These have since been confirmed by observation.

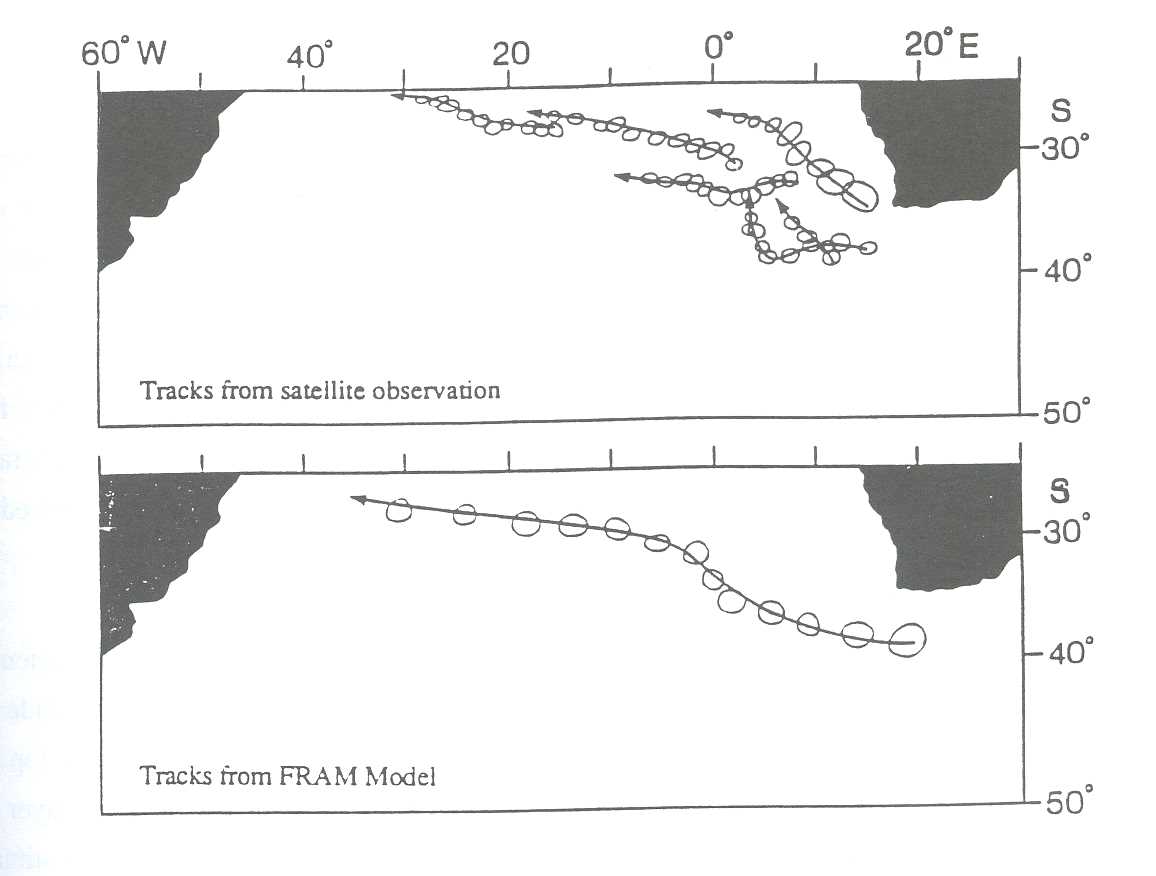

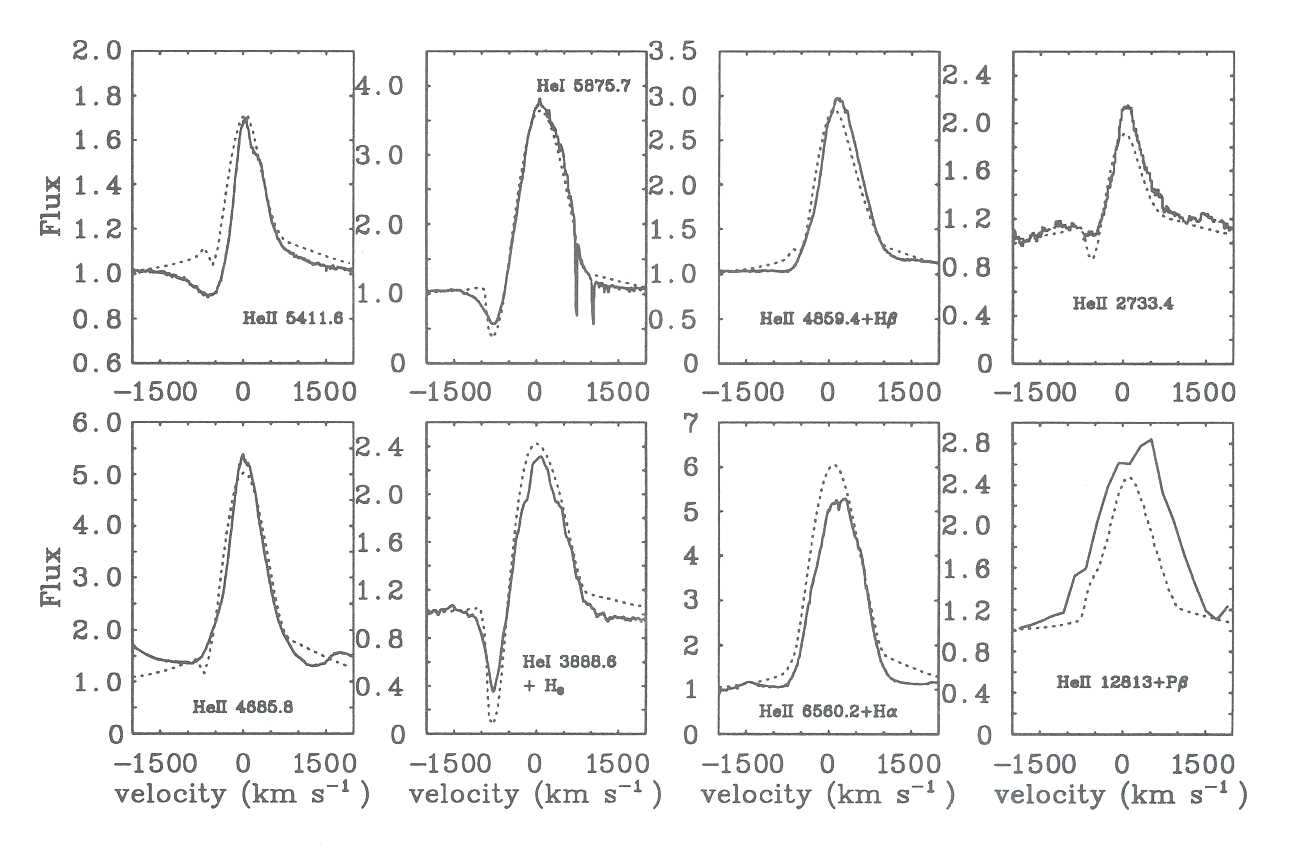

Although the Levitus historical data used to initialise the model do not show sharp fronts, the model dynamics proved very effective at sharpening up the temperature gradients to produce fronts. In Drake Passage, for example, the model shows the ACC to consist of three fronts, in agreement with hydrographic and current measurements in the region. South of Africa the model produced a strong Agulhas Current and a realistic re-circulation zone. Eddies are generated in this region and are shown by the model to drift slowly north-westward into the South Atlantic (Figure 1). Comparisons between model results and satellite data in this region confirm that the strength of the currents is realistic, but that the model re-circulation zone is slightly further east than it should be. This appears to be related to the smoothing applied to the model topography.

The results from the FRAM model were published in the form of an atlas which illustrated the model state at the end of the six year spin-up period. Over 400 copies have been distributed worldwide. As a result, FRAM data have been supplied to researchers in Europe, North America, South Africa and Australia. A video was produced in collaboration with the Atlas Centre of time series of the model fields in key areas. This has been used as a research tool and at international seminars.

FRAM was a NERC Community Research Project and much important analysis of the model has been undertaken at IOSDL and the Universities of East Anglia, Southampton, London (Imperial College and University College), Oxford, Cambridge and Exeter. This analysis is still continuing. Three areas will be highlighted here.

Dr D Stevens (UEA) and Dr V Ivchenko (Southampton University) studied the momentum balance of the ACC. They found that at the latitudes of Drake Passage, the surface wind stress is balanced by the northward Ekman transport and that this is lost to the Deacon Cell (see (iii) below) return flow region below 2000 m. They also looked at the zonal momentum balance, where, in addition to the balances above, they found that in the top 2000 m, the Coriolis term is balanced by the eddy Reynolds stress. Standing eddies (departures from zonal flow of the mean current) make the largest contribution to this balance. Integrating around Antarctica, the standing eddies act as a drag on the ACC but the transient eddies cause a small acceleration of the flow.
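The link between surface wind stress and northward Ekman transport can be stated compactly using the standard textbook steady Ekman balance. The notation here is ours and is not taken from the FRAM analysis: $\tau^{x}$ is the eastward surface wind stress, $\rho_{0}$ a reference density, $f$ the Coriolis parameter and $V_{E}$ the depth-integrated northward transport per unit width in the surface Ekman layer.

$$
-\rho_{0}\, f\, V_{E} = \tau^{x}
\qquad\Longrightarrow\qquad
V_{E} = -\frac{\tau^{x}}{\rho_{0}\, f}
$$

In the Southern Hemisphere $f < 0$, so an eastward wind stress ($\tau^{x} > 0$) gives $V_{E} > 0$, that is, a northward Ekman transport, consistent with the balance described above.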

Dr P Killworth and Dr M Nanneh of the Hooke Institute, Oxford, studied the momentum balance in the Southern Ocean working on density surfaces and averaging in longitude and time. They found the momentum balance in each layer to be primarily between top and bottom form stress. In some of the deep layers the mean north-south flow in the layer is a result of a long term thermohaline response of the model to the initial conditions. Estimates indicate that this would take about 200 years to empty or fill a layer, which is comparable to the flushing time of the ACC area. These results are important in that they show that the 16 year run of the FRAM model is not in dynamical equilibrium. This will be true of most other models in which long term changes in the thermohaline circulation induce Coriolis forces, which in turn require bottom pressure torques to balance them.

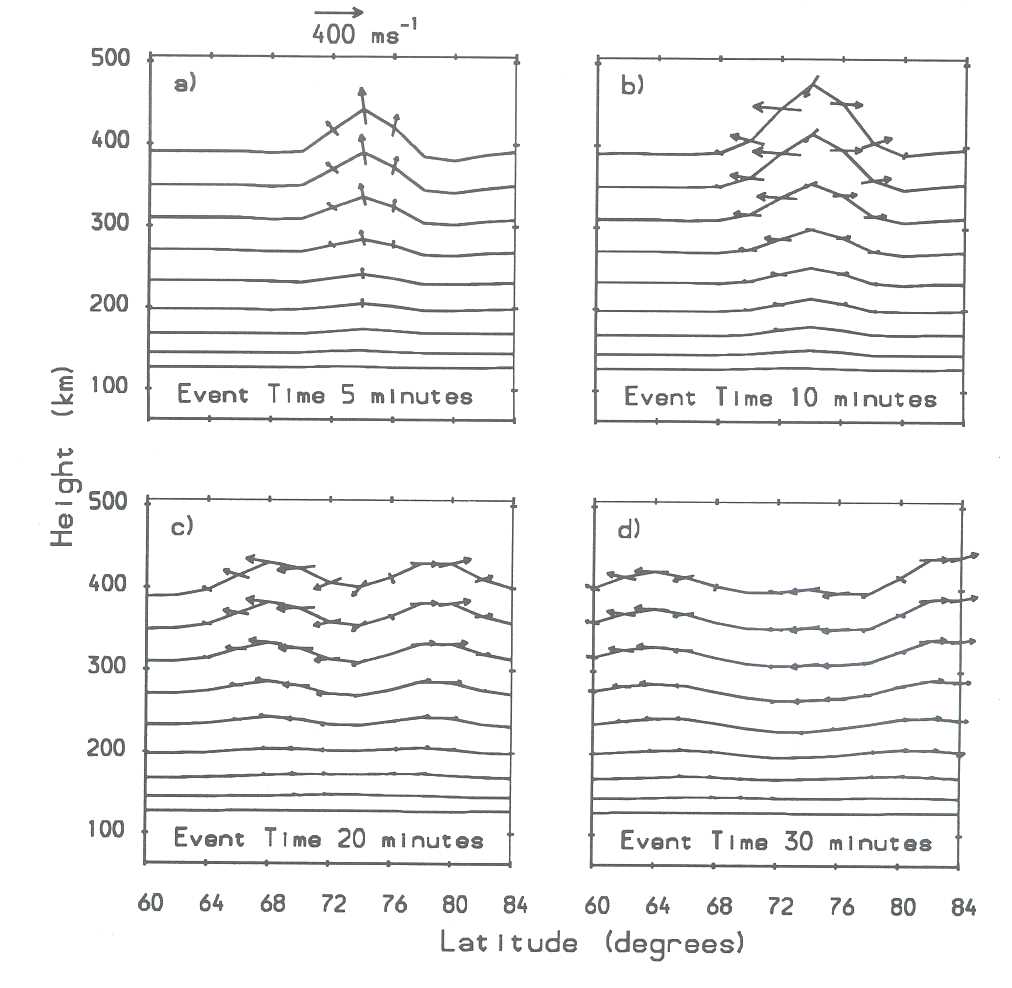

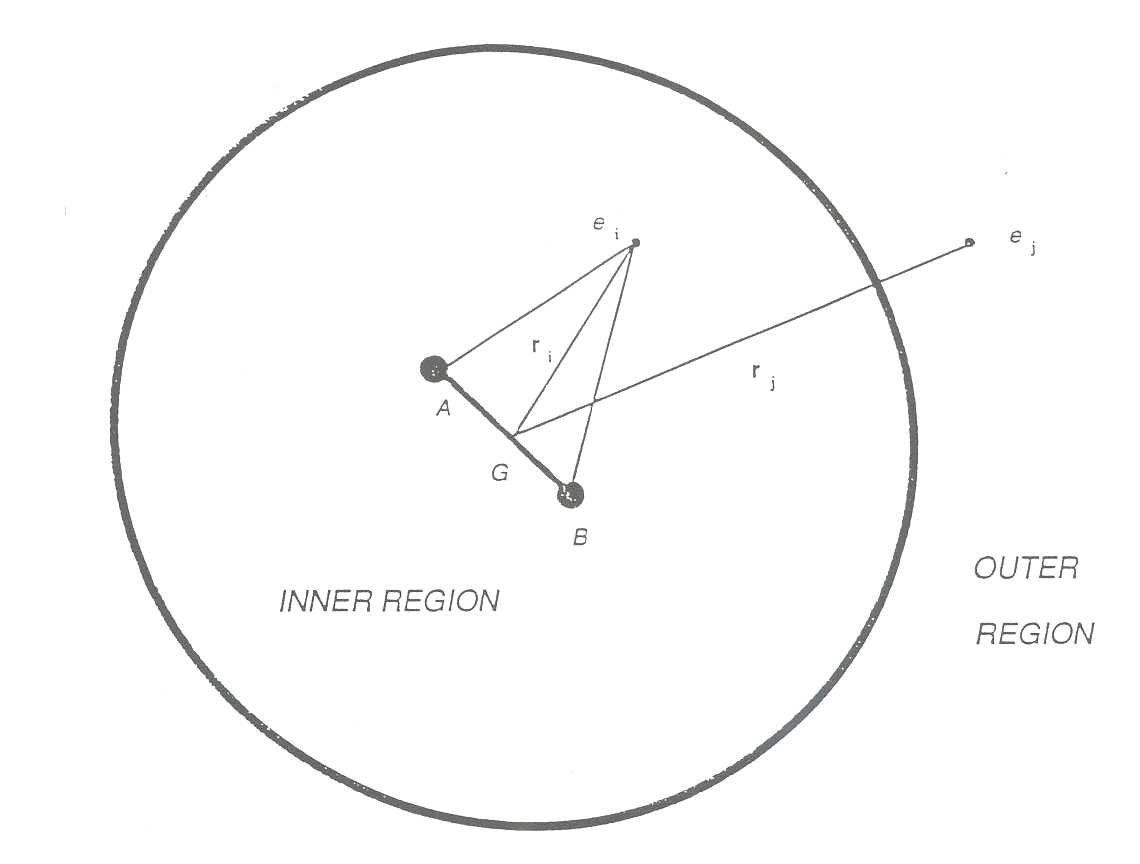

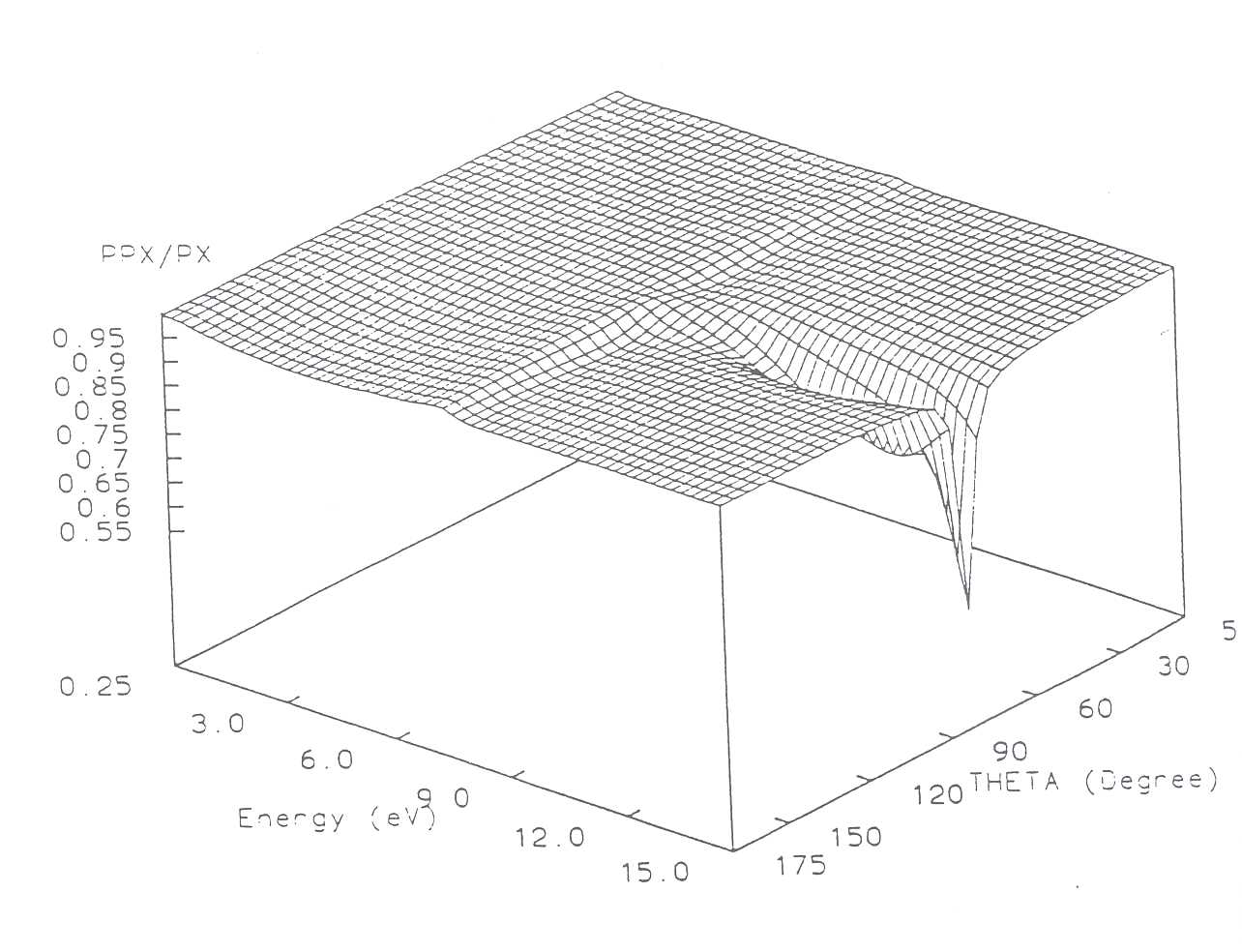

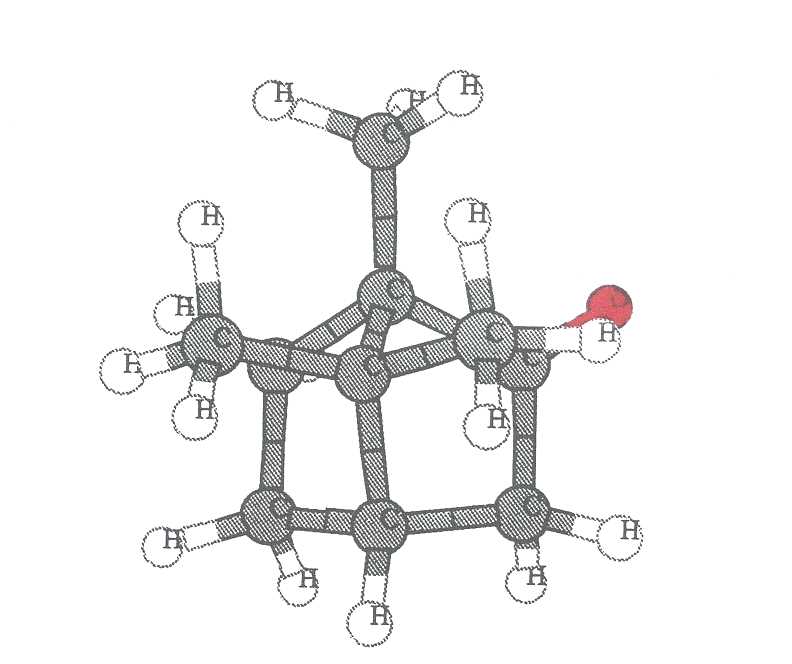

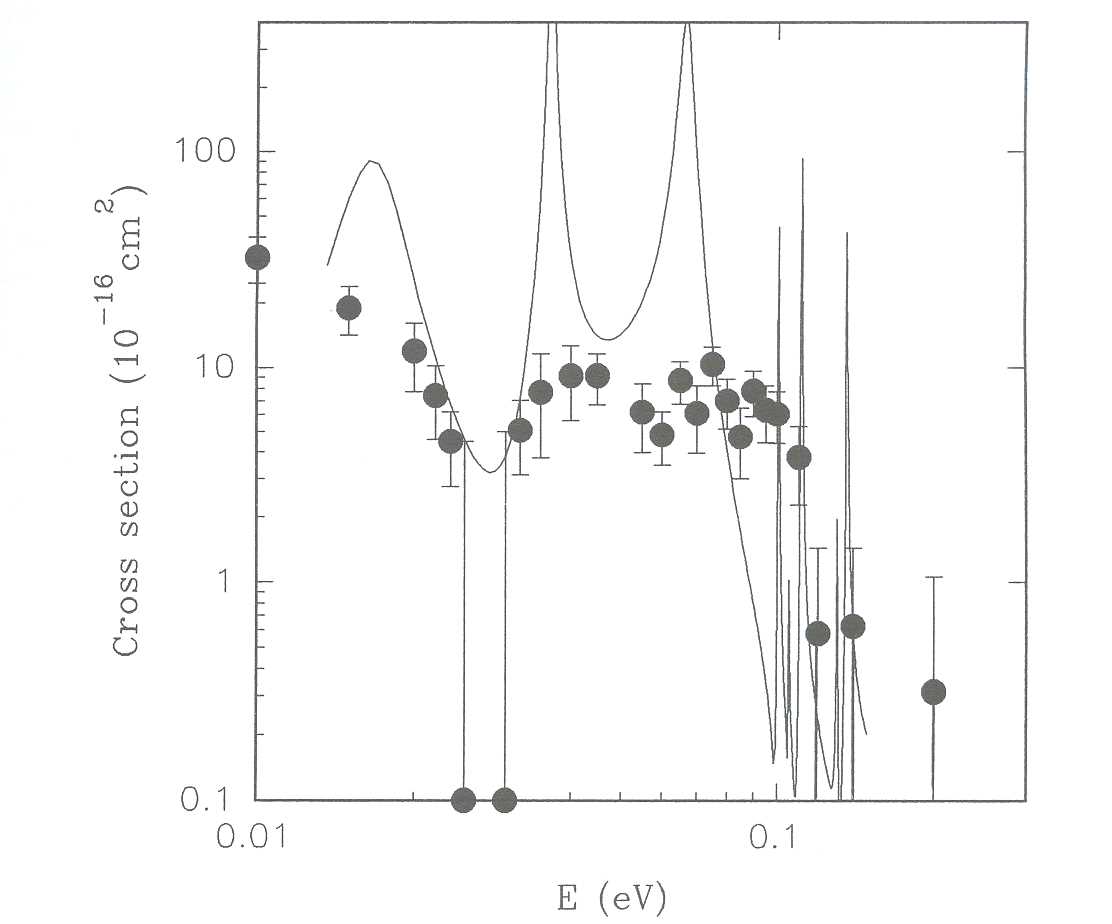

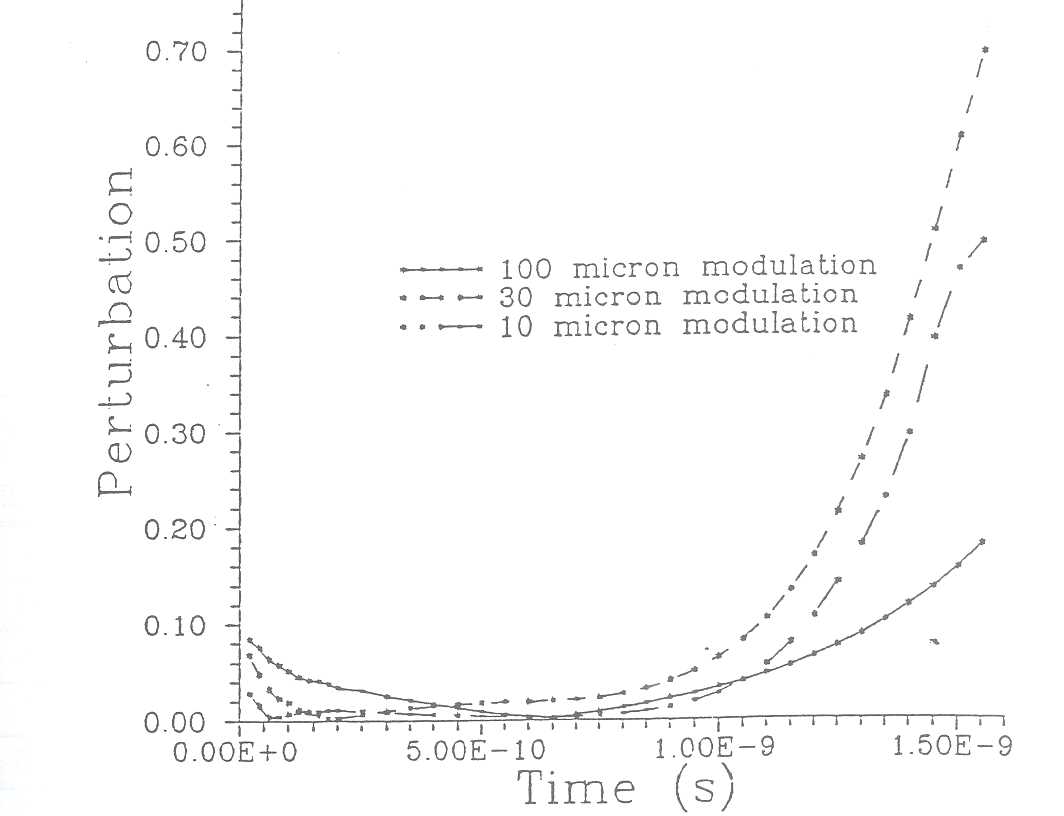

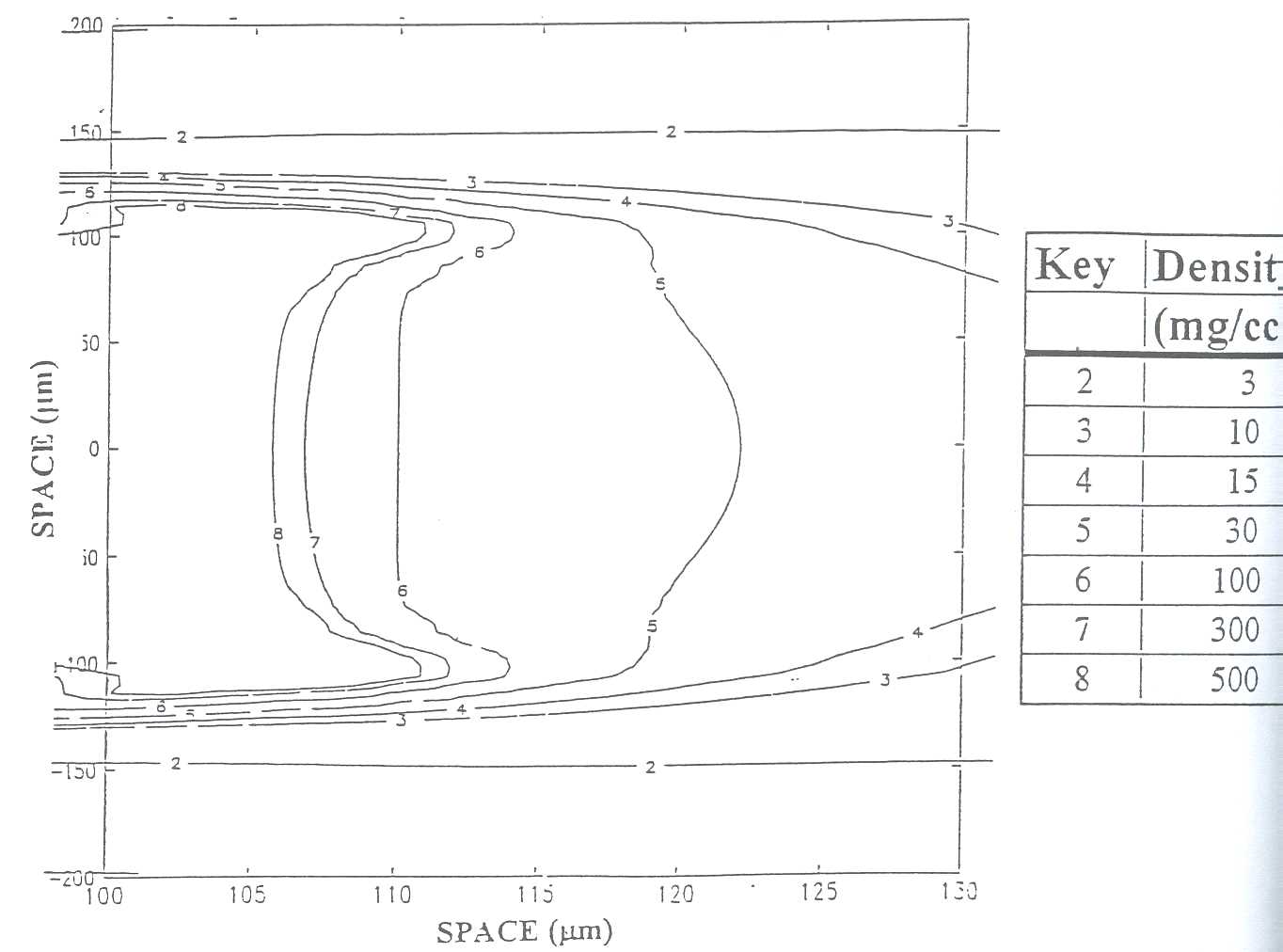

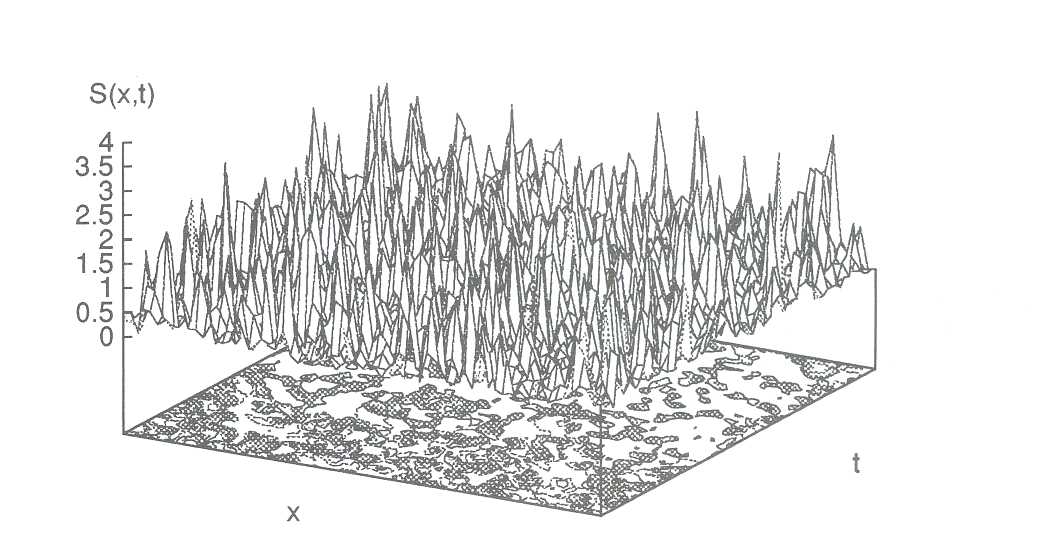

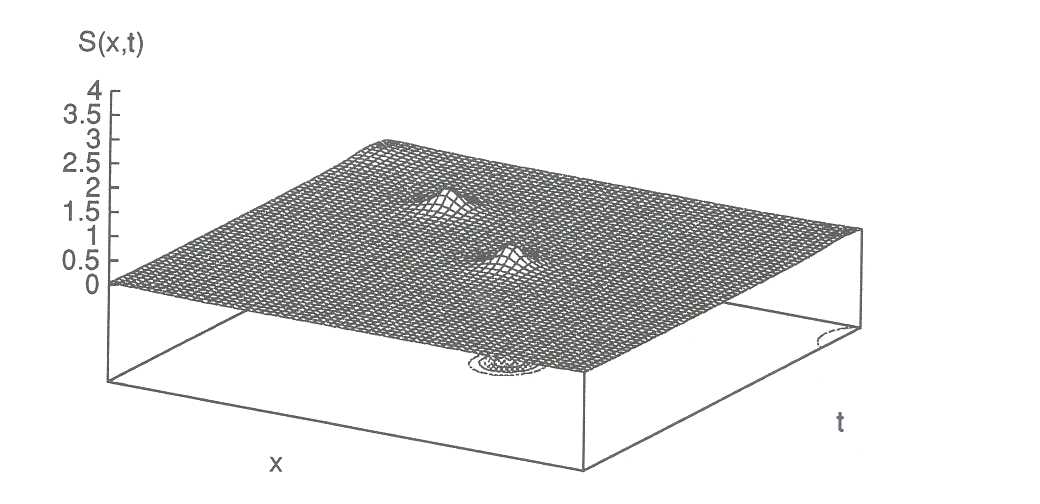

Dr P Saunders and Mr S Thompson of IOSDL have studied the heat transport in FRAM. They have found the net heat transport to be southwards at all latitudes. North of 35°S the heat is carried primarily by the mean circulation. In the South Atlantic this is dominated by the thermohaline circulation, but in the other oceans it is dominated by the gyre scale circulation. Opposing this southwards transport is a strong northwards heat transport due to the surface Ekman layer. Near 40°S, at the latitude of the Agulhas retroflection region, the heat transport by the mean current field is northwards and the main southward heat transport is due entirely to the fluctuations in the flow. These continue to be important north of 60°S, but further south the mean circulation again dominates. This is an important result as it is the first time that the eddy field has been found to dominate the heat transport in a large scale ocean model. As studies of other regions of the world ocean have failed to find such behaviour, the Southern Ocean between 30°S and 60°S may be the only region of ocean in which the fluctuations are responsible for a significant fraction of the heat transport.
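The separation of heat transport into a part carried by the mean flow and a part carried by the fluctuations can be sketched with a Reynolds decomposition at a single point. The example below is illustrative only: the time series are invented, not FRAM output, the function names are hypothetical, and a full heat transport would further require multiplying by density and specific heat and integrating over a section.

```python
def mean(values):
    """Arithmetic mean of a sequence of numbers."""
    return sum(values) / len(values)

def heat_flux_decomposition(v, temp):
    """Split the time-mean of v*T into mean-flow and fluctuation (eddy) parts."""
    v_bar, t_bar = mean(v), mean(temp)
    mean_part = v_bar * t_bar
    eddy_part = mean([(vi - v_bar) * (ti - t_bar) for vi, ti in zip(v, temp)])
    return mean_part, eddy_part

# Invented time series at one grid point (not model output):
# northward velocity in m/s and temperature in degrees C.
v = [0.15, -0.15, 0.20, 0.0]
temp = [3.0, 9.0, 2.0, 8.0]

mean_part, eddy_part = heat_flux_decomposition(v, temp)
total = mean([vi * ti for vi, ti in zip(v, temp)])

print("mean-flow part:", mean_part)
print("eddy part:     ", eddy_part)
print("total:         ", total)
```

In this made-up series the mean flow carries heat northwards (positive) while the fluctuations carry more heat southwards (negative), so the net flux is southward. This is the same qualitative situation as that described above near 40°S, although the numbers carry no physical significance.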