This period is covered in less detail than others. Much of the Departments' documentation providing detailed information on projects and services was created and disseminated electronically and has not been preserved. The main sources of information used in preparing this page are the printed newsletters of the time: Flagship until the end of 1995 and then Atlas. However, most of the articles in Atlas were brief, referring the reader to web pages (now lost) for details.

In 1994 RAL and the Daresbury Laboratory merged into one organisation, known as DRAL, which came under the auspices of the Engineering and Physical Sciences Research Council (EPSRC). In 1996 DRAL was separated from EPSRC to become the Central Laboratory of the Research Councils (CLRC), with its own Council (the CCLRC) alongside the other Research Councils from which it received much of its funding.

Towards the end of 1994 the RAL Central Computing and Informatics Departments were merged into a Computing and Information Systems Department (CISD). In 1996 CISD was merged with the Daresbury Theory and Computational Science Department and the Daresbury IT infrastructure groups to form a CLRC-wide Department for Computation and Information (DCI). Both merged Departments were headed by Brian Davies. On his retirement in February 1999 DCI was split into two new Departments, each spanning both the Daresbury and Rutherford sites, one dealing mainly with scientific computing and the other mainly with information systems.

DCI's Daresbury-based activities are not covered on this Chilton-specific site.

CISD and DCI staff participated in IT R&D activities funded by the European Commission and EPSRC, collaborating with universities, research institutes and companies across the continent. Recurring themes were applications of IT to business processes, knowledge-based approaches and collaborative working methods for dispersed partners. The table below lists those projects which were summarised in the Flagship and Atlas newsletters of the period.

Formal information (aims, funding details, partner organisations, etc.) on the EC-funded projects can be found by searching for the project names on the CORDIS website, but sources of more detailed write-ups on these projects have not been located.

| Newsletter | Project | Topic | Funded by |
|---|---|---|---|
| Flagship 37, April 95 | HYPERMEDATA | Hyperlinked Multimedia - medical applications | EC: Copernicus |
| Atlas 1, Feb 96 | CHARISMA | Desktop Videoconferencing | EC: RACE II |
| Atlas 2, April 96 | MIDAS | 3-D modelling for electromagnetic design | EC: ESPRIT III |
| Atlas 2, April 96 | ProcessBase | Data management and exchange for process plant design, construction and operation | EC: ESPRIT III |
| Atlas 4, Aug 96 | I-SEE¹ | Enhancing software with co-operative, explanatory capabilities | EC: ESPRIT III |
| Atlas 4, Aug 96 | HICOS | Business Processing Technology | EC: ESPRIT III |
| Atlas 4, Aug 96 | WeDoIT | Business Processing Technology | EC: ESPRIT IV |
| Atlas 5, Feb 97 | TallShiP | High-level, well-founded sharing in parallel and distributed systems | EPSRC |
| Atlas 5, Feb 97 | TORUS | Re-using engineering design information | EC: ESPRIT III |
| Atlas 7, June 97 | Aquarelle | Information Network on Cultural Heritage | EC: Telematics Applications |
| Atlas 7, June 97 | MANICORAL 2 | Collaborative working | EC: Telematics 2C |
| Atlas 8, Sept 97 | STEP | Standard for Exchange of Model Data | EPSRC |
| Atlas 9, Nov 97 | SPECTRUM | Formal Methods | EC: ESPRIT IV |
| Atlas 9, Nov 97 | Tcl/Tk Cookbook | Web-based tutorial for Tool Command Language | Advisory Group on Computer Graphics |
| Atlas 10, Feb 98 | TAP-EXTRA | Co-operative explanatory capabilities in control room monitoring | EC: ESPRIT IV |
| Atlas 11, Mar 98 | Toolshed | Interactive open environment for engineering simulations | EC: ESPRIT IV |
| Atlas 11, Mar 98 | VIVRE | Visualisation through an Interactive Virtual Reality Environment | EC: ESPRIT IV |
| Atlas 12, May 98 | COENVR | VR techniques for interactive grid repair | EPSRC |

¹ I-SEE was included in the CEC publication *101 Success Stories from ESPRIT*.


In 1994 the main focus of the Research Councils' national supercomputing programme moved to the University of Edinburgh with the installation of a Cray T3D. This was a distributed-memory, distributed-processor system with 256 processors and was considerably more powerful than the Atlas Centre's Cray Y-MP.

The Cray Y-MP continued in service at the Atlas Centre as part of the Research Councils' high performance computing programme, supporting projects which benefited from vector processing and/or had not (yet) been adapted to large-scale parallelism. In 1996 the Y-MP was replaced by a Cray J90 machine with 32 vector processors and a 4 Gbyte shared memory, with a peak performance of 6.4 Gflop/s. The J90 offered more aggregate performance and greater scope for parallel processing, and was much less expensive to run than the Y-MP in terms of maintenance costs and power consumption. It remained in service until 1999.

In 1997 a Fujitsu VPP 300 was installed alongside the J90. Its architecture complemented those of the J90 and T3D, having extremely high-performance individual processors and distributed memory: three 2.2 Gflop/s processors each with 2 Gbytes of memory. The machine was funded by NERC for the exclusive use of some of its major projects. It remained at Atlas until 1998 when it was moved to the University of Manchester where the Research Councils' next major high performance computing facility had been installed.

The Cray J90 service was closed down in March 1999 when RAL's role as a provider of services for the Research Councils' high performance computing programme came to an end.

From the mid-1990s onwards the Atlas Centre provided substantially more computing capacity through facilities based on RISC superscalar architectures, each tailored to the requirements of specific user communities, than through its supercomputers.

The CSF farm, introduced earlier in the 1990s primarily as a facility for Monte Carlo simulation work, was expanded at the end of 1994 and opened up to more general analysis and to work with higher I/O demands.

A review in 1996/7 of the computing facilities for particle physics at the Atlas Centre set the scene for the rest of the decade. It recommended that the CSF farm be expanded by 50%, that a production service based on a cluster of 20 PCs running Windows NT be established, and that the data store capacity be increased from 2 TB to 20 TB by 1999. To help fund these expansions the OpenVMS and OSF/1 services were closed and their workloads were transferred to the expanding services.

The particle physics computing facilities at RAL would be vastly increased in the early 2000s as a Tier-1 computing and data centre for CERN LHC experiments became established.

In September 1995 a new service was launched as an alternative to the Cray Y-MP and other supercomputing facilities for EPSRC peer-reviewed computing projects. The service was based on a Digital 8400 superscalar computer (known as Columbus) with six EV5 Alpha processors and a 2 Gbyte memory, running Digital's version of Unix, OSF/1. The EV5 processors had a 300 MHz clock and a theoretical peak performance of 600 Mflop/s each. The aggregate peak performance of the facility exceeded that of the Cray Y-MP.

The service was successful and demand for it soon outstripped supply. It was augmented in 1998 by a second, closely coupled four-processor system (Magellan) which used the latest 625 MHz Alpha processors. The peak theoretical performance of the coupled system, at 8.6 Gflop/s, exceeded that of either the Cray J90 or the Fujitsu VPP 300.
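The 8.6 Gflop/s figure can be reconstructed from the processor counts and clock rates given above. The sketch below assumes that every Alpha processor could complete two floating-point operations per clock cycle. This ratio follows from the quoted EV5 figures (300 MHz, 600 Mflop/s), but applying it to Magellan's 625 MHz processors is an assumption, because only their clock rate is given here. The function name `alpha_peak_gflops` is purely illustrative.

```python
# Peak-performance arithmetic for the coupled Columbus + Magellan service,
# compared with the Cray J90 and Fujitsu VPP 300 figures quoted in the text.
# Assumption: every Alpha processor peaks at 2 floating-point operations per
# cycle (consistent with the EV5: 300 MHz -> 600 Mflop/s).

FLOPS_PER_CYCLE = 2


def alpha_peak_gflops(processors: int, clock_mhz: float) -> float:
    """Hypothetical helper: aggregate theoretical peak (Gflop/s) of Alpha CPUs."""
    return processors * clock_mhz * FLOPS_PER_CYCLE / 1000.0


columbus = alpha_peak_gflops(6, 300)  # six EV5 processors at 300 MHz
magellan = alpha_peak_gflops(4, 625)  # four processors at 625 MHz
coupled = columbus + magellan

cray_j90 = 6.4                # quoted peak of the 32-processor J90
fujitsu_vpp300 = 3 * 2.2      # three 2.2 Gflop/s processors

print(f"Columbus {columbus:.1f}, Magellan {magellan:.1f}, coupled {coupled:.1f} Gflop/s")
print(f"J90 {cray_j90:.1f}, VPP 300 {fujitsu_vpp300:.1f} Gflop/s")
print("coupled exceeds both:", coupled > max(cray_j90, fujitsu_vpp300))
```

Under that assumption the script prints 3.6 Gflop/s for Columbus, 5.0 Gflop/s for Magellan and 8.6 Gflop/s combined, against 6.4 Gflop/s for the J90 and 6.6 Gflop/s for the VPP 300. These are theoretical peaks only, not sustained performance on real workloads.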

A major user of the facility was the Facility for Computational Chemistry in the UK, which had been funded by EPSRC for an initial four years from January 1997 and would be renewed into the 2000s with substantial expansion of the computing facility.

By the end of this period the overall computing capacity of these facilities was of the order of 100 times the scalar capacity of the six-processor IBM 3090 of the early 1990s.

The Atlas Centre's IBM and StorageTek tape robot silos continued in service into this period, providing a total capacity of about 20 Tbytes. Most of the capacity was on the IBM robot, and the StorageTek was taken out of service towards the end of the period. The datastore successfully handled a rapidly growing demand, especially from particle physics users.

A major project was undertaken in 1995 to transfer the controlling program for the data store from the IBM 3090, which was being taken out of service, to a set of IBM RS/6000 machines. Development continued throughout the period to improve latency and transfer rates for data access, and to optimise operational procedures in the absence of operator cover outside normal working hours.

Large scale expansions of the datastore facility were to follow in the early 2000s.

A Virtual Reality Centre was established at RAL during this period. Its aim was to provide a facility to aid the comprehension of complex multi-dimensional representations, whether of scientific or commercial data or of models of physical entities, allowing users to immerse themselves in these representations where appropriate and to interact with them.

The initial equipment, installed in 1995, was a Silicon Graphics Onyx computer with Reality Engine 2 graphics, video projectors to provide large screen stereo displays and Division's virtual reality software suite. The Centre was co-located with existing graphics and digital media activities within the Department. Early applications included a study of the ability to assemble part of one of the detectors to be used at the CERN Large Hadron Collider and a visualisation of complex, non-steady flows on seashores.

By 1997 the facility had been expanded to a powerful 8-processor Onyx with three Reality Engine graphics pipelines, running Division's dVS software and other tools, including the Virtual Reality Modeling Language (VRML) for displaying 3D information on the web (see issue 8 of the Atlas newsletter for an article by Bob Hopgood on the development of VRML and its progress towards becoming an ISO standard). New projects using the facility at this time included design work for a neutron scattering detector at ISIS, the modelling of the interior spaces of buildings, and planning and training for operational tasks in hazardous spaces.

Two research projects carried out by the VR group are shown in the table of projects above, and issue 9 of Atlas contains a description of how a VR booth was put together in response to a challenge to demonstrate virtual reality to visitors to the 1997 Open Days at Daresbury.

Further information on the VR Centre and its applications can be found in the 1998 RAL Open Days issue of Atlas.

The rapid growth in video conferencing that had started in the early 1990s continued in this period, and by 1996 there was on average more than one videoconference per day at RAL and sometimes as many as four. Both DL and RAL had centralised equipment with fibre optic links to rooms, including lecture theatres, elsewhere on the sites that were equipped for video conferences. The conferences extended to all continents, with the numbers of participants ranging from handfuls to hundreds. At the same time desktop videoconferencing was becoming widespread, stimulated by the growing power of desktop machines and the capabilities of local area networks (see the CHARISMA project listed above for an investigation of international desktop videoconferencing over an ATM pilot network).

The successful Video Facility of the late 1980s and early 1990s evolved into a more general digital media service in the late 1990s. The MediaLab, which housed it, was linked to the VR Centre by local area network, video and audio connections, enabling the rendering of complex VR models. Demand for the facility remained high, and output from the MediaLab was shown on all the (then) UK TV channels.

ERCIM (European Research Consortium for Informatics and Mathematics) was launched on 13 April 1989 by INRIA (France), GMD (Germany) and CWI (Netherlands) as an informal club to discuss projects and areas of mutual interest.

RAL (CLRC) joined as the UK Member in 1990. Late in 1990 an ERCIM Executive Committee was set up, with Bob Hopgood representing RAL. One objective was to broaden the membership, and in 1991 CNR at Pisa (Italy) and INESC (Portugal) joined. The membership continued to increase in 1992 with FORTH (Greece), SICS (Sweden) and SINTEF (Norway) joining. All the members were government-funded non-commercial laboratories with a strong interest and involvement in mathematics and computing.

Bob Hopgood chaired the Executive Committee from 1992 to 1995. A major activity during this time was transforming ERCIM into an EEIG (European Economic Interest Grouping), a legal entity able to act in many European countries as though it were a company in its own right.

The membership continued to grow and, in the period 1993-1994, AEDIMA (Spain), VTT (Finland), SGFI (Switzerland) and SZTAKI (Hungary) joined.

At the time of writing (2008), Keith Jeffery is the Chairman of ERCIM.

In 1994 the World Wide Web Consortium (W3C) was set up to lead the Web to its full potential, with a US Host at MIT and with the intention of locating the European Host at CERN, the birthplace of the Web. Quite late on, CERN withdrew from this role and INRIA stepped in as the European Host. Funding was provided by the European Union through the WebCore project, which ran from December 1994 until January 1997. The aim was to create a European information market that would improve the European economy and quality of life.

To achieve a critical mass early on, INRIA asked for, and received, support from the ERCIM members. GMD, CWI and RAL seconded staff to INRIA and the ERCIM EEIG, and most of the individual ERCIM members joined W3C.

In 1996 the European Commission indicated that it would consider funding a follow-up project to raise awareness in Europe, but would require it to have more partners than just INRIA. The follow-on project, W3C-LA, was a Leveraging Action with RAL and INRIA as partners. It aimed to establish a set of W3C Offices across Europe as national points of contact, which would provide mirrors of the W3C site, translate key documents and run local awareness events. It also aimed to produce a set of shrink-wrapped demonstrators of new emerging standards such as CSS, HTTP 1.1, SMIL, WebCGM and, eventually, SVG. Jeff Abramatic, later to become W3C Chairman, managed the project with Bob Hopgood as Deputy Manager. W3C-LA ran from November 1997 until March 1999.

European Offices were established at six ERCIM Members (RAL, SICS, GMD, CWI, FORTH and CNR). RAL staff helped at eight major events across Europe, including two in London, providing demonstrations and talks on SMIL, WebCGM, XML, PNG, RDF and SVG.

A third European project, QUESTION-HOW (QUality Engineering Solutions via Tools, Information and Outreach for the New Highly-enriched Offerings from W3C: Evolving the Web in Europe), was defined by Bob Hopgood and Jeff Abramatic and ran from September 2001 to September 2003. It extended the coverage of Europe in terms of Offices and ran two conference tours across Europe: the Interop Tour in 2002 and the Semantic Tour in 2003.

To complete the ERCIM/W3C involvement, the European Office moved from INRIA to ERCIM.

WWW Articles in Atlas:

ERCIM Article - Atlas 5, February 1997

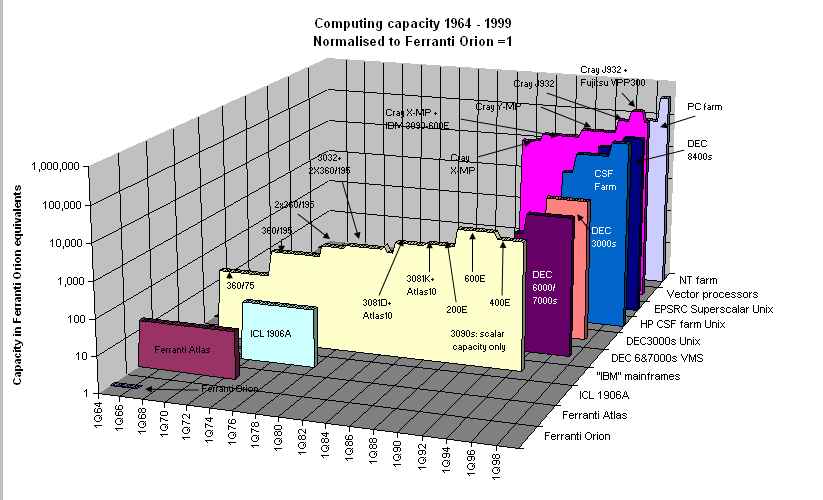

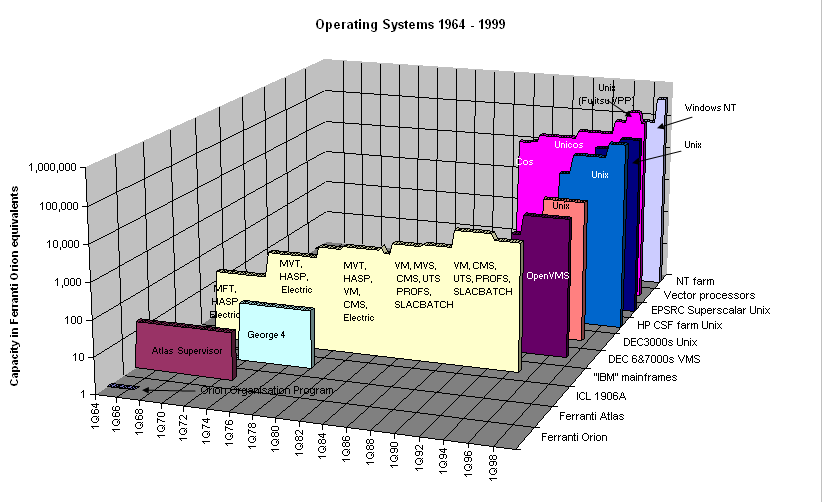

The diagrams below are intended to give an overview of the evolution of the Atlas/RAL computing facilities over the whole time period covered by the site.

Estimating the relative capacities of machines with diverse architectures over such a long period can only be an approximate exercise at best. The preferred information sources have been newsletter articles written when new facilities were being introduced, which gave guidance on how the new facilities might be expected to perform compared to their predecessors on users' 'real workloads'. Otherwise, we have used supplier documentation to obtain the performance ratios of certain models in the same range, and the SPECint95 and SPECfp95 benchmarks to relate some of the late-1990s facilities. Where all else failed we have made our own estimates, but these were needed for only two comparisons.

The capacities of vector supercomputers have been related to those of scalar machines via newsletter guidance on the average performance improvement to be expected by Atlas Centre users on the IBM 3090s with and without the vector facilities, and (in the absence of better data) scaling up by peak performances for the Cray and Fujitsu machines. Note that the averages conceal wide variations among individual codes, and that the comparisons with the scalar facilities should be taken as indicative rather than definitive.

The second diagram shows the operating systems used on the various facilities and illustrates the convergence on Unix towards the end of the period. The powerful Windows NT farm, the only non-Unix system in use after 1997, was converted to Unix shortly after 1999.

Our thanks to UKRI Science and Technology Facilities Council for hosting this site.