The Secretary's Department and Central Office Administration provide funds for general SRC facilities. The items funded at Chilton include the facilities for general computing, based upon an IBM 360/195 and 3032 computer complex and a network linking over 70 universities and laboratories in this country and overseas centres. An Energy Research Support Unit offers a service for energy research in universities and polytechnics and the Council Works Unit provides an engineering and building works service to SRC establishments.

This year has seen the consolidation of the IBM 3032 as the front-end machine. It was placed in this role on 27 May after extensive testing and trial runs. The machine runs under the hypervisor VM/370 (Virtual Machine) which enables a number of 'virtual machines' running different operating systems to co-exist within it. One such machine runs under OS/MVT (Multiprogramming with a Variable number of Tasks), and this communicates with the two IBM 360/195 computers, which run the same operating system. Batch work is controlled by the front-end 3032, and passed to the back-end 195s for processing.

An embryonic CMS (Conversational Monitor System) service has been started, mainly for users on site; CMS is the interactive system which will replace ELECTRIC. It is envisaged that, with the introduction of VNET software and the installation of more hardware, the full CMS service can be launched and the gradual run-down of the ELECTRIC service begun. The communications network has continued to grow, and true networking has been introduced, with GEC 4000 series machines acting as nodes.

The IBM 360/195 computers are now very old machines, and although they perform extremely well they will have to be replaced shortly. To this end the Facility Committee for Computing has set up a Working Party under the Chairmanship of Professor R J Elliott (Oxford University), which is reviewing the whole of the SRC's computing needs and will report its findings in December 1980.

The schematic of the triple system is shown in Fig 5.1. The front-end machine (FEM) is now the IBM 3032, which provides HASP, ELECTRIC and COPPER control facilities as well as the CMS service, while the batch computing has been carried out on the IBM 360/195s. The increase in power resulting from the changeover of the back-end machine from a 3032 to a 195 has meant that the backlog has been reduced. There has been some reconfiguring of the hardware; for example, the fixed head file is now used as a paging device for the CMS service. Additional hardware installed or on order is as follows: a second Director (containing 6 channels: one byte multiplexor and five block multiplexors) and two megabytes of main storage for the IBM 3032, and seven gigabytes of disc space (providing additional space for the CMS filestore and the OS/MVT system, and reducing the disc mounting by operators).

The performance throughout the year has been satisfactory except for ELECTRIC, where response has deteriorated. The system on average has been delivering over 200 equivalent 195 hours, and processing just under 20,000 jobs per week.

| User group | Jobs | % | CPU Hrs | % |
|---|---:|---:|---:|---:|
| Nuclear Physics Board | 349,845 | 40.8 | 6,812 | 62.8 |
| Science Board | 144,193 | 16.8 | 1,920 | 17.7 |
| Engineering Board | 32,526 | 3.8 | 662 | 6.1 |
| Astronomy, Space & Radio Board | 78,724 | 9.2 | 662 | 6.1 |
| SRC Central Office and Central Computer Operations | 150,539 | 17.6 | 344 | 3.2 |
| Other Research Councils | 72,998 | 8.5 | 302 | 2.8 |
| Government Departments | 28,655 | 3.3 | 145 | 1.3 |
| Totals | 857,480 | 100.0 | 10,847 | 100.0 |
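The percentage columns can be re-derived from the raw counts as a consistency check. The short Python sketch below recomputes each group's share of jobs and of CPU hours, together with the two totals, using only the figures in the table above.

```python
# Figures copied from the usage table: (jobs, CPU hours) per user group.
USAGE = {
    "Nuclear Physics Board": (349_845, 6_812),
    "Science Board": (144_193, 1_920),
    "Engineering Board": (32_526, 662),
    "Astronomy, Space & Radio Board": (78_724, 662),
    "SRC Central Office and Central Computer Operations": (150_539, 344),
    "Other Research Councils": (72_998, 302),
    "Government Departments": (28_655, 145),
}


def shares(usage):
    """Return total jobs, total CPU hours and per-group percentages (1 d.p.)."""
    total_jobs = sum(jobs for jobs, _ in usage.values())
    total_hours = sum(hours for _, hours in usage.values())
    pct = {
        group: (round(100 * jobs / total_jobs, 1), round(100 * hours / total_hours, 1))
        for group, (jobs, hours) in usage.items()
    }
    return total_jobs, total_hours, pct


if __name__ == "__main__":
    jobs, hours, pct = shares(USAGE)
    print(f"Totals: {jobs:,} jobs, {hours:,} CPU hours")
    for group, (job_pct, cpu_pct) in pct.items():
        print(f"{group:52s} {job_pct:5.1f} {cpu_pct:5.1f}")
```

Run as a script, it prints `Totals: 857,480 jobs, 10,847 CPU hours` followed by the per-group percentages, which agree with the table to one decimal place.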

Throughout the year a full service has been provided to the Natural Environment Research Council. In March a new release of the operating system (36R2) was installed and, after initial problems, it appears to be very robust. It will allow better management of resources through the use of a facility called QUOTA. The Institute of Geological Sciences (London) now has remote job entry and terminal facilities on the Univac 1108.

The FR80 microfilm recorder has continued to provide a high quality service to users. New hardware installed this year includes an additional 32K of main storage. Two notable films have been made, one by Dr S J Aarseth (Cambridge University) on galaxy clustering, and one by R E Peacock (Cranfield Institute of Technology) on compressor response to spatially repetitive and non-repetitive transients. The new control software, DRIVER, has been introduced to provide a more robust operating system. Microfiche is still the dominant medium used on the FR80.

The major event of the year was the transfer of the FEM MVT system to the IBM 3032. This was delayed by a series of hardware and software faults early in the year and eventually took place in June. The change released extra computing capacity for the batch, and this was used partly to improve the turn-round for medium sized jobs, ie those requiring a region of up to 550K. The performance of the FEM was not as good as test sessions early in the year had suggested, and it deteriorated as the load on the 3032 increased. ELECTRIC response was particularly badly hit. A number of attempts were made to tune the system for better response but they were largely unsuccessful. It looks as if the main hope for improving the service to users is to press on as quickly as possible for the transfer to CMS.

The other major activity has been the development of the VNET software, which will take over from HASP the control of workstations. This has gone more slowly than was hoped, partly because effort has been diverted into trying to solve the FEM problems. However, the end is now in sight, and it should be possible to run guinea-pig workstations early in the new year. The main programme of transferring workstations to VNET will have to wait for the installation of the extra main store which is on order. VM has continued to work well. Release 6.11 was installed in October and the latest release, VM/SP, has been delivered. This should be introduced early in the new year.

Because of the planned transfer of users from ELECTRIC to CMS, the ELECTRIC system was frozen towards the end of last year and all development work on user facilities ceased. In the first half of the year the effort was concentrated on the trials of the Front End MVT system, including ELECTRIC, on the IBM 3032.

There was a need to compensate within ELECTRIC for the reduced CPU power of the 3032 compared with the 195 in order to maintain the same performance. The main changes aimed at reducing ELECTRIC's overheads were the provision of a software cache holding 200 ELECTRIC blocks and the introduction of asynchronous output to the file store via the DRIO package. In addition, the program was expanded by 190K by removing most of the overlay structure, and hence the I/O associated with swapping overlays.
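The report does not say how the ELECTRIC block cache decided which blocks to keep. Purely as an illustration, the sketch below assumes a least-recently-used (LRU) policy over a fixed capacity of 200 blocks; `read_block_from_disc` is a hypothetical stand-in for the real file-store read, and the hit and miss counters show how each cache hit saves one disc I/O.

```python
from collections import OrderedDict
from typing import Callable


class BlockCache:
    """Fixed-size LRU cache of file-store blocks (the LRU policy is an assumption)."""

    def __init__(self, read_block: Callable[[int], bytes], capacity: int = 200):
        self.read_block = read_block      # hypothetical disc-read function
        self.capacity = capacity          # 200 blocks, as in the report
        self.blocks = OrderedDict()       # block number -> block contents
        self.hits = 0
        self.misses = 0

    def get(self, block_no: int) -> bytes:
        if block_no in self.blocks:
            self.blocks.move_to_end(block_no)   # mark as most recently used
            self.hits += 1
            return self.blocks[block_no]
        self.misses += 1                        # a miss costs one disc read
        data = self.read_block(block_no)
        self.blocks[block_no] = data
        if len(self.blocks) > self.capacity:
            self.blocks.popitem(last=False)     # evict least recently used block
        return data


def read_block_from_disc(block_no: int) -> bytes:
    """Hypothetical stand-in for a real file-store read."""
    return f"block {block_no}".encode()


if __name__ == "__main__":
    cache = BlockCache(read_block_from_disc)
    for n in [1, 2, 1, 3, 1, 2]:
        cache.get(n)
    print(cache.hits, cache.misses)  # prints "3 3"
```

A real implementation would also have to keep the cache consistent with the asynchronous output path; that interaction is not modelled here.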

The front-end trials during April and May indicated that these changes were indeed giving ELECTRIC performance on the 3032 which was as good as on the 195. Consequently the decision was taken to go live with the FEM on the 3032 and this occurred on 17 June. However, with front-end software development complete, the activity in other virtual machines and the use of CMS increased, leading to a rapid deterioration in ELECTRIC response during peak periods. Attempts were made to remedy this situation by adjusting parameters in the VM scheduler and giving maximum priority to the FEM. These and other attempts to tune the system gave only slight improvements to ELECTRIC performance. In a further attempt to provide adequate response during prime shift, an experiment was initiated at the beginning of October in which the maximum number of simultaneous users was reduced from 60 to 55 for a period of 4 weeks and again to 50 for a further 4 weeks. These experiments confirmed that there are other factors which dominate the response at peak times.

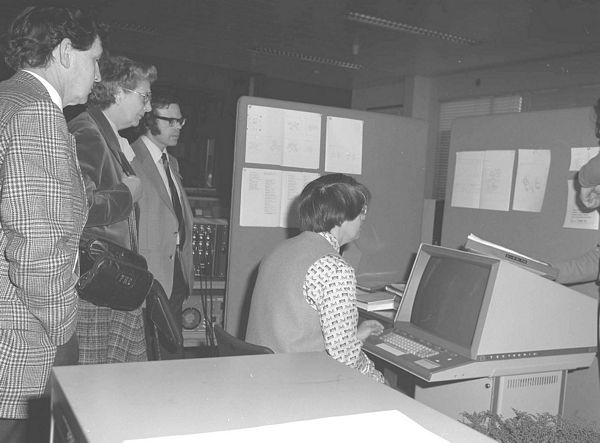

The Conversational Monitor System (CMS) service was opened to users outside the Computing Division in January when 20 users were invited to attend an introductory course given at Chilton by IBM. Since then, two further courses have been given by RAL staff and the number of registered users has risen to 180. Most of the users are on the Chilton site because access has been restricted to terminals connected to the Gandalf PACX switching device and to IBM compatible screens which are used for system development. The number of PACX ports into CMS has increased to 16, with 12 at 1200 baud and 4 at 300 baud. The number of simultaneous active users has risen to around 20 at peak periods. Users have been provided with an introduction to the VM/CMS service as implemented at Chilton and a VM reference manual which documents all available commands. Other documentation is provided in the form of IBM manuals and there is a very good on-line help facility.

There have been problems with filing system errors, but the latest version of the software seems to have eliminated most of these. A major revision of VM/370, including CMS, was received from IBM at the end of November and is being evaluated. The main developments in user facilities are a new editor, XEDIT, which has improved support for ASCII terminals, and a new EXEC processor whose main advantage is the removal of the 8-byte token restriction. On-going local developments include an incremental dumping system for the CMS filestore, utilities to ease the transfer of files from ELECTRIC to CMS, and the production of reference cards containing all commands, editor subcommands and EXEC language control statements.
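The incremental dumping system is only named here, not described. A common approach, assumed for this sketch, is to dump only those files whose modification time is later than that of the previous dump. The `FileEntry` record and its fields are hypothetical and do not represent the CMS file-directory format.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class FileEntry:
    """Hypothetical file-directory entry: owner, name and last-modified time."""
    owner: str
    name: str
    modified: int  # e.g. seconds since some epoch


def select_for_dump(entries: Iterable[FileEntry], last_dump: int) -> List[FileEntry]:
    """Return the files changed since the previous dump (the incremental set)."""
    return [e for e in entries if e.modified > last_dump]


if __name__ == "__main__":
    directory = [
        FileEntry("USER1", "PROG FORTRAN", 100),
        FileEntry("USER1", "DATA FILE", 250),
        FileEntry("USER2", "NOTES SCRIPT", 300),
    ]
    for entry in select_for_dump(directory, last_dump=200):
        print(entry.owner, entry.name)
```

With this scheme, a full dump is the case where `last_dump` predates every modification time. Restoring the filestore then means reloading the last full dump and applying each later incremental dump in order.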

A new Graphics Section was set up in April to bring together into one group all the graphics activities which had previously been carried out within the separate projects. The section is responsible for graphics software on the Interactive Computing Facility (ICF) and Starlink computers as well as on the central computers.

Version 2.6 of GINO-F, containing substantial new features and bug fixes, was received from the CAD Centre and installed on the IBM system. The IMPACT system is being augmented to allow users more text and font facilities on the FR80. Work is in progress to rationalise the higher level graphics facilities available to users.

Reference Microfiche - Early in the year a reference set of 17 microfiche was made, listing the entire contents of the Crystallographic Data Centre's database (ie more than 28,000 structures) in 7 different orders. The extract and index facilities of the FR80 IBM print program were used. The eye-readable titles carry information about the first and last frames on each microfiche, while the last frame of each microfiche contains an index to the others.
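The layout described above has three parts: several sort orders, eye-readable titles naming the first and last entries on each fiche, and an index to the others on the final frame. The Python sketch below shows how such a set could be built. The capacity of a fiche, the record fields and the sort keys are all assumptions chosen for illustration, and the FR80 print program's own extract and index facilities are not reproduced.

```python
from typing import Dict, List, Sequence, Tuple

# Hypothetical capacity: entries that fit on one fiche (kept tiny for the demo).
ENTRIES_PER_FICHE = 3


def paginate(entries: Sequence[dict], key: str, per_fiche: int = ENTRIES_PER_FICHE):
    """Sort entries on `key` and split them into fiche-sized groups."""
    ordered = sorted(entries, key=lambda e: e[key])
    return [ordered[i:i + per_fiche] for i in range(0, len(ordered), per_fiche)]


def titles(fiches: List[List[dict]], key: str) -> List[Tuple[str, str]]:
    """Eye-readable title for each fiche: its first and last key values."""
    return [(str(f[0][key]), str(f[-1][key])) for f in fiches]


def build_set(entries: Sequence[dict], keys: Sequence[str]) -> Dict[str, List[Tuple[str, str]]]:
    """One fiche series per sort order; the list of titles is what each index frame would show."""
    return {key: titles(paginate(entries, key), key) for key in keys}


if __name__ == "__main__":
    structures = [  # hypothetical records, not real database entries
        {"refcode": "ABCDEF", "year": 1975},
        {"refcode": "BCDEFG", "year": 1971},
        {"refcode": "CDEFGH", "year": 1978},
        {"refcode": "DEFGHI", "year": 1969},
    ]
    for order, index in build_set(structures, ["refcode", "year"]).items():
        print(order, index)
```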

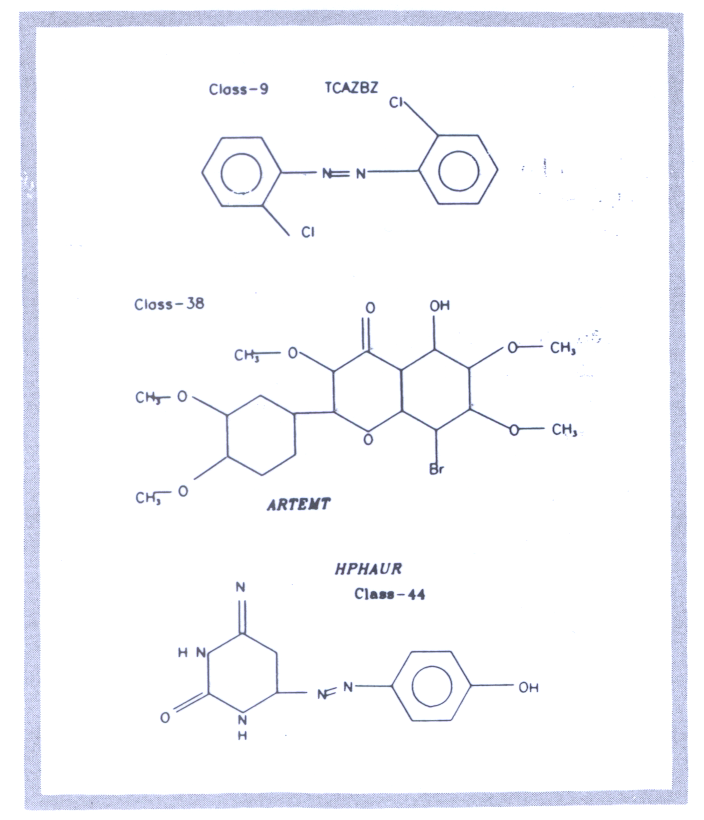

Annual Bibliography - In March, camera-ready copy was prepared for 390 pages of the book Organic and Organometallic Crystal Structures, Bibliography 1978-79; Volume 11 in the series Molecular Structures and Dimensions. It has 6 different indexes with differing page layouts, and contains over 4,600 references and cross references to crystal structures published in the literature during the past year. The production runs took 90 seconds of central IBM computer time and 2 hours of FR80 plotting time. Discussions are taking place with Cambridge on how to include chemical structure diagrams in future volumes. During the summer, a study was undertaken to determine the feasibility of entering such diagrams into the computer using the Opscan device. Fig 5.2 shows a sample diagram generated by the test program.

Computer Simulation of Electron Microscope Images - The computer simulation of electron microscope images has now become a routine tool for the interpretation of experimental images. These simulations have traditionally produced half-tone images on lineprinters by overprinting, but these are rarely a very good approximation to the photographs themselves. In Autumn 1979 it was suggested that photographic techniques using the FR80 might produce more realistic simulations. An image calculation program (supplied by the Department of Physical Chemistry, Cambridge University) was mounted on the IBM central computers and adapted to use some SMOG utility routines, which use the hardware character generator of the FR80 to produce 'grey levels' by means of space-filling symbols. Fig 5.3 shows a sample image.
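The idea of producing grey levels from space-filling symbols can be illustrated by mapping each image intensity to a character with a matching amount of ink. The symbol ramp below is an assumption for illustration. It is not the SMOG routines or the FR80 character set, and on the FR80 the symbols would be recorded photographically rather than printed.

```python
from typing import List, Sequence

# Hypothetical ramp of symbols, from least ink to most ink.
# Larger values get denser symbols; reverse RAMP to invert the contrast.
RAMP = " .:-=+*#%@"


def to_symbol(value: float, lo: float, hi: float, ramp: str = RAMP) -> str:
    """Map an intensity in [lo, hi] onto one of len(ramp) grey levels."""
    if hi <= lo:
        raise ValueError("hi must exceed lo")
    t = min(max((value - lo) / (hi - lo), 0.0), 1.0)   # normalise and clamp to [0, 1]
    level = min(int(t * len(ramp)), len(ramp) - 1)     # quantise to a ramp index
    return ramp[level]


def render(image: Sequence[Sequence[float]]) -> List[str]:
    """Render a 2-D intensity array as rows of grey-level symbols."""
    flat = [v for row in image for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:          # flat image: avoid dividing by zero
        hi = lo + 1.0
    return ["".join(to_symbol(v, lo, hi) for v in row) for row in image]


if __name__ == "__main__":
    # Tiny synthetic intensity pattern (not real microscope data).
    image = [[(x * y) % 7 for x in range(12)] for y in range(4)]
    print("\n".join(render(image)))
```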

Following the submission of a paper on the support for data bases to the January meeting of the Facility Committee for Computing, a working party was set up to prepare a more fully considered case. The instructions were to:

Representatives were invited to serve on the working party from all the SRC establishments, and from large user groups at the central computing facility who had expressed an interest in data bases. Four areas were considered, each needing rather different aspects of data base technology.

Computer Aided Design - Fast access is needed to many reference data bases containing information ranging from physical properties and dimensions to names and addresses of suppliers. Data base techniques are also used to store details of sub-assemblies of the artefact being designed during the interactive design processes.

Data Acquisition and Cataloguing - The problem here is the safe management of the hundreds of datasets obtained from accelerators and satellites. They are often collected on small computers at one site and moved to another, larger one, for analysis. The experimenters want a portable system offering both interactive access to the data catalogue for random queries and batch access through which their programs can record the results of processing.

Scientific Information Retrieval - Following analysis, the results may be transferred to a data base, providing research workers with access to large collections of similar data. Specialised graphical routines may be needed, as may the storage of real numbers in addition to the more usual character-based information (eg the author or publisher names and dates, commonly stored in bibliographies, on which retrievals are made).

Resource Management - The SRC maintains growing numbers of buildings, computers, terminals and specialised libraries and equipment for both high energy physics and astronomy. Manual methods and batch computer programs are proving too slow, and data bases accessible to the SRC network are seen as the way to get faster access to more accurate management information of all types. The database management systems must have a very friendly user interface, as they will be used by clerical or stores officers, not by specialist computer staff.

A report was submitted to the September meeting of the FCC which has now been published as an RAL report and passed to the main boards of the SRC. The main recommendation was for better co-ordination and support of data base activities throughout the SRC. This was to be provided by establishing a permanent database group at RAL to work in collaboration with the existing group at Daresbury Laboratory. No single data base management system was found which could satisfy all the requirements of the four sub-groups, but several packages were suggested as partial solutions and effort was requested for their further evaluation. It was recognised that resource management (and possibly scientific information retrieval) should probably be moved to a data base machine accessible to the SRC network. Funds were requested to purchase such a machine at some time during the next five years.

The working party did not deal with the possible associated problems of office automation, bibliography production associated with databases, and the information network already being requested by some users. Such areas need to be considered in association with other Government Institutions, and need considerably more effort.

During the year there has been growth in every aspect of networks and telecommunications. An important feature of the SRC network is the continued growth of traffic between minicomputer hosts in addition to the provision of access to the SRC mainframe computers.

There are now 31 hosts connected to the GEC 4000 series Packet Switching Exchange (PSE) situated at the Chilton site. In order to safeguard the integrity of this system, which is vital to the network, a second GEC 4000 PSE has been installed and is used both for development testing of new PSE software and for preliminary connections to hosts or gateways with newly developed network software. In addition to the host links handled by the PSE, there are now 5 exchange links: 3 to Daresbury Laboratory, 1 to the second RAL PSE and 1 to the DECnet gateway at Edinburgh. The overall growth of PSE links amounts to a three-fold increase during the year. Many of the newly connected hosts are GEC 4000 systems used as Multi-User Minis for the Interactive Computing Facility or as Enhanced Workstations for the nuclear physics community.

The connection of the DECsystem 10 at Edinburgh via a DN200, using the SRCnet-DECnet gateway software developed at York University, represents an important landmark in the development of the network. Terminal access both to and from the DECsystem 10 is provided, as well as file transfer and a limited form of job transfer to the IBM system at RAL. Also brought into operation during the year was a network connection to an on-site DEC VAX 11/780 used for bubble chamber research. This is used to provide both file transfer and job input to the IBM system.

Other important telecommunications activities include the provision of a job transfer system (designed and built by Daresbury Laboratory) connecting the RAL IBM system to that at DESY in Hamburg. Five Starlink VAX systems are now connected to the Chilton Starlink VAX by means of DECnet to form a star configuration. This has been achieved by redeploying or sharing existing telecommunication lines. Finally, in order to provide early access to the ICL Distributed Array Processor at Queen Mary College, terminal access to the QMC 2980 may be gained via the RAL Gandalf PACX system, which has dial-in facilities.

Stimulated by the requirement for future connection of the network to British Telecom's Packet Switched System (PSS) and the necessity to connect PRIME computers to the network, a number of protocol changes have been made in SRCnet. The RAL PSE now accepts HDLC at level 2; this is used for the SRCnet-DECnet gateway connection and has also enabled the first PRIME systems to be connected. Changes to enable SRCnet to evolve toward compatibility with the UK Transport Service have also begun.
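HDLC delimits each frame with the flag pattern 01111110. To keep that pattern out of the frame contents, it uses zero-bit insertion: the sender inserts a 0 after any five consecutive 1s, and the receiver removes it again. The sketch below illustrates only this standard transparency rule, operating on a list of bits. It is not the RAL PSE software, and it omits the address and control fields and the frame check sequence.

```python
from typing import List

FLAG = [0, 1, 1, 1, 1, 1, 1, 0]  # HDLC frame delimiter


def stuff(bits: List[int]) -> List[int]:
    """Sender side: insert a 0 after every run of five consecutive 1s."""
    out, run = [], 0
    for b in bits:
        out.append(b)
        run = run + 1 if b == 1 else 0
        if run == 5:
            out.append(0)
            run = 0
    return out


def unstuff(bits: List[int]) -> List[int]:
    """Receiver side: drop the 0 that follows each run of five consecutive 1s."""
    out, run, skip = [], 0, False
    for b in bits:
        if skip:          # this bit is the stuffed 0 (a 1 here would signal a flag or abort)
            skip = False
            run = 0
            continue
        out.append(b)
        run = run + 1 if b == 1 else 0
        if run == 5:
            skip = True
    return out


def frame(payload: List[int]) -> List[int]:
    """Wrap the stuffed payload bits between opening and closing flags."""
    return FLAG + stuff(payload) + FLAG


if __name__ == "__main__":
    data = [0, 1, 1, 1, 1, 1, 1, 1, 0]
    print(frame(data))
    print(unstuff(stuff(data)) == data)
```

Because stuffing guarantees that six consecutive 1s never occur inside a frame, the receiver can locate frame boundaries by searching for the flag alone.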

Considerable development of networking software on the PRIME system has taken place, and successful X.25 calls have been achieved which enable either PRIMEnet or SRCnet connections to be made for both terminal access and file transfer. This development is very important since there is an existing community of 10 PRIME systems awaiting connection.

On the IBM triple system, internal operating system changes to enable VM network access are largely complete but are not yet in service. In June a file transfer system was brought into operation in MVT to enable transfers to be made to and from OS datasets, as well as the submission of jobs to the HASP job queue.

A continuing activity is the definition of interim UK network protocols under the auspices of the Data Communication Protocols Unit of the DoI. The preliminary specification for the Job Transfer and Manipulation Protocol was published in April, and in May a document was circulated describing the technical changes to be made in the revision of the UK Network Independent File Transfer Protocol. The draft version of the complete revision was published in November.

The STELLA project, which will eventually link together CERN, DESY, Pisa and RAL with a 1 Mbit/sec satellite link, is now entering an operational stage. Protocol testing between CERN, Pisa and RAL is complete, and operational procedures at CERN and RAL have been set up so that users can send the data on their magnetic tapes via this link.

Recently, failure of the travelling wave tube klystron in the high power amplifier caused an interruption of 3 to 4 weeks in the service; repairs are now complete and the system is operational again. The principal remaining weakness of the earth terminal is the pattern sensitivity of the modem; this prevents the bit error rate from being improved beyond the current figure of 1 in 100 million.
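To put the quoted error rate in perspective: at 1 Mbit/sec and a bit error rate of 1 in 100 million, a continuously busy link would see on average one bit error every 100 seconds. The sketch below makes that arithmetic explicit. It also estimates the chance that a block of a given size arrives intact, assuming independent bit errors. That assumption is a simplification, since the modem's pattern sensitivity implies the errors are not independent, and the block sizes are purely illustrative.

```python
import math

LINK_RATE_BPS = 1_000_000   # 1 Mbit/sec satellite link
BIT_ERROR_RATE = 1e-8       # 1 error in 100 million bits


def seconds_between_errors(rate_bps: float, ber: float) -> float:
    """Mean time between bit errors on a continuously busy link."""
    return 1.0 / (rate_bps * ber)


def block_success_probability(block_bytes: int, ber: float) -> float:
    """P(no bit errors in a block), assuming independent bit errors."""
    bits = 8 * block_bytes
    return math.exp(bits * math.log1p(-ber))   # (1 - ber) ** bits, computed stably


if __name__ == "__main__":
    gap = seconds_between_errors(LINK_RATE_BPS, BIT_ERROR_RATE)
    print(f"mean time between errors: {gap:.0f} s")
    for size in (280, 4096, 1_000_000):   # illustrative block sizes in bytes
        p = block_success_probability(size, BIT_ERROR_RATE)
        print(f"{size:>9} bytes: {p:.6f} chance of arriving intact")
```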

An Ethernet connection between the STELLA GEC 4080 and VM in the IBM 3032 has recently been developed which allows files to be transferred at high speed. These files could then be transmitted via the satellite, either directly or by saving them for transmission later. The transfer rate is limited by the filing system on the 4080 and is of the order of 100 kbit/sec.

The Ethernet local area network, which was described in last year's annual report, is now being used quite considerably. The link between the STELLA GEC 4080 and the IBM system is described above. In addition, an Intel 8086 system running the TRIPOS operating system from Cambridge University has been connected to Ethernet. This connection has been developed using a new interfacing method which results in a much smaller, single board interface, packaged in a small metal box. This interface is less than a quarter of the size of the older controllers, but it is restricted to sending packets of about 280 bytes, whereas the larger controllers are able to transmit much larger packets of up to 4 kbytes.
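The practical effect of the packet-size limit can be seen by counting the packets a transfer needs. The sketch below splits data into packets no larger than a given payload. It uses the approximate figures from the text: about 280 bytes for the small interface, and 4 kbytes for the older controllers, taken here as 4096 bytes. Protocol headers are ignored, although in practice they would reduce the usable payload further.

```python
from typing import List


def packetise(data: bytes, max_payload: int) -> List[bytes]:
    """Split data into consecutive packets of at most max_payload bytes."""
    if max_payload <= 0:
        raise ValueError("max_payload must be positive")
    return [data[i:i + max_payload] for i in range(0, len(data), max_payload)]


def packets_needed(size: int, max_payload: int) -> int:
    """Number of packets for a file of `size` bytes (ceiling division)."""
    return -(-size // max_payload)


if __name__ == "__main__":
    file_size = 100_000   # hypothetical 100 kbyte file
    for label, payload in (("small interface", 280), ("older controller", 4096)):
        print(f"{label}: {packets_needed(file_size, payload)} packets")
```

For the hypothetical 100 kbyte file, this gives 358 packets through the small interface against 25 through an older controller. Since each packet carries fixed per-packet costs, the smaller interface pays them about fourteen times as often.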

Simple file access protocols have been developed which enable the Intel 8086 and the GEC 4080 to read files record by record from the CMS file store on the IBM system. The TRIPOS operating system is now capable of having its own filestore remote from the 8086, thus enabling TRIPOS discs to be kept as files on either CMS or the GEC 4080.
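Reading files record by record implies a simple request/response exchange. The client opens a file, then repeatedly asks for the next record, and the server replies with either a record or an end-of-file indication. The report does not give the protocol details, so the message names (`OPEN`, `READ`, `CLOSE`) and the in-memory `FileServer` below are hypothetical. The sketch shows only the shape of such an exchange, with the Ethernet replaced by direct method calls.

```python
from typing import Dict, List, Optional, Tuple


class FileServer:
    """Hypothetical server side: holds each file as a list of records."""

    def __init__(self, files: Dict[str, List[bytes]]):
        self.files = files
        self.open_files: Dict[int, Tuple[str, int]] = {}  # handle -> (name, next record)
        self.next_handle = 1

    def handle(self, request: Tuple) -> Tuple:
        op = request[0]
        if op == "OPEN":
            name = request[1]
            if name not in self.files:
                return ("ERROR", "no such file")
            h = self.next_handle
            self.next_handle += 1
            self.open_files[h] = (name, 0)
            return ("OK", h)
        if op == "READ":
            h = request[1]
            if h not in self.open_files:
                return ("ERROR", "bad handle")
            name, pos = self.open_files[h]
            records = self.files[name]
            if pos >= len(records):
                return ("EOF",)
            self.open_files[h] = (name, pos + 1)   # advance to the next record
            return ("RECORD", records[pos])
        if op == "CLOSE":
            self.open_files.pop(request[1], None)
            return ("OK",)
        return ("ERROR", "unknown request")


def read_all(server: FileServer, name: str) -> Optional[List[bytes]]:
    """Client side: open a remote file and fetch it one record at a time."""
    reply = server.handle(("OPEN", name))
    if reply[0] != "OK":
        return None
    h = reply[1]
    records = []
    while True:
        reply = server.handle(("READ", h))
        if reply[0] != "RECORD":   # EOF or error ends the transfer
            break
        records.append(reply[1])
    server.handle(("CLOSE", h))
    return records
```

Keeping the read position on the server keeps each request small, which suits a network where the small Ethernet interface limits packets to about 280 bytes.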

These investigations are providing valuable information on how best to use a high speed local area network and in future work it is hoped to connect the satellite and local area network systems. These two systems have very similar data rates, but very different response times, and much research is necessary on the best method of interconnection.

The Joint Network Team, based at the Rutherford and Appleton Laboratories, has as its main remit the formulation of plans to integrate data communications arrangements within and between universities and Research Council institutes.

An important element of the team's programme is the promulgation of standard protocols for computer-computer and terminal-computer communications. International standards are being adopted where available, and interim standards are being recommended to fulfil functions for which formal standards have not yet emerged. The work of manufacturers and staff in computer centres to implement these protocols is being supplemented by a development programme (funded by the Computer Board and SRC) which is co-ordinated and monitored by the Joint Network Team. Already £400,000 has been committed to ensure that the principal types of machine in the academic community will be capable of supporting the agreed set of protocols. The long-term aim is to give users flexible access to the computing resources best suited to their applications. It is envisaged that this will be achieved by a communications hierarchy with local and wide area networks interconnected via gateways.

Projects are being supported based on various technologies for local communications including campus packet-switches, Ethernets and Cambridge Rings. The emphasis is on the development of components which could eventually lead to commercially supported products of widespread applicability. Wide area communications are at present carried out by a diversity of disjoint schemes such as university regional networks, SRC networks and numerous point-to-point dedicated links. The rationalisation of these arrangements could be achieved by means of an integrated network and the Joint Network Team is investigating some of the available options. These include British Telecom's recently opened Packet Switched Service and the possibility of a separate network serving the academic community. An appropriate solution could lead to significantly increased opportunities for interconnection as well as the more effective use of lines and manpower.

The SRC Grants system has been maintained and further developed during the year. An upgrade to the ICF 2904 computer should stabilise the system for foreseen requirements. The new Awards project has been defined, the overview design completed and the joint team established. The use of advanced data analysis and procedural analysis techniques has been combined with some prototyping capability to ensure user involvement in proving the design.