The first four sections of this Report were concerned with those parts of the Laboratory's programme that fall within the subject areas of the four Boards of SERC. However, there are some programmes that cover areas relevant to more than one Board and in those areas Council provides the funds through Secretary's Department or Central Office Administration. Computing, Networking, The Energy Programme and the activities of the Council Works Unit are the main areas funded centrally and this section of the Report is devoted to them.

The major development of the year in computing has been the replacement of the two IBM 360/195 computers by an IBM 3081D and a resulting reconfiguration of the batch system equipment. The introduction of this modern hardware will allow work to start on the introduction of the MVS batch operating system which will eventually replace the old MVT system. In addition, a contract has been signed which will lead to the installation of an ICL Atlas 10 computer in 1983, thus completing the provision of batch computing as recommended by the Computer Review Working Party.

The computer network has continued to grow and much effort is expended in keeping abreast of the changing standards. There is considerable discussion about the possibility of a national network which would include both SERC and Computer Board machines. Work is in hand to install a number of local area networks on the RAL site.

Development of PERQ software in collaboration with ICL has made considerable progress. Machines are now being installed outside RAL and are expected to make a big impact on the way in which computing will be done in the future.

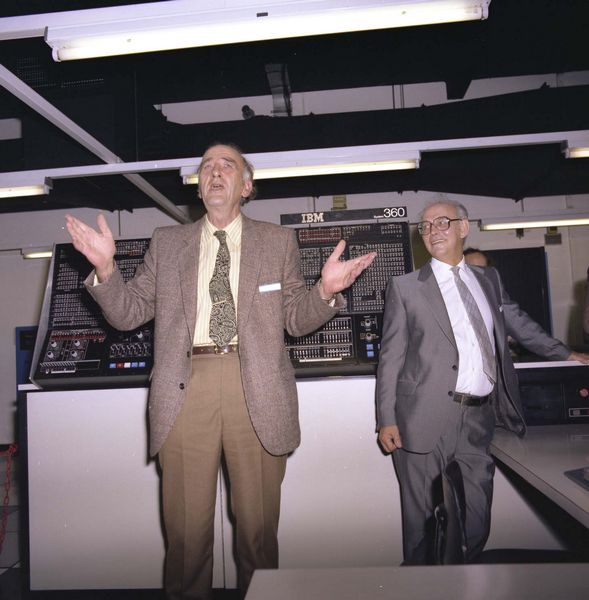

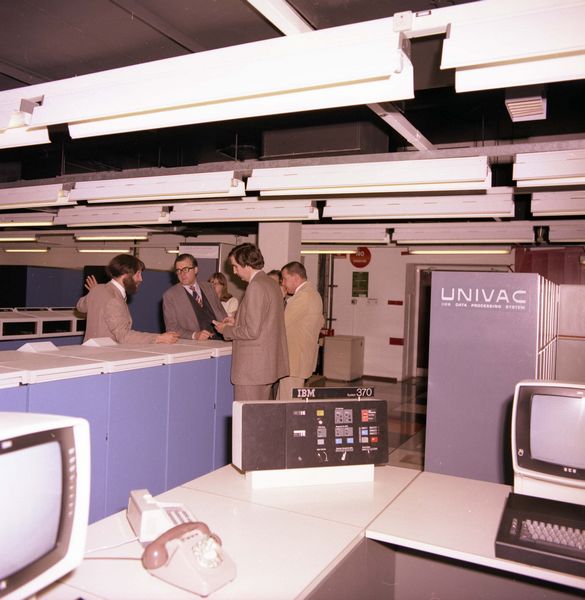

A major programme of replacement of the central mainframe complex was initiated. The UNIVAC 1108, owned by NERC, ceased service in March. Into the space created, a 16 Mbyte 16 channel IBM 3081D (Fig 5.1) was installed in July and was put into service in place of one of the IBM 360/195s. The 360/195s were still giving good service, although 11 years old, but were becoming prohibitively expensive to run, could not be enhanced, and ran operating software no longer supported by IBM. The second 360/195 was removed in October, leaving the configuration shown in Fig 5.2. The MVT batch system is running on the IBM 3032 and on the 3081D. The latter, under control of VM/370, also supports CMS, ELECTRIC, the network and MVS development.

Departure of the second 360/195 left a slight net decrease in processing power (although a substantial increase in memory available to user programs). This will be amply compensated for by the second part of the replacement programme - an ICL Atlas 10. This machine, two and a half to three times a 360/195 in power, was ordered in September and is expected to be delivered in May 1983.

Other enhancements included the addition of eight Memorex 300 Mbyte disc drives to assist in the migration from MVT to MVS.

The MVT batch operating system is now frozen, although the installation of the 3081D involved effort in generating and testing the system for the new configuration. A firm development plan for transfer to MVS (a more modern batch operating system) has been produced, and the extra memory on the 3081D has allowed this plan to begin. Although a version of the target system (MVS SP Version 1 Release 3) is already running in development under VM (a hypervisor which allows other operating systems to run under it), transfer of the user service cannot take place until 1984. This is mainly because of the large number of local facilities that have been implemented in ten years of system development on MVT and which need to be phased out, or replaced by MVS features.

One MVS facility has already been tested - that which enables communication links to be established to a VM system on another machine. The RAL VM system has been connected to the CERN MVS system in this way to enable transfer of jobs and files. This will replace the awkward and somewhat unreliable back-to-back system used in the past. The link to the DESY system (in Hamburg), which also runs MVS, will be treated similarly.

The use of CMS (an interactive system which controls terminals) has continued to increase as users transfer from ELECTRIC (an early terminal system). The number of simultaneously active users is around 50 at peak time, with 70-80 logged in. Response, which had begun to deteriorate, was restored by the installation of the 3081D.

There have been numerous enhancements; three major facilities developed at RAL were as follows:

Other enhancements to CMS include the provision of command files (EXECs) to interface to the JOBSTAT system (which provides details about batch jobs), an extension to the HELP menu facility and an EXEC to allow users to change the blocksize of their disk.

Additional program products have been obtained for use under CMS. CA-SORT/CMS from Computer Associates (CA) is the CMS version of the OS SORT program that has been available to batch users at RAL for many years. Also from CA, the FORTRAN interactive debug program known as SYMBUG has been installed. This supports the FORTRAN G, H and VS-FORTRAN compilers. A fully supported compiler for PASCAL has been obtained from IBM and is at the level of the current ANSI standard. A version of PROLOG, a logical problem-solving language, has been installed.

| | Jobs | % | CPU Hrs | % | CMS AUs | % |
|---|---|---|---|---|---|---|
| Nuclear Physics Board | 241,054 | 41.9 | 7,034 | 73.0 | 3,335 | 22.8 |
| Science Board | 63,216 | 11.0 | 896 | 9.3 | 975 | 6.7 |
| Engineering Board | 33,476 | 5.8 | 712 | 7.4 | 559 | 3.8 |
| Astronomy, Space & Radio Board | 50,222 | 8.7 | 442 | 4.6 | 661 | 4.5 |
| SRC Central Office and Central Computer Operations | 122,279 | 21.3 | 281 | 2.9 | 8,460 | 57.7 |
| Other Research Councils | 34,907 | 6.1 | 159 | 1.6 | 364 | 2.5 |
| External | 29,672 | 5.2 | 118 | 1.2 | 296 | 2.0 |
| Totals | 574,826 | 100.0 | 9,642 | 100 | 14,650 | 100 |
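
The percentage columns in the table follow directly from the raw counts. As a quick arithmetic check, the short Python sketch below recomputes each category's share of jobs, CPU hours and CMS account units from the figures given; the variable and function names are illustrative only and do not correspond to any RAL accounting program.

```python
# Usage figures copied from the table: (jobs, CPU hours, CMS account units).
# The dictionary and function names are hypothetical, chosen for illustration.
USAGE = {
    "Nuclear Physics Board": (241054, 7034, 3335),
    "Science Board": (63216, 896, 975),
    "Engineering Board": (33476, 712, 559),
    "Astronomy, Space & Radio Board": (50222, 442, 661),
    "SRC Central Office and Central Computer Operations": (122279, 281, 8460),
    "Other Research Councils": (34907, 159, 364),
    "External": (29672, 118, 296),
}


def column_totals(usage):
    """Sum each of the three columns across all categories."""
    return tuple(sum(row[i] for row in usage.values()) for i in range(3))


def shares(usage):
    """Return each category's percentage share of every column, to one decimal place."""
    totals = column_totals(usage)
    return {
        name: tuple(round(100.0 * value / total, 1) for value, total in zip(row, totals))
        for name, row in usage.items()
    }


if __name__ == "__main__":
    # Print one line per category: share of jobs, CPU hours and CMS AUs.
    for name, pct in shares(USAGE).items():
        print(f"{name:52s} {pct[0]:5.1f} {pct[1]:5.1f} {pct[2]:5.1f}")
```

Run against the table, the column sums agree with the Totals row, which suggests the figures were transcribed consistently.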

More than 100 workstations of various types are connected to IBM central batch systems, some on direct lines but the majority via SERCnet. The workstations used to be under the control of HASP which is an integral part of the MVT system due to be phased out in 1984. In order to provide continuity for these workstations, they have to be supported by the VM system, under a considerably adapted version of an IBM sub-system known as VNET.

An important feature for users of the RAL batch system is the ability to enquire of the system the status of jobs. Because output of jobs run in the MVT batch is passed from HASP to VNET for processing, a new status mechanism is required. That has been provided by the locally written JOBSTAT system which interfaces to both the HASP spool and the VM spool used by VNET.

After a long development phase, VNET conversion began with a local GEC 2050 at the end of 1981. A number of local workstations followed in quick succession. This provided valuable experience with real users which isolated development tests cannot really simulate. However, by February some serious problems had been identified in both VNET and JOBSTAT; these had to be solved before further conversions were appropriate. They were overcome and between May and October most of the GEC 2050s not scheduled for replacement in the short term were converted to VNET. The complex variety of connections has revealed a succession of minor problems but these are systematically being dealt with, and VNET workstations are in heavy use. Tackling these problems delayed the conversion of workstations involving GEC multi-user minicomputers and Daresbury workstations which began near the end of 1982 along with work on workstations from the miscellaneous group. Some HASP workstations are expected to be superseded by other facilities and will not be moved to VNET.

Computing Division is committed to some form of large-scale data storage device to enable a move away from operator intensive magnetic tape activities. It is expected that eventually a central file store will be accessible at very high speeds from a number of computers on site and to that end a Network Systems Corporation Hyperchannel (Fig 5.3) was purchased for studying as a possible communication mechanism. This is a local area network designed to link computer systems and peripherals at channel speeds over coaxial cable. An experimental link between the 3081 (VM) and a GEC 4090 is being produced, integrating with the X25 level 3 protocols.

Detailed studies of tape and data set usage have been made to help define the required capacity and access characteristics. An operational requirement elicited proposals from a number of manufacturers. None was totally satisfactory and they are being discussed.

The past year has seen the installation of two PRIME computers at RAL: a PRIME 750 to enhance the chip design facility provided by Technology Division, and a PRIME 550 mark II to run an automated office pilot project using PRIME's office automation system (Fig 5.4). This brings the number of SERC PRIMEs to 14, including six at RAL, five of which are connected via PRIME's high-speed ring hardware. Only one, the office automation PRIME, is not connected directly to SERCnet. Most of the PRIMEs have had memory upgrades and East Anglia and Warwick Universities have had 300 Mbyte disk drives installed.

The major event has been installation of revision 18 of Primos, PRIME's operating system. Benefits to users include the introduction of a command procedure language and the adoption of more rational file naming conventions. New facilities include a general software event logging mechanism which supports a performance monitoring package. There is continued development of networking protocols to keep up with evolving standards and the compatibility problems of international interworking. The University of Manchester Institute of Science and Technology (UMIST) archiver is now running on all sites that require it, and a new post command, LPOST, was developed by UMIST to bring the PRIME post system into line with that available on the GEC. The Georgia Tech software tools sub-system had been found to be used mainly for a limited number of its utilities, although heavier use has been made by several aficionados. In particular, the screen editor has proved popular and reflects the need for a generally available screen editor on the PRIMEs. A FORTRAN77 compiler developed by Salford University has been purchased for all SERC PRIME sites and will replace the PRIME supplied FORTRAN77 compiler currently in use.

A completely new suite of programs for providing accounting information on the usage of the MVT system was brought into production, replacing old programs that had undergone many changes over the years. Combined information for category representatives on the usage of MVT time at the various priority levels, CMS account units and ELECTRIC account units is now provided. Consideration is being given to the requirements for policing and accounting systems which will be required with MVS. Although the two 360/195s have now gone, RAL will allocate and account time on the MVT system in 195 hours until it is replaced by MVS. The background jobs of accounting, registering users, allocating resources and reporting to the Boards have continued.

Implementation of Council policy of reducing support at the Multi-user Minicomputer (MUM) sites continued and sites are now accepting a greater level of financial responsibility with a correspondingly greater percentage of the resource available to local university users. Applications for resources on the MUMs have been maintained at a constant level, but with excess resources available, little load shedding between systems has been necessary. Requests for terminals have exceeded the capacity of the terminal pool and the majority of grant applications seeking terminal facilities have required funds to be provided by the Subject Committees.

ISO Draft International Standard GKS has been the major project. Version 7.2 of GKS achieved this status at an ISO meeting in Eindhoven in June due in no small measure to the continued efforts of RAL staff at British Standards and International Standards meetings. RAL staff produced GKS version 7.0 from the decisions taken by ISO at Abingdon and have accepted the task of producing the definitive GKS version 7.2 document. Production of such a large document, which must adhere to ISO rules and be internally self-consistent, is a considerable job.

During the first quarter of the year, a decision was taken that GKS should be made available to all computer systems where graphics was provided by RAL. Since June, a project has been under way, jointly staffed with ICL, to develop a portable GKS version 7.2 implementation. The product of this collaboration will be installed on PERQ, VAX, IBM, GEC and PRIME computers during 1983 and early 1984. One of the main design targets for the implementation is that it should utilise any intelligence in graphics devices.

Some work has gone into promoting and explaining GKS within both academic and commercial arenas; the principal example of this was a two-day tutorial to 50 delegates at Eurographics '82. There was also a heavy involvement in this international conference by providing part of the local organisation committee. As a result of the intensive work on GKS, less has been done on the standard graphics systems than in other years. However, various improvements have been made to all the systems. A revised release of GINO version 2.6 has been distributed to GEC and PRIME systems, as has the Simple plot package and GINO drivers for the Hewlett-Packard 7221 plotter and two new Sigma terminals. GKS version 6.2 drivers for the same plotter and the Cifer 2634 terminal have been installed on the VAX and the Versatec and Printronix drivers improved. On the IBM systems, new drivers for all Sigma terminals and the Calcomp 81 plotter have been written.

The RAL graphics manual was published. Although incomplete at present, it provides a basis for unified documentation of all RAL graphics systems, including GKS for which a part has been reserved. It is hoped to complete and expand this document in 1983.

In March, camera-ready copy was made for volume 13 of the series Molecular Structures and Dimensions, Organic and Organometallic Crystal Structures, Bibliography 1980-81. The 670 pages of FR80 hardcopy paper contained references and cross references to more than 5,200 crystal structures published in the literature. For the first time, there was a 218-page index containing 3,400 chemical structure diagrams. The remaining 470 pages were the same six textual indexes as those made in previous volumes. The additional index contributes a great deal to the usefulness of the book. Chemists can now find structures by browsing through the diagrams which convey information about the structures much more quickly than words.

In May, a reference set of 22 textual microfiche was made containing information in several different orders concerning the more than 35,000 structures now stored in the Cambridge Crystallographic Database. They have eye readable titles for greater ease of use.

A forms design language has been implemented on the PRIME to enable form changes to be made more easily. Output is produced on a screen during the design phase and can be sent to the FR80 for final processing.

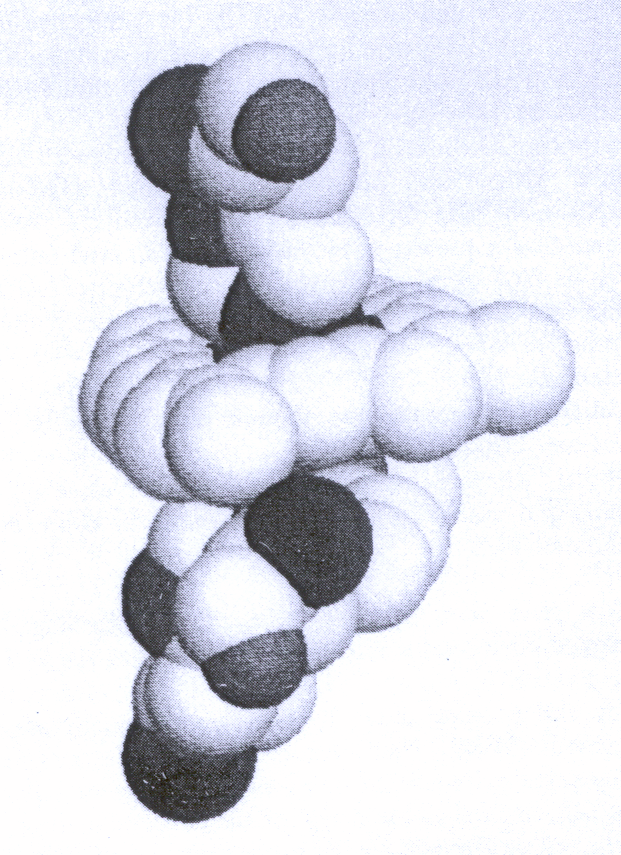

The vector drawing program PLUTO78 has been enhanced and mounted on the GEC computers. Users on the SERC computer network can now draw and redraw molecules at low resolution on a visual display unit until they are satisfied with the orientation and type of drawing. They can then send it through the network for high resolution output on the FR80 microfilm recorder. New options include use of colour to make 35 mm slides, use of four different grey scales on hard copy for inclusion with reports and a changed stereo option to make film which fits directly into a cheap plastic stereo viewer designed by the Open University. There have also been experiments with ways of producing shaded space filling molecular drawings (Fig 5.6).

The Database Section set up in 1981 has been organised into subsections to reflect the different types of work. The subsections cover scientific systems, RAL administrative systems, Central Office administrative systems and office automation. Use of techniques for both analysis and implementation of database systems is orthogonal to this structure.

The section has increased its activities. In the area of work being done for (and in conjunction with) staff at Central Office, the existing system for research grants has been developed further and performance has been improved. In particular, joint work with the Electronic Data Processing Unit at Swindon has resulted in a new set of fewer forms for input by administrative staff to the grants system. Design of the new system for handling awards to students is proceeding and prototyping of the system to ensure both correctness and user acceptance is just starting. A management accounting system has been introduced into Central Office and after an initial phase involving Establishment and Organisation Division only, is being extended as a prototype to the whole of Central Office. After six months the specification for the definitive system will be laid down. A pilot project to provide more scientific information about research involving staff of Science Division has begun. The project is named ASCENDA and has already met enthusiastic response from Science Board and from committee secretaries. The project is to be extended, within Science Division, and after some pilot running and evaluation might be extended to cover the total requirement.

On the RAL site, the library project is proceeding. A prototype bibliographic retrieval system covering the years 1981 and 1982 for both RAL books and reports has been set up and is being tested by library staff and by users throughout SERC. User response is enthusiastic. The bibliographic system is to be extended to take in earlier years and, in parallel, the design of the circulation control system will be finalised and this component of the system implemented. The linking of two subsystems for bibliographic retrieval and circulation control is novel and has attracted interest in information retrieval circles. A project has been started with Engineering, Building and Works Division to produce for them systems to cover plant maintenance, running contracts and job progress. All these projects are proceeding well, in various phases from prototype system to full production. The project has required particular attention to user requirement in providing a friendly user interface and has also involved output in graphic form. The section has also given advice and assistance to staff from Computing Division who are using database techniques for computer housekeeping functions.

Staff from the section have been involved in the ECFA (European Committee for Future Accelerators) working group 11 concerned with database facilities for future accelerators in Europe. This participation has meant that the problems of handling high-energy physics data have been appreciated by Database section and, it is hoped, some of the database techniques used in other areas of work have been considered by the high-energy physics community. Work has continued on the ASR Board projects. In particular a lot of effort has gone into the World Data Centre, not only to make its data storage and retrieval activities more efficient, but also to make computer-readable output from the Centre available to users. The Centre links, in both the logical scientific and the physical communications senses, to other ASR Board work, in particular to the EISCAT (European Incoherent Scatter) project.

Database section also provides, with what little capacity is left having satisfied the requirements of the funding sources, a general database advice service to users of the computing facilities. This is useful in avoiding duplication of data and effort among users writing their own data handling software. The result is more efficient data access through well-designed data structures and use of optimal software techniques for data access. The section has reduced the number of supported database software packages to a chosen few, allowing the section to provide maximum database application support with minimal overheads in system support. The supported packages are machine-independent and cover the range of types of system from relational through network to bibliographic. The section has also been keeping abreast of developments in database hardware: the emergence of specialist database machines has been monitored.

Database section is concerned with office automation projects, because of the strong link between office automation and database system technology stretching from the user interface through to the considerations of data storage and retrieval. There are three projects in progress:

These three pilot projects should provide a great deal of experience of both the technical and human considerations in office automation technology. They should provide the basis for a specification of the ideal system. The inter-connection using local area network and wide area networks, and the interaction of office automation systems with data processing and database systems are areas of research which should provide products of use both to the academic community and to UK industry.

The SERC/NERC network has continued to grow at a rapid rate. New GEC 4000 series machines have been installed as packet switching exchanges (PSEs) at Edinburgh, Cambridge, University of London Computer Centre and CERN. The number of host machines connected to the network has also continued to increase and is now about 115. Those directly connected include the central IBM complex and substantial numbers of GEC 4000s, PRIMEs, VAXs, PDP11s and GEC 2050s. Many others are connected to SERCnet via inter-network 'gateways'. In particular there are gateways between SERCnet and PSS, SERCnet and ARPANET, and SERCnet and DEC 10 systems such as those at York, Edinburgh and Bangor.

The network is now considered to be PSS-compatible in terms of the protocols it uses up to X25 level 3. Host machines use UK interim standard protocols such as Yellow Book Transport Service and Blue Book File Transfer protocol (FTP). Some machines have implementations of the Grey Book JNT Mail protocol. Implementations of the Red Book Job Transfer and Manipulation Protocol are being developed.

Most host machines support the CCITT X29 protocol for terminal-type activities and often in addition the Transport Service compatible variant of this known as TS29. Two private protocols, packetized HASP for remote job entry and ITP for interactive terminals, are still in large scale use over the network. It is envisaged that these will be superseded over a long period by JTMP and X29/TS29 respectively.
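
The protocol relationships described in the two preceding paragraphs can be summarised as a pair of lookup tables. The Python sketch below is purely illustrative: the names `COLOURED_BOOKS`, `PLANNED_SUCCESSORS` and `successors` are hypothetical, and the contents simply restate what the text says about which interim standard each book defines and which protocols are expected to supersede the two private ones.

```python
# Coloured-book names and the UK interim protocols they define, as named in the text.
COLOURED_BOOKS = {
    "Yellow Book": "Transport Service",
    "Blue Book": "File Transfer Protocol (FTP)",
    "Grey Book": "JNT Mail",
    "Red Book": "Job Transfer and Manipulation Protocol (JTMP)",
}

# Private protocols still in large-scale use, mapped to the protocols
# that the text envisages superseding them over a long period.
PLANNED_SUCCESSORS = {
    "packetized HASP": ("JTMP",),   # remote job entry
    "ITP": ("X29", "TS29"),         # interactive terminals
}


def successors(protocol):
    """Return the protocols expected to replace `protocol`, or an empty tuple if none is planned."""
    return PLANNED_SUCCESSORS.get(protocol, ())


if __name__ == "__main__":
    for old in PLANNED_SUCCESSORS:
        print(f"{old} -> {' / '.join(successors(old))}")
```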

For two main reasons, it has become clear that a move to manufacturer-supported software in the GEC PSEs would be desirable. First, it would ensure long-term maintenance and further developments to keep abreast of possible changes in the CCITT protocols without permanently tying up RAL resources. Second, the locally-written software does not have the network management tools needed to cope with possible further large-scale network growth in the future. Work is already in progress to see whether the GEC-supplied product can be made to interwork with the existing SERC software in different PSEs within the network. This will be an essential requirement for bringing the new software into service in a phased manner.

Another important development is the arrival on the scene of the JNT PAD, which is primarily a small machine through which clusters of interactive terminals can be connected to the network. Evaluation of this equipment is currently in progress at RAL.

Finally, a transition exercise has been in progress to move FTP implementations from the original FTP77 definition of the protocol to the Revised FTP80 definition. This has proved to be a fairly difficult and time-consuming task but it is now nearing completion.

With PSS-compatibility now effectively achieved up to level 3, the focus is likely to shift to level 4 (Transport level). An international standard for level 4 is now taking firm shape and a draft specification is being finalised in ISO. The European Computer Manufacturers Association (ECMA) has also issued a level 4 specification which is in line with the ISO work.

The UK academic community has adopted the Yellow Book Transport Service as an interim standard, but it is becoming increasingly clear that this will not have a long-term future. Studies need to begin on how to move over to the new standard. Such work will be started in British Telecom study groups as well as in SERC committees and it is intended that RAL will continue to participate fully in such activities.

International standardisation of the high level protocols (file transfer, job transfer, mail) still seems to be distant and the UK interim protocols for these activities therefore seem to be assured of a fairly long life.

Preceding sections have dealt with wide area X25 networks. This is now an established technology and satisfies current needs reasonably well. The relatively low speeds that can be achieved are a limiting factor, however, and the use of high speed local area networks is a way of overcoming this factor within a limited geographical area. For instance local area networks on the RAL site, with data rates in the megabit range, would make considerable sense.

There are four possible approaches:

It has been decided to install a number of Cambridge Rings. This decision is based on a number of factors:

There are plans to install four Rings initially:

A large amount of trunking and cabling has been necessary, but this should be completed early in 1983. Further Rings might be installed.

Acorn Orbis will produce Ring interfaces for GEC, PRIME and DEC equipment. The PERQ interface is being engineered by a manufacturer from an RAL design. The IBM interface will be based on an interface from AUSCOM and will make use of work already done in the UNIVERSE project. It is hoped that JNT contracts with industry will result in a Ring PAD and a Ring-to-X25 gateway. All this equipment should become available in 1983. Local Area Networks should therefore become fully operational in late 1983 and 1984. Within a few years it is anticipated that even higher speeds will become available, possibly up to 100 Mbits per second.

The Computer Board and SERC fund the Joint Network Team (JNT), which is based at RAL and is responsible for co-ordinating the development of networking throughout the academic community. Its main activities are:

The community has adopted a set of protocols known as the coloured books for applications ranging from terminal handling to job submission. The JNT acts as custodian for the interpretation of these definitions. Projects are in progress to implement the protocols on a number of different machines, either under JNT sponsorship or on the initiative of individual computer centres. Important opportunities to secure manufacturers' support for the protocols occur when new systems are procured and the JNT participates extensively in negotiations with manufacturers to achieve that objective.

Whilst recognising that products based on a number of high-speed local network technologies will shortly be on the market, the JNT has concentrated its resources on the Cambridge Ring. With collaboration from several sources, including staff of SERC's Distributed Computing Systems SPP, the JNT has produced a specification of standards for internal ring operations and external interfaces. This forms the basis of a major procurement of ring components. In addition, a development project is being funded to produce intelligent high performance adaptors for attaching several types of machines to Cambridge Rings.

As secretariat to the academic community's Network Management Committee, the JNT has provided material as input to the Committee's recommendations for unified wide-area networking. It has now been agreed that existing networking services used by the community are to be brought together under common management. A small network executive is to be established at RAL to co-ordinate this operation under a Director of Networking.

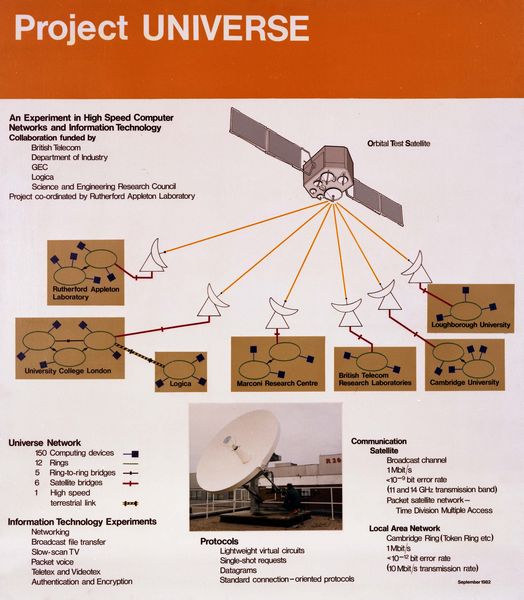

The UNIVERSE Project is a co-operative investigation into distributed computing and high bandwidth networking. In addition to Rutherford Appleton Laboratory, the participants are Cambridge University, Loughborough University and University College, London, from the academic sector, and British Telecom, GEC-Marconi and Logica from the industrial sector. The project is supported by the Department of Industry; RAL provides the project management. The project centres around the construction and use of a high bandwidth communication network. For local area communication, each site has one or more Cambridge Ring networks. For wide area communication, the first six sites in the above list are linked together by a broadcast satellite channel, using the OTS satellite. The seventh site (at Logica) is connected to University College by a terrestrial link of comparable performance. Project effort this year has been concentrated in two areas: the assembly and commissioning of the network infrastructure, and the planning of the first phase of the experimental programme.

The UNIVERSE experimental group at RAL played a key role in assembling the necessary equipment and in programming the computers which link the satellite Earth terminals to the Cambridge Rings. This involved a GEC 4000 series machine commanding a microprocessor-based interface machine, originally developed for the STELLA project. Initial communication was achieved between the RAL ring and the satellite early in the year. Currently five of the six sites are connected operationally by the satellite network.

Experiments are planned to cover a wide range of applications, including distributed computing, voice communication and slow scan television. Preliminary experiments on the remote access to a distributed filing system have been carried out between the Cambridge University Group and the Group at RAL, and more than a dozen different experiments are in progress or being planned in detail by various different groupings of the participants.

The next year is expected to bring a stable network infrastructure. The consequence will be a substantial increase in the level of experimental and demonstrational activity, giving new insight into the uses of high bandwidth communication between computers.