1989 has seen exciting developments in the range of advanced computing services provided at the Atlas Centre. As a result of a joint study agreement with IBM the mainframe service has been upgraded to a powerful 3090/600E supercomputer with six processors and six vector facilities. Overall, the power of the six processor IBM machine is comparable to the four processor Cray X-MP/48 operated at the Atlas Centre on behalf of the Research Councils.

To meet the increasing expectations of supercomputer users for better networking facilities, two trial high speed connections have been installed: one to the Council's Daresbury Laboratory and one to a major user at Imperial College. These are intended to be the forerunners of higher speed and more functional networking to the user community.

A facility for the routine production of animated graphics sequences on videotape is now in production and adds to the impact and the scientific interpretation of the large volumes of data generated on supercomputers.

The installation of the new IBM 3090/600E has doubled the computing power available at the Atlas Centre for advanced scientific computing and, more importantly, has allowed a large increase in the memory size available to users. The Cray X-MP/48 is limited to 8 Mword of 64 bit memory (64 Mbyte) but the 3090 has 256 Mbyte of main memory and 1 Gbyte of extended memory for fast paging. Users can specify a job size of up to 999 Mbyte under the VM operating system.

As part of the joint study agreement with IBM a small number of strategic users have been selected who have scientific problems that need the parallel computing power and large memory capability of the 600E. The projects selected are:

The IBM 3090 is also used extensively by the UK particle physics community for data analysis from CERN and other particle accelerator laboratories. The commissioning of the LEP accelerator at CERN in summer 1989 will lead to a further increase in this use.

The use of the Cray X-MP/48 by scientists and engineers supported by the Research Councils has continued reliably through the year. A book of scientific highlights was published in January 1989 and in March a meeting was held to survey the achievements and future needs in scientific supercomputing. The Cray X-MP/48 and IBM 3090/600E still provide for state of the art supercomputing but work in many scientific disciplines, particularly drug design and global environmental modelling, can profitably make use of machines ten to one hundred times more powerful.

A shallow sea model used in oceanographic research has been structured to make best use of the four processor multi-tasking on the Cray X-MP. The resulting sustained performance of 558 Mflop is one of the fastest recorded for real user applications. However, a measure of the pace of progress in supercomputing is that the same code runs at 1.7 Gflop on an eight processor Cray Y-MP in the USA.

The IBM 3090 model 200E was upgraded to a model 600E at the end of May increasing its performance to about 80 Mip. The 600E has six processors, each with a Vector Facility, 256 Mbyte of main memory, 1024 Mbyte of expanded storage and 64 channels. An additional 20 Gbyte of 3380 disk space and six 3480 tape drives were installed as part of the joint study contract upgrade.

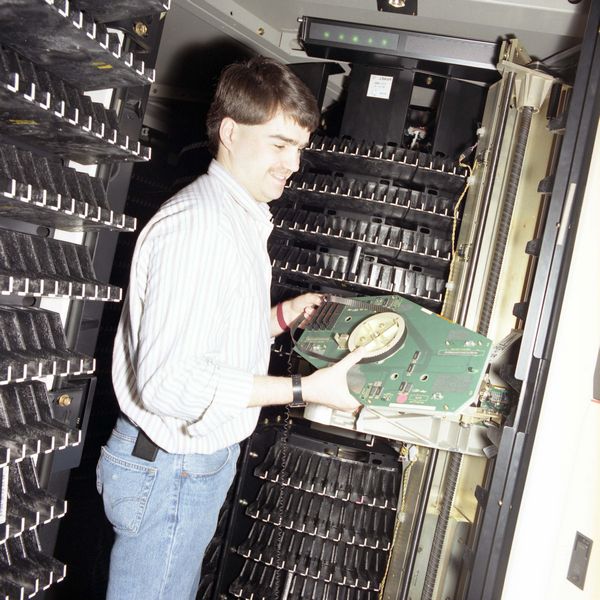

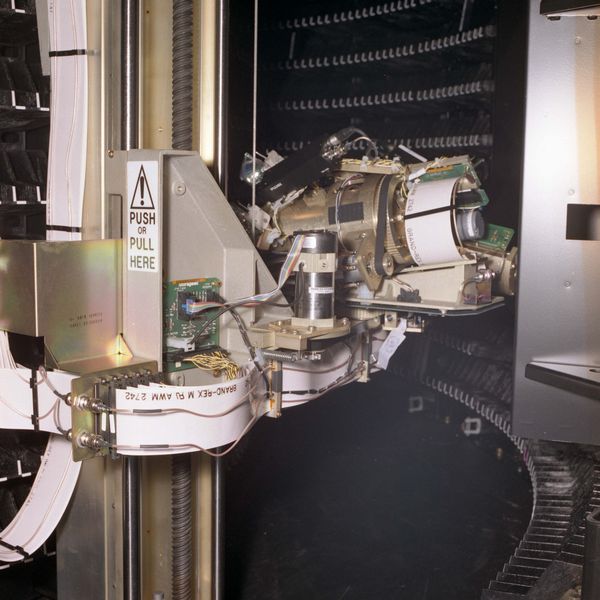

A robotic system for handling 3480 cartridge tapes automatically has been supplied by StorageTek. The STK 4400 includes a Library Storage Module with a capacity of 5,500 tapes (approximately 1 Tbyte of data), 2 tape drive units, each with 4 transports, and a Library Management Unit. A Host Software Component, which will run under VM/XA on the 3090, will provide access to the STK 4400 from the VM, MVS and Cray operating systems.

Other major hardware installed this year includes:

The migration of the VM system to the XA (extended architecture) version was completed by the installation of CMS release 5.5. This has enabled several users to run with virtual machines and thus program memory sizes of several hundreds of megabytes. All the software and system modifications which form the HEPVM suite were reworked for XA and the collaboration with CERN and other HEP sites has continued to prove beneficial for all concerned.

The RAL Tape Management System (TMS) was put into production and is being interfaced to all three mainframe operating systems and to the ACS. TMS has also been installed at IN2P3 in Lyon and at CERN where it forms part of the FATMEN project. Other HEPVM sites are evaluating it.

Version 5.0 of UNICOS, Cray's version of the Unix operating system, has been installed as a guest operating system on the Cray for extensive testing. Once it has been determined that it provides equivalent functionality to COS, particularly in the area of dataset archiving, the process of migration from COS to UNICOS will begin.

The Amdahl 4705 communications controller has been replaced by the substantially faster IBM 3745. This has necessitated the installation of new versions of the Network Control Program and of the Network Packet Switching Interface, which provides access to the 3090 from the JANET network.

IBM's version of TCP/IP was installed under VM on the 3090 to provide connectivity to UNIX workstations and PCs via the RAL ethernet. An IBM 8232 LAN channel station provides the physical connection between the ethernet and the 3090. The service provides terminal and file transfer capabilities and is expected to be used for full-screen terminal access to CMS and for backing-up Unix filestores.

Two trial high speed TCP/IP links have been installed to provide a greater speed and functionality than is available over JANET. A 256 kbit/sec connection to SERC Daresbury Laboratory links the two site ethernets in a transparent fashion via Vitalink bridges. A file transfer rate of 29 kbyte/sec has been achieved out of a nominal 32 kbyte/sec bandwidth. The other line is to an individual user at Imperial College who requires high volume data transfer between the RAL Cray and IBM systems and a local cluster of high performance workstations.
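The Daresbury link figures quoted above can be checked with a short calculation. The sketch below is only illustrative: the function names are hypothetical, and it assumes the nominal bandwidth is simply the line rate divided by 8 bits per byte, ignoring any protocol overhead.

```python
def nominal_kbyte_per_sec(line_kbit_per_sec: float) -> float:
    """Convert a line rate in kbit/sec to kbyte/sec (8 bits per byte)."""
    return line_kbit_per_sec / 8


def utilisation(achieved_kbyte_per_sec: float, nominal: float) -> float:
    """Fraction of the nominal bandwidth actually achieved."""
    return achieved_kbyte_per_sec / nominal


# Figures taken from the text: a 256 kbit/sec line, 29 kbyte/sec achieved.
nominal = nominal_kbyte_per_sec(256)
share = utilisation(29, nominal)

print(f"nominal bandwidth: {nominal:.0f} kbyte/sec")
print(f"utilisation: {share:.0%}")
```

On these figures the file transfer uses roughly nine tenths of the nominal capacity of the line.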

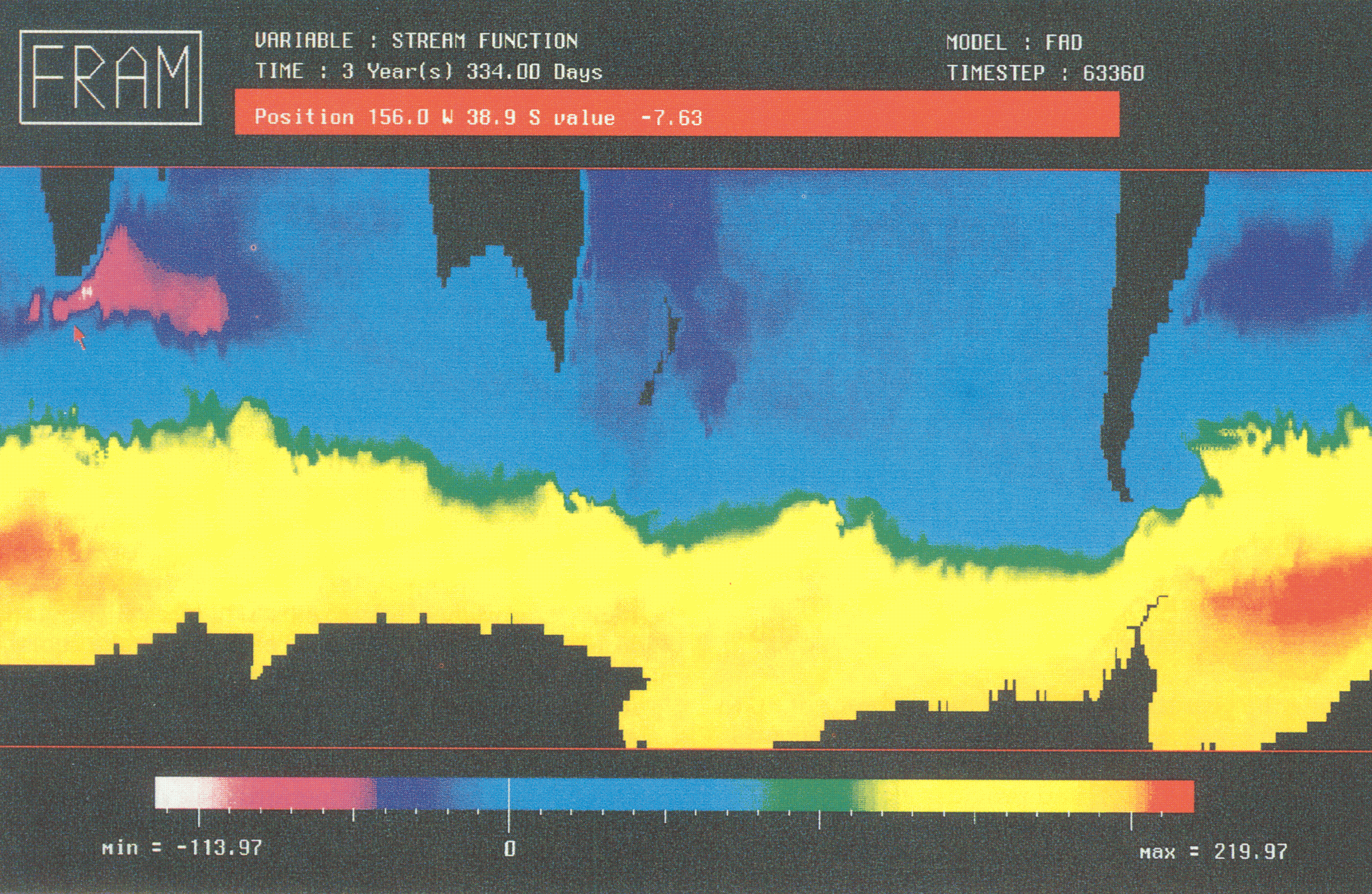

A demonstration of output from the RAL Video Output Facility was shown at the Eurographics UK'89 conference in March and since then a steady stream of users has made use of it. Several computational fluid dynamics sequences have been produced for St Andrews University. The Fine Resolution Antarctic Model (FRAM) project (Fig 5.2) running on the Cray has produced a video of the output of their model to date. This sequence, together with a demonstration of the model on the Silicon Graphics workstation at Atlas, has recently been included in the Open University oceanography course.

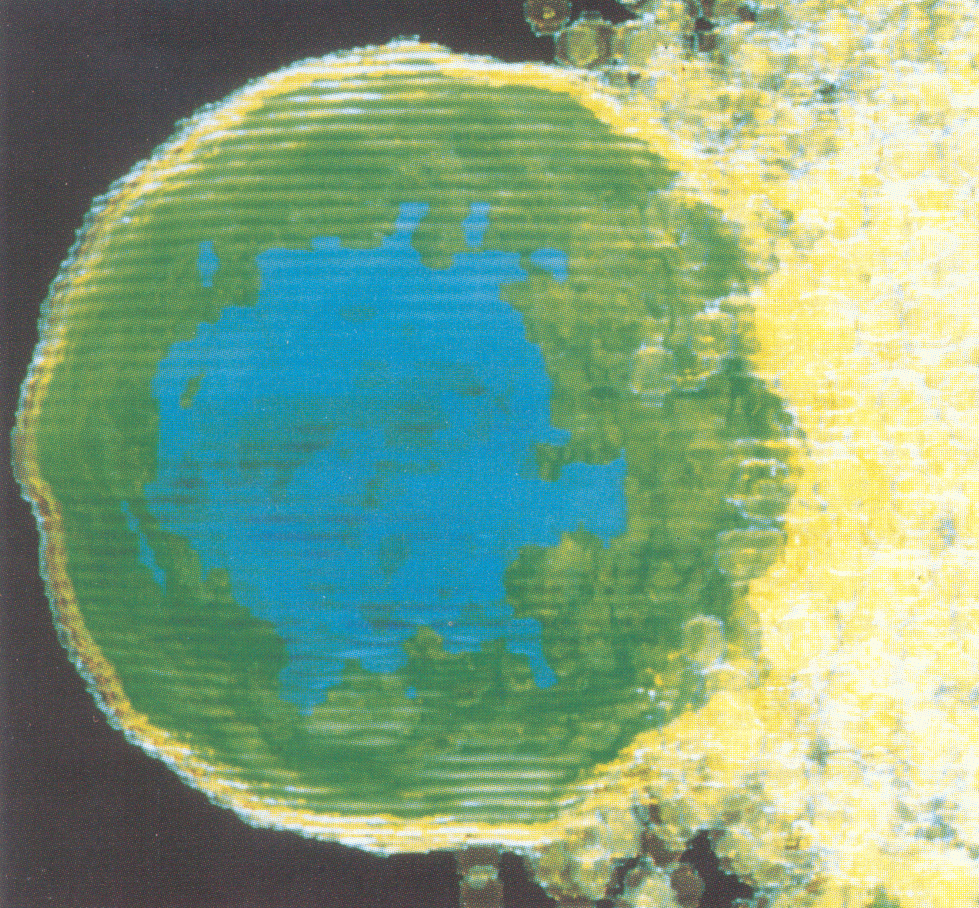

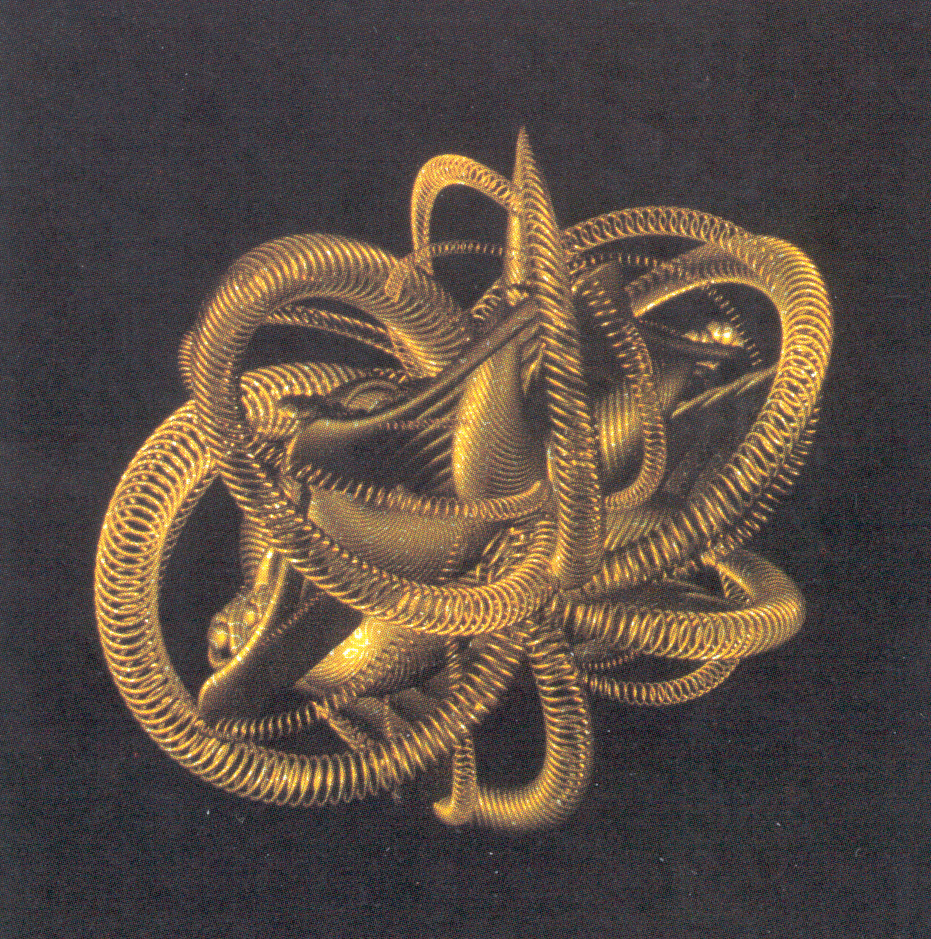

As a result of a collaboration with IBM, a very powerful solid modelling system known as WINSOM (Fig 5.3) is now available on the IBM 3090 at the Atlas Centre, further extending the range of software provided for the presentation and visualization of scientific results.

The installation of UNIRAS on the majority of computers in the UK academic environment has addressed a long-felt requirement for high quality graphics. SERC, in common with the other Research Councils, most universities and many polytechnics, has installed this comprehensive set of packages which contains facilities for the display of scientific data in many different forms.

The number of users registered to use the RAL Office System has increased to about 1000 over the year. The major growth has been at SERC Swindon Office.

Approximately half the staff at Swindon are registered PROFS users. The number of PCs in use has increased markedly. Most Swindon users have PCs. The number of transactions carried out daily has increased from 3626 in January to 4426 in October. At the end of the year it will be at least 5000, an annual increase of 38%. The average number of users active during peak times of the day is at least 200. The main functions used are:

| Type of Transaction | Usage (%) |
|---|---|
| Electronic Mail | 52 |
| PROFS Diary | 19 |
| PROFS Documents | 14 |
| PROFS Directory | 5 |
| Other Functions | 10 |
| TOTAL | 100 |
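The growth and usage figures for the Office System can be verified with a few lines of arithmetic. This is a minimal sketch using only the numbers quoted above; the helper name `percent_increase` and the variable names are hypothetical.

```python
def percent_increase(start: float, end: float) -> float:
    """Percentage growth from start to end."""
    return (end - start) / start * 100


# Daily transaction counts quoted in the text.
january, october, year_end = 3626, 4426, 5000

growth_to_october = percent_increase(january, october)
growth_to_year_end = percent_increase(january, year_end)

# Usage shares (%) by type of transaction, from the table.
usage = {
    "Electronic Mail": 52,
    "PROFS Diary": 19,
    "PROFS Documents": 14,
    "PROFS Directory": 5,
    "Other Functions": 10,
}

print(f"January to October: {growth_to_october:.0f}%")
print(f"January to year end: {growth_to_year_end:.0f}%")
print(f"Sum of usage shares: {sum(usage.values())}%")
```

The projected year-end figure of 5000 transactions a day corresponds to the 38% annual increase stated above, and the usage shares sum to 100%.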

Following the production use of PROFS at RAL and Swindon Office, a pilot project has been started at Daresbury Laboratory.

During the year the PROFS system was brought up to the current release level, ready for the move towards OFFICEVISION which is an evolutionary development of PROFS and provides close integration of office system facilities across the workstation (PS/2) and mainframe.

The new Library database system is being implemented on the IBM 3090 using STATUS (for the bibliographic information) and SQL/DS (for Library housekeeping). General users will see catalogue and loan information. This system replaces that which ran on Prime E for eight years.

The Grants Decision Support system has been set up and is being used by a community of PROFS users at Swindon Office. The system utilises a copy of data from the Transaction Processing Grants System running on JACS (Joint Administrative Computer System). The advantage of the system is that it provides ad hoc query and reporting facilities and a method for downloading information from the database to systems running on personal computers.

A prototype heterogeneous distributed database containing information about current research projects from 8 countries (EXIRPTS) has been implemented. The feasibility of retrieving data from the catalogue, and using a high-level query/response protocol to obtain over the network detailed information from the originating country, has been demonstrated to the Presidents of Research Councils who initiated the project.

Support for the World Data Centre and Geophysical Data Facility continues to be a major service. Growth in the use of both facilities continues to justify this effort. Advice and assistance for many scientific projects where data handling is of importance continues to be a major item in the workload.

R-EXEC, the RAL-developed relational data handling system for scientists, has been developed further and a new version has been released. The system is now used on IBM (VM and MVS), VAX (VMS) and PC (DOS) systems. It was developed originally under Unix.

During the year the Unit has received funding for two major new networking initiatives in the UK academic community.

The first initiative covers a major upgrade of the JANET network which interconnects university, polytechnic and Research Council sites. The aim of the initiative is to create JANET MK II, a 2 Mbit/sec switched network serving all universities and major SERC and NERC laboratories. Initial performance tests are promising and it is expected that the first high performance links to sites will be available early in 1990. A phased upgrading of the other site access links and the JANET trunk network is planned over a four year period. This upgrade is seen as a stepping stone towards the development of a much higher bandwidth wide-area network, dubbed Super JANET, perhaps offering performance in excess of 100 Mbit/sec.

The second initiative is aimed at upgrading the performance of the university campus LANs. A programme of development and procurement activities over the next four years is aimed at providing each university with a backbone LAN operating at 100 Mbit/sec or greater. Initially the backbone LANs will interconnect lower performance LANs serving departments and other groups on the campus. The next phase will allow the direct connection of large host systems and campus servers followed by the direct connection of workstations. Interfaces to connect the backbone LANs to a high performance wide-area network are included in the later stages of the programme and this will provide the foundation for a community-wide high performance backbone network.

At the international level the implementation phase of the COSINE project commenced during the year. This is a three year project involving 19 European countries and the European Commission aimed at providing a coherent data communications infrastructure for the European research community. The community involved includes academic and industrial research workers. Funding for the implementation phase was approved during the year. UK participation in this project will be funded jointly by the Computer Board, DTI, ESRC, NERC and SERC. A major part of the project is the provision of IXI, a pan-European X.25 network. This is expected to start a pilot service in February 1990 and will interconnect national networks in 18 European countries.