The vector supercomputing service at RAL, operated on behalf of all the Research Councils, has moved to a new Cray Y-MP8I/8128 supercomputer with a peak performance of almost 3 Gflop and 1 Gbyte of fast memory.

The need for fast scalar computing continues to grow, and a two-processor VAX computer with the new Digital ALPHA processors offers nearly 400 Mflop of scalar processing at a very competitive price/performance ratio.

The bulk file storage capacity at the Atlas Centre has more than doubled with the addition of a second StorageTek STK4400 tape silo and the use of higher-capacity tapes. The Virtual Tape Protocol makes this storage capacity available to Unix workstations on the JANET IP Service.

The national networking capability within the UK is set to make a major leap forward with the placement of contracts for the provision of the SuperJANET service early in 1993.

For some years now the Cray X-MP service at RAL has been severely overloaded with peer-reviewed work demanding the power of central supercomputers. As a result of a procurement process begun late in 1991, a Cray Y-MP8I/8128 was ordered for installation at the Atlas Centre. The machine has eight processors, each with a clock cycle time of 6 nsec and a peak performance of 333 Mflop, giving a total peak performance of 2.7 Gflop, and a memory of 128 Mword (1 Gbyte). The initial installation has no SSD (solid state device for high-speed storage) but has 100 Gbyte of disk space, split between fast DD60 disk drives (20 Mbyte/sec) and slower DD61 disk drives (3 Mbyte/sec).
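
The peak and memory figures quoted above follow from simple arithmetic, assuming (as for the Y-MP design generally) that each processor can deliver two floating-point results per clock cycle, one from the add pipeline and one from the multiply pipeline, and that a Cray word is 64 bits (8 bytes):

$$
f = \frac{1}{6\ \text{ns}} \approx 166.7\ \text{MHz}, \qquad
P_{\text{cpu}} = 2 \times 166.7\ \text{MHz} \approx 333\ \text{Mflop/s}, \qquad
P_{\text{peak}} = 8 \times 333\ \text{Mflop/s} \approx 2.7\ \text{Gflop/s}
$$

$$
128\ \text{Mword} \times 8\ \text{byte/word} = 1024\ \text{Mbyte} = 1\ \text{Gbyte}
$$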

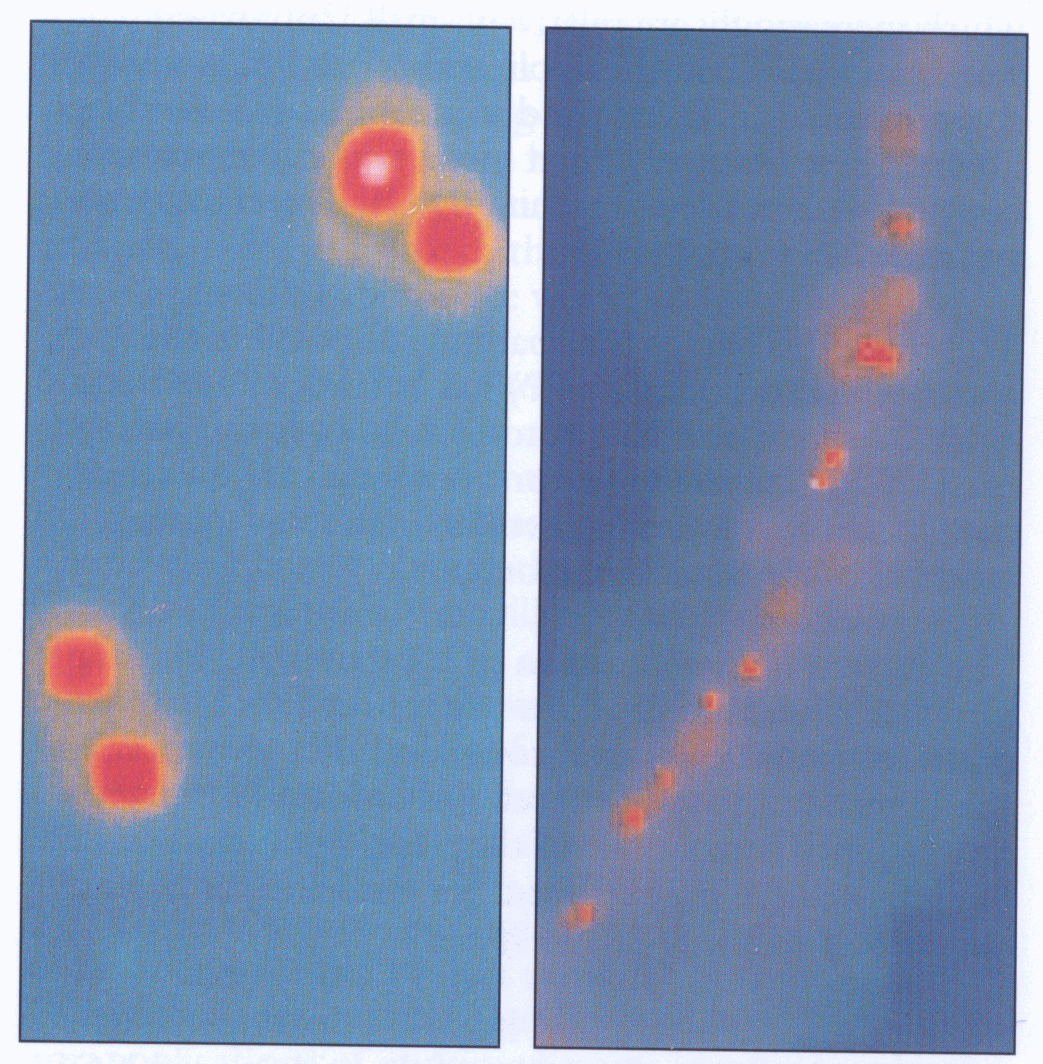

The Y-MP8I was delivered to the Atlas Centre on 6 August (Fig 5.1), first powered up on 8 August, and software installation began on 11 August. A formal 14-day acceptance trial from 17 to 31 August allowed some users to run benchmark codes on real scientific research problems; some of the results from simulations of star formation by the Cardiff group are shown in Fig 5.2. The migration of the user workload from the X-MP to the Y-MP went very smoothly, and the Y-MP became the main production service on 5 October. The X-MP remained as a fall-back service for a few weeks and was finally turned off on 7 November.

Demand for the new Y-MP service is already growing close to the limit of what is available (see Facts and Figures) and NERC in particular have large environmental modelling programs that will consume the whole of the NERC share of the Y-MP.

The tender for the Cray Y-MP8I included new application packages and library modules which substantially enhance the capability of the Cray service.

Cray's Unichem application software for chemistry is a highly integrated package with a graphical front-end running on Silicon Graphics workstations, which may be located anywhere on the JANET IP Service (JIPS), and a choice of structure and energy codes, including a new density functional module, DGAUSS.

The Multi Purpose Graphic Software (MPGS) is aimed mainly at the engineering community working with finite element models and is again a distributed application with a Silicon Graphics workstation front-end. The Cray Visualisation Toolkit (CVT) library enables developers to port X Window System and Motif applications to the Y-MP very quickly.

This year has seen the installation of a major new computer system from Digital in the Central Computing Department at RAL. The system is based on Digital's recently announced ALPHA microprocessor with its advanced superscalar architecture. With a clock cycle time of just 5 nsec, ALPHA is one of the fastest scalar processors on the market today; its cycle time is actually shorter than that of the Cray Y-MP8I (6 nsec). Deployment of the ALPHA-based service will enable SERC to meet the forecast demand from its scientific community for an increase in scalar processing capability to three to four times the capacity of the current IBM 3090. The ALPHA currently runs Digital's VMS operating system and is also capable of running OSF/1, the emerging Unix standard operating system. VAX/VMS is already in widespread use throughout the SERC community, which will benefit from extended central support in this area.

The Digital system has a connection to the StorageTek automatic cartridge store and has connectivity to all Higher Education Institutions served by the JANET network. It is available for use by the same community as is currently served by the central IBM system.

There is a growing need for large scientific applications to run on the most cost-effective hardware platforms, which are increasingly relatively small Unix-based workstations. When these applications need to access shared data, or data archived in a robot-controlled tape library, copying the data manually between machines with different operating systems is usually very inconvenient.

To bridge this gap as transparently as possible, the Virtual Tape Protocol (VTP) has been developed at the Atlas Centre. VTP runs over the widely used TCP/IP networking protocols and can be used over local or wide area networks, in principle giving worldwide access to tape storage.

The current implementation of VTP provides a C language interface, giving full access to tape read, write and positioning operations, and a 'tape' command giving access at the Unix command-line level. Other Unix commands can be piped into or out of the 'tape' command, so that the remote tape can be used, for instance, for backup and restore of the local file system.
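
The VTP wire format is not described here, so the following Python sketch only illustrates the general idea of carrying tape semantics over a TCP byte stream. The framing is a hypothetical assumption: each tape block travels as a 4-byte length followed by the data, and a zero-length frame plays the role of a tape mark. A byte stream piped in from a command such as `tar` would be split into blocks on the way out and reassembled on the way back.

```python
import socket
import struct

# Hypothetical framing (NOT the real VTP wire format): every tape block is sent
# as a 4-byte big-endian length followed by the data; a zero-length frame
# stands for a tape mark, i.e. the end of one tape file.
TAPE_MARK = b""


def send_block(sock: socket.socket, block: bytes) -> None:
    sock.sendall(struct.pack(">I", len(block)) + block)


def recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes; TCP may deliver a frame in several pieces."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("connection closed in the middle of a frame")
        buf.extend(chunk)
    return bytes(buf)


def recv_block(sock: socket.socket) -> bytes:
    (length,) = struct.unpack(">I", recv_exact(sock, 4))
    return recv_exact(sock, length)  # length 0 yields b"", i.e. TAPE_MARK


def write_tape_file(sock: socket.socket, data: bytes, block_size: int = 32768) -> None:
    """Split a byte stream (e.g. the output of tar) into blocks, then write a tape mark."""
    for start in range(0, len(data), block_size):
        send_block(sock, data[start:start + block_size])
    send_block(sock, TAPE_MARK)


def read_tape_file(sock: socket.socket) -> bytes:
    """Read blocks until the next tape mark and reassemble the original stream."""
    parts = []
    while (block := recv_block(sock)) != TAPE_MARK:
        parts.append(block)
    return b"".join(parts)
```

In a restore, the reassembled bytes would simply be written to standard output and piped into a command such as `tar xf -`.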

An input queue scheduler has been developed that greatly increases the flexibility of the widely used NQS (Network Queuing System) software, which manages batch queues on the Cray service and on other Unix machines. The input queue scheduler works by routing all incoming work to dummy queues that are never allowed to run. It then regularly interrogates the executing batch queues and, according to the workload, the time of day and other configurable requirements, moves work from the dummy queues to real NQS queues, where it starts normally. Users still have the normal NQS queue management and browsing commands, and the system can be configured to treat all users equally, to prevent users from hogging queues with too many jobs, or to give users high priority if their status and allocations require it.
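
The scheduling idea can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not the Atlas Centre code: the dummy queue and the real NQS queues are plain lists, the class and parameter names are hypothetical, and the policy (a day/night job limit, a per-user cap and a job priority) is just one example of the configurable requirements described above.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(eq=False)  # eq=False: jobs compare by identity, so remove() is exact
class Job:
    user: str
    name: str
    priority: int = 0  # higher is released first (e.g. from status/allocation)


@dataclass
class Policy:
    day_limit: int       # max jobs in the real queues during the working day
    night_limit: int     # larger limit outside working hours
    per_user_limit: int  # stops one user hogging the real queues
    day_hours: range = range(8, 18)

    def running_limit(self, hour: int) -> int:
        return self.day_limit if hour in self.day_hours else self.night_limit


class InputQueueScheduler:
    def __init__(self, policy: Policy):
        self.policy = policy
        self.held: list[Job] = []     # the dummy queue: work here never runs
        self.running: list[Job] = []  # stands in for the real NQS queues

    def submit(self, job: Job) -> None:
        self.held.append(job)  # all input work is routed to the dummy queue

    def finish(self, job: Job) -> None:
        self.running.remove(job)

    def tick(self, hour: int) -> list[Job]:
        """One polling cycle: inspect the real queues, then release held work."""
        limit = self.policy.running_limit(hour)
        per_user = Counter(job.user for job in self.running)
        released = []
        # Highest priority first; sorted() is stable, so ties keep submission order.
        for job in sorted(self.held, key=lambda j: -j.priority):
            if len(self.running) >= limit:
                break
            if per_user[job.user] >= self.policy.per_user_limit:
                continue
            self.held.remove(job)
            self.running.append(job)
            per_user[job.user] += 1
            released.append(job)
        return released
```

In a real deployment each tick would query NQS for the current queue state rather than consult an in-memory list, and released jobs would be moved into the real queues through NQS itself.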

Through the initiative of the Advisory Group on Computer Graphics (AGOCG), the AVS visualisation software is available on a site licence basis to all UK HEIs. AVS can run its most computationally intensive components on remote computers, and modules are available on the Cray Y-MP to supplement the workstation front-end display.

The Atlas Video Facility has been used to display simulations of the atmospheric circulation vortex at the North Pole together with satellite images from UARS. A still image from the video sequence is shown in Fig 5.3. The near-universal support for PostScript as a page description language means that most printing on the RAL site is now done via PostScript. The large central printing services are increasingly being replaced by distributed print servers for both text and graphics.

A large amount of effort has gone into release 11 of the Harwell Subroutine Library and its associated documentation. Release 11 contains many updates and significant new routines, particularly for the solution of large sparse linear systems.

The LANCELOT package was fully launched during the year with the publication of a users' manual for the code by Springer-Verlag. Over 50 copies of the package have already been distributed, and the algorithm is being used by others as a benchmark for comparison with newer experimental codes.

Work with the Fortran standards organisations is currently concentrating on the handling of exceptions within the language and the proposed Language Independent Arithmetic standard.

The involvement of the group in parallel computing continues to grow with projects at Oxford Parallel and at CERFACS as well as the definition and creation of a benchmark suite for the proposed procurement of a massively parallel supercomputer in 1993.

In December 1991, following support from the then Department of Education and Science, the Universities' Funding Council approved funding for the first phase of the SuperJANET initiative. SuperJANET is the foundation for the development of a national broadband network to support UK higher education and research. The planned network is based on optical fibre technology to give very high performance with a development path leading to Gbit/sec transmission rates.

The SuperJANET network will be provided under a contract with BT, under which BT will collaborate with the UK academic community to develop an advanced broadband switching platform based on ATM (Asynchronous Transfer Mode).

BT will provide an optical fibre network together with a range of services to support the development of SuperJANET. The aim is to maximise the number of sites connected and offer a range of access speeds to meet different site requirements. The academic community will help BT to test and pilot new broadband services.

The contract with BT covers the provision of an SDH (Synchronous Digital Hierarchy) network serving up to 16 sites and offering access at 155 Mbit/s and 2 × 155 Mbit/s, with trunk network performance of up to 622 Mbit/s. Until the SDH network is available, the sites will be provided with 140 Mbit/s PDH (Plesiochronous Digital Hierarchy) capacity. The SDH network will be complemented by BT's SMDS (Switched Multimegabit Data Service) network, offering access initially at 10 Mbit/s. The total number of sites connected is expected to exceed 45.
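
The 155 and 622 Mbit/s figures are the standard SDH line rates (ITU-T G.707), given here as general background rather than from the contract itself. The basic STM-1 frame is 9 rows of 270 bytes, sent 8000 times per second, and level N multiplexes N such signals:

$$
R_{\text{STM-1}} = 9 \times 270 \times 8\ \text{bit} \times 8000\ \text{s}^{-1} = 155.52\ \text{Mbit/s}, \qquad
R_{\text{STM-N}} = N \times 155.52\ \text{Mbit/s}
$$

$$
R_{\text{STM-4}} = 4 \times 155.52\ \text{Mbit/s} = 622.08\ \text{Mbit/s}
$$

On this reading, the 2 × 155 Mbit/s access option presumably corresponds to two STM-1 links and the 622 Mbit/s trunks to STM-4.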

SuperJANET will support a wide range of new applications. The Pilot Network in March 1993 will be used to demonstrate the potential for SuperJANET to support activities such as remote access to supercomputers, electronic publishing, remote consultation, group communication, medical imaging, remote browsing of image databases and distance learning.

This year has seen a rapid expansion in the capabilities of JANET and its services. The so-called JANET Mk II initiative, to provide 2 Mbit/s access to the UFC-funded universities, has been completed with over 50 sites now connected at that speed. A programme of upgrades to 64 kbit/s for PCFC-funded universities is well underway. See Facts and Figures for the growth in traffic carried by JANET over the last few years.

The JANET IP Service (JIPS) has been launched after a highly successful pilot activity, and is expanding rapidly. At the time of writing some 90 JANET sites have requested connection to JIPS and it now accounts for about half the traffic carried over JANET.

Preparatory work has started on the technical requirements for the widespread deployment of the new lower-layer technology, Asynchronous Transfer Mode (ATM). Work has also started on developing a wide range of applications that can fully exploit, at an early stage, the new facilities offered by SuperJANET. In the coming years there will be numerous opportunities for collaboration between industry and academia in advanced network research, development and service provision.

RAL is part of a consortium that made a successful bid to carry out a project called CHARISMA under the Commission of the European Communities' RACE II programme. CHARISMA is an applications project in the area of generic case handling with multimedia data. Everyone is familiar with the concept of a case, if only in the Sherlock Holmes sense. As a computer application, case handling takes two forms: structured and unstructured. In structured case handling, sometimes called work-flow, the possible processing flows for cases are known in advance, as for example in the processing of a planning application or an insurance claim. In unstructured case handling, processing paths are determined by events and are not known in advance, as for example in the investigative cases that occur in medical and police work.
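
To make the distinction concrete, here is a minimal Python sketch; it is an illustration under our own assumptions, not the CHARISMA design. A structured case type is a transition table fixed in advance (a hypothetical insurance-claim workflow with invented state names), while an unstructured case simply records events, with optional multimedia attachments, in whatever order they occur.

```python
from dataclasses import dataclass, field

# Hypothetical insurance-claim workflow: every permitted step is known in
# advance, so the case type can be written down as a transition table.
CLAIM_WORKFLOW = {
    "received": {"under_assessment"},
    "under_assessment": {"approved", "rejected", "awaiting_info"},
    "awaiting_info": {"under_assessment"},
    "approved": {"paid"},
    "rejected": set(),
    "paid": set(),
}


class StructuredCase:
    """Structured case handling (work-flow): only predefined transitions are allowed."""

    def __init__(self, workflow: dict, start: str):
        self.workflow = workflow
        self.state = start
        self.history = [start]

    def advance(self, new_state: str) -> None:
        if new_state not in self.workflow[self.state]:
            raise ValueError(f"{self.state!r} -> {new_state!r} is not a permitted step")
        self.state = new_state
        self.history.append(new_state)


@dataclass
class UnstructuredCase:
    """Unstructured case handling: no predefined path; events drive the processing."""

    events: list = field(default_factory=list)

    def record(self, event: str, attachment: bytes = b"") -> None:
        # The attachment stands in for multimedia case data (images, audio, video).
        self.events.append((event, attachment))
```

The structured case rejects any step outside its table, which is exactly what makes work-flow automatable; the unstructured case accepts any event and leaves the decision about what happens next to the investigator.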

All types of digital data are to be handled, including text, graphics, images, and audio and video clips. The case data will be held on a server that combines the functions of a normal database server with the ability to handle large binary objects such as the video and audio clips. Case data from the server will be made available to multimedia user stations via a broadband network. Cooperative working on cases will be catered for. Work is progressing on the design of a Fourth Generation Language for customising the basic case handling elements to particular applications.

The UK groups are developing the networking components for the project using ATM technology. Plans have been made to demonstrate broadband wide-area applications of multimedia case handling using the SuperJANET network in the UK and the RECIBA network in Madrid.

On 1 April 1992, RAL switched to a new accounting system (DBS) running on JACS at Swindon. The need to make financial information widely available to project managers led to a new Financial Data System (FDS), which aims to provide instant, easy access to accounting data and is initially integrated with the SERC Office System. The implementation exploits powerful tools such as QMF and ISPF on top of an SQL/DS relational database. These give users better, more flexible access to information and allow a faster development schedule.

CCD's implementation of a computer system for the RAL Library is a novel integration of an information retrieval text base (using STATUS) for the catalogue with a conventional relational database (SQL/DS) for the housekeeping (eg loans records).

The latter also links to the personnel database. Designed for use by the library staff, the software has now been extended to provide full screen access to catalogue and loans data for Office System and other general users.
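
The split described above, free-text retrieval for the catalogue and relational tables for housekeeping, can be sketched in Python with the standard `sqlite3` module. This is an illustrative stand-in, not the CCD system: a small in-memory inverted index replaces STATUS, SQLite replaces SQL/DS, and the table names, columns and sample records are all hypothetical.

```python
import re
import sqlite3
from collections import defaultdict

# Text-retrieval side (stand-in for STATUS): an inverted index from words to item ids.
catalogue = {
    "B1": "Numerical methods for sparse linear systems",
    "B2": "Introduction to computer networks",
    "B3": "Sparse matrix techniques in optimisation",
}
index = defaultdict(set)
for item_id, title in catalogue.items():
    for word in re.findall(r"[a-z]+", title.lower()):
        index[word].add(item_id)


def search(term: str) -> set:
    return index.get(term.lower(), set())


# Relational side (stand-in for SQL/DS): loans records linked to a personnel table.
db = sqlite3.connect(":memory:")
db.executescript("""
CREATE TABLE personnel (staff_id TEXT PRIMARY KEY, name TEXT);
CREATE TABLE loans (item_id TEXT, staff_id TEXT REFERENCES personnel(staff_id), due TEXT);
INSERT INTO personnel VALUES ('S1', 'A. Smith'), ('S2', 'B. Jones');
INSERT INTO loans VALUES ('B1', 'S1', '1993-01-15'), ('B3', 'S2', '1993-02-01');
""")


def borrowers_for(term: str) -> list:
    """Free-text catalogue search, then a relational join for loans and borrowers."""
    ids = sorted(search(term))
    if not ids:
        return []
    placeholders = ",".join("?" * len(ids))
    return db.execute(
        "SELECT l.item_id, p.name, l.due FROM loans AS l "
        f"JOIN personnel AS p USING (staff_id) WHERE l.item_id IN ({placeholders}) "
        "ORDER BY l.item_id",
        ids,
    ).fetchall()
```

For example, `borrowers_for("sparse")` finds both sparse-matrix titles in the catalogue and then reports who has each one on loan and when it is due.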