The benefits that can be brought to many fields of research by an intimate dialogue between man and machine are now widely recognised. During 1979 the SRC's initiative in setting up the Interactive Computing Facility (ICF) to provide such benefits, especially in engineering, can be said to have come of age. By the end of the year the number of registered ICF users had risen to 1300 on the 23 machines listed in Table 3.1. Sites had been chosen for five more Multi-User Minicomputers (MUMs), and the end of the present programme for installing MUMs became visible. Most important of all, the first courses were organised to acquaint research workers with application software mounted in accordance with Special Interest Group recommendations.

The year began with the acceptance by Council of a review of the progress so far in implementing the report of the Technical Group on Engineering Requirements (Rosenbrock Report), and the approval of a Six Year Plan for development of the ICF. In particular, it was agreed that it was practicable to establish a set of some 15 Multi User Minis, each serving a local population of 60 to 80 users at a university or polytechnic, yet managed centrally at the Rutherford Laboratory and interlinked by a network. The programme would be accelerated and if possible completed in 1981/82. Experience so far suggests that this is a very satisfactory means of providing a majority of SRC users with interactive computing for a period of about 5 years, although a minority can only be served efficiently on larger machines with more support staff.

A prominent feature of the Six Year Plan was the emphasis on centrally supported software so as to free the research user as much as possible for the creative aspects of his work. The software provided by ICF now ranges from systems and networking software especially for the GEC and PRIME MUMs which constitute the majority of the computers, through discipline-independent programs (eg graphics packages, interactive pre- and post-processors) to complete packages and libraries of subroutines which are specific to a single discipline, for example stress analysis.

| Site | Computer |
|---|---|
| Edinburgh | DEC 10 KI |
| UMIST | DEC 10 KI |
| Sheffield | Interdata 8/32 (a) |
| Swansea | PDP 11/40 (a) |
| Oxford | PDP 11/45 (a) |
| Southampton | PDP 11/45 (a) |
| Leeds | VAX 11/780 (a) |
| Manchester Graphics Unit | PDP 11/45 (a) |
| Nottingham | PRIME 400 (a) |
| East Anglia | PRIME 400 (a) |
| Bradford | GEC 4085 (b) |
| Birmingham | GEC 4085 (b) |
| Chilton (RL) | PRIME 400 |
| Chilton (RL) | PRIME 400 |
| Chilton (RL) | GEC 4070 |
| Bristol | GEC 4070 |
| Cambridge | GEC 4070 |
| Glasgow | GEC 4070 |
| Newcastle | GEC 4070 |
| Cranfield | GEC 4070 |
| Sussex | PRIME 550 |
| City | PRIME 550 (b) |
| Cardiff | GEC 4085 (b) |

(a) upgraded Mini System; (b) yet to be installed

During the year most of the Special Interest Groups, set up to advise the ICF on application software requirements, finalised their specifications for programmes of work covering 3 to 5 years. Implementation of these programmes is under way in earnest in four main areas: Artificial Intelligence, Finite Element techniques, Electromagnetics applications and Circuit Design. It is recognised that provision and support of SIG software will constitute a major part of the expenditure on ICF from 1982 onwards.

A major success in 1979 was the completion of a joint effort by the GEC Support Group of ICF and the Network Group of the 360/195 team, aimed at providing interactive terminal protocol (ITP) and file transfer protocol (FTP) for the interconnection of all six GEC 4070s (see Table 3.1) together with remote job entry (RJE) to the central batch computers. All this was based on the SRC's implementation of the X25 low level protocol, the MUMs being connected by leased lines to ports on the GEC switching node located at Rutherford Laboratory. The result is the first completely networked set of minicomputers available to UK universities and polytechnics. The network has settled down reliably since its inauguration, and additional GEC MUMs will be added to it as they are installed (3 more by March 1980). It is now possible, for example, for an architectural research worker in Glasgow to log in to an interactive machine at Bristol and routinely run a program maintained there. The extent to which this may change or improve the pattern of collaborative research between different university groups, especially smaller ones, is the subject of an exciting experiment, the outcome of which will not be known for some years. Completion of the GEC network leaves ICF machines grouped in three principal subnetworks, of which the other two are the DEC10 network and the PRIME network.

The remaining task is to integrate these three subnetworks (see Fig 3.1) and progress here has proved difficult.

The DEC10 network uses proprietary DECnet protocols which are largely incompatible with anything else. Although a satisfactory link was established during the year to allow RJE to the 360/195 from the ERCC DEC10 using 2780 protocol, this is not robust enough for bulk file transfers. Work has been commissioned by ICF through the Joint Network Team at York University to develop software for a gateway between the DEC network and SRC X25. This has gone well and a working gateway providing ITP between the DEC machines and those on SRC X25 should be installed in the first part of 1980. Work has started on a general FTP implementation using the same gateway.

The PRIME network uses proprietary PRIMEnet protocols. These are incompatible in detail with SRC X25 and there is also a hardware incompatibility with the GEC switching node. Integration with the X25 network is nevertheless expected during the coming year.

Some 500 users have kept both DEC 10s well loaded during the year, and full capacity is reached on both machines frequently during prime shift. There has been extensive consultation with user groups during 1979 to obtain their views on more modern replacement machines and a consultant was engaged to carry out an independent study of users' particular requirements. The quality of user support at both sites was especially praised. The outcome was a policy agreed by the Interactive Computing Facilities Committee in November:

Some of the GEC 4070s have now been in service for 12 months and it is beginning to be possible to assess the popularity of the style of service they provide. Each is run under contract by the host site and for the first three years ICF supports 80% of the total recurrent cost including one full-time equivalent staff member. This post must cover some operator duties as well as first-aid user support and some local management, so that the successful running of a MUM is greatly dependent on very few people in the host department. It is intended to be largely a self-help operation in which a kernel of local users familiar with the system assist with problems encountered by others. At some sites, for example at Bristol University, this has worked extremely well. The GEC there has also been welcomed by the central computing service as filling an important gap in the facilities available to demanding research users. At other sites the build-up has been slower, hampered in particular by difficulty in moving files from the DEC10 machines. The effectiveness of providing all sites with new releases of systems software from the GEC support group at the Rutherford Laboratory has been convincingly demonstrated.

Activity on the PRIME MUMs has centred throughout the year on two PRIME 400s run at Chilton with a combined user population now approaching 160. About 60% of the total workload on this service derives from intra-mural programmes, among them development of the Electron Beam Lithography facility and applications development work for ICF's own Special Interest Groups. Response time is being monitored and extrapolation of the existing workload has shown that the upgrade of at least one of these machines to a PRIME 750 will be necessary. Remote PRIME 400s at Nottingham and East Anglia are being connected via PRIMEnet and the first of several new remote PRIME installations, a 512 Kbyte PRIME 550, took place at Sussex in November.

During the year more modern machines running the same operating systems appeared in both the GEC and PRIME ranges, so that the standards for ICF MUMs are now GEC 4085 (not 4070) and PRIME 550 (not 400). In both cases the computing power available for the same cost has risen significantly and this trend will continue.

There are now well over 400 terminals and items of associated communications equipment installed in nearly 60 university and polytechnic departments. The demand for terminal equipment in SRC grant applications continues to grow and the management and allocation of this equipment involves a large amount of technical discussion with users as well as with the contractor through whom maintenance of the entire pool is arranged. ICF policy is still to lend terminals to SRC grant holders for the duration of their grant, allowing a user the possibility of later exchanging his terminal for a more advanced type if his work demands it. On average, however, it must be recognised that each pool terminal will have to be shared among 3 or 4 users of the ICF.
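The sharing ratio quoted above can be checked roughly against figures given elsewhere in this report. The sketch below is only an order-of-magnitude check. It assumes about 1300 registered users and treats all of the 400 or so pool items as terminals, although that count also includes communications equipment. The variable names are illustrative.

```python
# Rough check of the terminal-sharing ratio, using figures quoted in this report.
# Assumption: every one of the ~400 pool items is a terminal. The report's count
# also includes communications equipment, so this is indicative only.
registered_users = 1300   # registered ICF users at the end of 1979
pool_items = 400          # "well over 400" terminals and associated equipment

users_per_terminal = registered_users / pool_items
print(f"about {users_per_terminal:.2f} users per pool item")
```

On these assumptions the result falls within the "3 or 4 users" per terminal stated in the text.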

The aim here is to provide a suite of program modules of general use which can be thought of as high-level systems software or discipline-independent applications software. The idea springs from the possibility of providing general purpose interactive pre- and post-processors for large analysis programs run in batch mode, but sometimes the same processors may be applied also when the analysis program runs interactively.

Recommendations of an ICF Working Party were accepted by ICFC in September and a high priority has been attached to setting up a small group to implement these, predominantly in the pre-processing area. The modules to be supported will include general purpose geometric modellers, mesh generators, high level graphics software, command decoders and aids to data management. It is hoped that every one of the disciplines covered below will be able to make use of some of these modules.

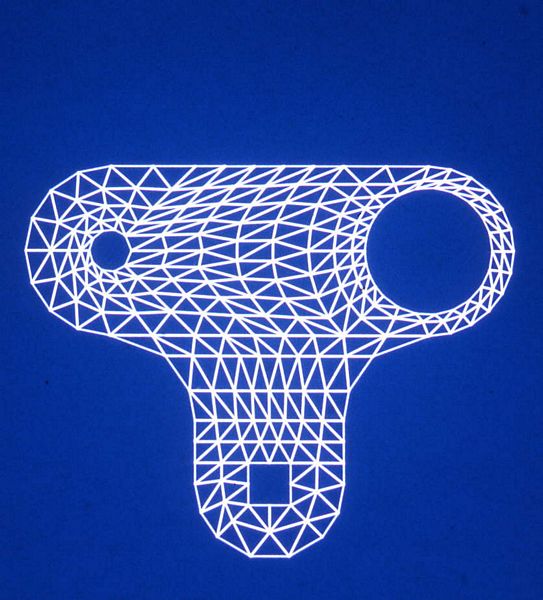

Use of the finite elements (FE) technique pervades a number of applications areas, including stress analysis, fluid mechanics, electromagnetics and electrostatics. An ICF team of 4 people has been set up to cover the first two of these areas. After a careful comparative study of four available FE packages, ASAS and BERSAFE have been selected as the most suitable for ICF to support for the benefit of the research worker who wishes to use a complete black box for a specific problem. For the researcher who is concerned with development of the FE techniques required for new problem areas, a different approach is supported by ICF: a two-level library. The first level contains a set of basic building blocks while the second consists of a set of example programs showing how the blocks may be used to solve a wide range of problems. The initial release of this library was marked by a course run at the Laboratory in October this year, when interested research workers in universities and polytechnics were given a detailed introduction to, and hands-on experience of, the modules and examples available so far. A package-independent FE mesh generator, FEMGEN, has been obtained and interfaced to ASAS, BERSAFE and the FE Library. An example of a mesh generated by FEMGEN is shown in Fig 3.2.

Work in this area also makes substantial use of FE solution techniques and will benefit from further development of pre- and post-processors. A considerable amount of the software now supported was originally developed within the Laboratory and was incorporated into the plans made by the Special Interest Group, which also include electrostatics packages. During the year, the THESEUS data base management system was made available in the PRIME 400: it can be used for interactive data preparation for the magnet design program GFUN3D. Improvements have been made to the ease with which several other packages can be run via interactive machines. PE2D, a program for nonlinear 2-dimensional problems in eddy currents, electrostatics, magnetostatics, and current flow, can be run either in the PRIME 400 or the 360/195 depending on the size of the problem. A new boundary-integral program BIM2D aimed at 2-dimensional magnet design runs on the AP120-B array processor as well as on the PRIME 400. EDDY, a 2-dimensional integral equation eddy-current code, can now be run in the 360/195 from data files generated in the PRIME 400. Progress has also been made with BIM3D, a 3-dimensional version of the boundary integral method program, and with TOSCA, a new 3-dimensional program for magnet design based on the use of total and reduced scalar potentials.

Plans proposed by two Special Interest Groups for software to be supported in this area were accepted by ICFC early in the year. The field of digital design in particular is moving so fast that it has been difficult to agree longer-term goals for an integrated design system. However, much progress has been made during the year in providing analysis facilities for immediate use. ITAP, ICAP and SP are three interactive analysis programs which the Southampton University group mounted on the PRIME 400, to allow DC, AC and transient analysis of circuits specified at a keyboard. Several large batch packages (ASTAP, SPICE, and NAP) have, like the large electromagnetics programs, been made easier to operate through ICF machines while a version of NAP now runs entirely on the PRIME 400 for smaller problems. This software is supported by an extensive HELP and information system which is kept up-to-date on the PRIME and GEC machines.

Close liaison has been maintained with the Laboratory group responsible for the production and support of software used with the Electron Beam Lithography facility - particularly the GAELIC integrated-circuit design package which currently runs on ICF's PRIME 400 and Edinburgh DEC10 machines.

Future work will, it is hoped, include providing a microprocessor development system on ICF machines to help a user make a rapid assessment of the relative merits of different devices for a certain application, and then to design and test software to run on the selected device. The emphasis in new software will move from the provision of analysis facilities towards design facilities, although it must be recognised that it may not be practicable to set up an integrated design system within the present limits on staff numbers.

Two ICF staff at Edinburgh continued throughout the year on the programme of work defined by the Special Interest Group in 1977. This included support of the language POP2 and its new derivative WONDERPOP. The AI SIG also played an active role in the choice of a replacement machine for the ERCC DEC10.

In June this year the Special Interest Group presented its proposals for application software and these were accepted by the ICFC. A small group is being formed at Kingston Polytechnic to implement them on behalf of ICF. The work will include mounting a library of control subroutines, a comprehensive dynamic system simulation facility, a data analysis/identification package and the Cambridge University multivariable system design package on a number of ICF machines. If successful, this will serve as a precedent for ICF commissioning more applications work of this type from university and polytechnic groups.

The number of grant applications involving interactive computing and referred to ICF by the SRC Subject Committees rose to about 250 this year. Many of these applications emerge after detailed technical discussions between ICF staff and potential users, and many more are handled by staff at the ICF host sites. All ICF computing resources are authorised, allocated and accounted in terms of units of processor cycles, connect time, and core utilisation: the Subject Committees are advised of the notional cost of each grant application before a decision is reached. The year saw a major increase in work put into all the above areas, and into the management of the growing number of machines at remote sites: this work requires a blend of technical knowledge, management ability and administrative efficiency and the need for this can only increase as the user population, now 1300, grows towards its target of 1700.
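The way three accounted quantities might be combined into a single notional cost can be sketched as follows. This is a minimal illustration only: the report does not give the actual tariff, so the rates, the field names and the `notional_cost` function are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical rates per unit; the report does not state the real tariff.
RATE_PER_CPU_SECOND = 0.02
RATE_PER_CONNECT_HOUR = 0.50
RATE_PER_KWORD_HOUR = 0.01


@dataclass
class ResourceRequest:
    """Resources requested in a grant application (illustrative fields)."""
    cpu_seconds: float    # processor use
    connect_hours: float  # time logged in at a terminal
    kword_hours: float    # core utilisation: memory held multiplied by time


def notional_cost(req: ResourceRequest) -> float:
    """Combine the three accounted quantities into one notional figure."""
    return (
        req.cpu_seconds * RATE_PER_CPU_SECOND
        + req.connect_hours * RATE_PER_CONNECT_HOUR
        + req.kword_hours * RATE_PER_KWORD_HOUR
    )


request = ResourceRequest(cpu_seconds=36_000, connect_hours=400, kword_hours=8_000)
print(f"notional cost: {notional_cost(request):.2f} units")
```

A linear weighting of this kind is one simple option; the scheme actually used by ICF may have differed.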

Integration of ICF's three sub-networks in collaboration with the Joint Network Team should be complete by 1981. The MUM network so achieved will be a facility with enormous potential. To realise this potential will require an increase in the use of staff to acquire, document, and where necessary create applications software: some reduction of staff currently used on system software and installations will help to make this possible. It is hoped that much of this work can be done under contracts with university and polytechnic departments, as with the Control Engineering and AI programmes mentioned above. It may become desirable to separate the management of applications software work (which normally relates closely to the areas of interest of individual Subject Committees) from that of the computing service and network, now that the latter is emerging from the development stage.

Provision of a good interactive computing service beyond 1985 demands advanced work on new hardware facilities. These may well be based on very powerful, cheap single-user processors with fast links to local centres having specialised equipment for array processing and file storage, and exotic peripherals. Work in the Distributed Computing Systems programme will be invaluable here, and it is hoped to collaborate with ERCC also, starting with assessment of the Three Rivers PERQ system which may be particularly suitable for Artificial Intelligence work. In parallel with this, some ICF users have been supplied with IBM 5100 single user machines which are proving successful in providing good APL facilities; assessment of such single-user systems will continue. The long term goal will be to provide an interactive interface for the majority of users of all SRC's central computing facilities within the next ten years.

The primary objectives of the programme are to seek an understanding of the principles of Distributed Computing Systems and to establish the engineering techniques necessary to implement such systems effectively. In particular, this requires an understanding of the implications of parallelism in information processing systems and storage, and devising means for taking advantage of this capability.

The more general objectives of the programme may be described as follows:

Within the context of the proposed programme, a Distributed Computing System is considered to be one in which there are a number of autonomous but interacting computers co-operating on a common problem. The essential feature of such a system is that it contains multiple control paths executing different parts of a program and interacting with each other. Such systems might consist of any number of autonomous units, but it is anticipated that the more challenging problems will involve a large number of units. Thus, the spectrum of Distributed Computing Systems includes networks of conventional computers, systems containing microprocessors and novel forms of highly parallel computer architecture with greater integration of processing and storage.
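The essential feature described above, several control paths executing different parts of one program and interacting with each other, can be illustrated with a small sketch. It is not taken from any DCS project: threads within one process stand in for autonomous computers, message queues stand in for the links between them, and all names (`worker`, `distributed_sum`) are hypothetical.

```python
import queue
import threading


def worker(name, inbox, results):
    """One autonomous unit: sums the chunks it is sent, then reports back."""
    total = 0
    while True:
        chunk = inbox.get()
        if chunk is None:          # sentinel: no more work for this unit
            break
        total += sum(chunk)
    results.put((name, total))     # interaction: send the partial result back


def distributed_sum(data, n_units=4, chunk_size=10):
    """Share a summation among n_units co-operating workers."""
    results = queue.Queue()
    inboxes = [queue.Queue() for _ in range(n_units)]
    threads = [
        threading.Thread(target=worker, args=(f"unit-{i}", inboxes[i], results))
        for i in range(n_units)
    ]
    for t in threads:
        t.start()
    # Deal the data out in chunks, round-robin across the units.
    for k, start in enumerate(range(0, len(data), chunk_size)):
        inboxes[k % n_units].put(data[start:start + chunk_size])
    for inbox in inboxes:
        inbox.put(None)
    for t in threads:
        t.join()
    # Combine the partial results reported by each unit.
    return sum(results.get()[1] for _ in range(n_units))


print(distributed_sum(list(range(1, 101))))
```

Each worker has its own control path and its own private state, and the units co-operate on a common problem only by exchanging messages, which is the property the definition emphasises.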

The DCS programme has been co-ordinated and supported by the Laboratory since January 1978. Laboratory staff have been engaged in promoting communication and cooperation between the 40 university projects funded by the DCS programme as well as linking the university research with the industrial world. The Laboratory actively supported the research teams by providing a Unix service, by maintaining and distributing a pool of equipment currently containing over 50 items worth £150,000, by placing EMR contracts to develop generally useful tools such as Unix X25 communications and by circulating a monthly newsletter.

As of September 1978 the DCS programme consisted of 24 projects totalling £1.4 million. During the year from Sept 78 to Sept 79 the SRC, acting on the DCS Panel's recommendations, awarded a further 16 grants and two Senior Visiting Fellowships worth £906,000, bringing the programme up to 40 grants (31 distinct projects) totalling £2.3 million. The DCS programme was originally allocated £1.6 million. The extra funds awarded are an indication of the importance attached to the DCS initiative.
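The funding figures above can be reconciled with a short calculation. The sketch uses only the amounts quoted in the text, expressed in thousands of pounds; the £1.4 million starting figure is itself rounded, so the total is approximate.

```python
# Figures quoted in the report, in thousands of pounds.
funding_sept_1978 = 1400      # 24 projects, about 1.4 million pounds
awarded_1978_79 = 906         # 16 grants plus two Senior Visiting Fellowships
original_allocation = 1600    # 1.6 million pounds originally allocated

total = funding_sept_1978 + awarded_1978_79
excess = total - original_allocation
print(f"total: {total}k pounds, excess over allocation: {excess}k pounds")
```

The total of roughly £2.3 million agrees with the figure stated in the text and exceeds the original allocation by about £0.7 million.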

With 4 grants awarded in 1977, 17 in 1978 and 16 in 1979, the programme overall is very much in its initial phase with the majority of projects only just getting into their stride. Amongst the more established groups, significant progress is easier to identify. Examples include the successful commissioning of the 16-processor CYBA-M system by Professor Aspinall's team, despite the move from Swansea to UMIST; the same team were paid the compliment of being invited to California to present their course on reliability; York's Modula compiler has been distributed world-wide and has been selected for the KSOS secure operating system project and an industrial project; Mr Shelness is well on the way to producing the DCS's first implementation of a dynamic process/processor system; Professor Evans' discovery of new algorithms suitable for parallel execution has led to the award of a more powerful four-processor system for Loughborough University; and the theoretical projects of Drs Lauer, Plotkin and Milner have continued to produce papers of the highest quality.

In conclusion, the end of the 1978/79 academic year has seen the Distributed Computing Systems programme established as a substantial, co-ordinated portfolio of research projects well set to produce significant results during the next few years.