NOTE: many of the URLs referenced in this newsletter are no longer active.

At the end of January, a new Cray supercomputer arrived at the Atlas Centre to replace the Cray Y-MP8. The new machine is a Cray J932/4096 which CLRC will continue to operate under the Research Councils' High Performance Computing Programme.

The J90 offers more than twice the computing capacity and four times the available memory of its predecessor. Even so, it is regarded as an interim resource pending the decision on how to spend the £10M capital sum available to the Research Councils for High Performance Computing in 1997 (see article on page 2).

The Atlas J90 has 32 vector processors, binary compatible with the Y-MP, each with a sustainable performance of almost 200 Mflop/sec, connected to a shared global memory of 4 GBytes and some 300 GBytes of disk space. Like the Y-MP, the J90 will be connected to the Atlas Centre's two mass storage devices: the StorageTek 4400 tape silo and the IBM 3494 tape robot, which has a capacity of 20 TBytes on IBM's new NTP tape drives.

The input/output section of the J90 is based on the industry standard VME bus and offers low cost connectivity compared with the very expensive circuitry in the Y-MP. In keeping with Cray's drive to reduce the cost of ownership, the J90 is based entirely on low power consumption CMOS chips, and the whole 6.4 Gflop computer, with its 216 GBytes of internal disk space, consumes only 8 kW of electricity.

An attractive option on the J90 is that industry standard SCSI disk drives can be attached, giving the option to enhance the disk space at moderate cost. Initial tests with the latest generation of 7200 rpm SCSI-2 disks have already shown a very respectable performance and it is hoped to expand the total disk space to some 300 GBytes in the very near future.

The Atlas Crays have been used over almost the whole range of Research Councils' programme areas and we expect that the environmental modelling community and some other grid based modelling areas such as fluid dynamics will be amongst the first to make use of the increase in capability provided by the new J90.

The move from the eight 333 Mflop processors of the Y-MP8 to the thirty-two 200 Mflop processors of the J90 marks a profound change in the philosophy of running a mixture of applications on the machine. In some ways the J90 is more like a parallel computer than a traditional vector computer. The traditional job classes will largely be retained for continuity of work, but jobs that wish to use the whole of the machine's memory or disk space will inevitably also be expected to make good use of the 32 processors. Cray's Unicos operating system and Message Passing Toolkit libraries (with PVM and MPI) provide a range of options for generating parallel codes. Managing the optimal mix of work will present quite a challenge and will doubtless be resolved iteratively in close consultation with the user community.
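The capacity figures behind this change can be checked with simple arithmetic. The sketch below uses only the processor counts and per-processor rates quoted in this article. Treating aggregate capacity as count times per-processor rate is a simplifying assumption, and the function name is purely illustrative.

```python
def aggregate_mflops(processors: int, mflops_each: float) -> float:
    """Aggregate rate, assuming every processor is kept busy (an idealisation)."""
    return processors * mflops_each


ymp = aggregate_mflops(8, 333)   # Y-MP8: eight 333 Mflop processors
j90 = aggregate_mflops(32, 200)  # J90: thirty-two 200 Mflop processors

ratio = j90 / ymp                # whole-machine comparison
single_cpu_ratio = 200 / 333     # one J90 processor against one Y-MP processor

print(f"Y-MP8: {ymp:.0f} Mflop, J90: {j90:.0f} Mflop, ratio {ratio:.2f}")
print(f"Single-processor ratio: {single_cpu_ratio:.2f}")
```

Under these assumptions the whole machine offers about 2.4 times the Y-MP8's capacity, while a job confined to a single processor would run at roughly 60% of its Y-MP speed. This is why good use of multiple processors matters on the J90.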

The J90 went into service on 19 March and the Y-MP was closed down at the end of March.

For full text of this article see: http://www.cis.rl.ac.uk/publications/ATLAS/apr96/j90.html

For more information on the Cray J90 see: news:ral.services.cray

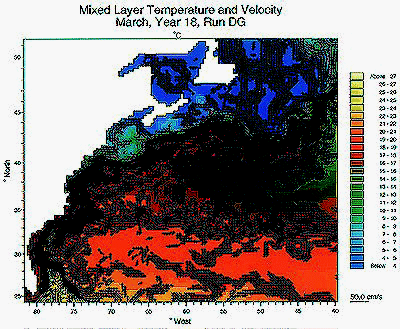

The James Rennell Division of the Southampton Oceanography Centre has, in the Atlantic Isopycnic Model (AIM) project, implemented an eddy-permitting isopycnic (layers of constant density) model of the Atlantic Ocean on the CRAY Y-MP. The model will be compared with, and used to help interpret, observations which are being collected within the World Ocean Circulation Experiment (WOCE), and will lead to an improved understanding of the way in which the oceans circulate and transport heat.

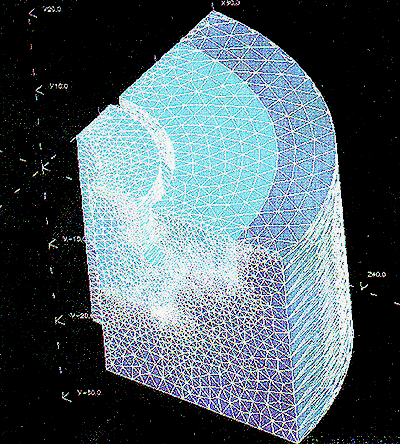

The figure illustrates some of the detailed current and temperature structures which are being produced by the present model, which covers the Atlantic from about 15 degrees South to 70 degrees North at an average horizontal resolution of about 25 km. In particular, the figure shows a section of the model domain off the coast of North America, and reveals the separation of the Gulf Stream from the coast, and the formation of the Azores Current near 30-35 degrees North (east of 50 degrees West), as well as several whirls, meanders, eddies, and current streams.

The model currently takes most of the memory available on the Y-MP and yet there are many features of the ocean circulation which are not adequately resolved, even at the present resolution of 25 km. For instance, many eddies in the ocean have horizontal scales of 50 km or less, which would require a model with a resolution of at least 12 km, twice that possible on the Y-MP. However, the new J90 has 4 times the memory of the Y-MP, and this will allow a model with such a resolution to be run. Improvements in job turnaround time will also help to improve our productivity in these important calculations, feeding into the global climate modelling programme.
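The link between resolution and memory can be illustrated with a rough estimate. The sketch below assumes that memory use is proportional to the number of horizontal grid points, that the model domain and the number of layers stay the same, and that the finer grid spacing is exactly half of 25 km (12.5 km). None of these assumptions comes from the AIM project itself, and the function name is hypothetical.

```python
def horizontal_points_factor(old_km: float, new_km: float) -> float:
    """Growth in the number of horizontal grid points when the grid
    spacing shrinks from old_km to new_km over the same domain."""
    return (old_km / new_km) ** 2


factor = horizontal_points_factor(25.0, 12.5)  # halving the spacing in both directions
memory_ratio = 4.0                             # J90 memory relative to the Y-MP, as quoted above
fits = factor <= memory_ratio

print(f"Grid points grow by a factor of {factor:.1f}; fits in J90 memory: {fits}")
```

Halving the spacing in both horizontal directions quadruples the number of grid points, which matches the fourfold increase in memory on the J90.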

For full text of this article see: http://www.cis.rl.ac.uk/publications/ATLAS/apr96/view.html

From 26 to 28 February the EPSRC High Performance Computing Group held three User Meetings, at Edinburgh, London and Manchester respectively. The aim of these meetings was to gather user input to help formulate the strategy for the future of High Performance Computing (HPC) in the UK.

The meetings were run by Rob Whetnall and Alison Wall of the Engineering and Physical Sciences Research Council (EPSRC) High Performance Computing Group, and staff from all of the national centres were present to give talks on HPC hardware and support issues and to participate in the discussion.

Rob Whetnall described the situation for the forthcoming year in which there is a £10M capital sum to be spent on major new hardware purchases. Strategic decisions need to be taken soon as to how this is divided between a 'top of the range' supercomputer which is likely to be a distributed memory parallel machine (although the architecture will not be specified in the Operational Requirement) and a smaller machine of more conventional vector or super-scalar architecture that would provide a more flexible large scale computing resource.

A second major issue is whether the UK should concentrate its HPC hardware at a single site for economic advantage or maintain (probably no more than) two sites for an element of competition and a diversity of styles. There are other related support issues as to how much should be spent on support, whether it should be organised by hardware needs or by subject area, and whether the support is necessarily collocated with the hardware.

The three days produced much interesting discussion and, needless to say, a variety of views. There was considerable support for the 'mid range' machine, although the larger research groups felt that such a machine should be local and not a national facility. Support through the Collaborative Computational Projects (CCPs) and the High Performance Computing Initiative (HPCI) was well regarded. The issues for the future concerned how funding for such activities should be organised, given the pressure to devolve computing funding to the programme managers at EPSRC.

For the official reports on these meetings see http://www.epsrc.ac.uk/hpc

The Atlas Data Store, one of the major facilities at the Atlas Centre of CLRC Rutherford Appleton Laboratory, is currently undergoing a major upgrade. The Atlas Data Store has been developed over the last few years to store large amounts of user data independently of the physical medium currently in vogue, and as far as is possible independently of the hardware platform that originally generated the data. The current intention is that data will be kept forever since the constantly falling cost per byte of storage implies that the marginal cost of keeping legacy data is a small fraction of the cost of storing the new data.

Currently the Atlas Data Store is based on an IBM 3494 tape robot with IBM's new technology tape drives, and a trio of RS/6000 workstations which maintain a disk cache of the most recently used data. The Atlas Data Store has a capacity of 20 TBytes within the 3494 robot. The concept of the Data Store is intended to scale to the volumes of data that can realistically be expected during the next five years.

Physically, access to the Atlas Data Store is through a network connection to the client machine, so that the Data Store can be connected easily and efficiently to any of the computers at the Atlas Centre, or even to computers at a remote site if that is the most convenient form of access. Logically, access to the Data Store is through the concept of a virtual tape, which shares some of the attributes of a physical tape; alternative user interfaces are under development. Client software to access the Atlas Data Store exists for most flavours of Unix, for Digital Open VMS and for PCs running DOS and Windows with suitable TCP/IP networking software.

The Atlas Data Store is available to all Research Councils' grant holders using the scientific computing services at the Atlas Centre, i.e. the Cray service, the Engineering and Physical Sciences Research Council Superscalar service, the Open VMS service and the HP CSF farm used by the Particle Physics and Astronomy Research Council. Anyone else interested in using the Atlas Data Store, either via a Research Council grant or as a commercial user, should contact Tim Pett (Marketing Manager).

For more information on this topic see http://www.cis.rl.ac.uk/services/dstore/index.html

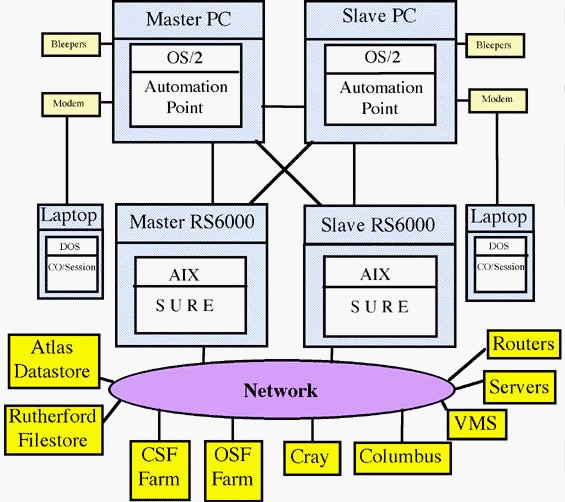

The Operations Group in the Computer and Information Systems Department (CISD) has developed and introduced a system that has allowed the operator shift system to end after many years. The Automatic Operations (AO) system now monitors our network systems and computers. According to rules established by the Operations Group, the AO system decides whether to call an operator to deal with an incident. Call-outs currently average two or three per week.

The central monitoring system is built around two closely coupled systems: the SURE system and an Automation package running on a PC.

The illustration shows the overall architecture. The SURE system pings the remote computers, collects information from them and shows their status on a display. It passes error messages and codes to the Automation package in the PC, which contains a rulebase to determine what action to take. In 'attended' mode the operator, who may be away from any monitoring displays at the time, will always be bleeped and can therefore attend to the problem without delay. In 'lights out' mode, only a high severity alarm invokes a call-out message to an operator, who can dial in from home for more information. Depending on the severity of the problem, the operator can fix the problem remotely, attend in person or call in support from systems staff.
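The decision logic described above can be sketched as a small rule function. This is an illustration only, not the actual rulebase of the Automation package: the three severity levels, the names `Mode`, `Severity` and `decide_action`, and the "log only" outcome for lower severity alarms in 'lights out' mode are all assumptions made for the example.

```python
from enum import Enum


class Mode(Enum):
    ATTENDED = "attended"
    LIGHTS_OUT = "lights out"


class Severity(Enum):
    # Hypothetical levels; the article only distinguishes "high severity".
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def decide_action(mode: Mode, severity: Severity) -> str:
    """Return the action for an alarm, following the two modes described above."""
    if mode is Mode.ATTENDED:
        # In attended mode the operator is always bleeped.
        return "bleep operator"
    if severity is Severity.HIGH:
        # In lights-out mode only a high severity alarm triggers a call-out.
        return "call out operator"
    # Assumption: lower severity alarms are simply recorded.
    return "log only"


print(decide_action(Mode.ATTENDED, Severity.LOW))
print(decide_action(Mode.LIGHTS_OUT, Severity.HIGH))
print(decide_action(Mode.LIGHTS_OUT, Severity.MEDIUM))
```

Keeping the rules in one function separates the monitoring side, which only reports alarms, from the policy on when to disturb an operator.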

For full text of this article see: http://www.cis.rl.ac.uk/publications/ATLAS/apr96/auto.html

Finite Element (FE) analysis in electromagnetics is a very demanding discipline with a number of challenges, including free-space regions and multi-material domains. The Magnetic Integrated Design and Analysis System (MIDAS) Consortium has developed a three-dimensional (3-D) modelling 'environment', specifically for electromagnetic design. This environment consists of a Graphical User Interface (GUI), a powerful geometric modeller, a STEP-based data import/export system and analysis modules for two- and three-dimensional modelling.

A two-dimensional subset of the tools, fully integrated with the 3-D environment, offers SMEs the opportunity to use FE analysis without the high training costs which previously inhibited such use.

MIDAS has proven effective in tests on a wide variety of electromagnetic problems and contributed to the development of future ISO standards. This success paves the way for future progress in Integrated Design Engineering, as well as providing a sound basis for short-term exploitation.

The ProcessBase Project, partially funded by the CEC under its ESPRIT III programme, addressed the data management and exchange requirements for process plant design, construction and operation. Rutherford Appleton Laboratory was involved in the project because of its expertise in data modelling, Express and standardisation activities.

The principal objectives of the work were the establishment of a neutral form for the exchange and management of technical data concerned with process plant, and validation of this exchange form by the development of interfaces to perform 'real world' exchanges of data in two types of process plant (power generation and chemical).

The approach was to develop data models using the information modelling language Express, together with software tools to process the Express code. Existing work on data models and software tools (in the STEP standard and other projects) was evaluated and used wherever possible in the project. This approach introduced a high degree of automation in the development of specific data exchange and management software.

Pilot implementations of exchange software were developed at two industrial sites, testing the data models and tools in two different process industries. The software demonstrated the exchange of plant information and the access of the catalogue of a plant equipment supplier through the WWW.

The success of these pilot implementations demonstrated that:

Additionally, the data models developed in the project formed a significant input to the ISO STEP project. This increased the overall European contribution to STEP and ensured the interests of European industry were properly represented in the international arena.