The upgrade to the CSF Farm (described in FLAGSHIP 35) has now been completed. Twenty new HP70001712 machines have been installed and fully integrated into the existing cluster. This upgrade more than triples the computing power of the farm. The original farm was modelled on the Central Simulation Facility (CSF) at CERN and was intended to run only CPU-intensive Monte-Carlo simulation. Its use has now been widened to include general analysis and more I/O-intensive jobs, which benefit from the proximity of the tape robots of the Atlas Data Store. Any UK particle physicist may register to use CSF.

A new batch job scheduler has also been installed. Jobs are now scheduled on a round-robin basis rather than first-in, first-out. Jobs are ordered in the input queue so that each experimental group receives a fair share of the running work, and each group's share is then divided amongst its members.

It is no longer antisocial to submit many jobs at once: jobs from different submitters are interleaved, and if you have no work running, your job will go to the top of the input queue. This lets users running small analysis jobs get good turnaround even when long queues of production work are waiting. Because the input queue is managed by the CSF Job Manager rather than by NQS, you should use the following command to find your job's position in the queue:

CSFqorder
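The exact ordering rules of the CSF Job Manager are not given here, so the following is only a minimal Python sketch of fair-share interleaving under assumed rules: repeatedly pick the group with the least work (running or already ordered) per unit of share, then the member of that group with the least work, and take that member's oldest waiting job. The function name `fair_share_order` and its arguments are hypothetical.

```python
from collections import defaultdict, deque


def fair_share_order(queued, running, shares):
    """Return queued job ids in a fair-share order (hypothetical sketch).

    queued:  list of (job_id, group, user) in submission order
    running: dict mapping (group, user) -> number of jobs already running
    shares:  dict mapping group -> relative share (2.0 means twice the share)
    """
    # Per-user FIFO of waiting jobs, grouped by experimental group.
    waiting = defaultdict(lambda: defaultdict(deque))
    for job_id, group, user in queued:
        waiting[group][user].append(job_id)

    # Start the counters from the work each group and user already has running.
    group_load = defaultdict(int)
    user_load = defaultdict(int)
    for (group, user), n in running.items():
        group_load[group] += n
        user_load[(group, user)] += n

    order = []
    while any(users for users in waiting.values()):
        # Group furthest below its share: lowest load per unit of share (name breaks ties).
        group = min((g for g, users in waiting.items() if users),
                    key=lambda g: (group_load[g] / shares.get(g, 1.0), g))
        users = waiting[group]
        # Within that group, the member with the least work running or already ordered.
        user = min(users, key=lambda u: (user_load[(group, u)], u))
        order.append(users[user].popleft())
        if not users[user]:
            del users[user]
        group_load[group] += 1
        user_load[(group, user)] += 1
    return order
```

For example, if one user already has three jobs running and submits three more, a single job from another group and a single job from a colleague with nothing running are both ordered ahead of the first user's waiting jobs, which is the interleaving behaviour described above.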

Further developments are planned on CSF over the next few months, so watch this space!

Atlas Cray Users met at the Atlas Centre, Rutherford Appleton Laboratory on Thursday 6 April 1995.

Following a welcome from the Chairman, Professor Catlow, Roger Evans gave a progress report showing that the standard performance indicators (machine availability, machine utilisation and job turnaround) reflected a continuing high level of service. Staff at the Atlas Centre were, however, becoming concerned that the load on the Cray Y-MP was approaching a critical level beyond which the perceived quality of service could suffer. The T3D service at Edinburgh seemed to be having a ratchet effect on the demand for Y-MP time: those getting good performance at Edinburgh were likely to need Y-MP resources for data analysis, while those not getting efficient use of the T3D wanted more Y-MP time to compensate!

A simple queuing model shows that beyond 90% loading the average queue length increases rapidly and turnaround suffers. In many weeks the loading on the Y-MP was over 90%, and it occasionally topped 100% because jobs that started in the previous week were accounted in the current week's utilisation figures!
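The report does not say which queuing model was used. A minimal sketch, assuming the textbook M/M/1 model (Poisson arrivals, exponentially distributed service times, a single server), shows the effect: the mean number of jobs waiting at utilisation ρ is ρ²/(1 − ρ), which is 0.5 at 50% load, 8.1 at 90%, about 18 at 95% and about 98 at 99%.

```python
def mm1_mean_queue_length(rho):
    """Mean number of jobs waiting (not yet running) in an M/M/1 queue at utilisation rho."""
    if not 0 <= rho < 1:
        raise ValueError("utilisation must be in [0, 1) for a stable queue")
    return rho * rho / (1.0 - rho)


# Tabulate the waiting-queue length as the load approaches 100%.
for rho in (0.5, 0.8, 0.9, 0.95, 0.99):
    print(f"load {rho:.0%}: mean queue length {mm1_mean_queue_length(rho):.1f}")
```

The Y-MP has several processors and a mixed job population, so this single-server model should be read only qualitatively: the 1/(1 − ρ) term is what makes queues grow sharply once loading passes about 90%.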

Growth of the filestore was continuing linearly, which inevitably increases the ratio of off-line to on-line files. Data Migration (dm) continues to cope well with the average load, but worst-case delays in file retrieval are very annoying to the users affected. As a result, many users have taken to storing files on the jtmp filesystem for periods well beyond the intended use of this temporary filesystem. Over the last few months jtmp has filled, on average, once a month, causing some disruption to batch work while free space was created.

Since it is not feasible to buy more disks to attach directly to the Y-MP, it is planned to alleviate these problems with a large (50-100 GBytes) filestore attached by FDDI to a small Unix machine. Small files (less than about 2 MBytes) will migrate to this disk area using the ftp protocol, and retrieval will be almost immediate since there is no wait for a tape to be set up. The change will be transparent to users, who should see only a much improved dm response. Part of the new filestore will be used as a second jtmp area through an NFS mount, but this will be more difficult to manage unless some users can be persuaded to be more altruistic in their behaviour.
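The size-based split described above can be sketched as a simple routing rule. This is only an illustration: the cut-off is given as "about 2 MBytes", so taking it as exactly 2 × 1024 × 1024 bytes is an assumption, and `migration_target` and `split_by_target` are hypothetical names, not part of dm.

```python
# Assumed cut-off: "about 2 MBytes" taken as exactly 2 MiB for illustration.
SMALL_FILE_LIMIT = 2 * 1024 * 1024


def migration_target(size_bytes):
    """Choose where a migrated file would go under the planned two-tier scheme (hypothetical)."""
    if size_bytes < 0:
        raise ValueError("file size cannot be negative")
    # Small files go to the FDDI-attached disk store (fast recall, no tape set-up);
    # larger files continue to migrate to tape.
    return "disk" if size_bytes < SMALL_FILE_LIMIT else "tape"


def split_by_target(sizes):
    """Count how many files of the given sizes would be recalled from disk versus tape."""
    counts = {"disk": 0, "tape": 0}
    for size in sizes:
        counts[migration_target(size)] += 1
    return counts
```

Because most files in a typical filestore are small, a rule like this can move the majority of recalls onto disk while the bulk of the data volume stays on tape.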

John Gordon described the recent trial of reducing operator cover to a single daytime shift, as is common at many other supercomputer sites. A single operator had remained in the building to observe the systems but had intervened only where there was a risk of a lengthy disruption to the Cray service.

The trial had been mostly successful but had revealed unexpected interdependencies and knock-on effects following some failures. A detailed review of the trial would establish the best balance between demonstrating reduced running costs and maintaining a high standard of service.

John went on to describe the new editors, shells and mail user agents that had recently been introduced. Elm and Pine are now available for mail handling, and the shells tcsh, bash and zsh have been installed. An EDT-like editor (ed) and an IBM XEDIT-like editor (the) are now available. MicroEMACS has been built and, subject to some problems with keyboard mappings from certain workstations, is ready for testing. EMACS itself is such a large executable that UNICOS regards it as a memory "hog" and swaps it to disk from time to time, giving poor interactive response; it is hoped that MicroEMACS will be much better in this respect.

With the demise of the IBM 3090 service later in 1995, changes are needed to the way that magnetic tapes are managed. To help prepare for these changes, tape access from VM will be withdrawn very soon. This should not affect Cray users except those who use CMS to copy tapes. The 3090 also currently manages tape security and access permissions; in future these will be managed through a new data store command that interfaces to all virtual tape properties. Some frequently used command options will be made available through a simpler interface; for example, one option will display a useful subset of information about a particular virtual tape. Access permissions will be a superset of those supported by TMS, and users should be prepared to define an owner for all virtual tapes in the near future.

These changes will be detailed in news files and on-line technical notes.

Manjit Boparai described the new philosophy of using the World Wide Web (WWW) as the preferred entry route for information about services at the Atlas Centre, and all those present indicated that they could access the WWW from their desks. There will be Web pages describing the Cray service, how to register and how to find documentation, together with FAQs (Frequently Asked Questions) and some scientific highlights. Users who have Web pages of their own describing their research are asked to contact User Support, who will arrange for suitable hot links to be inserted in our Web pages.

The Cray User Guide is being rewritten with the philosophy of being a much smaller document with pointers to other information and Cray's own on-line documentation.

Chris Plant described how the Atlas Centre has for a long time supported the Cray Unichem software which gives a graphical design and analysis interface on a local Silicon Graphics workstation and access to the Y-MP for major computation of molecular properties. This has been very popular with our chemistry users but there have been some who did not have the necessary Silicon Graphics machines locally.

Cray have now upgraded Unichem so that although it still only runs on Silicon Graphics hardware, it can be accessed across a network by any X windows capable machine. This has resulted in several of our users having access to the Indigo on Chris' desk as a trial service and we have now purchased a more powerful Indy to improve the responsiveness of this service. Any potential new Unichem users should contact Chris Plant.

As most users will be aware, two Departments at RAL have recently merged; this has resulted in a larger and more powerful graphics group, called the Visual Systems Group. Julian Gallop, the group leader, described recent developments in high performance visualization, such as the use of AVS to make an intelligent and flexible split of an application into a local part, which runs on the workstation on your desk, and a remote part, which needs to run on the Y-MP or other machines at RAL.

A new venture for the Visualization group was Virtual Reality, with the twin aims of improving the capabilities of scientific visualization and of helping design engineers with the construction and maintainability of complex systems. A Silicon Graphics Onyx machine had recently been delivered with the capability of driving either a stereo projection display or a head-mounted display. There was also a 3D mouse and the powerful DVS and dVISE software from the UK-based VR company Division. Any Cray users interested in a new angle on complex three-dimensional scalar or vector data were invited to contact Julian with a view to setting up a pilot project.

Following the distribution to all supercomputing users of a questionnaire designed to help formulate the future strategy in this area, Professor Catlow gave a short talk on the present position. Responsibility for preparing the strategy document lies with the High Performance Computing Group in EPSRC led by Jean Nunn-Price, advised by an Advisory Panel chaired by Professor Alistair MacFarlane. The development of an effective strategy was currently delayed following the withdrawal of the capital spend line from the 1995/96 forward look. A paper was currently in preparation for the Director General of the Research Councils on the future of HPC and development of the strategy would depend on the outcome of this paper.

Margaret Curtis described how the current resource management database resided on the IBM 3090 and how a new database was being developed to cope with an increasing variety of services, many of which were based on "vanilla" Unix without the UNICOS concept of an account.

In future, grants would normally have a single sub-project, and the setting up and management of sub-project allocations would be less flexible; existing sub-projects would be honoured wherever possible. The default resource control for CPU usage will simply be on the total allocation, with no weekly limits. This is in line with comments in the National Audit Office report, but clearly some users need tighter control on usage. Scripts will be provided for weekly or monthly control, but it will be the responsibility of users who need this facility to run the scripts themselves.

Because filespace management is not consistent across different machines, use of the filestore will be monitored in total (on-line and off-line). Users exceeding their limits will in the first instance be contacted by Resource Management; only then will more drastic action be taken.

The first stage of the build-up of the Visual Laboratory, described in the last issue of FLAGSHIP, is now complete with the delivery of the Silicon Graphics Onyx computer that will form the basis of the Virtual Reality Centre. Video projectors, allowing stereo large screen display of the output from the Onyx, have also been delivered.

This is one of the world's more powerful graphics systems and has rapidly established itself as the standard platform for the most demanding virtual reality work. The system at RAL currently consists of a standard Onyx system with Reality Engine 2 graphics and one Raster Manager subsystem. This is attached to a Multi-Channel Option, allowing up to six independent output streams from the graphics pipeline. In particular, this allows either interleaved stereo (viewed with synchronised-shutter glasses) or parallel stereo (viewed with a headset or via video projectors).

In addition to the basic hardware of the Onyx system, RAL has attached a Division VR4 headset, complete with a Polhemus position sensor and 3D mouse. Two LitePro 580 video projectors have been purchased which, with polarizing filters and glasses, will allow stereo viewing on an 8 ft by 6 ft screen.

As well as the standard Silicon Graphics Performer software, RAL has purchased Division's virtual reality software suite which, together with Performer, will assist in converting CAD models into a suitable form for the Onyx and in handling interaction between user and model in the virtual world.

In the next couple of months, Visual Systems Group will be familiarizing itself with the whole system and working on pilot projects to bring CAD models and the results of visualizations into the Virtual Reality system. Once the general method of working has become familiar, we will be glad to collaborate with users on projects where virtual reality can help them with their problems: contact Chris Osland for more information.

The EC Copernicus Programme has funded a project in hyperlinked multimedia.

The partners are:

The project is co-ordinated by RAL; local management and co-ordination in Central Europe are provided by the Masaryk University Institute of Computer Science, and the four software development and consultancy companies are organised with T-Soft and AMIS managing the work of MDS and ELAS respectively. The modelling and design work and the theoretical background are the responsibility of Masaryk University and RAL; the user requirement analysis, software development and testing are the responsibility of the commercial companies.

The objective is to provide an interoperation mechanism between autonomous, heterogeneous medical information systems utilising advanced techniques in heterogeneous distributed database technology coupled with advanced hyperlinked multimedia. Clearly, interoperation between heterogeneous autonomous systems is of great importance economically, and in the health care domain there are additional societal imperatives.

In the medical domain there are several well-known and widely used systems, each with its own DBMS (Database Management System), sometimes a well-known commercial DBMS and sometimes a home-grown one, and a rather generic database schema. These systems support some or all of the end-user requirement areas of administration (including payments/claims), patient observation/diagnosis (the patient record) and laboratory instrument results, including images (e.g. X-ray photographs), as well as treatment records. The general schema of one of the healthcare systems may be specialised for a particular hospital or healthcare centre. The information is characterised by aspects not handled well by conventional DBMSs: temporal information, uncertainty and incompleteness.

In the case of the Hypermedata project, the medical information systems are CareSys, an American/Australian system adapted by T-Soft to the Czech environment, and AMIS*H, a locally produced system from AMIS. For commercial and logistical reasons there is no hope either of converging these (and other) medical information systems into one standard system or of designing and building a completely new system to be used by all healthcare centres. This legacy therefore produces the requirement for heterogeneous interoperation of autonomous systems.

The requirement can be distilled into two major components:

Clearly, such an interchange mechanism requires a toolset to examine, browse, edit and analyse the interchange document schema and instances, together with tools to help interface this common interchange mechanism to specific healthcare systems. These tools assist the transformation between the Hypermedata exchange format (including schema and instances) and the schema (and therefore the data model and representation) of the particular medical information system. In general this involves extracting information for projected attributes (including their entity constraints and relation constraints such as cardinality) and providing the additional information needed as parametric input for constraints in the data loading or input/update program(s) of the particular medical information system. The overall effect of the data exchange mechanism, including the tools, is to reduce the interchange problem from n(n-1) conversions to 2n conversions.
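The saving from a common exchange format is simple arithmetic, sketched below (the function names are illustrative). With direct translation every ordered pair of distinct systems needs its own one-way converter, giving n(n-1); with a common format each system needs one converter into it and one out of it, giving 2n. The two counts are equal at n = 3, and n(n-1) > 2n exactly when n > 3, so the common format pays off once four or more systems must interoperate.

```python
def pairwise_converters(n: int) -> int:
    """One-way converters needed if every system translates directly to every other."""
    return n * (n - 1)


def exchange_format_converters(n: int) -> int:
    """Converters needed if every system maps to and from one common exchange format."""
    return 2 * n


# Compare the two approaches for small numbers of participating systems.
for n in range(2, 7):
    print(n, pairwise_converters(n), exchange_format_converters(n))
```

For the two systems in the initial prototype, direct translation would actually need fewer converters (2 versus 4); the benefit appears as further hospital systems join.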

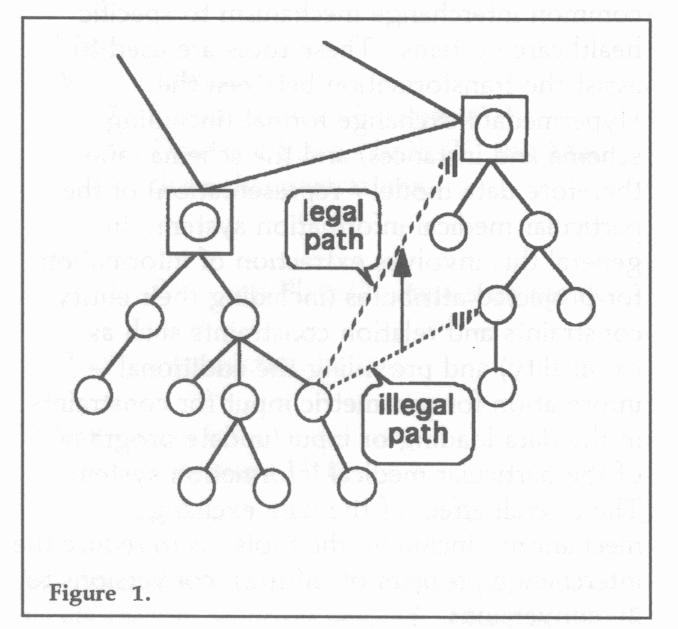

The graph theory foundations of the technique provide some insights into its use; in particular the ability to simulate behaviour depending on instance values is valuable for adjusting and tuning particular application interoperations by data exchange. See Figure 1.

The use of expressions in first order logic (with second order syntax) to express conditional arc traversal echoes work on constraint enforcement and data structuring in the deductive database environment. It also allows the possibility of intelligent mediation between the exchange information and the participating autonomous systems. The extent to which such a technique can be used will be investigated in the later stages of the project.
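Conditional arc traversal can be illustrated with a small sketch. The article expresses arc conditions in first order logic; here, purely as an assumption for illustration, each condition is a Python predicate over a record's instance values, and the schema nodes (`patient`, `lab_result`, `image` and so on) are hypothetical. The traversal follows an arc only when its guard holds, so the same schema yields different reachable structures for different instances.

```python
from typing import Any, Callable, Dict, List, Tuple

Instance = Dict[str, Any]
Condition = Callable[[Instance], bool]

# Hypothetical exchange schema: node -> list of (target node, guard on instance values).
SCHEMA: Dict[str, List[Tuple[str, Condition]]] = {
    "patient": [
        ("admin", lambda r: True),
        ("observation", lambda r: True),
        ("lab_result", lambda r: r.get("lab_tests", 0) > 0),
    ],
    "lab_result": [("image", lambda r: r.get("has_xray", False))],
    "admin": [],
    "observation": [],
    "image": [],
}


def reachable(schema, start, record):
    """Depth-first traversal that follows an arc only when its guard holds for this record."""
    seen: List[str] = []
    stack = [start]
    while stack:
        node = stack.pop()
        if node in seen:
            continue
        seen.append(node)
        for target, guard in schema.get(node, []):
            if guard(record):
                stack.append(target)
    return seen
```

For a record with laboratory tests but no X-ray, the traversal reaches `lab_result` but not `image`; simulating traversals like this over sample instances is one way to see how an interoperation will behave before tuning it.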

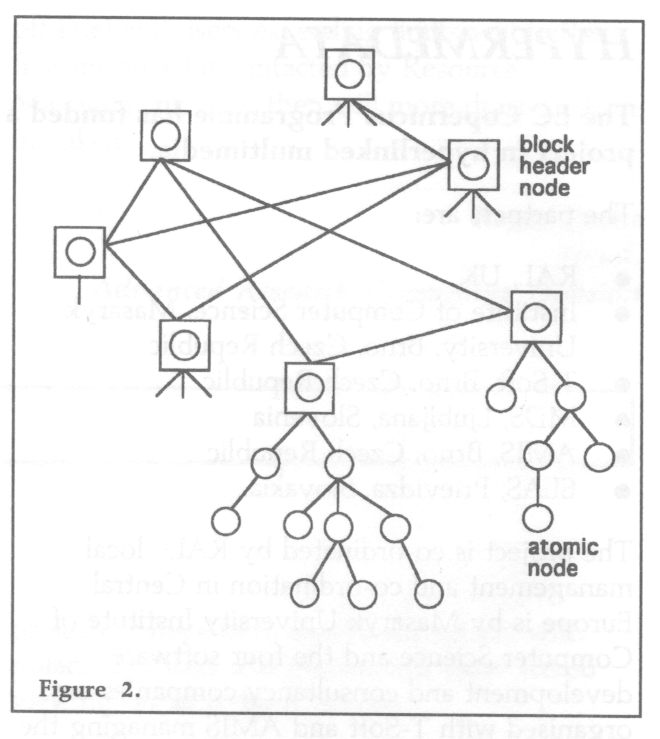

Similarly, the restriction on hyperlinks from an atomic node within a block to a block header node reflects an object-oriented philosophy: each block is encapsulated by the block header node above it (including constraints on the arc-path arriving at that block header node).

This lays the foundations for interoperating with yet-to-be-developed commercial medical information systems that utilise CORBA technology, or deductive object-oriented techniques. See Figure 2.
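The encapsulation rule can be checked mechanically. The sketch below is hypothetical (the `Node` structure and `invalid_links` are not part of Hypermedata): it flags any hyperlink from an atomic node whose target is not a block header node, so links may enter a block only through its header.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class Node:
    name: str
    is_header: bool  # True for a block header node, False for an atomic node
    links: List[str] = field(default_factory=list)  # hyperlink targets, by node name


def invalid_links(nodes: Dict[str, Node]) -> List[Tuple[str, str]]:
    """Return (source, target) hyperlinks from atomic nodes that do not point at a block header."""
    bad = []
    for node in nodes.values():
        if node.is_header:
            continue  # the rule as stated constrains hyperlinks from atomic nodes only
        for target in node.links:
            tgt = nodes.get(target)
            # Unknown targets and links into another block's atomic nodes both break encapsulation.
            if tgt is None or not tgt.is_header:
                bad.append((node.name, target))
    return bad
```

A link from an atomic observation note to a laboratory block's header passes; a link straight to an atomic field inside that block is reported, much as an object's internals are reachable only through its interface.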

We believe we have established an interesting method for data exchange which is general, but which is demonstrated in the medical domain, where there are particular difficulties of representation and expressivity. The method is practical yet grounded firmly in graph theory. The prototype system, demonstrating interoperability between CareSys and AMIS*H, each at one hospital with its own schema, is scheduled for June 1996. Further refinement, proof of generality across several hospital sites and prototypes for other application domains will lead to a commercial product in December 1997.