Much of modern computer aided engineering is concerned with design. A major part of the design process is concerned with setting up models of real world objects and simulating how they behave. This ensures, for example, that structures can be designed to withstand earthquakes or high winds, or that fuel is burned more efficiently in a car engine.

Much design work relies on exploring alternatives by trial and error, using existing knowledge and past experience to home in on acceptable solutions. In the Computational Modelling Division (CMD) the emphasis is on the development of new techniques for modelling engineering systems, the production of libraries of useful software, the implementation of software on parallel processing computers and the presentation of multi-dimensional results. Specific application areas are particularly addressed: process and device modelling of semiconductor devices ('silicon chips'), computational fluid dynamics and electromagnetics.

The CMD consists of five groups:

This Group is primarily concerned with the development of new computational techniques and the implementation of these into software packages and libraries. A library of software using the Finite Element method was started in 1978 and is now distributed by the Numerical Algorithms Group (NAG) in Oxford. Over the years, new releases of the library have been produced which broaden its applicability.

The Group has a special interest in device and process modelling of semiconductor devices. The work has been largely collaborative with industry and university departments, both in Britain and the rest of Europe. The funding came from both the Department of Trade and Industry's Alvey Programme and the Commission of the European Community's ESPRIT Programme. A 2-dimensional device simulator was started some years ago and the software package, TAPDANCE, contains the current state of this work. A more demanding 3D simulator is being developed as part of the ESPRIT-funded EVEREST project.

The Rutherford Appleton Laboratory (RAL) has a long interest in the development of computational methods and software relevant to magnet design and similar activities. A range of application software packages has been developed and these are now marketed by a software house, Vector Fields, based in Oxford.

The major thrust of this Group's activities now is the development of these programs for new computing systems, in particular parallel and vector processors. The existing software, with suitable development and tuning, has been mounted on a wide range of computers from the CRAY X-MP to more dedicated systems such as the Stellar, the IBM 6150/transputer system and the Ardent TITAN. The emphasis is on the development of efficient new methods and software for well defined existing problems.

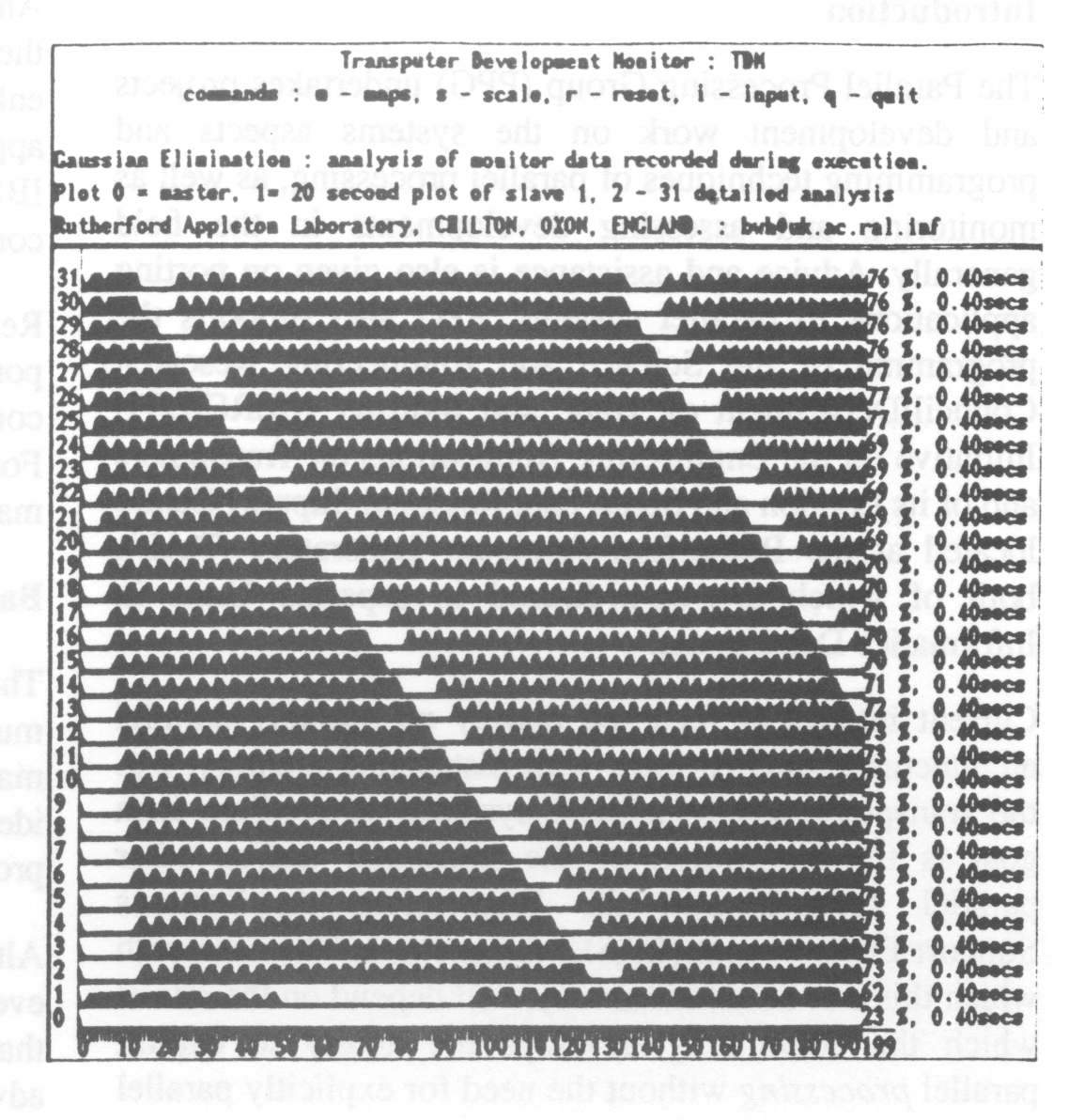

Achieving realistic simulations in real time and at economic cost means that large problems will need to be tackled using parallel processing systems. The Group has used workstations such as the IBM 6150 with attached transputer systems both to develop the necessary software infrastructure and then to port a number of engineering applications to this hardware configuration. Significant performance improvements have been achieved.

The Group also provides technical support to the local London and South East Regional Transputer Support Centre.

The need to provide better ways of transforming computational results into images that can be observed is becoming more apparent as the advances in high speed computation allow larger and more complex problems to be tackled.

The Group evaluates the usefulness of superworkstations such as the Stellar in this area and works closely with the applications of interest to the Division in devising new ways of visualising complex data.

The Group has a research interest in interpretation of images and is producing an image processing library with NAG.

Computational Fluid Dynamics is an area of interest to all the Committees of the Engineering Board of Science and Engineering Research Council (SERC). The Group has set up a Community Club to provide a forum for researchers to share common experience, to exchange software and test data, and to propose new activities. Initially the focus will be on better methods for visualisation of results and improvements in numerical methods and accuracy.

The Mathematical Software Group (MSG) is a small group of mathematicians, physicists and engineers engaged in research and development programmes that will result in the production of software packages and libraries solving computational problems in mathematical physics.

The main purpose of the MSG is to promote the use of up to date mathematical software and techniques within the engineering community and to provide a focus for computational techniques. The MSG actively encourages research workers to make use of the latest numerical and software developments by providing numerical software, by giving advice on computational techniques, by organising seminars and courses on numerical software and by being actively involved in collaborative research and development projects.

These software products are made available to the UK research community and consequently the MSG provides a focus within the research community for a number of application areas.

The use of numerical techniques within the engineering community has grown with the increasing availability of computing hardware and with the complexity of the problems requiring solution.

The need for good quality mathematical software and support has been recognised by the Computing Facilities Committee (CFC) of the Science and Engineering Research Council's (SERC) Engineering Board. As part of the Engineering Applications Support Environment (EASE) the MSG is providing the community with implementations of robust mathematical software and suitable training courses to enable research workers to use the software effectively. Software developments will centre around finite element and finite volume techniques and the related techniques in linear and non-linear algebra. The Numerical Algorithms Group/SERC Finite Element Library is one important example of this support to the community.

As the use of parallel computing systems becomes more widespread, exploiting these architectures in computational software is becoming very important. The MSG has already been working in this area and will continue to provide advice and software developments for both vector and concurrent systems.

The MSG draws on a long history in computational methods applied to a number of application areas: electromagnetic analysis, semiconductor device and process simulation and computational fluid dynamics. This experience has been complemented by involvement in a number of ESPRIT, Alvey and SERC funded research projects. These included EVEREST (three-dimensional device simulation) and ACCORD (the application of vector and concurrent computing to computer integrated manufacture (CIM)).

Over the past few years the MSG has developed a number of software libraries and applications packages that are available to the community. This software has been the result of SERC development projects or external collaborative research and development projects funded under programmes such as ESPRIT.

The MSG is always seeking to develop its contacts with both the UK and European academic community. This is being achieved by informal and formal collaborations. The Group will also be developing user courses and technical seminars, under the EASE Education and Awareness Programme, to promote the use of good mathematical software.

Whilst continuing the development and provision of the mathematical software needed by the community the Group will continue developing links with institutions in which these techniques are being used to solve engineering problems.

The Group hopes to be involved in new UK and EC funded research and development projects on the use of computational techniques in science and engineering and is always actively seeking new collaborations.

The Visualisation Group pursues investigation, research and development into the handling of visual and spatial information. Many techniques are involved including computer graphics, image processing and pattern recognition.

"Visualization in Scientific Computing" is a term that was made widely known in a report published by ACM Siggraph in 1987. The term and the field that it describes arise from the complex data that present day computers are called upon to handle.

Such data may originate from external sources such as a camera in a factory inspection process, a sensor on a satellite or a scanner in medical applications or on vehicles.

The data may also result from a calculation, often on a high-performance computer. The results would be too voluminous or complex to understand by conventional means. For example, the analysis methods in fluid dynamics are increasing the sophistication of the problems they can solve and the refinement of the problem meshes.

For the human user, information needs to be extracted in some way and visual methods are the key to this, especially when the data itself has some spatial content. This is visualisation in the sense that computer techniques are helping the human user to visualise the data - "call up distinct mental picture of thing imagined" (Concise Oxford Dictionary). A number of skills and techniques are employed. The data often needs reducing, reorganising, projecting and refining; image processing techniques are often useful here. Human factors need to guide how the information is presented to the user. Computer graphics techniques are needed to produce the display and handle the interaction. Different applications and different funding levels affect the methods used and there needs to be practical experience of these choices:

In other circumstances the data is not immediately presented to a human viewer, but is analysed using pattern recognition techniques in an attempt to automatically extract further meaning. Although data emerging from a calculation could undergo this analysis, this is particularly relevant when the data has come from an external source. This is often in an attempt to automate a process that relies on visual input. Examples are visual inspection in manufacturing processes, real-time control of aircraft based on visual input and analysis of information derived from a medical scan or a scientific experiment.

Among its responsibilities the Group aims:

The Parallel Processing Group (PPG) undertakes projects and development work on the systems aspects and programming techniques of parallel processing, as well as monitoring and assessing developments in the field generally. Advice and assistance are also given on porting applications to parallel systems. PPG also supports the programme of the Science and Engineering Research Council/Department of Trade and Industry (SERC/DTI) Initiative on the Engineering Applications on Transputers and of its London and South East Regional Support Centre located at the Rutherford Appleton Laboratory (RAL), both of which are co-ordinated in separate Units in Informatics Department.

Current interest is focussed mainly on scalably parallel architectures such as ones with distributed memory, e.g. the transputer and hypercube systems. A medium-term goal is the integration of the transputer and/or other parallel systems into the Engineering Applications Support Environment (EASE) programme. The ease with which this can be achieved may well depend on the rate at which the community develops the ability to exploit parallel processing without the need for explicitly parallel programming. The long-term goal is to provide an effective environment for developing applications on and for parallel systems.

Part of the Group's work centres on the porting of applications, with particular aims both to gain practical experience of the most useful parallelisation techniques, and to consolidate an understanding of user needs for an effective applications environment for parallel systems. This work is typically undertaken jointly with an applications specialist, e.g. with other groups internally, or via Transputer Centre contacts, or on consultancy to an external applications party.

An IBM Joint Study has provided particular stimulus for the applications work. This study has concerned enhancing workstations with transputers, then porting applications so as to exploit the combination. To date the IBM 6150 workstation has been used, and the work will continue on the new IBM RS/6000 Powerstation range.

Research activities include: migration strategies for porting existing software; development of techniques and compilers for detecting and extracting parallelism from Fortran programs; and the issues associated with providing machine-independent parallel software.

The benefits of parallel processing are almost self-evident: multiple processors operating in parallel can perform many jobs faster than a single processor alone, and in the ideal case with a speedup equal to the number of processors employed.
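The ideal case can be made concrete with the usual definitions of speedup and parallel efficiency. The sketch below is illustrative only: the function names and the timings are assumptions chosen for the example, not measurements from the Group's work.

```python
def speedup(t_serial, t_parallel):
    """Ratio of single-processor run time to parallel run time."""
    return t_serial / t_parallel


def efficiency(t_serial, t_parallel, n_processors):
    """Speedup per processor; 1.0 corresponds to the ideal case."""
    return speedup(t_serial, t_parallel) / n_processors


# Hypothetical timings (seconds) for a job run on 1 and on 4 processors.
ideal = speedup(100.0, 25.0)                 # speedup equals processor count
realistic = efficiency(100.0, 40.0, 4)       # overheads reduce the efficiency
print(ideal, realistic)
```

An efficiency below 1.0 indicates that communication or other overheads have absorbed part of the potential gain.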

Although parallelism has been studied for decades, indeed even in Babbage's original designs, it is only in the 1980s, with the advent of the microprocessor, that parallel systems have become affordable. Systems built from many cheap microprocessors operating in parallel now offer an order of magnitude better price/performance than conventional uniprocessor systems.

There are now many such systems available commercially, differing widely in their architecture. Because of their novelty, and variety, there is as yet a paucity of software for them.

In spite of this, predictions have been made that nearly all future systems will be parallel ones, perhaps with up to 1000 processors on the desktop by the turn of the century. The successful exploitation of the potential power available, across systems of varying physical architecture, sets the challenge and context for the Group's work.

The transputer is a super-microcomputer on a single silicon chip, introduced in 1985. It is distinguished by having four communication links on-chip, providing a basic building block for parallel systems in which the interconnection of the processors may be chosen to suit the application.

The Group's activities began with the launch of the SERC/DTI Transputer Initiative (TI) in 1987 and were incorporated into the Computational Modelling Division in 1988. Current activities are:

It is expected that the Group's work will continue broadly along the present lines, but with increased emphasis on techniques and methods, such as virtual parallel architectures, that are applicable across a range of parallel systems, and on the steps needed to bring about a mature parallel programming environment.

The Engineering Applications Group is concerned with the exploitation of new computer architectures in the writing of software for engineering design and analysis. The hardware includes supercomputers such as the CRAY X-MP, supermini workstations like the STARDENT 2000 and TITAN series, and parallel machines, for example the AMT DAP and transputer arrays. The work is geared to the EASE (Engineering Applications Support Environment) programme. There are two aspects to this: first, assessing the new hardware so that university research groups may have good advice on the best configuration for their project; second, developing packages for these machines so that efficient engineering software will be available.

Rutherford Appleton Laboratory has had a requirement for computational modelling of engineering problems since the time when beam handling magnets for high energy physics experiments were required to be analysed. From this requirement grew the Computing Applications Group, which in time provided finite element analysis tools for the academic research community under the old Interactive Computing Facility. This expertise is now being used as a component of EASE.

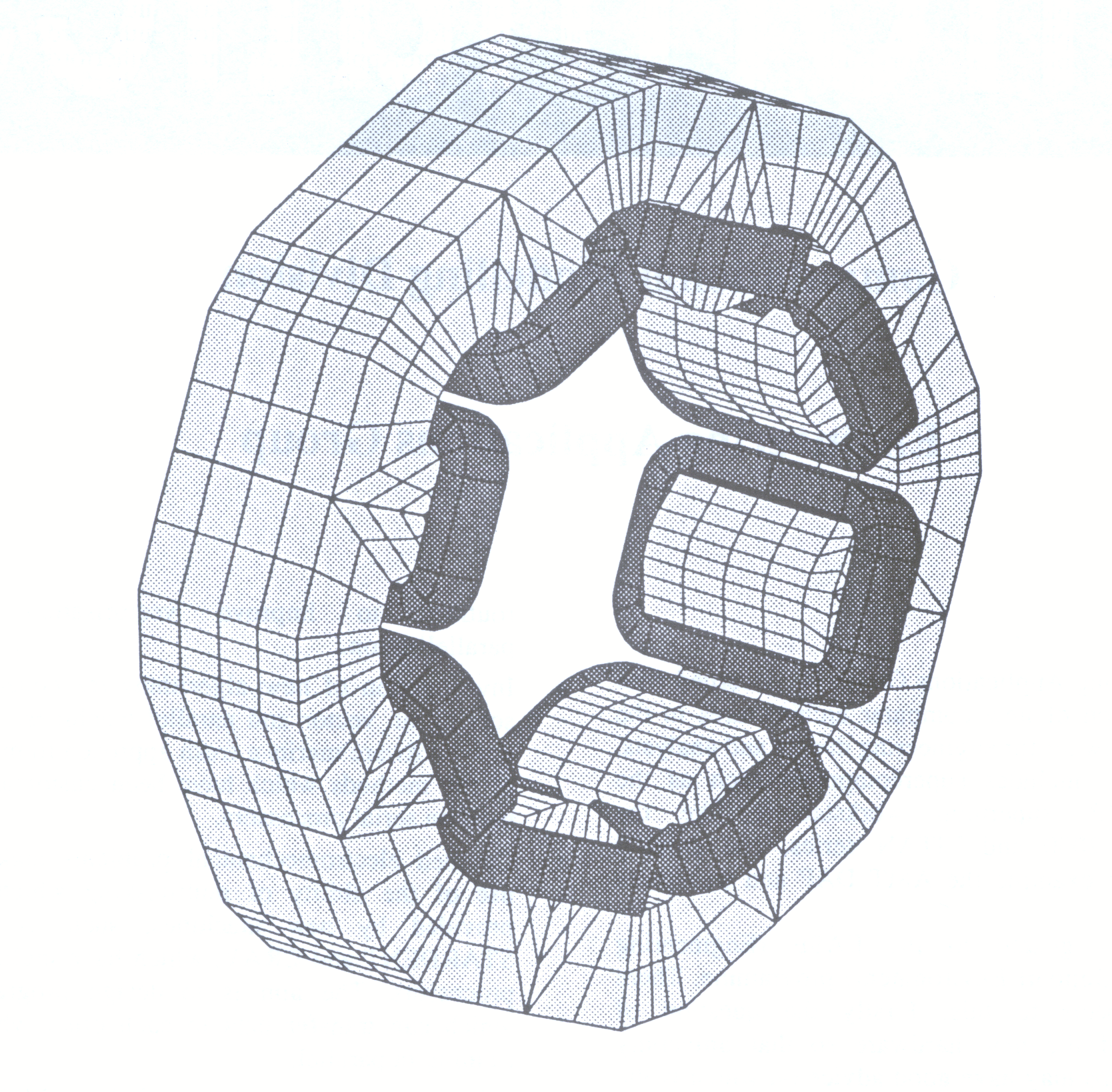

The picture shows an octupole magnet which is required as a component in a particle accelerator. This has been modelled using the TOSCA finite element package on the STELLAR GS2000 supercomputing workstation. Because of the symmetry only one thirty-second of the magnet needed to be represented in the analysis (a 22.5 degree slice, with half the thickness). However, even that involved the solution of 22800 equations, which required about one hour on the GS2000 and would have taken about 15 hours on the SUN3.
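The figures quoted above can be checked with a little arithmetic. The sketch below is only an illustration; the function name is hypothetical, and the inputs are the slice angle, the half thickness and the two run times given in the text.

```python
def modelled_fraction(slice_angle_deg, thickness_fraction):
    """Fraction of the full magnet that has to be represented."""
    return (slice_angle_deg / 360.0) * thickness_fraction


# A 22.5 degree slice at half thickness, as stated for the octupole model.
fraction = modelled_fraction(22.5, 0.5)
reduction = 1.0 / fraction        # the model is this many times smaller

# Quoted run times: about 15 hours on the SUN3, about 1 hour on the GS2000.
time_ratio = 15.0 / 1.0

print(fraction, reduction, time_ratio)
```

The 22.5 degree slice is one sixteenth of the full circle, and halving the thickness gives the one thirty-second quoted.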

Computational Fluid Dynamics (CFD) refers to the analysis, using computer techniques, of fluid flow, and related phenomena such as heat transfer, mixing and chemical reactions. Its current and potential range of applications is very wide, including:

CFD has for many years been a vital element in the aerodynamic design of aircraft and gas turbine engines, and there is rapidly increasing use of CFD in designing power plant, and combustion chambers of gas turbines and reciprocating engines. Research supported by the Science and Engineering Research Council (SERC) includes use of CFD as a technique for analysis in the majority of the application areas listed. It includes related experimental work to produce basic data for the physical processes being modelled and for validating the predictions of CFD codes. There is also work on the mathematical techniques for modelling fluid flows, and on the numerical techniques for solving flow equations.

The ultimate aim of CFD research is to provide a capability comparable with that provided by stress analysis codes, which have become standard tools for computer aided design. In the coming decade, CFD will be a key to design efficiency and competitiveness in a number of industries, including aerospace, road vehicles, marine technology and process engineering.

SERC has recently carried out a review of its activities in CFD. An Advisory Group produced a report in Summer 1988, and its recommendations were agreed at a Workshop in January 1989. In particular the Electromechanical Engineering Committee intends to appoint a Computing Coordinator for CFD.

The CFD programme in Informatics Department consists of two parts:

RAL will provide back-up support to the Coordinator to enable the tasks recommended in the report to be carried out. The support will be both administrative and technical, ranging from the provision and maintenance of an index of CFD software available at UK academic sites, to the use of tapes of computational data generated by direct simulations of turbulent flows on the Cray computers at the NASA Ames Laboratory in the USA.

RAL is setting up a Community Club in CFD to provide a forum for researchers to share common experience, to propose new activities within EASE, to exchange software and test data, to disseminate information to the CFD community, etc.

A major thrust of the technical programme is to collaborate with developers and users of CFD systems to extend the capability of their software, and to ensure that it can be easily transferred between different computing systems approved by EASE. This is especially true of the use of supercomputing workstations. There will be a focus on better techniques for visualisation of results, and a contract will be placed in a Higher Education Institute in July 1990 on use of advanced visualisation techniques in CFD.

Further activities will be carried out with the cooperation of the Community Club. It is expected that these will include:

Specification and production of well-defined interfaces between Pre- and Post-processing software and analysis software.

Development and maintenance of a range of benchmark problems.

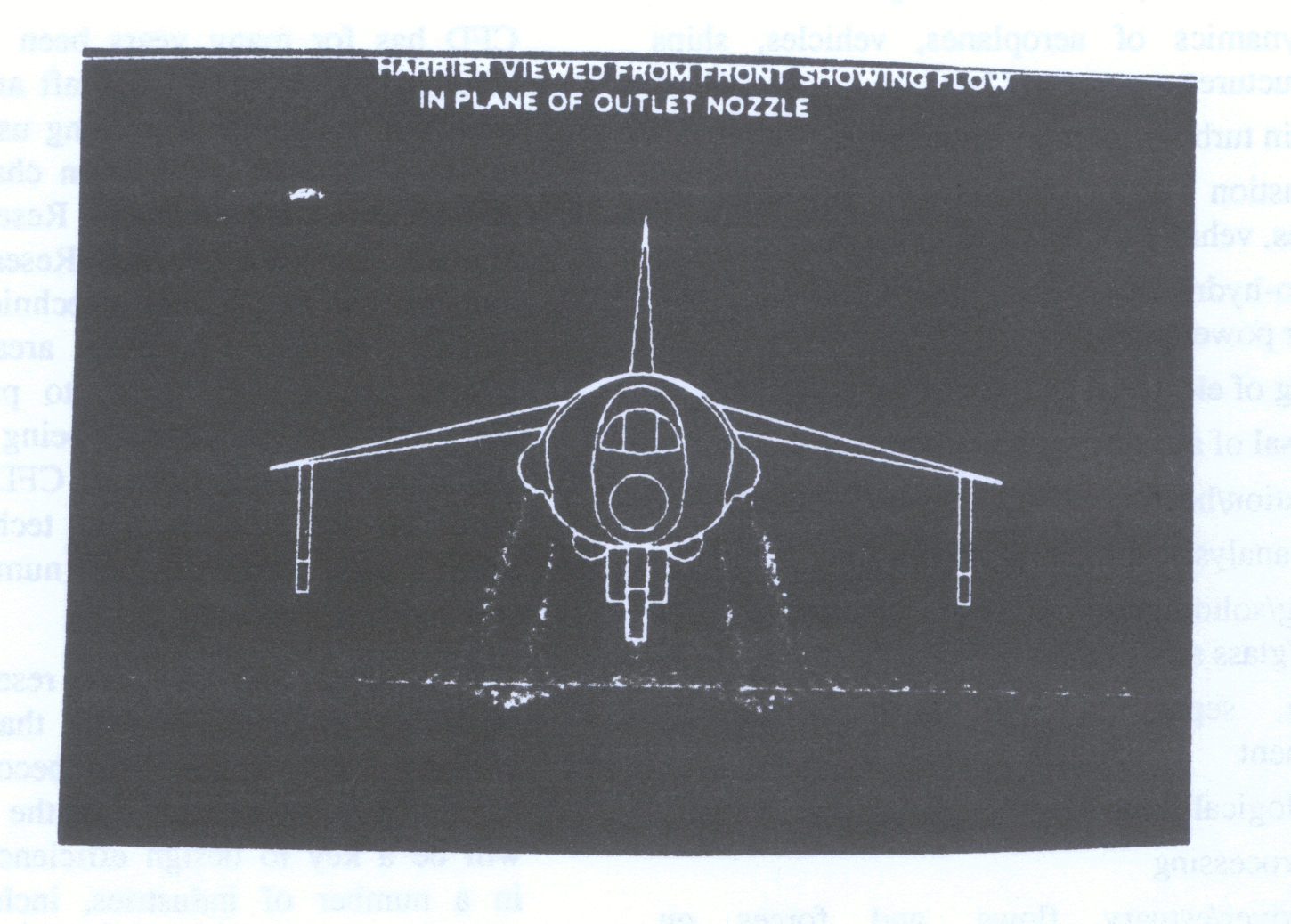

The evaluation of Hot Gas Recirculation in V/STOL aircraft, using PHOENICS, identifies design modifications which will reduce severe thrust losses and the risk of compressor stall.

Workstations have been widely used in the past to solve 2D and to some extent 3D engineering problems. However workstations in wide use have insufficient power to solve demanding 3D problems and display their solutions, without having to ship the analysis off to a remote mainframe. What has been missing is the equipment to interactively explore a complex solution. What is more, interactively exploring a space of plausible solutions has been even more difficult.

Within the last year, new workstations have become available, which increase both the computational and graphics power available to the user, but even more importantly absorb these increases into an integrated architecture so that there are no 'thin wire' effects or bottlenecks internally.

Systems have become available from the start-up companies Stellar and Ardent, which then combined to form Stardent, and comparable systems have become available from Hewlett Packard (Apollo Division) and Silicon Graphics.

A Stardent ST2000 has been installed at Rutherford Appleton Laboratory (RAL) for three purposes: to assess this class of workstation on demanding problems for the Engineering Applications Support Environment (EASE); to provide a facility for suitable internal and external projects; and to provide an effective vehicle for the development and installation of visualisation software. (When it was installed, Stellar was a separate company and the system is often referred to colloquially as the "Stellar".)

The details of the RAL ST2000's processor, memory and peripherals are as follows:

The details of the ST2000's graphics hardware:

The degree to which the system is operated as an integrated whole is illustrated by the internal data paths which can operate at 320 MBytes/sec.

The ST2000 is connected to the Informatics Department's Ethernet system.

The ST2000 runs:

For graphics, the ST2000 has the following software:

A major part of the work is to assess for EASE the effectiveness of this class of workstation and the ST2000 in particular for engineering research. Analytic tests can be carried out on the computational and graphics power, but demanding applications also need to be mounted to assess how effective the ST2000 is as an integrated superworkstation.

The ST2000 will be used to host the development and installation of visualisation software for EASE.

The ST2000 is available for suitable internal and external projects that would benefit from its particular power and can provide feedback on its use.

The Image Processing Algorithms Library (IPAL) has been developed jointly by the Rutherford Appleton Laboratory (RAL) and the Numerical Algorithms Group Ltd (NAG), Oxford with support from the Alvey programme. (Alvey Project No. MMI/127.)

IPAL aims to be a portable and well-documented library incorporating the best available algorithms to cover the main subject areas of image processing. Alternative versions of the library have been developed in Fortran and C. IPAL will provide an accessible and inexpensive resource for research and teaching in the many application areas and disciplines where image processing techniques are used.

It conforms strictly to language and graphics standards and will therefore be usable across the widest possible range of computing equipment. (Although C is not yet covered by an ISO standard, the deliberations of X3J11 leading to an ANSI standard have been watched with interest).

The library should remove the need for recoding standard algorithms which often confronts a researcher who wishes to apply them in a different context. IPAL will be distributed and fully supported by NAG.

The idea behind the IPAL library was that, in common with other NAG libraries, it should be developed on a collaborative basis to include software provided by a number of contributors, from outside the project, as well as material originated by the project partners. The integration of contributed code into the library is the responsibility of the partners.

An example of this integration is the use of the utilities to set and recover information concerning the area to be processed. Another example is the use of standard routines to report errors. The partners provided the utilities which form the first chapter of the Fortran code. The utilities provide the infrastructure which allows contributions to be accepted, and maintained, in the library.

The library will therefore provide a facility by which software generated in the course of a particular project can be preserved and propagated for future re-use.

Collaborative software libraries have gained widespread support and have been recognised as a significant contribution to the technical infrastructure of other disciplines, but nothing of this kind has so far existed in image processing.

The library provides a comprehensive set of algorithms with emphasis particularly on the standard techniques of low-level image processing which underlie many different applications systems, and provide the starting point for higher-level knowledge-based or model-based image interpretation and recognition techniques.

The main chapter headings of the library are:

The two versions of the library each include a similar but not identical range of algorithms.

The C library makes full use of the facilities available in the C language for processing structured data. This gives it more flexibility since the images are no longer restricted to rectangular arrays and the area to be processed can be specified as a more general shape. Operations which are concerned with the shapes of objects fit naturally into the C language arm of the library.

The libraries are mainly concerned with algorithms to process images rather than reading in or displaying images. It is assumed that potential users will in general have either their own pool of images or image grabbing hardware. However some images will be distributed with the libraries for test purposes and simple routines to read or write images in a portable format are provided.

The library was developed under a three year Alvey Project, and test versions of both the Fortran and the C code have been released to selected sites.

It is expected that a fully tested and documented product will be released by NAG during 1990.

We expect that in future the library will be further expanded and revised to maintain continuing coverage of the subject.

The Visualisation Group in Computational Modelling Division is involved in two collaborative projects in computer vision with academic and industrial partners.

Computer vision is concerned with the automatic interpretation of natural or artificially contrived scenes within a wide range of application domains. These include robot-vision, automatic inspection in industrial environments, medical image interpretation and navigation for land-based or airborne autonomous vehicles.

The actual complexity of the recognition task varies greatly. One of the least complex might involve locating 2-D man-made objects in an environment where factors such as object depth and scene illumination are tightly controlled. At the other extreme, the location of 3-D objects in outdoor scenes presents a situation where few factors can be controlled.

It is problems of the more complex type that are being addressed in the Rutherford Appleton Laboratory (RAL) programme. For the past five years RAL has been involved in a research programme into the development of methods for recognition of objects in open world situations where the scenes to be interpreted have very diverse appearances. This work has been conducted as part of two collaborative projects. The first, 'Object Identification from 2-D Images', was concerned with bringing enhanced image processing, pattern recognition and artificial intelligence capabilities to bear on the design of effective vision systems for outdoor scenes. The recognition task was that of locating vehicles in outdoor scenes. Figure 1 shows the result of using colour information to interpret a typical image in terms of meaningful objects.

The second project, 'Vision by Associative Reasoning', has just commenced. It aims to apply a variety of approaches to the visual guidance of airborne vehicles. The research is concerned with producing better methods for interpreting uncertain or incomplete image data rapidly and reliably. In so doing the project will concentrate on developing several parallel and distributed model matching techniques for vision systems. The best known of these are neural networks, which try to imitate the mainly parallel information processing in the brain. In addition, a variety of other methods based on handling uncertainty and probabilistic reasoning will be considered.

In both of these projects RAL's contribution has been in the development of a class of scene interpretation methods referred to as relaxation algorithms. The basic aim of these algorithms is to combine uncertain or incomplete image data, using prior expectations about the structure of a scene, to produce a consistent interpretation. This type of computational process is common to pattern recognition, neural network and artificial intelligence approaches to vision. The appeal lies in the distributed nature of the algorithms, which allows them to be computed in parallel.

The basic image entities are each assigned a processing element. High level knowledge about scene structure is converted into constraining relationships between processing elements. Each processing element is then equipped with the set of constraints relating itself to its immediate neighbours. With this distributed representation of the high-level knowledge, each processing element can adjust itself in parallel to achieve a consistent interpretation of a scene.
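As an illustration of this scheme, the sketch below implements the classic probabilistic relaxation labelling update found in the image processing literature. It is not the RAL formulation, which the text does not specify; the function names, the two-label compatibility table and the five-element chain are all assumptions made for the example. Each element holds a confidence for every label, and a synchronous step lets every element adjust itself using only its immediate neighbours.

```python
def relaxation_step(probs, neighbours, compat):
    """One synchronous update of every processing element.

    probs[i][a]    confidence that element i carries label a
    neighbours[i]  indices of the elements adjacent to element i
    compat[a][b]   compatibility in [-1, 1] of label a with a neighbour's label b
    """
    n_labels = len(compat)
    updated = []
    for i, p_i in enumerate(probs):
        # Average support each label receives from the neighbouring elements.
        support = []
        for a in range(n_labels):
            total = sum(compat[a][b] * probs[j][b]
                        for j in neighbours[i] for b in range(n_labels))
            support.append(total / len(neighbours[i]))
        # Reinforce supported labels, weaken contradicted ones, renormalise.
        raw = [p_i[a] * (1.0 + support[a]) for a in range(n_labels)]
        norm = sum(raw)
        updated.append([r / norm for r in raw] if norm > 0.0 else list(p_i))
    return updated


def best_labels(probs):
    """Most confident label for each element."""
    return [max(range(len(p)), key=lambda a: p[a]) for p in probs]


# Hypothetical chain of five picture elements; label 1 means "on a boundary".
# Element 2 is a noisy measurement that initially favours label 0.
probs = [[0.1, 0.9], [0.2, 0.8], [0.6, 0.4], [0.1, 0.9], [0.2, 0.8]]
neighbours = [[1], [0, 2], [1, 3], [2, 4], [3]]
compat = [[1.0, -1.0], [-1.0, 1.0]]   # neighbours prefer to agree (continuity)

initial = best_labels(probs)
for _ in range(10):
    probs = relaxation_step(probs, neighbours, compat)
final = best_labels(probs)
print(initial, final)
```

Because each element reads only its neighbours' previous values, the inner loop over elements could in principle be distributed with one element per processor.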

A major research achievement to date has involved formulating relaxation processes in a way that allows them to make use of more powerful constraints. A concrete example of using this type of improved processing is provided by boundary-finding in outdoor scenes. The high level model for legal boundaries is that they must be continuous and not exhibit noisy features such as rapid changes in direction. This behaviour is easily encapsulated as local connectivity of the low-level picture elements. Figure 2 shows the result of applying relaxational processing to the scene shown in Figure 1.

The direction of research at RAL involves embedding the relaxational processing into a neural network. It is hoped that this will produce symbiotic benefits to both areas. Relaxation processing gains from the learning capacity of neural networks. Neural networks will gain from the ability of relaxation processes to represent and distribute high-level constraints.

The Finite Element Library (FELIB) is a program subroutine library for the numerical solution of partial differential equations using the finite element method. It has been designed to meet the needs of the algorithm developer and is a set of software tools and not a package addressing one particular area. As a result the library is very flexible and enables its users to apply the finite element technique to any new research area in mathematical physics where packages do not exist, e.g. in free-surface flows and non-Newtonian mixing. Salient features are thus:

The first library was developed at the University of Manchester and has subsequently been rationalised and extended at the Rutherford Appleton Laboratory of the Science and Engineering Research Council (SERC), where it is still under continuous development. Because of its very wide range of application the software has been released through the Numerical Algorithms Group Ltd. for use by industrial and university research workers.

The design objectives of FELIB have been met by the provision of a fully documented, easy to understand, two-level library:

The library philosophy requires that the source text of all programs is readily available to users. It is envisaged that each user will select the example program that solves the problem 'most like' their own and use this as the foundation of their program. They will then tailor the selected program to meet their own particular needs. In many cases, the only changes required could be adjustments to array sizes, or changing element types. Because of the library philosophy, each application program has very few lines of active FORTRAN compared with most applications packages. Thus understanding and modifying programs is quite easy.

The current library contains examples of both steady state and time-dependent problems. All the software is written in ANSI(66) FORTRAN and conforms to the PFORT subset. Thus the library software and any programs written using the library are very portable. This makes the use of the library very attractive in many forms of co-operative research.

Although the Library is firstly a research tool it is nevertheless very useful in the teaching of the finite element method. Its basic modular nature ensures that all the steps in a finite element analysis are transparent.
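The steps that a modular finite element program makes visible can be illustrated with a minimal sketch. It is written in Python rather than FORTRAN and does not use any FELIB routine; all function names are hypothetical. It solves -u'' = f on (0, 1) with u(0) = u(1) = 0 using linear two-node elements on a uniform mesh, keeping element matrix formation, assembly, boundary conditions and solution as separate steps.

```python
def element_stiffness(h):
    """2x2 stiffness matrix of a linear two-node element of length h."""
    return [[1.0 / h, -1.0 / h], [-1.0 / h, 1.0 / h]]


def element_load(h, f_mid):
    """Element load vector using one-point (midpoint) quadrature."""
    return [0.5 * h * f_mid, 0.5 * h * f_mid]


def assemble(n_elements, f):
    """Assemble the global stiffness matrix and load vector on a uniform mesh."""
    n_nodes = n_elements + 1
    h = 1.0 / n_elements
    K = [[0.0] * n_nodes for _ in range(n_nodes)]
    F = [0.0] * n_nodes
    for e in range(n_elements):
        nodes = (e, e + 1)  # global node numbers of element e
        ke = element_stiffness(h)
        fe = element_load(h, f((e + 0.5) * h))
        for a in range(2):
            F[nodes[a]] += fe[a]
            for b in range(2):
                K[nodes[a]][nodes[b]] += ke[a][b]
    return K, F


def apply_dirichlet(K, F, node, value):
    """Impose u[node] = value, keeping the system symmetric."""
    for i in range(len(F)):
        F[i] -= K[i][node] * value
        K[i][node] = 0.0
        K[node][i] = 0.0
    K[node][node] = 1.0
    F[node] = value


def solve_dense(K, F):
    """Gaussian elimination with partial pivoting (adequate for small systems)."""
    n = len(F)
    A = [row[:] + [rhs] for row, rhs in zip(K, F)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(col + 1, n):
            factor = A[r][col] / A[col][col]
            for c in range(col, n + 1):
                A[r][c] -= factor * A[col][c]
    u = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = A[i][n] - sum(A[i][j] * u[j] for j in range(i + 1, n))
        u[i] = s / A[i][i]
    return u


def solve_poisson_1d(n_elements, f):
    """Run the full sequence: assemble, apply boundary conditions, solve."""
    K, F = assemble(n_elements, f)
    apply_dirichlet(K, F, 0, 0.0)
    apply_dirichlet(K, F, n_elements, 0.0)
    return solve_dense(K, F)
```

Changing the element type in such a program would mean replacing only `element_stiffness` and `element_load`, which mirrors the kind of tailoring described above.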

The Level 1 library contains thirteen programs grouped into five main areas:

Below these programs in the Level 0 Library are 100 routines directly accessible by the user. They may be conveniently grouped under the ten headings:

Both the Level 1 and Level 0 software are supported by their own separate volume of documentation; these together make up the FELIB Manual.

The Level 1 documentation consists of a four chapter introduction before the program documentation. The introduction describes the terminology, methods and mathematical basis of finite element calculations; each program has its own document offering an extensive description of the program under the headings: Problem Statement, Theory, Solution, Numerical Methods, Program Description, Listings.

The Level 0 documentation consists of a general introduction followed by an individual document for each subroutine in the Level 0 Library.

FELIB has been widely used within the UK research community. Some of the applications include:

FELIB is available under the Engineering Applications Support Environment (EASE) Programme.

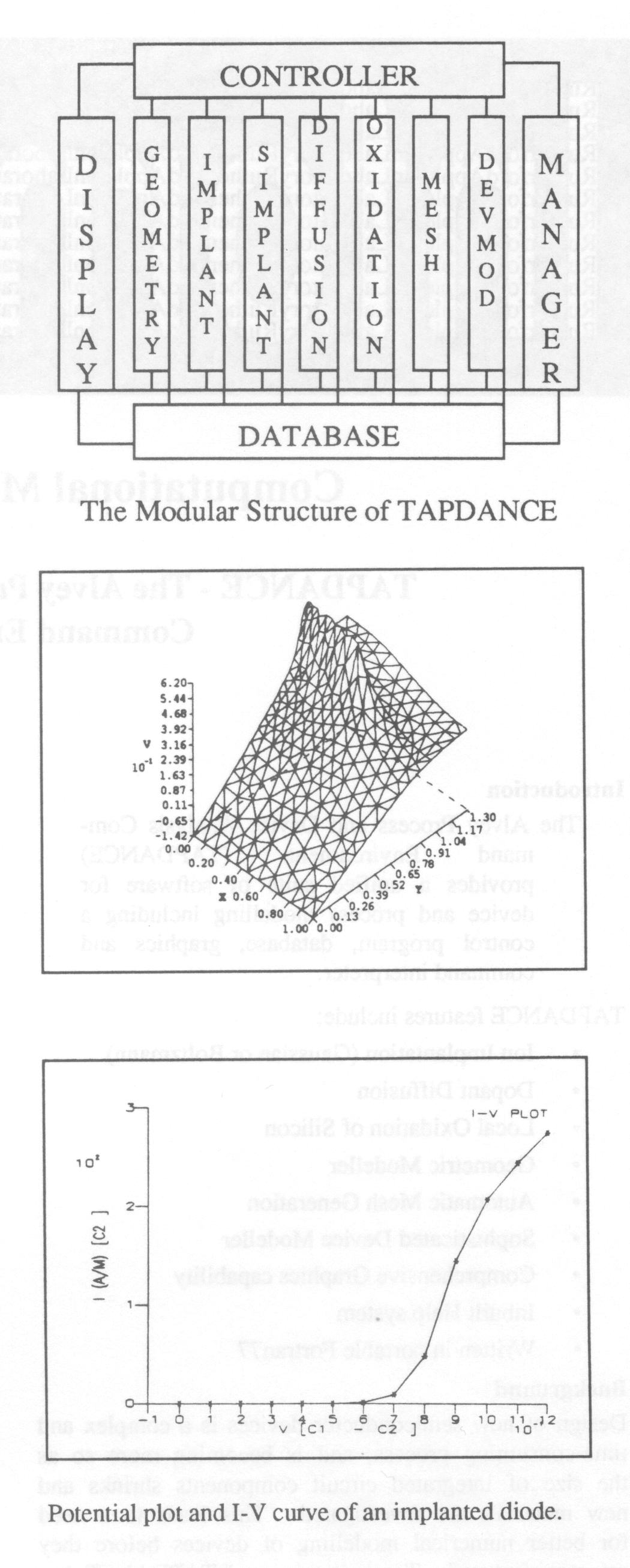

The Alvey Process and Device Analysis Command Environment (TAPDANCE) provides a unified suite of software for device and process modelling including a control program, database, graphics and command interpreter.

TAPDANCE features include:

Design of new semiconductor devices is a complex and time-consuming process, and is becoming more so as the size of integrated circuit components shrinks and new materials are investigated. This leads to a need for better numerical modelling of devices before they are manufactured. To meet this need TAPDANCE has been developed.

TAPDANCE provides an integrated environment for modelling the processing and operation of semiconductor devices. The three most important processes in device fabrication (implantation, diffusion and oxidation) are modelled using advanced numerical techniques. Other processes can be modelled simply using the geometric modeller. Mesh generation for both diffusion and device modelling is automatic, though with the possibility of user control. DEVMOD, TAPDANCE's device modeller, uses advanced numerical techniques and a comprehensive set of physical models to provide a flexible, general purpose device simulator for most silicon technologies.

The multi-faceted nature of process and device modelling requires close coupling between the various elements of software. This is achieved in TAPDANCE by data passing through a purpose-built database which eliminates the need for special interface files.

All kernels share a common user interface with localised command sets and on-line help facility.

At all stages in a simulation, graphical display of the device is possible via the DISPLAY module. This features geometric display, mesh display and contouring, isometric plots and sections of nodal variables as well as graphs such as I-V characteristics.

A description of the Kernels is given below:

In addition to the on-line help system provided by TAPDANCE, full user documentation is available for all kernels. The user manuals give details of all the commands and their associated parameters, together with tutorial examples.

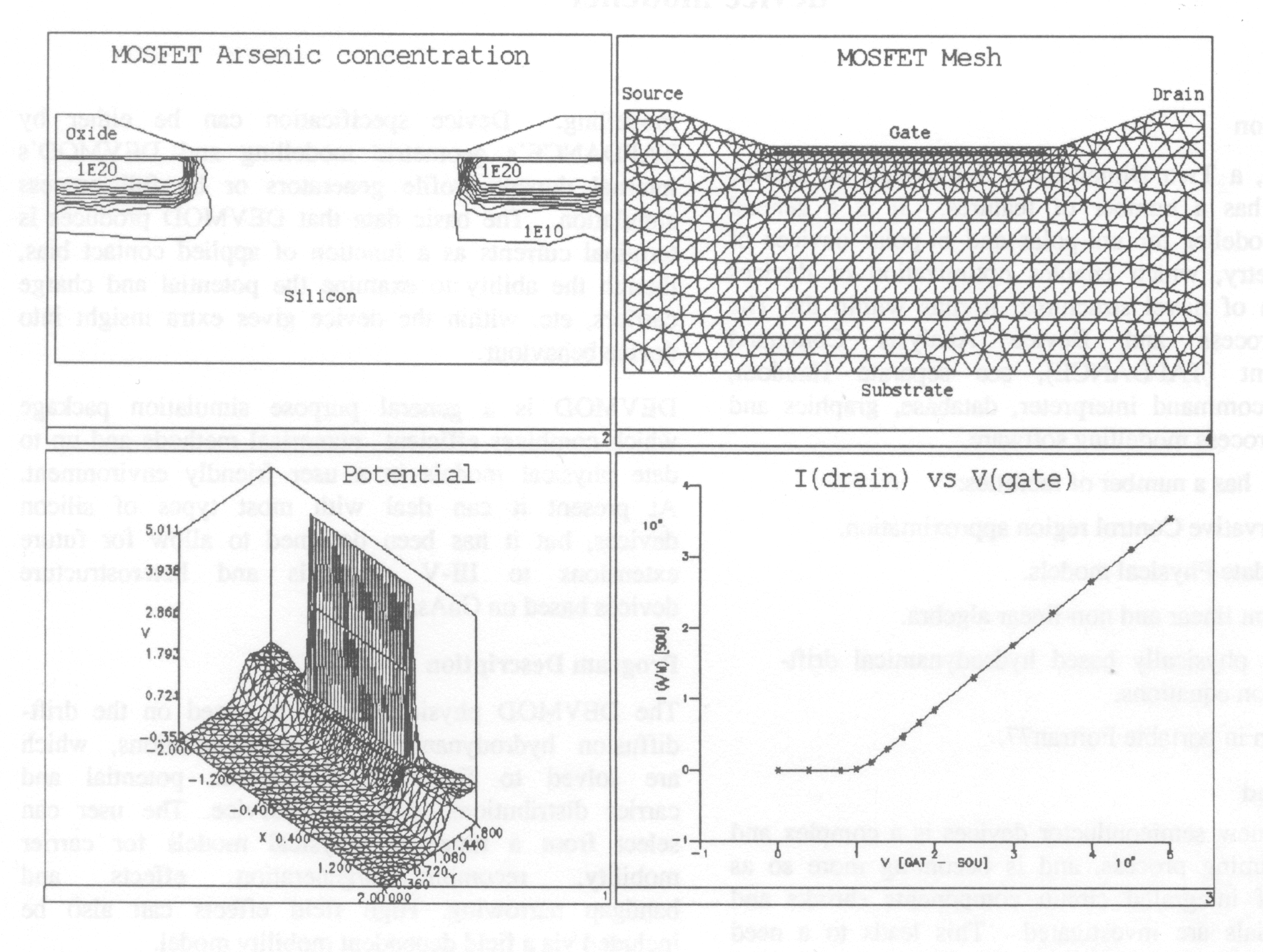

DEVMOD, a Two Dimensional Semiconductor Device Modeller, has a number of features. It is a general purpose modeller for uni-polar and bi-polar devices of any geometry, which enables optimization of devices and design of novel structures. It runs within the Alvey Process and Device Analysis Command Environment (TAPDANCE; see separate handout), which provides a command interpreter, database, graphics and access to process modelling software.

DEVMOD has a number of facilities:

Design of new semiconductor devices is a complex and time-consuming process, and is becoming more so as the size of integrated circuit components shrinks and new materials are investigated. This leads to a need for better numerical modelling of devices before they are manufactured. To meet this need TAPDANCE and kernels such as DEVMOD have been developed.

TAPDANCE provides an integrated environment for modelling the processing and operation of semiconductor devices. Within this system DEVMOD provides two dimensional device characteristic modelling. Device specification can be either by TAPDANCE's geometric modelling and DEVMOD's internal dopant profile generators or by full process simulation. The basic data that DEVMOD produces is terminal currents as a function of applied contact bias, though the ability to examine the potential and charge carriers, etc. within the device gives extra insight into device behaviour.

DEVMOD is a general purpose simulation package which combines efficient numerical methods and up to date physical models in a user friendly environment. At present it can deal with most types of silicon devices, but it has been designed to allow for future extensions to III-V materials and heterostructure devices based on GaAs/GaAlAs.

The DEVMOD physical model is based on the drift diffusion hydrodynamical transport equations, which are solved to find the electrostatic potential and carrier distributions within the device. The user can select from a range of physical models for carrier mobility, recombination/generation effects and bandgap narrowing. High field effects can also be included via a field dependent mobility model.

The solution process is based on a Newton type iteration scheme, and iterative methods are used for the linear algebra to give efficiency. The particular solvers used are ICCG (Incomplete Cholesky Conjugate Gradient) and CGS (Conjugate Gradient Squared) for symmetric and non-symmetric cases respectively.
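The idea behind ICCG for the symmetric case can be sketched as follows. This is an illustrative Python sketch, not DEVMOD's implementation: it assumes a zero fill-in incomplete Cholesky factorisation, IC(0), uses dense storage for brevity, and takes a small two dimensional Laplacian as a stand-in test matrix. All function names are hypothetical.

```python
import math


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def matvec(A, x):
    return [dot(row, x) for row in A]


def incomplete_cholesky(A):
    """IC(0): Cholesky factor restricted to the nonzero pattern of A."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            if A[i][j] == 0.0:
                continue  # discard fill-in outside the original pattern
            s = A[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(s) if i == j else s / L[j][j]
    return L


def apply_preconditioner(L, r):
    """Solve (L L^T) z = r by forward then backward substitution."""
    n = len(r)
    y = [0.0] * n
    for i in range(n):
        y[i] = (r[i] - sum(L[i][k] * y[k] for k in range(i))) / L[i][i]
    z = [0.0] * n
    for i in range(n - 1, -1, -1):
        z[i] = (y[i] - sum(L[k][i] * z[k] for k in range(i + 1, n))) / L[i][i]
    return z


def iccg(A, b, tol=1e-10, max_iter=200):
    """Preconditioned conjugate gradients for a symmetric positive definite A."""
    L = incomplete_cholesky(A)
    x = [0.0] * len(b)
    r = b[:]
    z = apply_preconditioner(L, r)
    p = z[:]
    rz = dot(r, z)
    if rz == 0.0:
        return x, 0  # zero right-hand side: x = 0 is already the solution
    b_norm = math.sqrt(dot(b, b))
    for iteration in range(1, max_iter + 1):
        Ap = matvec(A, p)
        alpha = rz / dot(p, Ap)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        if math.sqrt(dot(r, r)) <= tol * b_norm:
            return x, iteration
        z = apply_preconditioner(L, r)
        rz_new = dot(r, z)
        p = [zi + (rz_new / rz) * pi for zi, pi in zip(z, p)]
        rz = rz_new
    return x, max_iter


def laplacian_2d(m):
    """Five-point Laplacian on an m x m grid, used here only as a test matrix."""
    n = m * m
    A = [[0.0] * n for _ in range(n)]
    for row in range(m):
        for col in range(m):
            i = row * m + col
            A[i][i] = 4.0
            if col > 0:
                A[i][i - 1] = -1.0
            if col < m - 1:
                A[i][i + 1] = -1.0
            if row > 0:
                A[i][i - m] = -1.0
            if row < m - 1:
                A[i][i + m] = -1.0
    return A
```

A production solver would use sparse storage; the dense lists here only keep the sketch short.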

In addition to the on-line help system provided by TAPDANCE, full user documentation is available for DEVMOD. The user manual gives details of all the commands and their associated parameters, together with worked examples.

A series of benchmarks has also been compiled which illustrates the performance of DEVMOD and shows the range of devices which can be modelled.

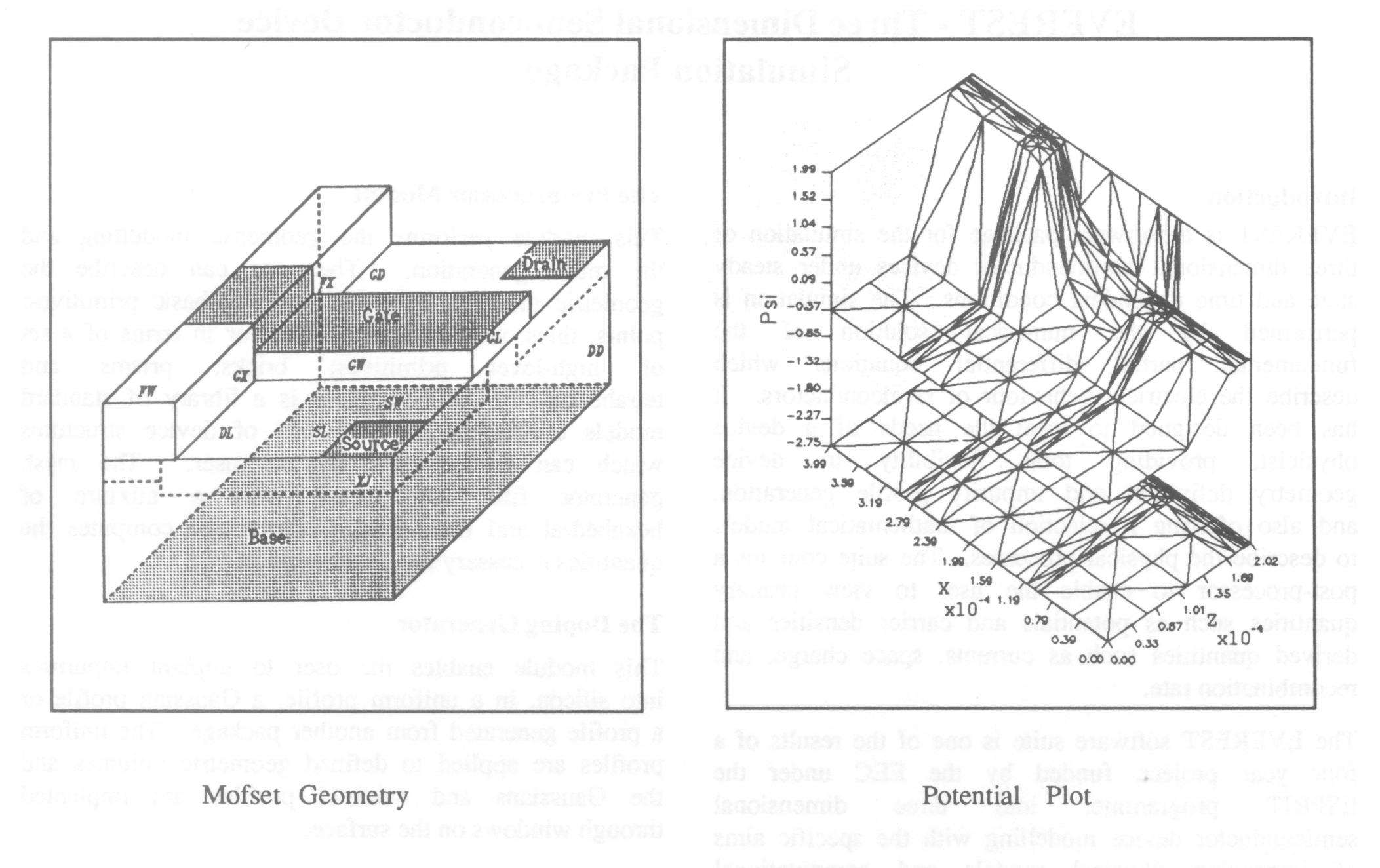

The figure below illustrates the output that is produced by DEVMOD in the modelling of a MOSFET. The concentration of arsenic in the source and drain implants is shown along with the mesh used for the solution. The calculated potential distribution at one bias point is illustrated by an isometric plot and a typical I-V curve is shown.

EVEREST is a software package for the simulation of three dimensional semiconductor devices under steady state and time dependent conditions. The simulation is performed by the numerical solution of the fundamental partial differential equations which describe the electrical behaviour of semiconductors. It has been designed to meet the needs of a device physicist, providing total flexibility in device geometry definition and impurity profile generation, and also offering a selection of mathematical models to describe the physical processes. The suite contains a post-processor to enable the user to view primary quantities such as potentials and carrier densities and derived quantities such as currents, space charge, and recombination rate.

The EVEREST software suite is one of the results of a four year project, funded by the EEC under the ESPRIT programme, into three dimensional semiconductor device modelling with the specific aims of improving physical models and computational techniques used in semiconductor device simulation.

The complete software package consists of four standalone modules: the pre-processor, the doping generator, the solver module and the post-processor, each with its own command parsing environment and on-line help system. The modules communicate via a set of neutral files and are used interactively or in background mode.

The pre-processor performs the geometric modelling and the mesh generation. The user can describe the geometric model in terms of a set of basic primitives (points, lines, surfaces and volumes) or in terms of a set of high-level primitives (bricks, prisms and tetrahedra). In addition, there is a library of standard models containing the skeletons of device structures which can be extended by the user. The mesh generator fills the model with a mixture of hexahedral and tetrahedral elements and computes the quantities necessary for the simulation.

The doping generator enables the user to implant impurities into silicon, in a uniform profile, a Gaussian profile or a profile generated from another package. The uniform profiles are applied to defined geometric volumes, and the Gaussian and external profiles are implanted through windows on the surface.
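The two analytic profile types can be illustrated with a short Python sketch. It is not taken from EVEREST: the function names, the parameter names and the units suggested in the comments are assumptions, and lateral spreading beneath the window edges is ignored for simplicity.

```python
import math


def gaussian_profile(depth, peak, projected_range, straggle):
    """Gaussian concentration at a given depth below the surface.

    peak is the maximum concentration (e.g. per cubic centimetre), reached at
    depth == projected_range; straggle is the standard deviation of the profile.
    """
    exponent = -((depth - projected_range) ** 2) / (2.0 * straggle ** 2)
    return peak * math.exp(exponent)


def doping_at(x, depth, background, window, peak, projected_range, straggle):
    """Uniform background plus a Gaussian implant beneath a surface window.

    window is a (x_min, x_max) pair of lateral positions on the surface.
    Outside the window only the uniform background is returned (assumption:
    no lateral spread of the implant).
    """
    concentration = background
    x_min, x_max = window
    if x_min <= x <= x_max:
        concentration += gaussian_profile(depth, peak, projected_range, straggle)
    return concentration
```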

The solver module solves the coupled time dependent drift-diffusion semiconductor equations, using the control region method in space and the Gear method in time. The nonlinear systems of equations are solved by a modified damped Newton method, and the linear systems are solved by the incomplete Cholesky conjugate gradient method and the preconditioned conjugate gradient squared method.
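The role of damping in a Newton iteration can be shown with a scalar sketch in Python. This is not the modified scheme used in EVEREST, whose details are not given here: it assumes the simplest damping rule, halving the step until the residual decreases, and the function name is hypothetical. For f(x) = arctan(x), an undamped Newton iteration started at x = 3 diverges, whereas the damped iteration converges to the root at zero.

```python
import math


def damped_newton(f, dfdx, x0, tol=1e-12, max_iter=50):
    """Newton iteration with step halving; returns (root, iterations used)."""
    x = x0
    for iteration in range(max_iter):
        fx = f(x)
        if abs(fx) <= tol:
            return x, iteration
        step = -fx / dfdx(x)  # full Newton step
        damping = 1.0
        # Halve the step until the residual is reduced (or damping is tiny).
        while abs(f(x + damping * step)) >= abs(fx) and damping > 1e-8:
            damping *= 0.5
        x += damping * step
    return x, max_iter


# Example: the full Newton step from x = 3 overshoots, so damping is required.
root, iterations = damped_newton(math.atan, lambda x: 1.0 / (1.0 + x * x), 3.0)
```

For a system of equations the division by the derivative becomes a linear solve with the Jacobian matrix, which is where iterative solvers such as those named above come in.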

The user is given a choice of models to describe the bandgap narrowing, the recombination rate and the carrier mobility, and control over some parameters in the numerical techniques to improve computational speed.

This final module, the post-processor, provides a facility to view the output from all the other modules. It will display the geometry, the mesh, the impurity distribution and various aspects of the solution in a variety of ways: one dimensional graphs, contour plots, isometric projections, vector plots and I-V graphs.

The EVEREST suite has now been used to simulate a large number of device structures, from simple one-dimensional p-n junctions to more complex and realistic transistors. A series of benchmarks has been simulated using EVEREST to illustrate the performance of the code. The results of these are available on request.

Below is shown the geometry and potential of a simple MOSFET with a 2-micron channel length. The device has 2 volts applied to the gate and a source-drain bias of 1 volt.

In addition to the on-line help system provided by EVEREST, full user documentation is available for all the modules, giving details of all the commands and their associated parameters together with worked examples.