At the beginning of the year the operating schedule of the Central Computer was extended to a full seven days (168 hours per week). The only new equipment added was a second set of IBM 3330 disks (eight drives), and no major changes were made to the operating system. In this stable atmosphere the performance and reliability of the machine have proved quite outstanding. Maintenance has been reduced to four hours every four weeks and system development to less than three hours per week, thus invariably leaving more than 160 hours available to Users in each normal working week.

The pressure of work has increased, so that for the greater part of the year all available CPU time has been used. With a full work load, CPU utilisation varies between 70% and 80%. This provides about 100 CPU hours per week to Users, and the total of jobs now exceeds 10,000 per week. The most notable growth during the year has come from Users sponsored by the Atlas Laboratory, who accounted for over 20% of the total work load by the end of the year.

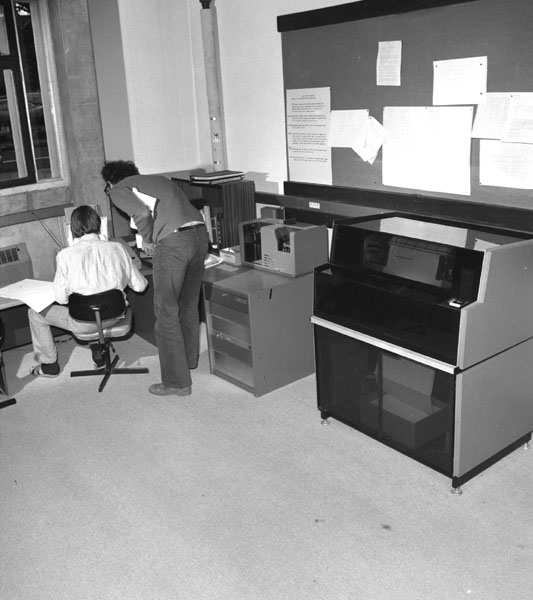

Remote computing has undergone a quiet revolution during the year. This has arisen partly from the increased popularity of ELECTRIC: over 50% of jobs are now submitted from local or remote ELECTRIC terminals. The number of ELECTRIC users rose from 100 to 200 in the year. Fifteen remote work stations, based mainly on GEC 2050 computers supplied by the Laboratory, are now in service; in an average week about 4500 jobs are loaded on the central computer via work stations.

Early in December, after measuring 1.25 million bubble chamber events, HPD1 was taken out of service for rebuilding to HPD2 specification. HPD2 met all 70mm spark chamber film measuring requirements during the year, and had just measured a large sample of bubble chamber film satisfactorily.

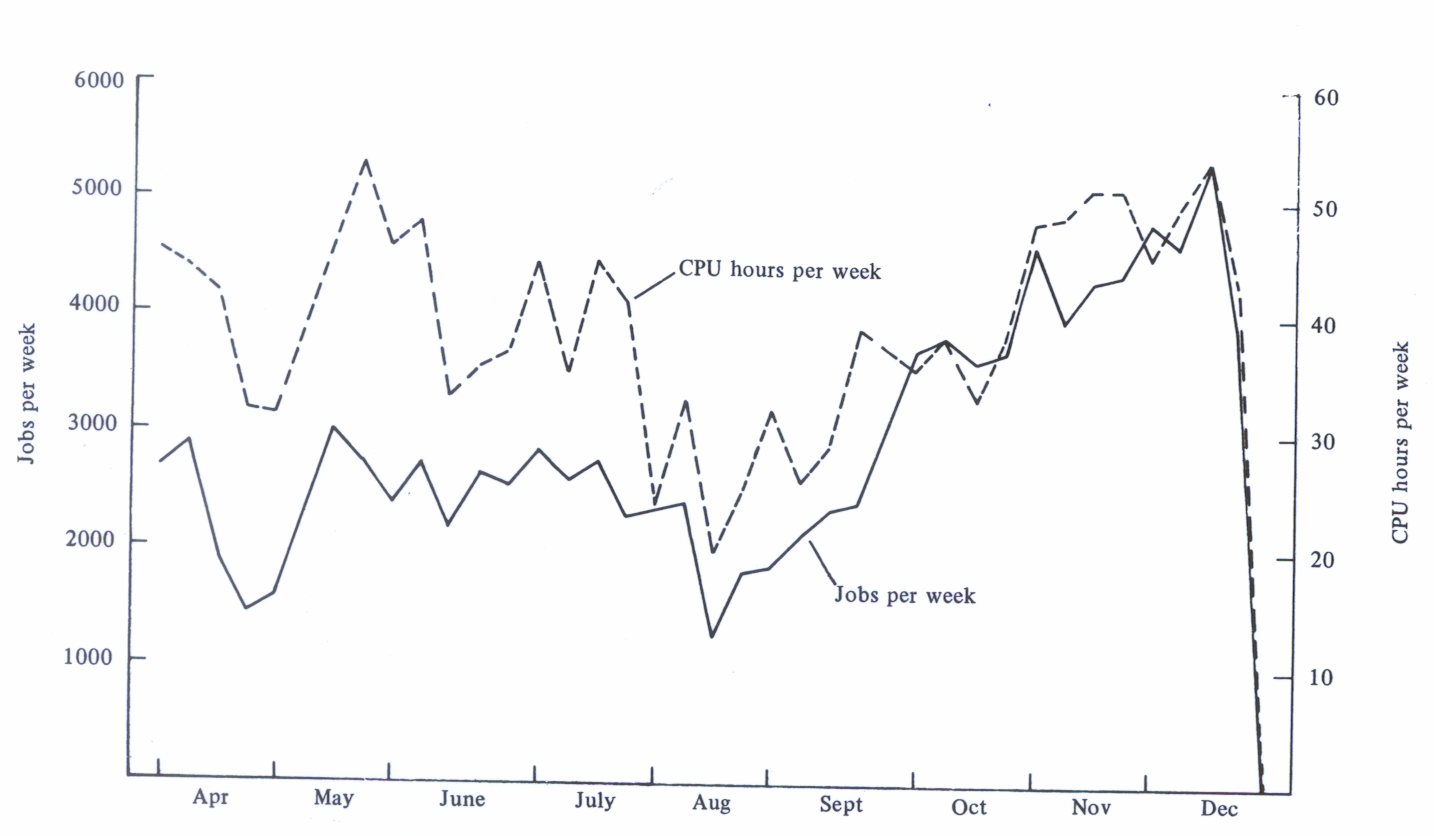

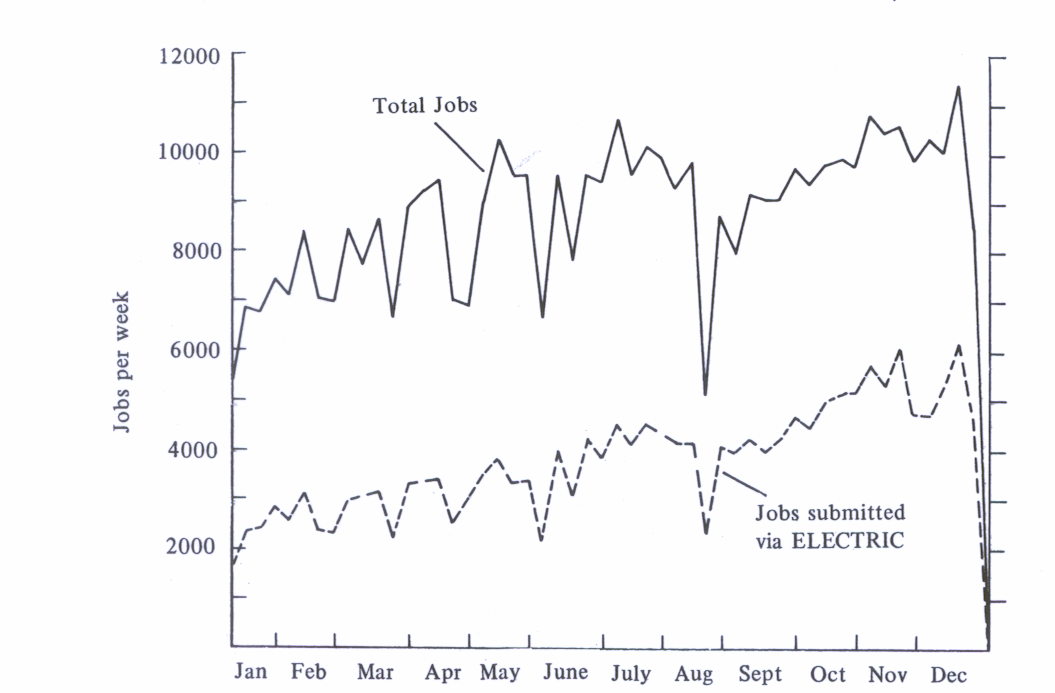

This is the first year of full round-the-clock operation of the IBM 370/195, and the total of 450,000 jobs processed shows a rise of 70% on last year (which had itself shown a rise of 60% on 1971). A statistical summary of operations is given below and in Figures 96, 97, 98 and 99, which show the numbers of jobs submitted from remote work stations, via ELECTRIC, and in total, and the CPU time in each case; the weekly averages of machine efficiency ((scheduled time - downtime) / scheduled time) and CPU utilisation (CPU time used / (scheduled time - downtime)); and other details.

| Category | Thousands of Jobs | Average CPU Time Used |
|---|---|---|
| Short (<90 seconds CPU) | 422 | 12 seconds |
| Medium (<5 minutes CPU) | 14 | 3 minutes |
| Long (>5 minutes CPU) | 13 | 14 minutes |
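As a rough cross-check, the table above can be combined into yearly totals. The sketch below is illustrative only: the averages in the table are rounded, and the even spread over 52 weeks is an assumption, not a figure from the report.

```python
# Job categories from the table above:
# (thousands of jobs, average CPU seconds per job).
CATEGORIES = {
    "short": (422, 12),
    "medium": (14, 3 * 60),
    "long": (13, 14 * 60),
}

WEEKS_PER_YEAR = 52  # assumption: work spread evenly over the year


def total_jobs(categories):
    """Total number of jobs across all categories."""
    return sum(thousands * 1000 for thousands, _ in categories.values())


def total_cpu_hours(categories):
    """Total CPU hours implied by the job counts and average CPU times."""
    seconds = sum(thousands * 1000 * avg for thousands, avg in categories.values())
    return seconds / 3600


if __name__ == "__main__":
    jobs = total_jobs(CATEGORIES)
    hours = total_cpu_hours(CATEGORIES)
    print(f"jobs: {jobs}")
    print(f"CPU hours: {hours:.0f}")
    print(f"CPU hours per week: {hours / WEEKS_PER_YEAR:.1f}")
```

On these figures the three categories sum to 449,000 jobs and about 5140 CPU hours, or a little under 99 CPU hours per week, which agrees with the quoted totals of 450,000 jobs and about 100 CPU hours per week.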

The status of work stations attached to the central computer at the end of 1973 is summarised below:

| Location | Type | Acceptance date | No. of jobs in normal week (average for Nov 1973) | Comments |
|---|---|---|---|---|
| Inst. Comp Science | PDP 9 | 1968 | 321 | Development Project |
| Birmingham University | IBM 370/145 | 1972 | 684 | (Originally IBM 360/44) |
| Glasgow University | IBM 370/145 | 1972 | 137 | (Originally IBM 360/44) |
| Bristol University | GEC 2050 | 10 April 1973 | 248 | Purchased by RL |
| CERN | GEC 2050 | 5 June 1973 | 267 | Purchased by RL |
| Durham University | GEC 2050 | 3 April 1973 | 197 | Purchased by RL |
| Imperial College | GEC 2050 | 27 March 1973 | 324 | Purchased by RL |
| Oxford University | GEC 2050 | 25 May 1973 | 407 | Purchased by RL |
| Queen Mary College | GEC 2050 | 17 April 1973 | 58 | Purchased by RL |
| University College | GEC 2050 | 29 March 1973 | 65 | Purchased by RL |
| Westfield College | GEC 2050 | 7 June 1973 | 131 | Purchased by RL |
| Rutherford Laboratory | GEC 2050 | 22 February 1973 | - | Used for software development |
| NBRU (RL) | PDP8 | 1972 | - | Used for data input |
| Atlas Laboratory | IBM 1130 | 1972 | 593 | Purchased by ACL |
| Mullard Space Lab | GEC 2050 | - | - | Purchased by ACL (PO Link due Jan 1974) |
| Reading University | GEC 2050 | - | - | Purchased by ACL (PO Link due Jan 1974) |
| Appleton Laboratory | GEC 2050 | 29 June 1973 | 201 | Purchased by ACL |
| Inst of Geological Sciences, London | PDP11 | - | - | - |

The Nuclear Physics workstation installations are complete except for attaching additional ELECTRIC terminals.

The software initially provided with the GEC 2050 computers was designed to emulate an IBM 2780, which can be handled by the work station support program HASP-RJE resident in the central computer. The IBM 2780 is a device which cannot be programmed, and this software package allowed only card reading or line printing. It was used successfully on all stations for the first half of the year. The package was subsequently replaced by multi-leaved software which makes full use of the programmable facilities of the small computer, enabling ELECTRIC terminals to be connected and permitting simultaneous (and faster) card reading and line printing. This multi-leaved program package (which simulates an IBM 1130) was written at the Rutherford Laboratory, using a GEC 2050 simulation system (provided by GEC) for the IBM 370/195 to facilitate software development on the central computer. Responsibility for maintaining it has been accepted by GEC.

The normal HASP-RJE software allows one typewriter console plus a number of card-readers and line-printers to be attached to an IBM 1130 work station. Modifications made to HASP and the MAST message-switching program allow auxiliary terminals (typewriters, VDU's and storage tubes) to be attached.

The weekly number of jobs submitted from remote work stations and CPU time used are shown in Figure 97.

The Laboratory terminal facilities have hardly changed during the year, but several big users have acquired their own terminals. There is a Computek and a Tektronix T4010 graphics terminal dedicated to different Counter groups, and Applied Physics Division has a Computek and a thermal printer. The T4002 display installed for the Bubble Chamber Research Group was already in use last year and a VDU has been added this year. There are new VDU's in the Program Advisory Office and on the local GEC 2050 work station. Finally, there are four public terminals situated at the Atlas Laboratory (one teletype, one IBM 2741 typewriter and two T4010 graphics displays).

For external users in institutions without their own work stations there remain six lines on the public telephone network, to which teletypes or graphics terminals can be connected for low-speed (10 characters/second) transmission. Extensive use has been made of these facilities by more than a dozen user groups.

Tests have been made to enable users with terminals, who now dial directly to the central computer via the public network, to communicate instead by the public network to a nearby work station, at some saving in cost. Tests were also made on a fast (2400 bits/second) telephone link which, if successful, would be a cheaper alternative to a leased private line for those work stations in contact with the central computer for only two or three hours per week.

The basic operating system software for the IBM 370/195 central computer remains OS/MVT/HASP as supplied by IBM but with some local additions to meet specific needs. The numerous on-line activities are supported by MAST/DAEDALUS/ELECTRIC, written locally. Late in the year IBM provided version 21.7 of OS/360. Version 3.1 of HASP 2 continues in use, but some further modifications to MVT have been made locally, as described below.

Local developments during the year have been primarily in the three areas of automating various operational tasks, introducing facilities specific to large multiprogramming machines, and monitoring system behaviour. Many of the developments are ultimately directed to maximum utilisation of main memory, which at two megabytes will eventually limit the growing use of the machine.

IBM software intended to conserve memory in management of the system queue (Dynamic System Queue Area) was introduced and routine modifications to accommodate the second bank of 8 type 3330 disk drives were made; the opportunity was taken to re-distribute the system data-sets.

There are several long-lived programs (MAST, ELECTRIC, etc) for which the MVT system was modified to allocate reserved high-address partitions in main memory, leaving the remaining memory available to MVT in the standard way. During the year this principle has been extended to accommodate any one long-lasting application program in a core position where it will not cause prolonged fragmentation.

An autocleanup program, which detects when a program library is nearly full, has been introduced to remove program modules which have been superseded or are no longer in use. This prevents jobs failing through lack of library space.

A program library will generally contain some defunct members, some which rarely change (because they are well developed and in production use), and some being developed which change frequently. By recording for each library member the date of creation or latest re-creation, the date of most recent use, and a use-count, new library management software ('automigration') now decides when to move unchanging members which are still in use to an associated library.

Another new library management facility, called autoarchiving, is just being put into effect. It will copy to magnetic tape those library members which have not been used at all for some pre-determined period, such as three months. Once on tape, the programs will not encumber disk management at all, but can be recalled at some future date if they are needed.
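The decisions made by automigration and autoarchiving can be pictured with the following minimal sketch. It uses only the three quantities recorded for each member (date of creation or re-creation, date of most recent use, and use-count). The names `Member` and `classify` and the thresholds `STABLE_AFTER` and `MIN_USES` are hypothetical, chosen for illustration; only the three-month archiving period is taken from the text, and it is not suggested that the Laboratory's software was organised this way.

```python
from dataclasses import dataclass
from datetime import date, timedelta

ARCHIVE_AFTER = timedelta(days=90)  # "three months" in the text, taken as 90 days
STABLE_AFTER = timedelta(days=30)   # hypothetical: unchanged this long counts as stable
MIN_USES = 5                        # hypothetical: minimum use-count to count as in use


@dataclass
class Member:
    name: str
    created: date    # date of creation or latest re-creation
    last_used: date  # date of most recent use
    use_count: int


def classify(member: Member, today: date) -> str:
    """Return 'archive', 'migrate' or 'keep' for one library member."""
    if today - member.last_used >= ARCHIVE_AFTER:
        return "archive"  # unused for the whole period: copy to tape
    if today - member.created >= STABLE_AFTER and member.use_count >= MIN_USES:
        return "migrate"  # unchanging but still used: move to the associated library
    return "keep"         # recently changed or little used: leave in place
```

For example, with `today = date(1973, 12, 1)`, a member last used on 1 June 1973 would be classified for archiving, while a production routine created in March and used the previous week would be classified for migration.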

Practically all Fortran programs need standard Fortran service routines (collectively known as Fortlib) when executing. This has hitherto been done by incorporating copies of these routines in each program, which meant there could easily be a dozen copies of some routines simultaneously taking space in main memory, and several hundred included in the user program libraries on disk. The situation is accentuated by the heavy dependence on Fortran for scientific programs at the Laboratory and heavy multiprogramming, and has led to pioneering arrangements to make Fortran 're-enterable' for the IBM 360/370 series.

In this re-enterable system a single copy of the most frequently used Fortlib members is held permanently in main memory, so that all concurrent jobs can use it without interference. Preparation and testing for this reform took most of one man-year, but it was introduced smoothly. The Fortlib version chosen was that accompanying the new H Extended Plus compiler (see below), but it is compatible with programs compiled by the older G and H compilers.

IBM have provided a new Fortran compiler (Fortran H Extended Plus) specially designed for the model 195. The new compiler generates machine instructions in an order not usually achieved by the older compilers but which is particularly suited to the high degree of concurrency possible in the 195 central processor. This compiler, intended to be the standard for production jobs at the Laboratory, was introduced late in the year. It has already shown that 20-30% of CPU time can be saved for many programs, and bigger savings are likely for programs heavily dependent on the standard mathematical functions.

In addition, a collection of matrix algebra routines has been started, specially written for the model 195 and faster than any Fortran compilation. For example, the scalar product of two vectors of order N can be formed in 0.3N microseconds.
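To give a feel for the quoted figure, the sketch below defines the scalar product in plain Python and converts the 0.3N microseconds estimate into a time for a given vector length. The function names are hypothetical, and the Python code says nothing about how the Laboratory's routines were written; it only restates the arithmetic.

```python
MICROSECONDS_PER_ELEMENT = 0.3  # figure quoted in the text for the model 195 routine


def scalar_product(x, y):
    """Scalar (dot) product of two vectors of equal order."""
    if len(x) != len(y):
        raise ValueError("vectors must have the same order")
    return sum(a * b for a, b in zip(x, y))


def estimated_time_us(n):
    """Estimated time in microseconds for vectors of order n, at 0.3 us per element."""
    return MICROSECONDS_PER_ELEMENT * n
```

On this estimate two vectors of order 1000 take about 300 microseconds. Counting one multiplication and one addition per element (an interpretation, not a statement in the report), that corresponds to roughly 6.7 million floating-point operations per second.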

This software is now reliable and robust. DAEDALUS-224 is not expected to need any further changes, though minor modifications were made to DAEDALUS-516 during the year as operational experience with HPD2 was gained.

Use of the ELECTRIC job entry/retrieval and file handling system continued to grow during the year. By the year end there were over 70 terminals, the number of active users had approximately doubled (100 to 200) and the number of jobs submitted weekly via ELECTRIC had risen similarly (2500 to 5000). Figures 98 and 99 show this growth. Provision was made for up to 30 simultaneous users (instead of 20), and the total space within ELECTRIC (currently a complete type 3330 disk) is now fully allocated. The graphics space is 70% occupied, and will shortly be doubled to accommodate future growth.

Developments of MAST continue as new on-line hardware devices, which it must control, are introduced, but the basic framework of 1966 remains.

COPPER checks that the user identifier and account number are a valid combination, and downgrades the requested priority if the user's allocation is exhausted, while SETUP tells the computer operators the tapes and disks required, and allocates them to drives. Both are sub-tasks of HASP which are on-line to MAST, and can thus participate in job control and employ standard methods of communication.

During the year, realistic values were inserted in the COPPER control tables. Most users require rapid turnround for short development jobs but can wait a while for long production jobs. Accordingly, most user groups have been allocated some time at high priority and much more at low priority. Development runs at high priority can be completed during the day in normal circumstances, while long production jobs at low priority are usually completed overnight or at weekends. Users can enquire from any terminal how much of their weekly time allocation remains.

The COPPER tables have been extended to handle more levels of priority, if this should prove necessary. It was also found convenient to use COPPER as a vehicle for messages to appear in a job's print-out heading, either from the computer operators or drawing the user's attention to any excessive (and thus wasteful) request for main memory.
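The COPPER check described above can be sketched as follows. This is an illustration under stated assumptions, not the COPPER implementation: the table layout, the names `ALLOCATIONS` and `check_job`, the sample user and account, and the rule that a high-priority job is downgraded when its requested CPU time exceeds the remaining high-priority allocation are all hypothetical.

```python
HIGH, LOW = "high", "low"

# Hypothetical control table:
# (user identifier, account number) -> remaining weekly CPU seconds at each priority.
ALLOCATIONS = {
    ("USR1", "ACC1"): {HIGH: 600, LOW: 7200},
}


def check_job(user, account, requested_priority, cpu_seconds, table=ALLOCATIONS):
    """Validate a job and return the priority at which it will actually run."""
    remaining = table.get((user, account))
    if remaining is None:
        raise ValueError("invalid user identifier / account number combination")
    if requested_priority == HIGH and remaining[HIGH] < cpu_seconds:
        return LOW  # allocation exhausted: downgrade rather than reject the job
    return requested_priority
```

With the sample table, a 300-second job requested at high priority keeps its priority, whereas a 900-second job is downgraded to low priority. The same table could answer a terminal enquiry about how much of the weekly allocation remains.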

Phase 2 of SETUP has been introduced. This not only displays messages for tapes and disks to be fetched in advance of need, but also takes over from OS the allocation of drives. At the end of a job it looks ahead to check if the same volume will soon be needed again. Overall, jobs now spend very little time waiting for appropriate mounting.
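The look-ahead at the end of a job might be pictured as below. The function name `keep_mounted`, the representation of the job queue as a list of sets of volume names, and the look-ahead depth of five jobs are assumptions made for illustration; the report does not say how far ahead SETUP looks.

```python
def keep_mounted(volume, queued_jobs, lookahead=5):
    """Return True if any of the next `lookahead` queued jobs needs `volume`.

    `queued_jobs` is an ordered list in which each entry is the set of tape
    or disk volume names required by one waiting job (hypothetical layout).
    """
    return any(volume in volumes for volumes in queued_jobs[:lookahead])
```

For instance, `keep_mounted("TAPE01", [{"DISK07"}, {"TAPE01", "DISK07"}])` is true, so the tape would be left on its drive rather than dismounted and fetched again.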

The job classes have been simplified by using SETUP, which can accommodate jobs with various peripheral requirements without separating them into different streams. It can also detect the memory and time demands for a job, so the only class a user need declare is X for express. All other classes are calculated internally.

The job status information given in response to a user's typed request has been improved, and will be extended to include reports on recent successfully finished jobs. Such refinements are increasingly desirable as more work is submitted from terminals.

The SMF (System Management Function) records, which are produced for every job for accounting purposes, have been extended to include the data sets, magnetic tapes and disks which a job uses, and the time it spends in a waiting state. The collection of weekly statistics on utilisation of input/output channels has started, both in total and split by groups and division. In addition to the above recording, which proceeds continuously while the machine is running, special short-term monitor jobs are sometimes run at busy times of day to record fine detail of machine behaviour.

The waiting state time is important, for if it uses tapes or disks a job's duration bears little relation to its CPU time, which is the control quantity in COPPER. If the wait time (which is now reported to the user with each job printout) greatly exceeds the CPU time, the job is running inefficiently from the point of view of main memory utilisation. It may need redesigned input/output methods, or possibly to take more memory (for input/output buffers) so that its wait-time decreases and it releases memory more quickly.
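The comparison of wait time with CPU time can be expressed as a simple ratio. In the sketch below the function names and the threshold `WAIT_RATIO_LIMIT` are hypothetical; the report says only that a wait time which "greatly exceeds" the CPU time indicates inefficient use of main memory, without giving a figure.

```python
WAIT_RATIO_LIMIT = 3.0  # hypothetical threshold for "greatly exceeds"


def wait_ratio(wait_seconds, cpu_seconds):
    """Ratio of time spent in the waiting state to CPU time used."""
    if cpu_seconds <= 0:
        raise ValueError("CPU time must be positive")
    return wait_seconds / cpu_seconds


def is_memory_inefficient(wait_seconds, cpu_seconds, limit=WAIT_RATIO_LIMIT):
    """True if the job holds memory mostly while waiting for input/output."""
    return wait_ratio(wait_seconds, cpu_seconds) > limit
```

A job with 600 seconds of wait time and 60 seconds of CPU time has a ratio of 10 and would be flagged, suggesting a look at its input/output methods or buffer sizes.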

It is of course important to foresee computing limitations before they become serious, and channel capacity could become a bottleneck as activities extend and diversify. There are now 16 (instead of 8) type 3330 disks on block multiplexor channel No 2, and while this allows a better spread of data sets and eases operations, with some disks mounted permanently and others free for anticipatory set-up, the load on the channel is considerably increased. ELECTRIC is one user of this channel, and it is important for a very wide spectrum of terminal users that overloading of the channel should not degrade the ELECTRIC response.

Bringing the new work stations into full operation was a major activity of User Support during the year. Visits to the sites were made to help users become familiar with the system and conventions, and to monitor performance of the stations. A Work Station Users Manual (RL041) was issued during the year, and weekly accounts are now distributed to Work Station Representatives.

There is growing awareness of the advantages of permanently mounted disk space, and an increasing demand for it. When the second set of eight type 3330 disk drives became available and activity was conveniently low (over Easter) the user library space was increased from 180 to 250 Mbytes and that for users' private data sets from 80 to 275 Mbytes.

During the year a disk (called FREEDISK) was introduced on which users' data sets could reside for a limited period (about a month) without registration. This facility has become very popular and may prove worth extending at the cost of other, registered, data set space.

A computer based registration system was introduced during the year. It enables better records to be maintained and facilitates better control of disk space.

The general accounting facilities have been improved. Daily, weekly, monthly and quarterly information can now be produced. Each User Group Representative receives monthly accounts of his group's usage and that of other groups in the same category.

The information has proved useful, for example in setting up data for COPPER (to speed jobs requiring quick turn-round) and in predicting possible new requirements, such as higher density tape drives or extra terminals.

Considerable progress has been made in tidying-up routines in the Program Library. Some 80 routines have been scrutinised so far, and a short descriptive guide produced for each. New versions of the AERE library and of the CERN program SUMX were implemented. An easier method of accessing members of the Computational Physics Communications (CPC) library was devised, and some commonly used mathematical functions from the CERN library were converted for 370/195 usage. New versions of FORTRAN documentation programs were made available.

The extending use of ELECTRIC and consequent pressures on space have led to some tidying-up, particularly of the JOBFILE. This is an area set aside for general purpose programs and utilities, which it is hoped to make more readily accessible.

The Program Advisory Office has continued to handle most day-to-day programming queries, which again totalled some 2500 in the year. Some special utility programs and procedures have arisen from queries, particularly in the field of tape and disk handling.

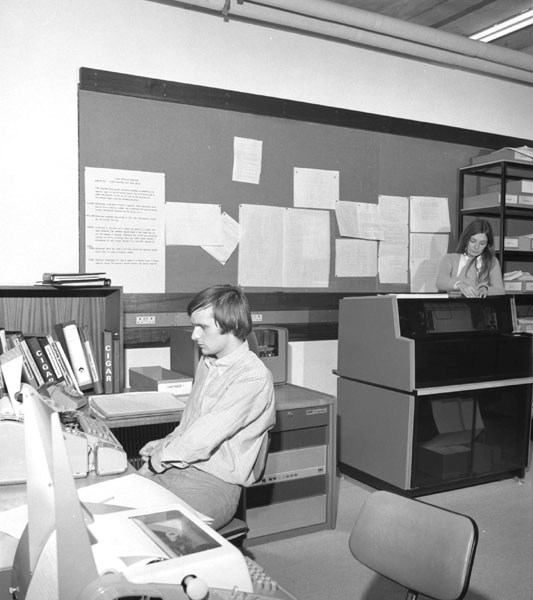

A new version of the main users' document CIGAR (Computer Introductory Guide and Reference) is being written and will be available in 1974. It will include description of routines in the Program Library.