Perhaps in five years' time the major SERC computing event of 1984 will be seen as the report of the Macfarlane Working Party, set up by Council to review its strategy for computing. Its major recommendations, though accepted, have not yet been implemented, the one exception being the appointment, in October, of a Director of Computing with responsibility for the RAL Central Mainframe Service and strategic planning for SERC computing. His appointment was preceded by major organisational changes in the RAL Computing Service.

With many new projects arriving at RAL in the computing area, the Computing Division had grown to over 200 people, and the decision was made to split the Division into two in July 1984. The Computer Review Working Party had recommended that control and funding of the Interactive Computing Facility and Single User System programmes be passed back to the Engineering Board. This meant that a Division of viable size could be formed whose interests lay in the information systems area in the widest sense and which was funded entirely by the Engineering Board. Bob Hopgood, the Head of the Computing Division, took over this new Division, called Informatics, in July 1984, with Cliff Pavelin taking responsibility for the new Central Computing Division.

Brian Davies of Daresbury Laboratory was appointed the SERC Director of Computing, and by the end of 1984 he had moved to RAL and taken over responsibility for the Central Computing Division, with Cliff Pavelin moving to the Informatics Division.

While these changes were taking place, RAL continued to provide a Central Computing Service for about 2,000 users whose support is derived from all four of the Council's Boards. Some idea of the scale of this usage is given in Table 5.1.

Table 5.1: Usage of the Central Computing Service by funding source

| Funding Source | Batch (Hours) | CMS (Account Units) |
|---|---|---|
| ASR | 301 | 1,535 |
| Engineering | 773 | 1,748 |
| Nuclear Physics | 8,542 | 7,539 |
| Science | 1,316 | 2,464 |
| External | 160 | 377 |

This report concentrates on major changes to the service and new projects undertaken during the year. Effort in both scientific and administrative computing has been directed at two growing needs: handling increasing amounts of data and providing improved terminal facilities. Users' requirements continue to evolve from a large batch processing environment to one in which they need to interact with large amounts of data from a terminal.

Major additions to the hardware of the Central Computing complex were necessary to keep pace with expanding data storage and terminal response requirements of an increasing user community. Both the IBM 3081 and the Atlas 10 were enhanced by the addition of eight megabytes of memory, making the IBM 3081 a 32 megabyte processor and the Atlas 10 a 24 megabyte processor.

The increasing number of interactive users placed heavy demands on paging space and the Memorex 3864 solid state paging device was increased by 24 megabytes to a total of 72 megabytes. The addition of another Channel Processor on the Atlas 10 provided improved access to the paging device and to online disk storage devices. Eight channels were added to the IBM 3081. Communication between systems running on the IBM 3081 and those on the Atlas 10 was improved by the installation of two ICL channel-to-channel adaptors.

The advent of the MVS system, PROFS (Office Automation System) and UTS (UNIX Terminal System), together with the increase in the number of CMS users from 1,300 to 1,600 over the year, put heavy demands on disk storage space, which was increased by 5 gigabytes to 32 gigabytes.

The Tape Library currently contains approximately 58,000 volumes, many of which have remained unused for many years. A major exercise was carried out in 1984 to identify unwanted tapes, and approximately 9,000 were identified for disposal. However, more than 5,000 new tapes were issued in 1984, and the maintenance and space required by such a library continue to be a problem (see Fig 5.1).

The aged IBM 1403 lineprinters were pensioned off this year, as was the FR80 microfilm recorder. These were replaced by an IBM 3203 lineprinter, a Xerox 8700 laser printer, an online NCR microfiche recorder and an IBM 4250 electro-erosion printer. The Xerox 8700 is a medium-speed laser printer producing mixed text and graphics output, double-sided, on cut A4 sheets at a rate of seventy pages per minute. The NCR 5330 high-speed text microfiche recorder has built-in chemical processing and cutting subsystems. It is an online device capable of producing a fully processed microfiche every 30 seconds. The IBM 4250 electro-erosion printer also produces mixed text and graphics output. It prints at very high resolution on special aluminium-coated paper and is intended for the production of master copies for subsequent reproduction.

Release 3 of the Conversational Monitor System (CMS) was installed in April. The major enhancement in this release is the introduction of a new and very powerful command file interpreter called the Restructured Extended Executor or REXX for short. Release 3 of CP, the Control Program part of the VM operating system, was installed in November. Apart from the desire to keep up to date with manufacturers' software, this release was needed to run Structured Query Language/Data System (SQL/DS), a new IBM database system which will interface to MVS to provide query facilities for users of the tape and disk management system.

Other changes include modifications to the CMS mail system to make it more user friendly, and production of a system called TESTSOFF to allow users to try out new products or major changes to existing software before they go into full production.

Considerable progress has been made towards the introduction of MVS, IBM's mainline batch operating system, on the RAL Central Mainframes. The main areas of effort have been the provision of a tape management system, a real-time accounting/resource management system, and interfacing to the M860 Mass Storage Device.

There has been a large increase in the use of office automation during the year. A service using IBM's PROFS system (PRofessional OFfice System) was introduced on a trial basis at the Laboratory in 1983 and has been developed significantly during this year. Use of PROFS has expanded from a pilot project to become an integral feature of the Laboratory's everyday life. The number of users started at 40 in mid-1983 and has now risen to almost 250, 7% of whom are at remote locations including Swindon, Edinburgh and CERN (Geneva). The system serves most of the senior managers and their secretaries, plus a representative cross-section of other administrative users drawn from Finance, Personnel and General Administration.

The RAL PROFS installation offers:

The PROFS service at RAL means that staff who have no previous computing experience are now using a computer as one of the tools of their trade. Indeed PROFS was used in the preparation of text for this Annual Report as it allows easy document transmission and co-editing.

Significant progress has been made in two areas of administrative computing.

The IBM 3032 at RAL has been used throughout the year as a development machine for administrative computing systems. The work has involved the installation of many software components, including the MVS/JES3 operating system and packages to control a terminal network and provide menu-driven program development facilities. Three application projects are under development on the IBM 3032: postgraduate studentships, finance systems and payroll/personnel systems. RAL staff were involved in design studies for the first of these and have been responsible for installing and maintaining the large commercial packages used for the others.

A large and increasing part of RAL administrative activity is handled by decision support systems based on INFO, a small relational database system, and STATUS, a database system for handling full-text databases. During this year, the Library bibliographic and circulation control system has gone into full production, and its success has necessitated work on performance improvements. Decision support systems for other divisions of the Laboratory have been developed to meet changing user requirements. These systems allow both scientific and administrative staff to extract selected information from large amounts of data by entering simple commands at a terminal. Another large system built this year handles a central mailing list and assists administrative users with the distribution of large quantities of Laboratory publications.

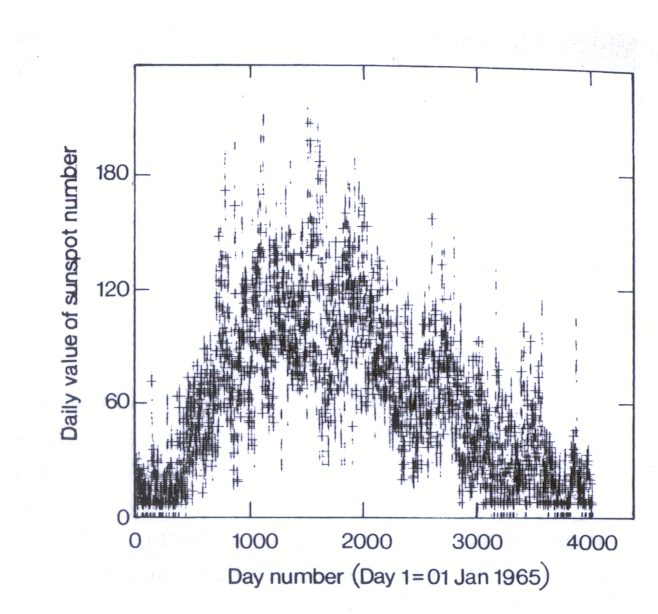

Modern experimental apparatus, and in particular scientific satellites, can generate data at the rate of millions of bits per second. Usually, the large volumes of data are processed by fairly standard overnight batch runs. However, finding the sector of data relevant to a particular scientific problem requires sophisticated database retrieval. Such a system has been developed for the Solar-Terrestrial Physics World Data Centre at RAL (see Fig 5.2). It is a small interactive system which processes indexes to the main data, giving users at terminals fast access to information about the large data sets of interest before they submit batch jobs to process them. Joint work with the staff of the World Data Centre has resulted in several such index systems now being available to users in the academic community.

Staff working on scientific databases have continued to serve on committees concerned with future European accelerators, where access to large databases is seen as a real issue.

During the year, the initial implementation of GKS was completed. It was released by ICL on PERQ systems (using PNX) and by RAL on VAX/VMS systems on the RAL site. A GKS User Guide and a Reference Manual have been produced and distributed to users.

GKS is the Draft International Standard for computer graphics programming, and RAL staff have contributed considerably to its evolution. As part of this work, the final editorial changes needed to bring GKS to a full International Standard were made this year. By the end of 1984, GKS was able to drive Tektronix storage tubes, certain Sigma 5100 series terminals, Calcomp 81 plotters and graphics metafiles. The software ran on VAX/VMS and PERQ/PNX. Work was in hand to extend the range of terminals supported and to provide implementations for IBM/CMS and PRIME.

This year has seen the inception of a function known as Service Line. This is intended as a single point of contact for all user queries (see Fig 5.3). It is currently handling about 750 telephone calls per month and deals with many of the simpler queries, leaving the more highly-trained Program Advisors free to deal with the detailed technical problems.

Two regional meetings have been held this year, at Edinburgh and Bristol. These gave local universities and polytechnics a chance to hear about SERC's computing facilities and its plans and to raise questions on all aspects of the Computing Service.

1984 was a busy year for the distribution of information to our widely dispersed user population with about 3,500 manuals and 45,000 newsletters, user notes and manual updates being issued to users of both the Central Mainframes and the Interactive Computing Facility. With this amounting to a stack of paper some 500 feet high, it is easy to see why studies are under way to increase the amount of online documentation.

One large development has been the production of a system allowing output to be routed to a lineprinter attached to a PAD (Packet Assembler-Disassembler) anywhere on the JANET network. This was required especially by several remote sites whose GEC multi-user minis have been phased out and replaced by PADs. Cambridge Ring software was installed on the GEC machine at Swindon and on four of the systems at RAL.

A refurbished VAX 11/750 was installed in March to allow central support of VAX network software. This involves providing help with the initial installation of network software and problem solving at over 30 VAX sites in the UK and overseas (CERN, DESY, Saclay, etc).

The software to support a Hyperchannel link to the central mainframes was installed and tested. This project was in preparation for the possible use of such a link by SNS.

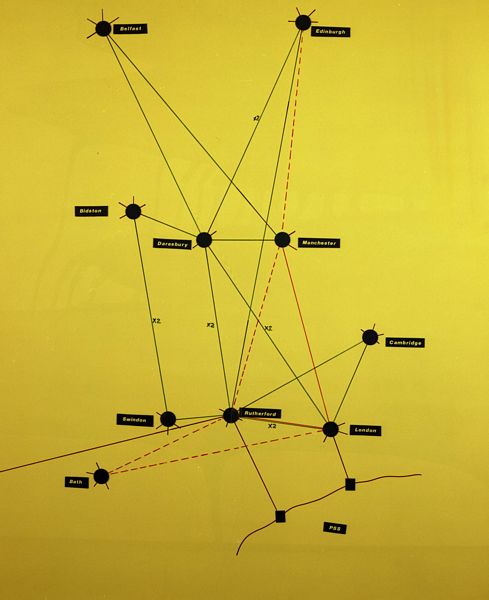

1 April marked the formal handover of SERCNET to the Network Executive and its change of name to JANET (see Fig 5.4). Since then a process of consolidation and expansion has been taking place. Elderly network switches are being replaced with more modern equipment, and new switches have been provided at Belfast, Manchester and Bath. Selected trunk lines have been upgraded from 9.6 kilobits per second (kbps) to 48 kbps, and advantage has been taken where possible of the Kilostream digital transmission services now offered by British Telecom.

During the year the JANET team has doubled the size and communication speed of the gateway from the network into British Telecom's Packet Switched Service, thus providing better communication facilities from the network both to UK industry and overseas.

The Joint Network Team for the Computer Board and Research Councils has continued its programme of development and directed procurement. The programme is aimed at producing a uniform set of open protocols, often called the 'Coloured Books', allowing computers from different manufacturers to communicate in a standard way. The X.25 transport and file transfer protocols are now available for over twenty types of computer and operating system and are in use on hundreds of machines.

The JNT has also been active in coordinating efforts to standardise the networking of personal computers and other microcomputers.

The increased use of PROFS and the Administrative Computing pilot project together with rationalisation of the network and its extension have led to a heavy programme of equipment installation.

During the year, more than 300 offices in major buildings at RAL have been wired with coaxial cable connections for IBM-type full screen terminals, and the buildings linked to the Central Computing System by optical fibres. Over 100 such terminals have been installed at RAL and other Council establishments.

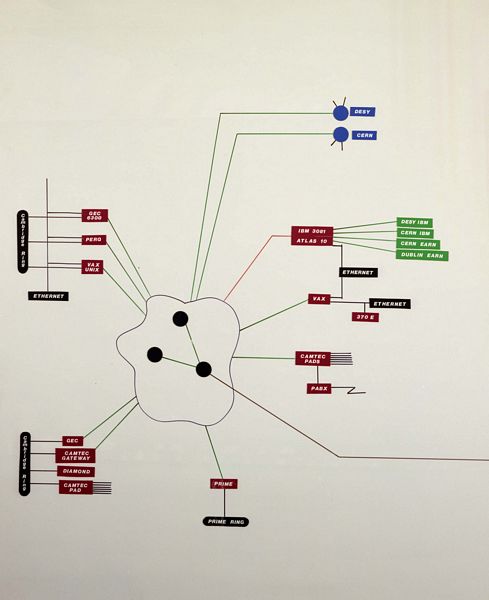

Five GEC machines have been installed at RAL, three for JANET development, one as a test system and one to replace the gateway to British Telecom's Packet Switched Service (PSS). See Fig 5.5. A further machine was installed as a packet-switching exchange (PSE) at DESY.

New terminal maintenance arrangements took effect in April. These provide users with replacement terminals while faulty devices are repaired by a maintenance contractor. The arrangements have produced significant cost savings and dealt with 839 terminal faults in their first six months of operation.

As part of the plan to move computing power away from the central machine and into the user's terminal, the IBM PC has been investigated this year. Expertise has been developed in both hardware and software and advice offered to prospective purchasers. Software has been provided to connect the PC to the Central Mainframes. Work is in hand to connect the machine to JANET using standard protocols.

The Summer School entitled 'Good Practices in the Production and Testing of Software', run for SERC staff, was repeated at Easter. Presentations on aspects of SERC computing by staff from the Laboratories were interspersed among the main course lectures, most of which were given by staff from Salford University.