The Secretary's Department and Central Office Administration provide funds for general SRC facilities. The items funded at the Laboratory include the facilities for general computing, based upon an IBM 360/195 computer system and a network linking over 70 universities and laboratories in this country and overseas centres. The Laboratory has brought into service the computer-based system for the SRC's Grants and Awards Administration. The Energy Research Support Unit offers a service for energy research in universities and polytechnics and the Council Works Unit provides an engineering and building works service to SRC establishments.

An IBM 3032 computer was delivered at the end of March and brought into service in April. The computer has 4 Mbytes of main storage and a set of 8 IBM 3350 disk drives.

The existing dual IBM 360/195 computer system continued in operation under the operating system OS/360 with MVT (Multiprogramming with a Variable number of Tasks). The IBM 3032 runs under the Virtual Machine (VM/370) operating system which permits the concurrent running of many virtual machines within a single real computer. Under the system, one machine has been constructed to provide additional OS and MVT processing capacity and linkage with the IBM 360/195 system to form a three processor complex. At the same time, development work has been performed in another virtual machine to enable the 3032 to take on all the 'front end' functions concerned with handling the data communications of the 360/195 complex, spooling the job input and output, providing the ELECTRIC facilities and scheduling the work queued for the three processor complex; this will release more of the processing power of one of the 360/195 computers for users' jobs. Other development work leading to the introduction of the Conversational Monitor System has been carried out in other virtual machines.

There has been a continued expansion in the number of workstations connected to the 360/195 complex, chiefly as a result of the installation of Interactive Computing Facility's Multi-User Minicomputers, which provide job submission and access to the central facilities.

An indication of the growth of the use of the central computing facilities is given in Fig 5.1, which shows the amount of cpu time used and the number of jobs run over the period 1973-79. The drop shown in 1977 relates to the saturation of the single IBM 360/195 processor and the programme of installing the second 360/195 and relocating the first in the Atlas Centre.

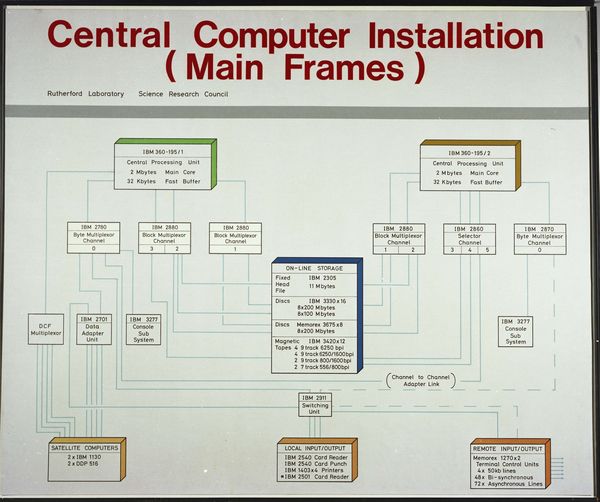

The layout of the three machine complex is shown in Fig 5.2. The front end machine (FEM) providing the HASP, ELECTRIC, and COPPER control facilities has been run mainly on one of the 360/195 computers. Two back end machines provide the bulk of the batch job processing facility, one running on the 360/195 and the other on the 3032.

The IBM 3032 computer executes its instructions more slowly than the 360/195: some instructions are about 10% slower, while others, mainly those performing floating point arithmetic, take more than twice the time. An average ratio between the power of the machines of 2 to 1 has been assumed in assessing the 360/195 cpu hours delivered by the 3032. Table 5.1 shows the usage made of the whole OS MVT system during the year within each of the funding areas. The cpu usage is reckoned in IBM 360/195 equivalent hours. The 3032 itself in the last three quarters has contributed about 20% of the overall capacity. Performance of the system during the year has been generally satisfactory. There has been an increase in the number of interruptions to the service to allow changes to test the three machine systems, but the overall effect on the use made of the system has been marginal.

| Funding area | Jobs | % | CPU Hrs | % |
|---|---|---|---|---|
| Nuclear Physics Board | 348,481 | 42.6 | 6,610 | 66.3 |
| Science Board | 112,160 | 13.7 | 1,570 | 15.7 |
| Engineering Board | 31,760 | 3.9 | 443 | 4.4 |
| Astronomy, Space & Radio Board | 81,871 | 10.0 | 647 | 6.5 |
| SRC Central Office and Central Computer Operations | 145,934 | 17.9 | 367 | 3.7 |
| Other Research Councils | 78,524 | 9.6 | 280 | 2.8 |
| Government Departments | 18,826 | 2.3 | 57 | 0.6 |
| Totals | 817,556 | 100.0 | 9,974 | 100.0 |
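
The percentage columns of the table can be re-derived from the job counts and cpu hours. The sketch below is only an illustrative check, written in Python; the function names are invented for the example, and `equivalent_195_hours` simply applies the assumed 2 to 1 power ratio quoted in the text to a figure of 3032 cpu hours.

```python
# Job counts and cpu hours (IBM 360/195 equivalent) transcribed from the table.
USAGE = {
    "Nuclear Physics Board": (348_481, 6_610),
    "Science Board": (112_160, 1_570),
    "Engineering Board": (31_760, 443),
    "Astronomy, Space & Radio Board": (81_871, 647),
    "SRC Central Office and Central Computer Operations": (145_934, 367),
    "Other Research Councils": (78_524, 280),
    "Government Departments": (18_826, 57),
}


def totals(usage):
    """Return (total jobs, total cpu hours) over all funding areas."""
    return (
        sum(jobs for jobs, _ in usage.values()),
        sum(cpu for _, cpu in usage.values()),
    )


def shares(usage):
    """Return {area: (jobs %, cpu %)}, each rounded to one decimal place."""
    total_jobs, total_cpu = totals(usage)
    return {
        area: (round(100 * jobs / total_jobs, 1), round(100 * cpu / total_cpu, 1))
        for area, (jobs, cpu) in usage.items()
    }


def equivalent_195_hours(hours_on_3032, ratio=2.0):
    """Convert 3032 cpu hours to 360/195 equivalent hours (2 to 1 assumed)."""
    return hours_on_3032 / ratio


if __name__ == "__main__":
    print(totals(USAGE))
    for area, (jobs_pct, cpu_pct) in shares(USAGE).items():
        print(f"{area}: {jobs_pct}% of jobs, {cpu_pct}% of cpu hours")
```

The contrast between the two percentage columns is the point of interest: the Nuclear Physics Board submits under half of the jobs but takes about two thirds of the cpu time.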

In March at the request of the Natural Environment Research Council, the UNIVAC 1108 system previously housed at the Institute of Hydrology in Wallingford was re-installed alongside the IBM computers at the Rutherford Laboratory, where the computer is now operated by Laboratory staff. Notwithstanding the age of the machine an acceptable service was being provided within 14 days of its closure at Wallingford.

The FR80 microfilm recorder continued to provide a service for users requiring high quality output on photo-sensitive hard copy paper, microfiche and microfilm. During the year a number of film sequences were made on the device. Some were designed to demonstrate time-dependent behaviour in the solution of problems arising in the fields of astronomy and engineering.

When the IBM 3032 acts as a front end (handling telecommunications and primary input and output) for the two IBM 360/195s, they will undertake the arithmetic tasks for which they are more suited. The 3032 can also be used to introduce new interactive services (such as the Conversational Monitor System) and to facilitate system software development through its VM (Virtual Machine) hypervisor.

Tests have confirmed that the IBM 3032 computer executes sequences of non-arithmetic instructions typical of operating system software at about the same rate as the 360/195. The plan of adapting workstations to the more modern and versatile VNET code was modified as a result. It was decided to introduce the 3032 to the front end functions performed by HASP as a means of changing the roles of the computers more quickly and thus releasing the greater arithmetic power of the 360/195 previously made inaccessible by front end functions. Locally-produced modifications have been implemented to prevent requests for input/output operations from causing VM to suspend the OS MVT and HASP virtual machine, and testing of the resultant system in an operational environment has been started.

Meanwhile work has continued on adaptations to VNET to make it drive the existing network facilities and to interface it with other established parts of the software system.

The major activity during the first half of the year was to provide support for three-character identifiers both in ELECTRIC itself and within its many associated utility programs and packages. The scheme adopted was to keep the existing two-character identifiers unchanged and to use the letter O as a prefix for the three-character identifiers, giving in excess of 1000 new identifiers. The first batch of these was issued to general users towards the end of September.
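
As a rough check on the "in excess of 1000" figure, the sketch below counts the identifiers that the prefix scheme yields. It assumes, purely for illustration, that each of the two characters following the prefix may be any upper-case letter or digit; the report does not state the permitted character set.

```python
import string

PREFIX = "O"  # the letter O, as described in the text
# Assumption (not stated in the report): letters A-Z and digits 0-9 are allowed.
ALPHABET = string.ascii_uppercase + string.digits


def three_char_identifiers(prefix=PREFIX, alphabet=ALPHABET):
    """Yield every identifier formed as the prefix plus two further characters."""
    for first in alphabet:
        for second in alphabet:
            yield prefix + first + second


ids = list(three_char_identifiers())
print(len(ids))  # 36 * 36 = 1296
```

Under this assumption the scheme gives 1296 identifiers, which is consistent with the figure quoted; letters alone would give only 26 × 26 = 676.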

The number of simultaneous users was increased from 55 to 60 in January without significant deterioration in performance. The continued growth in the user population gave rise to the requirement for more file space and this was achieved by moving the level 2 archive to a 3330 model 11 disk in January (additional 70,000 blocks) and the on-line file store to a 3350 disk in April (additional 62,000 blocks). The latter move was accompanied by the performance enhancement of siting the most frequently used files on the fixed-head part of the 3350 disk.

Turning to user facilities, a new decoder was written early in the year to allow positional parameters on all ELECTRIC commands and edit sub-commands. Keyword parameters can still be used and may be interspersed with the positionals. The decoder allows commands to be abbreviated to the minimum number of characters which retains unambiguity and edit sub-commands to be abbreviated to single letters by the omission of the $ prefix.
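
The abbreviation rule can be sketched as a prefix match that succeeds only when exactly one command fits. This is an illustration of the idea, not the ELECTRIC decoder itself: the command list is hypothetical (only OBEY is named in this report), and parameter handling is left out.

```python
# Hypothetical command set for illustration; only OBEY appears in the report.
COMMANDS = ["EDIT", "ENTER", "LIST", "LOGOUT", "OBEY"]


def resolve(abbrev, commands=COMMANDS):
    """Return the single command that abbrev abbreviates, else raise ValueError."""
    abbrev = abbrev.upper()
    if abbrev in commands:  # an exact name always wins
        return abbrev
    matches = [c for c in commands if c.startswith(abbrev)]
    if len(matches) == 1:
        return matches[0]
    if not matches:
        raise ValueError(f"unknown command: {abbrev}")
    raise ValueError(f"ambiguous abbreviation {abbrev}: {matches}")


def shortest_abbreviations(commands=COMMANDS):
    """Map each command to the shortest prefix that identifies it uniquely."""
    result = {}
    for cmd in commands:
        for n in range(1, len(cmd) + 1):
            prefix = cmd[:n]
            if sum(c.startswith(prefix) for c in commands) == 1:
                result[cmd] = prefix
                break
        else:
            result[cmd] = cmd  # no shorter unique prefix exists
    return result
```

With this command set, `O` is enough for OBEY, while EDIT and ENTER need two characters each because they share their first letter.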

A command macro facility has been implemented to allow entries in a file to be processed as if they were commands entered from a terminal. A new command, OBEY, activates the file of commands which are normally processed sequentially. A set of sub-commands, which must also be stored as entries in the obey file, allow the user to control the flow of commands by branching operations, to set and test variables, to test the ELECTRIC fault number and to read from and write to the terminal. The obey file may itself contain an OBEY command; obeys may be nested down to five levels.
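
The sequential processing and the nesting limit can be sketched as a small recursive interpreter. The representation of obey files as a dictionary of entry lists, the `execute` callback and the error class are all assumptions made for the example; the branching, variable and terminal sub-commands are omitted, and the outermost OBEY is counted here as level one.

```python
MAX_OBEY_DEPTH = 5  # from the text: obeys may be nested down to five levels


class ObeyNestingError(RuntimeError):
    """Raised when OBEY commands are nested more deeply than allowed."""


def run_obey(name, files, execute, depth=1):
    """Process the entries of obey file `name` in order.

    files   -- dict mapping a file name to its list of entries (hypothetical layout)
    execute -- callable invoked with each ordinary command entry
    """
    if depth > MAX_OBEY_DEPTH:
        raise ObeyNestingError(f"OBEY nested deeper than {MAX_OBEY_DEPTH} levels")
    for entry in files[name]:
        words = entry.split()
        if words and words[0].upper() == "OBEY":
            # A nested OBEY runs to completion before the next entry is taken.
            run_obey(words[1], files, execute, depth + 1)
        else:
            execute(entry)


if __name__ == "__main__":
    demo = {"MAIN": ["LIST", "OBEY SUB", "LOGOUT"], "SUB": ["EDIT X"]}
    run_obey("MAIN", demo, print)
```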

Other improvements to the system include an extension to the ELECTRIC text layout processor to provide better tabbing facilities and the ability to edit the overflow portions of lines which are longer than the terminal page width.

The Conversational Monitor System (CMS) is an interactive time-sharing system which runs in a virtual machine under the VM/370 operating system on the IBM 3032. It gives users the ability to create and update files, to run jobs in a CMSBATCH virtual machine and to run jobs interactively in their own virtual machines.

Work was begun on evaluating the CMS facilities and planning a general service for users. Some enhancements have been implemented including an improved version of the LISTFILE command which lists a user's files and the provision of message and mail facilities.

Because users of the central computer system have a requirement for job submission to the MVT batch in the 360/195s, it was necessary to provide a system which makes the production and parameterisation of OS Job Control Language (JCL) much easier than is possible in the standard CMS system, which does not require JCL. This has been done by implementing the PLANT/SUPPLY program from the GEC 4080 Data Editing System. This program is written in the language BCPL and the work has involved coding an interface between this program and the CMS file store.

During the year the IMPACT system has been improved and extended. A code generator for Tektronix 4000 series displays has been added and output from IMPACT may now be sent to the FR80, to disk files or to Data Editing System GEC 4080s for viewing. The error reporting has been improved and log-axis options added to the graph and histogram routines. Many chapters of the Graphics User's Guide have been completed.

A copy of SMOG and the high level graphics routines is being installed on the Bubble Chamber Group's DEC VAX 11/780 and all the routines of IMPACT have been generated so that they may be used under CMS on the IBM 3032.

The FR80 microfilm recorder has again been used to produce the main part of the annual publication for the Cambridge Crystallographic Data Centre. This year's publication was volume 10, Molecular Structures and Dimensions, Bibliography 1977-78, Organic and Organometallic Crystal Structures. It contained 3018 entries arranged in 86 chemical classes with 1476 cross-reference entries. The page layout used was the same as that for volume 9; there were 6 different indexes with 395 pages made by the FR80 in 45 minutes plotting time for the final production run.

In addition to the main library catalogues, the archives catalogues and the address labels facility, a procedure has been made for the Photographic Section to index their collection of negatives. Input of the first year's entries showed that the method is feasible, and work is continuing on the entry of the remainder. It should greatly improve Laboratory access to over 30,000 negatives.

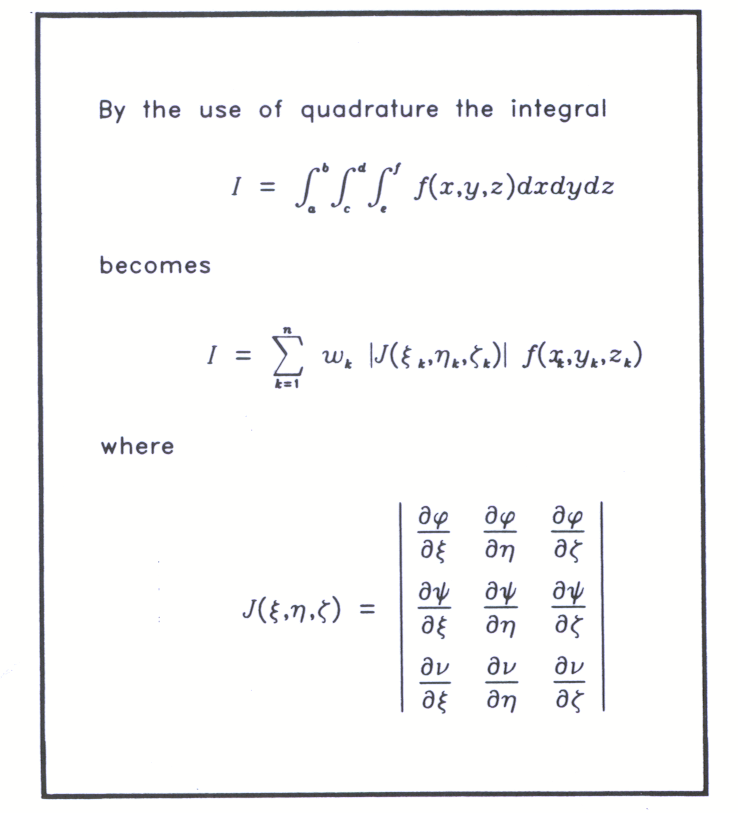

A general program has been developed to allow the use of a simple language for the layout of mathematical text and to include diagrams with the text. It is being used for the documentation of the Finite Element Subroutine Library (see Fig 5.3 for a sample output).

Several developments this year, both within SRC and elsewhere, have served to emphasise the growing requirement in the research community for fully integrated network access to central computing facilities such as those at Daresbury and Rutherford Laboratories as well as to more specialised machines such as mini-computers acting as either Interactive Computing Facility Multi-User Minis or as enhanced workstations.

The establishment of the Cray service at Daresbury is beginning to bring about a situation in which it is now common for an external site to require equally good access to both Daresbury and Rutherford central computing facilities. It is anticipated that the development of the Spallation Neutron Source at Rutherford Laboratory and the Synchrotron Radiation Source and Nuclear Structure Facility at Daresbury Laboratory will ensure the continuation of this trend.

The increasing degree to which networks are now permeating the whole of the university and Research Councils community is exemplified by the requirement to connect several ICF MUMs to the associated University Computer centres in addition to their being hosts on SRCnet. Planning and implementation of the future expansion of SRCnet are now undertaken in consultation with the recently established Joint Network Team of the Computer Board and Research Councils.

In order to provide file transfer by means of job submission between Rutherford and CERN, a Daresbury back-to-back RJE system based on a PDP11/34 has been installed at Rutherford Laboratory and is in service. A similar connection is being installed between Rutherford and DESY laboratories.

Use of the US ARPAnet link from the Laboratory's central system via University College London continues, with some 25 research groups funded by SRC making use of facilities in the USA. In view of the imminent provision of the International Packet Switched Service by the PTTs, the future of the current arrangements for this link is under review.

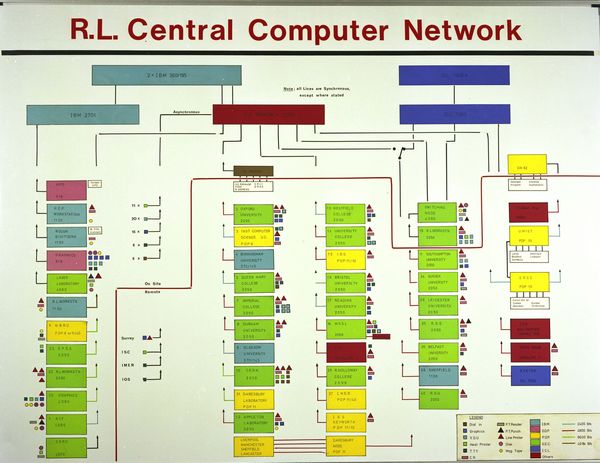

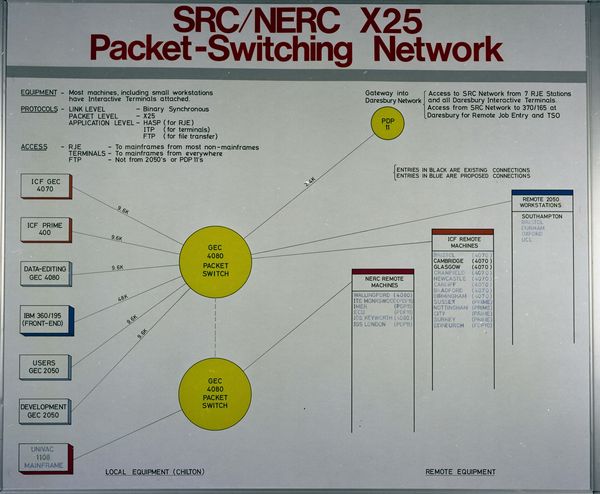

In broad terms the network has doubled during the year, both in the number of machines connected and traffic carried (see Fig 5.4). There are now 25 hosts connected, in addition to the mainframes; 13 of these are connected to the Laboratory's X25 Packet Switching Exchange (PSE) and include 6 ICF MUMs, the Data Editing system and Royal Observatory Edinburgh: all of these are GEC 4000 series systems. Four network 2050 workstations are also connected.

The Laboratory's central IBM system typically handles 5,000 network calls in a week, consisting of about 1 million packets carrying approximately 100 Mbytes of data. The build-up of traffic through the X25 exchange has been gradual. During an hour of working under recent peak loading conditions, typically a total of 150 calls are made, reaching a peak of 20 simultaneously and a total data throughput average of 1 Kbyte per sec.
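
The figures above imply some simple averages, worked through in the sketch below. It assumes decimal units (1 Mbyte = 10^6 bytes, 1 Kbyte = 1000 bytes), which the report does not specify, and the variable names are chosen for the example.

```python
# Weekly figures for the central IBM system, as quoted in the text.
CALLS_PER_WEEK = 5_000
PACKETS_PER_WEEK = 1_000_000
BYTES_PER_WEEK = 100 * 10**6  # assumes 1 Mbyte = 10**6 bytes

packets_per_call = PACKETS_PER_WEEK / CALLS_PER_WEEK  # 200 packets
bytes_per_packet = BYTES_PER_WEEK / PACKETS_PER_WEEK  # 100 bytes
bytes_per_call = BYTES_PER_WEEK / CALLS_PER_WEEK      # 20,000 bytes

# Peak-hour figures for the X25 exchange, as quoted in the text.
PEAK_CALLS_PER_HOUR = 150
PEAK_BYTES_PER_SEC = 1_000  # assumes 1 Kbyte = 1000 bytes

peak_hour_bytes = PEAK_BYTES_PER_SEC * 3600                # 3.6 million bytes
peak_bytes_per_call = peak_hour_bytes / PEAK_CALLS_PER_HOUR  # 24,000 bytes

if __name__ == "__main__":
    print(packets_per_call, bytes_per_packet, bytes_per_call)
    print(peak_hour_bytes, peak_bytes_per_call)
```

On these assumptions an average call carries about 200 packets of roughly 100 bytes each, and the per-call volume seen at the exchange in a peak hour is of the same order as the weekly average for the central system.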

Considerable effort during the year has been directed towards increasing the reliability of the network. In addition to the Network Development Committee of some years' standing, there is now a Network Operations Committee, with representatives from both Daresbury and Rutherford Laboratories, which deals with the issues raised by the need to keep the network in a continuous state of operation as well as the requirements for operator notification to the community about the status of facilities on the network.

The EPSS gateway connection to the 360/195s continues to provide an important means of terminal access for members of the community whose institutions do not have a local host computer or workstation to provide access to SRC computing facilities.

A notable achievement has been the completion of the networking programme for the GEC 4000 series machines which can now offer the full range of protocols accepted for use on SRCnet. Interactive Terminal Protocol enables a user either to access another host or to use his home machine from elsewhere on the network. Implementation of the UK Network Independent File Transfer Protocol now enables users to transfer files between hosts (currently only to other 4000 series machines); this has been of particular importance to the ICF in maintaining remote MUM operating systems from the central site. Finally, implementation of the SRC network adaptation of IBM's HASP multi-leaving protocol enables simultaneous RJE connection to more than one HASP host on the network.

An important step towards network integration occurred with the completion of a prototype SRCnet to DECnet gateway by York University under an SRC contract. This has successfully provided terminal access from SRCnet to the York DEC System 10 and vice versa.

The Post Office's plan to make PSS available in 1980, together with the recent publication of the PSS protocol specification details, has stimulated study of the relationship between the SRC and PSS versions of X25 protocol. Planning for an SRCnet gateway to PSS has begun; it is expected to take over from the current EPSS gateway at the time when EPSS closes.

There has recently been considerable activity in the field of high level network protocols, largely as a result of the imminence of PSS and the creation of the Data Communications Protocols Unit of the Department of Industry. The Laboratory is actively involved in the definition of Job Transfer and Manipulation Protocol and the first maintenance revision of Network Independent File Transfer Protocol. It also participated in work leading to the current draft version of the Transport Service.

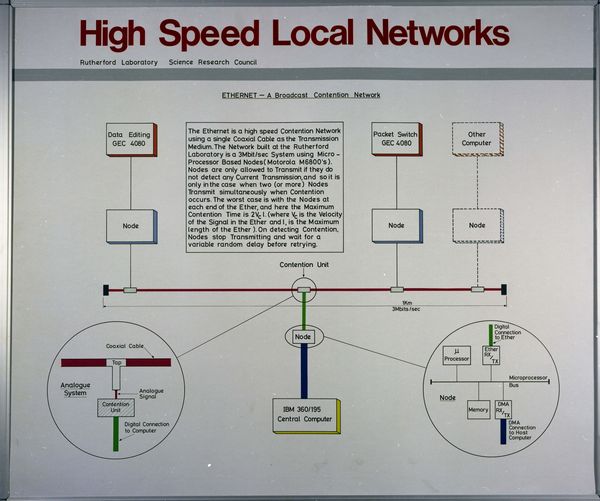

Two new developments, which the Laboratory has been actively pursuing for several years, are broadcast satellite data communication and local area networks. The STELLA project and ETHERNET are described below. The ETHERNET is one particular technique in use for local area networks and the Laboratory has also collaborated with Cambridge University in the design of two microelectronic circuits using Ferranti uncommitted logic arrays for use in a future version of the Cambridge ring, which is an alternative technology for local area networks. One of the most important new areas of study which is now opening up is that of the relationship and interconnection of local area networks, packet switching networks, such as SRCnet and PSS, and satellite broadcast networks.

The STELLA project, which will enable physicists working at CERN and DESY to transmit data via the OTS satellite at 1 Mbit/sec to the Chilton site, has progressed rapidly during the year. A ground station and dish (see Fig 5.5) were installed by Marconi Ltd and handed over to the Laboratory in November. At the same time, CERN staff assisted with the final installation of a special-purpose interface between the link driving computer, a GEC 4080 situated in the main computer hall, and the ground station. Both the ground station and the CIM (Computer Interface Module) have been working reliably since their installation.

A special-purpose modem, designed to work at high speed (2 Mbit/sec), was developed by Marconi to convert the digital information into an analogue form for transmission via the satellite. This modem initially gave problems due to its sensitivity to strings of repeated ones or zeros. A slight redesign of the CIM seems to have mostly eliminated this problem and data have been reliably sent to the satellite and back. Currently we are experiencing a bit error rate which, in the worst case, is around $10^{-7}$. Such an error rate would allow the system to function satisfactorily. However it is hoped to improve the rate to the $10^{-9}$ or $10^{-10}$ level as further small systematic problems are removed.
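
To give a feel for what these error rates mean in practice, the sketch below converts a bit error rate into an average interval between errors and into the chance that a block of data arrives undamaged. It takes the 1 Mbit/sec transmission rate quoted for STELLA as the link speed and assumes that bit errors are independent, which may not hold on a real satellite channel; the function names and the 8000-bit block size are illustrative only.

```python
def mean_seconds_between_errors(bit_rate, ber):
    """Average time between bit errors on a link kept continuously busy."""
    return 1.0 / (bit_rate * ber)


def probability_block_is_clean(block_bits, ber):
    """Chance that a block arrives with no bit in error.

    Assumes independent bit errors (an assumption; real errors may cluster).
    """
    return (1.0 - ber) ** block_bits


LINK_RATE = 1_000_000  # bits per second, the 1 Mbit/sec figure quoted for STELLA

if __name__ == "__main__":
    for ber in (1e-7, 1e-9, 1e-10):
        gap = mean_seconds_between_errors(LINK_RATE, ber)
        clean = probability_block_is_clean(8000, ber)  # hypothetical 1000-byte block
        print(f"BER {ber:g}: one error every {gap:.0f} s on average, "
              f"P(clean 8000-bit block) = {clean:.6f}")
```

At the worst-case rate this is roughly one bit error every ten seconds of continuous transmission; at the hoped-for rates the interval stretches to something like seventeen minutes or nearly three hours.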

A Marconi ground station has been installed at the CERN Laboratory and was handed over to the Swiss PTT in December. It is hoped that the CERN station will be transmitting to the satellite by the end of January 1980 and that data will be transmitted to Rutherford Laboratory from CERN during February 1980. The other participants (Pisa, DESY and Saclay) will become active from the middle of the year; Pisa will be the first, possibly as early as April 1980.

The initial system will enable 1600 bpi magnetic tapes to be copied from CERN to Rutherford Laboratory. An early extension at CERN will allow physicists to use CERNET to transmit their data to their link-driving NORD 10 computer. Data will then be recorded on magnetic tape to be transmitted later via the satellite. At Rutherford Laboratory a similar extension is being considered which would enable the data to be transmitted to the IBM system via the ETHERNET.

The Laboratory has been involved for several years in the development of hardware for local high-speed data transmission. The ETHERNET approach uses a coaxial cable, known as the ETHER, to which equipment in the form of ETHERNET nodes may be connected at any point. The ETHER can be up to a kilometre or so in length, and should be able to support speeds of up to 3 Mbit/sec. This compares with the much lower speeds achievable on existing long-distance networks, typically up to 9.6 kbit/sec, or at most 48 kbit/sec on special wideband lines.

The node hardware and software has been designed and built at the Laboratory and uses a Motorola M6800 microprocessor. To use the ETHERNET for high-speed communication between two or more computers it is necessary to connect them via an ETHERNET node. Computers so connected are then referred to as ETHERNET "hosts". The general scheme is illustrated in Fig 5.6, in which the method of tapping into the cable is reminiscent of that used for providing Cable TV.

The ETHER itself functions as a passive broadcasting medium. When a node has some information to send to another node, it broadcasts it onto the ETHER with the appropriate node address on the front. Although all the nodes can detect the transmission, only the addressed node will actually read it in. As the ETHER can carry only one transmission at a time, the hardware refrains from transmitting until the ETHER seems to be quiet. If two nodes begin broadcasting simultaneously, the resulting interference between the transmissions is detected, and the transmissions are aborted and re-issued.
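
The listen-before-transmit rule and the handling of simultaneous broadcasts can be illustrated with a small time-slotted simulation. This is a sketch under stated assumptions rather than a description of the node hardware: time is divided into slots, each node has one frame to send, and a node that has collided retries after a random delay. The random delay is an assumption of the example, since the text says only that the transmissions are re-issued.

```python
import random


def simulate(ready_slots, frame_slots=3, max_backoff=4, seed=1):
    """Slotted sketch of carrier sense with collision detection.

    ready_slots -- dict mapping node name to the slot at which it first
                   wants to send its single frame
    Returns a list of (slot, node) pairs, one per successful transmission,
    in chronological order.
    """
    rng = random.Random(seed)
    pending = dict(ready_slots)
    busy_until = 0  # first slot at which the ether is quiet again
    delivered = []
    slot = 0
    while pending:
        if slot >= busy_until:  # carrier sense: only start when the ether is quiet
            senders = sorted(n for n, t in pending.items() if t <= slot)
            if len(senders) == 1:  # a sole sender gets the ether
                node = senders[0]
                delivered.append((slot, node))
                busy_until = slot + frame_slots
                del pending[node]
            elif len(senders) > 1:  # collision: all abort and re-issue later
                for node in senders:
                    pending[node] = slot + 1 + rng.randrange(max_backoff)
        slot += 1
    return delivered


if __name__ == "__main__":
    # A and B start together and collide; C becomes ready a little later.
    for slot, node in simulate({"A": 0, "B": 0, "C": 5}):
        print(f"slot {slot}: {node} transmits")
```

Whatever the random delays turn out to be, every frame is eventually sent and no two successful transmissions overlap, which is the property the scheme is meant to guarantee.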

The software in the nodes provides a simple point-to-point protocol, whereby each successful transmission is acknowledged by the receiving node. Absence of an acknowledgement within a given time interval implies an unsuccessful transmission, which must therefore be re-broadcast.
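
The acknowledgement rule amounts to a send-and-wait loop, sketched below. The `channel` callable stands in for one broadcast plus the wait for an acknowledgement, returning `True` if the acknowledgement arrived within the time interval; the retry limit and the `lossy_channel` helper are assumptions introduced for the example, as the report gives no limit on re-broadcasts.

```python
def send_with_ack(frame, channel, max_attempts=5):
    """Broadcast frame until it is acknowledged; return the attempts used.

    channel(frame) must return True if an acknowledgement arrived in time,
    False if the timer expired. max_attempts is an assumed safety limit.
    """
    for attempt in range(1, max_attempts + 1):
        if channel(frame):
            return attempt
        # No acknowledgement within the interval: treat as unsuccessful
        # and re-broadcast on the next pass of the loop.
    raise TimeoutError(f"no acknowledgement after {max_attempts} attempts")


def lossy_channel(drop_first, received):
    """Build a test channel that loses the first drop_first transmissions."""
    sent = {"count": 0}

    def channel(frame):
        sent["count"] += 1
        if sent["count"] <= drop_first:
            return False  # frame (or its acknowledgement) lost
        received.append(frame)
        return True

    return channel


if __name__ == "__main__":
    inbox = []
    attempts = send_with_ack(b"hello", lossy_channel(2, inbox))
    print(attempts, inbox)
```

A consequence of this design is that a lost acknowledgement looks the same to the sender as a lost frame, so a real receiver would need some way of discarding duplicates; the sketch does not attempt this.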

At the present time, four ETHERNET nodes have been built, and as a preliminary step towards bringing them into production use it is intended to connect three of them to local machines on the main SRC Network, namely the 360/195, the 4080 packet switch and the Data Editing 4080. This will allow the performance of ETHERNET to be evaluated in a real environment, and it is hoped that it will also relieve the main network of a significant volume of traffic.

Connecting ETHERNET to the SRC Network implies that X25 packets will be carried across it. However there is no intention at the present time of implementing X25 protocols inside ETHERNET. The packet protocol remains entirely the concern of the hosts, the nodes being in fact unaware that the data they are carrying consist of X25 packets.
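
The division of responsibility described here, in which the nodes carry host data without interpreting it, can be sketched as simple encapsulation. The frame layout, field names and functions below are hypothetical and chosen only to illustrate the idea; the report does not describe the actual frame format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EtherFrame:
    """Hypothetical frame layout, for illustration only."""
    destination: int  # address of the receiving node
    source: int       # address of the sending node
    payload: bytes    # opaque to the node; here it happens to be an X25 packet


def node_send(destination, source, host_data):
    """Node side: wrap whatever bytes the host supplies, without inspecting them."""
    return EtherFrame(destination, source, bytes(host_data))


def node_receive(frame, my_address):
    """Node side: pass the payload to the host only if the frame is addressed here."""
    if frame.destination != my_address:
        return None
    return frame.payload


if __name__ == "__main__":
    x25_packet = b"\x10\x01\x0b"  # arbitrary bytes standing in for an X25 packet
    frame = node_send(destination=2, source=1, host_data=x25_packet)
    print(node_receive(frame, my_address=2))
    print(node_receive(frame, my_address=3))
```

Because the payload is never examined, the same nodes could carry any other host protocol without change.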

Following the recommendations of the Network Unit, the Computer Board and the Research Councils have established a Joint Network Team, based at Rutherford Laboratory, to co-ordinate the evolution of computer networking among universities and Research Council institutes. The aim is to provide common data communications facilities so that users throughout the academic community may have access to a wide range of local and remote computing resources. The initial phase of the Team's programme includes support for the development of widely applicable components to be used in constructing networks and attaching computers and terminals to them. Strong emphasis is being placed on adherence to the emerging national and international standards for data communications protocols for open system interconnection. The Team also collaborates with computer centres in the formulation of specific local and remote networking plans to ensure that these conform to the overall national objective.

The interactive Grants administration system went live on the ICL 2904 computer at the end of June. This date was chosen as being one likely to cause the least inconvenience to the users at Central Office, Swindon. The live 2904 system was preceded by several months of running in parallel with the previous batch system, the computing for which was provided by the 1906S computer at Sheffield University. During that period, programming work was concentrated on checking out the conversions of numerous report programs used extensively by Central Office to monitor the progress of individual grants and to obtain statistics for general grants administration. The 2904 system was checked out and evaluated by regular meetings of the computer users liaison panel.

The first three months of operating on the 2904 showed up a number of teething troubles as well as bringing to light a few problems with data in the 1906S system. However, at no time was a significant amount of data irretrievably lost or mis-processed. The introduction of an interactive service and data base software represented an increase in the complexity of the system over the batch system, but the development of suitable standards and procedures has meant that the new system is not appreciably more difficult to maintain than the old.

Since the end of September, the 2904 system has been in a more settled state. Work has concentrated on enhancements which will lead to a better use of the system, and on the establishment of recognised procedures for processing enquiries and fault reports. The major programming exercise was the implementation of automatic indexation of research grants to take into account staff salary rises and increases in London weighting. The first run of this program indexed over 3000 grants and authorised an additional commitment of £7.5 million.

The 2904 cpu has been very reliable, although a number of problems have arisen on peripherals including the local lineprinter and with communications to Swindon. The latter were further complicated by shortcomings in the ICL communications software. The machine now has 96K words of store, following a recent upgrade which did much to improve throughput and response on the terminals. The ICL EXEC 1S software has been quite reliable and it is now planned to implement EXEC 2S. This should make it easier to control the work from Central Office and reduce the need for operator cover at Rutherford Laboratory during the day.

Some data analysis work for the Awards system has been carried out at Rutherford Laboratory, but the main need has been for manpower to initiate the collaboration between Swindon and Rutherford Laboratory in designing the new system. Studentships are still handled by programs running on the Sheffield computer.