Most of the Laboratory's programmes fall within subject areas covered by one of the four Boards of SERC and are fully funded by that Board. The first four sections of this Report cover such work. There are some programmes that cover areas relevant to more than one Board and in those cases Council provides the funds through the Secretary's Department or Central Office Administration. Such programmes - which include Computing, Networking, the Energy Programme and Council Works Unit - are described in this section of the Report.

The computing facilities managed by RAL are amongst the most powerful in Europe. They provide a service to more than 3000 users in UK universities and polytechnics. About half of the computing power is distributed through the universities and the rest is provided by central machines at RAL. The central facilities include an IBM 3032 as a front end for two IBM 360/195 mainframes. The distributed systems include about 100 minicomputers situated in the universities and these provide interactive computing and remote entry to the central systems.

All these computer systems are connected by a network using private telephone lines and it is possible to access the central computers and the majority of the distributed computers via a terminal connected to any of the systems. The Laboratory has a major development programme in network communications including wide area and local networking. The Joint Network Team, funded jointly by the Computer Board and research councils, is based at RAL and is concerned with the introduction of networking standards throughout the universities and the research councils and with the interconnection of research council and university networks.

The Laboratory is also involved in several collaborative computing ventures with industry. One example is the development of software for the PERQ, a powerful single-user computer, in collaboration with International Computers Ltd (ICL). We are also involved in two pilot projects on office automation funded by the Department of Industry: one is with ICL and involves the PERQ; the other is with Data Recall and involves Diamond word processors.

An Energy Research Support Unit based at RAL provides support for energy research in UK universities and polytechnics. The programme is selected and controlled by SERC's Energy Committee. Some facilities are centred at the Laboratory, including a fluidised bed combustion facility and a vertical axis windmill. In addition to this experimental work, the Unit's activities include co-ordinating programmes in the universities and nationalised industries.

The Laboratory provides a Council-wide consulting and building works service to SERC establishments through the Council Works Unit. These activities include the design of buildings for telescopes on La Palma and Hawaii.

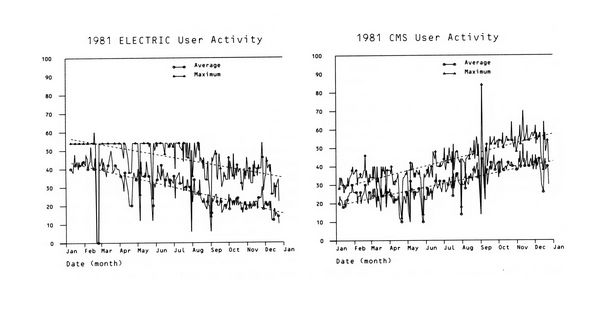

During the year, the IBM 3032 computer has been enhanced and the new interactive system, CMS (Conversational Monitor System) has been introduced as a service. The VNET software came into operation in December and this will eventually provide access to CMS for all the existing users. In order to apportion the existing facilities fairly, a new system of allocation units has been introduced to CMS and to ELECTRIC (the old interactive system). Users are beginning to move from ELECTRIC onto CMS, as planned.

With the presentation of the report of the Computer Review Working Party (CRWP) some changes in the organisation of SERC relating to computing took place. The Facility Committee for Computing was replaced by a Central Computing Committee, which has responsibility for the IBM systems and the multi-user minicomputers, formerly run by the Interactive Computing Facility under the Engineering Board. Consequently, a report on these machines appears in this section this year. The findings of the CRWP were generally accepted and work has begun on the implementation of the programme to replace the IBM 360/195 computers.

1981 also marked the appearance in England of the PERQ single user computer and the agreement between International Computers Ltd (ICL) and SERC to exploit this interesting new machine. Proposals to connect this to local area networks will keep RAL in the forefront of computing development.

The system has been enhanced by the addition of hardware. The IBM 3032 has had its main memory increased by 2 Mbytes of store and a second-hand additional fixed head file (IBM 2305) attached to increase the paging area available by 11 Mbytes of store. The backing store for all three machines has been increased by 7 Gbytes which will be used to improve performance, reduce disk mounting and provide users with more filestore. The latest configuration of the system is shown in Fig 5.1.

Performance has remained satisfactory, but with the increase in the CMS load the front end has been noticeably short of power, so that little or no batch work has been run on the IBM 3032. Considering the age of the IBM 360/195s, their performance is excellent. The number of CPU hours has remained above 200 per week even though the number of jobs has dropped to 15,000.

The system has remained stable with no hardware or software changes. As well as the normal service, there is an orderly transfer of work to the NERC Honeywell installation at Bidston; the service at RAL is due to cease in March 1982. The numbers of batch jobs and terminal jobs executed per week are 480 and 668, respectively, and this provides 1,330,230.5 standard units of accounting (SUA) per week. The Institute of Hydrology and the Institute of Geological Sciences (London) are the largest user institutes.

Microfiche is still the dominant form of output, and the workload has shown a modest increase over the year. DRIVER software is now in full use in place of that supplied by Information International Incorporated. The FR80 disk was replaced with a much larger one and, after initial problems, enough extra space has been provided to allow the convenient use of multiple fonts. C Eilbeck of Heriot-Watt University made a cine film, Sine-Gordon Solitons, which took 40 magnetic tapes of data and produced 10,000 frames of film.

Apart from the changes required to support VNET, work on MVT/HASP has been restricted to essentials only. A new system had to be generated to support the Memorex disks which were installed early in the year. This also involved the reorganisation of the disk space allocations to take advantage of the extra space. This has not yet been completed because of the performance problems with the disks.

Attention has been given to the perennial task of preventing ELECTRIC's performance from deteriorating too far. It seems that the only effective way of improving the performance of ELECTRIC in the present environment is to reduce the number of users.

VM (Virtual Machine) has benefited from the addition of hardware to the IBM 3032. In March an extra 2 Mbytes of storage almost trebled the space available for CMS. This resulted in very low paging rates, which improved performance and allowed the development of operating systems, eg MVT (Multiprogramming with a Variable number of Tasks) and VM, without serious impact on the users. The addition of a second fixed head file also provided extra fast-access paging space, which will allow a large expansion in the number of simultaneous users with no reduction in service.

A completely new release of VM, VM/SP, was introduced in the summer. This provided new facilities, but also caused problems for ELECTRIC which have now been fixed.

Some local modifications to VM have proved to be necessary. It is policy to reduce these to a minimum, but so far it has been found impossible to eliminate them entirely. Changes were necessary to support VNET and the accounting/control facility introduced in September. These were moved to VM/SP with virtually no effort, so it is hoped that maintenance will not prove to be a problem.

The programme of work is now virtually complete; the interface from VNET to CMS was finished by December. This means that we can now support all networked workstations, and all non-networked workstations that have no secondary consoles. The first workstation was transferred successfully to VNET in November. The programme for transferring the remainder will take several months because of the large number of workstations involved. When complete, it is not expected that any further development of VNET will be necessary.

In the summer a working party was set up to study the problems of transferring to MVS (Multiple Virtual Systems). Time has been spent on looking at our existing system modifications and the way the system is run, to see how essential facilities can best be provided in MVS. The intention is that, as far as possible, existing system modifications should be replaced by standard facilities. A detailed programme of work should be ready by the end of the year. Some system development will be necessary before user jobs can be run on an MVS system.

The only development to ELECTRIC has been the introduction of an allocation and control system. Users are issued with a weekly ration of allocation units (AUs) for each account number. An additional command for querying the rations, the AUs used and the charge factor was provided. The accounting part of this system was installed at the beginning of April. The control part, which prevents users from logging in during prime shift on an account number which has run out of AUs, was installed in September.
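The control rule described above can be illustrated with a short sketch. It assumes a per-account weekly ration, a charge factor that multiplies raw usage, and a prime shift of 09:00 to 18:00; the class and function names, the shift hours and the way the charge factor is applied are all hypothetical, since the report does not give them.

```python
from dataclasses import dataclass

# Hypothetical prime shift (09:00-17:59); the report does not state the hours.
PRIME_SHIFT_HOURS = range(9, 18)


@dataclass
class Account:
    """One account number with its weekly ration of allocation units (AUs)."""
    weekly_ration: float
    used: float = 0.0

    def remaining(self) -> float:
        return self.weekly_ration - self.used


def charge(account: Account, raw_units: float, charge_factor: float) -> None:
    """Record usage; scaling raw usage by a charge factor is an assumption."""
    account.used += raw_units * charge_factor


def may_log_in(account: Account, hour: int) -> bool:
    """Refuse prime-shift logins on an account whose AUs are exhausted."""
    if hour in PRIME_SHIFT_HOURS:
        return account.remaining() > 0
    return True  # outside prime shift this sketch never blocks a login


def start_new_week(account: Account) -> None:
    """Issue a fresh weekly ration by clearing the usage counter."""
    account.used = 0.0
```

Logins outside prime shift are always allowed here, mirroring the statement that the control part acts only during prime shift.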

The average number of simultaneous users gradually decreased during the year as more users gained access to CMS. The Allocation and Control system may also have contributed to this decrease. The terminal response was very variable but has been generally good with fewer than 30 users, the normal situation towards the end of the year.

The VM/SP version of CMS was installed early in the year giving access to the new and more powerful editor, XEDIT, and the improved performance and facilities of the new EXEC processor, EXEC 2. The number of simultaneously active users has increased to around 35 at peak times. Nevertheless, the performance has remained excellent with 98% of trivial commands giving a response time of less than 1 second.

An allocation and control system was developed to provide accounting and control of resources. The mechanism adopted was to provide a special virtual machine, AXEMAN, which runs continuously in disconnected mode and maintains all the details of AU allocations and amounts used for each account number. The VM control program, CP, also sends accounting information to AXEMAN at each time zone boundary and at logoff. A user-callable EXEC file has been provided so that users can query their rations, the current charge factor and the amount of AUs used in the current session.
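The AXEMAN arrangement, a long-running accounting service fed by records from CP, can be sketched as a message-processing loop. In this sketch a thread and a `queue.Queue` stand in for the disconnected virtual machine and the CP-to-AXEMAN messages; the record layout, the `Axeman` class and its methods are hypothetical illustrations, not the RAL implementation.

```python
import queue
import threading
from dataclasses import dataclass


@dataclass
class AccountingRecord:
    """Hypothetical record sent by CP at a time zone boundary or at logoff."""
    account: str
    session: str
    units: float   # AUs consumed since the previous record for this session
    reason: str    # "zone" or "logoff", kept for the audit trail


class Axeman:
    """Keeps AU rations and usage per account number and answers queries."""

    def __init__(self, rations: dict, charge_factor: float = 1.0):
        self.rations = dict(rations)
        self.charge_factor = charge_factor
        self.used = {acct: 0.0 for acct in rations}
        self.session_used = {}
        self.inbox = queue.Queue()

    def run(self) -> None:
        # Process records one at a time until a None sentinel arrives.
        while True:
            rec = self.inbox.get()
            if rec is None:
                break
            self.used[rec.account] = self.used.get(rec.account, 0.0) + rec.units
            key = (rec.account, rec.session)
            self.session_used[key] = self.session_used.get(key, 0.0) + rec.units

    def start(self) -> threading.Thread:
        worker = threading.Thread(target=self.run, daemon=True)
        worker.start()
        return worker

    def stop(self, worker: threading.Thread) -> None:
        self.inbox.put(None)
        worker.join()

    def query(self, account: str, session: str) -> dict:
        """What the user-callable EXEC reports: ration, charge factor, session AUs."""
        ration = self.rations.get(account, 0.0)
        return {
            "ration": ration,
            "charge_factor": self.charge_factor,
            "session_used": self.session_used.get((account, session), 0.0),
            "remaining": ration - self.used.get(account, 0.0),
        }
```

Routing every update through a single queue means only one consumer ever modifies the totals, which is one simple way of keeping the figures consistent when many sessions cross a time zone boundary or log off at once.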

An incremental dump/restore system for the CMS filestore was developed to reduce the frequency of full pack dumping whilst still maintaining the ability to restore to the previous night's backup. Only one full pack dump is taken each night, the disks being dumped in rotation. Also, an incremental dump is taken of all the files which have been created or modified since the last incremental dump, usually the previous night. The restore part of this system allows the restoration of individual files or complete minidisks to a level which is never more than 24 hours old.
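The rotation of full pack dumps combined with nightly incremental dumps can be sketched as follows. The filestore is modelled as a dictionary mapping each file name to its pack, the night it was last modified and its contents; this model and the `BackupSystem` class are assumptions made for illustration. A file is restored from the most recent dump that holds it, which is why the restored copy is never more than one night old.

```python
from dataclasses import dataclass


@dataclass
class Dump:
    night: int
    kind: str    # "full" (one pack) or "incremental"
    files: dict  # file name -> contents captured that night


class BackupSystem:
    def __init__(self, packs: list):
        self.packs = packs            # packs receive a full dump in rotation
        self.dumps = []
        self.last_incremental = -1    # night of the previous incremental dump

    def nightly(self, night: int, filestore: dict) -> None:
        """filestore maps name -> (pack, night_last_modified, contents)."""
        pack = self.packs[night % len(self.packs)]
        full = {n: c for n, (p, _, c) in filestore.items() if p == pack}
        self.dumps.append(Dump(night, "full", full))
        # Incremental: everything created or modified since the last incremental.
        changed = {n: c for n, (_, m, c) in filestore.items()
                   if m > self.last_incremental}
        self.dumps.append(Dump(night, "incremental", changed))
        self.last_incremental = night

    def restore(self, name: str):
        """Return the newest backed-up contents of a single file."""
        for dump in reversed(self.dumps):
            if name in dump.files:
                return dump.files[name]
        raise KeyError(name)
```

Only one pack is fully dumped per night, so the nightly cost stays bounded; the incremental dump fills the gap for files on the other packs.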

A stripped down version of ELECTRIC has been implemented to run in a CMS virtual machine. This accesses the ELECTRIC filestore in read only mode and a subset of ELECTRIC commands is available. Its main purpose is to allow users to copy ELECTRIC files directly into the CMS filestore, thus avoiding the necessity of going via an intermediate OS data-set. Other modifications to CMS include the improvement of the HELP facility, the provision of a special minidisk for general purpose user-written software and an abbreviated HELP system for XEDIT subcommands. The second edition of the Introduction to CMS at RAL was issued in February and a considerable effort was devoted to re-writing large sections of the VM Reference Manual which was ready for publication towards the end of the year.

The OS/MVT version of IBM's Storage and Information Retrieval System (STAIRS) has been replaced by a version which runs under CMS. In the past, STAIRS has been used exclusively by the HEP community with limited periods of access, but it is expected that STAIRS/CMS may have wider applications as it will be more readily available.

A new fully-supported version of the text processing system, SCRIPT, was obtained from the University of Waterloo. This has many new features including a macro system which simplifies the process of preparing papers, memos or reports. It also has an option to support files developed under the old SCRIPT.

The IBM FORTRAN Interactive Debug program was obtained in October, initially for a three month trial period. This allows interactive debugging of FORTRAN programs compiled with the FORTRAN G1 compiler under CMS.

Also on trial was Amdahl's version of the UNIX operating system known as Universal Time-sharing System (UTS). This runs in a virtual machine under VM on the IBM 3032 and has been made available on a limited basis. It has been found to consume a large proportion of the resources of the already hard-pressed IBM 3032 and to adversely affect the response of CMS users. Discussions with the software supplier are continuing to try to identify the problem.

Much work has been done on improving the analysis of VM's monitor records. A graphical presentation was developed to replace the rather cumbersome lineprinter plots. Weekly graphs are produced showing number of users against time of day and a selection of variables against number of users for a single day. Also, trend graphs show the daily averages plotted over a period of two months.
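The two kinds of summary described above, users against time of day and daily averages for trend graphs, reduce to grouping monitor samples and averaging them. A minimal sketch, assuming each monitor sample has already been decoded into a timestamp and a count of logged-on users (the real VM monitor record format is not shown here):

```python
from collections import defaultdict
from datetime import date, datetime
from statistics import mean


def users_by_hour(samples: list[tuple[datetime, int]]) -> dict[int, float]:
    """Mean number of logged-on users for each hour of the day."""
    by_hour = defaultdict(list)
    for stamp, users in samples:
        by_hour[stamp.hour].append(users)
    return {hour: mean(counts) for hour, counts in sorted(by_hour.items())}


def daily_averages(samples: list[tuple[datetime, int]]) -> dict[date, float]:
    """Mean number of users per calendar day: the points of a trend graph."""
    by_day = defaultdict(list)
    for stamp, users in samples:
        by_day[stamp.date()].append(users)
    return {day: mean(counts) for day, counts in sorted(by_day.items())}
```

A two-month trend graph would then plot `daily_averages` over roughly 60 consecutive days.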

| Board / user group | Jobs | % | CPU hours | % |
|---|---|---|---|---|
| Nuclear Physics Board | 305,416 | 41.6 | 7,373 | 67.7 |
| Science Board | 118,583 | 16.2 | 1,457 | 13.4 |
| Engineering Board | 33,773 | 4.6 | 864 | 7.9 |
| Astronomy, Space & Radio Board | 63,485 | 8.7 | 567 | 5.2 |
| SRC Central Office and Central Computer Operations | 128,881 | 17.6 | 249 | 2.3 |
| Other Research Councils | 53,790 | 7.3 | 190 | 1.7 |
| External | 29,653 | 4.0 | 197 | 1.8 |
| Totals | 733,581 | 100.0 | 10,897 | 100.0 |

Some time was spent evaluating a number of hardware monitors and a case has been prepared for obtaining one of these as a better tool for collecting performance measurements than the software monitors currently used.

As part of the plan for the proposed enhancement of the central computer facilities, an interactive benchmark was developed to be run under CMS. This benchmark consists of two scripts of commands, representing a novice and a more advanced user, and a virtual machine to simulate the CPU and I/O load of the front-end MVT system.

During the course of the year, allocation systems based on account units have been installed for both ELECTRIC and CMS. Suitable algorithms were defined for calculating the account units, based on CPU time used, connect time and I/O activity, and paying users are now being charged for this activity. This has meant that programs had to be produced to provide accounting information for ELECTRIC and CMS as well as MVT. Allocations of account units were also calculated and set up for all account holders. These are still being adjusted in the light of experience; for existing grant holders we have no guidelines on what allocations are required, but this situation will gradually change as new grant applications are submitted.
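The report names the inputs to the unit-calculation algorithms (CPU time, connect time and I/O activity) but not the coefficients, so the weights below are placeholders, and the optional charge factor is borrowed from the ELECTRIC and CMS descriptions earlier in this section. A sketch of a linear charging formula under those assumptions:

```python
from dataclasses import dataclass

# Placeholder weights: the report does not publish the real coefficients.
AU_PER_CPU_SECOND = 0.5
AU_PER_CONNECT_HOUR = 1.0
AU_PER_1000_IO = 0.25


@dataclass
class Usage:
    """Resources consumed by one session or job."""
    cpu_seconds: float
    connect_hours: float
    io_operations: int


def allocation_units(usage: Usage, charge_factor: float = 1.0) -> float:
    """Weighted sum of CPU, connect time and I/O, scaled by a charge factor."""
    raw = (usage.cpu_seconds * AU_PER_CPU_SECOND
           + usage.connect_hours * AU_PER_CONNECT_HOUR
           + usage.io_operations / 1000 * AU_PER_1000_IO)
    return raw * charge_factor
```

With these placeholder weights, 10 CPU seconds, 2 connect hours and 4,000 I/O operations come to 8 units, or 12 units at a charge factor of 1.5. A linear form keeps the charge easy for users to predict from their own usage.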

The locally written database, which holds information on users' allocations and usage of the MVT system, is becoming inadequate, since we now need to store similar information for ELECTRIC and CMS, and we are actively looking for a replacement. The background jobs of providing account information where required, making the facility available to new users, keeping the register of users up to date, allocating CPU time and providing regular reports for the Boards have continued throughout the year.

This year RAL has contributed even more than in previous years to national and international efforts towards agreement on a graphics standard. This culminated in an ISO (International Standards Organisation) graphics meeting organised at Abingdon, which produced a document that has now been adopted as an ISO Draft Proposal for computer graphics. It is intended that an implementation of this proposal (Graphics Kernel System) will be made available soon on most of the computer systems we support. RAL is also involved in SERC and BSI (British Standards) working groups that are trying to standardise a format for exchange of graphics information. A successful symposium on Computer Graphics was held at RAL immediately prior to the ISO graphics meeting. Five experts who were attending the meeting gave talks at the symposium and then participated in a panel discussion.

The GKS system on the Starlink VAX systems has been augmented by improved handlers for the ARGS raster display and the Versatec printer/plotter. Work on handlers for the other devices provided on the Starlink systems is in hand at RAL and other Starlink sites. The majority of the high level routines available on the IBM, GEC and PRIME systems have been converted so that they are now also available above GKS on the Starlink system; work continues to improve the functionality and reliability of these routines.

Version 2.6 of GINO has been installed on the GEC and PRIME systems without major incident. New handlers include a buffered handler to provide display file facilities on a raster scan display and one for a four-pen drum plotter. The SMOG, MUGWUMP and GINO packages have been released under VM/CMS on the IBM 3032; currently output to terminals and disk files is available to users, with output to the FR80 via VM spool in the final stages of testing. The SMOG system has been extended to allow control of an on-line terminal. Work is in hand to reduce the storage and computer time requirements of graphics programs running under VM/CMS.

The DRAFT system, which provides a method of preparing pictures and text, has been extended to support the Hershey characters, to output all standard flow chart symbols and to allow the user to select different line styles. In addition, a version in which all commands may be specified by interaction with a graphics menu has been developed and an on-line HELP facility added. A means of cataloguing fonts has been evolved; when this is complete, users of SMOG and GKS will have access to a large number of fonts as hardware characters on the FR80 and software characters on less versatile devices.

As part of the PERQ software project, work started in the autumn on installation of GKS on the DCS PDP 11/70 under UNIX and a device handler for the PERQ is being developed. The aim is to provide GKS under UNIX on the PERQ next year.

There is close involvement with the Eurographics Association; two papers were presented at EG'81 in Darmstadt and assistance is being given with the organisation of the EG'82 conference, exhibition and seminars in Manchester.

In January a reference set of 20 textual microfiche was made for the Cambridge Crystallographic Data Centre. They listed information on more than 30,000 structures in 7 different orders, and needed one hour of FR80 microfilm recorder plotting time.

In March, just under two hours of FR80 time were used to prepare the camera-ready copy for volume 12 of the series Molecular Structures and Dimensions, Organic and Organometallic Crystal Structures, Bibliography 1979-1980. It has 7 different indexes with different page layouts. The 480 pages contain over 5,800 references and cross-references to crystal structures published in the literature during the past year. Improvements to the program this year include the addition of an italic font used for journal names and an option to horizontally justify lines of text.

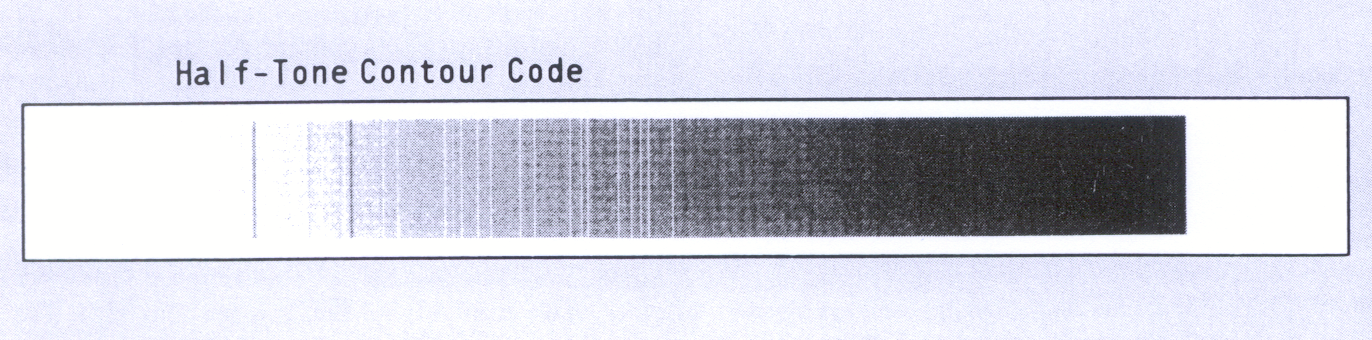

The success of last year's simulation of electron microscope images encouraged us to try further physically interesting simulations, and to attempt to mix text with grey scale images in one frame of FR80 film. Fig 5.3 shows a simulation of the powder diffraction line spectra of benzil superimposed on a continuous background.

A special type of computer terminal (a micropad) was purchased in order to study the human and computing problems associated with increasing amounts of data entry to computers. During the past year the micropad has been connected as an asynchronous terminal to the Interactive Computing Facility and software written to simplify its use with the package FAMULUS.

To use the micropad, a sheet of paper is placed on its pressure-sensitive surface and data printed on the paper with a pencil or ballpoint pen. A microcomputer, inside the pad, recognises the shapes of the letters (or numbers) while they are being written and transmits the character to the computer in the same way as characters are sent from the more usual keyboard. This facility might prove to be useful in providing assistance to people who normally maintain manual records.

In April a Database Section was formed at RAL by amalgamating the Grants and Awards Database Section and staff who provided support for administrative databases on site. The result is an integration of database expertise and activity covering SERC Libraries, RAL Administration, Computing Division Resources, Engineering and Building Works, ASR Board Activities and (with Central Office) Administration of Research Grants, Administration of Studentships and Management Accounting. A standard method of database project management has been evolved to ensure consistency across projects. A major problem is the lack of sufficient staff with database expertise to support all the applications requiring database facilities.

The work involves data analysis (both entity analysis and normalisation), procedural analysis, sizing, design, development (using structured and modular programming techniques) and implementation (usually from a machine-independent design base), followed by maintenance and further development. In these last two areas of activity, support is provided in the form of training and documentation.

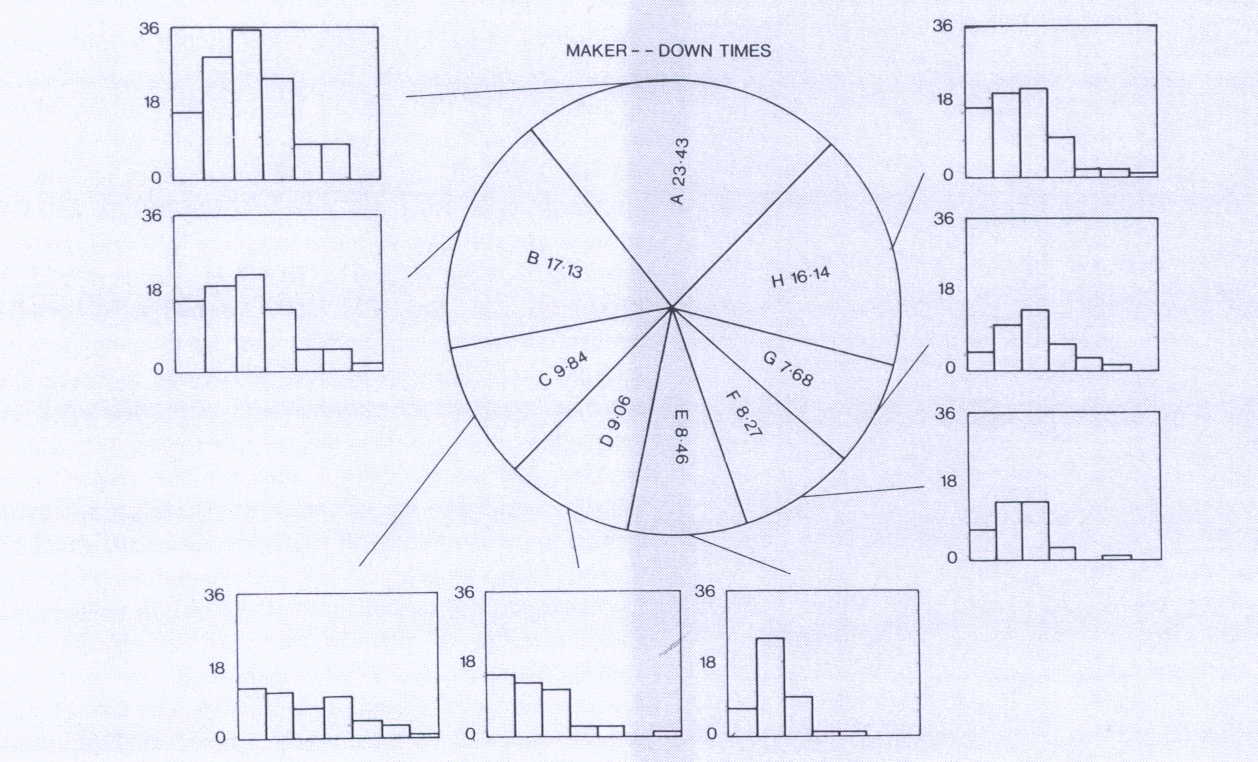

An example of one such database project is the storing of details of the computer terminals together with the history of their faults. Using the database, statistics can be extracted for regular reviews of the performance of both the terminals and the maintenance companies, which should lead to a better service for users. Fig 5.5 shows a typical plot of the faults which occurred during the first half of the year. A macro provides a selection of up to 9 different plots for a user-specified time interval. The plots can be displayed interactively on a graphics terminal or on a simple teletype, or sent in batch mode to the FR80 microfilm recorder.
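A fault-history query of the kind described can be sketched with an in-memory SQLite database from Python's standard library. The two-table schema, the column names and the statistics chosen (fault count and mean repair time per maintenance company over a user-specified interval) are assumptions for illustration; the report does not describe the actual database design.

```python
import sqlite3

SCHEMA = """
CREATE TABLE terminal (
    id TEXT PRIMARY KEY,
    model TEXT,
    maintainer TEXT          -- maintenance company responsible
);
CREATE TABLE fault (
    terminal_id TEXT REFERENCES terminal(id),
    reported TEXT,           -- ISO date, e.g. '1981-02-01'
    repaired TEXT
);
"""


def fault_summary(conn: sqlite3.Connection, start: str, end: str) -> dict:
    """Fault count and mean repair time in days per maintainer, start..end."""
    rows = conn.execute(
        """
        SELECT t.maintainer,
               COUNT(*),
               AVG(julianday(f.repaired) - julianday(f.reported))
        FROM fault AS f
        JOIN terminal AS t ON t.id = f.terminal_id
        WHERE f.reported BETWEEN ? AND ?
        GROUP BY t.maintainer
        ORDER BY t.maintainer
        """,
        (start, end),
    ).fetchall()
    return {maintainer: (count, mean_days) for maintainer, count, mean_days in rows}
```

Storing dates as ISO strings lets `BETWEEN` compare them correctly as text, while `julianday` converts them for the repair-time arithmetic.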

The main development in the network area has been the initiation of plans to expand the network by installing more Packet Switching Exchanges (PSEs). Initially a GEC 4065 was purchased by Daresbury Laboratory for use as a PSE; subsequently, similar machines have been purchased by RAL for installation at Edinburgh (at ERCC) and in London (at ULCC). In addition, NERC has purchased two GEC 4070 machines for use as PSEs in the NERC computing service and for connection to the SERC network.

At RAL a GEC 4065 has been purchased for use as a second service PSE in order to share the load imposed by the increasing number of network connections. The number of such connections has almost doubled during the year and has now reached about 60 at RAL. The majority of these are host connections and include 18 workstation connections (mostly GEC 2050s), 14 GEC 4000 series machines and 11 PRIME computers. Most of the GEC and PRIME machines are ICF MUMs. A VAX 11/780 for use by the High Energy Physics community at Oxford University has also been connected to the network using the software developed at RAL. More recently, the first of the Starlink VAX systems has been connected to the network, using X25 software recently released by DEC.

In July a GEC 4065 was installed at RAL as a gateway between British Telecom's Packet Switched Service (PSS) and the SERC network. In August PSS became available; since then the PSS gateway has been in service operation, handling about 250 successful calls per week. The programme for altering SERC X25 to be compatible with PSS X25 has reached the stage where several types of host may now be connected to either the SERC network or PSS. This is important because it enables the software and hardware developed by manufacturers for connection to PSS to be used to connect machines to the SERC network. It also allows a more flexible approach to be taken in planning future network provision.

A major achievement during the year has been the attachment of the PRIME computers. This makes use of one of the prerequisites of the PSS X25 compatibility programme, the ability of the network to accept HDLC protocol. The PRIME computers now submit jobs directly to the IBM MVT batch system by file transfer. PSS compatible X25 software recently released by DEC for the VAX VMS system has been installed in the Starlink VAX at RAL and a successful connection made to the network. This is the first step towards the provision of a fully supported range of network software for this type of computer.
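Accepting HDLC means, among other things, checking each frame's 16-bit frame check sequence (FCS). The sketch below computes the standard HDLC FCS (the CRC-CCITT polynomial x^16 + x^12 + x^5 + 1 in bit-reflected form, initial value 0xFFFF, result complemented), which X.25 LAPB also uses; it is background on the protocol, not RAL's network software. The FCS covers the address, control and information fields, excluding the flags and any inserted zero bits.

```python
def hdlc_fcs(data: bytes) -> int:
    """16-bit HDLC frame check sequence (the CRC-16/X-25 parameter set)."""
    crc = 0xFFFF                             # initial register value
    for byte in data:
        crc ^= byte
        for _ in range(8):                   # reflected: least significant bit first
            if crc & 1:
                crc = (crc >> 1) ^ 0x8408    # 0x8408 is 0x1021 bit-reversed
            else:
                crc >>= 1
    return crc ^ 0xFFFF                      # one's complement of the remainder


def append_fcs(body: bytes) -> bytes:
    """Append the FCS, low-order octet first, as it is sent on the line."""
    fcs = hdlc_fcs(body)
    return body + bytes([fcs & 0xFF, fcs >> 8])


def fcs_ok(frame: bytes) -> bool:
    """Check a received frame (fields plus trailing FCS, flags removed)."""
    if len(frame) < 4:                       # address, control and two FCS octets
        return False
    return append_fcs(frame[:-2]) == frame
```

For the conventional test string `b"123456789"` this parameter set yields 0x906E.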

The advent of the PSS PAD (Packet Assembler/Disassembler) service, which offers users a terminal dial-in service to any host on the network, has stimulated the introduction of the terminal protocol X29 (often referred to as XXX or triple X because of its companion protocols X3 and X28). All the systems on the network are now able to accept calls using this protocol; the PRIME and GEC systems are also able to make outgoing X29 calls. Development is in progress on the IBM VM system to add this capability. On the IBM 3032, development of X25 connection software for VM has been completed and installed. A preliminary version of software to enable CMS users to initiate file transfers has been developed and installed.

The Laboratory has continued its participation in the definition of interim UK network protocol standards, through its membership of the working groups of the Data Communication Protocols Unit of the DoI. In February the revised version of the UK Network Independent File Transfer Protocol (NIFTP) was published. During the year the definition of the UK Network Independent Job Transfer and Manipulation Protocol was completed, and the protocol was published in September.

The ICF multiuser minicomputer (MUM) programme was completed during the year. All the PRIME machines are now connected to the SERC network so that all resource management control can be handled centrally.

The Council's policy of gradually reducing the level of recurrent support at the standard MUM sites has commenced with the expectation of only covering maintenance and communication costs during year 6 of the support agreements. Support of the enhanced machines (ie the DEC PDP 11/45s, the DEC VAX 11/780s and the INTERDATA 8/32) is being reduced to reflect more accurately the level of SERC grant supported activity. Development work at the Manchester Graphics Unit will continue to be supported by a rolling three year grant which recognises the very special nature of the work being undertaken. Considerable efforts have been made during the year to renew all the support agreements on time.

The user community continues to expand, with over 1,800 users currently being supported. Initial development work is being undertaken to provide a more flexible project management resource control facility. This should give project leaders greater control of their allocated resources without the unnecessary involvement of resource management staff.

Terminal allocation now exceeds 550 and with the loan pool financial resources being limited, future expansion is being funded via grant applications.

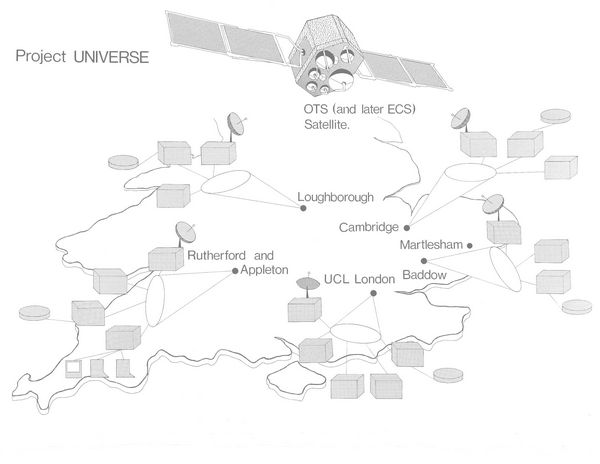

For a number of years the Laboratory has been carrying out development work on new technologies for high-speed computer networking, that is, networking with megabit per second data transmission rates rather than the current kilobit rates. Two projects involved have been the STELLA project, which uses the OTS satellite to give high-speed data transmission between the Laboratory and CERN and other European laboratories, and a small project to build an experimental Ethernet to give high-speed local communication. To build on this work the Laboratory joined with Cambridge University, Loughborough University and University College London in late 1980 to put a proposal to SERC for the UNIVERSE project, an experiment in high-speed computer networking. UNIVERSE stands for Universities Expanded Ring and Satellite Experiment. The proposed experiment was to investigate the use of a concatenation of Cambridge Rings and a small dish satellite network to provide a wide-area computer network. The general configuration envisaged was the use of interlinked rings within a site for local distribution, with the satellite network joining the sites together. Subsequently the project was expanded to include seven sites: the four SERC sites together with GEC/Marconi Research Centre at Great Baddow, British Telecom research at Martlesham and Logica offices in London. The project was approved as a national effort in Information Technology in April, with funding shared between the DoI, SERC, British Telecom, GEC and Logica.

Because of the limited life of the OTS satellite, the project is working to very tight time-scales. It is planned to bring the network into operation during the first half of 1982. RAL and Martlesham will use existing earth stations, and four new Marconi earth stations are planned for delivery to the other sites during February and March 1982. The Logica site is not to have a separate earth station but is to be connected to the UCL site by a high-speed ground link which has already been installed by British Telecom. The collaboration has agreed an initial design for the protocols essential to the operation of the network. Work is in hand on the construction of the computer bridges which will use these protocols to join rings to rings and rings to the satellite network.

Four working parties have been set up within the collaboration to produce the initial programme of experiments to be carried out on the network. These are: