The experimental facilities available to users of the Rutherford Laboratory for scientific studies are capable of generating very considerable quantities of data. To allow the analysis of such data, some of the most powerful computing facilities in Great Britain are installed at the Laboratory. These are used not only for the analysis of the very large volumes of data, which are collected in Particle Physics experiments and aboard space satellites, but also in computations employing complex mathematical models developed for the theoretical study of physical and chemical phenomena.

The use of the computing facilities is implicit in much of the work reported in other sections. The work reported in the following sub-sections, however, has taken place mainly in the two Divisions of the Laboratory responsible for developing the computing facilities. During the year the work and structure of the two Divisions were rationalised. Broadly, the Computing and Automation Division assumed responsibility for running the existing IBM 360/195 and ICL 1906A computers and the FR80 microfilm recorder; for providing computing services based on this equipment to a widely distributed community of users; and for developing the systems and applications software and the telecommunications facilities needed to sustain a high level of service. The Atlas Computing Division, again broadly, was assigned responsibility for the detailed implementation of the outline proposals for the SRC's Interactive Computing Facility and for continuing applications programming in specialised fields such as Quantum Chemistry, Crystallography, and Databases.

On 1st April 1976 the Atlas Computing Division's responsibility for activities on the ICL 1906A and its share of the IBM 360/195 was transferred to the Computing and Automation Division, which then became responsible for all computing activity on both machines, with the associated network of workstations, and for the FR80 recorder service.

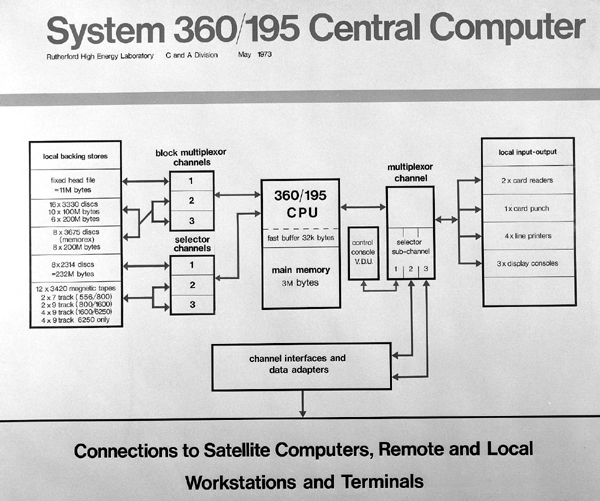

Under a long term Science Research Council plan for computing facilities a second IBM 360/195 central processor, with 1 Mbyte core and channels, was ordered in June. It was decided to house the major computers in the Atlas building, which was promptly modified so that installation of the new machine began on schedule in November. Core memory and peripheral devices will be transferred from the first 360/195 (195/1) to the new processor, and the central computing service will be based on 195/2. A major factor in the schedule has been to reduce to an absolute minimum the time with no service available. After the service has been re-established, 195/1 will be moved to the Atlas building and a coupled two-processor system will be created. Software development has naturally been concentrated this year on preparations for the new system.

Funding arrangements have also changed under the Council plan. The 195/1 was funded by the Nuclear Physics Board and the ICL 1906A and FR80 by the Science Board; but in October responsibility for funding and overall planning was transferred to the new Facilities Committee for Computing. Some of the operations statistics which follow were prepared on a different basis for the final quarter of the year because of this change, but the effects are small in most instances.

The central computer (195/1) operated throughout the year under saturation conditions and achieved record figures. Nearly 8300 hours of good machine time were available to users, providing 6108 hours of accountable job processing time after deducting overheads. Turnround and time allocations, decided by committees of users, were effectively controlled by the COPPER priority system. Peripheral equipment was augmented by eight 200 Mbyte Memorex type 3675 disc drives and another block multiplexor, and all remaining 9-track tape decks were upgraded for 6250 bpi recording.

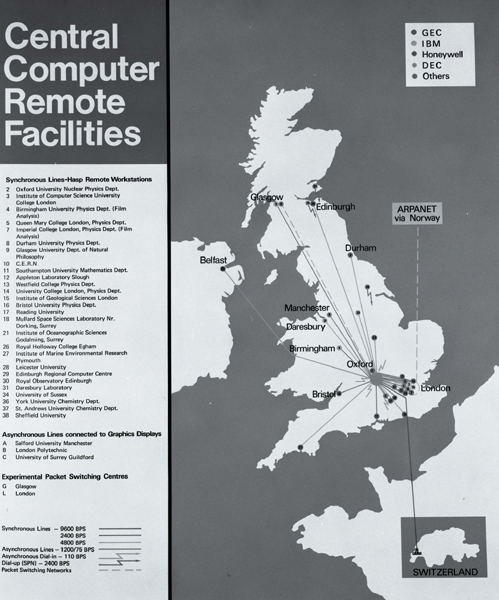

The established star network of over 30 remote workstations changed slightly during the year. Connections were made for several more workstations by private or public Post Office lines, and re-arrangements enabled some stations to access either the 360/195 or 1906A. As the Atlas-Daresbury-Rutherford private network came into use further workstations were able to access Laboratory computers, although of course jobs can only be run by authorised users.

Except during the disruption of the last quarter caused by building work, over 1000 hours of CPU time per quarter were available to users of the ICL 1906A, with an average of 76 hours/week job processing time after overheads. The machine is not under intense pressure and normally operates on a five-day week, but seven-day cover was provided for the UK5 space satellite project. Over 240,000 jobs were processed during the year.

A GEC 4080 computer was bought in 1974, initially to liberate the large section of core in the central computer required by certain interactive graphics programs. Software has been developed which already allows the computer to be accessed by up to six users simultaneously from terminals attached to 360/195 workstations. During the year this subsidiary computer complex became an Operations Group responsibility. An attached interactive system (ASPECT, which is still being developed) allows rotation of a displayed picture in three dimensions and zooming, etc. by hardware.

Although average machine availability (1 - down time/scheduled time) was slightly above last year's, at a record 98.1%, the IBM 360/195 was invariably saturated, with a backlog of 20 to 60 hours of low-priority work on Monday mornings. CPU utilisation, CPU time/(scheduled time - down time), was also a record at 89.7%, with CPU time at 7431 hours for the year (7310 in 1975). These excellent figures were achieved with over 30 remote workstations attached, from which jobs taking 3786 hours of CPU time were submitted. Machine statistics appear in Tables 5.1 and 5.2.
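The two ratios defined above can be checked against the 1976 totals in Table 5.1. The sketch below assumes that down time is the sum of the lost-time rows (hardware plus software) and takes the CPU time of 7431 hours from Table 5.2; the function names are illustrative only. Note that scheduled time minus down time is simply the "Total Available" row (8288 hours).

```python
def availability(scheduled: float, down: float) -> float:
    """Machine availability = 1 - down time / scheduled time."""
    return 1.0 - down / scheduled


def cpu_utilisation(cpu: float, scheduled: float, down: float) -> float:
    """CPU utilisation = CPU time / (scheduled time - down time)."""
    return cpu / (scheduled - down)


# 1976 IBM 360/195 totals, in hours
scheduled = 8449          # "Total Scheduled"
down = 149 + 12           # lost time attributed to hardware + software
cpu = 7431                # total CPU time for the year

print(f"availability    {availability(scheduled, down):.1%}")            # 98.1%
print(f"CPU utilisation {cpu_utilisation(cpu, scheduled, down):.1%}")    # 89.7%
```

With the Table 5.3 values for the ICL 1906A (scheduled 5576 hours, down time 140 + 41 + 63 = 244 hours, CPU time 3718 hours) the same functions give the 95.6% and 69.7% quoted for that machine.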

| (hours) | First Quarter | Second Quarter | Third Quarter | Fourth Quarter | Total for Year | Weekly Average 1976 | Weekly Average 1975 |
|---|---|---|---|---|---|---|---|
| Job Processing | 2010 | 2113 | 2010 | 2044 | 8177 | 157.3 | 155.6 |
| Software Development | 27 | 26 | 33 | 25 | 111 | 2.1 | 2.2 |
| Total Available | 2037 | 2139 | 2043 | 2069 | 8288 | 159.4 | 157.8 |
| Lost time attributed to hardware | 34 | 16 | 61 | 38 | 149 | 2.9 | 3.1 |
| Lost time attributed to software | 4 | 3 | 2 | 3 | 12 | 0.2 | 0.4 |
| Total Scheduled | 2075 | 2158 | 2106 | 2110 | 8449 | 162.5 | 161.3 |
| Hardware Maintenance | 13 | 20 | 14 | 13 | 60 | 1.1 | 1.1 |
| Hardware Development | 12 | 5 | 0 | 4 | 21 | 0.4 | 1.3 |
| Total Machine Time | 2100 | 2183 | 2120 | 2127 | 8530 | 164.0 | 163.7 |
| Switched Off | 83 | 1 | 64 | 58 | 206 | 4.0 | 4.3 |
| Total | 2183 | 2184 | 2184 | 2185 | 8736 | 168.0 | 168.0 |

| | First Quarter: CPU hours | First Quarter: No. of Jobs | Second Quarter: CPU hours | Second Quarter: No. of Jobs | Third Quarter: CPU hours | Third Quarter: No. of Jobs | Fourth Quarter: CPU hours | Fourth Quarter: No. of Jobs | Total for Year: CPU hours | Total for Year: No. of Jobs | Weekly Average: CPU hours | Weekly Average: No. of Jobs |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Astronomy, Space and Radio Board | 59 | 12478 | 72 | 11845 | 71 | 13136 | 97 | 21533 | 299 | 58992 | 5.8 | 1135 |
| Engineering Board | 65 | 3891 | 59 | 5365 | 57 | 4558 | 59 | 5109 | 240 | 18923 | 4.6 | 364 |
| Nuclear Physics Board | 1118 | 88111 | 1210 | 102774 | 1009 | 88874 | 990 | 92926 | 4327 | 372685 | 83.2 | 7167 |
| Science Board | 220 | 17058 | 209 | 17750 | 259 | 16181 | 169 | 16454 | 857 | 67443 | 16.5 | 1297 |
| Other Research Councils | 19 | 7882 | 15 | 6646 | 19 | 8323 | 31 | 10150 | 84 | 33001 | 1.6 | 635 |
| Miscellaneous | 70 | 30660 | 73 | 27628 | 74 | 29028 | 84 | 36573 | 301 | 123889 | 5.8 | 2382 |
| User Totals | 1551 | 160080 | 1638 | 172008 | 1489 | 160100 | 1430 | 182745 | 6108 | 674933 | 117.5 | 12980 |
| System Control and General Overheads | 276 | 709 | 328 | 666 | 338 | 729 | 381 | 675 | 1323 | 2779 | 25.4 | 53 |
| Totals for 1976 | 1827 | 160789 | 1966 | 172674 | 1827 | 160829 | 1811 | 183420 | 7431 | 677712 | 142.9 | 13033 |
| Totals for 1975 | 1685 | 145562 | 1872 | 153482 | 1883 | 149660 | 1870 | 159626 | 7310 | 608330 | 140.6 | 11697 |
| (a) Nuclear Physics Board | | | | | | | | | | | | |
| HEP Counters | 599 | 38962 | 480 | 40965 | 365 | 32915 | 405 | 34899 | 1849 | 147741 | 35.6 | 2841 |
| RL - Film Analysis | 54 | 6154 | 120 | 11124 | 77 | 7507 | 90 | 9696 | 341 | 34481 | 6.6 | 663 |
| RL - Others | 25 | 8867 | 28 | 8911 | 24 | 8308 | 80 | 8896 | 157 | 34982 | 3.0 | 673 |
| Theory | 64 | 4452 | 74 | 6237 | 59 | 7294 | 39 | 6756 | 236 | 24739 | 4.5 | 476 |
| Universities Nuclear Structure | 55 | 6736 | 64 | 7967 | 103 | 7194 | 103 | 7176 | 325 | 29073 | 6.2 | 559 |
| Universities Film Analysis | 321 | 22940 | 444 | 27570 | 381 | 25656 | 273 | 25503 | 1419 | 101669 | 27.3 | 1955 |
| Totals Nuclear Physics | 1118 | 88111 | 1210 | 102774 | 1009 | 88874 | 990 | 92926 | 4327 | 372685 | 83.2 | 7167 |
| (b) Science Board | | | | | | | | | | | | |
| Chemistry | 112 | 6026 | 96 | 6792 | 94 | 4844 | 86 | 5651 | 388 | 23313 | 7.5 | 448 |
| Physics | 68 | 7985 | 92 | 8115 | 147 | 8574 | 42 | 4709 | 349 | 29383 | 6.7 | 565 |
| Miscellaneous | 40 | 3047 | 21 | 2843 | 18 | 2763 | 41 | 6094 | 120 | 14747 | 2.3 | 284 |
| Science Board Totals | 220 | 17058 | 209 | 17750 | 259 | 16181 | 169 | 16454 | 857 | 67443 | 16.5 | 1297 |

| (hours) | First Quarter | Second Quarter | Third Quarter | Fourth Quarter | Total for Year | Weekly Average |
|---|---|---|---|---|---|---|
| Job Processing | 1429 | 1438 | 1424 | 919 | 5210 | 100.2 |
| Software Development | 24 | 44 | 54 | 0 | 122 | 2.3 |
| Total Available | 1453 | 1482 | 1478 | 919 | 5332 | 102.5 |
| Lost time attributed to hardware | 45 | 43 | 35 | 17 | 140 | 2.7 |
| Lost time attributed to software | 26 | 9 | 5 | 1 | 41 | 0.8 |
| Lost time attributed to miscellaneous causes | 27 | 7 | 12 | 17 | 63 | 1.2 |
| Total Scheduled | 1551 | 1541 | 1530 | 954 | 5576 | 107.2 |
| Hardware Maintenance | 104 | 104 | 104 | 64 | 376 | 7.2 |
| Total Machine Time | 1655 | 1645 | 1634 | 1018 | 5952 | 114.4 |
| Switched Off | 529 | 539 | 550 | 1166 | 2784 | 53.6 |
| Total | 2184 | 2184 | 2184 | 2184 | 8736 | 168.0 |

Use of the ELECTRIC remote job entry and file handling system continued to grow: over the year the weekly average rose from 350 to 450 users and from 1750 to 2350 hours logged in. By the end of the year jobs loaded via ELECTRIC took some 75% of the CPU time used.

The average availability of the ICL 1906A advanced to 95.6% and the CPU utilisation, after a relatively slack final quarter, averaged 69.7%. The total CPU time used was depressed by building alterations in the autumn to 3718 hours (3851 hours in 1975), of which over 30% was taken by jobs loaded from remote workstations. Statistics are given in Tables 5.3 and 5.4 and the ICL 1906A configuration is shown in Fig 5.2.

The main developments for the existing central computer lay in providing increased disc and tape storage capacity, extending the SETUP subsystem, and making detailed changes to improve efficiency and limit system overheads. Software for the dual IBM 360/195 array took high priority after the new machine was ordered.

Early in 1976 eight 200 Mbyte Memorex 3675 disc drives were installed, and the remaining 1600 bpi tape decks were upgraded to 6250 bpi. Congestion of disc traffic was significantly reduced by installation of a third block multiplexor channel and matching software.

SETUP was introduced in 1972 to give advance information on data set requirements and to allocate volumes to drives automatically, by scanning the job queue. It has served well, but disc packs and tapes are now handled on such a scale in the computer room that further automatic assistance is needed, particularly to take advantage of the dual machines. The new version will identify volumes that are currently rarely called for, so that they can be relegated to a more remote store-room, and will also help the librarian with the system formalities for introducing new tapes.
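The kind of queue scanning SETUP performs can be illustrated with a small sketch. It assumes a hypothetical job queue in which each job lists the volume serial numbers it needs: the scan counts how many jobs request each volume, assigns the most-requested volumes to the free drives, and flags volumes requested fewer than a chosen threshold number of times as candidates for the remote store-room. The data layout, the threshold and the "most requested first" policy are assumptions for illustration, not a description of the Laboratory's program.

```python
from collections import Counter


def scan_queue(job_queue: list[list[str]]) -> Counter:
    """Count how many queued jobs request each volume."""
    demand: Counter = Counter()
    for volumes in job_queue:
        demand.update(set(volumes))      # count a volume once per job
    return demand


def allocate(demand: Counter, drives: list[str]) -> dict[str, str]:
    """Assign the most-requested volumes to the available drives."""
    wanted = [vol for vol, _ in demand.most_common(len(drives))]
    return dict(zip(drives, wanted))


def rarely_called(history: Counter, library: list[str], threshold: int) -> list[str]:
    """Volumes requested fewer than `threshold` times: store-room candidates."""
    return sorted(v for v in library if history[v] < threshold)


# Hypothetical queue: each job lists the volume serials it needs
queue = [["T001", "D100"], ["D100"], ["T002", "D100"], ["T001"]]
demand = scan_queue(queue)
print(allocate(demand, ["DRIVE0", "DRIVE1"]))   # {'DRIVE0': 'D100', 'DRIVE1': 'T001'}
print(rarely_called(demand, ["T001", "T002", "T003", "D100"], threshold=2))   # ['T002', 'T003']
```

In practice the "history" would cover a much longer period than one queue scan; a single scan is used here only to keep the example short.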

Various modifications were made to HASP to improve servicing of the numerous RJE (Remote Job Entry) workstations, and MAST was streamlined, with much of its data-handling time now properly accounted to users (instead of appearing as an overhead).

Fig 5.3 shows in outline the planned dual machine layout. The machines will be run asymmetrically, with a front-end machine (FEM) handling telecommunications, line printers, card readers and the card punch via HASP, MAST and ELECTRIC. Most batch jobs will be fed from the single job queue to the back-end machine (BEM), but residual resources of the FEM will also be used for such jobs. Both machines will access all external storage devices, but a user may, if he wishes, direct that a job be run on a particular machine. If the FEM fails, the BEM will be able to take over its role, continuing the ELECTRIC service in combination with reduced batch processing. Since the new machine brings a large increase in processing power without any extra disc or tape storage, the design accepts a modest rise in system overheads at the FEM in return for a larger decrease at the BEM, freeing that machine for high-speed batch job processing.
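The asymmetric policy described above can be sketched as a dispatcher over the single job queue. This illustration assumes a simple "free slots" model of each machine's batch capacity, and the class and field names are hypothetical: a job that names a machine goes to that machine; other jobs go to the BEM by default and overflow to the FEM only when the FEM has residual capacity; and if the FEM is marked as failed, the BEM takes everything.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Job:
    name: str
    machine: Optional[str] = None    # the user may direct a job to "FEM" or "BEM"


@dataclass
class DualSystem:
    bem_free: int                    # free batch slots on the back-end machine
    fem_spare: int                   # residual front-end capacity for batch jobs
    fem_up: bool = True

    def dispatch(self, job: Job) -> Optional[str]:
        """Return the machine chosen for `job`, or None if it must stay queued."""
        target = job.machine if self.fem_up else "BEM"   # BEM covers a failed FEM
        if target == "FEM":
            return self._take_fem()
        if target == "BEM":
            return self._take_bem()
        # Undirected job: prefer the BEM, then fall back on residual FEM capacity
        return self._take_bem() or self._take_fem()

    def _take_bem(self) -> Optional[str]:
        if self.bem_free > 0:
            self.bem_free -= 1
            return "BEM"
        return None

    def _take_fem(self) -> Optional[str]:
        if self.fem_spare > 0:
            self.fem_spare -= 1
            return "FEM"
        return None


system = DualSystem(bem_free=2, fem_spare=1)
jobs = [Job("A"), Job("B"), Job("C"), Job("D")]
print([system.dispatch(j) for j in jobs])    # ['BEM', 'BEM', 'FEM', None]
```

Keeping the FEM as the fallback rather than the first choice mirrors the stated aim of reserving the BEM for batch work while keeping front-end overheads modest.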

| (CPU hours) | First Quarter | Second Quarter | Third Quarter | Fourth Quarter | Total for Year | Weekly Average |
|---|---|---|---|---|---|---|
| ASR Board | 100 | 101 | 60 | 41 | 302 | 5.8 |
| Engineering Board | 132 | 114 | 91 | 50 | 387 | 7.4 |
| Science Board | 472 | 476 | 449 | 162 | 1559 | 30.0 |
| Other Research Councils | 34 | 45 | 72 | 39 | 190 | 3.7 |
| Miscellaneous | 257 | 270 | 301 | 185 | 1013 | 19.5 |
| User Totals | 995 | 1006 | 973 | 477 | 3451 | 66.4 |
| System Control and General Overheads | 69 | 63 | 79 | 56 | 267 | 5.1 |
| Totals for 1976 | 1064 | 1069 | 1052 | 533 | 3718 | 71.5 |
| Totals for 1975 | 867 | 1007 | 992 | 985 | 3851 | 74.1 |

Development of the new software benefited considerably from studying the coupled IBM 360/65 and 370/145 system at the University of Gothenburg, although the machines are different and the starting point there was the MFT (not MVT) version of OS. It was also valuable to have access to a 370/158 machine at IBM Birmingham, operating under the VM (Virtual Machine) version of OS, which proved simple to use. This system allows several programming teams to act simultaneously as sole users, each with a dedicated computer and peripheral devices, and can even simulate two coupled 360/195 machines with different implementations of MVT and HASP.

A sample test batch of programs had been built up over the previous six years, but it was no longer representative of the current workload and did not adequately test some features of the 360/195 CPU. It was therefore thoroughly revised and run extensively on the existing machine before tests with the coupled machine configuration.

A paging version of the ELECTRIC program was introduced. When a user becomes active, a work area (page) is transferred from a disc data set to a core buffer for him, and returned to disc when another user becomes active in ELECTRIC. The initial transfer is overlapped with an overlay transfer and the return is made asynchronously, during processing of the next user command. System overheads are thus kept down, and the new version allows an increase in the maximum number of users without significant increase of program region size. In the second quarter, this maximum was raised from 40 to 50.
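The paging scheme can be sketched as a single core buffer through which each user's work area is swapped. In this simplified model a dictionary stands in for the disc data set, and the overlapped and asynchronous transfers described above are performed synchronously and merely counted; the class name, the page contents and the user names are hypothetical.

```python
from typing import Optional


class PagedWorkAreas:
    """Sketch: one core buffer shared by all users; idle work areas live on 'disc'."""

    def __init__(self, max_users: int):
        self.max_users = max_users
        self.disc: dict[str, bytes] = {}        # stands in for the disc data set
        self.active: Optional[str] = None       # user whose page is in core
        self.buffer = bytearray()               # the single core buffer
        self.transfers = 0                      # page reads plus page writes

    def activate(self, user: str) -> bytearray:
        """Make `user` active, swapping work areas through the core buffer."""
        if user == self.active:
            return self.buffer                  # page already in core: no transfer
        if user not in self.disc and len(self.disc) >= self.max_users:
            raise RuntimeError("maximum number of ELECTRIC users reached")
        if self.active is not None:
            self.disc[self.active] = bytes(self.buffer)   # return old page to disc
            self.transfers += 1
        self.buffer = bytearray(self.disc.setdefault(user, b""))  # read page into core
        self.transfers += 1
        self.active = user
        return self.buffer


areas = PagedWorkAreas(max_users=50)
areas.activate("USER1")[:] = b"EDIT PROG1"   # USER1 works in the buffer
areas.activate("USER2")                      # USER1's page goes back to disc
print(bytes(areas.activate("USER1")), areas.transfers)   # b'EDIT PROG1' 5
```

The point of the design is visible in the model: the core region holds one work area regardless of how many users are registered, so raising `max_users` (from 40 to 50 in the real system) costs disc space rather than program region size.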

The PRINT command was modified to allow output of two or more columns of text on a line-printer page, and has been used in this way to produce several Laboratory Reports. Another PRINT modification, together with changes to the off-line ELMUC program, allows ELECTRIC and graphics files to be output to the FR80 plotter, for reproduction there as hardcopy, microfiche or film of standard types.

Demands for more filing space were met by increasing ELECTRIC storage from 108000 to 137700 blocks (an entire 3330/11 disc), and by doubling the data sets for archived ELECTRIC files and for graphics.

Work has begun to adapt the IBM STAIRS program for the local MAST message handling system, so that it can be accessed from any 360/195 terminal. STAIRS is an information retrieval program which can pick out required words or phrases in a database. A service was made publicly available in June 1976 from two databases, the first compiled from the SLAC weekly publication Preprints in Particles and Fields and the second comprising titles in the Rutherford Library. So far some 40 people have made use of the service, together taking on average 25% of the 1.5 hours/day scheduled.
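The internals of STAIRS are not described here, but the kind of retrieval it offers, picking out words or phrases in a database, is commonly built on an inverted index: each word maps to the records, and positions within them, where it occurs, and a phrase matches where its words occur at consecutive positions. The sketch below is a generic illustration of that technique with made-up titles, not the STAIRS program.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(records: list[str]) -> dict:
    """word -> {record number -> positions of the word in that record}"""
    index = defaultdict(lambda: defaultdict(list))
    for rec_no, text in enumerate(records):
        for pos, word in enumerate(tokenize(text)):
            index[word][rec_no].append(pos)
    return index


def search_phrase(index: dict, phrase: str) -> list[int]:
    """Record numbers containing the words of `phrase` at consecutive positions."""
    words = tokenize(phrase)
    if not words:
        return []
    hits = []
    for rec_no, starts in index.get(words[0], {}).items():
        for start in starts:
            if all(start + k in index.get(w, {}).get(rec_no, [])
                   for k, w in enumerate(words)):
                hits.append(rec_no)
                break
    return sorted(hits)


# Hypothetical titles standing in for a preprint or library database
titles = [
    "Measurement of the pion form factor",
    "Quark model predictions for pion scattering",
    "Form factor of the proton",
]
idx = build_index(titles)
print(search_phrase(idx, "form factor"))   # [0, 2]
print(search_phrase(idx, "pion"))          # [0, 1]
```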

The Laboratory has continued its active involvement in developing computer networks. The three main areas of interest remain the operational use of the ARPA network, the development of a private network linking the Laboratory's IBM 360/195 and ICL 1906A computers with the IBM 370/165 at Daresbury, and the implementation of connections to the Post Office's Experimental Packet-Switched Service (EPSS). The Laboratory is also pursuing its interest in the collaboration with CERN, DESY and ESA (European Space Agency) on high-speed data transmission experiments via the ESA Orbital Test Satellite, and arranged an international meeting in July. The proposed network is outlined in Fig 5.4, which does not show temporary test and development connections.

The U.S. Advanced Research Projects Agency (ARPA) has established a computer network linking over 60 sites in the USA, mostly university and Government research laboratories. The network is linked to the Department of Statistics and Computer Science at University College, London, and thence to the Laboratory's 360/195. Some 1000 jobs were submitted to the Laboratory via this network during the year, using about 3 hours CPU time, and there was also some traffic in the reverse direction.

Progress has continued on a private network linking the 360/195 at Rutherford with the 370/165 at Daresbury and the ICL 1906A on the Atlas site. This network was designed to use EPSS-compatible protocols, so that it can use EPSS itself (or its successor) at some later date, if this becomes desirable. Meanwhile it permits the Laboratory to offer network-type facilities to users in advance of the full EPSS service.

The network is now operational via Daresbury to the 360/195 and offers an RJE service for a large part of each day to workstations at Liverpool, Manchester, Sheffield, Lancaster and Daresbury. These are all connected to a PDP-11 computer at Daresbury which functions as a network node, capable of switching each workstation to either the 360/195 or the Daresbury 370/165. Similar facilities for workstations connected to the GEC 4080 node on the Atlas site, with switching between the 360/195 and 1906A, should soon be available.
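The switching role of the Daresbury node can be pictured as a table mapping each attached workstation to its currently selected host. The toy model below is only that: the class, the method names and the host labels are hypothetical, and it ignores the EPSS-compatible protocols and the PDP-11 software entirely.

```python
class NetworkNode:
    """Toy model: switch each attached workstation to one reachable host."""

    def __init__(self, hosts: set[str]):
        self.hosts = hosts
        self.routes: dict[str, str] = {}      # workstation -> selected host

    def connect(self, workstation: str, host: str) -> None:
        """Switch `workstation` to `host`, replacing any earlier selection."""
        if host not in self.hosts:
            raise ValueError(f"{host} is not reachable from this node")
        self.routes[workstation] = host

    def forward(self, workstation: str, record: str) -> tuple[str, str]:
        """Deliver one record from a workstation to its selected host."""
        host = self.routes.get(workstation)
        if host is None:
            raise LookupError(f"{workstation} is not switched to any host")
        return host, record


node = NetworkNode({"RL 360/195", "DL 370/165"})
node.connect("Liverpool", "RL 360/195")
node.connect("Manchester", "DL 370/165")
print(node.forward("Liverpool", "JOB CARD"))   # ('RL 360/195', 'JOB CARD')
```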

Work is in progress on further developments including a File Transfer Protocol permitting users' files to be moved from one computer to another, and an Interactive Terminal Protocol allowing users anywhere on the network to use the various interactive facilities available at the different sites. Both will be compatible with the protocols defined for EPSS.

This experimental Post Office network, which is scheduled to run for two years in the first instance, has now become available for a few hours each day. It will not be officially opened as a service until it can operate reliably for at least eight hours daily.

The network comprises three packet-switching exchanges (at London, Manchester and Glasgow) linked by wide-band 48 kbaud lines. The Laboratory has a 48 kbaud line to the London Exchange, a 2.4 kbaud line to Manchester and a 4.8 kbaud line to Glasgow. A GEC 2050 minicomputer serves as a front-end to the 360/195 on the line to London; its function is to resolve the major differences between the EPSS network protocol and the protocol used by IBM.

The connection to the London Exchange was first made in June, and has since been successfully used in a variety of test situations, including the driving of a HASP workstation at University College, London. The Manchester connection was also established and used for testing interactive access to the Laboratory's 1906A via the network.

Work is at an advanced stage to enable GEC 2050 computers connected directly to an EPSS Exchange to function as HASP workstations. This involves networking software in the 2050 itself, thus avoiding the need for front-end machines. When this software is ready, it can be tested very thoroughly by driving a workstation at the Laboratory via the line to the Glasgow Exchange and thence back to the 360/195 via London.

The experience gained from this work should help the Laboratory to provide for its future data transmission needs.

The GEC 4080 is a medium-sized computer with a processing power of about one Atlas Unit for FORTRAN programs. The machine installed at the Laboratory in 1974 now provides workstation and interactive computing facilities (particularly interactive graphics) for some local and remote users. The central Operations Group became responsible for normal running of the 4080 system during the year, including clean-up of disc space, taking back-ups, etc. The present configuration is shown in Fig 5.5, and there are now over 20 registered users from 360/195 terminals, including a few outside the Laboratory.

The production system is DOS 2.2 supplied by GEC, but considerably modified to provide a full multi-user system. Warwick University supplied modifications for multi-access and a scheduler, and locally-written extensions include two-way links to the IBM 360/195, multi-shell facilities at terminals and spooling for line printers.

The GEC 4080 can thus be used as a standard HASP workstation, with ELECTRIC facilities available at all its terminals. It can also be accessed from any terminal attached to an IBM 360/195 workstation or to the central computer, but a limit of six simultaneous users has been imposed. Files, including ELECTRIC files, can be transferred between 4080 and 360/195 discs. This enables, for example, a user at CERN to run a program on the 4080 that creates a temporary file of line-printer output, and then submit a job to the 360/195 to print its contents on the CERN workstation line printer.

The new GEC operating system OS 4000 was delivered during the year and is being developed to provide the facilities available under DOS. The new system provides much better accounting and security, and some new software packages will be provided by GEC only within OS 4000. Work on HASP multi-leaving and terminal access has now been completed, enabling the 4080 to act as a standard 360/195 workstation and to be accessed from any ELECTRIC terminal. The remaining major development, for file transfer, is scheduled for early completion.

This interactive facility comprises a Hewlett-Packard 1310A electrostatic display head refreshed by an Interdata 7/16 minicomputer, connected to the GEC 4080 by a 50 Kbaud line. Two alternative 1310A heads are available. The first allows only light-pen interaction and is used for Patch-up. The second, using special hardware which is still under development, allows interaction by keyboard and tracker balls as well as light-pen, and offers windowing, zooming, and translations and rotations of the displayed picture in two or three dimensions. It is intended to add hardware characters and broken lines to the display facilities, and variable intensity levels.
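Picture manipulations of the kind listed above (translation, rotation in three dimensions, zooming) amount to applying one transformation to every vector end-point before it is drawn. ASPECT does this in special hardware; the sketch below shows only the underlying arithmetic, assuming rotation about the vertical (y) axis, a uniform zoom factor, a screen-plane shift, and an orthographic projection that simply drops the depth coordinate. The function names are illustrative.

```python
import math

Point = tuple[float, float, float]


def rotate_y(p: Point, angle: float) -> Point:
    """Rotate a point about the y axis by `angle` radians."""
    x, y, z = p
    c, s = math.cos(angle), math.sin(angle)
    return (c * x + s * z, y, -s * x + c * z)


def to_screen(p: Point, angle: float, zoom: float,
              shift: tuple[float, float] = (0.0, 0.0)) -> tuple[float, float]:
    """Rotate, zoom and translate a point, then drop z (orthographic projection)."""
    x, y, _ = rotate_y(p, angle)
    return (zoom * x + shift[0], zoom * y + shift[1])


# One displayed vector, given by its two end-points, turned through 90 degrees
segment = [(1.0, 0.0, 0.0), (1.0, 1.0, 0.0)]
screen = [to_screen(p, math.pi / 2, zoom=2.0) for p in segment]
```

Because the vector turns edge-on after a 90 degree rotation, both end-points land (to rounding error) at x = 0 on the screen; windowing would then clip the transformed vectors to the visible area.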

ASPECT software comprises a package of routines in the GEC 4080 called by the user's program and communicating with a program in the Interdata 7/16. This graphics package was written locally, partly in BABBAGE and partly in FORTRAN, but the GINO-F graphics package (from the Computer Aided Design Centre) is being adapted to support the ASPECT system and will in due course replace the present package. There are two versions of the Interdata 7/16 program for handling light-pen, tracker ball and keyboard interactions, refreshing the display, and responding to instructions from the GEC 4080. The first and simpler (1.0) is used in production for Patch-up while the second (2.2) is the development system containing more extensive facilities. Version 2.2 is being adapted to suit Patch-up and the modified GINO package in the 4080, and made more robust.

Rescue of bubble chamber track failures (Patch-up). The Patch-up system based on GEC 4080, Interdata 7/16 and Hewlett-Packard 1310A has been in regular use for more than a year. It has generally worked well, being faster than the previous very similar system and more convenient to use. In all nearly 24,000 faulty events have been displayed, of which over 10,000 were 'rescued' successfully and passed quality criteria in the GEOMETRY program.

Magnet Design. The magnet design program GFUN proved considerably too big for the present 4080 FORTRAN compiler and linkage editor and had to be split into several (currently four) processes. This necessitated much program reorganisation, of which advantage was taken to rewrite the input section in a much more general form suitable for design of any three dimensional object, not necessarily a magnet. Engineers have shown a lot of interest in this package, which uses GINO and strict ANSI FORTRAN and can therefore be easily transferred to other computers. When rewriting is completed next year, the user will be able to design an object on the GEC 4080, run an IBM 360/195 analysis program and display the results on the 4080.

To run the whole GFUN program the user must load four processes, so access had to be provided from a terminal to several shells in the GEC 4080. As mentioned previously, the DOS 2.2 operating system was adapted locally to meet this need, but no similar modification exists for the new OS 4000 system, and one written locally might conflict with GEC's future development of OS 4000. However, a new virtual FORTRAN compiler to be offered by GEC next year should handle large programs without splitting.

COMPUMAG Conference. An international conference on computing magnetic fields was held at Oxford in April. A 4080 with two graphics terminals, installed there temporarily and linked by a Post Office line driven at 4.8 Kbaud to the Laboratory's IBM 360/195, was used for demonstration purposes. The GFUN program running in the 360/195 was demonstrated at one terminal (Computek 400), using the 4080 as a workstation, and simultaneously the part of GFUN then available in the 4080 was demonstrated at the other (Tektronix 4014). The ASPECT system was also attached to the 4080 and some of its potential demonstrated by displaying picture files created on the 4080.

An III (Information International Incorporated) FR80 recorder was installed in the Atlas Laboratory in 1975. The FR80 comprises an III 15 computer (similar to a PDP-15) with a magnetic tape deck for data input on 7- or 9-track tapes, a precision cathode ray tube and cameras. From data supplied on tape it produces high-quality graphical output on colour or black-and-white film (16 or 35 mm), on hardcopy (12" wide sensitive paper) or on 105 mm microfiche (at 42x or 48x demagnification). The input data is processed and displayed, as vectors or alphanumeric characters, at any of 256 intensity levels on a square mesh of 2^14 x 2^14 (16384 x 16384) units. The resolution on 35 mm film is about 80 line-pairs/mm.
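To show what a 2^14 x 2^14 mesh and 256 intensity levels mean for data prepared for the recorder, the sketch below maps a point in user coordinates onto integer raster addresses and a brightness onto one of the intensity levels. The address range 0 to 16383 follows from the mesh size stated above; the rounding and clamping conventions, the user window, and the function names are assumptions, not the FR80's documented input format.

```python
MESH = 2 ** 14        # addressable positions along each axis (16384)
LEVELS = 256          # intensity levels


def to_raster(x: float, y: float, xmin: float, xmax: float,
              ymin: float, ymax: float) -> tuple[int, int]:
    """Map user coordinates in a window onto integer addresses 0..MESH-1."""
    def scale(v: float, lo: float, hi: float) -> int:
        t = (v - lo) / (hi - lo)                        # 0.0 .. 1.0 across the window
        return min(MESH - 1, max(0, round(t * (MESH - 1))))   # clamp off-window points
    return scale(x, xmin, xmax), scale(y, ymin, ymax)


def to_level(brightness: float) -> int:
    """Map a brightness in [0, 1] onto one of the LEVELS intensity levels."""
    return min(LEVELS - 1, max(0, round(brightness * (LEVELS - 1))))


print(to_raster(1.0, 0.0, 0.0, 1.0, 0.0, 1.0))   # (16383, 0)
print(to_level(1.0), to_level(0.0))               # 255 0
```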

| | First Quarter: Jobs | First Quarter: Time | First Quarter: Frames | Second Quarter: Jobs | Second Quarter: Time | Second Quarter: Frames | Third Quarter: Jobs | Third Quarter: Time | Third Quarter: Frames | Fourth Quarter: Jobs | Fourth Quarter: Time | Fourth Quarter: Frames | Total for Year: Jobs | Total for Year: Time | Total for Year: Frames |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Hardcopy: Single Image Format | 1889 | 56 | 24800 | 2186 | 45 | 30500 | 1920 | 43 | 24900 | 2352 | 58 | 32600 | 8347 | 202 | 112800 |
| Hardcopy: Multiple Image Format | 784 | 13 | 4300 | 927 | 15 | 6100 | 651 | 11 | 4500 | 632 | 16 | 5100 | 2994 | 55 | 20000 |
| (number of images) | | | 14300 | | | 21800 | | | 18500 | | | 20500 | | | 75100 |
| 35mm: Monochrome | 310 | 28 | 7100 | 558 | 22 | 9100 | 262 | 19 | 8700 | 323 | 22 | 8600 | 1453 | 91 | 33500 |
| 35mm: Colour | 82 | 13 | 1900 | 212 | 30 | 5900 | 104 | 27 | 2400 | 132 | 39 | 3600 | 530 | 109 | 13800 |
| 16mm: Monochrome | 16 | 1 | 1900 | 4 | 1 | 500 | 6 | 1 | 3900 | 46 | 12 | 29700 | 72 | 15 | 36000 |
| 16mm: Colour | 36 | 10 | 16000 | 45 | 26 | 17600 | 27 | 20 | 16200 | 22 | 30 | 22700 | 130 | 86 | 72500 |
| Precision Monochrome | 61 | 18 | 53200 | 51 | 7 | 29700 | 90 | 23 | 105400 | 43 | 7 | 33200 | 245 | 55 | 221500 |
| Microfiche | 1076 | 100 | 2700 | 1615 | 106 | 4500 | 1047 | 120 | 2700 | 1153 | 198 | 4500 | 4891 | 524 | 14400 |
| Totals | 4254 | 239 | 111900 | 5598 | 252 | 103900 | 4107 | 264 | 168700 | 4703 | 382 | 140000 | 18662 | 1137 | 524500 |