The Science Research Council provides very powerful computing facilities, based at the Laboratory, for a widespread community of Users. With the purchase of a second IBM 360/195 processor late in 1976, a dual 360/195 system was established in the Atlas building and commissioned during 1977. It is intended primarily for batch processing and is linked to some fifty remote sites for easy access under the control of the Facility Committee for Computing. Interactive computing facilities were substantially increased by upgrading the DEC10 computers at Edinburgh and Manchester and six other university mini-computers, and improving the PRIME P400 system at the Laboratory. Telecommunication networks have been further developed during the year to provide better access to computer services for both groups of users.

The FR80 precision microfilm recorder was used in the preparation of a reference book on molecular structures, published jointly by the International Union of Crystallography and the Crystallographic Data Centre at Cambridge. Volumes of this type are technically difficult to produce by normal methods. An animated film generated on the FR80 was selected by the British Film Institute for preservation in the National Film Archives. The ASPECT interactive graphics display system based on a GEC 4080 computer was shown at the Systems 77 international exhibition in Munich.

Broadly, the Atlas Computing Division is responsible for the management and development of the SRC Interactive Computing Facility. The Computing and Automation Division is responsible for the services based on the batch computing facilities.

The Science Research Council drew up new plans for computing last year, including the installation of a second IBM 360/195 at the Laboratory, which were implemented in 1976/77. During January the second 195 (195/2) processor with 1 Mbyte of core was brought into limited service in the Atlas building and on 1st February the 195/1 was switched off. The peripheral equipment and core were then transferred and a service based on the 195/2 with 3 Mbyte of core and a full set of peripheral equipment opened on 14th February. During March the 195/1 processor and remaining core were moved to the Atlas building and a service based on the dual 195 system started on 18th April, temporarily keeping 3 Mbytes of core on the 195/2 and 1 Mbyte on the 195/1. The final arrangement of 2 Mbytes on both machines was completed on 30th May. A prime aim of local planning was to reduce disruption to users to a minimum, and this was achieved with a total loss of service for only 17 days.

Moving the computer hardware, re-connection of local online equipment and re-routing of the extensive network of links from remote sites required considerable telecommunications work. The Post Office was not able to re-route private lines to the new site for several months, so temporary arrangements had to be made which delayed the intended change to Racal modems. Additional private lines have since been installed from institutions which will have access to the new Interactive Computing Facility at the Laboratory. Keyboard terminals and lineprinter facilities at the new site and in the old building (where many on-site users remained after the 195/1 was moved out) were rearranged. All 360/195 workstations were out of action during the break in IBM computer service and most suffered temporarily from Post Office work at the Laboratory site on re-routing lines and introducing extra telecommunications equipment nearby. Some stations suffered additional disruption this year from faults of their local computer or peripheral equipment, or individual Post Office lines, but the effects were generally not serious.

The whole system had settled down by mid-year and provided considerable computing power. Over 7000 hours of CPU time were taken by users' jobs during the year, including 4577 after the final configuration was established on 30th May, compared with 6108 hours from the single 360/195 computer in 1976. This was sufficient to eliminate the long-standing backlog of work in the Autumn, but there is no doubt that more CPU time can be made available in a full year by further tuning of the system and further pressure of work. The proportion of jobs submitted from remote workstations increased from 54% in 1976 to 67% in 1977. Modifications were made to ELECTRIC to improve the response time and significantly ease remote job entry and communication with the central computer. Detailed figures of jobs processed, lines of output printed and CPU time taken are given in the Divisional Quarterly Reports.

The plans for computing include the closure on 31 March 1978 of the service based on the ICL 1906A. A gradual rundown has therefore taken place and assistance has been given to those transferring work to the IBM 360/195 computers. Since its inception in 1972, this service has processed some 1.25 million jobs covering most scientific and engineering fields outside nuclear physics. The SRC computer network linking the Daresbury and Rutherford Laboratories was consolidated and brought into more regular use during the year. Nearly a dozen workstations can now access the Daresbury IBM 370/165 computer or the Rutherford IBM 360/195 or ICL 1906A computers via the network. A second GEC 4080 computer was brought into service during the year and the hardware and software facilities for public users were extended. The ASPECT interactive visual display system, based on a GEC 4080, was successfully demonstrated at the Systems 77 international computer exhibition in Munich.

The emphasis in bubble chamber film measuring has shifted to experiments with fewer, more difficult events. The HPD is being modified for its future tasks and being made more robust; the responsibility for this equipment will pass to the High Energy Physics Division in 1978.

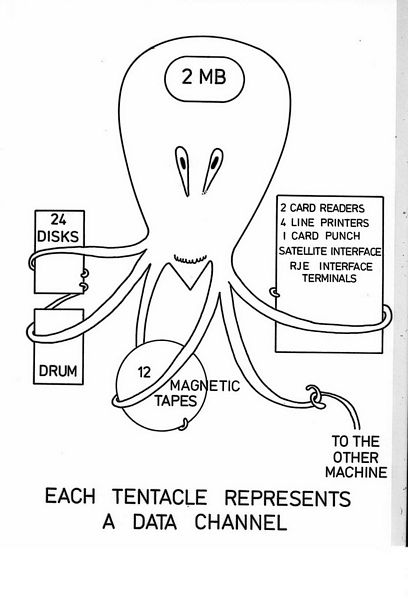

Production processing was carried out on the 195/1 computer (in building R1) until 1st February, with specially selected long CPU-bound jobs running on the 195/2 in building R26. The 195/2 at that time had only 1 Mbyte of core and restricted peripheral equipment, but provided 441 CPU hours for user jobs. On 14th February service was resumed on the 195/2 with 3 Mbyte of core and a normal set of peripheral equipment, but with reduced channels. On 18th April the coupled system of two 195s (in building R26, with 1 Mbyte core on 195/1) became available. On 30th May the 3 + 1 Mbyte core arrangement was replaced by 2 Mbyte on both machines. The present layout of the dual 360/195 system is shown in Fig 5.2.

Normally the 195/1 is the front-end machine running the online programs HASP, ELECTRIC, HPD (when necessary) and some batch jobs, while the 195/2 is the back-end machine for most batch job processing. However, the most immediate requirement for many users is to access ELECTRIC, and the dual system is designed to provide front-end machine facilities as far as possible during periods when either machine is out of action because of faults or maintenance, at the expense of reduced batch processing capability.

Operations and performance figures have naturally been dominated by the changes in computer configuration in the first half of the year. Some hardware and software problems occurred, without causing serious disruption, but by June the mean time between hardware breaks (83 hours) was the best for over a year and the system settled down well in the second half of the year. Detailed figures are given in the Divisional Quarterly Reports and some figures for the year are shown in Tables 5.1 and 5.2.

In a mid-year review, target turn-round times for different priority levels were defined. Targets were also established for user-CPU hours per week (a minimum 160, rising progressively to 180) and for average ELECTRIC response times (2-3 seconds normally, and not exceeding 5 seconds at times of peak activity). During the third quarter, the turn-round target was achieved and the average CPU time for user jobs was 169.4 hours per week (205 hours in one week), with average operational overheads at 34.0 CPU hours per week. Because versions of OS and HASP must run in both machines, the overheads are of course higher than for a single machine system. For the first time in many months, the backlog of work vanished during the Autumn.

The development of software for the coupled 195s began last year and continued into 1977. Tests were carried out at IBM Birmingham on a 370/158 computer operating under the VM (virtual machine) version of OS, and during March some time was made available on a VM system by courtesy of the Copenhagen Handelsbank. Thus all was ready to run a dual system of 3 Mbyte front-end machine (FEM) and 1 Mbyte back-end machine (BEM) in April, and move to the planned 2 + 2 Mbyte dual system at the end of May. The present software options allow ten batch initiators to run in 1450 Kbytes in the BEM and four in 770 Kbytes in the FEM (640 Kbytes when the HPD production program is in use). These figures have varied slightly from time to time (eg HASP was modified in June to improve disc exploitation).

A few elusive hardware faults complicated the debugging of software and held back the process of 'tuning' for the best performance, but steady progress has been made. Facilities have been adapted to the higher proportion of work now submitted from remote stations, and delays due to an uneven spread of disc usage across all available drives are being studied. The methods of handling SYSOUT data sets and of maintaining COPPER tables and SETUP queues have been improved. More systems information is now saved across machine stoppages caused by hardware or other faults, so that the loss of production time may be reduced.

A new highly automated system of handling storage media (TDMS - tape and disc management system) has been designed and partly coded. Experiments with a new method of managing overlaid programs have been made, already to the benefit of ELECTRIC, and changes to the internal classification of jobs are proposed to take more advantage of the differences between the front and back end machines. Overall, experience with the dual machine software has shown it to be a solid base for further advances. Several performance-measuring program packages have continued in use for indicating pressure points and monitoring the results of changes made.

With a steady growth in use of this job submission and retrieval and file handling system, effort has been directed to improving response times. Code was inserted in ELECTRIC to monitor its own service overheads and an external program written to analyse the results. Several problem areas were identified and excessive average service times were associated in particular with overlay swapping and OS data set allocation. It was arranged that all overlay segments should be relocated when the program starts, which considerably reduces the time taken to bring a new segment into core. This new method of overlaying cut an average of 60% off overlay times and 15% off total service overheads, with a noticeable improvement in response. The second problem was alleviated by moving data set allocation into a sub-task, so that ELECTRIC could continue to process the commands while allocation took place asynchronously.
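The second remedy, moving slow allocation work into a sub-task so that command processing is not held up, can be illustrated with a minimal modern sketch. It is not the ELECTRIC code: the function names, the `ALLOC` command prefix and the simulated delay are all assumptions made purely for illustration.

```python
import queue
import threading
import time


def allocate(name):
    """Stand-in for a slow data set allocation (hypothetical)."""
    time.sleep(0.05)
    return f"{name}: allocated"


def allocation_worker(requests, results):
    """Sub-task: service allocation requests until a None sentinel arrives."""
    while True:
        name = requests.get()
        if name is None:
            break
        results.put(allocate(name))


def run(commands):
    """Process commands in order, handing allocations to the sub-task."""
    requests, results = queue.Queue(), queue.Queue()
    worker = threading.Thread(target=allocation_worker, args=(requests, results))
    worker.start()
    log = []
    for cmd in commands:
        if cmd.startswith("ALLOC "):
            requests.put(cmd[6:])            # hand off and carry on immediately
            log.append(f"queued {cmd[6:]}")
        else:
            log.append(f"done {cmd}")        # ordinary command handled in line
    requests.put(None)                       # tell the sub-task to finish
    worker.join()
    while not results.empty():               # collect completed allocations
        log.append(results.get())
    return log
```

In this sketch the main loop never waits on `allocate`; the commands that follow an allocation request are handled while the sub-task is still working.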

A major change to scheduling of the ELECTRIC input messages was introduced with the concept of a 'string well', which can contain variable-length strings of characters and queues of strings instead of the fixed length spaces previously reserved. A package of routines was written for manipulating these and dealing with space management. Input messages are taken from MAST buffers and stored in the well, which gives ELECTRIC more control over the sequence in which messages are processed. The number of MAST buffers was halved to 32 and the space set free used for the string well and its associated control section. Such a well may hold 80-90 input messages, thus almost doubling the number in the total message buffer space. The string well method also facilitates changes to message scheduling. For example, it is planned to eliminate queueing for copy operations by allowing several to proceed simultaneously. This will improve the average response time.
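The gain from storing variable-length strings rather than reserving fixed-length spaces can be shown with a toy model. The sketch below is an assumption-laden illustration, not the ELECTRIC routines: the class name `StringWell`, its methods and the byte figures are hypothetical, and real space management (fragmentation, control blocks) is ignored.

```python
from collections import deque


class StringWell:
    """Toy pool of variable-length strings with a fixed byte budget."""

    def __init__(self, capacity):
        self.capacity = capacity   # total bytes available in the well
        self.used = 0              # bytes currently occupied
        self.queues = {}           # queue name -> deque of stored strings

    def put(self, queue_name, message):
        """Store a message on the named queue; return False if the well is full."""
        size = len(message)
        if self.used + size > self.capacity:
            return False
        self.queues.setdefault(queue_name, deque()).append(message)
        self.used += size
        return True

    def take(self, queue_name):
        """Remove and return the oldest message on the named queue, or None."""
        q = self.queues.get(queue_name)
        if not q:
            return None
        message = q.popleft()
        self.used -= len(message)
        return message
```

With a budget of 320 bytes, four fixed 80-byte slots would hold four messages whatever their length, whereas this well accepts sixteen 20-byte messages before reporting that it is full. Because messages sit in named queues, the order in which they are taken is under the caller's control.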

Once again the free space for file storage has almost vanished on both the online and archive discs. As there is no further permanently mounted disc space available, the archive system is being modified to allow a second archive disc and to enable a file to be restored from the first archive disc during prime shift, while ELECTRIC is online. A second edition of the ELECTRIC User's Manual was produced from stored files using the ELECTRIC text layout facilities and the FR80 plotter to generate 35 mm film.

| | IBM 360/195s Weekly Average 1977 | IBM 360/195s Weekly Average 1976 | ICL 1906A Weekly Average 1977 | ICL 1906A Weekly Average 1976 |
|---|---|---|---|---|
| Job Processing | 148.3 | 157.3 | 117.3 | 100.2 |
| Software Development | 2.5 | 2.1 | 0.6 | 2.3 |
| Total Available | 150.8 | 159.4 | 117.9 | 102.5 |
| Lost time attributed to hardware | 4.8 | 2.9 | 1.4 | 2.7 |
| Lost time attributed to software | 1.3 | 0.2 | 0.2 | 0.8 |
| Lost time attributed to Miscellaneous | 0.0 | 0.0 | 3.4 | 1.2 |
| Total Scheduled | 156.9 | 162.5 | 122.9 | 107.2 |
| Hardware Maintenance | 1.1 | 1.1 | 8.0 | 7.2 |
| Hardware Development | 1.5 | 0.4 | 0.0 | 0.0 |
| Total Machine Time | 159.5 | 164.0 | 130.9 | 114.4 |
| Switched Off | 8.5 | 4.0 | 37.1 | 53.6 |
| Total | 168.0 | 168.0 | 168.0 | 168.0 |

ICL figures, and IBM for 1976, are averages for whole years. The 1977 IBM figures are averaged over the April-December period and over both machines, thus showing dual system operation.

| | IBM 360/195s Weekly Average for 1977: CPU (hrs) | IBM 360/195s Weekly Average for 1977: Jobs | IBM 360/195s Totals for 1977 (53 weeks): CPU (hrs) | IBM 360/195s Totals for 1977 (53 weeks): Jobs | IBM 360/195s Totals for 1976 (52 weeks): CPU (hrs) | IBM 360/195s Totals for 1976 (52 weeks): Jobs | ICL 1906A Weekly Average for 1977: CPU (hrs) | ICL 1906A Totals for 1977 (53 weeks): CPU (hrs) | ICL 1906A Totals for 1976 (52 weeks): CPU (hrs) |
|---|---|---|---|---|---|---|---|---|---|
| Astronomy, Space and Radio Board | 8.3 | 1166 | 440 | 61813 | 299 | 58992 | 13.3 | 704 | 302 |
| Engineering Board | 7.2 | 423 | 379 | 22433 | 240 | 18923 | 13.9 | 737 | 387 |
| Nuclear Physics Board | 84.8 | 6578 | 4495 | 348618 | 4327 | 372685 | 0.0 | 0 | 0 |
| Science Board | 22.6 | 1361 | 1197 | 72138 | 857 | 67443 | 17.3 | 915 | 1559 |
| SRC/London Office | 5.9 | 2195 | 312 | 116352 | 0 | 0 | 7.0 | 372 | 0 |
| Other Research Councils | 2.9 | 883 | 154 | 46783 | 84 | 33001 | 3.0 | 161 | 190 |
| Government Departments | 0.6 | 167 | 34 | 8866 | 0 | 0 | 3.3 | 174 | 0 |
| Miscellaneous | 0.1 | 141 | 6 | 7450 | 301 | 123889 | 0.7 | 37 | 1013 |
| User Totals | 132.4 | 12914 | 7017 | 684453 | 6108 | 674993 | 58.5 | 3100 | 3451 |
| System Control and General Overheads | 31.7 | 89 | 1680 | 4704 | 1323 | 2779 | 6.0 | 316 | 267 |
| Totals for Year | 164.1 | 13003 | 8697 | 689157 | 7431 | 677712 | 64.5 | 3416 | 3718 |

There has been some reduction in use of the ICL 1906A computer pending its closure in 1978 and costs have been cut where possible. Engineering maintenance was reduced from three to two shift cover on 1st February, and to prime shift only on 1st April. The operating system software was frozen during the second quarter after some development in the first, the Program Advisory Office attendance was reduced to afternoons only from April and maintenance support for software packages subsequently discontinued. However, utility programs were provided for those users wishing to transfer MOP files on the ICL 1906A to ELECTRIC files on the IBM 360/195 and for transferring data on magnetic tapes. A three-day tuition Course was given to assist transfers and to serve as an introduction to the IBM 360/195 system. Despite the reduced maintenance cover, which lost some 60 hours in the first half of the year, Table 5.1 shows good overall reliability. Machine availability (viz 1 - down time/scheduled time) reached a record 97.0% in the third quarter, when the scheduled time included weekend running on several occasions for the Ariel 5 space satellite project.
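The availability definition quoted above can be applied to the ICL 1906A weekly averages for 1977 in Table 5.1. The sketch below assumes that "down time" corresponds to the sum of the three lost-time rows (hardware, software and miscellaneous); the report does not state this explicitly, and the function name is illustrative only.

```python
def availability(down_time, scheduled_time):
    """Availability as defined in the text: 1 - down time / scheduled time."""
    if scheduled_time <= 0:
        raise ValueError("scheduled time must be positive")
    return 1.0 - down_time / scheduled_time


# ICL 1906A weekly averages for 1977 from Table 5.1 (hours per week).
# Assumption: down time = lost time (hardware + software + miscellaneous).
icl_lost_1977 = 1.4 + 0.2 + 3.4
icl_scheduled_1977 = 122.9

icl_availability_1977 = round(100 * availability(icl_lost_1977, icl_scheduled_1977), 1)
print(icl_availability_1977)  # 95.9
```

On that assumption the average for the whole year comes to about 95.9%, a little below the record 97.0% reported for the third quarter.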

The FR80 recorder produces high quality graphical output on colour or black and white film (16 or 35 mm), on hardcopy (12 inch-wide sensitive paper) or on 105 mm microfiche. It is an established facility used on a 5 days per week basis. Apart from staff shortages (which effectively limit it to 2-shift working) the main unscheduled break occurred in February, when further replacement of the CRT became necessary. Hardware performance has otherwise been generally good and software virtually trouble free. Detailed performance figures appear in the Divisional Quarterly Reports and some figures for the year are given in Table 5.3. Accounting routines have been transferred from the ICL 1906A and the slide-making program has been set up as a procedure on the IBM 360/195, with a version for viewing output on a graphics terminal.

FR80 applications have included an animated Arts Council colour film (entitled Reflections), graphs and tables of experimental results, computer output on microfiche, and text and graphics for publications. The film Computer Simulation of the Expanding Universe, which was produced in collaboration with Cambridge University, has been chosen by the Science Selection Committee of the British Film Institute for preservation in the National Film Archives.

| | Jobs | Time (hrs) | Frames |
|---|---|---|---|
| Hardcopy | | | |
| Single Image Format | 8644 | 301 | 114,200 |
| Multiple Image Format | 2074 | 81 | 17,400 |
| (No of Images in M.I.F) | (84,700) | | |
| Universal Camera | | | |
| 35mm Monochrome | 2753 | 112 | 113,000 |
| 35mm Colour | 625 | 74 | 10,000 |
| 16mm Monochrome | 137 | 28 | 64,500 |
| 16mm Colour | 100 | 63 | 71,000 |
| Precision Camera | | | |
| 16mm Monochrome | 352 | 292 | 203,000 |
| Microfiche Camera | 4512 | 770 | 18,300 |
| Totals | 19197 | 1721 | 611,400 |

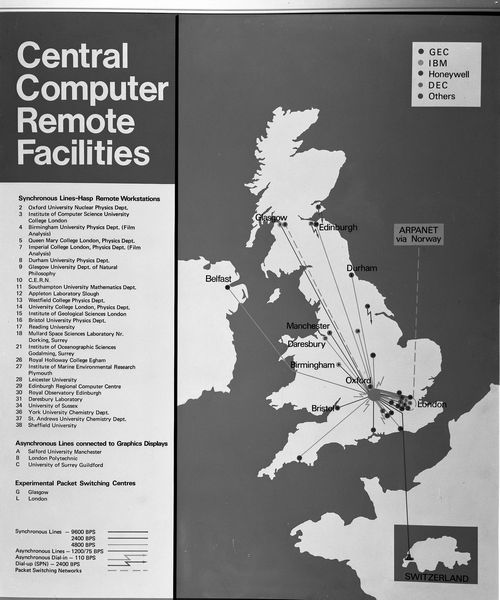

This network links the Daresbury and Rutherford Laboratories. There is a major connection to the London Exchange of the Post Office Experimental Packet Switched Service (EPSS), temporary links for test purposes to the Glasgow and Manchester Exchanges, and connections to the CERN Laboratory and to the USA ARPA network. The main features are outlined in Fig 5.3.

Five remote job entry (RJE) stations (at Daresbury, Lancaster, Liverpool, Manchester and Sheffield), based on Daresbury, regularly use the network to submit and retrieve batch jobs on the Rutherford IBM 360 computers and use the ELECTRIC filing system. Six other stations (at Belfast, Edinburgh, Leicester, Rutherford, Southampton and Sussex) are connected to a GEC 4080 on the Rutherford site, functioning as a network node. They have access through it to the local IBM 360s and ICL 1906A computers and the Daresbury IBM 370/165 computer for similar purposes, including use of Daresbury TSO facilities from terminals.

At the end of 1977 about 400 calls from keyboard terminals (85% to access ELECTRIC) were made in a typical week, and 60,000 terminal messages and 750,000 lines of printed output from the 360/195s were routed via the network. By allowing a workstation simultaneous access to more than one main computer, the network has been particularly valuable this year for users transferring work from the Daresbury 370/165 or Rutherford 1906A to the 360/195 computers.

The network employs packet-switching techniques based upon (and compatible with) EPSS protocols, and is available on a 24 hours per day basis. Work continues to remove anomalies, facilitate extensions and improve performance and to implement a file transfer protocol based on a design of EPSS Study Group 2, in which the Laboratory actively participated. Representatives of the Natural Environment Research Council are involved in the planning, with a view to attaching their computing equipment in the future.

This Post Office service was declared open in April 1977 and is scheduled to run for at least two years to acquire experience in all aspects of using such a public network. It is clear that its protocols, both at line and packet level, do not have a long-term future because new international standards have emerged recently, but the basic techniques are very similar. The file transfer protocol mentioned above was designed for use across any packet-switching network, not specifically related to EPSS, so it is hoped it may become a UK standard. Less use has been made of the Laboratory's computers via EPSS than originally expected, but the experience gained has been extremely valuable and will facilitate connections to the new Post Office network service (which is widely anticipated to follow EPSS, although not officially announced).

A second GEC 4080 computer (denoted 4080B) became available to the Computing and Automation Division during the year, after its previous use at the Nimrod injector. The hardware was upgraded by providing 160 Kbytes of memory for the 4080B in July, with another 96 Kbytes in November, and by replacing the single density discs with double density on both 4080 computers.

Throughout the year the original 4080 computer (4080A) used the DOS operating system, augmented by the Warwick University multi-access system and local modifications giving workstation and file transfer facilities to and from the IBM 360/195. Development work to provide these local facilities in the new OS4000 GEC operating system was completed in July. At the same time the new Virtual FORTRAN compilers became available, so a service with OS4000 was started on the 4080B and most users changed over to it. DOS was retained on the 4080A for running bubble chamber patch-up and THESEUS production and preparing for the Systems 77 exhibit. However, work on modifying patch-up to run under OS4000 started in the Autumn, and it is intended to phase out DOS early in 1978. Hardware performance of both computers has been generally good, apart from recurring faults with tape drives. Systems software has also been reliable, but some problems have been encountered with utility programs. Both machines are normally available 24 hours per day.

This system, an adjunct to automatic film measurement by HPD, continued in regular use and enabled over 10,000 faulty events to be recovered (of 33,000 displayed). The Interdata 7/16 mini-computer and Hewlett Packard 1310A display used for patch-up were required for the Systems 77 exhibition, so an Interdata 70 and HP 1300 display (kindly loaned by Oxford Nuclear Physics Laboratory) were temporarily substituted, with satisfactory results.

The 360/195 Magnet design program GFUN had to be split into three sections for use with a GEC 4080. They are the preparation of data, ie construction of the object and division into mesh elements, analysis of the object and calculation of the magnetic field, and the display of results, usually in the form of contour maps. The second section involves much calculation and is still run on the IBM 360/195, but the first and third (which together comprise the THESEUS system) are run on a GEC 4080. Development of THESEUS was expected to go ahead smoothly when the new FORTRAN compiler became available in July, but its mesh element generating sections proved too big for the link editor. GEC are investigating this problem. Limited production work with THESEUS became possible in July, using DOS, and this system was successfully demonstrated at the Systems 77 exhibition. In addition, a program for tracing particle paths through magnetic fields was mounted on the 4080.

This international exhibition at Munich, held every two years, is the largest in Europe devoted to computing development and the widespread uses of computers. The Laboratory showed ASPECT, its sophisticated interactive graphics display system, which offers windowing, zooming, translation and rotation of displayed pictures in two or three dimensions. Live demonstrations were given of the use of THESEUS, which ran in a GEC 4080 on the adjoining GEC stand, to design various objects (from magnets to a model of Munich Cathedral). These, and others such as a protein molecule, were displayed on ASPECT and attracted some large audiences.

A system was developed on the GEC 4080 for communication with a network of Intel 8080-based micro-computers controlling manual machines for measuring film from bubble chambers. The measured coordinates are collected on disc on the 4080 and transmitted to the IBM 360/195 using the file transfer system. At the end of the year the system was in production use on one machine.