Computing at Rutherford Laboratory has two main functions: to carry out the extensive data handling and analysis required for the High Energy Physics and other research programmes with which the Laboratory is directly involved, and to provide computing services for university engineers and scientists supported by specialist Science Research Council committees. This broad split of activities is reflected in the organisation of the Laboratory's computer resources into the Atlas Computing Division, catering mainly for outside users, and the Computing and Automation Division, supporting the Laboratory's experimental research programme. The two divisions work in close cooperation in areas of common interest to all users, especially in data communications and networks.

The central Rutherford Laboratory IBM 360/195 computer is a 3 Mbyte machine with an extensive range of peripherals and remote links (see Figure 5.2). The main enhancements to this system were upgrades to tape drives and discs. Four 9-track tape drives were upgraded to quadruple density (6,250 bpi) during the first quarter and the remaining four during the last quarter. Six of the sixteen IBM 3330 disc drives were upgraded from 100 Megabytes to 200 Megabytes, and eight new 200-Mbyte Memorex drives were delivered for installation during the first quarter of 1976. A second Block Multiplexor is on order.

The system remained under saturated conditions throughout 1975, with central processor utilisation averaging 89%. Over 8200 hours of good time were available to users. After deducting operational overheads, this provided 5892 hours of accountable central processor time. Despite this extreme pressure, allocations to projects and job turnround have been effectively controlled by means of COPPER, a priority system designed to hold back long production runs to off-peak periods and allow several levels of fast turnround during prime shift.

A dominant feature of the installation is the large star network giving access from many remote sites. These remote workstations provide almost the full range of facilities available to local users, in particular card input, line printer output and on-line keyboard terminal access. The terminals provide file handling facilities (mainly via the Rutherford Laboratory's ELECTRIC system), job entry, output retrieval and graphical output, and can access any of the other facilities provided, including the connections to the US ARPA network. The number of remote workstations has increased by 10 to over 30, and over 50% of all jobs now enter the central computer this way. Printing capacity and line speed have been upgraded for the most heavily used workstations, and some have had an extra 8K of core and more terminals added. Remote computing is now an established and reliable service.

Progress has been made on a number of projects to allow intercommunication between sub-networks. ARPANET in the United States is the best-known linked-computer network, and a link to ARPANET from the IBM 360/195 via the UK node (directed by Professor Kirstein) at University College, London, has been in operation for some time. Any terminal connected to the 360/195 can now submit authorised jobs to computers on ARPANET, and jobs can be submitted from terminals on ARPANET to the IBM 360/195. This latter service is used mainly by collaborators in the United States working with UK groups on high energy physics experiments.

In the UK, the Post Office is well advanced in setting up an Experimental Packet Switching Service (EPSS) with aims similar to ARPANET. Transmission should begin during 1976 and plans are well developed for the Rutherford Laboratory to test this system in collaboration with Daresbury, Edinburgh Regional Computing Centre, Glasgow and other EPSS centres. In parallel with this work and using the same communications protocols, a less ambitious project is in progress to link the 360/195 directly to the Atlas ICL 1906A and the IBM 370/165 at Daresbury.

The Honeywell DDP224 satellite computer was taken out of service towards the end of the year. It was bought in 1965, originally as a front end to the ORION central computer, and at one time served two automatic measuring machines, an interactive display, twelve terminals of various types, and a fast link to the experimental area. Recently it was used mainly as an interface for interactive graphics terminals based on the 360/195, an activity now taken over by a new GEC 4080 computer, which has a processor power of approximately one Atlas unit and is therefore a substantial computer in its own right. The GEC 4080 is linked to the central computer, and software has been written to allow job submission, output retrieval and file transfer. Several applications are now using the GEC 4080 for interactive graphics computing. The GEC 4080 is regarded as a natural development of a conventional workstation, giving local processing power backed by a remote job entry service to the 360/195.

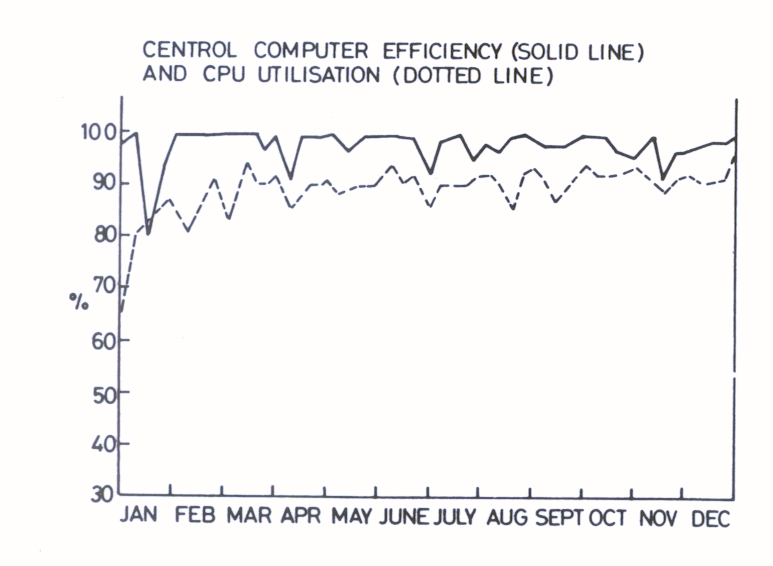

A statistical summary of computer operations appears in the following tables. Figure 5.3 shows machine efficiency, defined as (scheduled time - down time) / (scheduled time), and CPU utilisation, defined as (CPU time used) / (scheduled time - down time). Machine availability remained high, averaging 97.8% (98% in 1974), and CPU utilisation increased to 89% (83% in 1974), representing an extra 601 hours of CPU time this year, of which 480 were taken by user programs. This was achieved by system improvements and a full year's use of the third megabyte of core. User jobs rose by 75,000 to over 600,000.

| | First Quarter: CPU hours | First Quarter: No of Jobs | Second Quarter: CPU hours | Second Quarter: No of Jobs | Third Quarter: CPU hours | Third Quarter: No of Jobs | Fourth Quarter: CPU hours | Fourth Quarter: No of Jobs | Total for Year: CPU hours | Total for Year: No of Jobs | Weekly Averages 1975: CPU hours | Weekly Averages 1975: No of Jobs | Weekly Averages 1974: CPU hours | Weekly Averages 1974: No of Jobs |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| HEP Counters and Nuclear Structure | 427 | 40916 | 424 | 40249 | 454 | 41058 | 459 | 40273 | 1764 | 162496 | 33.9 | 3125 | 26.2 | 2568 |
| RL - Film Analysis | 65 | 10721 | 160 | 9702 | 125 | 8310 | 193 | 9815 | 543 | 38548 | 10.4 | 741 | 10.4 | 704 |
| RL - Others | 77 | 15662 | 115 | 17007 | 94 | 17442 | 92 | 15376 | 378 | 65487 | 7.3 | 1259 | 6.3 | 1352 |
| Theory | 52 | 5101 | 24 | 4277 | 30 | 3812 | 33 | 4119 | 139 | 17309 | 2.7 | 333 | 4.6 | 541 |
| Universities Nuclear Structure | 105 | 5724 | 72 | 5830 | 68 | 7082 | 90 | 8104 | 335 | 26740 | 6.4 | 514 | 6.4 | 402 |
| Universities Film Analysis | 204 | 16312 | 300 | 22423 | 382 | 22322 | 321 | 22098 | 1207 | 83155 | 23.2 | 1599 | 20.5 | 1502 |
| Atlas | 372 | 30306 | 351 | 34672 | 322 | 31893 | 316 | 35961 | 1361 | 132832 | 26.2 | 2554 | 27.4 | 1905 |
| Miscellaneous | 41 | 19836 | 36 | 18768 | 39 | 17099 | 49 | 23183 | 165 | 78886 | 3.2 | 1517 | 2.3 | 1213 |
| User Totals | 1343 | 144578 | 1482 | 152928 | 1514 | 149018 | 1553 | 158929 | 5892 | 605453 | 113.3 | 11642 | 104.1 | 10190 |
| System Control and General Overheads | 342 | 984 | 390 | 554 | 369 | 642 | 317 | 697 | 1418 | 2877 | 27.3 | 55 | 25.0 | 60 |
| Totals 1975 | 1685 | 145562 | 1872 | 153482 | 1883 | 149660 | 1870 | 159626 | 7310 | 608330 | 140.6 | 11697 | | |
| Totals 1974 | 1523 | 126460 | 1866 | 135584 | 1664 | 132689 | 1656 | 138217 | 6709 | 532950 | 129.0 | 10249 | | |
| Increase | 162 | 19102 | 6 | 17898 | 219 | 16971 | 214 | 21409 | 601 | 75380 | 11.6 | 1448 | | |

| | First Quarter | Second Quarter | Third Quarter | Fourth Quarter | Total for Year | Weekly Averages 1975 | Weekly Averages 1974 |
|---|---|---|---|---|---|---|---|
| Job Processing | 1964 | 2060 | 2054 | 2016 | 8094 | 155.6 | 154.7 |
| Software Development | 36 | 23 | 31 | 22 | 112 | 2.2 | 1.7 |
| Total Available | 2000 | 2083 | 2085 | 2038 | 8206 | 157.8 | 156.4 |
| Lost time attributed to hardware | 70 | 34 | 22 | 38 | 164 | 3.1 | 3.1 |
| Lost time attributed to software | 3 | 5 | 7 | 4 | 19 | 0.4 | 0.3 |
| Total Scheduled | 2073 | 2122 | 2114 | 2080 | 8389 | 161.3 | 159.8 |
| Hardware Maintenance | 13 | 12 | 18 | 15 | 58 | 1.1 | 1.6 |
| Hardware Development | 37 | 8 | 0 | 21 | 66 | 1.3 | 2.1 |
| Total Machine Time | 2123 | 2142 | 2132 | 2116 | 8513 | 163.7 | 163.5 |
| Switched Off | 61 | 41 | 52 | 69 | 223 | 4.3 | 4.5 |
| Total | 2183 | 2184 | 2184 | 2184 | 8735 | 168.0 | 168.0 |
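The definitions of machine efficiency and CPU utilisation given earlier can be checked against the yearly totals in the two tables. The short Python sketch below does this arithmetic. It assumes that "down time" is the sum of the two lost-time rows and that "CPU time used" is the Totals 1975 figure including system overheads; the report does not state either mapping explicitly, and the function and variable names are illustrative only.

```python
# Figures from the 1975 "Total for Year" columns of the tables (hours).
total_scheduled = 8389      # "Total Scheduled"
lost_hardware = 164         # "Lost time attributed to hardware"
lost_software = 19          # "Lost time attributed to software"
cpu_hours_used = 7310       # "Totals 1975" CPU hours (assumed to be "CPU time used")


def machine_efficiency(scheduled, down):
    """(scheduled time - down time) / (scheduled time)"""
    return (scheduled - down) / scheduled


def cpu_utilisation(cpu_used, scheduled, down):
    """(CPU time used) / (scheduled time - down time)"""
    return cpu_used / (scheduled - down)


# Assumption: down time is hardware plus software lost time.
down_time = lost_hardware + lost_software

efficiency = machine_efficiency(total_scheduled, down_time)
utilisation = cpu_utilisation(cpu_hours_used, total_scheduled, down_time)

print(f"machine efficiency: {efficiency:.1%}")
print(f"CPU utilisation:    {utilisation:.1%}")
```

Under these assumptions the results agree with the quoted 97.8% availability and 89% CPU utilisation, and scheduled time minus down time equals the 8206 hours listed as Total Available.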

The operating system of the central computer and its workstations is OS-360 plus HASP, supplemented by many locally written extensions and improvements.

Release 21.8 of OS-360 was installed, to provide support for the 6250 bpi tapes and the 200 Mbyte discs. Local modifications allowed tape density to be recognised automatically. The concept of job-class has been abolished for most purposes: instead of having to be defined by users, class is now deduced by the system from other characteristics such as main memory requests, time estimates, and set-up peripheral statements.
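The idea of deducing a job's class from its other characteristics can be illustrated with a small Python sketch. This is not the Laboratory's actual rule set: the class names, the thresholds and the `JobRequest` fields are all hypothetical, chosen only to show a class being derived from memory request, time estimate and set-up peripherals rather than declared by the user.

```python
from dataclasses import dataclass


@dataclass
class JobRequest:
    memory_kbytes: int       # main memory requested
    time_minutes: float      # user's CPU time estimate
    tapes: int = 0           # set-up tape drives requested
    discs: int = 0           # set-up discs requested


def deduce_class(job: JobRequest) -> str:
    """Return a hypothetical class label derived from job characteristics."""
    # Any job needing operator set-up is grouped together (assumed rule).
    if job.tapes or job.discs:
        return "SETUP"
    # Small, quick jobs (thresholds are invented for illustration).
    if job.memory_kbytes <= 200 and job.time_minutes <= 1:
        return "SHORT"
    if job.time_minutes <= 10:
        return "MEDIUM"
    return "LONG"


print(deduce_class(JobRequest(memory_kbytes=100, time_minutes=0.5)))
print(deduce_class(JobRequest(memory_kbytes=100, time_minutes=0.5, tapes=1)))
```

The point of such a scheme is that the user supplies only the resource requests, which are needed anyway, and cannot misstate the class.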

HASP has received several improvements. A NORESTART option has been created, and workstations can print jobs in priority order. A new MULTIJOB facility has been introduced, whereby a string of jobs can be defined in such a way that execution of certain jobs in the string can be made to depend upon codes returned by earlier jobs. Another new capability helps a user (or group of users) to ensure that jobs which could otherwise conflict with each other in the resources they require, such as writing to common data sets, are not run simultaneously.
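The MULTIJOB behaviour described above, in which later jobs in a string run only if earlier jobs return suitable codes, can be sketched in Python as follows. This is a minimal illustration and not HASP's own mechanism or syntax: the `run_string` function, the job names and the "highest acceptable code" convention are assumptions made for the example.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Each entry: (job name, job body returning a completion code,
#              optional (earlier job name, highest acceptable code)).
JobSpec = Tuple[str, Callable[[], int], Optional[Tuple[str, int]]]


def run_string(jobs: List[JobSpec]) -> Dict[str, Optional[int]]:
    """Run jobs in order. A job is skipped (recorded as None) if the job it
    depends on was skipped or returned a code above the stated limit."""
    codes: Dict[str, Optional[int]] = {}
    for name, body, condition in jobs:
        if condition is not None:
            earlier, max_code = condition
            earlier_code = codes.get(earlier)
            if earlier_code is None or earlier_code > max_code:
                codes[name] = None   # dependency not satisfied: do not run
                continue
        codes[name] = body()
    return codes


# Hypothetical three-job string: ANALYSE returns 8, so PLOT is skipped.
results = run_string([
    ("COMPILE", lambda: 0, None),
    ("ANALYSE", lambda: 8, ("COMPILE", 4)),
    ("PLOT", lambda: 0, ("ANALYSE", 4)),
])
print(results)
```

Recording skipped jobs as `None` lets a skip propagate down the string, so a job never runs on the strength of a predecessor that did not itself run.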

The maximum HASP job-number has been increased to 999, which has entailed an increase in HASP's checkpoint area on disc. It will be difficult to increase this number further because a maximum of three decimal digits seems rather deeply written into HASP by its originators. Before the increase to 999 the job queues were temporarily filled on several occasions. The state of the queues is now made known to the ELECTRIC file handling system, so that it can turn away jobs being submitted to a full system, instead of going into a waiting state.

A close analysis of system overheads, which rose more sharply than expected with increased activity at remote workstations, revealed that much of the HASP activity was concentrated in a single area (£GETUNIT). Modifications were made which saved about 10 hours of CPU time per week, and ELECTRIC response improved.

Message switching facilities are provided in the 360/195 by the locally-written MAST/DAEDALUS software subsystem, which also controls local satellite computers and their attached devices. During the year this subsystem took on further work.

ELECTRIC is an interactive multi-access file handling system designed and written at the Rutherford Laboratory. It has proved very popular, with currently some 615 users out of the total of 866 registered to use the system. In response to user requests, several additions and modifications were made during the year.

A scheme to allow transference of files between ELECTRIC and OS data-sets was introduced. This mechanism was also built into the PRINT command for outputting files to the line printer, and into the ELSEND procedure for entering ELECTRIC files from cards.

A new version of the ELECTRIC reference data card was issued. This was produced from line printer output of an ELECTRIC file using ELECTRIC's text layout facilities. Work began on typing the User's Manual into ELECTRIC files, where it can more easily be kept up to date. A new version is due in 1976.

The file storage data-set was transferred to a 200 Mbyte 3330 disc, and increased in size from 70,000 to 108,000 blocks. This can be increased by a further 30,000 blocks on the same disc should the need arise.

In the first quarter of 1975, the maximum number of simultaneous ELECTRIC users was increased from 30 to 40, and it was not long before the 40 limit was reached. ELECTRIC suffered a gradually deteriorating response, which became intolerable towards the end of June. Monitoring code was written and changes subsequently made, both to HASP and ELECTRIC, to improve the performance.

The changes in ELECTRIC itself may be summarised as follows:

These and other system improvements combined to bring the response time down to an acceptable level before the end of the year, even with 35 to 40 users logged in.

The MUGWUMP graphics filing system has filled up on a number of occasions, despite automatic deletion of unused files. Work is beginning on a major change to the filing structure in order to increase the available space and make it more compatible with ELECTRIC's filing system.

The COPPER facility was designed at the Rutherford Laboratory to share out CPU time according to agreed limits, and to control turnround. As demand has increased, COPPER criteria have been adjusted to provide an effective means of sharing out the available CPU time.

Two further levels of turnround control were added (priorities 6 and 10) during the year. The general user now has a choice of five levels at which to submit his work:

With the addition of these two extra levels it is believed the user has a sufficiently wide choice. As the pressure increased through the year the times issued at different priority levels were adjusted to keep turnround within the agreed limits.

The Laboratory has continued to take an active interest in computer networks with their potential advantages in providing very flexible remote access to computing facilities. During the year work has progressed on three main projects: operational use of the ARPA network, network connection of the Daresbury Laboratory's 370/165 and the Atlas Computing Division's 1906A with the Laboratory's 360/195, and the design of protocols for use with the Post Office's Experimental Packet Switching Service (EPSS). The Laboratory has also collaborated with CERN, DESY, ESA (European Space Agency) and EIN (European Informatics Network) to make a proposal to the EEC Commission for support of experiments in high-speed data transmission between CERN and Rutherford using the EIN network and the Orbital Test Satellite (to be launched by ESA in 1977).

ARPANET is a telecommunications network connecting some 65 sites in the USA, mostly University and Government research laboratories. An experimental link to the Department of Statistics & Computer Science at University College, London, has been created by the Advanced Research Projects Agency (ARPA) to investigate all aspects of such an international link. This Department has had a line to the 360/195 for some time, and as part of the above experiment this line has been connected to the ARPANET via a PDP 9 gateway.

During the year traffic has been growing between the USA and the 360/195 via ARPANET. By the end of the year some five groups of users, all from collaborating teams involving US members, were accessing the 360/195 from across the Atlantic. The collaborations are involved in High-Energy Physics, Nuclear Structure and Seismology, and nearly 700 jobs were submitted to the 360/195 via ARPANET during the year, taking 2.5 hours CPU time.

There is also a growing traffic in the reverse direction, with five UK groups accessing US computers via terminals on the 360/195 connected through ARPANET. Again they are mostly parts of collaborating teams, in High-Energy Physics (accessing machines at Harvard, Illinois and Carnegie-Mellon Universities and at Lawrence Berkeley Laboratory), Atmospheric Physics (accessing the University of California, San Diego) and in Computer Science (using ILLIAC IV at NASA-AMES, and accessing MIT, Boston).

During the year facilities have been arranged to permit Professor F Walkden of Salford University to undertake three-dimensional supersonic fluid flow calculations on the AMES Research Centre ILLIAC IV computer through the ARPA network. The CFD compiler has been obtained and mounted on the IBM 360/195 so that code for the ILLIAC IV can be written and tested by simulation. Some backing store transfer routines have been written with the interface defined by the CFD compiler documentation.

The UK national EPSS computer network experiment has been under development for some time, and the Laboratory has continued its active role in the Study Groups set up by the Post Office to design common high-level protocols for use in communications across the network. Protocols for file transfer (FTP) and the use of interactive terminals (ITP) have been agreed and published during the year.

The first test facilities have recently been made available by the Post Office on the London Exchange, and full service is scheduled for the London, Manchester and Glasgow Exchanges by mid-1976. The Laboratory has a 48K bits/sec line to the London Exchange, and work has started on programming a GEC 2050 to act as gateway for this line to be connected to the 360/195. This gateway will be ready for the start of regular service on the network. Work has also started on converting the GEC 2050 Remote Job Entry (RJE) software to allow direct connection of these workstations to EPSS. A slow-speed line (4.8K bits/sec) to the Glasgow Exchange has been installed for testing the new station software, which will also be ready for the start of EPSS service.

In parallel with the design work for EPSS, work has proceeded on a private network to join the IBM 370/165 at the Daresbury Laboratory, the ICL 1906A at Atlas and IBM 360/195 at Rutherford Laboratory. A joint working party has agreed EPSS-compatible protocols for this network, and testing of the first phase of the development will start at the beginning of 1976. The general plan is for a phased implementation of full networking with protocols compatible with EPSS, allowing the system to function either on the Post Office network or on the existing private lines linking the sites. This will allow the Laboratories to take full advantage of national computer network facilities without excessive dependence on the public network, which at this stage is still experimental.

By end-1976 the connections to the Rutherford Laboratory will be as shown in Figure 5.4.

The GEC 4080 was bought in 1974 to replace the old DDP224 computer (installed ten years ago and the only one of its type still working in Europe). The 4080 is a powerful minicomputer with processing power of approximately one Atlas unit for FORTRAN programs, and will enable interactive graphics programs to be removed from the central computer. This is important because two standard graphics programs (for magnet design and rescue of bubble chamber events) occupy valuable main memory space during their operation.

The GEC 4080 configuration at the end of 1975 included 128K of core, a card reader, 200 lines/min Tally printer and a Tektronix 4014 storage display all added during the year, and one magnetic tape unit temporarily attached. A high-speed refresh display with light pen driven by an Interdata 7/16 minicomputer is being used for rescue of failed bubble chamber events, initially as a direct replacement for the old IDI display driven by the DDP224.

There have been some problems with power supplies, magnetic tape drives and disc units, including one severe head crash necessitating replacement of an entire unit, but the hardware has been generally reliable.

At the start of the year the GEC DOS 2.0 operating system was in use. This was upgraded to DOS 2.1 in February and to DOS 2.2, the current version of DOS, in August. Under DOS many tasks require use of the main console, which effectively restricts the system to one, or possibly two, users at a time. A multi-access system, allowing several users simultaneous access, has been developed at Warwick University and was incorporated into the standard system in September. Apart from the console there are now five terminals in operation, including one mobile plug-in VDU in another part of the Laboratory.

Software has been written which enables the GEC 4080 to be used as a HASP workstation of the central computer. Thus from any terminal attached to the 4080, jobs may be submitted to the IBM 360/195, files edited there or transferred between the two computers, and output retrieved.

For example, source files of a program may be held on the central computer and, on instructions from a 4080 terminal, transferred to the GEC machine for compilation and execution. Conversely, work is in progress to give access to the 4080 from any terminal attached to the central computer.

The new bubble chamber rescue system based on the Hewlett-Packard 1310A was put into operation in November and 2000 events have already been processed. Slight improvements have been introduced but the system, as seen by the operators, is very similar to that which it replaced and the results are very similar. The input data arrives on magnetic tape, and the output, comprising new master point coordinates, is collected on disc and transferred to tape in large blocks for subsequent processing on the central computer.

The standard graphics program package GINO-F (Version 1.8), developed at the Computer-Aided Design Centre in Cambridge, was installed on the GEC 4080 in the summer and is being used in the magnet design program GFUN. The design process falls into three stages: setting up magnet parameters and making preliminary small calculations; calculating magnetic fields and other variables; and display and analysis of results. The first and third stages are highly interactive and will be carried out on the GEC 4080 (most of the first has already been implemented). The second stage requires the computing power of the IBM 360/195, so it will be submitted as a batch job, with priority turnround if necessary.

Apart from the simple point-plotting display for bubble chamber work already mentioned, a much more versatile refresh display with hardware coordinate transformations (rotation in two and three dimensions, translation and scaling) has been constructed. It uses another Hewlett-Packard 1310A unit, showing up to 40,000 points without flicker, and may be attached to the GEC 4080 via the Interdata 7/16 when no bubble chamber work is in progress, and files stored in the 4080 may then be displayed. The present version of the GINO package does not support refresh displays, but version 2.0 does and is due for release early in 1976. Further developments of the display planned for 1976 include hardware vectors, cursors and a light pen.
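The coordinate transformations that the refresh display performs in hardware (rotation, translation and scaling) can be illustrated in software. The Python sketch below is only an analogy in two dimensions using homogeneous 3x3 matrices; it says nothing about how the display hardware is built, and all function names are chosen for this example.

```python
import math


def matmul(a, b):
    """Multiply two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def rotation(theta):
    """Anticlockwise rotation about the origin by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def translation(tx, ty):
    return [[1.0, 0.0, tx], [0.0, 1.0, ty], [0.0, 0.0, 1.0]]


def scaling(sx, sy):
    return [[sx, 0.0, 0.0], [0.0, sy, 0.0], [0.0, 0.0, 1.0]]


def apply(m, point):
    """Apply a homogeneous 2D transform to an (x, y) point."""
    x, y = point
    return (m[0][0] * x + m[0][1] * y + m[0][2],
            m[1][0] * x + m[1][1] * y + m[1][2])


# Scale by 2, rotate 90 degrees anticlockwise, then translate by (10, 0).
# The rightmost matrix is applied to the point first.
transform = matmul(translation(10, 0),
                   matmul(rotation(math.pi / 2), scaling(2, 2)))
x, y = apply(transform, (1.0, 0.0))
print(round(x, 6), round(y, 6))
```

Combining the three operations into one matrix means each displayed point needs a single transform per refresh, which is the kind of repetitive arithmetic that suits dedicated hardware.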