Under recent research initiatives, European scientists have made considerable advances in user interface design. We review seven illustrative projects (see Appendix). Both basic and pre-competitive research projects have yielded significant findings, and we can characterize the major strands of these advances as follows:

The majority of this article addresses the last two of these strands. But first, let's look at progress in the first three, as illustrated by one project of the European Strategic Programme for Research in Information Technology (ESPRIT) that ran from 1984 to 1989: Human Factors Laboratories in Information Technologies (project 385).

The Human Factors Laboratories in Information Technologies (Hufit) project was the flagship project for ergonomics and human factors in ESPRIT I. With the general objective of putting companies in a position to produce world-class products, Hufit focused on ways of closely matching products to users and their goals. Such products should, of course, be economical, incorporating effective and flexible user interfaces developed through user-centered design and supported by development tools.

To achieve this objective, the project was divided into three action lines. The first action line, entitled From Conception to Use, embodied human factors psychology in task analysis techniques used to specify the design. Researchers who followed this action line developed design aids that incorporated computing human factors design guidelines: the Intuit computer-aided systems engineering tool, the Computer Human Factors online database, and the Hufit Toolset for use in (user-centered) product design. They also investigated usability assessment techniques and the structure and role of usability laboratories in evaluating user interfaces. More focused research (1) suggested alternatives to the methods of presenting design guidance advocated in Hufit. These alternatives overcome some of the limitations of the Hufit methods, but they have not yet been developed into tools for the wider community. Research under the first action line also addressed flexible, adaptable interfaces and a management support system for low-technology environments.

The second action line developed interface technologies such as direct manipulation, speech I/O, command languages, and keyboard designs for European languages other than English. This line produced two pre-competitive products: the Maitre development environment for multimedia interfaces (integrating speech, command languages, and so on), and the Diamant object-oriented tool for building prototypes and applications.

The third line focused on the transfer of human factors technology from the research base into development companies without human factors skills. This line included seminars, workshops, exhibitions, consultancy, and publication.

The context of the project can be illustrated by the Hufit Toolset (and by Intuit to some extent):

These tools aimed primarily to enable designers to adopt a user-centered design approach. The project increased human factors contact within design groups and raised managers' awareness of the benefits of human factors in products. Evaluation laboratories were established during the project, not only among the partners themselves but also more generally among European developers. Human factors activity increased during the project, as did demand from companies for more human factors input, especially within design teams.

While researchers in the Hufit project worked on methods of presenting interface guidance to developers, the technologies and techniques available for user interface design progressed in parallel. (The technology underlying this rise in human factors awareness was mostly that of personal computer screens with menus, not the rich forms of interaction required for interactive graphics.) Graphics systems that can deliver manipulable 3D displays are no longer so expensive that they are limited to specialized tasks such as animating television commercials and corporate logos. Computer hardware has become so miniaturized that portable machines now have the power to perform complex tasks previously limited to workstations. We no longer need to connect portable machines to hotel telephones to communicate; instead, we can use cellular telephone technology to communicate while on the move. AI systems have developed to the point that user modeling and functional natural language interfaces are no longer stand-alone research systems but can be incorporated into intelligent, cooperative user interfaces. Together, these developments allow graphics and other media to be combined into single, affordable multimedia systems for performing complex tasks.

Much current user interface research addresses the fourth and fifth strands listed earlier. Researchers now incorporate technological advances into systems that can perform more complex tasks. In these systems, the interface matches the user's task and offers a rich interaction style.

An example of this change in style appeared in a project under the Community Action Programme for Education and Training for Technology (Comett I) to develop a course that teaches human interface design through human-computer interfaces themselves. Buhmann, Gunther, and Koberle (2) gave an overview of the project, which had two parts. The first part used mainly PC technology to provide a slide show accompanying a lecture (as described by Messina et al. (3)). The second part used a windows, icons, menus, and pointing devices (WIMP) interface within a task constrained both by the technology and by the users' intention to learn.

Researchers designed this latter course to introduce human factors issues in user interface design, with particular emphasis on current technical workstations. In the current version of the course, participants are expected to have some familiarity with computers, and each attendee has access to a machine throughout the course. Researchers emphasized hands-on use of the machine and individual experimentation, and used newer instructional techniques to support this emphasis.

The newer instructional techniques are based on dedicating a high-power technical workstation to each course participant. These machines offer a highly interactive, multiprocess, windowed working environment, and the participant interacts with a pointing device (mouse) rather than a keyboard. Although this style of working may currently be viewed as applicable only to a small range of users, mainly in engineering and science, this type of environment will increasingly become the norm for business and home computing systems in the near future; several financial organizations are currently making the transition from a standard PC to such an environment. A more static presentation style (suitable for the design aspects of conventional "glass teletype" interfaces) does not take full advantage of the highly interactive, direct-manipulation style of these powerful graphics workstations. Some design aspects that can be shown statically are still common across a variety of systems (such as font size and spacing for particular tasks), but the ability to set up comparisons interactively increases participation in the course and reinforces specific design points.

Instructors still use traditional methods in the current version of the course: an overhead projector (with copies of the overheads and additional material such as course notes provided), lectures with some tutorial discussion, and selected videotapes to illustrate salient points. However, the course also incorporates significant dynamic and novel teachware features in a context that permits researchers to evaluate the effectiveness of these newer techniques.

In line with this dynamic approach, researchers provided the following teachware techniques for the course:

Although considerations of time and effort precluded it from the initial phase of this project, a likely future extension will package the course overheads, tutor's notes, and possibly extracts from original sources and appropriate software modules into a hypertext document suitable for self-paced, remote learning. This document will require some additional guidelines to lead participants through the experimentation the course encourages.

The Comett I project illustrates the application of WIMP technology to provide an enhanced style of interaction and closer match between the interface and the user's intentions. This technology is established in the general research community. But the fifth strand of human interface research involves devices not yet established in mainstream use and shows how these can be matched to tasks and integrated into systems.

The ESPRIT II Euroworkstation (EWS) project is an example of a European attempt to enhance the productivity of engineers and scientists by producing a multiprocessor, modular-architecture, high-performance scientific workstation. (Robinson et al. describe the Euroworkstation system more fully.) Among other goals, the EWS project aims to improve visualization techniques. Developers realized that simply increasing raw power, by enhancing basic workstation performance, adding coprocessor boards for circuit simulation and symbolic (AI) processing, and providing special-purpose graphics hardware, would not in itself improve productivity. Consequently, they emphasized improving user-system interaction in two major ways.

Let's concentrate on improving interaction techniques. One major research area for human-computer interaction and visualization is the development of effective techniques for displaying, and interacting with, 3D images. In a quasi-3D environment, we can overcome the limitations of a 2D pointing device such as the mouse by using on-screen sliders (PC flight simulation games give good examples of these techniques). However, this approach tends to limit the naturalness of the interaction, especially when the system is producing high-quality moving images. We can extend the parameter space of the mouse by adding potentiometers, using a 3D joystick, or taking advantage of the extra dimensions offered by devices such as the Space-Ball. These devices effectively control simple rotation of 3D objects but can cause problems for compound rotations and more complex interactions.
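One reason compound rotations are harder to control than single-axis ones is that 3D rotations do not commute: the result depends on the order in which they are applied. The short Python sketch below (standard library only, right-handed coordinates, angles in degrees; the function names are our own and do not come from any EWS software) rotates the same point by 90 degrees about x and about y in both orders.

```python
import math

Matrix = list[list[float]]
Vector = list[float]


def rot_x(deg: float) -> Matrix:
    """Right-handed rotation about the x axis by `deg` degrees."""
    t = math.radians(deg)
    c, s = math.cos(t), math.sin(t)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_y(deg: float) -> Matrix:
    """Right-handed rotation about the y axis by `deg` degrees."""
    t = math.radians(deg)
    c, s = math.cos(t), math.sin(t)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """3x3 matrix product a @ b, i.e. apply b first, then a."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def apply(m: Matrix, v: Vector) -> Vector:
    """Transform the column vector v by the matrix m."""
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]


def tidy(v: Vector) -> Vector:
    """Round away floating-point noise; adding 0.0 turns -0.0 into 0.0."""
    return [round(c, 6) + 0.0 for c in v]


p = [0.0, 0.0, 1.0]
# Rotate about x first, then about y ...
x_then_y = tidy(apply(matmul(rot_y(90), rot_x(90)), p))
# ... versus rotating about y first, then about x.
y_then_x = tidy(apply(matmul(rot_x(90), rot_y(90)), p))

print(x_then_y, y_then_x)
```

The two orders leave the point in different places, (0, -1, 0) versus (1, 0, 0). A device that maps each axis to a separate control leaves the user to track this order dependence mentally, which is one plausible source of the difficulty with compound rotations.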

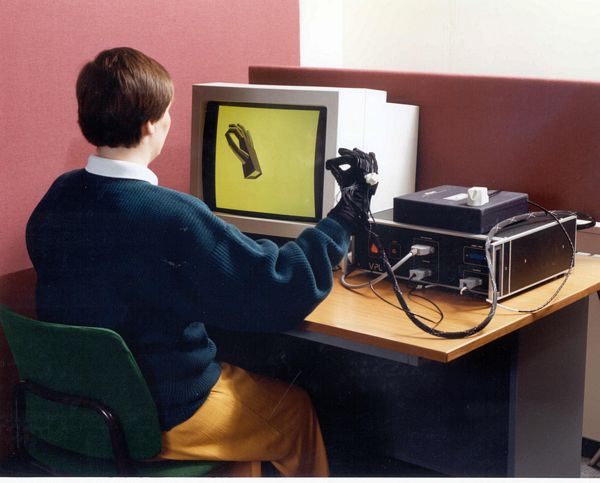

One device under investigation by the EWS project is VPL Research's Dataglove (4), shown in Figure 1. It is basically a neoprene fabric glove with a Polhemus cube mounted above the wrist to give the hand's position in 3-space (after calibration). Additional fiber optic sensors register the positions of the fingers and thumb. In the United States the device is used principally for remote manipulation, robotics control, games, and the experimental area of virtual reality. There is also interest in the device in Japan, especially in the animatronics industry.

Researchers have developed a basic repertoire of commands for the Dataglove. A user can grasp and manipulate an on-screen object with the glove in a way analogous to everyday manual manipulation. Work is still needed to extend the repertoire of gestures beyond this simple mapping, for example to control window activity, dismiss processes from the screen, and walk through an image or a file store. (The international dimensions of gesture present an interesting challenge: one group's complimentary gesture might be a deadly insult to another.)

In this context, a major user interface issue is feedback to the user. Currently, a wireframe outline of a hand appears on the screen along with the other elements of the current display. This creates a cognitive mapping problem similar to (although more complex than) that of coordinating mouse movement with on-screen cursor activity. The Dataglove used by the EWS project provides no proprioceptive feedback. However, sound can give the user additional cues, for example when an object has been successfully grasped.

Researchers in the US have informally studied head-mounted displays as a way to enhance the realism of the computer-generated image and improve Dataglove interaction (4). Such displays would not be practical within the envisaged working context of a Euroworkstation. Furthermore, the implications of longer-term use of devices such as the Dataglove (fatigue effects, recalibration during a session) remain to be explored. Whereas most scientists and engineers accept such human-computer interaction and visualization as a simple extension of their current working environment, in the business sector the everyday use of graphics and direct manipulation techniques is only starting to take hold.

Another ESPRIT II project, the Elusive Office, attempts to introduce graphics and direct manipulation into a range of application areas, not only in the fixed office environment but also in truly portable, personal systems that use cellular telephone techniques for communication (see Figure 2).

An example application for the Elusive Office is the work of the traveling salesperson. Typically these individuals work from home, contacting a central or district office on an intermittent basis and conducting their business largely on the customers' premises. This involves setting up a schedule of visits, making the visit perhaps with computer support if quotations need to be calculated, and reporting the results of the visits to a central office. As well as using the computer to produce letters, make appointments, and prepare quotes, the salesperson makes frequent telephone calls to customers, central office staff, and domain experts. Additionally, a telephone answering machine is present at the home base.

The portable part of the system (which may include a small printer and scanner unit) must be relatively unobtrusive to gain user (and customer) acceptance. Flat-screen technology imposes constraints on color and screen size, but apart from application differences, the screen will resemble the working environment offered by today's scientific workstations, such as those from Siemens, Matra, or Sun (see Figure 3).

Current battery and hardware technology impose further limitations. However, additional components of this modular system can be made available in the user's vehicle or at the home base.

Note that the developers did not intend the system to solve the Traveling Salesman Problem, although they hope it will alleviate some of the traveling salesperson's problems.

Major effort is going into integrating all Elusive Office applications into a consistent interaction model, including the security and communications aspects of the system. The system must offer transparent communications support for voice telephony, facsimile, electronic mail, interaction with remote systems, and data transfer. Because the applications vary so widely, the interface must be easy to tailor to very different domains, users, and user organizations. The Elusive Office should also let users chunk system objects into complete action sequences. Because of factors such as the nature of the cellular telephone connection, the system must keep the user informed of the likely costs and implications of requested actions, and the user will have the option to alter choices in light of this information.

An interesting issue for an Elusive Office system is which direct manipulation device to use. A conventional laptop does not provide a convenient flat area for a mouse. A device such as the Dataglove is currently rather expensive and may also attract strange looks, even in the first-class cabins of aircraft. Apple chose a rollerball with a click-bar for its new portable system, and Psion placed a touchpad below the screen of its MC-200 and MC-400 machines. Using a stylus directly on a paper-quality touch-sensitive screen seems to offer the most acceptable route for mid-1990s remote office systems.

One novel feature of an Elusive Office system will be the highly integrated Computer Learning System that will support remote users. This will offer a range of assistance, from simple help to complex linked simulations of system objects under control.

Although traveling salespeople are stereotypically individualistic, users in many potential application areas of an Elusive Office system will need to work cooperatively. Face-to-face meetings are difficult to organize and support, especially when participants are based far apart or are mobile, and they are frequently seen as an expensive use of resources. Support is also required between meetings, yet there is currently little information technology support for this kind of collaborative process.

The Elusive Office project is exploring yet another aspect of computers and work. The Musk (Multiuser Sketchpad) program, initially funded under the UK Alvey Program and carried out at the Science and Engineering Research Council (SERC) Rutherford Appleton Laboratory (RAL), runs on standard Unix workstations, such as the Sun, connected by Ethernet. In addition to a facility similar to the Unix talk program, all participants in a Musk session share a common sketch area, and every participant's screen displays the same copy. The sketch area supports simple mouse-sketched graphics, text, and cutting and pasting of bitmaps from elsewhere on the screen. The system also supports selective erasure of the sketch, adding users to the session (if they are currently on the LAN), waking quiescent participants by ringing their workstation bell, and a pointing device that only one user can hold at a time (with that user's ID displayed).

As shown in Figure 4, the bottom of the Musk window contains a talk area into which the session participants can type. Each text line is preceded by the user ID. In the currently released version, a number of function buttons at the top of the sketch area permit free-hand drawing or drawing of lines, circles, and boxes under mouse control. The symbols to the right permit the user to exit from the Musk session and to use an image of a pointing hand to point to items in the sketch area. The pointing hand can only be used by one user at a time in the current implementation (the user ID appears below the hand symbol in the sketch area). With the cut-and-paste facility, the user can paste a bit map from the screen into the sketch area. Another facility rings the terminal bell of other users, a method of calling others on the network into a Musk session. The sketch area shows some examples of drawing, text, and bitmaps that have been pasted into the sketch area. The user named fred is currently pointing toward the clock near the center of the sketch area.
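The session behavior described above (one sketch area replicated on every screen, talk lines tagged with the sender's ID, and a pointing hand that only one user can hold at a time) amounts to a small piece of shared state. The Python sketch below is a hypothetical, centralized model for illustration only; the class and method names are ours, and it makes no claim about how RAL actually implemented Musk over Ethernet.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class SketchSession:
    """Hypothetical model of a Musk-style session; not the RAL implementation."""
    participants: list[str] = field(default_factory=list)
    sketch: list[tuple[str, str]] = field(default_factory=list)  # (author, item)
    talk: list[str] = field(default_factory=list)
    pointer_holder: Optional[str] = None  # user currently holding the pointing hand

    def join(self, user: str) -> None:
        if user not in self.participants:
            self.participants.append(user)

    def draw(self, user: str, item: str) -> None:
        # One shared list stands in for the copy shown on every participant's screen.
        self.sketch.append((user, item))

    def erase(self, index: int) -> None:
        # Selective erasure of a single sketch item.
        del self.sketch[index]

    def say(self, user: str, text: str) -> None:
        # Each talk line is prefixed with the sender's user ID.
        self.talk.append(f"{user}: {text}")

    def take_pointer(self, user: str) -> bool:
        # The pointing hand is exclusive: succeed only if it is free or already ours.
        if self.pointer_holder in (None, user):
            self.pointer_holder = user
            return True
        return False

    def release_pointer(self, user: str) -> None:
        if self.pointer_holder == user:
            self.pointer_holder = None

    def view(self) -> dict:
        # Every participant gets the same view; "pointer" is the holder's user ID.
        return {"sketch": list(self.sketch), "talk": list(self.talk),
                "pointer": self.pointer_holder}


session = SketchSession()
for name in ("fred", "jane"):
    session.join(name)
session.draw("jane", "box")
session.say("fred", "see the clock?")
fred_has_pointer = session.take_pointer("fred")
jane_has_pointer = session.take_pointer("jane")  # refused while fred holds it
```

Keeping a single authoritative copy of the sketch is the simplest way to guarantee that all screens agree; a distributed implementation would instead have to broadcast each operation to every participant.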

The Musk system was first shown publicly at the 1987 Alvey Conference Exhibition (University of Manchester Institute of Science and Technology) in the context of remote cooperative working on the design of a laser experimental setup. Studies at RAL indicated that Musk session participants need a voice channel to interact effectively.

This type of work reminds us of the need for multimedia support on workstations, whether fixed or mobile. The Comett program and the ESPRIT II EWS and Elusive Office projects all emphasize enhancing the user's interaction with the system by improving both the system graphics and the quality of the user interface. An alternative approach to enhancing the style of interaction is to introduce intelligence into the interface, allowing the user to interact in a subset of natural language while the interface adapts to the user. A group of projects is developing interfaces in this category and exploring how command and natural languages interact with graphics-based interaction.

The Zeitgeist of interface design demands the direct manipulation of graphical representations of world objects. Although the direct manipulation paradigm has vociferous advocates, it also has well-documented limitations. For example, direct manipulation is unsatisfactory for complex tasks. Other limitations include

Researchers have attempted to overcome these limitations by introducing mechanisms from conversation that allow repetition and the use of macros. Advanced knowledge-based applications support the complex tasks that prompt most of the problems with direct manipulation.

The standard alternative to direct manipulation is a command language, but such languages give rise to the problems that motivated the development of direct manipulation. Users fail to recall commands or arguments (for example, performing operations on the wrong files). Users fail to meet unspecified preconditions (for example, performing operations on nonexistent files or those with the wrong extension). And users also fail to specify commands completely (for example, omitting option flags to commands).

For database applications, developers have promoted natural language interfaces that include those aspects of human conversation that overcome the specific problems novice database users encounter, namely, the aspects that support the use of approximate and incomplete reference. Novice database users are those unfamiliar with the specific dictionary or syntax of a large command language, or who are easily overwhelmed by a vast array of menus or icons.

Some commercially available English natural language products interface to databases, translating English queries (such as "Who makes more than his manager?") into query language statements that access the database and produce responses. To do this, the products must understand such complex structures as ellipsis, pronoun references, anaphora, relative clauses, and ambiguous or poorly specified references. They must also use an internal semantic representation language to handle ambiguity, synonyms, and some commonsense reasoning. But how closely should computers approximate conventional human language interaction? R.S. Nickerson (5) considered but did not resolve this question:

An assumption that is not made, however, is that to be maximally effective, systems must permit interactions between people and computers that resemble inter-person conversations in all respects.... The question of what specific aspects of interperson conversations person-computer interactions should have is an open one; and the answer may differ from system to system and application to application.
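To make the earlier database example concrete: the question "Who makes more than his manager?" must end up as a query in which "who" and "his" refer to the same employee record, which a relational query language expresses as a self-join. The Python sketch below uses the standard `sqlite3` module; the `employee` table, its columns, and its rows are invented for illustration, and SQL merely stands in for whatever query language a given product targeted.

```python
import sqlite3

# Hypothetical schema and data, invented for this illustration.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE employee (
    id         INTEGER PRIMARY KEY,
    name       TEXT NOT NULL,
    salary     INTEGER NOT NULL,
    manager_id INTEGER REFERENCES employee(id)
);
INSERT INTO employee VALUES
    (1, 'Ada',  90000, NULL),
    (2, 'Bert', 95000, 1),
    (3, 'Cora', 70000, 1),
    (4, 'Dirk', 75000, 3);
""")

# "Who makes more than his manager?"
# "who" binds e; "his manager" is the row m reached through e.manager_id.
QUERY = """
SELECT e.name
FROM employee AS e
JOIN employee AS m ON e.manager_id = m.id
WHERE e.salary > m.salary
ORDER BY e.name;
"""

rows = [name for (name,) in conn.execute(QUERY)]
conn.close()
print(rows)
```

The pronoun "his" is what turns a simple filter into a join of the table with itself; resolving it correctly is exactly the kind of reference handling the products described above must perform.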

First-generation expert systems allowed users to interact only through a sequence of system questions, which the user could either answer or respond to with requests for a limited range of explanations, such as "Why do you want to know that answer?" or "What does that term mean?" Advanced knowledge-based applications try to support cooperative dialogues in which users can change the topic or ask a wide range of questions beyond this limited regime. For novice users, such systems raise some of the same problems as databases, so natural language interfaces might provide a solution. However, the richer dialogues that natural language interfaces support require more aspects of conversation, such as rules for taking control of the dialogue or changing the topic.

In many domains where such advanced knowledge-based systems are applied, natural language is not satisfactory as the sole interface mode. For advanced users, a command language may still be more efficient. For all users, some information may be more effectively conveyed graphically. For example, for design problems we usually need to view a graphical representation of the design. When the system uses both graphical presentation with direct manipulation and natural language in the interface, mechanisms must be introduced to allow them to interact and support cooperative dialogue.

Three major European research projects are trying to develop intelligent interfaces to knowledge-based systems (KBS). These intelligent interfaces will go beyond the rigid interviewing procedure common to KBS interfaces and support cooperative dialogues using natural language and graphics. The Xtra system (6), developed by the University of Saarbrucken's Department of Computer Science, uses German. The Construction and Interrogation of Knowledge Bases Using Natural Language Text and Graphics project (Acord) (7) uses English, French, and German. The Multi-Modal Interface for Man-Machine Interaction (MMI2) uses English, French, and Spanish, as well as a command language and mouse-drawn gestures (in the style of Wolf and Morrel-Samuels (8)). When alternative natural languages are available, the systems use them independently, making no deliberate attempt at machine translation. Let's examine the approach these projects take by focusing on the multimodal interface project, with reference to the other two. (Taylor, Neel, and Bouwhuis (9) have recently summarized other projects in this area.)

These three projects currently focus on the structure of multimodal dialogue to support cooperative communication rather than on the most advanced interface technologies. The graphics in these systems are poor compared with those in the Euroworkstation project mentioned earlier. Users point with a mouse rather than a Dataglove and type natural language at the keyboard rather than speaking it. The modular architectures of these systems will allow developers to add these technologies later, once they have resolved the complexities of multimodal cooperative dialogue.

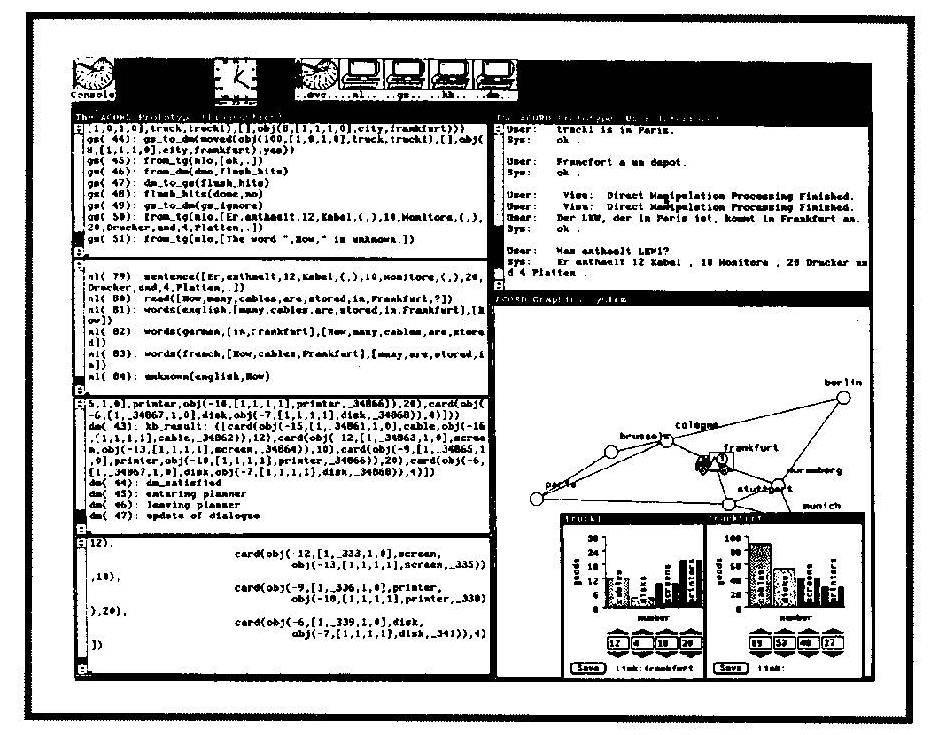

Each project has chosen a demonstration application domain rich enough to require cooperative multimodal interaction. In the Acord project, the domain is storing and transporting goods around Europe (see Figure 5). The user might look at a displayed map of Europe, point to a node labeled "Stuttgart," and ask "How much storage is available there?" The system responds with a bar chart showing the amount of storage available for goods. The user can manipulate the bar chart to reflect a planned movement of 500 hectolitres of wine to Stuttgart, which in turn updates the system's knowledge base.

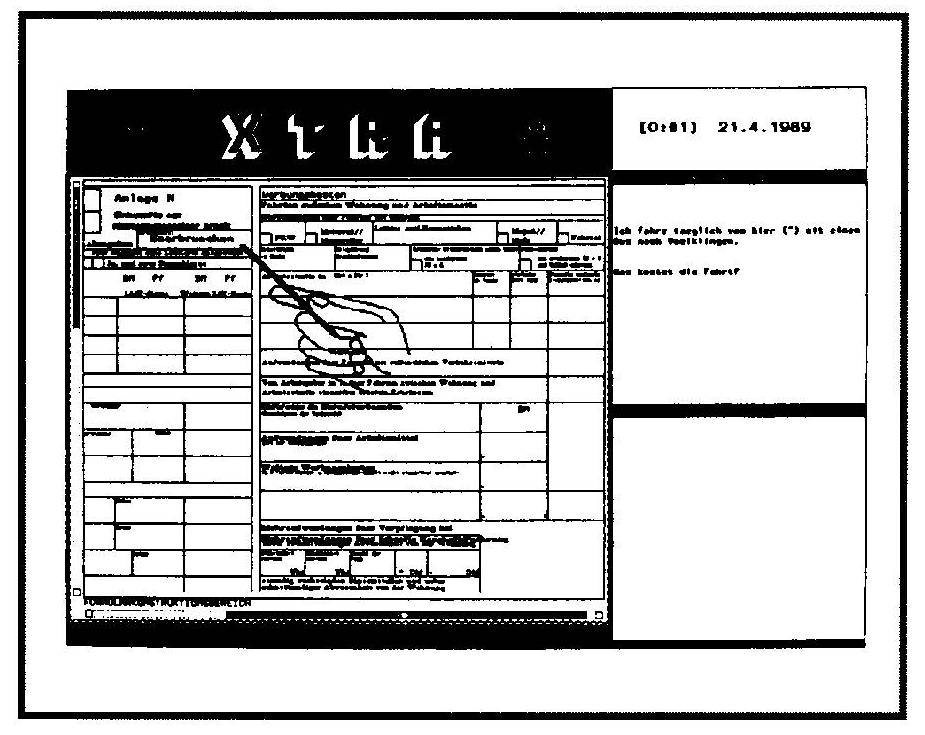

In the Xtra system, the domain is one of filling out a German annual tax withholding adjustment form (see Figure 6). The user can refer to regions, labels, and entries in the form by pointing at them or by asking for them in natural language. The user then enters data in the appropriate spaces.

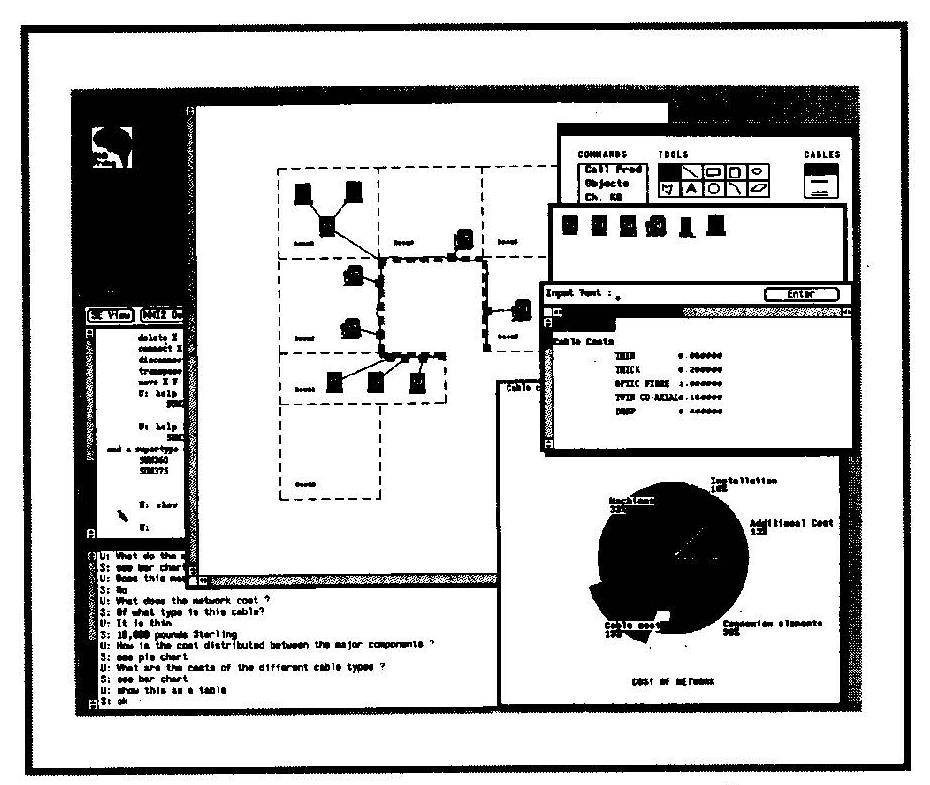

In the MMI2 system, the domain is that of designing a computer network for a building or site. Users can input the building structure diagrammatically, locate specific items of computer equipment on the plan by manipulating icons, and specify requirements for network load and usage in natural language. They can also ask the system to complete the design (which will be presented graphically), inquire about the design and have the answers presented as charts or tables, or inquire about the reasoning process behind the design and receive natural language replies tailored to the system's model of their knowledge and goals (see Figure 7). All these applications require a tight link between the graphical and textual interactions, which must be mapped onto an underlying KBS that reasons over them to produce replies and explanations.

Each system links graphical and textual interactions through a semantic representation language, as natural language database access systems do, but that language covers graphical interaction as well as the semantics required to support natural language. In the Acord system, the semantic representation language is a sorted logic called Inl. The Xtra system uses SB-One, a logic-based representation language derived from KL-One. MMI2 uses a typed first-order logic called the Common Meaning Representation (CMR). The CMR encodes the interactions between the various components of the architecture (which handle the gesture, graphics, command language, and natural language interaction modes) and the components of the dialogue management system (see Figure 8). For example, if a user selects the icon for a computer in a network diagram, the graphics mode translates this act into CMR and passes it to the dialogue controller. Likewise, when a user types an English command, the English mode translates it into CMR and passes it to the dialogue controller.
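The key design idea is that every input mode emits the same kind of object, so the dialogue controller does not need to know whether a reference came from a mouse click or a typed sentence. The Python sketch below illustrates that idea with a deliberately toy stand-in for CMR (a communication force plus a flat tuple of terms) and a one-pattern "English mode". The names `CmrPacket`, `graphics_select`, and `english_input` are hypothetical; real CMR is a typed first-order logic far richer than this.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class CmrPacket:
    """Toy stand-in for a CMR packet: source mode, force, and content."""
    mode: str                  # input mode that produced the packet
    force: str                 # communication force, e.g. "refer" or "question"
    content: tuple[str, ...]   # flat placeholder for a logical formula


def graphics_select(icon_id: str, icon_type: str) -> CmrPacket:
    # Selecting an icon in the network diagram becomes a reference to that object.
    return CmrPacket("graphics", "refer", ("object", icon_id, icon_type))


_COST_QUESTION = re.compile(r"what is the cost of (\w+)\??$", re.IGNORECASE)


def english_input(sentence: str) -> Optional[CmrPacket]:
    # A one-pattern "parser" standing in for a real natural language mode.
    match = _COST_QUESTION.match(sentence.strip())
    if match is None:
        return None  # a real mode would attempt a full parse or ask for clarification
    return CmrPacket("english", "question", ("cost", match.group(1), "?"))


click = graphics_select("pc3", "computer")
typed = english_input("What is the cost of pc3?")
print(click)
print(typed)
```

Because both functions return the same packet type, adding a new mode (speech, say) means writing one more translator into CMR rather than changing the dialogue controller.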

The dialogue controller in the MMI2 architecture receives packets of CMR from the input modes and must decide what to do with them by matching the communication force on the packet with a communication plan, then executing the plan. For example, the communication force may state that the CMR represents a direct question from the user, and the CMR may state that the unknown value is the cost of a machine. Such a packet would be matched to a plan asking the knowledge base direct questions. This would be executed by sending the packet to the domain expert, which would in turn call the knowledge base, passing the reply back to the dialogue controller. The domain expert would translate the CMR packet into a query to the knowledge base in its own language and translate the reply back into CMR. This application-specific translation process would have to be specified for each new knowledge base. For more complex examples, the knowledge base may require further information before producing an answer, so a request would be sent as a CMR packet to the dialogue controller. The dialogue controller then establishes the content of an output packet and determines which mode can most effectively and efficiently present it to the user. The individual mode will then translate the CMR packet into graphics or natural language and display it.
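The control flow in the paragraph above (match a packet's communication force to a plan, execute the plan, and let a domain expert translate between CMR and the knowledge base's own query form) can be sketched as follows. This is a hypothetical Python illustration, not MMI2 code: the packet format, the dictionary standing in for the knowledge base, and the class names are assumptions, and output mode selection is left out.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Packet:
    force: str                # communication force, e.g. "question"
    content: tuple[str, ...]  # toy placeholder for a CMR formula


class DomainExpert:
    """Hypothetical domain expert: translates CMR into KB queries and back."""

    def __init__(self, kb: dict[tuple[str, str], int]) -> None:
        self.kb = kb  # stands in for the application knowledge base

    def ask(self, packet: Packet) -> Packet:
        attribute, entity = packet.content[:2]
        # Application-specific translation: CMR content -> KB lookup key.
        value = self.kb.get((attribute, entity))
        if value is None:
            # The KB cannot answer yet, so request more information.
            return Packet("request", (attribute, entity))
        # Translate the KB reply back into a CMR-style packet.
        return Packet("answer", (attribute, entity, str(value)))


class DialogueController:
    """Matches each packet's communication force to a plan and executes it."""

    def __init__(self, domain: DomainExpert) -> None:
        self.plans: dict[str, Callable[[Packet], Packet]] = {
            "question": domain.ask,  # plan: put direct questions to the KB
        }

    def handle(self, packet: Packet) -> Packet:
        plan = self.plans.get(packet.force)
        if plan is None:
            return Packet("inform", ("no-plan-for", packet.force))
        return plan(packet)


controller = DialogueController(DomainExpert({("cost", "pc3"): 1200}))
known = controller.handle(Packet("question", ("cost", "pc3", "?")))
unknown = controller.handle(Packet("question", ("cost", "router1", "?")))
print(known, unknown)
```

Only `DomainExpert.ask` knows the knowledge base's format, which mirrors the point that the translation must be respecified for each new knowledge base while the controller stays unchanged.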

The other experts in this modular interface architecture represent the bodies of knowledge that can serve as referents in a cooperative dialogue. Through its interaction with the KBS, the domain expert represents knowledge of the problem domain in general and of the application knowledge base's capacity to reason over that domain.

The context expert represents the current dialogue in which the system and user are involved, allowing them to refer back to it. It also provides the context that the natural language experts require to resolve ambiguities in the user's input.

The interface expert describes the capabilities of the interface itself to conduct cooperative dialogues and present information graphically. The interface expert also describes the limits on the natural language processing in conducting uncooperative dialogues.

The user modeling expert represents information about the user of the system in terms of the user's preferences for graphical components in presentation, knowledge of the domain, goals in the dialogue, and some domain-relevant information.

The semantic expert represents the semantics of terms that can be used in the CMR and acts as a model of the world that the lexica of the natural language modes map onto.

The dialogue controller calls on each of these bodies of knowledge when it maps CMR input onto a communication plan to control the dialogue and when it constructs the content of output for the user. For example, if the user modeling expert states that a user knows about a topic, the dialogue controller will omit descriptions of it from any output; if the user modeling expert states that the user does not know about the topic, the system will explain it in its output when required. The dialogue itself can have a complex structure: either the system or the user may shift the topic, and complex subdialogues may be needed to clarify or resolve specific issues, all of which the dialogue controller must manage.
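The user-model example in the paragraph above can be sketched in a few lines: the reply always carries the answer, and an explanation of a topic is appended only when the user model says the user does not know it. Everything here is a hypothetical Python illustration; the `UserModel` class, the `EXPLANATIONS` table, and the sample answer are invented, and a real user modeling expert records far more (preferences, goals, domain knowledge).

```python
from dataclasses import dataclass, field


@dataclass
class UserModel:
    """Hypothetical user model: just the set of topics the user already knows."""
    known_topics: set[str] = field(default_factory=set)

    def knows(self, topic: str) -> bool:
        return topic in self.known_topics


# Hypothetical explanations the system could append when needed.
EXPLANATIONS = {
    "bridge": "A bridge connects two network segments.",
    "repeater": "A repeater regenerates the signal on a cable run.",
}


def compose_reply(answer: str, topics: list[str], user: UserModel) -> str:
    """Append an explanation only for topics the user model marks as unknown."""
    parts = [answer]
    for topic in topics:
        if not user.knows(topic) and topic in EXPLANATIONS:
            parts.append(EXPLANATIONS[topic])
    return " ".join(parts)


expert = UserModel({"bridge", "repeater"})
novice = UserModel()
answer = "Add a bridge between floors 2 and 3."
expert_reply = compose_reply(answer, ["bridge"], expert)
novice_reply = compose_reply(answer, ["bridge"], novice)
print(expert_reply)
print(novice_reply)
```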

The Xtra and Acord systems do not share all of these components. Both have a dialogue management component, both must define the semantics of their internal language, and both must represent the context of the dialogue. The Acord system does not include a user model, but the one in the Xtra system is very similar to that used in MMI2.

The requirements for the MMI2 system and the knowledge in the user model and application KBS were acquired by using the Wizard of Oz simulation technique, a popular way of assessing potential use of an unbuilt system (see Green and Wei-Haas (10)). In a Wizard of Oz simulation, unknown to users, their terminals are connected to experts hidden in separate rooms. These experts act as the program. They type, speak, or draw the responses for the "functioning" program. For the MMI2 system, the terminals communicated using Musk so that both textual and graphic communication were available between user and simulated program. Researchers recorded these communications to provide data for task analysis and knowledge acquisition.

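Interface developers addressed five major forms of interaction between natural language and graphic objects and actions as a basis for integrating graphics and natural language: ostensive deixis (pointing at an object while referring to it); nonostensive deixis (referring to something visible on the screen without pointing at it); definite descriptions of domain objects by their graphical features; definite descriptions involving spatial relationships in the graphical display; and the graphical representation of indeterminacies in natural language descriptions.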

The processes used to resolve reference in natural language ultimately resolve each of these forms of reference. These projects address the issues of what to pass to the natural language component.

Conventionally, in a graphics interface, resolving ostensive deixis is easy because the selected object is uniquely identified. However, the Xtra project, which aims to let users behave as naturally as possible, has shown that users often point beside the intended referent so as not to obscure it. Also in the interest of naturalness, Xtra offers four granularities of pointing device, so that users can select the appropriate pointing device and then the referent, rather than selecting the appropriate handle on part of a nested object.

Despite the different granularities of pointing device in the Xtra project, uncertainty remains about the intended referent. It is therefore necessary to derive a measure of pointing plausibility, expressing the extent to which a gesture indicates a region, from the degree to which the gesture covers that region. The system collects the regions that have been assigned plausibility values into a list of referential candidates, which is then passed to other processes, such as those that identify linguistic referents.
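A plausibility measure of this kind can be sketched with rectangular regions. The specific formula here, the fraction of each region's area covered by the gesture, is an assumption made for illustration rather than Xtra's published measure, and the names Rect and referential_candidates are hypothetical. A larger gesture rectangle stands in for a coarser pointing device.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    x0: float
    y0: float
    x1: float
    y1: float

    def area(self):
        # Negative extents (no overlap) are clamped to zero.
        return max(0.0, self.x1 - self.x0) * max(0.0, self.y1 - self.y0)

    def intersection(self, other):
        return Rect(max(self.x0, other.x0), max(self.y0, other.y0),
                    min(self.x1, other.x1), min(self.y1, other.y1))


def referential_candidates(gesture, regions):
    """Return (region name, plausibility) pairs, most plausible first.

    Assumed measure: the fraction of each region's area that the
    gesture covers. Regions the gesture does not touch are dropped.
    """
    candidates = []
    for name, region in regions.items():
        covered = gesture.intersection(region).area()
        if covered > 0 and region.area() > 0:
            candidates.append((name, covered / region.area()))
    return sorted(candidates, key=lambda c: c[1], reverse=True)


# Two stacked form fields; a finger-sized gesture straddles their boundary.
regions = {"field_A": Rect(0, 0, 10, 2), "field_B": Rect(0, 2, 10, 4)}
finger = Rect(2, 1.5, 4, 3.5)
print(referential_candidates(finger, regions))
```

The result is a ranked list rather than a single choice, which is what allows later linguistic processing to overrule the most plausible region when the accompanying words point elsewhere.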

The MMI2 system resolves nonostensive deixis by having the graphics expert constantly update a list of the possible discourse referents, in CMR terms, that are visible on the screen. The context expert uses this list to determine whether a textual referent should be chosen from its own list of previously mentioned textual referents (anaphoric referents) or from the current list of possible nonostensive deictic referents.
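The division of labor between the two lists might look like the following sketch. The text does not say how the context expert chooses between them, so the policy below (demonstratives such as "that" prefer a unique on-screen referent; other descriptions prefer the most recent mention) is a hypothetical heuristic, and all class and method names are invented for illustration.

```python
from dataclasses import dataclass

DEMONSTRATIVES = {"this", "that", "these", "those"}


@dataclass
class Referent:
    ident: str
    kind: str  # e.g. "workstation", "server"


class ContextExpert:
    def __init__(self):
        self.mentioned = []  # anaphoric referents, oldest first
        self.visible = []    # nonostensive referents, set by the graphics expert

    def update_visible(self, referents):
        # Called by the (hypothetical) graphics expert when the display changes.
        self.visible = list(referents)

    def note_mention(self, referent):
        self.mentioned.append(referent)

    def resolve(self, determiner, kind):
        """Hypothetical policy for choosing between the two referent lists."""
        on_screen = [r for r in self.visible if r.kind == kind]
        in_history = [r for r in reversed(self.mentioned) if r.kind == kind]
        if determiner in DEMONSTRATIVES and len(on_screen) == 1:
            return on_screen[0]
        if in_history:
            return in_history[0]  # most recently mentioned
        if len(on_screen) == 1:
            return on_screen[0]
        return None  # unresolved: the dialogue controller could ask the user


ctx = ContextExpert()
ctx.update_visible([Referent("ws1", "workstation"), Referent("srv1", "server")])
ctx.note_mention(Referent("ws2", "workstation"))
print(ctx.resolve("that", "workstation"), ctx.resolve("the", "workstation"))
```

Returning None rather than guessing leaves room for the clarification subdialogues that the dialogue controller already manages.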

Resolving referents from definite descriptions of domain objects by their graphical features requires that the graphical features of objects, as well as their real-world features, be available to the natural language referent resolution mechanism. For example, in a discourse about the performance of a local area network displayed diagrammatically, one workstation appears as a purple icon. A user intending to remove this machine from the network can type any of the following:

All of these use natural language descriptions of the intended item. The first uses a domain description, and the third uses a graphic description. The second is ambiguous as to whether the actual workstation is purple or the icon representing it is purple. All require processes that identify linguistic referents, but the last two also require that those processes have access to descriptions of the graphical representation of the domain objects.
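Giving the resolver access to both kinds of description can be sketched by storing each displayed object's domain features and icon features separately and reporting which layer a description matched. The class, the feature names, and the rule that the noun "icon" refers only to the graphical layer are hypothetical simplifications.

```python
from dataclasses import dataclass, field


@dataclass
class DisplayedObject:
    name: str
    domain: dict = field(default_factory=dict)   # real-world features
    graphic: dict = field(default_factory=dict)  # features of its on-screen icon


def interpretations(adjective, head_noun, objects):
    """Return (object name, layer) pairs that fit 'the <adjective> <head_noun>'.

    Hypothetical rule: the noun 'icon' refers to the graphical layer only;
    a domain noun such as 'workstation' lets the adjective describe either
    the domain object or its icon.
    """
    results = []
    for obj in objects:
        if head_noun == "icon":
            layers = ["graphic"]
        elif obj.domain.get("type") == head_noun:
            layers = ["domain", "graphic"]
        else:
            continue
        for layer in layers:
            if adjective in getattr(obj, layer).values():
                results.append((obj.name, layer))
    return results


objects = [
    DisplayedObject("ws1", {"type": "workstation", "colour": "grey"},
                    {"colour": "purple"}),
    DisplayedObject("ws2", {"type": "workstation", "colour": "purple"},
                    {"colour": "green"}),
]
print(interpretations("purple", "workstation", objects))
print(interpretations("purple", "icon", objects))
```

With these two objects, "the purple workstation" yields two readings, one graphical and one domain-based, which is exactly the ambiguity the text describes, while "the purple icon" yields one.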

The fourth problem extends this one. It occurs when a definite reference is made not merely in terms of the graphical description of the object but also in terms of spatial relationships in the graphical display. In the example of a displayed network, the user could ask to remove the top workstation or the left workstation. In both cases the spatial relation is ambiguous: does it hold in the domain or in the graphical representation? For example, the top workstation could be the one on the uppermost floor of the building or the one at the upper edge of the displayed diagram. To resolve this ambiguity, the processes that identify linguistic referents must consider the context of the utterance or ask the user to clarify. However, to recognize the ambiguity at all, these processes must have access to a representation of the spatial geometry of the domain and of the user's perception of the machine representation. The MMI2 project intends to address both of these issues, although no resolution is currently available. Both problems could be overcome if users identified referents by selecting their graphical representations with the mouse, but this may not be the method users prefer. As with other aspects of "naturalness" in the interface, this will require studies of users' performance and preferences as these systems develop.
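Detecting the ambiguity requires evaluating the spatial term in both frames of reference and comparing the results. The sketch below does this for "top" only, with a hypothetical record holding a building floor (domain geometry) and an icon's vertical screen position (display geometry). Since the text reports no MMI2 solution, this is not MMI2's method; it shows only the check, and it takes the "ask the user" route when the frames disagree.

```python
from dataclasses import dataclass


@dataclass
class Workstation:
    name: str
    floor: int       # domain geometry: building floor, higher is upper
    screen_y: float  # display geometry: icon position, 0 is the top edge


def top_readings(stations):
    """Evaluate 'the top workstation' in both frames of reference."""
    by_domain = max(stations, key=lambda s: s.floor).name
    by_display = min(stations, key=lambda s: s.screen_y).name
    return {"domain": by_domain, "display": by_display}


def resolve_top(stations):
    readings = top_readings(stations)
    if readings["domain"] == readings["display"]:
        return readings["domain"], None
    # The frames disagree: return a clarification question instead of guessing.
    question = (f"Do you mean {readings['domain']} (uppermost floor) or "
                f"{readings['display']} (top of the diagram)?")
    return None, question


aligned = [Workstation("ws-a", 3, 20.0), Workstation("ws-b", 1, 200.0)]
crossed = [Workstation("ws-a", 3, 200.0), Workstation("ws-b", 1, 20.0)]
print(resolve_top(aligned))
print(resolve_top(crossed))
```

When the diagram's layout mirrors the building, both readings coincide and no question is needed; only a layout that departs from the domain geometry forces a clarification.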

The fifth problem, graphically representing indeterminacies in natural language descriptions, is resolved in these systems either by assuming defaults or by entering subdialogues to determine the user's intention fully. These approaches work for locational examples such as "Put a computer in that room," where the user can be asked to select the exact location with the mouse, but they are less acceptable when the user asks for a large computer to be placed at a location. In that case the size of the graphical representation of the "large computer" must be assumed. A solution to this problem depends on basic cognitive science research that has not yet been undertaken.
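The two strategies can be contrasted in a short sketch: an underdetermined location triggers a subdialogue, while an underdetermined size falls back on a default. The request format, the ask_user callback, and the size table are hypothetical; in particular, the numeric sizes are arbitrary placeholders, which is precisely the weakness the text points out.

```python
# Hypothetical default icon sizes (screen units). As the text notes, there
# is as yet no principled basis for choosing such values.
DEFAULT_ICON_SIZE = {None: 40, "small": 25, "large": 60}


def place_computer(request, ask_user):
    """Complete an underdetermined 'put a computer in <region>' request.

    request: dict with a named 'region', an optional exact 'point', and an
    optional size adjective under 'size'.
    ask_user: callback that runs a clarification subdialogue and returns a
    point, for example one the user selects with the mouse.
    """
    point = request.get("point")
    if point is None:
        # Location underdetermined: enter a subdialogue.
        point = ask_user(f"Click where in {request['region']} the computer should go.")
    # Size underdetermined: fall back on an assumed default.
    size = DEFAULT_ICON_SIZE.get(request.get("size"), DEFAULT_ICON_SIZE[None])
    return {"point": point, "icon_size": size}


placed = place_computer({"region": "room 12", "size": "large"}, lambda prompt: (120, 80))
print(placed)
```

The location can be settled by asking because the user can answer with a single mouse selection; there is no equally natural question to ask about how large a "large computer" should look.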

The MMI2, Acord, and Xtra systems all combine natural language and graphic interaction modes with complex dialogue management systems to provide cooperative dialogue between users and knowledge-based systems. Although the Acord project is complete, researchers are still developing multimode interaction and dialogue management in all three projects.

In this review, we have described only seven of the pre-competitive research projects on user interfaces in Europe, and none of the basic research projects. However, this sample should indicate the areas in which developments are being made. Human factors have greater visibility, both in the design process and to management, than they had several years ago, and many organizations now have usability or human factors evaluation laboratories. Similarly, development environments are now beginning to incorporate human factors guidance and tools.

While these developments have been taking place, greater graphics capabilities have become more widely available, and user interface designers now have to address these advances. Interface designers are integrating the improved interfaces with other technologies, such as cellular telephones, natural language interfaces, and AI-based dialogue systems. In these research projects, designers are developing advanced toolkits and UIMS that support not only graphics but also other interaction modes and conversational dialogue management.

These developments in designer guidance and new interaction techniques at the pre-competitive level have raised issues that future research in basic cognitive science and human-computer interaction will have to address. These issues are not uniquely European, but this review has presented a sampling of current European research and the directions future research might take.

We thank those working on the projects mentioned for making the information presented here available, and Bob Hopgood and David Duce for helpful comments on an earlier draft. Any misinterpretations or errors, however, remain the responsibility of the authors.

1. P.J. Barnard, M.D. Wilson, and A. MacLean, Approximate Modeling of Cognitive Activity with an Expert System: A Theory Based Strategy for Developing an Interactive Design Tool, Computer J., Vol. 31, No. 5, Oct. 1988, pp. 445-456.

2. A. Buhmann, M. Gunther, and G. Koberle, Computer Graphics as a Tool in Training and Education: A Comett Project, Computers & Graphics, Vol. 13, No. 4, Oct. 1989, pp. 523-527.

3. L.A. Messina et al., Teachware Development for Education on CAD, Computers & Graphics, Vol. 13, No. 2, Apr. 1989, pp. 237-241.

4. J.D. Foley, Interfaces for Advanced Computing, Scientific American, Vol. 257, No. 4, Oct. 1987, pp. 127-135.

5. R.S. Nickerson, On Conversational Interaction with Computers, in User Oriented Design of Interactive Graphics Systems, ACM, New York, 1977, pp. 101-113.

6. J. Allgayer et al., XTRA: A Natural Language Access System to Expert Systems, Int'l J. Man-Machine Studies, Vol. 31, No. 2, Aug. 1989, pp. 161-196.

7. E. Klein, Dialogues with Language, Graphics, and Logic, ESPRIT87 (proceedings), Elsevier, Amsterdam, 1987, pp. 867-873.

8. C. Wolf and P. Morrel-Samuels, The Use of Hand-Drawn Gestures for Text Editing, Int'l J. Man-Machine Studies, Vol. 27, No. 1, July 1987, pp. 91-102.

9. M.M. Taylor, F. Neel, and D.G. Bouwhuis, The Structure of Multimodal Dialogue, North-Holland, Amsterdam, 1989.

10. P. Green and L. Wei-Haas, The Rapid Development of User Interfaces: Experience with the Wizard of Oz Method, Proc. Human Factors Society–29th Annual Meeting, Human Factors Society, Santa Monica, Calif., 1985, pp. 470-474.

Michael Wilson is a research associate in knowledge engineering at Rutherford Appleton Laboratory. His research interests include cognitive ergonomics, user modeling, task analysis, and knowledge acquisition and explanation. He holds a BSc in experimental psychology from Sussex University and a PhD from Cambridge University. He is a chartered psychologist and a member of ACM, ACM SIGCHI, British Computer Society, Specialist Group in Human Computer Interaction (BCS HCISIG), British Psychological Society, European Association for Cognitive Ergonomics, and the Society for the Study of Artificial Intelligence and the Simulation of Behavior.

Anthony Conway is Human Factors Section Leader in the User Interface Design Group and project manager for Rutherford Appleton Laboratory in the Elusive Office. His research interests include cooperative working and virtual reality. He holds a BSc in psychology from the University of London. He is a chartered psychologist and a member of the AAAI, British Psychological Society, Human Factors Society, and the Society for the Study of Artificial Intelligence and the Simulation of Behavior.