Susan joined the Atlas Lab after getting a 1st class honours degree at Oxford in Akkadian. Bob Churchhouse had started work on Information Retrieval and Don Russell had produced COCOA for users in linguistic analysis so there was already an interest in the area. Susan made full use of both the SC4020 and the PDP15 in developing software for the display of non-Western characters.

In 1973, she joined the Oxford University Computing Services where she directed the Oxford Concordance Project and managed their OCR and typesetting facilities. The pioneering OCR service used the Kurzweil Data Entry Machine (KDEM). She taught courses on Text Analysis and the Computer, and SNOBOL Programming for the Humanities. She was also the first Director of the Computers in Teaching Initiative Centre for Textual Studies and directed the Office for Humanities Communication from 1989-91. She was elected to a Fellowship by Special Election of St Cross College in 1979 and an Emeritus Fellowship of the College in 1991. While at Oxford, she published books on SNOBOL Programming for the Humanities and a Guide to Computer Applications in the Humanities.

She was Chair of the Association for Literary and Linguistic Computing from 1984-97. During that time she founded the journal Literary and Linguistic Computing with Oxford University Press and also co-edited five volumes of the series Research in Humanities Computing for Oxford. She was a member of the Executive Committee of the Text Encoding Initiative starting in 1987 and twice served as chair of that committee.

In 1991, she became the director of the Rutgers and Princeton Center for Electronic Texts in the Humanities. At CETH she founded and co-directed (with Dr Willard McCarty) an annual International Summer Seminar on Methods and Tools for Electronic Texts in the Humanities. She also directed a programme of research on the use of the Standard Generalized Markup Language (SGML) for the Humanities including an interface to OpenText's Pat search engine, a pilot project linking the Text Encoding Initiative and the Encoded Archival Description SGML Document Type Definitions, and the Electronic Theophrastus.

Later, she joined the University of Alberta to direct the Canadian Institute for Research Computing in the Arts and serve as co-investigator on the Orlando Project, an online cultural history of women's writing in the British Isles. In 2000, she published Electronic Texts in the Humanities: Principles and Practice and joined the School of Library, Archive, and Information Studies at University College London, becoming its director the following year. When she retired from that position in 2004 to become Emeritus Professor of Library and Information Studies at UCL, the digital humanities community honoured her as the third recipient of its highest award, named for Fr. Roberto Busa, for her contribution to the establishment of the field of Humanities Computing and for her work on computers and text. Her Busa Award speech, Living with Google: Perspectives on Humanities Computing and Digital Libraries, was published in LLC, a field-making journal she had helped to establish in 1986.

Her History of Humanities Computing, published in 2004, is a good overview of 55 years of work in the area, 35 of them years in which she herself was a highly productive and transformative figure.

An early paper is Computing in the Humanities, published in the ICL Technical Journal in November 1979.

This paper describes some of the ways in which computers are being used in language, literature and historical research throughout the world. Input and output are problems because of the wide variety of character sets that must be represented. In contrast the software procedures required to process most humanities applications are not difficult and a number of packages are now available.

The use of computers in humanities research has been developing since the early 1960s. Although its growth has not been as rapid as that in the sciences and social sciences, it is now becoming an accepted procedure for researchers in such fields as languages, literature, history, archaeology and music to investigate whether a computer can assist the course of their research.

The major computer applications in the humanities are essentially very simple processes. All involve analysing very large amounts of data but do not pose many other computational problems, once the data is in computer readable form. The data may be a text for which the researcher wishes to compile a word index or alphabetical list of words, or it may be a collection of historical or archaeological material which the researcher wishes to interrogate or catalogue or from which he may perhaps wish to calculate some simple statistics.

Getting the data into computer readable form is a major problem for any humanities application, not only because of its volume but because of the limited character set that exists on most computer systems. The original version of a text to be processed is more likely to be in the form of a papyrus, a medieval manuscript or even a clay tablet than a modern printed book. Many texts are not written in the Roman alphabet but, even if they are, languages such as French, German, Italian or Spanish contain a number of accents or diacritics which are not usually found on computer input devices. The normal practice is to use some of the mathematical symbols to represent accents so that for example the French word Été would be transcribed as E*te*, assuming of course that the computer being used has upper and lower case letters.
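The accent convention in the Été example can be reversed mechanically when the text is later printed. The following is a minimal sketch, assuming that `*` written after a letter stands for an acute accent, as in the example; the table `ACCENT_CODES` and the function `decode` are hypothetical names, and any further codes would have to be agreed for each project.

```python
import unicodedata

# Assumption: '*' after a letter marks an acute accent, as in E*te*.
ACCENT_CODES = {"*": "\u0301"}  # U+0301 is the combining acute accent

def decode(transcribed):
    """Turn a coded transcription back into accented text."""
    combined = "".join(ACCENT_CODES.get(ch, ch) for ch in transcribed)
    # NFC composes each letter with the accent that follows it.
    return unicodedata.normalize("NFC", combined)

print(decode("E*te*"))
```

Because the accent code follows its letter, the coded text can still be sorted on the bare letters simply by skipping the codes.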

A text which is not written in the Roman alphabet must first be transliterated into 'computer characters', so that for example in Greek, the letter alpha becomes a, gamma becomes g and, less obviously, theta could be transliterated as q. In some languages such as Russian, it is more convenient to use more than one computer character to represent each letter. This presents no problems provided that the program which sorts the words into alphabetical order knows that its collating sequence may consist of such multiple characters. Multiple-character units of this kind are also necessary for modern languages such as Welsh and Spanish.

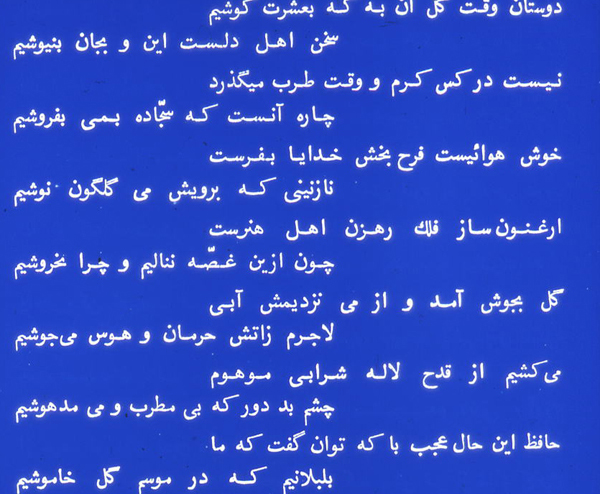

Arabic and Hebrew, though written from right to left in the original, are input in transliterated form from left to right. In neither language is it usual to write the vowel marks. Hebrew is easier in that it has only 22 letters, five of which have an alternative form when they appear at the end of a word. By contrast, each letter of the Arabic alphabet has four forms depending on whether it occurs in the initial, medial, final or independent position. Each of these forms can be transliterated into the same character, for it has proved possible to write a computer program to simulate the rules for writing Arabic, if the output should be desired in the original script.

There are of course a number of specialised input devices for non-standard scripts. The University of Oxford already has a visual display unit which displays Greek with a full set of diacritics as well as Roman characters. Other such terminals exist for Cyrillic, Hebrew and Arabic. Some, like the Oxford one, generate the characters purely by hardware, others use a microprocessor or even the mainframe computer to draw the character shapes. While this type gives a greater flexibility of character sets, it is slow to operate when its prime use is for the input of large amounts of data. Ideographic scripts such as Chinese, Japanese or even Egyptian hieroglyphs can be approached in a different manner. An input device is now in use for such scripts, which consists of a revolving drum covered by a large sheet containing all the characters in a 60 × 60 matrix. The drum is moved so that the required character is positioned under a pointer. The co-ordinates of that character on the sheet are then transmitted to a computer program which already knows which character is in that position.

Specialised devices are the only adequate solution to the problem of output. An upper- and lower-case line printer may be used at the proofreading stage but it does not have an adequate character set and the quality of its output is unsuitable for publication. A daisy-wheel printer may offer a wider character set and better designed characters but its printout resembles typescript rather than a printed book. There have been a number of experiments to use graphics devices to draw nonstandard characters. These have proved more successful than the devices mentioned above, particularly for Chinese and Japanese, but their main disadvantage is the large amount of filestore and processing time required to print even a fairly small text. It seems that the best solution to the output problem is a photocomposer which offers a wide range of fonts and point sizes and which can be driven by a magnetic tape generated on the mainframe that has processed the text. Such devices have been used with great success to publish a number of computer generated word indexes and bibliographies.

Besides simply retyping the text, there are other possible sources of acquiring a text in computer readable form. Once a text has been prepared for computer processing it may be copied for use by many scholars in different universities. There exist several archives or repositories of text in computer readable form which serve a dual purpose of distributing text elsewhere and acting as a home for material for which the researcher who originally used it has no further use. The LIBRI Archive at Dartmouth College, Hanover, New Hampshire, USA, has a large library of classical texts, mainly Latin. The Thesaurus Linguae Graecae at Irvine, California, USA, supplies Greek text for any author up to the fourth century AD. Oxford University Computing Service has an archive of English literature ranging from the entire Old English corpus up to the present-day poets. The Oxford Archive will also undertake to maintain text in any other language if it is deposited there. The Literary and Linguistic Computing Centre at the University of Cambridge has a large collection of predominantly medieval text. All these archives maintain catalogues of their collections and will distribute text for a small charge. There has usually been no technical difficulty in transferring tapes from one machine to another, provided that variable-length records are avoided. Another source of text on tape is as a by-product of computer typesetting. A number of publishers have been able to make their typesetting tapes available for academic research after the printers have finished with them. These have been particularly valuable for modern material such as case and statute law as well as dictionaries and directories.

The most obvious text-analysis application is the production of word counts, word indexes and concordances. A word count is simply an alphabetical list of words in a text with each word accompanied by its frequency. In a word index each word is accompanied by a list of references such as chapter, page or line showing where that word occurs in the text. In a concordance each occurrence of each word is accompanied by some context to the left and to the right of it showing which words occur near it. The reference is usually also given. In many cases the context consists of a complete line of text, but it may be a whole sentence or a certain number of words to the right and to the left.
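The three products just described differ only in what is attached to each word. A minimal sketch follows, assuming that words are runs of unaccented letters and that the line number serves as the reference; the function names are illustrative and are not taken from any concordance package.

```python
import re
from collections import defaultdict

def tokenize(line):
    """Split a line into lower-cased words (letters a-z only, a simplification)."""
    return re.findall(r"[a-z]+", line.lower())

def word_count(lines):
    """Alphabetical list of words, each with its frequency."""
    counts = defaultdict(int)
    for line in lines:
        for word in tokenize(line):
            counts[word] += 1
    return dict(sorted(counts.items()))

def word_index(lines):
    """Alphabetical list of words, each with the line numbers where it occurs."""
    index = defaultdict(list)
    for number, line in enumerate(lines, start=1):
        for word in tokenize(line):
            index[word].append(number)
    return dict(sorted(index.items()))

def concordance(lines, keyword, width=2):
    """Each occurrence of keyword with `width` words of context on either side."""
    hits = []
    for number, line in enumerate(lines, start=1):
        words = tokenize(line)
        for i, word in enumerate(words):
            if word == keyword:
                left = " ".join(words[max(0, i - width):i])
                right = " ".join(words[i + 1:i + 1 + width])
                hits.append((number, left, word, right))
    return hits

text = ["The cat sat on the mat", "The dog saw the cat"]
for hit in concordance(text, "cat"):
    print(hit)
```

The context here is a fixed number of words; a whole line or sentence could be returned instead by keeping the original line in each hit.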

Sorting words into alphabetical order is not as simple as one might think. Most concordance programs, as they are usually called, recognise several different types of characters. Some may be used to make up words and can be called alphabetics. Some may be punctuation characters and are therefore always used to separate words. These two categories are fairly obvious, but consider the case where an editor has reconstituted a gap in an original manuscript and has inserted within brackets the letters which he considers are missing, for example the Latin word fort[asse]. In a concordance this word must appear under the heading fortasse; therefore the brackets must be ignored when the word is alphabetised but retained so that the word appears in the context still in the form in which it appears in the text. Thus we have another category of characters, those which are ignored for sorting purposes but retained in the context. A fourth category is usually used for accents and diacritics and very often for apostrophe and hyphen in English. We can consider the simplest case, that of the French words a, part of avoir, and à, the preposition. The latter form must appear as a separate entry in the word list immediately after all the occurrences of a, but before any word beginning aa-. If the accent is treated as an alphabetic symbol it must be given a place in the alphabetic sequence of characters, but wherever it is placed it will cause some words to be listed in the wrong position. Therefore it is more usual and indeed necessary to treat accents as a secondary sort key which is to be used when words can no longer be distinguished by the primary key. The same treatment is necessary for an apostrophe and hyphen in English. There is no position for an apostrophe in an alphabetic sequence which will allow all the occurrences of I'll to appear immediately after all those of ill and all those of can't to come immediately after all those of cant.
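The four character categories can be expressed as a two-part sort key. The sketch below is one possible arrangement, assuming that square brackets are the ignored characters and that apostrophe, hyphen and any accent form the secondary key; the names `IGNORED`, `SECONDARY` and `sort_key` are hypothetical.

```python
import unicodedata

IGNORED = set("[]")     # kept in the context, skipped when sorting
SECONDARY = set("'-")   # apostrophe and hyphen: secondary key only

def sort_key(word):
    """Primary key: the bare letters. Secondary key: marks and where they fall."""
    primary, secondary = [], []
    # NFD separates each accented letter into the letter plus a combining accent.
    for ch in unicodedata.normalize("NFD", word.lower()):
        if ch in IGNORED:
            continue
        if ch in SECONDARY or unicodedata.combining(ch):
            secondary.append((len(primary), ch))
        else:
            primary.append(ch)
    return ("".join(primary), secondary)

# "\u00e0" is the French preposition a with a grave accent.
words = ["aa", "\u00e0", "can't", "a", "ill", "cant", "I'll", "fort[asse]", "forte"]
print(sorted(words, key=sort_key))
```

The secondary key is consulted only when two primary keys are equal, so the accented a follows a but precedes aa, and I'll follows ill. The original spelling of each word is left untouched for display in the context.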

The alphabetic sequence for sorting letters may vary from one language to another. Reviewers of concordances of Greek texts have been rightly scornful if the Greek words appear in the English alphabet order. A concordance program should be able to accept a user-supplied collating sequence of letters and operate using that. It should also be able to treat the double letters of Welsh or Spanish and the multiple characters of transliterated Russian as single units in the collating sequence. More importantly, it should have a facility to treat two or more characters as identical so that for example all the words beginning with upper case A do not come after all those beginning with lower case a, but are intermingled with them in their rightful alphabetic position. It follows then that the machine's internal collating sequence is not suitable for sorting text and it is regrettable that too many manufacturers rely on this collating sequence for their sorting software.
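A user-supplied collating sequence of this kind might be sketched as follows. The example assumes the modern Welsh alphabet as the supplied sequence and matches the longest possible letter at each position; `make_collator` is a hypothetical name, and greedy matching is itself a simplification, since a pair such as n followed by g is not always the single letter ng.

```python
def make_collator(alphabet):
    """Build a sort-key function from an ordered list of letters.

    Letters may be more than one character long (for example 'ch' or 'll').
    """
    rank = {letter: position for position, letter in enumerate(alphabet)}
    longest = max(len(letter) for letter in alphabet)

    def key(word):
        w = word.lower()  # upper and lower case are treated as identical
        ranks, i = [], 0
        while i < len(w):
            # Try the longest candidate letter first.
            for size in range(longest, 0, -1):
                unit = w[i:i + size]
                if unit in rank:
                    ranks.append(rank[unit])
                    i += len(unit)
                    break
            else:
                ranks.append(len(rank))  # unknown characters sort last
                i += 1
        return ranks

    return key

WELSH = ["a", "b", "c", "ch", "d", "dd", "e", "f", "ff", "g", "ng", "h", "i",
         "j", "l", "ll", "m", "n", "o", "p", "ph", "r", "rh", "s", "t", "th",
         "u", "w", "y"]

words = ["chwarae", "cyw", "Cath", "dydd", "ddoe", "dwr"]
print(sorted(words))                            # machine collating sequence
print(sorted(words, key=make_collator(WELSH)))  # supplied collating sequence
```

With the machine's own sequence the capitalised word comes first only by accident of the character codes, and ch and dd are filed under c and d; with the supplied sequence each double letter takes its own place.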

There are several different kinds of concordances and word lists. The most usual method is to list all the words in forward alphabetical order. They may also be given in frequency order beginning with either the most frequent or the least frequent. In this case when many words have the same frequency the words within that frequency are themselves listed in alphabetical order. Words may also be listed in alphabetical order of their ending, i.e. they are alphabetised working backwards from the ends of the words. This is particularly useful for a study of rhyme schemes or morphology (grammatical endings). In a concordance, all the occurrences of a word may appear either in the order in which they occur in the text or in alphabetical order of what comes to the right or left of the keyword, as it is called.
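Frequency order and reverse alphabetical order are both small variations on the sort key. A minimal sketch, with illustrative function names:

```python
from collections import Counter

def by_frequency(words, descending=True):
    """Word list in frequency order; equal frequencies fall back to alphabetical order."""
    counts = Counter(words)
    sign = -1 if descending else 1
    return sorted(counts.items(), key=lambda item: (sign * item[1], item[0]))

def by_ending(words):
    """Word list alphabetised backwards from the end of each word."""
    return sorted(set(words), key=lambda word: word[::-1])

words = "walking talked walked singing talked walking walking".split()
print(by_frequency(words))
print(by_ending(words))
```

In the reverse list all the -ed forms are brought together, followed by all the -ing forms, which is what makes this order useful for rhyme and morphology.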

A vocabulary distribution of a text always gives a very similar sort of curve, ranging from a few very-high-frequency words down to a very long tail of many words occurring only once. The high-frequency words occupy a very large amount of room in a concordance and are therefore sometimes omitted. In the handmade concordances of the nineteenth century they were almost always omitted because they accounted for such a large proportion of the work. Words can be missed out either by supplying a list of such words or by a cutoff frequency above which words should not appear. A third option in a concordance is to give only the references and not the context for high-frequency words.
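The distribution and the two ways of omitting words can be sketched as below. The function names and the tiny sample are illustrative only; a real text would show the long tail of single occurrences far more clearly.

```python
from collections import Counter

def frequency_spectrum(words):
    """Map each frequency n to the number of distinct words occurring n times."""
    counts = Counter(words)
    return dict(sorted(Counter(counts.values()).items()))

def select_headwords(words, stop_words=frozenset(), cutoff=None):
    """Headwords to print, omitting listed words and words above the cutoff frequency."""
    counts = Counter(words)
    return sorted(
        word for word, n in counts.items()
        if word not in stop_words and (cutoff is None or n <= cutoff)
    )

words = "the cat and the dog and the bird".split()
print(frequency_spectrum(words))
print(select_headwords(words, stop_words={"and"}))
print(select_headwords(words, cutoff=2))
```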

Foreign words, quotations and proper names can also cause problems in computer-generated concordances. If there are many of them and particularly if they are spelled the same as words in the main text, they can distort the vocabulary counts to such an extent that they become worthless. This problem is usually resolved by putting markers in front of these words as the text is input. For example, in a concordance of the Paston letters in preparation in Oxford, all the proper names are preceded by ↑ and all the Latin words by $. These markers can then be used to list all these words separately or if the editor wishes they can be ignored at the sorting stage so that the words appear in their normal alphabetic position.

Word counts and concordances are the basis of most computer-aided studies of text. Their uses range from authorship studies, grammatical analysis and phonetics to the preparation of language courses and study of spelling variations and printing. A few examples will suffice to show what can be done.

Vocabulary counts have been used in several ways for preparing language courses. The University of Nottingham devised a German course for chemists using vocabulary counts of a large amount of German technical literature as a basis for selecting which words and grammatical forms to teach. It was found, for example, that the first and second person forms of the verb very rarely appeared in the technical text, although they would normally be one of the first forms to be taught. A second approach in designing a language course is to use a computer to keep track of the introduction of new words and record how often they are repeated later on in the course.

A word list of a very large amount of text can give an overall profile of one particular language. Several volumes of a large word count of modern Swedish have been published, using newspapers as the source of material. Newspaper articles are an ideal base for such a vocabulary count as they are completely representative of the language of the present day. Another study of modern Turkish, again using newspapers, is aiming to investigate the frequency of loan words, mainly French and Arabic, in that language. By comparing two sets of vocabulary counts with a 5-year interval between the writing of the articles, it is possible to determine how much change has taken place over that period.

Some forms of grammatical analysis may also be undertaken using a concordance, particularly the use of particles and function words which are not so easy to notice when reading the text. In several cases the computer has found many more instances of a particle in a particular text than grammar books for that language acknowledge. It is also possible, albeit in rather a clumsy fashion, to study such features as the incidence of past participles in English. They may conveniently be defined as all words ending in -ed. This will of course produce a number of unwanted words such as seed and bed but they can easily be eliminated when the output is read. It is more important that no words should be missed and experiments have shown that the computer is much more accurate than the human at finding them.

Concordances can also be used to study the chronology of a particular author. It is certainly true that for a number of ancient authors, the order of their writings is not known. It may be that an author's style and particularly his vocabulary usage have changed over his lifetime. By compiling a concordance or word index of his works, or even by finding the occurrences of only a few items of vocabulary, it is possible to see from the references which words occur frequently in which texts. In this kind of study it is always the function words such as particles, prepositions and adverbs that hold the key, for they have no direct bearing on the subject matter of the work. These words have been the basis of a number of stylistic analyses of ancient Greek texts. In the early 1960s a Scottish clergyman named A.Q. Morton hit the headlines by claiming that the computer said that St. Paul only wrote four of his epistles. Morton has popularised the use of computers in authorship studies and at times tends to use too few criteria to establish what he is attempting to prove. His methods have, however, been taken up with some considerable success by a number of other scholars, notably Anthony Kenny, now Master of Balliol College, Oxford. Kenny used computer-generated vocabulary counts and concordances to study the Nicomachean and Eudemian Ethics of Aristotle. Three books appear in both sets of Ethics and these three books have been traditionally considered to be part of the Nicomachean Ethics and an intrusion into the Eudemian Ethics. By analysing the frequencies of a large number of function words, Kenny found that the vocabulary of the disputed books shows that they are more like the Eudemian than the Nicomachean Ethics.

Authorship studies are undoubtedly the popular image of text-analysis computing. There have been a number of relatively successful research projects in this area, but it is important to remember that the computer cannot prove anything; it can only supply facts on which deductions can be based. Mosteller and Wallace's analysis of the Federalist Papers [1], a series of documents published in 1787-88 to persuade the citizens of New York to ratify the constitution, is a model study. There were three authors of these papers, Jay, Hamilton and Madison, and the authorship of 12 out of 85 was in dispute and that only between Hamilton and Madison, for it was known that they were not written by Jay. This was an ideal case for an authorship study for there were only two possible candidates and the papers whose authorship was known provided a lot of comparative material for testing purposes. Mosteller and Wallace concentrated on the use of synonyms and discovered that Hamilton always used while when Madison and the disputed papers preferred whilst. Other words such as upon and enough, for which there are again synonyms, emerged as markers of Hamilton. Working at roughly the same time as Mosteller and Wallace, a Swede called Ellegard applied similar techniques to a study of the Junius letters. Although he was not able to reach such a firm conclusion about their authorship, he and Mosteller and Wallace, to some extent together with Morton, paved the way for later authorship investigations.
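The counting step behind such a study is simple, and only the interpretation is difficult. The sketch below computes the rate per thousand words of a few marker words; it illustrates the counting alone and is not Mosteller and Wallace's statistical method. The function name and the sample sentence are invented for the illustration.

```python
import re
from collections import Counter

def rate_per_thousand(text, markers):
    """Occurrences of each marker word per 1000 words of running text."""
    words = re.findall(r"[a-z]+", text.lower())
    if not words:
        return {marker: 0.0 for marker in markers}
    counts = Counter(words)
    return {marker: 1000 * counts[marker] / len(words) for marker in markers}

sample = "he relied upon it while she waited upon him whilst"
print(rate_per_thousand(sample, ["upon", "while", "whilst", "enough"]))
```

Rates rather than raw counts are used so that papers of different lengths can be compared directly.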

There are other stylistic features besides vocabulary which can be investigated with the aid of a computer. In the late 19th century T.C. Mendenhall employed a counting machine operated by two ladies to record word-length distributions of Shakespeare and a number of other authors of his period. Although he found that Shakespeare's distribution peaked at 4-letter words, while the others peaked at three letters, he was not able to draw any satisfactory conclusion from this. It goes without saying that word length is the simplest to calculate but it is arguable whether it really provides any useful information. Sentence length has also been used as a criterion in stylistic investigation. This again has proved a variable measure, too often used because it is easy to calculate. Mosteller and Wallace first applied sentence-length techniques to Hamilton and Madison and found their mean sentence length was almost the same.
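Both measures are indeed easy to calculate, as the following minimal sketch shows. It assumes that a word is a run of letters and that a sentence ends at a full stop, question mark or exclamation mark, which is cruder than a careful study would allow.

```python
import re
from collections import Counter

def word_length_distribution(text):
    """Map each word length to the number of words of that length."""
    words = re.findall(r"[A-Za-z]+", text)
    return dict(sorted(Counter(len(word) for word in words).items()))

def mean_sentence_length(text):
    """Average number of words per sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    lengths = [len(re.findall(r"[A-Za-z]+", s)) for s in sentences]
    return sum(lengths) / len(lengths) if lengths else 0.0

text = "To be or not to be. That is the question."
print(word_length_distribution(text))
print(mean_sentence_length(text))
```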

One feature which is more difficult to investigate with a computer is that of syntactic structure. Once one has gone further than merely identifying words which introduce, say, temporal clauses, it is not at all easy to categorise words into their parts of speech. Related to this question is the problem of lemmatisation, that is putting words under their dictionary headings. The computer cannot put is, am, are, was, were etc. under be unless it has been given prior instruction to do so. Most computer concordances do not lemmatise, but most of their editors would prefer it if they did. The most sensible way of dealing with the lemmatisation problem is to use what is known as a machine dictionary. This is in effect an enormous file containing one record for every word in the text or language. When a word is 'looked up' in the dictionary, its record will supply further information about that word such as its dictionary heading, its part of speech and also some indication if it is a homograph. The size of such a dictionary file can truly be enormous but it can be reduced for some languages if the flectional endings are first removed from the form before it is looked up. This is not so easy for English where there are so many irregular forms, but even removing the plural ending -s would reduce the number of words considerably. For a language like Latin, Greek or even German it is far more practical to remove endings before the word is looked up. For example in Latin amabat could always be found under a heading ama, which is not a true Latin stem, by removing the imperfect tense ending -bat.
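A machine dictionary with ending removal can be sketched as a table lookup that is retried after stripping a known ending. The entries and endings below are a toy illustration only; `DICTIONARY`, `ENDINGS` and `lemmatise` are hypothetical names, and the stored heading for the stem ama is an assumption made for the example.

```python
# form or stem -> (dictionary heading, part of speech); toy entries only
DICTIONARY = {
    "is": ("be", "V"), "am": ("be", "V"), "are": ("be", "V"),
    "was": ("be", "V"), "were": ("be", "V"),
    "ama": ("amo", "V"),  # 'ama' is a lookup key, not a true Latin stem
}

# A hypothetical and far from complete list of endings.
ENDINGS = ["bat", "bant", "s"]

def lemmatise(form):
    """Look a form up directly, then again with each known ending removed."""
    form = form.lower()
    if form in DICTIONARY:
        return DICTIONARY[form]
    for ending in sorted(ENDINGS, key=len, reverse=True):
        if form.endswith(ending):
            stem = form[:-len(ending)]
            if stem in DICTIONARY:
                return DICTIONARY[stem]
    return None  # not found: left for the editor to resolve

print(lemmatise("amabat"))
print(lemmatise("were"))
```

The whole form is tried before any ending is removed, so that an irregular form such as was is found directly and is not mistaken for a stem plus -s.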

A machine dictionary can therefore be used to supply the part of speech for a word. In the case of homographs such as lead, a noun, and lead, a verb, some further processing may be required on the sentence to decide which form is the correct one. Once the parts of speech have been found they can be stored in another file as a series of single letter codes and this file interrogated to discover, for example, how many sentences begin with an adverb, or what proportion of the author's vocabulary are nouns, verbs and adjectives.

Besides vocabulary and stylistic analysis, there are two more branches to text analysis where a computer can be used. We can consider first textual criticism, the study of variations in manuscripts. There exist several versions of many ancient and modern texts and these versions can all be slightly different from each other. With ancient texts, the manuscripts were copied by hand sometimes by scribes who could not read the material and inevitably errors occurred in the copying. When a new edition of such a text is being prepared for publication the editor must proceed through several stages before the final version of his text is complete. First he must compare all the versions of the texts to find where the differences occur. This technique is known as collation and the differences as variant readings which can be anything from one word to several lines. Once the variants have been found, the editor must then attempt to establish the relationship between the manuscripts, for it is likely that the oldest one is closest to what the author originally wrote. Traditionally manuscript relationships have been described in the form of trees where the oldest manuscript is at the top of the tree. More recently cluster-analysis techniques have been applied to group sets of manuscripts without any consideration of which is the oldest. The printed edition which the editor produces consists of the text which he has reconstructed from his collation and a series of footnotes called the apparatus criticus which consists of the important variants and the names of the manuscripts in which they occur.

The computer can be used in almost all these stages. It might seem that the collation of the manuscripts is the most obvious computer application, but this does have a number of disadvantages. The first problem is to put all the versions of the text into computer-readable form, a lengthy but surmountable task. More difficult problems arise in the comparison stage, particularly with prose text. In verse an interpolation or extra line must fit the metre. In prose it can be any length and most programs have been unable to realign the text after a substantial omission. If the manuscripts are collated by computer, the variants can be saved in another computer file for further processing. It is in the second stage of textual criticism, namely establishing the relationship between manuscripts, that a computer has been found more useful. Programs exist to generate tree structures from groups of variants, though most of them leave the scholar to decide which manuscripts should be at the top of the tree. Cluster-analysis techniques have also been used on manuscript variants to provide dendrograms or 3-dimensional drawings of groups of manuscripts. If one complete version of the text is in computer readable form it can be used to create the editor's own version and together with the apparatus criticus be prepared on the computer and phototypeset from it.
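The comparison stage can be illustrated with a present-day sequence-matching routine. The sketch below uses `difflib.SequenceMatcher` from the Python standard library as a stand-in; it is not one of the collation programs referred to above, and it compares only two versions, word by word.

```python
import difflib

def collate(base, witness):
    """List the variant readings between two versions of a passage."""
    a, b = base.split(), witness.split()
    matcher = difflib.SequenceMatcher(a=a, b=b, autojunk=False)
    variants = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag != "equal":
            # tag is 'replace', 'delete' or 'insert'
            variants.append((tag, " ".join(a[i1:i2]), " ".join(b[j1:j2])))
    return variants

base = "in the beginning was the word"
witness = "in the beginning there was a word"
for variant in collate(base, witness):
    print(variant)
```

The matcher realigns the two texts after each difference by finding the longest run of words they share, which is exactly the step that becomes unreliable when a long stretch of prose is missing from one version.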

The other suitable text-analysis application is the study of metre and scansion in poetry, and to some extent also in prose. There are two distinct ways of expressing metre. Some languages like English use stress while others like Latin or Greek use the length of the syllable. In the latter case it is possible to write a program to perform the actual scansion; in the former it is more usual to obtain the scansions from a machine-readable dictionary. The rules of Latin hexameter verse are such that a computer program can be about 98% accurate in scanning the lines. The program operates by searching the line for all long syllables, that is those with a diphthong or where the vowel is followed by two consonants. The length of one or two other syllables, for example the first, can always be deduced by the position. It is usually then possible to fill in the quantities of the other syllables on the basis of the format of the hexameter line which always has six feet which can be either a spondee (two long syllables) or a dactyl (one long followed by two shorts). For languages like English, the scansion process may be a little more cumbersome but the net result is the same: a file of scansions which can then be interrogated to discover how many lines begin or end with particular scansion patterns.

Sound patterns of a different kind can be analysed by computer, particularly alliteration and assonance. Again the degree of success depends on the spelling rules of the language concerned. English is not at all phonetic in its spelling and simple programs to find two or more successive words which start with the same letter can produce some unexpected results. In other languages where the spelling is more phonetic this can be a fruitful avenue to explore. An analysis of the Homeric poems which consisted merely of letter counts revealed some interesting features. It was convenient to group the letters according to whether their sound was harsh or soft. When the lines that had high scores for harsh letters were investigated it was seen that they were about battle or death or even galloping hooves, whereas the soft sounding lines dealt with soothing matter like river or love. A comprehensive study of metre and alliteration was conducted by Dilligan and Bender [2] on the iambic poems of Hopkins. They used bit patterns to represent the various features for each line and applied Boolean operators to these bit patterns, for example to find the places where alliteration and stress coincided.
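The use of bit patterns and Boolean operators can be sketched as follows. The encoding, one bit per syllable with the first syllable in the highest bit, is an assumption made for the illustration and is not taken from Dilligan and Bender; the feature values for the line are invented.

```python
def to_bits(flags):
    """Pack one flag per syllable into an integer, first syllable in the highest bit."""
    bits = 0
    for flag in flags:
        bits = (bits << 1) | int(flag)
    return bits

def positions(bits, length):
    """Syllable positions (counted from 0) whose bit is set."""
    return [i for i in range(length) if (bits >> (length - 1 - i)) & 1]

# One eight-syllable line: which syllables are stressed, which alliterate.
stress = to_bits([0, 1, 0, 1, 0, 1, 0, 1])
alliteration = to_bits([0, 1, 0, 0, 0, 1, 1, 0])

print(positions(stress & alliteration, 8))   # alliteration on a stressed syllable
print(positions(alliteration & ~stress, 8))  # alliteration on an unstressed syllable
```

Each feature of a line occupies a single machine word, so a question that combines several features reduces to one or two Boolean operations per line.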

In contrast to raw text, historical and archaeological data are usually in a record and field structure. In fact many of the operations performed on this material are very similar to those used in commercial computing and many of the same packages are used. The files can be sorted into alphabetical order by one or more fields or they can be searched to find all the records that satisfy some criteria. If the material being sorted is textual, the same problems that occur for sorting raw text can also arise here. Another difference from commercial computing is the amount of information that is incomplete. It is quite common for a biographical record which can have several hundreds of fields to contain the information for only a very few. If the person lived several hundred years ago it is not likely that very much is known about him other than his name. Such is the case for the History of the University of Oxford [a Department of the University], which uses the ICL FIND2 package for compiling indexes of students who were at the University in its early days. The computer file holds students up to 1560 and for each person gives such categories as their name, college, faculty, holy order, place of origin etc. The file is then used to examine the distribution of faculty by college, or origin by faculty etc. A related project of the History is an in-depth study of Corpus Christi College in the seventeenth century. Here most of the information is missing for most of the people, but when the project is complete it is likely that some of the gaps can be filled in.

Historical court records have also been analysed by computer, again to investigate the frequency of appearance of certain individuals and to see which crimes and punishments appear most frequently.

Archaeologists use similar techniques though their data more usually consist of potsherds, coins, vases or even temple walls. Another project in Oxford which now has about 50,000 records on the computer is a lexicon or dictionary of all the names that appear in ancient Greek, either in literary texts or inscriptions on coins or papyri. Each entry has four fields: the name itself, the place where the person lived, the date when he lived and the reference indicating where his name was found. The occurrences of each name are listed in chronological order by place. Ancient dates are not at all simple. In this lexicon they span the period 1000 BC to 1000 AD approximately. Very few of the dates are precise. More often than not they consist of forms like 'possibly 3rd century' or 'in the reign of Nero' or 'Hellenistic'. When they are precise the ancient year runs from the middle of our year to the middle of the next year so that a date could be 151/150 BC. Sorting these dates into the correct chronological sequence is no easy task. It was solved by writing a program to generate a fifth field containing a code which could be used for sorting, but even after four years on the computer, more date forms are still being found and added to the program when required.
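The generation of a sorting code from a free-form date can be sketched as follows. The lexicon project's actual coding scheme is not described here, so everything in this example is an assumption: the code is taken to be a (start year, end year) pair with BC years negative, qualifiers such as 'possibly' are ignored, and the PERIODS table holds conventional date ranges supplied only for illustration.

```python
import re

# Assumed lookup of named periods to (start, end) years; BC years are negative.
PERIODS = {
    "hellenistic": (-323, -31),
    "reign of nero": (54, 68),
}

def century_range(n, bc):
    """Year range covered by the n-th century."""
    if bc:
        return (-100 * n, -100 * (n - 1) - 1)
    return (100 * (n - 1) + 1, 100 * n)

def sort_key(date):
    """Return a (start, end) code that sorts chronologically."""
    text = date.lower()
    bc = re.search(r"\bbc\b", text) is not None
    # A precise ancient year straddling two of ours, e.g. '151/150 BC'.
    m = re.search(r"(\d+)/(\d+)", text)
    if m:
        a, b = int(m.group(1)), int(m.group(2))
        return (-a, -b) if bc else (a, b)
    m = re.search(r"(\d+)(?:st|nd|rd|th) century", text)
    if m:
        return century_range(int(m.group(1)), bc)
    for name, span in PERIODS.items():
        if name in text:
            return span
    # New date forms have to be added to the program as they turn up.
    raise ValueError(f"unrecognised date form: {date!r}")

dates = ["in the reign of Nero", "Hellenistic", "possibly 3rd century BC",
         "151/150 BC", "2nd century AD"]
for d in sorted(dates, key=sort_key):
    print(sort_key(d), d)
```

Raising an error for an unknown form, rather than guessing, mirrors the situation described above in which new date forms keep appearing and must be added by hand.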

Humanities users are reasonably well provided with packaged software. This is a distinct advantage in persuading them to use a computer because they frequently do not need to learn to program. The policy in universities is for the researchers to do their own computing but courses for both programming and using the operating system are normally provided and there is adequate documentation and advisory backup when it is needed. Most universities offer at least some of the humanities packages. For historical and archaeological work FIND2 has been used with considerable success. FAMULUS is a cataloguing and indexing package which is also used by historians and the Greek lexicon project mentioned above. It is really a suite of programs which create, maintain and sort files of textual information which are structured in some way. It is particularly suitable for bibliographic information where typical fields would be author, title, publisher, date, ISBN etc. It always assumes that the data are in character form so that the user must beware when attempting to sort numbers. In practice these are usually dates and can be dealt with in the same way as the lexicon dates. FAMULUS has a number of limitations, particularly that it only allows up to ten fields per record and that its collating sequence for sorting includes all the punctuation and mathematical characters and cannot be changed. It also sorts capital letters after small ones. There have inevitably been a number of attempts to rectify these deficiencies and versions have thus proliferated, but the basic function of the package remains the same. Its success is also due to the fact that it is very easy to use. Within the humanities it has been applied to buildings in 19th century Cairo, a catalogue of Yeats' letters, potsherds, court records as well as numerous bibliographies.
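The pitfall of sorting numbers held in character form can be seen in a few lines. The example below is a general illustration in Python and has nothing to do with the internals of FAMULUS; the year values are invented, and padding to four digits is simply one assumed way of building a sortable code.

```python
# Years stored as character strings, as a character-only package would hold them.
years = ["1560", "98", "1066", "451"]

# Character-by-character comparison puts '1066' before '98' because '1' < '9'.
as_characters = sorted(years)

# Sorting on a zero-padded code of fixed width restores numerical order.
by_value = sorted(years, key=lambda y: y.zfill(4))

print(as_characters)
print(by_value)
```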

The advent of database-management systems such as IDMS could well bring some changes to humanities computing, but they are not easy to understand. The humanities user is almost always only interested in his results and it should be made as easy as possible for him to obtain these results. Work so far has shown that database users need a lot of assistance to start their computing but that there are considerable advantages in holding their data in that format. Experiments in Oxford with court records and with information about our own text archive have shown that the database avenue is worth exploring much further.

A number of packages exist for text-analysis applications, particularly concordances. At present the most widely used concordance package is COCOA which was developed at the SRC Atlas Computer Laboratory. COCOA has most of the facilities required to analyse text in many languages but its user commands are not at all easy to follow. In particular it has no inbuilt default system so that the user who wants a complete concordance of an English text must concoct several lines of apparent gibberish to get it. A new concordance package is now being developed in the Oxford laboratory which is intended to replace COCOA as the standard. It has a command language of simple English words with sensible defaults and incorporates all the facilities in COCOA as well as several others which have been requested by users. The package is being written in Fortran for reasons of compatibility. Fortran is the most widely used and widely known language in the academic world and it is hoped that a centre will be prepared to implement such a package even if it only has one or two potential users. To ensure machine independence, the package is being tested on ICL 2980, ICL 1906A, CDC 7600, IBM 370/168 and DEC 10 simultaneously. Tests will shortly be performed on Honeywell, Burroughs, Prime and GEC machines.
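For readers unfamiliar with the output of such a package, the core of a keyword-in-context concordance can be sketched in a few lines. This is a generic illustration in Python and does not reproduce the commands or algorithms of COCOA or of the new package; the function name concordance, the definition of a word as a run of letters and apostrophes, and the default context width are all assumptions.

```python
import re
from collections import defaultdict

def concordance(text, width=30):
    """Map each word (lower-cased) to its keyword-in-context lines."""
    entries = defaultdict(list)
    for m in re.finditer(r"[A-Za-z']+", text):
        left = text[max(0, m.start() - width):m.start()]
        right = text[m.end():m.end() + width]
        # Right-justify the left context so that the keywords line up.
        entries[m.group().lower()].append(f"{left:>{width}} {m.group()} {right}")
    # Return the headwords in alphabetical order.
    return dict(sorted(entries.items()))

sample = "The cat sat on the mat. The dog sat too."
conc = concordance(sample, width=15)
for word, lines in conc.items():
    print(word, len(lines))
    for line in lines:
        print("   ", line)
```

Sensible defaults of the kind mentioned above correspond here to calling concordance(text) with no further arguments and still obtaining a complete concordance.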

There are other concordance packages in the UK. CLOC, developed at the University of Birmingham, is written in Algol 68-R and has some useful features for studying collocations or the co-occurrence of words. CAMTEXT at the University of Cambridge is written in BCPL and uses the IBM sort/merge, and CONCORD, written in IMP at Edinburgh, is not unlike COCOA.

Other smaller text analysis packages are worth mentioning. EYEBALL was designed to perform syntactic analysis of English. It uses a small dictionary of about 400 common words in which it finds more than 50% of the words in a text. It then applies a number of parsing rules and creates a file containing codes indicating the parts of speech of each word. EYEBALL has a number of derivatives including HAWKEYE and OXEYE. All can give about 80% accurate parsing of literary English and higher for technical or spoken material. The only complete package for textual criticism is COLLATE, written in PL/1 at the University of Manitoba. This set of programs covers all the stages required for preparing a critical edition of a text, but it was written for a specific medieval Latin prose text and may not be so applicable to other material. Dearing's set of Cobol programs for textual criticism has proved difficult to implement on other machines. OCCULT is a Snobol program for collating prose text and VERA uses manuscript variants to generate a similarity matrix which can then be clustered by GENSTAT. One at least of the Latin scansion programs is written in Fortran and is in use in a number of universities.
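The step from manuscript variants to a similarity matrix can be illustrated as follows. The measure actually used by VERA is not stated here, so this sketch assumes the simplest one, the proportion of variant locations at which two manuscripts agree; the manuscript sigla, the readings and the function names are invented.

```python
# Invented data: for each manuscript, its reading at each of four variant locations.
readings = {
    "A": ["x", "y", "x", "z"],
    "B": ["x", "y", "y", "z"],
    "C": ["y", "x", "y", "z"],
}

def similarity(a, b):
    """Proportion of variant locations at which two manuscripts agree."""
    agree = sum(1 for x, y in zip(a, b) if x == y)
    return agree / len(a)

def similarity_matrix(readings):
    """Build a symmetric matrix of pairwise similarities as nested dictionaries."""
    names = sorted(readings)
    return {a: {b: similarity(readings[a], readings[b]) for b in names}
            for a in names}

matrix = similarity_matrix(readings)
for name, row in matrix.items():
    print(name, [row[other] for other in sorted(row)])
```

A matrix of this kind is the form of input that a clustering routine expects, which is why the clustering itself can be handed over to a general statistical package.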

Although Fortran is the most obvious choice of computer language for machine-independent software, it is not very suitable for text handling. Cobol is verbose and not widely used in the academic world and none of the Algol-like languages was designed specifically for handling text rather than numbers. Algol-68 has its advantages and supporters but it is not particularly easy for beginners to learn. The most convenient computer language for humanities research seems to be Snobol, particularly in the Spitbol implementation. It was designed specifically for text handling and with its patterns, tables and data structures it has all the tools most frequently needed by the text-analysis programmer. The macro Spitbol implementation written by Tony McCann at the University of Leeds and first available on 1900s has done much to enhance the popularity and availability of Snobol. It has also dispelled the belief, which arose from earlier interpretive implementations, that it is an inefficient language. But until there is a standard version of Snobol with procedures for tape and disc handling it is unlikely that it will ever have the use it deserves in text-analysis computing.

To sum up, then, there are ample facilities already available for the humanities researcher who wishes to use a computer. The software certainly exists to cover most applications. Even if the input and output devices leave something to be desired, it can be shown how much benefit can be derived from a computer-aided study.

Interest in this new method of research is now growing rapidly and it is to be hoped that soon all humanities researchers will take to computing as rapidly as their scientific counterparts.

[1] MOSTELLER, F., and WALLACE, D.L.: Inference and disputed authorship (Addison-Wesley, 1964)

[2] DILLIGAN, R.J., and BENDER, T.K.: 'Lapses of time: a computer-assisted investigation of English prosody', in AITKEN, A.J., BAILEY, R.W., and HAMILTON-SMITH, N. (Eds.): The computer and literary studies (Edinburgh University Press, 1973)