Supercomputers are the most powerful scientific computers available at any given time. They tend to be used mainly for the simulation and modelling of complex systems, and their applications in research cover physics, chemistry, biology, engineering and the environmental sciences. They are used increasingly in industry for simulation and design work and they have been used for many years in weapons design, defence-related areas, energy projects and weather forecasting.

Today's supercomputers have peak speeds of over 100 million instructions per second, a few million words of main storage, and they cost about £5M. Although expensive, they represent good value in terms of price/performance. In consequence some modern supercomputers are being used as more general purpose mainframes, particularly as more general purpose software becomes available.

There are now about 75 supercomputers in use worldwide, and there are two in the UK academic research community. Both were provided primarily to meet the growing batch processing requirements of the Regional Centres rather than as facilities for large scale research problems. Time on them for such problems is limited.

The next generation of supercomputers is gradually emerging on to the market and offers the potential of 10 or more times the power of today's machines at better price/performance.

This paper outlines the case for the provision of a next generation facility for academic research. Section 2 provides some background on UK academic research using supercomputers, and section 3 summarises work funded by SERC and NERC since 1979 which has used vector processing facilities. Section 4 discusses the limitations of present facilities and the advances a next generation facility could make possible. A summary and general comments are given in section 5.

UK academics have been exploiting supercomputers to advance scientific research for at least twenty years. The developments can be traced from the existence of strong schools of theoretical science in universities and research establishments in the 1950s, the emergence of computational science as a respectable discipline with vigorous British practitioners in the 1960s and 1970s, and the provision over the years by the Computer Board and SERC (or its predecessors) of a succession of supercomputers of their day (Atlas, IBM 360/195, CDC 7600s, Cray 1, Cyber 205).

As a result the UK has a broad base of successful activities in computational science. There is extensive cooperation between universities in the development of techniques and software for supercomputers (eg the SERC's nine Collaborative Computational Projects), and this has been helped in recent years by developments in networking. The success of the UK in providing a good environment for this type of computing has been commented on by individual scientists from the USA, and is noted in the attached report by Lax.

While it is clear that the provision of computer hardware and infrastructure alone cannot ensure a strong and thriving scientific community, it is also clear that the present relatively healthy state would not have arisen if the right hardware had not been available to scientists at the right time.

This section summarises work done on the Cray 1 computer which was installed at Daresbury from mid 1979 until its transfer to ULCC in 1983. A more detailed account can be found in Some Research Applications on the Cray 1 Computer at Daresbury Laboratory 1979-1981 available from Daresbury Laboratory on request.

The machine was used by about 100 groups, mainly funded by SERC but with substantial use by NERC. All the SERC work was authorised via the peer review system. Some NERC work was on contracted projects. From 1979-1981 SERC/NERC had the use of up to 40 cpu hours per week, and from 1981 60 hours per week. By 1983 the demand for time exceeded supply.

The following table shows usage in the main areas of work:

| Category | CPU Time (Cray Hours) | Grants |
|---|---|---|
| Protein Crystallography | 351 | 13 |
| Molecular Dynamics | 156 | 9 |
| Solid State | 389 | 21 |
| Quantum Chemistry | 313 | 18 |
| Atomic & Molecular Physics | 179 | 9 |
| Plasma Physics | 15 | 4 |
| Neutron Beam | 18 | - |
| Laser | 94 | - |
| Engineering | 123 | 13 |
| Astronomy | 172 | 16 |
| Nuclear Physics | 102 | 6 |
| NERC | 210 | 18 |
| Total | 2122 | 127 |

The above figures exclude time used under the auspices of the University of London Computer Centre.

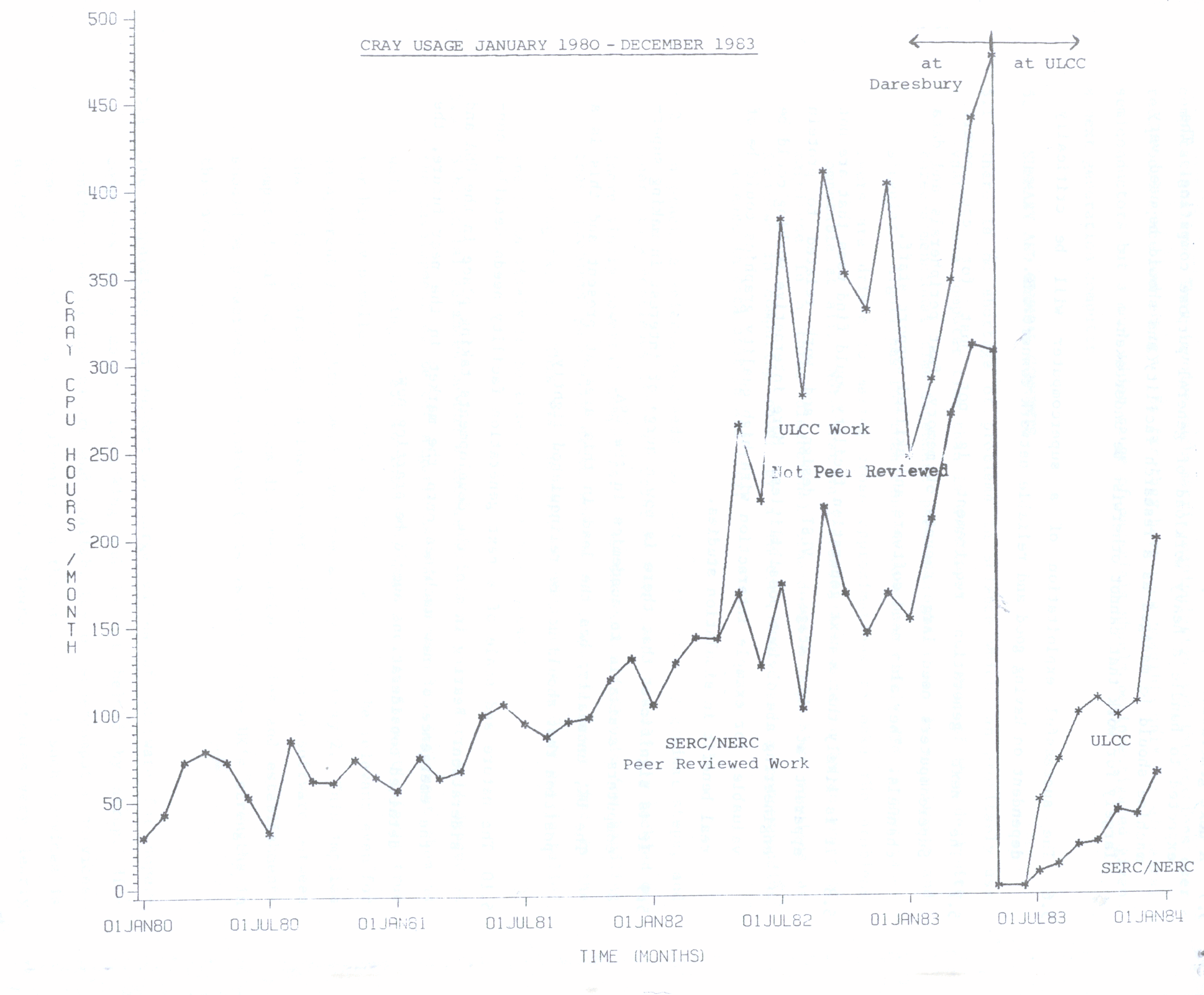

Figure 1 shows the use of the Cray since the beginning of 1980.

Much of the work was a natural development of work that had previously been undertaken on less powerful machines. The advances made possible by the use of the Cray include:

Although the applications of supercomputers cover a broad range of science the techniques used fall into four main areas. The following paragraphs summarise their use, the ways in which present facilities limit their exploitation and the advances that could be made if more computer power were available.

Many physics and engineering applications require the solution of the differential equations of a field over a spatial and temporal mesh - examples are hydrodynamics, plasma physics and electromagnetics. The main limitation arises from the need to compromise between having a mesh sufficiently fine to register any fine structure inherent in the problem and the explosive growth in computer time required as the mesh size shrinks. In some cases the dimensionality of the model has had to be reduced. In the oceanographic studies done on the Cray only a two-dimensional representation could be used in modelling tidal flow in the North East Atlantic.

Particle methods are used in simulations in which the equations of motion of a large number of particles coupled by their mutual interaction (rather than a continuum field) are integrated in discrete time steps. The more particles that can be considered, the better the simulation in general, and the better the treatment of noise effects. Examples are found in plasma physics, molecular dynamics and astrophysics in which 10^5 particles are integrated over several thousand timesteps. This can take about 4 hours of Cray cpu time and covers only a small part of the time interval of interest. Again, the dimensionality of models has had to be reduced. In astrophysics only a few mesh points could be afforded in the third spatial dimension, thereby restricting the studies to disc galaxies.
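As an illustration of what integrating particle equations of motion in discrete time steps involves, the following is a minimal sketch in Python. It assumes a two-dimensional gravitational system in units where G = 1, a softened direct pairwise sum for the forces, and a kick-drift-kick leapfrog integrator. These are illustrative choices, not the schemes used on the Cray; the reference to mesh points above suggests that the production codes computed forces on a mesh rather than by direct summation. The names `accelerations`, `leapfrog`, `total_momentum` and the `soft` parameter are hypothetical.

```python
import math


def accelerations(pos, mass, soft=0.05):
    """Softened gravitational accelerations by direct summation (G = 1).

    The double loop visits every pair once, so the cost per time step
    grows as N^2 for N particles.
    """
    n = len(pos)
    acc = [[0.0, 0.0] for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            dx = pos[j][0] - pos[i][0]
            dy = pos[j][1] - pos[i][1]
            r2 = dx * dx + dy * dy + soft * soft
            inv_r3 = 1.0 / (r2 * math.sqrt(r2))
            # Equal and opposite forces on the two particles of the pair.
            acc[i][0] += mass[j] * dx * inv_r3
            acc[i][1] += mass[j] * dy * inv_r3
            acc[j][0] -= mass[i] * dx * inv_r3
            acc[j][1] -= mass[i] * dy * inv_r3
    return acc


def leapfrog(pos, vel, mass, dt, steps):
    """Advance positions and velocities in place by `steps` time steps."""
    acc = accelerations(pos, mass)
    for _ in range(steps):
        for i in range(len(pos)):
            for k in range(2):
                vel[i][k] += 0.5 * dt * acc[i][k]  # half kick
                pos[i][k] += dt * vel[i][k]        # drift
        acc = accelerations(pos, mass)
        for i in range(len(pos)):
            for k in range(2):
                vel[i][k] += 0.5 * dt * acc[i][k]  # second half kick
    return pos, vel


def total_momentum(vel, mass):
    """Total linear momentum, a useful conservation check."""
    px = sum(m * v[0] for m, v in zip(mass, vel))
    py = sum(m * v[1] for m, v in zip(mass, vel))
    return px, py
```

With direct summation the work per step grows as the square of the particle number, which is one reason large particle counts are usually handled with mesh-based force calculations instead.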

Monte Carlo methods are used to sample systems with many degrees of freedom in order to carry out multi-dimensional integrals. The better the sampling, the better the statistics, so cpu power is the main requirement. Studies on the Cray included the configurationally averaged properties of liquids and solids, and the statistical mechanics of quantum systems. The studies have been useful but were limited by cpu power in the sampling and in the complexity of interaction it has been possible to include. More cpu power would enable these limits to be pushed back and the scope of the computations to be broadened. The methods also have application in quantum chromodynamics to calculate hadronic masses, nucleon magnetic moments, etc.
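The link between sampling and statistics can be made concrete with a small sketch of random-sampling (Monte Carlo) integration over a unit hypercube. It assumes plain uniform sampling with Python's standard `random` module and a toy integrand; the function names `mc_integrate` and `sum_of_squares` are hypothetical, and the studies described above would have used far more elaborate sampling schemes.

```python
import math
import random


def mc_integrate(f, dim, n_samples, seed=0):
    """Estimate the integral of f over the unit hypercube [0, 1]^dim.

    Returns (estimate, standard_error). The standard error falls only
    as 1/sqrt(n_samples), whatever the dimension.
    """
    rng = random.Random(seed)
    total = 0.0
    total_sq = 0.0
    for _ in range(n_samples):
        x = [rng.random() for _ in range(dim)]
        value = f(x)
        total += value
        total_sq += value * value
    mean = total / n_samples
    variance = max(total_sq / n_samples - mean * mean, 0.0)
    return mean, math.sqrt(variance / n_samples)


def sum_of_squares(x):
    """Toy integrand: its exact integral over [0, 1]^d is d / 3."""
    return sum(xi * xi for xi in x)


if __name__ == "__main__":
    for n in (5_000, 20_000, 80_000):
        estimate, error = mc_integrate(sum_of_squares, dim=6, n_samples=n)
        print(n, estimate, error)
```

Because the statistical error falls only as the inverse square root of the number of samples, halving the error requires about four times the cpu time, which is why such calculations are limited mainly by cpu power.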

In many cases the eigenvalue and scattering problems in quantum mechanics are handled in a basis set representation and the effective manipulation of large matrices is vital. The move to larger scale problems involves larger matrices, and the cpu time for matrix multiplication scales as N^3, where N is the order of the matrix. There is an associated increase in the requirements for main storage, backing store and I/O rates.
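The cubic growth in cpu time can be seen by counting the operations in a straightforward matrix product. The sketch below assumes dense square matrices held as Python lists and the textbook triple-loop algorithm; `matmul_counted` is a hypothetical helper written for illustration and is not taken from any of the codes discussed here.

```python
def matmul_counted(a, b):
    """Multiply two N x N matrices and count the multiply-add operations.

    Returns (product, operation_count). The triple loop performs exactly
    N^3 multiply-adds, while the matrices themselves need N^2 storage.
    """
    n = len(a)
    c = [[0.0] * n for _ in range(n)]
    ops = 0
    for i in range(n):
        for k in range(n):
            a_ik = a[i][k]
            for j in range(n):
                c[i][j] += a_ik * b[k][j]
                ops += 1
    return c, ops


if __name__ == "__main__":
    for n in (4, 8, 16):
        zeros = [[0.0] * n for _ in range(n)]
        _, ops = matmul_counted(zeros, zeros)
        print(n, ops)  # doubling n multiplies the count by 8
```

Doubling the order of the matrix therefore multiplies the arithmetic by eight but the storage by only four, so cpu time tends to become the limiting resource first.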

In quantum chemistry it is now possible to use Hartree-Fock methods to determine potential energy surfaces of molecules of pharmacological significance, and to apply the direct configuration interaction method to compute accurate correlated wavefunctions for small and medium sized systems. The need now is to undertake more accurate treatment of pharmacological molecules, to do accurate large scale surveys of potential energy surfaces for use in more realistic molecular dynamics calculations, molecule-molecule collisions and chemical reaction studies, and to include relativistic effects in molecules. This is not feasible on present day supercomputers.

The interactions of electrons and photons with atoms, ions and molecules have been studied via calculations of cross-sections for electronic and vibrational excitations and ionization. The calculations have applications in stellar and solar spectra, fusion plasmas, gas lasers and to experimental work at the SRS. With the Cray 1 it has been possible to treat accurately atoms and ions as heavy as Fe, and diatomic molecules. A next generation machine would enable studies of heavier atoms involving relativistic effects and an extension of scattering calculations to polyatomic molecules. It would make possible the study of electron impact ionization and double ionization. Extensive diagonalisation and inversion of matrices of order several thousand is involved.

In solid state physics the calculations on the Cray 1 of interatomic forces in metals and semiconductors were limited to the most symmetric departures from equilibrium atomic positions and to the simplest defects. A more powerful facility would enable work to start on the investigation of properties of metallurgical interest such as strength, the relationship between electronic structure and properties like ordering in alloys and the magnetic structure of transition elements and rare earths, and the effect of lattice relaxation around an impurity on the electronic properties of materials.

Many-body calculations using Green function techniques are very demanding computationally. They involve much matrix manipulation and integrations over reciprocal space and energy. So far they have been possible only for elemental semiconductors but a much wider range of materials should be investigated given a next generation computer.

There is no shortage of problems in science which can profitably use vastly more computer time than is available now. Most of them deal with three-dimensional time-dependent phenomena which may be non-linear and singular. Algorithms for them tend to converge slowly and in some cases users are driven to making gross approximations to make the problems computable at all. Note that an increase in resolution by an order of magnitude in a three dimensional time-dependent problem requires an increase in computing power by a factor 10000 (and a corresponding increase in data storage and I/O).
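The factor of 10000 follows from simple arithmetic, sketched below. The sketch assumes that the cost is proportional to the number of points in space and time, and that the time step must shrink in proportion to the mesh spacing, as is typical of explicit schemes. The function name `cost_factor` and its parameters are hypothetical.

```python
def cost_factor(refinement, spatial_dims=3, time_dependent=True):
    """Growth in computing work when resolution improves by `refinement`.

    Assumes work is proportional to the number of space-time points and
    that the time step is refined by the same factor as the mesh spacing.
    """
    exponent = spatial_dims + (1 if time_dependent else 0)
    return refinement ** exponent


if __name__ == "__main__":
    print(cost_factor(10))                        # 10000
    print(cost_factor(10, time_dependent=False))  # 1000
    print(cost_factor(2))                         # 16
```

Even a modest doubling of resolution in such a problem costs a factor of 16 under these assumptions, which is comparable to the gain expected from a whole new generation of machine.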

In practice science proceeds for most of the time by gradual steps, and there is a continuous range of problems that could be usefully tackled on increasingly powerful computers. The theoretical methods for handling these problems generally exist and it is computing power that is the limiting factor. This was the case in 1979 when the Cray was installed and it is likely to be true for many years ahead.

Substantial advances beyond a factor 10 in the short term will depend on the development of better algorithms and software to implement them as well as on having access to faster hardware. This is particularly true for highly parallel architectures, but it would also be relevant even for an apparently straightforward move from a Cray 1 to a Cray 2. This implies that existing arrangements that foster collaboration on software between computational scientists from different universities and establishments should be preserved and enhanced (this is one of the UK's strengths in this area).

The preparation and support of large suites of software for supercomputers is a major undertaking and is not helped by abrupt discontinuities in the nature and availability of supercomputer services. Some degree of long term planning in the provision of such services is needed if they are to be successful. Figure 1 illustrates very clearly the disruption to scientific programmes caused by the relocation of the Cray in 1983.

A next generation supercomputer for academic research should not be expected to handle a heavy workload of general purpose computing as well. The machine should be managed as a research facility and should be used very largely for work that cannot otherwise be undertaken.

The successful exploitation of a supercomputer will be critically dependent on having good and reliable network connections.

The next generation requirement is not just for cpu power. Supercomputers need large amounts of memory, fast peripherals and data channels. They also need software and skilled support staff.

It is likely that a next generation facility would find uses that are not apparent at this stage. VLSI design and work related to protein engineering are obvious possibilities. More interactive working could be valuable, for example interaction with high quality graphics could be of real benefit in simulation studies.

It is significant that there is now a surge of interest in making supercomputers available to academics in the USA. The UK, unusually, has the lead in this area at present and this is a position that should not be relinquished lightly.

The nature and scale of a next generation facility needs detailed consideration. Bearing in mind the developments taking place in the USA and the emergence of new machines onto the market in the near future, the detailed considerations should be starting now.