The development of rich user-oriented interactive graphics systems has been hindered by the lack of an adequate conceptual framework for characterizing graphical input and interaction. In surveying recent work, two prevalent notions, the desirability of separating graphical input from graphical output, and the ability of any one physical device to simulate another, are emphasized; misinterpretation and misapplication of these notions are criticized. The output and input technologies, the partners in the dialogue, and the dialogue's content and context are presented as the building blocks of interactive dialogues. It is shown how these building blocks can be used to construct a number of appropriate solutions to a typical problem in graphical interaction. Outstanding solutions to some other problems are also presented. The paper concludes with a sketch of a new framework for graphical input which appears to facilitate the construction of rich interactive systems, and with some suggestions for further research.

What is graphical interaction? Funk & Wagnalls Standard College Dictionary defines interaction as "action on each other." The Second College Edition of Webster's New World Dictionary of the American Language adds the phrase "reciprocal action or effect," where reciprocal is defined as "done, felt, given, etc., in return." Thus graphical interaction is a set of actions of a computer graphics system and its user on each other. The user provides input to the system via a set of devices; the system provides output to the user via a set of displays. These inputs and outputs must be reciprocal, that is, they must be related to one another. Graphical interaction is thus a succession of interrelated actions and reactions.

The purpose of graphical interaction is to facilitate the use of a computer, to enhance the user's powers to accomplish or to create. Towards this end, interactive graphics systems should be user-oriented and they should be rich. User-oriented design of interactive graphics systems has been explored in a recent workshop of that name [Treu 78]. The term rich has many meanings, including, according to Webster's, "... sumptuous ... full ... deep ... abundant ... plentiful ..."

However, abundance without taste and balance results in systems that are bloated and unmanageable. Consider the differences between INTERLISP and PL/I, a symphony orchestra and a convention of high school bands, or a modern state dinner and a medieval feast.

The most far-reaching and technically sound vision of the potentialities of interactive computer graphics is that of Alan Kay. He calls his dream of a dynamic medium for creative thought the Dynabook:

Imagine having your own self-contained knowledge manipulator in a portable package the size and shape of an ordinary notebook. Suppose it had enough power to outrace your senses of sight and hearing, enough capacity to store for later retrieval thousands of page-equivalents of reference material, poems, letters, recipes, records, drawings, animations, musical scores, waveforms, dynamic simulations, ... There should be no discernible pause between cause and effect. (One of the metaphors we used when designing such a system was that of a musical instrument, such as a flute, which is owned by its user and responds instantly and consistently to its owner's wishes. Imagine the absurdity of a one second delay between blowing a note and hearing it!) ... We would like the Dynabook to have the flexibility and generality of this second kind of item [items such as paper and clay], combined with tools which have the power of the first kind [items such as cars and television sets] ...

Instruments and tools are two of the metaphors that shed light on the desirable properties of interactive graphics systems. Vehicles, games, and interpersonal dialogues are some other useful metaphors.

As Kay notes, good instruments provide immediate feedback to their user. Instruments must be crafted with care and precision - consider the importance of the balance and feel of a violin. They must be re-engineered as components and techniques evolve and change - consider the relationship of the harpsichord to the clavichord to the piano, a progression characterized by changes in the striking or plucking mechanism.

Kay has noted the importance of tools having power, flexibility, and generality. Tools should be as adaptable as possible - the Swiss Army Knife is a tool of remarkable versatility. They must be robust, that is, resistant to user error - consider the unsuitability of a saw which removes a hand from its user when it slips.

Vehicles must respond sensitively - consider the importance of the steering of an automobile. They must respond repeatably - otherwise how could one land an airplane? They must provide adequate feedback - consider the multi-dimensional display of an airplane cockpit. They must be appropriate for the journey - consider bicycling up Mt. Everest.

Some of the best interactive graphics systems are games. Games must be engrossing, captivating, even joyous - otherwise players will lose interest. They must have rules or structure - otherwise players will lose patience or confidence. They should challenge the players, encouraging mastery and growth.

Finally, interpersonal dialogues have many attributes which the designers of person-machine dialogues seek to emulate [Nickerson 76]. Human responses are appropriate, based on intelligent processing of messages from the other person. Human responses are sensitive, based on interpretations of subtle clues in the behavior of the other person. Human dialogue is multi-dimensional and multi-level, allowing communication to proceed along a number of paths, and threads of conversation to be started and restarted at will.

Interactive graphics systems can be instruments for expression or measurement, tools to cause effects, vehicles for exploration, games for challenge or amusement, and media for communication. They can be sumptuous and abundant rather than spartan and boring. They can entice and delight rather than oppress and shackle. How does recent research serve these ends?

There have been five major streams of recent research relevant to graphical input and interaction. One stream has attempted to define architectures for graphics programming systems that would facilitate the construction of graphics application programs [Newman 74; Core 77]. A second stream has attempted to define a set of standards for graphics programming languages [Core 77]. A third stream has investigated various methodologies for graphical input programming. These include the embedding of an input sublanguage into a graphics programming language [Rovner 68], and the development of an independent control-oriented language [Newman 66,70,73; Kasik 76]. A fourth stream has proposed various sets of graphic input primitives or virtual devices [Newman 68; Cotton 73; Foley 74; Wallace 76; Deecker 77]. Finally, the fifth stream has concentrated on the effective human engineering of interactive computer systems in general and graphics systems in particular [Martin 73; Foley 74; Treu 76].

Space precludes our presenting the results of this work in detail. Instead we shall focus on two themes that pervade most current work. One theme is that of the need for separating the graphical input and output processes. The other theme is that of simulating one virtual device by any other. These two themes incorporate useful ideas, but taken to extremes they lead to misinterpretations which can hinder the development of rich user-oriented interactive graphics systems.

Recent work, motivated by the desire for device-independent, general-purpose graphics languages, and by the desire for computer graphics standards, has stressed the need for the separation of the graphical input and output processes. A strong case can be made for this point of view. Arguments include the virtues of orthogonal descriptions of complex processes, the enhancement of program portability, and an increased ability to substitute one device for another. Newman and Sproull, for example, write in [Newman 74]:

...meddling with the output process should be avoided in the interests of device independence, even if it requires pointing by software or doing without spectacular dynamic input techniques...

The Status Report of the Graphic Standards Planning Committee of ACM/SIGGRAPH, known as the Core Report [Core 77], states quite definitively:

The conceptual framework of the Core System treats input and output devices as orthogonal concepts.

Thus recent work in graphical interaction has attempted to formalize a set of primitives for graphical input, viewed as a process separate from the graphical output process. I believe that these formalisms fail to describe the variety and richness of interaction possibilities. As Newman and Sproull imply, we must tamper with the output process if we are to achieve spectacular dynamic input techniques. Why is this so?

Architecturally, graphics systems come in four flavors - stand-alone single-user graphics machines, time-shared graphics systems, single-user graphics satellites, and time-shared graphics satellite systems. In a stand-alone single-user machine, immediate feedback to graphical input can be achieved by following a call to an input primitive with the code to generate the appropriate output. Since all system resources are available for this task, response time is limited only by the available computational bandwidth and the characteristics of the display device. However, in the other kinds of graphics systems, there may be arbitrary delays between the execution of one primitive function and the execution of the next one. This may be due to the competition of other users in time-sharing, or to the complexities of host-satellite communication in remote graphics. Thus, to guarantee the integrity and responsiveness of feedback to interactive input, we must encapsulate appropriate modifications to the display file as part of the input process, despite the device dependence and the harmful effect on portability this creates.

Work on the Core System is typical of another recent trend in the study of input and interaction. The Core Report states in [Core 77]:

The basic goal of the Core System input primitives is to provide a framework for application program/operator interaction which is independent of the particular physical input devices that are available on a given configuration.

The primitives chosen are based most directly on the work of Foley and Wallace [Foley 74], work which is summarized in [Wallace 76] as follows:

All input devices for interactive computer graphics can be effectively modelled in programs by a small number of virtual input devices. By specifying an appropriate set of such virtual devices, the semantics of interactive input can be defined independently of the physical form of the devices. In the service of program portability, human factors adaptability, economy, and maximal use of terminal capability, a sufficient set of virtual devices is described.

In their earlier paper [Foley 74], Foley and Wallace also wrote:

Most important from this paper's viewpoint is the attendant flexibility of interchanging one physical device for another, thus facilitating easy experimentation and optimization of those interaction language aspects relating to visual and tactile continuity. While any physical device can be used as any one or more virtual devices, such experimentation is necessary because physical devices are not necessarily psychologically equivalent and interchangeable. To be sure, they can be substituted, but often at the cost of additional user compensation and self-training, which increases the psychological distance between man and computer. Such experimentation, which we call human factors fine tuning, would not be necessary were the design of interaction languages an exacting science rather than somewhat of an art form. But it is not...

The fact that any virtual device can be simulated by any physical device does not imply that such a simulation will produce an interactive system which is congenial or effective. On the contrary, one cannot construct rich user-oriented graphical interfaces "independently of the physical form of the devices." If one input device is substituted for another, then we must, in general, redesign all aspects of the interactive dialogue to provide the most effective interface possible.

Consider an example taken from [Foley 74]. Foley and Wallace argue that one can simulate a light pen (pick) by successively brightening all displayed entities and noting when buttons are pushed. Yet this method is so cumbersome that it destroys the simplicity and beauty of light pen picking - pointing directly at an item, and instantaneously moving the hand to select an item through its relationship to the entire visual field, rather than by its name or description. Surely one would restructure a system to allow selection in terms of a name or a property rather than accept the awkwardness of this kind of simulation.
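To make the cost of this simulation concrete, here is a minimal Python sketch of pick by successive brightening. The function `simulated_pick` and its `button_pressed` callback (a stand-in for the user watching the display) are hypothetical illustrations, not code from [Foley 74]. The sketch shows that the number of highlight steps grows with the number of displayed items, whereas a real light pen selects any item in a single gesture.

```python
from typing import Callable, Optional, Sequence, Tuple


def simulated_pick(
    items: Sequence[str],
    button_pressed: Callable[[str], bool],
) -> Tuple[Optional[str], int]:
    """Simulate a light-pen pick by brightening each displayed item in turn.

    Returns the item that was highlighted when the user pressed the button
    (or None if the user never did) and the number of highlight steps taken.
    """
    steps = 0
    for item in items:
        steps += 1
        # A real system would brighten `item` here and wait a fixed dwell
        # time for a button push; `button_pressed` stands in for the user.
        if button_pressed(item):
            return item, steps
    return None, steps


# Item names taken from the page shown in Figure 1.
page = ["winter", "carlos", "monkey", "trudeau", "amin"]
print(simulated_pick(page, lambda item: item == "amin"))  # ('amin', 5)
```

Selecting the last item on the page costs one dwell period per item; on a crowded display the delay quickly becomes intolerable.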

Another example is implicit in the Core Report. Consider the simulation of the keyboard by a tablet (locator). A few characters of input can be handled by a trainable character recognizer. But if the original program were heavily keyboard oriented, and the only input device were a tablet, then the correct approach would be to redesign the user interface rather than rely on an awkward and cumbersome simulation.

A third example concerns dragging, which Newman and Sproull present as a primitive and Wallace presents as a nonprimitive virtual device. The essential feature of dragging is the ability it gives the user to set the position of an object, and to make slight instantaneous adjustments based on its relationship to the visual scene. Any simulation of this capability with keyboards or buttons would be so crude as to make the result unrecognizable.

A final example deals with inking, which Newman and Sproull present as a primitive and Wallace presents as a nonprimitive virtual device. Imagine the absurdity of trying to ink or sketch with a keyboard or a button box.

Thus the search for simple methods of device simulation and substitution distracts us from the goal of crafting good user interfaces. It distracts us from studying the very difficult relationships among display technology, computational bandwidth, device kinesthetics, and response time. It distracts us from the goal of interface optimization and suggests that we instead construct lowest-common-denominator interfaces that will function anywhere. Although portability is facilitated by device independence, interactivity and usability are enhanced by device dependence.

Another way of expressing this is as an example of the strength vs. generality issue. We are not arguing that one should never build weak, general, portable systems, but only that there are often sizeable advantages in building strong, specific systems.

One other weakness of current attempts to formalize graphical interaction is the failure to incorporate time and the passage of time into the formalism. Foley and Wallace correctly describe boredom, panic, frustration, confusion, and discomfort as psychological blocks that often prevent full user involvement in an interaction. Appropriate response time is obviously an important factor in avoiding these blocks. Any interactive system designer or user knows how critical response time is. So why does response time not appear anywhere explicitly in our theories or descriptions of graphical interaction?

This paper now continues with a discussion of interactive dialogues, and an attempt to isolate their building blocks. We then present a collection of examples that illustrate the variety and richness of graphical interaction possibilities. These examples have all been invented in our laboratory in the last three years, although some may be similar to others invented in other laboratories. Our presentation will attempt to describe the range of possible solutions to each problem, and to explain why certain solutions are effective and others are ineffective. These explanations will usually make reference to the appropriateness of the graphical output for the particular graphical input, the response time for this output to appear, and the characteristics of the real physical device which is used for the input.

From these examples we shall try to elicit a set of characterizations of good interactive interfaces and the mechanisms and principles that underlie their construction. These principles and characterizations will then be used to provide some guidelines to the builders of graphics programming systems and some cautions to those attempting standardization. The paper concludes with a sketch of a framework for graphical input, and some suggestions for future research, both of which are rooted in extensive experience in implementing and evaluating a variety of interactive systems.

An interactive dialogue is a sequence of messages between a person and a machine. The person's messages are transmitted via a set of input devices; the machine's messages are transmitted via a set of displays. The nature of the dialogue is thus determined by the display technology, the input technology, the two partners in the dialogue (the person and the machine), and the content and context of the dialogue. Let us look at each of these factors in turn.

Most computer graphic displays belong to one of four classes: line-drawing refreshed devices, line-drawing storage devices, raster-oriented refreshed devices (digital video displays), and raster-oriented storage devices (such as plasma panels). Line-drawing refreshed devices allow the most dynamic form of interaction because subpictures can usually be inserted, deleted, and moved instantaneously. Line-drawing storage devices, such as the ubiquitous Tektronix, severely limit interaction style because they are not selectively eraseable. The plasma panel is selectively eraseable, but individual raster points cannot be updated very quickly. Digital video displays are selectively eraseable, and allow rapid updating of individual points, although current architectures do not facilitate the modification of subpictures.

There is an even greater diversity of input devices. Here are a few examples. Keyboards produce characters or character strings. Button boxes produce discretely varying values. Light pens produce pointers to subpictures. Thumbwheels produce continuously varying values. Tablets and mice produce pairs of continuous values. Some devices produce interrupts, defining events; others must be polled. All have their kinesthetic strengths and weaknesses; all can be designed and manufactured well or poorly, and can be comfortable or cumbersome to use. We shall expand on various device characteristics in the discussion that follows.

Between the input action and the displayed response there is a machine computation. Characteristics of the machine thus also affect the nature of the dialogue. By response latency we mean the time between the cessation of an action by the user and the initiation of a reaction by the system. This can include both time for the CPU to shift its attention to the input action, as in a time-shared graphics system, and the time to carry out whatever analysis is required before initiating a response. The response may occupy some length of time, and so by response time we mean the time between the cessation of the action and the completion of the reaction. Both response parameters depend upon the effective computational bandwidth with which the CPU can analyze the input action and produce an appropriate picture in response.
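The two response parameters can be pinned down as differences between three instants. The following Python sketch is only an illustration of the definitions above; the class name `Interaction` and the timestamps in the example are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Interaction:
    """Timestamps (in seconds) for one action/reaction pair."""
    action_end: float      # the user's input action ceases
    reaction_start: float  # the system begins its displayed reaction
    reaction_end: float    # the displayed reaction is complete

    def __post_init__(self) -> None:
        if not (self.action_end <= self.reaction_start <= self.reaction_end):
            raise ValueError("timestamps must be in causal order")

    @property
    def response_latency(self) -> float:
        # Includes any wait for the CPU's attention plus analysis of the input.
        return self.reaction_start - self.action_end

    @property
    def response_time(self) -> float:
        # Latency plus however long the reaction itself takes to complete.
        return self.reaction_end - self.action_end


step = Interaction(action_end=10.0, reaction_start=10.25, reaction_end=10.75)
print(step.response_latency, step.response_time)  # 0.25 0.75
```

Response time is never smaller than response latency; the two coincide only when the reaction is instantaneous.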

Also significant are characteristics of the user, such as those described in [Martin 73]. These include the intelligence of the user, whether he is a regular or casual user of the system, whether he is highly trained or not, whether he is strongly motivated or alienated, whether he is active or passive, and whether he is the ultimate user of the system or merely an intermediary.

Finally, it is important to consider both the content and the context of the dialogue in designing appropriate graphical interaction. Tasks requiring linear strategies which must be undertaken in real-time, such as air traffic control, impose different constraints than those which involve non-linear strategies with much backup, shifting of levels, and changing of points of view, such as computer-aided design. The input of complex curved shapes may be a very different problem depending upon whether they represent free-hand cartoon characters, borders extracted from heart X-rays, or features of maps. An emergency recovery sequence in a process control graphics system controlling a power grid will impose different requirements than a graphic simulation in a computer-aided instruction environment.

The design of interactive dialogues in general and graphical dialogues in particular is an art. I prefer to describe this art as that of crafting a user interface. Some of the influences that play a role in this process have been described above. Now let us illustrate the process with a detailed case study.

Consider the problem of positioning or repositioning an object - a deceptively simple task. There are a great number of different solutions that are appropriate under various circumstances.

Solutions fall into two categories: symbolic and demonstrative. Symbolic solutions are those in which we provide a description of the object's desired position in a symbolic language. Demonstrative solutions are those in which we indicate the object's desired position by means of direct action on or modification of the picture.

With a symbolic solution, we explicitly or implicitly specify expressions which when evaluated define the object's X and Y coordinates. One method is to input directly the desired coordinates, e.g., X=250 and Y=500. Another method is to input the desired change in coordinates, e.g., add 50 to the X coordinate (i.e., move it to the right). A third method is to describe the position in terms of the position of another object or objects, e.g., place it 50 units to the right of object A and at the same height as object B.
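The three symbolic methods can be written as three small functions over an object's coordinates. This Python sketch is illustrative only: the names `Obj`, `set_absolute`, `move_by`, and `place_relative`, and the positions chosen for objects A and B, are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Obj:
    x: float
    y: float


def set_absolute(obj: Obj, x: float, y: float) -> None:
    """Method 1: give the desired coordinates directly."""
    obj.x, obj.y = x, y


def move_by(obj: Obj, dx: float = 0.0, dy: float = 0.0) -> None:
    """Method 2: give the desired change in coordinates."""
    obj.x += dx
    obj.y += dy


def place_relative(obj: Obj, right_of: Obj, gap: float, level_with: Obj) -> None:
    """Method 3: describe the position in terms of other objects."""
    obj.x = right_of.x + gap
    obj.y = level_with.y


a, b, obj = Obj(100, 40), Obj(0, 700), Obj(0, 0)
set_absolute(obj, 250, 500)   # X=250, Y=500
move_by(obj, dx=50)           # add 50 to X: obj is now at (300, 500)
place_relative(obj, right_of=a, gap=50, level_with=b)
print(obj)                    # Obj(x=150, y=700)
```

Only the third method depends on other objects, which is what lets a symbolic language express relationships that would be hard to judge by eye.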

With demonstrative solutions, we drag objects, adjust markers or pointers, or construct simple sketches which convey our intentions without the direct use of numbers or symbols. In a sketching system, we can deposit drops of ink with a free-hand motion of a stylus. In a graphic design system, we can grab hold of and move a particular design element until it can be seen to be touching another element in the appropriate manner. In a newspaper page layout system, we can drag rectangular areas representing half-tone pictures in a continuous motion vertically and in a discrete motion horizontally, jumping from one column of the simulated page to another. In a circuit design system, we can position circuit elements both according to a grid of possible positions and according to a perceived visual relationship with adjacent elements.

Symbolic specification is ideal for a keyboard device. We can type statements of the form X15=250, which sets the X coordinate of object 15 to 250, or X15=XA+50, which positions object 15 at 50 units to the right of object A. We can simulate such actions with other devices, but only with the aid of appropriately rapid modifications of the output process. For example, using a refreshed display and a tablet, we can drag object 15 to its intended destination, but we can position it exactly only if we can display its position in character string format, only if we can update this display quickly enough that the user's hand hasn't moved before he reads the position, and only if the updating is regular enough for him to relax and rely on it. If we have a digital video display and a tablet, still another solution is required - the user would indicate the desired position with one or more pen strokes, and only then would the system move the object.

Demonstrative specification is ideal for a device such as a mouse or a tablet. We can make sketches containing positional information or drag objects to positions, adjusting our hand movement on the basis of displayed feedback. But the nature and effectiveness of the feedback depends upon the display technology and the system's response capabilities. To allow sketches, the system must echo the information from the tablet onto the display. To allow dragging, the system must move objects to keep up with the user's hand movements, which is impossible with most digital video displays, and may be difficult even with some refreshed display architectures. To allow dragging under constraints, as in a newspaper page layout system, the system must compute the effect of the constraints in synchrony with the hand movements.

For the most part, demonstrative specifications cannot be properly simulated with a keyboard or a button box. Inking becomes impossible. So does unconstrained dragging. Constrained dragging (as in the newspaper layout case where objects are moved from column to column) could be achieved by a series of keystrokes or button pushes, but may be awkward.

Interaction techniques cannot be routinely transferred from device to device. They must be crafted anew for each combination of input and output technology and system response capabilities. As an example, consider the newspaper page layout problem of moving a rectangle which represents a picture. It is constrained to column boundaries horizontally, can move freely vertically, and in both cases is not allowed to overlap or pass through items already existing on the page. (See Figure 1.)

Figure 1. A typical display from the NEWSWHOLE newspaper page layout system. The left two thirds of the display represents a newspaper page, divided into nine columns. Three articles, labelled winter, carlos, and monkey, are on the page, as are two pictures, labelled trudeau and amin.

Case 1: Tablet, refreshed display, high computational bandwidth, low response latency.

The solution is similar to that used in the NEWSWHOLE newspaper page layout system [Tilbrook 76; Baecker 76]. Move the tracking symbol into the center of the rectangle representing the picture. Select that picture by depressing a button on the cursor, then drag the rectangle with the button depressed. It jumps from column to column horizontally. It moves smoothly and continuously vertically. It stops when it runs into obstacles.
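One tracking update of this constrained drag might look like the following Python sketch. It is not the NEWSWHOLE code: the column width, the `Rect` representation, and the function `drag_step` are assumptions made for illustration. Horizontal motion is quantized to columns, vertical motion is continuous, and a position that would overlap an existing item is refused, so the picture stops. For simplicity it tests only the new position, not the path swept between samples, so a very fast hand movement could jump over a thin obstacle; a fuller version would also test intermediate positions.

```python
from dataclasses import dataclass
from typing import Iterable

COLUMN_WIDTH = 10.0  # hypothetical page units per column
NUM_COLUMNS = 9      # the page in Figure 1 has nine columns


@dataclass(frozen=True)
class Rect:
    col: int        # leftmost column occupied
    ncols: int      # width, in columns
    y: float        # bottom edge
    height: float

    def overlaps(self, other: "Rect") -> bool:
        horizontal = self.col < other.col + other.ncols and other.col < self.col + self.ncols
        vertical = self.y < other.y + other.height and other.y < self.y + self.height
        return horizontal and vertical


def drag_step(current: Rect, hand_x: float, hand_y: float,
              obstacles: Iterable[Rect]) -> Rect:
    """One tracking update; the hand holds the rectangle by its centre."""
    left = hand_x - current.ncols * COLUMN_WIDTH / 2
    # Horizontal motion jumps from column to column and stays on the page.
    col = min(max(round(left / COLUMN_WIDTH), 0), NUM_COLUMNS - current.ncols)
    # Vertical motion is smooth and continuous.
    candidate = Rect(col, current.ncols, hand_y - current.height / 2, current.height)
    # If the new position would overlap an existing item, the picture stops.
    if any(candidate.overlaps(o) for o in obstacles):
        return current
    return candidate


picture = Rect(col=0, ncols=2, y=0.0, height=20.0)
article = Rect(col=4, ncols=2, y=0.0, height=50.0)
moved = drag_step(picture, hand_x=31, hand_y=10, obstacles=[article])
print(moved)  # Rect(col=2, ncols=2, y=0.0, height=20.0)
```

Because every sample runs the constraint check, the cost of each update grows with the number of items on the page, which is why this style of dragging needs high computational bandwidth.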

Case 2: Tablet, refreshed display, medium computational bandwidth, low response latency.

By medium computational bandwidth we mean that the system no longer has the power to drag a rectangle and compute the constraints in synchrony with hand movements. The system does have the power to move a simple tracking cross, or lay down a simple ink trail. These are intrinsically simpler operations because, unlike moving an arbitrary object or computing constraints, they do not depend on the complexity of the picture - we can determine in advance whether or not they can be done rapidly enough.

One solution, therefore, is to drag the tracking cross in an unconstrained manner, and to apply the constraints later. Two signals must be given, one when the cross is near a border of the object to be moved, and one when it is near its desired position. Another solution is to lay down a trail of ink from any point on the object to be moved to its desired position. In either case, latching techniques such as those in SKETCHPAD can be used so that the user need not point with extreme accuracy directly at an edge or a corner in order to signify that a particular edge or corner is intended.
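The two-signal solution with latching can be sketched in Python as follows. This is an illustrative sketch, not SKETCHPAD's algorithm: the functions `latch` and `two_point_move`, the priority of corners over edges, and the tolerance parameter `tol` are assumptions. The first signal is snapped to a nearby corner or edge of the object; the translation is then the vector from that latched point to the second signal. The column and obstacle constraints would afterwards be applied to this translation, a step omitted here.

```python
import math
from typing import Optional, Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1), with x0 < x1 and y0 < y1


def latch(p: Point, box: Box, tol: float) -> Optional[Tuple[str, Point]]:
    """Snap a roughly placed point to a corner or edge of `box` within `tol`."""
    x0, y0, x1, y1 = box
    corners = {"BL": (x0, y0), "BR": (x1, y0), "TL": (x0, y1), "TR": (x1, y1)}
    # Corners take priority over edges.
    name, c = min(corners.items(), key=lambda kv: math.dist(p, kv[1]))
    if math.dist(p, c) <= tol:
        return name, c
    # Otherwise try the nearest point on each edge (clamp p onto the box's extent).
    px, py = min(max(p[0], x0), x1), min(max(p[1], y0), y1)
    edges = {"L": (x0, py), "R": (x1, py), "B": (px, y0), "T": (px, y1)}
    name, e = min(edges.items(), key=lambda kv: math.dist(p, kv[1]))
    if math.dist(p, e) <= tol:
        return name, e
    return None


def two_point_move(start: Point, end: Point, box: Box, tol: float) -> Optional[Point]:
    """Translation implied by one signal near the object and one at its destination."""
    hit = latch(start, box, tol)
    if hit is None:
        return None  # the first signal did not identify a corner or an edge
    _, (fx, fy) = hit
    return end[0] - fx, end[1] - fy


picture = (0, 0, 20, 10)
print(latch((19, 11), picture, tol=2))  # ('TR', (20, 10))
```

Latching only needs the cross position at the two signals, so it places no per-sample load on the system; the expensive constraint computation happens once, after the second signal.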

Case 3: Tablet, refreshed display, low computational bandwidth, high response latency.

Here we assume that the system can move a simple tracking cross, or lay down a simple ink trail, but not smoothly. The feedback starts and stops jerkily, due, for example, to contention from other users in time-sharing.

If this can happen, then the graphics support has been embedded incorrectly in the time-sharing system, or has been enfeebled due to the politics of time-sharing.

Use of the tablet here can be so awkward and so unreliable that it is probably better not to use it at all, but instead to use the keyboard with a symbolic language as in case 6 below.

Case 4: Tablet, digital video display (frame buffer), medium computational bandwidth, low response latency.

Here we assume that the system can move a simple complementing cursor (tracking cross) in synchrony with hand movements. The complementing cursor inverts the color on any pixel beneath it, then restores the original value when it moves away. The solution, then, as in case 2, is to indicate two points that represent the start and end points of the move. An inking solution is possible only if the system has an independent binary frame buffer for temporary storage of ink trails so that the picture is not destroyed.
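The complementing cursor relies on the fact that complementing a pixel twice restores it. The Python sketch below models a frame buffer as a `bytearray` of 8-bit pixels and complements by XOR with 0xFF; the cross shape, the arm length, and the class name `ComplementingCursor` are hypothetical choices made for illustration.

```python
from typing import Optional, Set, Tuple

Pixel = Tuple[int, int]


def cross_pixels(cx: int, cy: int, arm: int, width: int, height: int) -> Set[Pixel]:
    """Pixels covered by a '+' shaped cursor, clipped to the frame buffer.

    A set is used so the centre pixel is complemented once, not twice.
    """
    pts = set()
    for d in range(-arm, arm + 1):
        for x, y in ((cx + d, cy), (cx, cy + d)):
            if 0 <= x < width and 0 <= y < height:
                pts.add((x, y))
    return pts


class ComplementingCursor:
    """Tracking cross drawn by complementing frame-buffer pixels in place."""

    def __init__(self, fb: bytearray, width: int, height: int, arm: int = 2):
        self.fb, self.width, self.height, self.arm = fb, width, height, arm
        self.drawn: Optional[Set[Pixel]] = None

    def _complement(self, pts: Set[Pixel]) -> None:
        for x, y in pts:
            self.fb[y * self.width + x] ^= 0xFF  # invert an 8-bit pixel value

    def hide(self) -> None:
        # Complementing the same pixels a second time restores the picture.
        if self.drawn is not None:
            self._complement(self.drawn)
            self.drawn = None

    def move_to(self, cx: int, cy: int) -> None:
        self.hide()
        self.drawn = cross_pixels(cx, cy, self.arm, self.width, self.height)
        self._complement(self.drawn)


frame = bytearray(range(64))  # an 8 x 8 test picture
original = bytes(frame)
cursor = ComplementingCursor(frame, 8, 8)
cursor.move_to(3, 3)
cursor.move_to(7, 7)
cursor.hide()
print(bytes(frame) == original)  # True
```

The cost of each move is fixed by the cursor's size, not by the picture, which is why a system of only medium bandwidth can track it; an ink trail cannot be kept this way without a separate buffer, because successive strokes would overwrite the picture.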

Case 5: Light pen, refreshed display, high computational bandwidth, low response latency.

Here we assume that the system supports a picking or pointing function and can also track a tracking cross. To simulate Case 1 with a light pen, we would pick up the tracking cross, drag it to the center of the rectangle, depress a button, and then drag the cross to a new position. However, a far better solution is for the user to point to an edge or a corner of an existing rectangle, and then to track the cross to the desired new position. This solution can require a great deal of CPU power. The organization of the display file and the nature of the pick function must support selection of edges or corners, or this must be simulated in software. Furthermore, tracking requires significantly more CPU power for a light pen than it does for a tablet.

Case 6: Keyboard, refreshed or digital video display, low computational bandwidth, high response latency.

If we only have a keyboard, or the tablet has been rendered impotent as in case 3 above, how do we proceed? Direct simulation of a tablet, by driving a cursor increment by increment over the picture with repeated keystrokes, is a terrible solution. Rather, we need to construct a symbolic language with which the user can refer directly to locations on the page and to properties of objects. This language should contain statements like R15=R17, which means that object 15 is to be moved so that its right side touches the right side of object 17; X15=3, which means that object 15 is to be moved into column 3; BR15=TL17, which means that object 15 is to be moved so that its bottom right corner touches the top left corner of object 17; Y15=Y15+10, which means that object 15 is to be moved up 10 units; and Y15=230, which means that object 15 is to be moved to a height of 230.

The syntax of this language needs improvement; it is the semantics with which we are here concerned.
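A small interpreter makes these semantics concrete. The Python sketch below is one possible reading, under stated assumptions: X names a column numbered from 0 at the left edge of the page, with a hypothetical width `COLUMN_WIDTH`; Y denotes the bottom edge; L, R, T, and B denote sides; TL, TR, BL, and BR denote corners; and every statement translates the object without resizing it. The names `Item`, `get`, `put`, and `execute` are illustrative, not part of any existing system.

```python
import re
from dataclasses import dataclass
from typing import Dict, Tuple, Union

COLUMN_WIDTH = 10.0  # hypothetical page units per column

Value = Union[float, Tuple[float, float]]
CORNERS = {"TL", "TR", "BL", "BR"}
ATTRS = CORNERS | {"X", "Y", "L", "R", "T", "B"}

# e.g. R15=R17, X15=3, BR15=TL17, Y15=Y15+10, Y15=230
STMT = re.compile(
    r"([A-Z]{1,2})(\d+)=(?:([A-Z]{1,2})(\d+)([+-]\d+(?:\.\d+)?)?|(-?\d+(?:\.\d+)?))"
)


@dataclass
class Item:
    left: float
    bottom: float
    width: float
    height: float


def get(item: Item, attr: str) -> Value:
    l, b = item.left, item.bottom
    r, t = l + item.width, b + item.height
    return {"X": l / COLUMN_WIDTH, "Y": b, "L": l, "R": r, "B": b, "T": t,
            "TL": (l, t), "TR": (r, t), "BL": (l, b), "BR": (r, b)}[attr]


def put(item: Item, attr: str, value: Value) -> None:
    """Translate (never resize) the item so that `attr` takes on `value`."""
    if attr in CORNERS:
        x, y = get(item, attr)
        item.left += value[0] - x
        item.bottom += value[1] - y
    elif attr == "X":
        item.left = value * COLUMN_WIDTH  # X names a column
    elif attr in ("L", "R"):
        item.left += value - get(item, attr)
    else:                                 # Y, B, T
        item.bottom += value - get(item, attr)


def execute(stmt: str, items: Dict[int, Item]) -> None:
    m = STMT.fullmatch(stmt.replace(" ", ""))
    if m is None:
        raise ValueError(f"cannot parse {stmt!r}")
    lhs, lhs_id, rhs, rhs_id, offset, number = m.groups()
    if lhs not in ATTRS or (rhs is not None and rhs not in ATTRS):
        raise ValueError(f"unknown attribute in {stmt!r}")
    # Evaluate the right-hand side before moving anything (as in Y15=Y15+10).
    if number is not None:
        value: Value = float(number)
    else:
        value = get(items[int(rhs_id)], rhs)
        if offset is not None:
            if isinstance(value, tuple):
                raise ValueError("offsets apply only to single coordinates")
            value += float(offset)
    if (lhs in CORNERS) != isinstance(value, tuple):
        raise ValueError(f"corner/coordinate mismatch in {stmt!r}")
    put(items[int(lhs_id)], lhs, value)


page = {15: Item(0.0, 0.0, 20.0, 30.0), 17: Item(50.0, 100.0, 30.0, 40.0)}
for s in ["R15=R17", "X15=3", "BR15=TL17", "Y15=Y15+10", "Y15=230"]:
    execute(s, page)
print(page[15])  # Item(left=30.0, bottom=230.0, width=20.0, height=30.0)
```

Because the user names relationships rather than coordinates, none of these statements requires any feedback until it is complete, which suits the high response latency of this case.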

Case 7: Keyboard, refreshed or digital video display, high computational bandwidth, low response latency.

If we can update the picture immediately after every keystroke, then another solution becomes viable. We need not concern the user with coordinates, but can provide a set of movement direction keys and movement magnitude keys. Every time the user hits the right (left) arrow, the object moves one column to the right (left). Every time he hits the up (down) arrow, the object moves up (down) k units. Every time he hits the "increase (decrease) movement scale" key, k is doubled (halved). These six keys would provide a very effective control console for positioning objects, provided that the feedback to keystrokes is rapid enough.
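The six-key console is a small state machine. In this Python sketch the class `KeyMover`, the key names, and the initial step k = 8 are hypothetical; obstacle checking is omitted, and the comment marks where a real system would redraw the object after every keystroke.

```python
from dataclasses import dataclass

NUM_COLUMNS = 9  # as on the page in Figure 1


@dataclass
class KeyMover:
    """State of an object positioned with six keys (Case 7)."""
    col: int
    y: float
    k: float = 8.0   # hypothetical initial vertical step, in page units
    ncols: int = 1   # width of the object in columns

    def press(self, key: str) -> None:
        if key == "right":
            self.col = min(self.col + 1, NUM_COLUMNS - self.ncols)
        elif key == "left":
            self.col = max(self.col - 1, 0)
        elif key == "up":
            self.y += self.k
        elif key == "down":
            self.y -= self.k
        elif key == "increase":
            self.k *= 2
        elif key == "decrease":
            self.k /= 2
        else:
            raise ValueError(f"unknown key {key!r}")
        # A real system would redraw the object here, after every keystroke;
        # the technique works only if that redraw is immediate.


mover = KeyMover(col=0, y=100.0)
for key in ["right", "right", "up", "increase", "up", "decrease", "decrease", "down"]:
    mover.press(key)
print(mover.col, mover.y, mover.k)  # 2 120.0 4.0
```

Doubling and halving k lets the user cover large distances in a few keystrokes and then home in on the exact position, much as a coarse and a fine control would.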

The above example is intended to show in detail how, even in a simple but realistic graphical interaction problem, the solution depends upon the available display technology, input devices, and system response characteristics. It is not put forth as a complete and exhaustive discussion of the problem, which would entail considering other technologies such as the storage tube, and other aspects such as the system in which the technique is to be applied, and the user for whom it is intended.

Let us now briefly pose and solve some other problems in graphical interaction. All solutions assume a tablet, a refreshed display, low response latency, and adequate computational bandwidth to provide a highly responsive form of interaction.


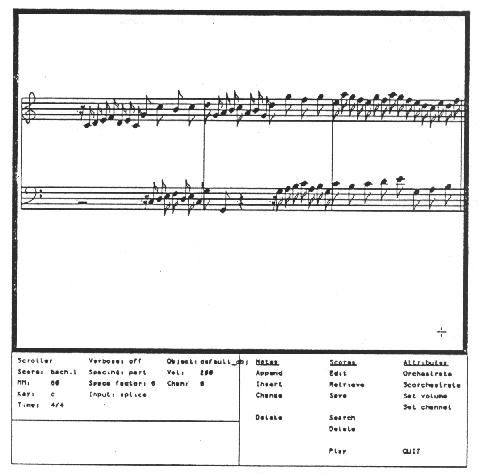

There are two columns of possible note positions to allow for the continuation of a chord or the initiation of a new one.

The tracking symbol then becomes a moving menu of possible note durations (Figure 4b). We position the appropriate duration over the selected pitch to complete the definition of the note (Figure 4c). Because relatively little hand movement is required, this approach yields a remarkably fluid style of interaction.


The above techniques work well because they adhere to a number of principles which seem to apply to the design of good interactive dialogues:

The preceding discussion has been directed towards the design and implementation of better interactive dialogues. In this section, we turn our attention to the tools with which such dialogues may be constructed. We shall begin with some general remarks on input devices and input languages, and then present some principles and suggestions upon which to build an input language capable of sustaining very rich interaction.

Input devices have been characterized as being of two kinds, those that cause events and those that may be sampled.

In the Core Report [Core 77], each virtual device can either cause events or be sampled, but not both; we regard this as a design mistake.

We shall be primarily interested in the event sublanguage of our input system, because it is there that we encapsulate a system's automatic, routine responses to input device activity. Furthermore, the event sublanguage provides the application programmer with a straightforward method of dealing with the parallelism and multi-dimensionality inherent in good interfaces. Many input devices can be activated and the system can monitor activity on all of them. With sampling, on the other hand, the application programmer must construct anew for each case a program to map input device activity into appropriate output device feedback. We must be able to sample all input devices so that we can handle extraordinary situations not covered by the event sublanguage, but our goal should be to avoid these cases by making that language as comprehensive as possible.

We must therefore provide mechanisms for activating input devices, for specifying the echo or feedback that is to result, and for specifying the response time that is required. We must also provide mechanisms for specifying any constraints on input device activity that are to be applied. Before discussing these issues in terms of specific input devices and device classes, let us examine the types of output responses that it is reasonable to provide as feedback.

Immediate feedback, as we have already stated in Section 3, must involve direct modification of the display file by the input system without resort to the application program. The input system should accomplish this by requests to the output system. What kinds of requests are reasonable and which are unreasonable? We suggest that it is reasonable to perform output which involves only local changes to the display file and which does not necessitate interpretation of the entire display file. The meaning of locality is based on the concept of the picture segment, the independently manipulable subpicture which exists in most graphics systems. On most current refreshed vector displays and on properly designed future digital video displays [Baecker 78] it will be reasonable to provide feedback by any of the following mechanisms:

We assume that the hardware has a simple subroutining mechanism, otherwise a true copy would have to be made, necessitating interpretation of the display file.

We see input devices as clustering into four categories [Green 79], providing discrete or continuous data in single units or in sequences. A button box and an interval timer are devices that produce single discrete data items. A keyboard is a device that usually produces sequences of discrete data items. A mouse, tablet, or light pen can produce single continuous data items if it is tracking or dragging, continuous sequences if it is inking, and single discrete data items if it is pointing or selecting (picking). We have in effect defined a set of seven virtual devices: a signal, a timer, a selector, a writer, a tracker, a dragger, and an inker. Let us look at the events that can occur with each device, the feedback that is needed, the response time desired, the constraints that may be applied, and the method by which each event is initiated and terminated.
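The classification can be written down as a small table. This Python sketch simply records which of the four categories each of the seven virtual devices falls into, as stated above; the enum and function names are hypothetical.

```python
from enum import Enum


class Data(Enum):
    DISCRETE = "discrete"
    CONTINUOUS = "continuous"


class Grouping(Enum):
    SINGLE = "single"
    SEQUENCE = "sequence"


# The seven virtual devices and the category each falls into.
VIRTUAL_DEVICES = {
    "signal":   (Data.DISCRETE, Grouping.SINGLE),
    "timer":    (Data.DISCRETE, Grouping.SINGLE),
    "selector": (Data.DISCRETE, Grouping.SINGLE),
    "writer":   (Data.DISCRETE, Grouping.SEQUENCE),
    "tracker":  (Data.CONTINUOUS, Grouping.SINGLE),
    "dragger":  (Data.CONTINUOUS, Grouping.SINGLE),
    "inker":    (Data.CONTINUOUS, Grouping.SEQUENCE),
}


def devices_in(data, grouping):
    """Names of the virtual devices in one of the four categories, sorted."""
    return sorted(name for name, category in VIRTUAL_DEVICES.items()
                  if category == (data, grouping))
```

Note that one physical device, such as a tablet, supplies several virtual devices (selector, tracker, dragger, inker), depending on how it is being used.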

A signal consists of the depression or release of a button or a contact switch or the change of state of a toggle switch. Feedback can consist of changing the state of a light associated with the button, giving an audio response, or changing the visibility status of a picture segment. Response time must be instantaneous. Constraints that may be applied include the deactivation of certain signals as legitimate inputs, and the application of a minimum delay between the appearance of two identical signals to prevent phenomena such as contact bounce. A signal is indivisible, so it is terminated as soon as it is initiated.
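The two signal constraints can be sketched as a filter that the input system consults before reporting a signal event. Identifying signals by name and measuring time in milliseconds are assumptions; the minimum delay is measured from the last accepted occurrence of the same signal.

```python
class SignalFilter:
    """Applies two signal constraints: deactivated signals are ignored, and a
    repeat of the same signal within min_interval of the last accepted one is
    treated as contact bounce and dropped."""

    def __init__(self, min_interval):
        self.min_interval = min_interval
        self.deactivated = set()
        self.last_accepted = {}  # signal name -> time it was last accepted

    def accept(self, signal, t):
        """Return True if the signal occurring at time t should become an event."""
        if signal in self.deactivated:
            return False
        last = self.last_accepted.get(signal)
        if last is not None and t - last < self.min_interval:
            return False
        self.last_accepted[signal] = t
        return True
```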

A timer consists of the provision of an alarm or signal after some period of time has elapsed. Feedback can consist of changing the visibility status of a particular segment, or giving an audio response. Response time must be instantaneous. Constraints that may be applied include the deactivation of the interval timer while some other event is being processed. Like the signal, the timer is indivisible.

A selector consists of pointing to a particular segment with a mouse, tablet, or light pen. Feedback consists of changing the state of the segment, for example, its intensity or blink status. Response time is limited by the number of segments whose enclosing rectangle must be compared to the mouse or stylus coordinates.

We assume that, with the increasing dominance of mice and tablets over light pens, and of raster over vector displays, comparison will increasingly be done by software rather than hardware, and will at first attempt to identify the segment that the user has selected.

Constraints that may be applied include making segments visible or invisible to the selector. The selector also is indivisible.
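A software selector along these lines can be sketched as a linear scan over enclosing rectangles, so its cost grows with the number of segments, as noted above. The `Segment` fields, the `selectable` flag standing for visibility to the selector, and the `highlighted` flag standing for intensity or blink feedback are assumptions.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    name: str
    xmin: float
    ymin: float
    xmax: float
    ymax: float
    selectable: bool = True    # constraint: is the segment visible to the selector?
    highlighted: bool = False  # stands in for intensity or blink feedback


def select(segments, x, y):
    """Return the first selectable segment whose enclosing rectangle contains
    (x, y), highlighting it as feedback; return None if nothing is hit.
    Every segment may have to be tested, so the cost is linear in their number."""
    for seg in segments:
        if seg.selectable and seg.xmin <= x <= seg.xmax and seg.ymin <= y <= seg.ymax:
            seg.highlighted = True
            return seg
    return None
```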

A writer consists of a sequence of keystrokes on a keyboard. Feedback consists of echoing the characters on the display screen. The echoing must be instantaneous. Constraints that may be applied include the deactivation of certain keys as legitimate inputs, and the designation of other keys as break characters. The writer is initiated by the first keystroke, and terminated with a break character.
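A writer can be sketched as a buffer fed one keystroke at a time. The `echo` list stands in for characters echoed on the screen; the class name and the choice of newline as the default break character are assumptions.

```python
class Writer:
    """Collects keystrokes into a string. Deactivated keys are ignored, each
    accepted character is echoed, and a break character ends the string."""

    def __init__(self, break_chars="\n", deactivated=""):
        self.break_chars = set(break_chars)
        self.deactivated = set(deactivated)
        self.buffer = []
        self.echo = []  # stands in for characters echoed on the display

    def key(self, ch):
        """Feed one keystroke; return the completed string on a break character, else None."""
        if ch in self.deactivated:
            return None
        if ch in self.break_chars:
            text = "".join(self.buffer)
            self.buffer.clear()
            return text
        self.buffer.append(ch)
        self.echo.append(ch)
        return None
```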

A tracker consists of a movement of the mouse or stylus terminated by a signal. Feedback consists of echoing the movement with a tracking symbol specified by the user. The echoing must be instantaneous. Constraints that may be applied include the imposition of a grid of allowable positions and the designation of certain rectangular areas as protected or impenetrable to the tracker. Other rectangular areas may be designated as special in the sense that the tracker's entering or leaving them causes an event. This is useful for implementing interactive techniques such as the NEWSWHOLE picture modification scheme and the SMALLTALK windows [LRG 78]. A tracker is initiated and terminated by various system-defined signals.
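The grid and protected-area constraints can be sketched as a function applied to each new stylus position before the tracking symbol is echoed. The representation of protected areas as `(xmin, ymin, xmax, ymax)` tuples, and the choice to leave the tracker where it was when a move would enter one, are assumptions; the same function would serve a dragger.

```python
def constrain(prev, target, grid, protected):
    """Snap target to the nearest grid point; if that point lies strictly
    inside a protected rectangle, refuse the move and stay at prev."""
    x = round(target[0] / grid) * grid
    y = round(target[1] / grid) * grid
    for xmin, ymin, xmax, ymax in protected:
        if xmin < x < xmax and ymin < y < ymax:
            return prev
    return (x, y)
```

Testing every protected rectangle on each move is the naive approach; the mesh described below for region protection avoids it.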

A dragger is similar to a tracker except that the segment moved is one of the currently visible picture segments rather than a special tracking symbol. Again, echoing must be instantaneous. Constraints like those on a tracker apply, as do the methods of initiation and termination.

An inker consists of a movement by the mouse or stylus which results in a sequence of coordinate pairs. These positions are displayed by the appearance of an ink trail. The trail must be laid down instantaneously. Constraints that may be applied include whether the device is sampled on an equal space or an equal time basis, and the spacing or rate of the sampling. Inking is initiated and terminated by various system-defined signals.
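The two sampling disciplines can be sketched as a filter over raw stylus readings. Treating the input as a list of `(t, x, y)` tuples and the mode names `"space"` and `"time"` are assumptions.

```python
import math


def ink(samples, mode, spacing):
    """Reduce raw (t, x, y) stylus readings to an ink trail of (x, y) points.
    mode "space": keep a reading at least `spacing` units from the last kept point.
    mode "time":  keep a reading at least `spacing` time units after the last kept point."""
    trail = []
    for t, x, y in samples:
        if not trail:
            trail.append((t, x, y))
            continue
        lt, lx, ly = trail[-1]
        if mode == "space":
            keep = math.hypot(x - lx, y - ly) >= spacing
        elif mode == "time":
            keep = t - lt >= spacing
        else:
            raise ValueError(f"unknown mode {mode!r}")
        if keep:
            trail.append((t, x, y))
    return [(x, y) for _, x, y in trail]
```

Equal-space sampling gives an even trail regardless of drawing speed, while equal-time sampling preserves information about how fast the stroke was drawn.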

It should be clear that the feedback described for the above virtual devices can be provided by the display file modification techniques listed above. But what about the imposition of constraints - how is this achieved? The answer is straightforward in all cases but that of region protection, so let us examine that case now.

Regions are the minimum enclosing rectangles of segments. Assume n segments are to be protected, or are to give signals upon tracker entry or exit. In general, their rectangles will overlap, as shown in Figure 5a. Now construct an uneven rectangular mesh which covers all the rectangles, as shown in Figure 5b. Each element of the mesh is now associated with zero, one, or more segments. Furthermore, each element of the mesh can be linked to each of its four neighbors in a list-structured representation in which each element's storage structure has four pointers to its top, right, bottom, and left neighbors, and an additional pointer to its list of associated segments. Once this data structure is computed, it becomes straightforward to monitor for segment entry or exit by a tracker or by a dragged segment without reinterpretation of the entire display file.
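The mesh can be sketched in Python as follows. The mesh lines are taken from the rectangle edges, and, as an assumption beyond the text, unbounded cells are added around the outside so that every tracker position lies in some cell. Because the tracker moves only a little between samples, the cell containing its new position is found by walking neighbor pointers from the previous cell; entry and exit events are then set differences between the two cells' segment sets. A protected segment would be handled similarly, by refusing any move whose new cell lists it.

```python
import math


class Cell:
    """One element of the mesh, linked to its four neighbours."""

    def __init__(self, x0, x1, y0, y1, segments):
        self.x0, self.x1, self.y0, self.y1 = x0, x1, y0, y1  # half-open [x0, x1) x [y0, y1)
        self.segments = segments  # frozenset of segments whose rectangles cover this cell
        self.top = self.right = self.bottom = self.left = None


def build_mesh(rects):
    """rects maps segment name -> (xmin, ymin, xmax, ymax). Returns the
    bottom-left cell; every other cell is reachable via neighbour pointers."""
    xs = [-math.inf] + sorted({v for r in rects.values() for v in (r[0], r[2])}) + [math.inf]
    ys = [-math.inf] + sorted({v for r in rects.values() for v in (r[1], r[3])}) + [math.inf]
    nx, ny = len(xs) - 1, len(ys) - 1
    grid = [[None] * ny for _ in range(nx)]
    for i in range(nx):
        for j in range(ny):
            x0, x1, y0, y1 = xs[i], xs[i + 1], ys[j], ys[j + 1]
            # Mesh lines come from rectangle edges, so each cell lies wholly
            # inside or wholly outside every rectangle.
            covering = frozenset(name for name, (a, b, c, d) in rects.items()
                                 if a <= x0 and x1 <= c and b <= y0 and y1 <= d)
            grid[i][j] = Cell(x0, x1, y0, y1, covering)
    for i in range(nx):
        for j in range(ny):
            cell = grid[i][j]
            cell.left = grid[i - 1][j] if i > 0 else None
            cell.right = grid[i + 1][j] if i < nx - 1 else None
            cell.bottom = grid[i][j - 1] if j > 0 else None
            cell.top = grid[i][j + 1] if j < ny - 1 else None
    return grid[0][0]


def locate(cell, x, y):
    """Walk neighbour pointers from cell to the cell containing (x, y)."""
    while x < cell.x0:
        cell = cell.left
    while x >= cell.x1:
        cell = cell.right
    while y < cell.y0:
        cell = cell.bottom
    while y >= cell.y1:
        cell = cell.top
    return cell


def track(cell, x, y):
    """Move the tracker to (x, y); return (new cell, segments entered, segments left)."""
    new = locate(cell, x, y)
    return new, new.segments - cell.segments, cell.segments - new.segments
```

With n rectangles the mesh has O(n²) cells, but it is built once, and each tracker movement then costs only a short walk plus two set differences.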

As we noted in Section 2, there have been many attempts to specify ideal sets of virtual devices. The above presentation is another attempt to do slightly better. What distinguishes it from other attempts is the stress placed on device feedback and the introduction of constraints that determine what input is legal.

I believe that the virtual devices and echoing methods described above are adequate to handle a large percentage of desirable interaction techniques. If they are not, an escape should be provided. This escape is a method of specifying the virtual device, a routine which is to be executed whenever the virtual device is activated, and the frequency of execution of the routine that is desired. Alternatively, one can simply request that the routine be executed every time the virtual device provides new data. It is via such escapes that tools such as graphical potentiometers and the camera pan-zoom technique could be implemented.
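One way to read the escape mechanism is as a routine attached to a virtual device and run whenever the device delivers new data, optionally limited to a maximum rate. The class and parameter names below are hypothetical, and rate limiting is only one possible interpretation of "frequency of execution".

```python
class Escape:
    """Runs `routine` on new data from a virtual device: on every datum when
    min_period is None, otherwise at most once per min_period time units."""

    def __init__(self, routine, min_period=None):
        self.routine = routine
        self.min_period = min_period
        self.last_run = None

    def new_data(self, t, datum):
        """Called by the input system when the device produces datum at time t."""
        due = (self.min_period is None or self.last_run is None
               or t - self.last_run >= self.min_period)
        if due:
            self.last_run = t
            self.routine(datum)
        return due
```

A graphical potentiometer, for instance, could attach a routine that recomputes the pointer angle from the latest stylus position and updates the dial segment.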

Finally, a graphical input language should contain mechanisms, such as those described in [Van Den Bos 78] and [Green 79], for combining the input primitives described above into richer and more powerful mechanisms. Such a combination facility must allow new events to be specified as serial or parallel combinations of old events. If parallel combination is desired, one must specify what the possibilities are and how many of the possibilities need be realized for the new event to take place. If serial combination is desired, one must specify which primitive events must occur in sequence and what timing relations must hold true for the new event to take place.
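Both kinds of combination can be sketched as predicates over primitive events. The representation of events as `(t, name)` pairs, the function names, and the single `max_gap` timing relation are assumptions; the serial recognizer keeps, for each amount of progress through the pattern, the time of the most recent partial match, so that an early false start does not hide a later successful sequence.

```python
def parallel_fires(observed, possibilities, k):
    """Parallel combination: fires if at least k of the possible primitive
    events are among those observed (e.g. during one time window)."""
    return len(set(observed) & set(possibilities)) >= k


def serial_fires(events, pattern, max_gap):
    """Serial combination: fires if the events in `pattern` occur in order in
    the time-ordered stream `events` of (t, name) pairs, each no more than
    max_gap after the previous matched one. Unrelated events are ignored."""
    latest = {}  # steps matched so far -> time of the most recent such match
    for t, name in events:
        # A partial match whose next step would now come too late can never complete.
        latest = {n: last for n, last in latest.items() if t - last <= max_gap}
        updates = {n + 1: t for n in latest if pattern[n] == name}
        if name == pattern[0]:
            updates[1] = t
        if len(pattern) in updates:
            return True
        latest.update(updates)
    return False
```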

After defining graphical interaction, we developed a vision of what graphical interaction should be in terms of a series of metaphors. We then turned our attention to recent research to see if it is helping us design rich user-oriented interactive graphics systems. We identified two highly regarded and generally accepted themes in the current literature which needed to be questioned. We argued that the separation of the graphical input and output processes could severely restrict the interaction styles that we are able to create. We further argued that the issue of device simulation and substitution is much more complicated than it has been portrayed. It should be no surprise that we approach these issues differently - whereas most current researchers seek device-independence and portability, we strive to exploit the particular characteristics of individual devices to build the strongest interfaces possible.

We then argued that the crafting of a user interface requires consideration of the display technology, the input technology, the machine, the user, and the content and context of the dialogue. We supported this argument by presenting a series of solutions to a problem in graphical interaction as we varied some of these factors. We also presented solutions to some other problems in graphical interaction, solutions which helped us to develop a number of principles that seem to apply to the construction of good user interfaces. Finally, in the preceding section, we reflected on how a language for graphical input could be made suitable for implementing graphical dialogues of the quality we had discussed.

Unfortunately, we have taken only a few small steps along the road towards the very difficult goal of a comprehensive theory or a systematic design methodology for graphical dialogues. We need to take far larger steps. We need to carry out more case studies of particular problems in graphical interaction like the one presented in Section 4. We need to accompany such case studies by building systems incorporating various design approaches and making controlled systematic observations and measurements on users. We need a methodology for carrying out such experiments. We need a language for describing graphical dialogues that is more descriptive than English. We need to record examples of design approaches and user interfaces on film, video tape, video disk, or some standard computer animation digital format, so that they can be archived, disseminated, compared, and contrasted. We computer scientists need to look closely at the literature from psychology and industrial engineering that deals with perception, memory, reaction time, problem solving, and task performance. We need to work with individuals from such disciplines to develop information processing theories of human performance in typical graphical dialogues. But even as we take our first faltering steps towards better theories of graphical interaction, we must never forget that the construction of user interfaces is and will remain a problem in design, and thus will always remain subject to that most intangible of qualities, good taste.

Several of the ideas in this paper were suggested or clarified by Bill Buxton, Mark Green, and Bill Reeves of the University of Toronto's Dynamic Graphics Project, and Mike Tilson of Human Computing Resources Corporation. The entire paper has benefited from their thoughtful comments. I am also indebted to Mary Lee Coombs and Dave Shentiar for their help in preparing the manuscript. This work was sponsored in part by the National Research Council of Canada.

[Baecker 76] Ronald M. Baecker, David M. Tilbrook, and Martin Tuori, "NEWSWHOLE: A Newspaper Page Layout System", 10 minute 3/4" black-and-white video cartridge, Dynamic Graphics Project, Computer Systems Research Group, University of Toronto, 1978.

[Baecker 78] Ronald M. Baecker, "Digital Video Display Systems and Dynamic Graphics", Report to the Canadian Department of Communications, Human Computing Resources Corporation, March 1978.

[Buxton 78] William Buxton, Guy Fedorkow, Ronald M. Baecker, William Reeves, K.C. Smith, G. Ciamaga and Leslie Mezei, "An Overview of the Structured Sound Synthesis Project", Proceedings of the Third International Computer Music Conference, Evanston, Illinois, November 1978.

[Core 77] "Status Report of the Graphic Standards Planning Committee of ACM/SIGGRAPH", Computer Graphics, Vol. 11, No. 3, Fall 1977.

[Cotton 72] Ira W. Cotton, "Network Graphic Attention Handling", Online 72 Conference Proceedings, Brunel University, Uxbridge, England, Sept. 1972, pp. 465-490.

[Deecker 77] G.F.P. Deecker and J.P. Penny, "Standard Input Forms for Interactive Computer Graphics", Computer Graphics, Vol. 11, No. 1, Spring 1977, pp. 32-40.

[Foley 74] James D. Foley and Victor L. Wallace, "The Art of Natural Graphic Man-Machine Conversation", Proceedings of the IEEE, Vol. 62, No. 4, April 1974, pp. 462-471.

[Green 79] Mark Green, "A Graphical Input Programming System", M.Sc. Thesis, Department of Computer Science, University of Toronto, 1979 (expected).

[Kasik 76] David J. Kasik, "Controlling User Interaction", Computer Graphics, Vol. 10, No. 2, Summer 1976, pp. 109-115.

[LRG 76] Learning Research Group, "Personal Dynamic Media", Xerox Palo Alto Research Center Report, 1976.

[Martin 73] James Martin, Design of Man-Computer Dialogues, Prentice-Hall, Englewood Cliffs, N.J., 1973.

[Newman 68] William M. Newman, "A System for Interactive Graphical Programming", AFIPS Conference Proceedings (Spring Joint Computer Conference), Vol. 32, 1968, pp. 47-54.

[Newman 70] William M. Newman, "An Experimental Display Programming Language for the PDP-10 Computer", University of Utah, UTEC-Csc-70-104, July 1970.

[Newman 73] William M. Newman and Robert F. Sproull, Principles of Interactive Computer Graphics, McGraw-Hill Book Company, 1973.

[Newman 74] William M. Newman and Robert F. Sproull, "An Approach to Graphics System Design", Proceedings of the IEEE, Vol. 62, No. 4, April 1974, pp. 471-483.

[Nickerson 76] R.S. Nickerson, "On Conversational Interaction with Computers", appears in [Treu 78], pp. 101-113.

[Reeves 78] William Reeves, William Buxton, Robert Pike and Ronald M. Baecker, "Ludwig: an Example of Interactive Computer Graphics in a Score Editor", Proceedings of the Third International Computer Music Conference, Evanston, Illinois, November 1978.

[Rovner 68] Paul D. Rovner, "LEAP Users Manual", Lincoln Laboratory Technical Memorandum 23L-0009, MIT Lincoln Laboratory, December 1968.

[Tilbrook 76] David M. Tilbrook, "A Newspaper Page Layout System", M.Sc. Thesis, Department of Computer Science, University of Toronto, 1976.

[Treu 76] Siegfried Treu (Editor), "User-Oriented Design of Interactive Graphics Systems", Proceedings of the ACM/SIGGRAPH Workshop, Pittsburgh, Pa., Oct. 14-15, 1976.

[Van Den Bos 78] Jan Van Den Bos, "Definition and Use of Higher-level Graphics Input Tools", Computer Graphics, Vol. 12, No. 3, August 1978, pp. 38-42.

[Wallace 76] Victor L. Wallace, "The Semantics of Graphic Input Devices", Computer Graphics, Vol. 10, No. 1, Spring 1976, pp. 61-65.