It is very pleasing to have an edition covering a wide diversity of subjects and opinions. Standards will have an increasing significance in the future and the article on STEP is of particular relevance. The articles on Computer Algebra, Support of Software and on AI Techniques all introduce forthcoming seminars and are worthy of special note. May I remind you again - this is your Newsletter and your contributions are always welcome. Please remember that the Newsletter heading competition entries are due in by the end of October. For libraries (or new readers) we have a back copies service to ensure you have a complete set of articles from the Newsletter.

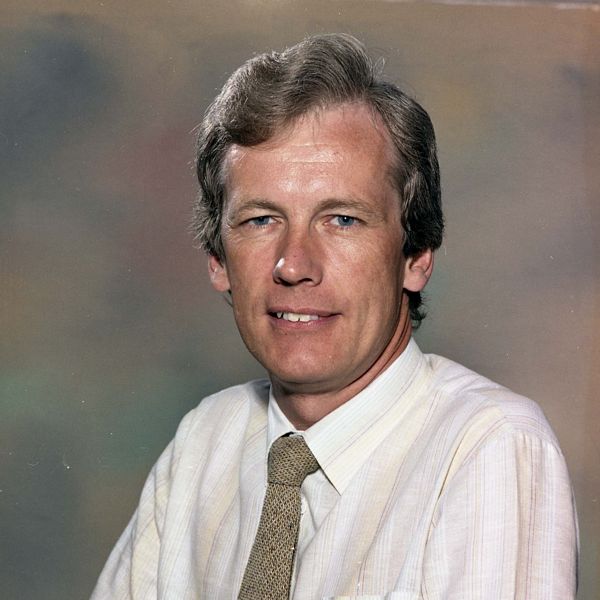

We welcome Dr David Boyd, BSc PhD MIEE CEng, to Informatics Department as the new Head of Computational Modelling Division from 2nd October.

After completing a Physics degree and a PhD in Particle Physics at Edinburgh University, David joined the Laboratory in 1973 to work on software development in HEP Division. In 1978, when SERC launched a Specially Promoted Programme in microelectronics involving the establishment of a number of central facilities, he moved to Technology Division to help set up the Microelectronics CAD Facility within the Computing Applications Groups. The growth in academic VLSI design activity through the early 1980s led to the formation of the UK Higher Education Electronic CAD Initiative in 1985. He was closely involved in this, later becoming secretary of the ECAD Management Committee which continues to oversee operation of the programme.

In 1986, David became manager of the Microelectronics CAD Facility and head of the CAD Group within the newly formed Electronics Division, later assuming responsibility for other engineering facilities provided by the Division. He was a member of the Alvey VLSI CAD Technical Committee and is a member of the joint DTI/SERC Microelectronics Design Subcommittee. He has been an Alvey project monitor and has managed RAL contributions to both Alvey and Esprit VLSI CAD projects.

He has recently been closely involved in the successful bid by RAL, along with four other European laboratories, to form a consortium to run the Esprit VLSI Design Action 'Eurochip'. This will co-ordinate the provision of microelectronics support facilities throughout the European academic community. He chairs the CAD Working Group which is making recommendations for the selection of CAD software and hardware for use in the programme. With this background in engineering computing support and software development, David is looking forward to integrating, promoting and extending the activities of the Division as an essential component of the EASE programme with the UK academic community.

This is the second article in a series of four, the first of which was published in Issue 14 of the Newsletter.

STEP (Standard for Exchange of Product Data) is a major international effort currently under way with the aim of standardising methods for the exchange of engineering data. The standard is formulated in a two-layer structure, where the top layer (application layer) contains the data models for the separate domains, and the bottom layer contains the implementation of these data models. A special data modelling language (called Express, to be published in the next Newsletter) has been developed to be used in the application layer. One of the currently used standards for data exchange, the IGES standard, has several serious shortcomings and therefore most likely will lose its importance after STEP has been published.

For historical reasons, in the United States the international standard is called PDES (Product Data Exchange Standard).

Although the aim of STEP is primarily the development of data exchange methods, the techniques developed can also be used for database design. (See the article on databases, fourth article in this series to be published later.)

The data models reside in the application layer. There are a large number of data models, which can be classified as resource or application models. Application models are those that address the needs of a particular application area; examples are the shipbuilding model and the AEC (Architecture, Engineering, Construction) model. The resource models are those that provide facilities to the application models but are not a specific application area in their own right, geometry being perhaps the best example of a resource model. Some areas are difficult to classify unambiguously: for instance, depending on how one looks at it, the finite element analysis model can be seen as a resource model or an application model.

The resource models that are currently under development, together with the date of scheduled completion, are:

PSCM = Product Structure Configuration Management

The application models are:

A single model consists of entity descriptions (normally in Express) together with constraints on the values of attributes, global constraints, etc, and also a detailed textual description and explanation of the entities.

It is intended to publish parts of the standard as they become available. Apart from the application models above, there will also be a number of documents supporting the application models. These are descriptions of the Express language (ready January 1990), Physical File (1990), Framework for Conformance Testing (1990) and an Overview Document (April 1990).

One of the perceived shortcomings of IGES is the size of the standard, i.e. the large number of entities. This makes it very difficult to create a consistent and complete implementation. STEP will solve this problem by the creation of Application Protocols. Each STEP subcommittee defines entities which, taken together, describe their field of expertise. The entities from all subcommittees are collected to form an entity pool. An Application Protocol is defined as a subset of the entity pool relevant to a particular application, together with any special constraints and additional information, which may include testing information. An implementation of a STEP file reader or writer will have to be able to process all entities which constitute a particular Application Protocol. The idea is that, if two systems are to exchange information, agreement is required on which Application Protocol is to be used. The validation of processors will involve checking that the processor can deal with all entities of the relevant Application Protocol, but it is not necessary for a processor to deal with all entities of the entire STEP standard.
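The relationship between the entity pool, an Application Protocol and processor validation can be sketched in a few lines of Python. This is only an illustration of the subset idea described above: the entity names, the protocol name and the helper functions are all invented for the example and do not come from the STEP documents.

```python
# Hypothetical entity pool: every name below is invented for illustration.
ENTITY_POOL = {"point", "line", "circle", "beam", "hull_plate", "fe_node"}


def define_protocol(name, entities, pool=ENTITY_POOL):
    """Define an Application Protocol as a named subset of the entity pool."""
    unknown = set(entities) - pool
    if unknown:
        raise ValueError(f"{name}: entities not in the pool: {sorted(unknown)}")
    return {"name": name, "entities": frozenset(entities)}


def missing_entities(processor_entities, protocol):
    """Return the protocol entities that a processor cannot handle."""
    return protocol["entities"] - set(processor_entities)


def conforms(processor_entities, protocol):
    """A processor conforms if it handles every entity of the protocol."""
    return not missing_entities(processor_entities, protocol)


# A made-up protocol covering simple 2D drafting entities.
drafting = define_protocol("drafting", {"point", "line", "circle"})

# This processor handles the whole protocol (and one entity outside it).
full_reader = {"point", "line", "circle", "beam"}
# This one lacks 'circle', so it would fail validation for 'drafting'.
partial_reader = {"point", "line"}
```

Note that `full_reader` conforms without handling the whole pool, which is the point of the scheme: validation is made against the agreed protocol, not against the entire standard.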

The actual data transfer between two computer systems is by means of a physical file. For ease of transfer the file uses ASCII characters only, and moreover in such a way that the file is human-readable. The syntax of the file is derived automatically from the Express definitions in the data models. This has the advantage that the developers of an application model do not have to concern themselves with the physical implementation of the model, but can concentrate on the accurate description of the entities. Only in a few cases, particularly where efficiency of the file is a major concern, is it necessary for the model developer to be aware of how entities actually look on the physical file.

The syntax of the physical file follows from the Express definitions in a straightforward manner. As an example, consider the Express definition

ENTITY point; x-coor : real; y-coor : real; z-coor : real; END-ENTITY;

Actual instances of this entity on the physical file could be as follows:

#15 = POINT (3.3, 4.4, 5.5); #16 = POINT (6.6, 7.7, 8.8);

The one-to-one mapping of the attributes of Express entities and parameters in the physical file will be obvious. The numbers #15 and #16 are identifiers of the points so that later references to the points can be made.
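To make the mapping concrete, here is a minimal Python sketch that reads instance lines of this shape and pairs each parameter with the corresponding attribute of the `point` entity. It assumes comma-separated real parameters and handles only this simplified form; it is not the RDST program and does not implement the full physical file syntax. The names `SCHEMA` and `read_instances` are invented for the example.

```python
import re

# Attribute names in declaration order, copied from the 'point' entity above.
SCHEMA = {"POINT": ["x-coor", "y-coor", "z-coor"]}

# Matches one instance of the simplified form: #<id> = <ENTITY> (<params>);
INSTANCE = re.compile(r"#(\d+)\s*=\s*([A-Z_]+)\s*\(([^)]*)\)\s*;")


def read_instances(text, schema=SCHEMA):
    """Return {identifier: {"entity": name, attribute: value, ...}}."""
    instances = {}
    for ident, entity, params in INSTANCE.findall(text):
        if entity not in schema:
            raise ValueError(f"#{ident}: unknown entity {entity}")
        names = schema[entity]
        # Type check: every parameter of 'point' must be a real number.
        values = [float(p) for p in params.split(",")]
        if len(values) != len(names):
            raise ValueError(
                f"#{ident}: expected {len(names)} parameters, got {len(values)}"
            )
        # One-to-one mapping: the n-th parameter is the n-th attribute.
        instances[int(ident)] = {"entity": entity, **dict(zip(names, values))}
    return instances


points = read_instances(
    "#15 = POINT (3.3, 4.4, 5.5); #16 = POINT (6.6, 7.7, 8.8);"
)
```

After this, `points[15]` holds the three coordinates of the first point keyed by attribute name, so a later reference to `#15` can be resolved by a dictionary lookup.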

The decoupling of the data descriptions and file implementation is one of the attractive design features of STEP. It has the consequence that the software for reading and writing the file can be written in a way which is independent of any particular application area. At RAL a post-processor has been written (called RDST - for ReaD STep file) which reads any STEP file and provides an easy to use interface to the information contained in the physical file. The advantage of using such a program is that the user does not have to be concerned with details of reading the physical file, for instance syntax checking or type checking of the parameters. It is important to ensure that the physical file is accurately checked for errors, and therefore RDST is internally driven by a parser which ensures that all errors are captured. The RDST program will be linked to the RAL Express compiler.

The use of this software is of course not limited to files or applications defined by the STEP standard. For any data exchange by means of a physical file, work is required to define the syntax of the file and to write file readers and writers. The work involved can be reduced if one uses Express to define the area of interest. This has the advantage that one is forced to give an accurate description of the area of interest, but no further action is required, as the syntax of the physical file then follows from Express and the standard file reader/writer can be used. Software for writing the STEP physical file is under design at the moment.

Lancaster University maintains a collection of software packages for micros which is freely available to the academic community. The software has been grouped into several areas, one for each of the machines covered - currently the BBC, IBM PC, Apple MAC and Atari.

The Lancaster Kermit Distribution Service and Micro Software Archive has migrated to Lancaster's new Sequent Symmetry UNIX machine, and we have taken the opportunity to make some major changes. The main thing you will notice is that both the old services are now combined under the heading of the National P D Software Archive. The new name reflects the fact that we are now funded by the Computer Board as a national service. There is now one account only, and all software is accessible via this. The software can be collected by FTP (the preferable method) or in terminal sessions as before.

The Archive now contains a collection of PC-SIG, PC-BLUE and COMUG software for IBM PC and compatible systems. This has been purchased on behalf of the academic community by the Combined Higher Education Software Team (CHEST) and is mounted at their request.

Terminal calls: 000010403000 FTP: 00001040300096ftp E-mail: 00001040300096ftp.mail.

The username you give to access the service is pdsoft, which has password pdsoft. NOTE THAT YOU MUST GIVE BOTH USERNAME AND PASSWORD IN LOWER CASE.

If you access the Archive in a terminal session, you'll find yourself talking to a custom UNIX shell that has a small, but adequate repertoire of commands. UNIX users should note that this is NOT the CShell, Bourne Shell, or any other shell you have ever come across; do not expect extrapolations from these to be valid!

Probably the best place to start is with the command help: this takes you into a new interactive help system that you can browse around and should, we hope, tell you all you need to know.

Until quite recently, the major role of computers in science and engineering research has been limited to finding numerical solutions to problems that are too complicated to be solved algebraically. As a result, it is not widely appreciated that computers can be used to perform symbolic algebra and hence to obtain algebraic solutions to certain types of problem. Computer algebra systems such as REDUCE, Maple and MACSYMA have been available for many years but they have never enjoyed the popularity in the UK that they have in North America.

In order to promote the use of computer algebra systems (CASs) in the UK academic community, the Computer Board created the Computer Algebra Project and appointed a Support Officer at the University of Liverpool Computer Laboratory for a period of three years, beginning in October 1987. His remit is to provide advice and information on all aspects of computer algebra. This is achieved in a number of ways:

The Project acts as a national advisory service answering queries on computer algebra from universities, polytechnics and Research Council institutions throughout Britain. Most of the queries are received and answered by electronic mail via the Project username:

ALGEBRA@UK.AC.LIVERPOOL.

The queries already received range from general questions such as "What can CASs do?" and "Which CAS is best suited to my needs?" to questions about specific mathematical problems and algebra systems such as "How can I solve a set of simultaneous ordinary differential equations using Maple?" and "How can I generate FORTRAN code for a finite-difference scheme using REDUCE?" Technical questions from computer centres about the installation and maintenance of algebra systems are also handled.

In the first 18 months of the Project, over 350 queries were received and answered. Many of these involved lengthy exchanges of electronic mail and the writing of code to solve the problem or to duplicate it at Liverpool.

The Support Officer prepares documents on computer algebra systems. The most important is A Guide to Computer Algebra Systems which gives detailed information on the systems which are currently available and compares their features in several tables. It also includes information on the availability of each CAS for a range of machines and operating systems, together with addresses for ordering the systems. The Guide is updated regularly to reflect changes in the systems.

A booklet entitled An Introduction to Maple is also available. It describes the main features of the computer algebra system Maple and it is intended to help newcomers to the Maple system. It is also useful to users of other CASs who are thinking of moving to Maple.

In his welcoming address to the 2000 plus delegates from 68 countries, the IFIP's President, Ashley Goldworthy, exhorted them to use the Congress as a bridge builder between people, technologies and users, a sentiment also echoed in the message received from the American President, George Bush.

The tone of the Congress was set by Professor Donald Knuth of Stanford University in his keynote speech taking the theme of Theory and Practice. He suggested that to produce good quality software was hard and that higher standards of accuracy had to take priority over everything else. This, he suggested, would only be achieved by funding a wide range of small projects rather than one vast project with predetermined goals. The major requirement was the provision of better tools for professionals, with a greater level of understanding and co-operation between Computer Scientists and the user community.

This contribution was followed by John Young, President and CEO of Hewlett-Packard, who claimed that the issues facing the industry were:

He suggested that the movement towards standards cannot be reversed and would be fully supported by his organisation. After this the Congress got down to the real business of 112 sessions split into 11 tracks with speakers from 36 countries.

It is impossible to comment on many sessions, but some of particular interest to the EASE community include the announcement by C G Bell of Ardent Computers that Stellar and Ardent are to merge into a new company, Stardent. Details were limited but further information will be published in the Newsletter as and when it becomes available.

There appears to be considerable interest in Object Oriented Programming as it is claimed to offer the opportunity of increased productivity. To achieve this the requirement is: build fast, build cheap, build flexibly, with the emphasis on reusable software.

Expert Systems came in for criticism. At least one speaker claimed that Expert Systems would never be fault-free and in consequence were unsuitable for emergency control or real-time systems.

A new buzzword was defined, GROUPWARE, this is:

This activity is to be supported by real-time desktop conferencing, using workstations running something like X.400. BT were involved, so maybe the service will be announced in the not too distant future!

The Congress ended with a final keynote address by Bill Joy of Sun Microsystems. Firmly wedded to the concept of open systems, his vision of the future decade includes:

It will be interesting to see if any, or all, of these predictions come true.

Since the report in the June 1989 issue of the EASE newsletter I have written to every engineering department in UK universities and sent a questionnaire to 140 departments to gauge computer usage in undergraduate and taught-postgraduate courses. To date, 47 questionnaires have been completed and returned. Thank you! I am busy mounting the data onto a database, analysing it and preparing to issue a software catalogue at the end of September.

In due course I will issue short reports in this newsletter, summarising sections of the questionnaire.

A sub-department of US Social Security recently had to pay out its first cheque over $100. Unfortunately the department's software could only cope with cheques up to $99.99. The bill for altering the software in the cheque pay-out system to cope with amounts greater than $100 was estimated to be $1.4m!

It is estimated that in the UK, over one billion pounds per year is spent on keeping software working. This is not because software wears out, but because the world has changed or someone wants to do something similar but not quite the same with the software.

Software is very easy to change - after all, it is only text. Yet how long does it take for research students to understand that really useful program which has been around the department for years and was written by Dr Smith who left ages ago? What happens when the computer supplier sends out the latest version of the operating system, which fixes all those annoying bugs, but means that a key experiment no longer runs? Can anything be done to help?

The answer is that there are indeed ways of coping with these and other similar problems, which primarily are due to the evolution of software. The field in Computer Science which is tackling these problems is termed Software Maintenance.

The following article explains what the problems are and where solutions to them can be found. You are invited to find out more on the subject and how EASE can help you by coming to an EASE seminar titled Software Maintainability - And How To Avoid Re-Inventing Wheels. The seminar will be held at Rutherford Appleton Laboratory on Thursday 9th November commencing at 11am in the R22 Lecture Theatre.

We can divide software maintenance into two major areas. The first of these is concerned with looking after what has been termed geriatric software. We have all come across examples of such software, which have just grown over the years; they have probably been modified by generations of research students and RAs. Ideally we would like to throw such software away and start again, but it is much too valuable to discard, and far too time consuming (and expensive) to rewrite. We must make the best of a bad job and persevere. At the seminar a range of possibilities for coping with this frequently found situation will be discussed. Techniques such as the rejuvenation of the software represent a cheap but effective approach. This involves simple improvements such as adopting standards for data names, adding section summary descriptions, and improving code layout.

On some occasions, a major re-engineering of the software may be warranted; parts or all of the system are rewritten in a form which is more maintainable. This is a much more expensive option, but may be justified when the system is expected to be viable for many years yet (or when it may have commercial potential!). In other cases, modifications may only in practice affect a small part of the code (hot spots), and it may be sufficient just to focus in on that part of the system, and leave the rest unchanged.

More than 50% of the time involved in this type of software maintenance is typically spent trying to understand how the software works, and how it all fits together. Usually, the original programmer left years ago, and even if he or she left any notes on the design, these have been invalidated by subsequent changes. The major concern is always that a seemingly innocuous change in the part of the system we are working on might have an unexpected side effect in a completely different part of the program. The problem may not show up immediately; it might only appear at the end of a long experimental run, resulting in a wasted set of results. How do we control this "ripple effect"? How should we test the program? A number of software tools to aid program comprehension and to assist in program analysis are becoming available, and these will be described in the seminar.

One of the attractions of software is that it can be altered. The more successful a piece of software is, the more likely it is that people will wish to alter it. Thus we should not be surprised that software evolves: we should expect this as the norm and actively plan for it. How then can we make software more maintainable from the outset? How can we design and write a system so that future generations of research students will not have to spend months or years poring over listings before they are in a position to undertake their actual research?

The seminar will discuss a range of techniques, from the very simple to the more complex, for designing and writing systems which are maintainable. These range from good programming techniques, such as the use of modularisation and data hiding, through to the use of design methods and the role of configuration management systems, which are essential for software which is produced by teams. The solutions are not technical alone: experience shows that effective software project management techniques are vital. In other words, software maintenance is not simply programming, but needs to call on a range of software engineering skills. The Centre for Software Maintenance at Durham has considerable experience of working on problems such as these with industrial collaborators. The seminar will present case studies based on some of this work.

For many years, software maintenance has been regarded as a poor relation in the software engineering research community. Most Computer Science research was focused on initial development despite the fact that in many software systems, the majority of the overall lifecycle costs are spent on software maintenance. Studies have shown that the majority of the software maintenance activity is not bug fixing, but is evolution.

The IEEE definition of software maintenance states the issue succinctly: it is the modification of the software product after delivery to correct faults, to improve performance or other attributes or to adapt the product to a changed environment.

In the last few years, the attention of the computer science research community has focussed rather more on software maintenance - it is becoming a respectable area in which to do research. The Centre for Software Maintenance at Durham was the first such centre to be set up worldwide to specialise in software maintenance. The seminar will briefly address some of the research areas being undertaken in the field and likely advances will be described. For example, work on hypertext documentation systems which (unlike conventional documentation) really help the programmer to understand the system will be mentioned.

Successful software in science and engineering fields may live for many years. Most of the effort that is spent on successful software is consumed by software maintenance and particularly by software evolution. If we are to maximise productivity of our research staff, we need to use better ways of undertaking such software maintenance. This will allow our researchers to spend proportionately more time doing useful work and less time on the overheads.

NISSBB is the online information service being provided by the National Information on Software and Services (NISS) Team at Bath University. This information service is available to anyone who can gain access to the UK's Joint Academic Network (JANET): primarily computer users at UK universities, polytechnics, research councils, and some colleges of higher education.

NISSBB has been set up to become the focal point for information dissemination in the UK higher education computing community. Our main objective is to promote the sharing of the vast pool of knowledge and expertise which exists within the community. You can help by filling in the gaps within NISSBB.

Parts of NISSBB are now controlled/edited by people other than the NISS team itself. Check the individual information page for contact details (or check the Introduction page for a specific section).

The JANET X.29 call address is given by the NRS name UK.AC.NISS (standard form). If you are unable to use this name, the number you should call is 000062200000.

If you call JANET hosts through a PAD unit, and you intend to call NISS regularly, your local Computer Unit may be willing to add the name NISS to the list of addresses stored within the PAD. Then you could call up:

call niss

The NISS Catalogue is currently being developed at Southampton University as a database of the software and dataset holdings of 3rd level academic institutions. The catalogue is available to users over the JANET network.

The Catalogue contains two record types: firstly, a complete bibliographic and technical description of an item, and secondly, a description of the implementation of the item at a specific site. Thus, a given program or dataset is described in detail in one record, but may also have a number of associated records each describing how the item is installed and used at a particular location.
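The two record types and the one-to-many link between them can be pictured with a small Python sketch. It is purely illustrative: the field names, the example entries and the two lookup helpers are assumptions made for this example and say nothing about how the Catalogue is actually stored.

```python
from dataclasses import dataclass


@dataclass
class ItemRecord:
    """Bibliographic and technical description of one program or dataset."""
    item_id: str        # hypothetical key linking the two record types
    title: str
    description: str


@dataclass
class SiteRecord:
    """How one item is installed and used at one particular site."""
    item_id: str        # refers to an ItemRecord
    site: str
    notes: str


def holdings_for_item(item_id, site_records):
    """All site records describing installations of a given item."""
    return [r for r in site_records if r.item_id == item_id]


def holdings_for_site(site, site_records):
    """All site records belonging to a given site."""
    return [r for r in site_records if r.site == site]


# Invented example data: one item described once, installed at two sites.
item = ItemRecord("pkg-001", "Example Statistics Package", "Invented entry")
sites = [
    SiteRecord("pkg-001", "Site A", "Installed on the central mainframe"),
    SiteRecord("pkg-001", "Site B", "Installed on departmental workstations"),
    SiteRecord("pkg-002", "Site A", "A different item at the same site"),
]
```

Keeping the detailed description in a single item record means it is written once, however many sites hold the item.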

Catalogue records are created to international bibliographic standards and therefore can be imported from or exported to other bibliographic systems.

Version 2 of the Catalogue is now available. New features include access to Local Holdings information both for a particular item and a specific site, and enhanced Help facilities. The user interface has been improved. However, screen mode operation will not be available for a few months.

The content of the catalogue still consists primarily of a test database of software held at Southampton University. However, the project has now begun cataloguing the holdings of other sites.

Version 2 of the NISS Catalogue is now available for use over JANET. Access to the catalogue can still only be gained via a special guest user ID and password, although it is planned to provide logon-free access soon. User IDs can be obtained by contacting C K Work, as above. When requesting an ID please supply a postal address for any accompanying documentation to be sent.

Having obtained an ID and password, use the following procedure to access the catalogue:

Your comments on the Catalogue are welcome as we wish to meet your requirements as closely as possible.

Had a similar article been written thirty years ago, it would have been entitled something like "Why should engineers consider the use of computers?" Without doubt, thirty years on, scientific computing has made its mark in engineering practice. Computers are used routinely to carry out various kinds of numerical analysis, eg in fluid dynamics and structural design. Computer graphics are also used extensively by engineers who carry out their drafting and design work using CAD packages. If engineers are already using computers, why should they consider using AI techniques now? What is AI anyway?

The Encyclopaedia of AI (Shapiro, 1987) defines AI as the study of ways in which computers can be made to perform cognitive tasks at which, at present, people are better. Examples of problems that fall under the aegis of AI include common sense tasks, etc. In addition, AI includes expert tasks, such as diagnosing diseases, designing computer systems, locating mineral deposits, and planning scientific experiments. The techniques that AI applies to solving these problems are representation and inference methods for handling the relevant knowledge and search-based problem-solving methods for exploiting that knowledge. There are many good introductory books on AI, eg Introduction to Artificial Intelligence (Charniak and McDermott, 1985). A book that has been written specifically for engineers is What Every Engineer Should Know About AI (Taylor, 1988).

Engineers may not be interested in systems that perform common sense reasoning. However, systems that can perform expert tasks are highly relevant. In engineering there are a lot of problems that do not fit into the strict, rigid framework of algorithmic solution. Engineers are always making decisions that are based on their experience. They need to weigh up various pieces of information, consider the constraints that are influencing the current problem and then decide on a particular action. This decision making process occurs in design, construction, and maintenance of all kinds of engineering products in different areas of engineering.

Conventional computing techniques are inadequate when it comes to representing expertise. Rules of thumb have to be encoded in a way that the computer system can use to draw inferences when appropriate. Very often symbolic as well as numeric values have to be represented. With the advent of AI techniques, in particular knowledge-based techniques, it is possible to build systems that perform expert tasks. They are commonly called expert systems or knowledge-based systems.

A browse through any AI journal or the proceedings of an AI conference shows that AI techniques are being applied to many different areas of engineering. For example, the papers presented at one of the major applied AI conferences - The Second International Conference on Industrial & Engineering Applications of Artificial Intelligence & Expert Systems, 1989 - include descriptions of systems which diagnose faults in switching systems, computer performance, power plants, space shuttle main engines, jet engines, etc. There are papers on the design of chemical plant layout, aircraft engines, aircraft structures, etc. There are other applied papers on process control for different kinds of plants, manufacturing scheduling systems, flight simulator configuration, machine and cutting tool selection, and many more.

You may ask: there are plenty of prototype systems, but are AI systems practical? Some people do get the impression that the only successful and practical expert systems are two well known ones, XCON (Kraft, 1984) for configuring DEC computers and Prospector (Duda, Gaschnig and Hart, 1979) for mineral exploration. This is far from the truth. In fact there are hundreds, if not thousands, of expert systems in practical use. Practical systems vary in size. Some may take many man-years to build, like XCON and Prospector, and some may take only months if the application domain is narrow and specific. A recent book, The Rise Of The Expert Company (Feigenbaum, McCorduck and Nii, 1988), gives many accounts of how companies are using artificial intelligence to achieve higher productivity and profits. For example, the company Du Pont in the USA has over 200 expert systems presently in use: each has an average return of $100,000. Some systems that were built required only a small investment of a month of an engineer's time. There are hundreds more systems being developed at Du Pont. The book describes many expert systems that are currently in use in the construction, manufacturing, mining and transportation industries.

According to an analysis of the latest surveys on expert systems in Japan (Koseki and Goto, 1989), the number of expert systems being developed by non-AI specialist companies is rapidly increasing. In the 1987 survey there were only 100 systems listed and in 1989 there were over 300. It is also important to note that the number of prototype systems has decreased from 1988 to 1989, whereas the number of practical systems has increased significantly (see table below):

| Year | Prototype | Field Test | Practical Use | Total |
|---|---|---|---|---|
| 1987 | 50 | 31 | 19 | 100 |
| 1988 | 109 | 55 | 86 | 250 |
| 1989 | 99 | 89 | 144 | 332 |

The explanation for the increase in the number of practical systems is that many of the prototype systems have been put into practical use. It was concluded that expert system technology in Japan has evolved from an experimental phase to a practical trial and practical-use phase.

AI techniques are relevant and they have been proved practical. Companies that have applied AI techniques to their work have found great benefit. AI systems help to maintain quality and consistency in decision making. They also help to preserve and disseminate the expertise that is available within a company.

There will be a one-day seminar entitled "Why Should Engineers Use AI Techniques?" at RAL on 6th December. At this seminar, applications of AI to different areas of engineering will be described.

Charniak, E. and McDermott, D. (1985), Introduction to Artificial Intelligence, Addison-Wesley.

Duda, R., Gaschnig, J. and Hart, P. (1979), Model Design in the Prospector System for Mineral Exploration. In Expert Systems in the Micro Electronic Age (ed. Michie), Edinburgh University Press.

Feigenbaum, E., McCorduck, P. and Nii, H.P. (1988), The Rise Of The Expert Company, Macmillan.

Koseki, Y. and Goto, S. (1989), Trends in Expert Systems in Japan. Paper presented at the Seminar on Japanese Advances in Computer Technology and Applications, June 1989, Computational Mechanics Institute, Southampton.

Kraft, A. (1984), XCON: An Expert System Configuration System at Digital Equipment Corporation. In The AI Business (eds. Winston and Prendergast), MIT Press.

Shapiro, S. (ed.) (1987), Encyclopaedia of AI, Wiley-Interscience.

Taylor, W.A. (1988), What Every Engineer Should Know About AI, MIT Press.

Refinement (or reification) has come to be understood as the systematic development/transformation of specifications into designs or implementations. The first workshop in this series was held at York in 1988, the second at Milton Keynes in January 1989, and this, the third, will be hosted by IBM UK Laboratories at Hursley Park.

The first day is planned to consist of invited talks designed to set the scene for the remaining days of the workshop. They will form a "taxonomy of refinement". The topics to be covered are:

The second and third days will consist of technical presentations also given by invited speakers.

The presenters will develop themes raised on the first day, and workshop sessions will be organised to allow time for discussion and debate.

A non-exhaustive and non-definitive list of invited speakers is:

Attendees are expected to take an active role in the workshop sessions. The workshop, as a whole, will concentrate on presenting a clear picture of the industrial application of refinement and the latest ideas emerging from research laboratories.

Finite Element Analysis and Transputers, 3 October, RAL

Image Analysis and Transputers, 7 November, RAL

Software Maintainability - How to Avoid Re-Inventing Wheels, 9 November, RAL

Why Should Engineers Use AI Techniques?, 6 December, RAL

Transputer Awareness, 2 November

Exploiting the Transputer, 16 November

The Third Refinement Workshop, 9-11 January 1990

Applications of Transputers: the SERC/DTI Transputer Initiative's 2nd International Conference on the Applications of Transputers will be held at Southampton University on 11-13 July 1990.

Parallel Processing for Computational Mechanics, 1st International Conference, Parallel '90, Southampton, 4-6 September 1990

Parallel Computing in Engineering & Engineering Education, UNESCO, Paris, 8-12 October 1990