The ad-hoc Panel met on 15 September, under the chairmanship of Prof Roger Wootton, to determine the activities to be carried out under the reduced EASE Programme. The Panel's discussions mainly focused on Parallel Processing and the Education and Awareness activities. The recommendations of the Panel, which were endorsed by the Engineering Research Commission at its meeting on 30 September, were:

In the next issue we will formally announce our plans for introducing the charges for events and advertising.

The Engineering Research Commission believes that the EASE Programme continues to have an important role to play in improving awareness and practice in computing matters within the engineering research community. When originally created more than 4 years ago, it was asked to provide broad support to software applications in engineering research. Through the enthusiastic and expert approach of RAL staff, it has discharged this role admirably.

However, needs and priorities change, so the Commission has reviewed, through an expert panel drawn from within the active research community, what these should be to support its future plans. Consequently, the on-going Programme will now focus on the Commission's key areas of interest and committees are being encouraged to take a pro-active role in liaising with the EASE staff to ensure that the Programme continues to be relevant to its present and future needs. I am sure this more focused Programme, concentrating more closely on areas important to the mainstream research of the committees, will be of great benefit to the whole community.

(This new column, which is to become a regular feature, aims to create an on-going flow of readers' experiences, to share with others, in using the vast range of software (commercial and non-commercial) they use in pursuit of their engineering and other research. We will publish inputs from readers provided they are within the bounds of acceptability. Hopefully as the responses increase we will be able to focus on one particular area in each issue. I invited Ian Johnson from our User Interface Design Group to write the first article, which deals with C and C++ tools under UNIX. Your comments/additions to this, plus any other contributions giving your own experiences, are particularly encouraged. Ed.)

This article summarises the experiences of software developers in RAL Informatics Department with a tool for assisting C and C++ programming under the UNIX Operating System. It presents an overview of the capabilities of the tool and reports on its usefulness.

The C++ programming language, and to a lesser extent the C language, are used within Informatics Department on a wide range of projects of varying sizes. Standard UNIX tools such as make are assumed to be a fundamental part of the software developers' culture, and are not covered here. Instead, this article describes a tool which makes the testing part of the software development cycle more productive.

The Purify tool from Pure Software Inc. reports on memory access errors while a program is running. Such errors can result in inefficient use of memory and, in the worst cases, abnormal termination of the program. Purify works behind the scenes to provide information which can help the programmer correct errors in programs.

Purify detects over 28 types of memory access problems. These can indicate real errors (e.g. reading/writing NULL pointers, indexing outside array bounds, using deallocated stack storage) or just potential troublespots (e.g. copying uninitialised memory). These errors can linger in code for years without being detected, and only show themselves at an inconvenient time (e.g. when one is demonstrating a program!).

When compiling a program, the link step is put under the control of Purify. This results in error-checking code being inserted into all object files and libraries, which are then linked together as normal. When the Purify'd program is run, all references to memory are checked against a number of criteria to ensure that they are valid. This catches many more references than the debugging malloc approach as used in the Sentinel tool.

Purify can report on invalid memory references either each time they occur, the first time they occur, or collect all reports and notify them at the end of program execution. The notification method can be to send reports to standard error, to a specified logfile, or via e-mail to a specified user. These features give a great deal of control over the operation of Purify.

Users in Informatics Department have responded favourably to Purify, as there is minimal effort needed to use it and the reward in finding potentially obscure bugs is great. These findings concur with the good worldwide reputation of Purify, as expressed in many articles on Usenet News and in the technical press. The Purify documentation describes each type of memory access error clearly and offers suggestions on how to cure the problem.

There is a comparison of Purify and other memory checking programs in the October 1993 issue of Byte (page 28). The September 1993 issue of UNIX Review contains a review of Purify, Sentinel and Insight (pages 53-61), where Purify receives the highest rating of the tools surveyed. Purify currently runs on the SPARC platform under Solaris 1.X (SunOS 4.X) and Solaris 2.X, and under HP-UX 8.07 and HP-UX 9.01 on the Hewlett-Packard 9000/700 range (soon to be available on the Hewlett-Packard 9000/800).

Purify is available in Europe from Productivity Through Software plc.

Parsys Ltd demonstrated their T9000 range of parallel processing systems in the Parallel Evaluation Centre (PEC), operating at Rutherford Appleton Laboratory.

Parsys have developed a modular parallel systems enclosure that can be used to build scalable parallel systems. Versions of the enclosure are available to hold T9000, T800 and peripheral devices (such as disks and i/o). An enclosure that contains a Sun SPARC processor provides a stand-alone capability for the parallel system.

Parsys brought two enclosures: a T9000 system and a T800 version. The T9000 enclosure can hold up to 16 INMOS T9000s; the system displayed contained two beta-release T9000s running at 10 MHz. Both enclosures were mounted onto the PEC's INMOS B300 ethernet system.

Parsys demonstrated the operation of the T9000 running X-windows based applications. The T9000 showed a significant increase in communication performance over the T800.

Engineering researchers wishing more details on the T9000 processors, the B300 and the Parsys enclosures can contact the Parallel Processing Group at RAL which provides technical advice and hands-on support to the PEC.

Two new types of device for 3D input and output were presented to us at the Visualization Community Club's 3D Visualization in Engineering Research seminar which was held earlier this year. These were 3D scanners (input) and stereolithography devices (output).

3D scanners are used for capturing the surface of a 3D object by measuring the spatial coordinates of a large number of points on the object. This is accomplished by moving the object in front of an optical or mechanical device that measures point coordinates on the surface of the object. The data produced by the scanner is represented as a set of CAD entities (i.e. points, polylines, splines, 3D mesh, triangulated polysurface, B-spline or NURBS) in various data exchange formats. The size of the mesh depends on the surface properties (smooth or irregular) and the accuracy required. 3D scanners are widely used in a range of applications: product design, visualization/animation, multimedia, inspection/analysis, etc.

Those interested in using 3D scanners can use the services of a 3D scanning bureau such as that run by 3D Scanners Ltd, who recently ran a pilot service for the academic community with support from the Advisory Group On Computer Graphics (AGOCG).

Stereolithography is the first solid imaging process enabling one to generate a physical object directly from a computer database, thus opening new frontiers in the field of rapid prototyping and manufacturing. The process uses photopolymer resins and laser light to build up solid parts layer by layer from a computer data description, usually created by CAD programs. Mechanical properties of resins and the accuracy of the prototype produced have been greatly improved by giving the resins greater impact resistance, enhanced elongation at break, and the ability to be drilled, tapped, milled and turned. Objects produced by this technique can be used for visualization and verification, for fit and interference tests, packing requirements, medical prosthesis evaluation, flow testing, making casts and numerous other automotive, aerospace, component and medical applications.

3D Systems Inc Ltd are one company selling stereolithography devices.

At the recent Building Simulation 93 conference I attended in Adelaide, Australia (16-18 August), a significant number of papers addressed, directly or indirectly, the topic of Simulation Environments. The term environment is rapidly reaching the same cult status as knowledge-based, with the danger that indiscriminate, albeit in some sense correct, usage will tend to obfuscate instead of enlighten. I define an Environment as an extensible system providing a rich collection of collaborative/interworking tools/components and supporting the design, development, operation and/or management of domain specific processes by using these components. Improved definitions welcome! Clearly this definition encompasses a very wide spectrum of systems and indeed the papers presented covered a wide range of complementary objectives, approaches and techniques. As there was little obvious commonality between the systems described, it was necessary to first loosely categorize the systems in order to pick out the main features of each. At least this helped ensure like was being compared with like.

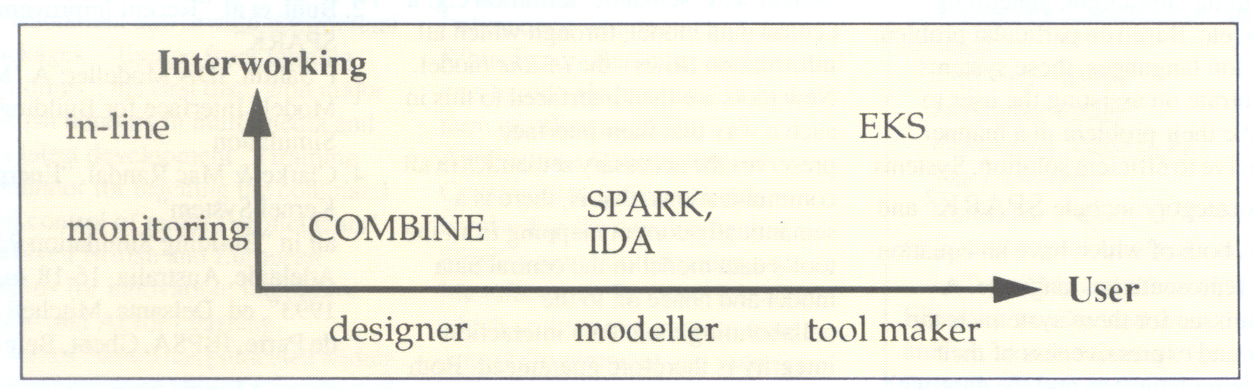

There are two main orthogonal axes for classification as shown in Figure 1. Firstly, simulation environments can be classified according to their targeted user. The systems described targeted 3 distinct categories of user: designers, modellers and tool builders.

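Secondly, environments can be classified according to how they control tool/component interworking. The main categories here are the in-line model, in which all information flows through a system-wide semantic definition, and a network of single focus semantic definitions.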

Designer-targeted systems aim to support the design process by integrating simulation (and other) tools into a coherent environment. The user's prime interest is in the simulation results, and only from necessity in the data input and the work required to obtain them. Typical of this sort of system is the COMBINE environment, where the disparate appraisal and CAD tools currently used by designers are being composed into an Integrated Building Design System [1]. The crucial issues here are data storage/exchange, in particular maintaining the semantic integrity of the data being exchanged by the tools, and the support of the design process itself, controlling the information flows and ensuring the integrity of the process. This latter task may also involve an (intelligent?) front end to isolate the designer from the detailed operation of the tools/system.

Modeller-targeted environments focus on supporting the modelling process, i.e. on collecting and organizing data, controlling simulation, generating results etc. Based on particular problem definition languages, these systems concentrate on assisting the user to describe their problem in a manner conducive to efficient solution. Systems in this category include SPARK [2] and IDA [3], both of which have an equation-based representation language. A crucial issue for these systems is the power and expressiveness of their underlying language and the ease with which the user can generate it. A second consideration is the efficiency and flexibility with which the simulation can be carried out. These sorts of environment would be of particular value as components within a designer environment replacing conventional simulation tools, as by their nature they are amenable to automated generation of problem descriptions.

The third kind of environment is targeted at the tool builders. In this case, the emphasis is on assisting the user to build the simulation tool itself, rather than apply a given tool to a problem. In this category is the EKS [4] system, based on a set of O-O classes corresponding to the building and thermodynamic domains which can be used as basic building blocks to build quickly and securely a wide range of modelling programs. As system building environments, these do not fix on any particular problem definition language, solution technique, etc. but attempt to provide the user with a range of software components that can be plugged together to create different types of simulation program. Thus these environments would be used to create modeller environments. They have a strong relationship with software engineering environments, and are concerned with the same issues, namely, validity of components, integrity of composition and security of execution.

Turning to the second classification axis, how the environment controls the tool/component interworking, the main requirement is to ensure that when information is exchanged, not only is the syntax matched on both sides, but also that the semantics of the passed information are preserved. The obvious mechanism is to provide a system wide semantic definition, e.g. a central data model, through which all information flows - the in-line model. New tools are then interfaced to this in such a way that their interface preserves the necessary semantics in all communications, that is, there is a semantically correct mapping from the tool's data model to the central data model and hence on to the collaborating tool. Tool interaction integrity is therefore guaranteed. Both the COMBINE project, using a central DB-based data model, and the SPARK project, using an equation-based representation, have adopted this strategy. The disadvantages are the tendency for the central controller to support the smallest common subset, rather than a superset, of the tools' capabilities and the extreme difficulties in adding any new tool that necessitates any modifications whatsoever to the definitions in the central controller.
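The in-line model can be illustrated with a small Python sketch. It is not taken from COMBINE or SPARK: the field names, the two tools and the adapter functions are all hypothetical, chosen only to show every exchange passing through one central definition.

```python
# Hypothetical central data model: the only fields every tool may exchange.
CENTRAL_FIELDS = {"wall_area_m2"}


def cad_to_central(cad_record):
    """Adapter from an imagined CAD tool (areas in mm^2) to the central model."""
    central = {"wall_area_m2": cad_record["area_mm2"] / 1_000_000}
    # Anything the central model has no slot for is lost at this point.
    return {key: value for key, value in central.items() if key in CENTRAL_FIELDS}


def central_to_thermal(central_record):
    """Adapter from the central model to an imagined thermal tool's input."""
    return {"A": central_record["wall_area_m2"]}


def exchange(cad_record):
    """All tool-to-tool traffic goes through the central model (in-line)."""
    return central_to_thermal(cad_to_central(cad_record))


thermal_input = exchange({"area_mm2": 12_000_000, "colour": "red"})
print(thermal_input)
```

The area arrives with its meaning (square metres) intact, but the colour held by the CAD tool never reaches the other side, which is the smallest common subset tendency in miniature. Carrying colour would mean changing the central definition itself.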

An alternative mechanism is not to attempt to generate a single system-wide semantic definition, but to rely on a network of single focus semantic definitions - a mix of client-server and distributed object models. This encourages specialization of the tools (objects), and direct tool to tool interworking (client-server) adhering to the appropriate semantic definitions (service definitions). Each tool makes explicit the semantics it attaches to each communication, and the environment polices the interworking to ensure the integrity of operation. The EKS adopts this strategy, using defined "transport" objects to carry the communicated information and a Template/Metaclass scheme to ensure only semantically correct communication channels are established. This gives a system that is easy to modify and extend, but, as for any O-O system, places a much greater burden on the individual tool maker to ensure the generality and autonomy of the individual tool and on the environment builder to provide a complete and coherent set of service definitions.

The above is very much an off-the-cuff reaction to the plethora of software environments described at BS93 and is an initial ad-hoc attempt to provide a framework for assessing these sorts of system.

[1] Augenbroe, et al. An Integrated Simulation Network

[2] Buhl, et al. Recent Improvements in SPARK

[3] P Sahlin. IDA Modeller: A Man-Model Interface for Building Simulation

[4] Clarke & Mac Randal. Energy Kernel System

all in Building Simulation 93, Adelaide, Australia, 16-18 August, 1993, ed. Delsante, Mitchell & Van de Perre, IBPSA, Ghent, Belgium, 93

A series of case studies on the application of parallel processing systems to engineering research problems is being launched by the Parallel Processing in Engineering Community Club (PPECC). The aim is to show, by example, ways in which parallel computing can help engineering researchers. The studies will focus on the contribution of parallel processing in solving the engineering problem (rather than on the engineering problem per se) and on the approaches and methods followed in practice. Issues of interest will include the role of parallel processing in design decisions, trade-offs between functionality, performance, and cost, and experience with the hardware and software products used. Options rejected, with the reasons, will be of as much interest as those eventually taken.

The studies will be developed by RAL staff in co-operation with researchers to extract their experience with real engineering problems. Researchers with suitable examples, either recently completed or well advanced, are invited to contact the PPECC support staff at RAL. In the first instance, two or three examples are sought, preferably of the application of affordable, modest-sized, parallel computing (eg in embedded applications or control systems). RAL staff will document and disseminate the results as exemplars of current practice, highlighting the information of benefit to others. Direct contributions from researchers will also be welcome where appropriate. Our intention is to establish a consistent style for the series.

Please send a message including contact details and a brief outline of possible case study examples to cpw@inf.rl.ac.uk.

Prof James Powell has been appointed IT Awareness Coordinator for the Engineering Research Commission. James Powell is Head of the Department of Manufacturing & Engineering Systems at Brunel University and Lucas Professor of Design Systems. For the past thirty years he has been researching into all aspects of communication and education for packages. He has also been responsible for developing a new training strategy based upon a Fourfold Model of Learning. He is currently incorporating this prototype development into several commercial packages. Escape from burning buildings, his first disc, won major British honours in multimedia, and his latest development, a training simulator for teaching the command and control of major fire incidents, received British and European awards for innovation, interactive audio and AI for Learning.

James Powell has published widely and has received almost £3 million of extra mural support for his studies into information transfer. He has an international network of some twelve researchers who are brought together to tackle major projects under his direction. His recent explorations include studies into team building and simultaneous engineering to promote creativity in design.

He has been a recent member of SERC's Engineering Board and Chairman of its Education and Training Committee and he chaired a panel developing SERC's Engineering Education and Training Policy upon which much of the new White Paper Realising our Potential was based. He has just finished a term of office as SERC's IT Applications Coordinator for Construction and was closely involved in the development of SERC's new Construction as a Manufacturing Process initiative which seeks major support from central Government and industry.

The World occam and Transputer User Group (WoTUG), with the full support of The Transputer Consortium and publisher John Wiley & Sons Ltd, has launched a new journal, Transputer Communications. The target audience is the entire High Performance Computing (HPC) Community. An Editorial Board has been appointed, consisting of leading members of the transputer community, supported by a small team of Consultant Editors, headed by Prof David May (the chief architect behind the transputer and the occam multi processing language), and Prof Tony Hoare from the University of Oxford (who was responsible for the development of the Communicating Sequential Processes theory).

The aim is to make the journal become THE forum for information exchange and experience sharing as well as a journal of record - ie a recognised route for documenting the results of research and development. Two thirds of the journal will consist of fully refereed long articles of high technical and academic quality and relevance to the HPC Community. The remainder will comprise short articles, invited short technical articles, user-group communications, editorial comment, letters, etc.

Transputer Communications seeks high-quality submissions that meet its aims and objectives. Issue number 1 was published in August 1993 and future issues are planned on a quarterly basis.

For more information about submitting articles and/or subscribing to Transputer Communications please contact:

Prof Peter Welch

Managing Editor

Computing Laboratory

The University

Canterbury, Kent

Two further programs have been added to the Common Academic Software Library on HENSA and are briefly described below. Access to the archive can be made via the JANET X.25 network with the address unix.hensa.ac.uk. Both of these programs are placed under the directory /misc/cfd/software, together with the TEAM software (described in ECN issue 42 this year).

One of the new programs is FLUX, which solves the 1D linear advection equation with unit speed or the inviscid Burgers' equation. It is primarily intended for teaching purposes, allowing the user to test various algorithms and discretisation schemes; a number of discretisation schemes are implemented, e.g. forward-time centred-space (FTCS), upwind differencing, MacCormack, Backward Euler with central differencing, Backward Euler with upwind differencing, Beam and Warming (2nd order accurate in time) and Lax-Wendroff. Further schemes can be readily added. Some GKS graphical display routines are also used. The program is menu driven and is self-explanatory.
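For readers who have not met these schemes, the following Python sketch shows the kind of experiment such a program allows. It is not FLUX code: the function names, the periodic grid and the sine-wave initial condition are assumptions made for illustration. It applies three of the explicit schemes named above to the linear advection equation u_t + u_x = 0.

```python
import math


def step(u, c, scheme):
    """Advance u by one time step on a periodic grid.

    c is the Courant number dt/dx (the wave speed is 1).
    """
    n = len(u)
    new = [0.0] * n
    for i in range(n):
        left, mid, right = u[i - 1], u[i], u[(i + 1) % n]
        if scheme == "upwind":
            # First order; stable for 0 <= c <= 1 but diffusive.
            new[i] = mid - c * (mid - left)
        elif scheme == "ftcs":
            # Forward-time centred-space; unstable for pure advection.
            new[i] = mid - 0.5 * c * (right - left)
        elif scheme == "lax_wendroff":
            # Second order in space and time; stable for |c| <= 1.
            new[i] = (mid - 0.5 * c * (right - left)
                      + 0.5 * c * c * (right - 2.0 * mid + left))
        else:
            raise ValueError(f"unknown scheme: {scheme}")
    return new


def advect(scheme, n=100, c=0.5, periods=1):
    """Carry one sine wave round the unit periodic domain `periods` times."""
    u = [math.sin(2.0 * math.pi * i / n) for i in range(n)]
    for _ in range(round(periods * n / c)):
        u = step(u, c, scheme)
    return u


def max_error(scheme, n=100, c=0.5):
    """After one full period the exact solution equals the initial wave."""
    exact = [math.sin(2.0 * math.pi * i / n) for i in range(n)]
    return max(abs(a - b) for a, b in zip(advect(scheme, n, c), exact))


for name in ("upwind", "ftcs", "lax_wendroff"):
    print(name, max_error(name))
```

With these settings the upwind scheme visibly damps the wave, FTCS amplifies it, and Lax-Wendroff stays much closer to the exact solution.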

The other program to be recently added to the archive solves the 2D Navier-Stokes equations in their primitive variable formulation, using the NAG/SERC Finite Element Library (FELIB). This implementation is not efficient, but it is intended as a starting point for developing other finite element based approximations to the Navier-Stokes equations. The program uses a simple point iteration scheme to solve the non-linear algebraic system resulting from the application of the Galerkin method to the governing equations. A standard problem of laminar flow over a square cavity is used to test the program.
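The idea of such an iteration can be sketched in Python on a toy problem. This assumes that "point iteration" means successive substitution (Picard iteration): freeze the non-linear coefficients at the current estimate, solve the resulting linear system, and repeat. The 2x2 system below is invented for illustration and has nothing to do with FELIB or the cavity problem.

```python
def solve_2x2(a, b):
    """Solve the 2x2 linear system a x = b by Cramer's rule."""
    det = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    return [(b[0] * a[1][1] - a[0][1] * b[1]) / det,
            (a[0][0] * b[1] - b[0] * a[1][0]) / det]


def matrix(u):
    """Toy non-linear operator K(u): a fixed part plus a term depending on u."""
    return [[4.0 + u[0], 1.0],
            [1.0, 3.0 + u[1]]]


def picard(f, tol=1e-12, max_iter=100):
    """Solve K(u) u = f by repeatedly solving K(u_old) u_new = f."""
    u = [0.0, 0.0]
    for iteration in range(1, max_iter + 1):
        u_new = solve_2x2(matrix(u), f)
        change = max(abs(x - y) for x, y in zip(u_new, u))
        u = u_new
        if change < tol:
            return u, iteration
    raise RuntimeError("point iteration did not converge")


def residual(u, f):
    """Largest component of K(u) u - f."""
    k = matrix(u)
    return max(abs(k[i][0] * u[0] + k[i][1] * u[1] - f[i]) for i in range(2))


solution, iterations = picard([1.0, 2.0])
print(solution, iterations, residual(solution, [1.0, 2.0]))
```

The appeal is simplicity: each pass needs only a linear solve. The price is slow, at best linear, convergence, which is consistent with the remark above that the implementation is not efficient.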

The CFDCC was a co-sponsor for the IMechE Conference on The Engineering Applications of Computational Fluid Dynamics which was held on 7-8 Sept 1993. It was an interesting conference which generated a good amount of lively debate during the discussion sessions. About 20 members of the CFDCC attended, including about 10 research students who benefited from the large fee reduction which the CFDCC was able to negotiate. The CFDCC is intending to continue to collaborate closely with the IMechE in the areas of common interest.

At the time of going to press there are still a few places left on this one day seminar which is to be held at University College London on Wednesday 17 November. However, numbers are restricted and this is proving to be a popular subject. If you are interested in attending then please contact Debbie Thomas to check availability of places.

The popular Introductory School in CFD will be run again in January 1994. The School will provide a foundation in the physical understanding of fluid flow and the consequences for the numerical solution of fluid flow problems. It is intended to be suitable for recently-started graduate students or others starting in CFD research.

The topics to be covered in the School include the Navier-Stokes equations, simplifications to the equations determined by the physics (eg high and low Reynolds number problems, and thin shear layers), explicit and implicit time marching methods, turbulence models and their numerical implementation, pressure correction methods and grid generation. The formal sessions will comprise a mixture of review lectures on aspects of these topics in addition to lectures which present current research. The main course Lecturers are: Prof D M Causon (Manchester Metropolitan), S P Fiddes (Bristol), Prof J J McGuirk (Loughborough) and Dr B A Younis (City).

In addition to the formal lectures, there will be practical sessions where all participants will tackle a set of test problems which will be solved using some of the methods presented in the lectures. A network of SUN4s set up at The Cosener's House will form the basis of the computing facilities for the School. Some commercial packages and the lecturers' own software will be mounted on the system for use in the practical sessions. A brief introduction to computing under Unix will be provided for those not familiar with this operating system.

The Introductory School will take place in The Cosener's House, Abingdon on 10-14 January 1994.

The Club is intending to organise a meeting on current research in Aeronautical CFD. This is still in the planning stages but the date has been fixed for Wednesday, 20 April 1994. The seminar will be held in the recently refurbished Lecture Theatre at RAL. Watch this space for more details in the new year.

For more information about any aspect of the Community Club's activities please contact: Mrs Debbie Thomas Secretary, CFDCC

This year's World Transputer Conference (WTC) joined up with the annual German transputer conference, called the Transputer AnwenderTreffen (TAT). The combined event took place at the Eurogress Centre in Aachen, Germany on 20-22 September.

The conference was run as five concurrent streams. Keynote talks and a closing panel discussion formed the plenary sessions. Hardware and software demonstrations from commercial suppliers and academic poster sessions took place in an exhibition area. A 2 day tutorial programme held at the Klinikum followed the main conference.

Overall, the conference was a great success. I attended as a delegate and picked up a lot of positive feedback, particularly from some of the 56 young European researchers who would normally have been unable to attend, had they not received funding from the CEC! There were around 475 attendees, with many gratifyingly from the less affluent parts of Europe. (32 countries were represented in total.)

I arrived in Aachen on a Saturday evening right in the middle of the city festival. There was a great atmosphere in the open area surrounding the medieval cathedral, though I did quickly trip past the open-air stall where pigs' heads were being grilled on a tray. The conference banquet was held in the historic Town Hall. We stood around having drinks and a number of canapes went past on trays. I am afraid I am one of those people that will have at least one of everything that is not fish. Even when canape-style desserts arrived, we were still joking about it being like a banquet in microcosm or so this is it then. As the evening came to a close, it gradually dawned on us that that WAS it. There were complaints! Wits decided that the microcosmic banquet should become a regular feature of WTC conferences. The next evening Parsytec (a German firm that produces transputer systems) held a reception at their premises in Aachen. Food was provided in more conventional quantities.

The Klinikum, a monumental teaching hospital which acted as the site for the tutorials, is an overwhelming shout of an architectural statement. It looks like a cross between a land-locked oil-rig and a leviathan of an up-ended woodlouse. It adheres to the school of exposed services - vast candy-striped ductings clad the exterior. The inside is an astonishing shade of apple green.

Transputers On The Road, Dr. Uwe Franke, Daimler-Benz

The use of transputers to autonomously drive a car was demonstrated. I was impressed, having believed that autonomous driving was still some time away. The trick is to scale the size of the problem to the hardware, which has to be both commodity cheap and not add significantly to the price of a car. To reduce the computational power required, there is intelligent selection of which parts of the video image are to be analysed and also careful selection of the visual clues used to identify cars, road signs and road markings etc.

The system has already clocked up around 2000 km of autonomous driving on the autobahns of Germany. There were lively discussions amongst the delegates on the legality of this! The car really exists and was on display in the exhibition area. Dr Franke had driven it (or had it driven him?) from Stuttgart to Aachen!

Parallel Image Processing for Road Traffic Pricing and Port Container Traffic Control, Dr. Chuang Ping Derg, Rahmonic Resources Pte Ltd, Singapore

Singapore is a small island that supports a large population. To discourage traffic, car and road tax amount to several times the price of a car. The result is that only rich people have motor transport. A fairer system is to tax road usage directly using credit-cards which are slotted into the dash-board. These contain road units which are decremented automatically at electronic checkpoints and which can be simply purchased at garages. A system like this is no good, unless offenders can be caught. Computerisation means that the summons will probably get back to the house before the offender!

The system is built out of transputers and violations are identified using video cameras and image processing to capture licence plate information.

The port of Singapore is a busy thoroughfare, the third busiest in the world. Many containers are handled and many containers are lost! Using an image processing system similar to the above, containers are identified and their movements are tracked.

Architectural Advances in The T9000, Roger Shepherd, Inmos Ltd

Two aspects of the architecture of the T9000 were treated: the cache and shared channels. Such an admirably clear explanation was given of the design decisions in developing the cache of the T9000, that one could see no other way! However, predictability of performance for real time applications is lost with a cache. The T9000 solution is to use half the cache as fast on-chip memory and the other half of the cache as true cache. We gain from predictable performance when required, as well as general cache acceleration of a statistical nature. Instead of having to place critical data in on-chip memory as for the T800, the cache tables for this internal memory are set up to refer to the critical data notionally in off-chip memory. The result is that instead of moving the data to the on-chip memory - the on-chip memory is moved to where the data is. Clever, eh? So the cache memory is still actually acting as cache, except for half the cache there is no such thing as a cache miss.
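The effect described can be mimicked with a toy model in Python. It is only a sketch of the idea as reported in the talk, not of the T9000 hardware: the class, the LRU replacement policy and the line counts are all assumptions. Some lines are pinned to chosen addresses and can never miss, while the rest behave as an ordinary cache.

```python
from collections import OrderedDict


class SplitCache:
    """Toy cache: one part pinned to fixed addresses, the rest a small LRU cache."""

    def __init__(self, pinned_addresses, cache_lines):
        self.pinned = set(pinned_addresses)   # critical data: always resident
        self.cache_lines = cache_lines        # capacity of the ordinary part
        self.lru = OrderedDict()              # ordinary part, oldest entry first

    def access(self, address):
        """Return True on a hit, False on a miss."""
        if address in self.pinned:
            return True                       # predictable: never a miss
        if address in self.lru:
            self.lru.move_to_end(address)     # mark as most recently used
            return True
        self.lru[address] = None              # miss: bring the line in
        if len(self.lru) > self.cache_lines:
            self.lru.popitem(last=False)      # evict the least recently used
        return False


cache = SplitCache(pinned_addresses={100, 101}, cache_lines=2)
trace = [1, 1, 2, 3, 1, 100, 101]
print([cache.access(address) for address in trace])
```

Address 1 hits on its second access but misses again once addresses 2 and 3 have pushed it out, whereas the pinned addresses 100 and 101 hit regardless of what the rest of the program has been doing.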

Overall, I left the conference re-enthused and greatly impressed by those researchers and developers who are pushing the field forward. Many thanks go to the organisers of the conference both in the U.K. and Germany for their sheer hard work.