I am delighted to report that the first IT Awareness in Engineering Workshop, held in April, played a clear role in the establishment of a new research programme in Neural Networks worth up to £1.5M. Full details can be found in the following article. Well done to all those who put in so much effort both during and after the meeting. I now hope that teams of engineering and IT members will work together with industry to develop suitable proposals for future research. In particular, the next three workshops, which are already scheduled, will provide opportunities for such collaboration.

...VR is too important a technology to the nation to continue its unstructured research and development - US President's Office of Science & Technology Report; May '93.

If Virtual Reality (VR) is so important, why, then, has industrial uptake of the technologies been so slow on both sides of the Atlantic? Putting the issue of unstructured research and development to one side (a topic which would, from a UK standpoint, justify a paper in its own right), it is a sad fact that virtual games still dominate even the most respected of VR and advanced graphics events abroad. But the games industry is not solely to blame for industrial reticence. Even the so-called serious hardware and software vendors are at fault, resorting to outlandish VR experiences to attract delegates to their exhibition stands.

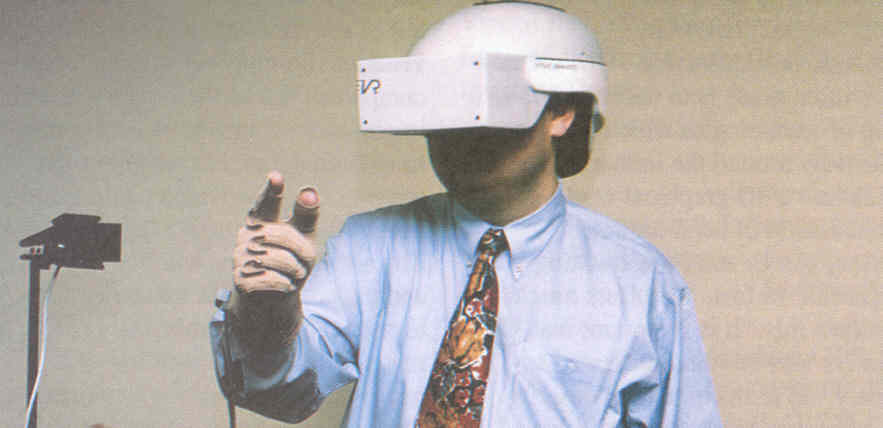

In the experience of the author, it is how VR technology is sold to industry that dictates how successful one is in accelerating its acceptance, or attracting quality contract research or collaborative sponsorship. One mistake that is often made is to describe VR as a group of technologies which revolve exclusively around the immersion of a human into a 3D graphical world, using a combination of head-mounted displays, gloves and body tracking equipment. In fact, VR offers much more than this. It is important that VR is always viewed - and presented to industry - in terms of a suite of tools and technologies which, carefully and skilfully implemented, are capable of matching the capabilities of the human user to the requirements demanded by the application or task he or she is required to work with. In other words, regardless of whether the application involves aircraft engine maintenance, surgery or the remote control of robots, and regardless of whether the application requires the immersion of the user into the virtual environment or interaction through the window (conventional screen-based, or desktop VR), Virtual Reality is first and foremost an advanced tool for the design, prototyping and implementation of human-system interfaces.

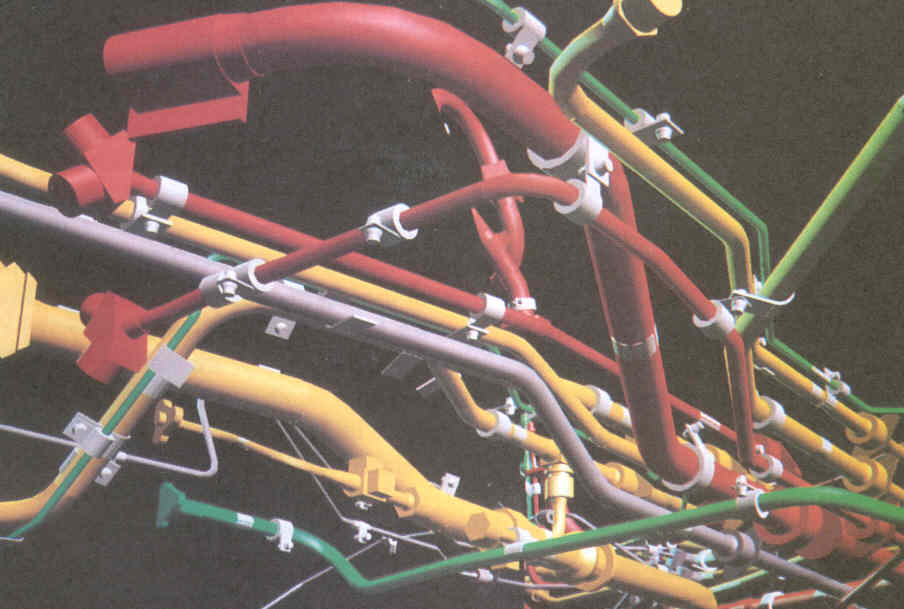

Another problem is that, when one is put into a position to define VR, there is a tendency to become preoccupied with graphics. Certainly, interaction with 3D graphics is an important element of VR. Basic visualisation activities throughout the UK have demonstrated that 3D fly-throughs of, for example, urban models and virtual supermarkets have an important place in the establishment of potential markets. But the significance of VR lies in the nature and structure of the data underlying the graphics. The intelligence and attributes of, and associations between, objects in a virtual environment permit an approximation to the nature and behaviours of their real-life counterparts. Or, in cases where such objects or processes do not exist in reality or are not visible to the naked eye (e.g. abstract data, molecular structures), these features of VR make the objects' behaviours more accessible and understandable to the human user.

Definitions and prejudices aside, the key to generating effective VR solutions for industrial applications does not lie in the hard-sell tactics adopted by many (if not all) of the hardware and software vendors. Forcing VR systems upon companies before their technical and, importantly, commercial requirements have been defined is a highly risky strategy, and one which could backfire should the deliverables fail to perform. The alternative lies with independent and experienced VR companies who, together with academia, can provide a reasonably low-cost and low-risk research and development environment. The aim must be to deliver timely and unbiased technical advice, together with properly managed, high-quality application demonstrators accessible to all levels of the target industry (from the shop floor, through trades unionists, to senior directors). To do this effectively, the team should be interdisciplinary, comprising specialists with track records in fields such as human factors, software engineering, electronics, CAD, database and graphical modelling.

In the UK, and as part of Salford's ongoing Virtual Reality & Simulation Initiative (VRS), co-ordinated by Intelligent Systems Solutions Limited (InSys), a strong model of industrial-academic collaboration is evolving which appears to be serving the future needs of British Industry exceptionally well, with important educational spin-offs. The background to VRS has been reviewed extensively elsewhere (e.g. Stone, 1994; Stone & Connell, 1994), but three example areas supporting the Salford model are worthy of summary.

In the engineering domain, work under way for Rolls-Royce plc and Vickers Shipbuilding & Engineering Limited has been addressing a variety of virtual prototyping and usability issues associated with the evaluation and maintenance of aircraft engines (specifically the Trent 800) and submarine compartments. As a result of the first phase of this work (Stone & Connell, op cit.), a comprehensive list of customer requirements for near-term production VR systems has been drawn up, highlighting academic research topics which would accelerate the maturation process of evolving or unproven VR technologies (such as body suits, force feedback, and so on).

In addition, on the basis of work conducted in association with the Ergonomics Unit of University College, London, the group is forming a further collaboration to coordinate and focus human factors activities over the next 3 years.

In the Built Environments arena, architectural and urban demonstrators developed for clients such as Welsh Water, South West Water and the Cooperative Wholesale Society are spawning numerous research topics. Many of these have already attracted funding to the University of Salford, or are on the verge of doing so, from such important bodies as EPSRC, the Department of the Environment and LINK IDAC. Such topics include database management and display, formal VR methodologies, advanced rendering techniques, and interactive front-ends for knowledge-based space planning systems.

Research and development in support of the North of England Wolfson Centre for Minimally Invasive Therapy (keyhole surgery; see Rubinstein, 1994) provides another good example of how close industrial and academic partnerships have, within 7 months of starting, begun to deliver practical solutions in one of the most technically challenging application areas for VR: human anatomy and physiology. Work undertaken by students in Edinburgh has resulted in a unique set of algorithms and deformable graphics routines for simulating human organs and tissue. These will be displayed to the laparoscopic surgeon via a new headset- and goggle-free (autostereoscopic) display system developed by Richmond Holographics. Work undertaken by a Manchester Metropolitan University student has produced a first-stage force/tactile feedback system for laparoscopic instruments, which will ultimately interface directly to the Edinburgh code.

VRS, and its emerging opportunities for continued industrial-academic partnerships, is demonstrating two key trends. The first is that VR is not merely going to change the future of IT in British industry: it is changing that future, and it is changing it now. The second is that the demonstrators commissioned by the industrial members of VRS have generated, and continue to generate, quality research topics suitable for academic pursuit. Topics such as database management, interactive peripherals design, usability and human factors issues, advanced rendering techniques and novel approaches to sensory feedback are all being evaluated as necessary developments to enhance the industrial uptake of this exciting and challenging technology.

Rubinstein R H, Smooth Navigation in Virtual Worlds; New Electronics; June, 1994.

Stone R J, A Year in the Life of British Virtual Reality: Will the UK's Answer to Al Gore Please Stand Up?!; Virtual Reality World (Meckler); 2(1); January/February, 1994.

Stone R J & Connell A P, The UK's Virtual Reality and Simulation Initiative: One Year Later; Virtual Reality World (Meckler); September/October, 1994.

Bob Stone is a Senior Consultant within Intelligent Systems Solutions Limited (formerly and now incorporating Advanced Robotics Research Limited), with responsibilities for the Company's Human Factors programmes, its Virtual Reality & Simulation (VRS) Initiative, and related VR projects for British Industry. He currently holds the position of Visiting Professor of Virtual Reality within the University of Salford and has also recently taken on the role of Director of Virtual Reality Studies for the North of England Wolfson Centre for Minimally Invasive Therapy.

Richard Brook, Chief Executive of EPSRC, has just revealed his vision for the future of the Council. Technology foresight will clearly become a major driver of Council strategy, and the Council will therefore establish research priorities on the basis of user needs. A flatter and leaner organisational structure has also been agreed. This will hopefully improve communication between the Council and its researchers and make for greater efficiency. Professor Brook is also keen to ensure that there is a genuine evaluation of the research sponsored by EPSRC, especially in terms of user impact. Within this context of change, the IT Awareness Initiative is still felt to be extremely important and will continue for the foreseeable future. Barry Martin, former Head of the Engineering Division, becomes the new Head of Innovative Manufacture, the Initiative which acts as the parent for IT Awareness. Under his careful direction I am sure our Initiative will go from strength to strength.

On a more pragmatic note, all the papers for the Informing Technologies to Support Engineering Decision Making event, to be held at ICE on 21st and 22nd November, are ready for printing. They make excellent reading and I am sure this workshop will be extremely successful. Our last event was oversubscribed, so please book early to avoid disappointment. The formal programme for the Virtual Reality and Rapid Prototyping event is now complete. However, I am still looking for stimulus contributions to guide EPSRC strategy in this area, particularly for the Responsive Processing theme, and for good demonstrations of Rapid Prototyping systems.

We are also seeking contributors for the last event of the session. The proposed topic is Object Technology, but so far little interest has been expressed, so if it is to be a runner, please contact me quickly with an indication of interest. Some have suggested that an event dealing with Multimedia and other Communicating Technologies might be a more appropriate alternative; let me know what you think of this idea. The venue for this meeting has been set: it will be the University of Strathclyde, where Ken McCallum has agreed to be our host.

The number of software archives at HENSA Unix has been increased with the addition of collections of Matlab, Maple and Mathematica programs and utilities. We are also the home of a large parallel computing archive which contains around 200 megabytes of freely-distributable software, documents, papers and other materials related to Parallel and High Performance Computing (HPC), with special interest in the transputer processor and the occam language.

HENSA Unix is well established as a provider of ftp and gopher resources. With the enormous growth of the World Wide Web it is now providing a number of useful Web services.

General access to the archive is through the Uniform Resource Locator (URL): http://www.hensa.ac.uk/hensa.unix.html which provides links to parts of the archive and other services. This page always contains details of scheduled archive downtime due to maintenance. The What's New page: http://www.hensa.ac.uk/WhatsNew.html contains details of improvements to and changes within the archive. A weekly report is generated listing the software added over the preceding week. New facilities available to users are also listed here. The changes for preceding months are archived in separate files, but still available.

The netlib, statlib, SunSite and parallel collections all have Web and gopher pages within their archives and we have made links to these archives from our main page. The advantage here is that these archives have been indexed by the maintainers and so the pages are as comprehensive and complete as possible.

The archive contains hundreds of thousands of files, so being able to find the information that you require is of utmost importance. To assist with this a number of searching facilities have been provided via the page: http://www.hensa.ac.uk/Search.html

A simple search, based on filenames, can be performed via this page. The search mechanism relies on a browser capable of handling Web forms. If your browser cannot handle these then an alternative search mechanism is available with the URL: http://www.hensa.ac.uk/cgi-bin/Simple.SearchlSINDEX.pl A number of the text based parts of the archive, for example, the USENET Frequently Asked Questions, can be searched using WAIS. These areas have been WAIS indexed and a sophisticated searching gateway placed on top. This is available through the URL: http://www.hensa.ac.uk/WAISform.

Again, a browser supporting forms is required to make use of this search mechanism.
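WAIS-style searching works from a precomputed index of the words in each document, rather than by scanning every file at query time. The sketch below illustrates that idea with a plain in-memory inverted index and boolean AND matching. It is not the WAIS protocol, its relevance ranking, or the HENSA gateway, and the document names are hypothetical.

```python
import re
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple

WORD = re.compile(r"[a-z0-9]+")


def build_index(docs: Iterable[Tuple[str, str]]) -> Dict[str, Set[str]]:
    """Map each lower-cased word to the names of the documents containing it."""
    index: Dict[str, Set[str]] = defaultdict(set)
    for name, text in docs:
        for word in WORD.findall(text.lower()):
            index[word].add(name)
    return dict(index)


def search(index: Dict[str, Set[str]], query: str) -> Set[str]:
    """Return the documents that contain every word of the query."""
    words = WORD.findall(query.lower())
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())  # intersect: all words must match
    return results


faqs = [
    ("unix-faq", "Frequently asked questions about Unix shells and signals"),
    ("www-faq", "Questions about the World Wide Web and proxy servers"),
]
idx = build_index(faqs)
print(search(idx, "questions proxy"))  # {'www-faq'}
```

The index is built once, so each query only looks up a handful of word entries. This is why indexing the large text areas of an archive pays off.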

We currently have an experimental facility aimed at providing users with a more helpful view of parts of the archive which would not otherwise be available via the Web. At the moment this is only available for the UUNET archive. Links allow you to navigate the archive and pull files or tar archives of directories.

In the same way that the ftp archive saves time and network bandwidth by providing a local source of useful software, the World Wide Web Proxy Cache saves these valuable resources by bringing Web documents closer to the people requesting them. With a simple reconfiguration, virtually any Web browser can be made to use the HENSA Unix Cache as a proxy server.

With this simple reconfiguration, a Web browser changes its behaviour from fetching documents itself to asking HENSA Unix to fetch them on its behalf. The advantage here is that the HENSA Unix Cache will keep a copy of many of the documents it fetches. If anyone asks for that document again, the copy from the cache is delivered to them at SuperJANET speeds. This saves considerable time as many documents are being requested from busy servers across slow international networks. The cache hit rate is over 60%.
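The behaviour described above can be modelled in a few lines. This is a toy sketch, not the HENSA Unix cache software. `fetch_remote` is a hypothetical stand-in for a slow fetch across an international link, and the cache never expires or evicts anything.

```python
from typing import Callable, Dict


class CachingProxy:
    """Fetches documents on behalf of clients and keeps a copy of each one."""

    def __init__(self, fetch_remote: Callable[[str], str]) -> None:
        self.fetch_remote = fetch_remote  # hypothetical slow origin fetch
        self.store: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    def get(self, url: str) -> str:
        if url in self.store:
            self.hits += 1              # hit: serve the local copy
            return self.store[url]
        self.misses += 1                # miss: go to the origin server...
        document = self.fetch_remote(url)
        self.store[url] = document      # ...and keep a copy for next time
        return document

    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


proxy = CachingProxy(lambda url: f"<contents of {url}>")
for url in ["http://a.example/", "http://b.example/", "http://a.example/",
            "http://a.example/", "http://b.example/"]:
    proxy.get(url)
print(proxy.hit_rate())  # 0.6
```

With five requests for two documents, three are served from the cache, giving a 60% hit rate. The real figure depends entirely on how often users ask for the same documents.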

If you are using a recent version of XMosaic then you can set the following X resource:

Mosaic*httpProxy : http://www.hensa.ac.uk/

If you prefer, or if you are using a recent version of Lynx, you can set the environment variable like this:

% setenv http_proxy http://www.hensa.ac.uk/

Other Web browsers may also take advantage of the environment variable; please check the documentation that came with your browser.

In addition to caching Web documents, the proxy can cache ftp and gopher requests. The following X resources or environment variables can be set:

Mosaic*ftpProxy : http://www.hensa.ac.uk/

Mosaic*gopherProxy : http://www.hensa.ac.uk/

% setenv ftp_proxy http://www.hensa.ac.uk/

% setenv gopher_proxy http://www.hensa.ac.uk/

Once you have configured your browser to use the HENSA Unix Caching Proxy, it will fetch all documents via the cache, even your own local documents. To inhibit this behaviour, a number of browsers use the environment variable no_proxy to specify a list of domains for which the proxy will not be used. This variable may be set as follows:

% setenv no_proxy <comma-separated list of domain patterns>
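The `<scheme>_proxy` and `no_proxy` settings are read by the browser, not by the cache. The sketch below shows one plausible way a client could act on them, assuming simple domain-suffix matching for `no_proxy`. Individual browsers differ in the details, and `mydept.ac.uk` is a hypothetical local domain.

```python
from typing import Mapping, Optional
from urllib.parse import urlsplit


def choose_proxy(url: str, env: Mapping[str, str]) -> Optional[str]:
    """Return the proxy URL to use for `url`, or None to connect directly.

    Assumed convention: `<scheme>_proxy` names the proxy for each scheme and
    `no_proxy` is a comma-separated list of domain suffixes that bypass it.
    """
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    for pattern in env.get("no_proxy", "").split(","):
        pattern = pattern.strip().lower().lstrip(".")
        if pattern and (host == pattern or host.endswith("." + pattern)):
            return None  # local domain: fetch directly, bypassing the cache
    return env.get(f"{parts.scheme}_proxy")


env = {
    "http_proxy": "http://www.hensa.ac.uk/",
    "ftp_proxy": "http://www.hensa.ac.uk/",
    "no_proxy": "mydept.ac.uk",  # hypothetical local domain
}
print(choose_proxy("http://info.cern.ch/", env))      # http://www.hensa.ac.uk/
print(choose_proxy("http://www.mydept.ac.uk/", env))  # None
```

Matching on a leading dot means `www.mydept.ac.uk` bypasses the proxy, but an unrelated host such as `notmydept.ac.uk` does not.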

Note: A no_proxy patch for XMosaic 2.4 exists at:

ftp://info.cern.ch/pub/www/proxy_support/Mosaic-2.4

When using the HENSA Unix Cache there is a chance that the document you receive will not be perfectly up-to-date. If a specific expiry date is not attached to a document, then an estimate is made on the basis of the last time that the document was modified. Most users of the cache are happy with the chance of a document being a couple of days out-of-date if they receive it in a reasonable amount of time.
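The estimate based on the last-modified time can be expressed as a simple rule: the longer a document has gone unchanged, the longer a cached copy is assumed to stay valid. In the sketch below, the freshness lifetime is a fixed fraction of the document's age. The factor of 0.1 is an assumption chosen for illustration, not a documented HENSA Unix setting.

```python
from datetime import datetime, timedelta
from typing import Optional

LM_FACTOR = 0.1  # assumed fraction of a document's age used as its lifetime


def expires_at(fetched: datetime, last_modified: datetime,
               explicit_expiry: Optional[datetime] = None) -> datetime:
    """Return when a cached copy should stop being served without a re-fetch."""
    if explicit_expiry is not None:
        return explicit_expiry  # the server stated an expiry date: use it
    # Otherwise estimate: a document unchanged for a long time is assumed
    # unlikely to change soon.
    age = fetched - last_modified
    return fetched + age * LM_FACTOR


fetched = datetime(1994, 11, 1, 12, 0)
print(expires_at(fetched, fetched - timedelta(days=20)))  # 1994-11-03 12:00:00
```

Under this assumption, a document last changed 20 days before it was fetched is served from the cache for a further 2 days. That is consistent with the couple of days' staleness most users accept.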

The HENSA Unix archive currently makes available a wide range of technical reports, and Masters and PhD theses from a variety of institutions including:

Searches may be performed by author and title and, in addition, keyword searches may be made on both the abstracts and the keyword lists provided with each work. Either abstracts or complete works are easily retrievable. To use this facility, either connect with a gopher client:

gopher unix.hensa.ac.uk

and select

Bibliographic Services

or use a World Wide Web interface to connect to

http://www.hensa.ac.uk/Bib

We are interested in expanding this service by adding material from new sites. The work involved on your part is minimal, as the software at HENSA Unix handles all the searching and data transfer. The advantage of this system is that you maintain your report archive locally, without needing to contact HENSA Unix directly. HENSA Unix will make available only those items listed in an index file provided by you. This means you can assemble reports in your anonymous ftp area and make them accessible via HENSA Unix only when you add an entry to the index file.

To take part, you need to make all your reports and abstracts available from an anonymous ftp area on one of your local machines. The abstracts must be in ASCII text format. The reports can be in any format, but PostScript is preferred as this allows reports to be previewed using a World Wide Web browser. You also need to provide a single index file containing records of all the reports you wish to make available, and make this file accessible via anonymous ftp. HENSA Unix takes a copy of your index file nightly. As soon as the first copy has been obtained, your reports are accessible via HENSA Unix, and subsequent updates become visible immediately after each nightly update. Information extracted from the index and abstracts is used as the data for search operations.
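The format of the index file is not specified here. Purely as an illustration, the sketch below assumes records separated by blank lines, each made up of `Field: value` lines. It shows how such a file could be parsed and searched by author, title or keyword. The field names, paths and author names are hypothetical and do not describe the format HENSA Unix actually expects.

```python
from typing import Dict, List


def parse_index(text: str) -> List[Dict[str, str]]:
    """Split blank-line-separated records of `Field: value` lines into dicts."""
    records: List[Dict[str, str]] = []
    for block in text.strip().split("\n\n"):
        record: Dict[str, str] = {}
        for line in block.splitlines():
            if ":" in line:
                name, value = line.split(":", 1)  # split at the first colon
                record[name.strip().lower()] = value.strip()
        if record:
            records.append(record)
    return records


def find(records: List[Dict[str, str]], field: str, term: str) -> List[str]:
    """Return titles of records whose `field` contains `term` (case-insensitive)."""
    term = term.lower()
    return [r.get("title", "?") for r in records
            if term in r.get(field, "").lower()]


INDEX = """\
Title: Load Balancing on Workstation Networks
Author: A. N. Other
Keywords: parallel, load balancing
Report: pub/reports/tr-01.ps

Title: Persistent Objects in a Single Address Space
Author: J. Bloggs
Keywords: SASOS, persistence
Report: pub/reports/tr-02.ps
"""
records = parse_index(INDEX)
print(find(records, "keywords", "sasos"))
# ['Persistent Objects in a Single Address Space']
```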

We will soon be switching off our connection to X.25 JANET in favour of the IP-based SuperJANET. For users who have no option but to use X.25 protocols, national relay services exist:

telnet relay: net.ja.telnet (telnet to: unix.hensa.ac.uk; login: archive)

ftp relay: uk.ac.ft-relay (user name: anonymous@unix.hensa.ac.uk; password: your e-mail address)

For information on how to access these relays please contact your local support personnel.

If you have any questions, suggestions or remarks on the HENSA Unix Archive, please either e-mail archive-admin@unix.hensa.ac.uk or fill in one of our WWW comment forms. Provided your browser supports Web forms, you may submit comments to the archive administrators via an online fill-in form called a Comment Form at http://www.hensa.ac.uk/comment.html

This is probably the simplest way to report problems or suspected faults.

The UK University Funding Councils funded a £3m initiative, the Information Technology Training Initiative (ITTI), to improve the availability of IT training in UK HE institutions.

The funding ran from August '91 to July '94.

Some thirty projects received funding and have produced many quality products in areas such as:

The electronically available products have been accessed by well over 400 institutions from far and wide, with some products having access rates in excess of 200 a day. The paper-based and PC-based products, which are distributed from the Universities' Staff Development Unit (USDU), have been delivered to 139 UK HE institutions, 15 other UK institutions and 14 non-UK universities.

Whilst funding has now ceased, a considerable number of significant products will become available between now and January '95 and USDU will continue to distribute products at least until July '95.

Full details of products and links to project World Wide Web (WWW) or gopher servers are to be found on the ITTI WWW server:

http://www.hull.ac.uk/Hull/ITTI/itti.html

Details of new products will be posted to NISS Bulletin Board (Section H7) and the WWW.

The project at UMIST, the only project to address a specific engineering topic (Finite Elements), is nearing completion. It is worth noting that the Open University is planning to adopt a significant portion of the materials.

Slowly but surely, parallel hardware (ranging from expensive multiprocessor systems to virtual parallel systems made from networks of computers) is becoming more common. This trend is observable in two styles of computing resource. The first is the dedicated compute server, where the machine runs long and complex calculations such as simulations and analyses. The second, and more common, is a network of workstations. Often this is not used as a powerful single system, but with the right support it could behave as one, potentially allowing more complex tasks to be solved far faster.

Almost all computers are used in conjunction with an operating system, and most people are familiar with at least one of the common systems such as Unix or MS-DOS. What is often less clear is why operating systems are needed and what positive benefits they bring. Indeed, some people view an operating system as a large, complex and often unreliable piece of software which only prevents them from exploiting the full performance of their computer. For certain classes of problems running on certain dedicated types of hardware this is true, but for many more applications operating systems do have a use. Their purpose is to manage the resources of the computer fairly and equitably between all those who have a legitimate need of them, and to protect the information the system is managing.

Given that this is the goal of operating systems why does the style of the operating system matter? Firstly, operating systems, both for parallel and conventional hardware, have several different styles or architectures, and an appreciation of these styles has a great impact on the efficient use of the system. Secondly, different styles will vary in performance depending on the applications they are running and the hardware on which they themselves run. Finally, different styles provide different environments in which the program runs (and communicates with other programs) which affects how easily they may be written and maintained.

This article will look, very briefly, at several current styles and at a new style, the Single Address Space Operating System (SASOS), which is gaining in research popularity. It will also explain how the SASOS aims to improve on the environment and efficiency of existing systems.

The most common style of operating system today is the single, large monolithic kernel, which provides all the resource management, combined with a set of useful tools and applications which are separate programs. All requests for resources (e.g. processor time or disk space) are issued to the kernel via system calls (comparatively slow pseudo-instructions that transfer control into the kernel), and the kernel must fulfil these requests. This structure is claimed to be efficient (though counter-claims exist) but is often less flexible or adaptable. This is most noticeable in the way it copes with errors and failures.

This structure is losing ground to the microkernel approach outlined below, and several monolithic systems have adopted some of the ideas of microkernels to improve their flexibility or performance. Importantly, monolithic kernels are notoriously difficult to adapt to parallel and distributed systems. Typically the result is either a group of independent machines which co-operate only at a superficial level, or a very simplistic, and often costly, overall management of the parallel resources (e.g. as a static farm).

The microkernel is the most popular of current structures, and most newly developed operating systems at least claim to be based around one. In this approach, the microkernel provides the absolute minimum of functionality, or resource management. All the rest of the functionality that one has come to expect of an operating system kernel is provided by servers: processes which receive requests in the form of messages (or remote procedure calls). This structure is often claimed to be less efficient than a monolith, typically by proponents of the monolith.
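The microkernel structure can be illustrated with a toy model in which the kernel does nothing but deliver messages to registered servers. The sketch makes obvious simplifications. A real microkernel passes messages between protected address spaces, whereas here a Python function call stands in for message delivery. The file server is hypothetical.

```python
from typing import Any, Callable, Dict

Message = Dict[str, Any]


class Microkernel:
    """Knows only how to deliver a message to a named server."""

    def __init__(self) -> None:
        self.servers: Dict[str, Callable[[Message], Any]] = {}

    def register(self, name: str, server: Callable[[Message], Any]) -> None:
        # New services are added by registering servers, not by editing
        # the kernel.
        self.servers[name] = server

    def send(self, name: str, message: Message) -> Any:
        # Delivery (here, a plain function call) is the kernel's only job.
        return self.servers[name](message)


class FileServer:
    """A hypothetical user-level file server holding files in memory."""

    def __init__(self) -> None:
        self.files: Dict[str, str] = {}

    def __call__(self, message: Message) -> Any:
        if message["op"] == "write":
            self.files[message["name"]] = message["data"]
            return len(message["data"])
        if message["op"] == "read":
            return self.files.get(message["name"])
        raise ValueError(f"unknown operation: {message['op']!r}")


kernel = Microkernel()
kernel.register("fs", FileServer())
kernel.send("fs", {"op": "write", "name": "notes", "data": "hello"})
print(kernel.send("fs", {"op": "read", "name": "notes"}))  # hello
```

Adding a service means registering another server; the `Microkernel` class itself does not change. Because clients only ever name a server and send it a message, the same interface could in principle forward messages to a server on another machine.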

Unsurprisingly, counter-claims exist! The real advantage of the microkernel is its flexibility, which comes from the ease of introducing new servers, and its ability to get closer to the hardware, with the potential performance gains that may allow. Which approach is actually faster or more flexible remains unclear. However, almost all current research points to the microkernel, with the caveat that this depends on what you are doing and what fine tuning you are able to apply.

The real benefit of microkernels is the ease with which they cope with parallel and distributed systems. As all interactions are done via messages, simply altering the message-passing scheme so that it can deliver messages anywhere allows many nodes to cooperate as a single unified entity. When well implemented, this approach makes it very easy to move processes from one place to another. This is a useful way of spreading the computational load amongst a number of processors, a technique called load balancing, which is of great use in parallel systems.
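Load balancing can be as simple as repeatedly moving work from the busiest node to the least loaded one. The sketch below uses a greedy rule chosen for illustration, not taken from any system named in this article. At each step it migrates the process that best evens out the busiest and least-loaded pair. Balancing stops when no single move between those two nodes narrows the gap between them. Process loads are hypothetical integer estimates of CPU demand.

```python
from typing import Dict, List, Tuple


def balance(nodes: Dict[str, List[int]]) -> List[Tuple[int, str, str]]:
    """Greedily migrate processes; `nodes` maps node -> process loads.

    Modifies `nodes` in place and returns the moves as (load, from, to).
    """
    moves: List[Tuple[int, str, str]] = []
    while True:
        busiest = max(nodes, key=lambda n: sum(nodes[n]))
        idlest = min(nodes, key=lambda n: sum(nodes[n]))
        gap = sum(nodes[busiest]) - sum(nodes[idlest])
        # Moving a process of load p leaves a gap of |gap - 2p|, which is
        # smaller than the current gap only when 0 < p < gap.
        candidates = [p for p in nodes[busiest] if 0 < p < gap]
        if not candidates:
            return moves
        proc = min(candidates, key=lambda p: abs(gap - 2 * p))  # most even split
        nodes[busiest].remove(proc)
        nodes[idlest].append(proc)
        moves.append((proc, busiest, idlest))


cluster = {"ws1": [5, 3, 2], "ws2": [1], "ws3": []}
print(balance(cluster))  # [(5, 'ws1', 'ws3'), (2, 'ws1', 'ws2')]
print(cluster)           # {'ws1': [3], 'ws2': [1, 2], 'ws3': [5]}
```

Each move strictly reduces the sum of the squared node loads, so the loop always terminates. The result is a reasonable spread rather than a guaranteed optimum.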

SASOS is a major new style of operating system which diverges significantly from the environment supported by both monolithic and microkernel systems. Numerous research groups are exploring, developing and exploiting this style. They include City University (Angel), Cambridge University and the University of Twente (Pegasus) in Europe, the University of New South Wales (Mungi), Rochester (Psyche) and Washington (Opal) in the USA, and various Japanese companies. More information on these systems can be found on the WWW pages given at the end of this article.

Most of these systems are still microkernels, but they provide only a single address space in which all processes and data reside. In both of the styles described above, each process has its own private address space, which no other process can see (or of which it can see only a very limited portion). In a SASOS, any process can, in theory, see the address space of any other. Obviously this has protection and security implications, so all SASOSs provide security mechanisms that allow a process to prevent other processes from seeing its private data. The real benefit comes when you consider sharing complex data between processes: it is as easily and readily usable by one process as by another. If you like, SASOSs are designed with sharing and co-operation as a primary goal, unlike the previous styles.

In addition, SASOSs such as Angel preserve data until it is explicitly deleted, much as the information in a file is preserved in a conventional system. This makes the construction and use of complex databases or knowledge banks very simple and, according to provisional studies, very efficient. Indeed Angel, for example, does not have a file system at the lowest level: it has only the single address space.
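A toy model makes the contrast with private address spaces concrete. In the sketch below, every object is named by a single global address, and sharing means handing another process that address. Protection is modelled as a per-address set of permitted processes. This access-list model is a simplifying assumption, not Angel's actual protection mechanism. Objects remain until explicitly deleted, although in this sketch they live only in memory rather than persisting across runs.

```python
from typing import Any, Dict, Set


class SingleAddressSpace:
    """One global address space shared by every process (a toy model)."""

    def __init__(self) -> None:
        self.memory: Dict[int, Any] = {}
        self.allowed: Dict[int, Set[str]] = {}  # assumed access-list protection
        self.next_addr = 0x1000

    def allocate(self, owner: str, value: Any) -> int:
        addr = self.next_addr
        self.next_addr += 0x1000  # addresses are never reused
        self.memory[addr] = value
        self.allowed[addr] = {owner}
        return addr

    def grant(self, granter: str, addr: int, grantee: str) -> None:
        self._check(granter, addr)
        self.allowed[addr].add(grantee)  # sharing = passing on an address

    def read(self, process: str, addr: int) -> Any:
        self._check(process, addr)
        return self.memory[addr]  # the same object, not a copy

    def delete(self, process: str, addr: int) -> None:
        self._check(process, addr)
        del self.memory[addr], self.allowed[addr]  # data lives until this call

    def _check(self, process: str, addr: int) -> None:
        if process not in self.allowed.get(addr, set()):
            raise PermissionError(f"{process} may not access {addr:#x}")


space = SingleAddressSpace()
design = space.allocate("editor", {"board": "PCB-1", "layers": 4})
space.grant("editor", design, "router")
print(space.read("router", design))  # {'board': 'PCB-1', 'layers': 4}
```

A process that has not been granted access gets a `PermissionError`. Processes that have been granted access see exactly the same object, with no copying or conversion.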

Much of the development of SASOSs was sparked off by the availability of processors with 64-bit addresses, such as the DEC Alpha or the MIPS R4000. Doubling the number of address bits from 32 to 64 made it realistic to use a single address space for all of the data in a computer system. Applying a SASOS efficiently to a parallel system might seem unlikely, especially for a loosely coupled system, yet it can be done. Studies have shown that, with care and well-structured software, sharing memory (which is what a SASOS needs) can be done at a speed comparable with message passing (though both suffer from pathological cases). In addition, it is possible to develop hardware which greatly assists the speed of shared memory. Such schemes range from the simple, such as CICO, through the more complex, such as Simple COMA, to the complex, such as DASH.
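A little arithmetic shows why 64-bit addresses changed the picture. The allocation rate in the last line, $10^{8}$ bytes per second, is an assumed and deliberately generous figure used only to give a sense of scale.

```latex
\begin{aligned}
2^{32}\ \text{bytes} &= 4\,294\,967\,296\ \text{bytes} \approx 4\ \text{GiB},\\
2^{64}\ \text{bytes} &= 18\,446\,744\,073\,709\,551\,616\ \text{bytes} \approx 1.8\times10^{19}\ \text{bytes} = 16\ \text{EiB},\\
\frac{2^{64}\ \text{bytes}}{10^{8}\ \text{bytes/s}} &\approx 1.8\times10^{11}\ \text{s} \approx 5\,800\ \text{years}.
\end{aligned}
```

A single large database can fill a 32-bit space. Even at the assumed rate, a 64-bit space would last thousands of years, so in principle addresses need never be reused.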

A SASOS can be used in much the same way as a conventional system, by treating part of the address space as a file system and providing library calls that give the illusion of a conventional system. Work on providing such an interface for Angel is being carried out at Imperial College. Whilst this has evident advantages for porting existing software, it causes many of the benefits of a SASOS to be lost. To understand why, one must understand the primary benefit that a SASOS brings.

As an illustration, consider a printed circuit board (PCB) design program. Whilst the program is running, the circuit board is represented internally in a form littered with pointers, or memory address values. When you start or finish the PCB designer, it must load or store the current design. This almost always involves converting the internal representation and slowly storing it in a file, or reversing the operation to convert the file into the internal format. The same basic operations apply when loading information about components from a library. In a SASOS there is no need to go through these slow, complex procedures. The internal representation can be stored exactly as it was used in the program, because every time the program runs, the data is guaranteed to occupy the same addresses as before. In fact, the data is referred to (or named by) its address. This brings significant performance benefits and often simplifies code. In general, any application, or suite of applications, which needs access to a common database will benefit from a reduction in the amount of data conversion necessary.

A common problem with many parallel computer systems is a lack of input/output performance. In essence, whilst the computer is able to process vast amounts of data in a short space of time, it often cannot extract this information from backing store quickly enough. The most promising solutions to this involve schemes such as the RAID system of disks, or log-based file systems. Our work with SASOSs has shown that a log-based scheme can very easily be integrated into a SASOS, resulting in the needed improvements in input/output performance.
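The conversion step in the PCB illustration can be sketched in Python, where object references stand in for memory addresses. Saving replaces each reference with an index that still means something outside the running program. Loading turns the indices back into references. The component names and the JSON file format are assumptions for illustration. Under a SASOS, as argued above, `save` and `load` would be unnecessary, because the data would keep its addresses between runs.

```python
import json
from dataclasses import dataclass, field
from typing import List


@dataclass(eq=False)
class Component:
    name: str
    # References to other components play the role of pointers.
    connected_to: List["Component"] = field(default_factory=list, repr=False)


def save(parts: List[Component]) -> str:
    """Conventional store: replace each reference with a list index."""
    position = {id(p): i for i, p in enumerate(parts)}
    return json.dumps([
        {"name": p.name, "links": [position[id(q)] for q in p.connected_to]}
        for p in parts
    ])


def load(text: str) -> List[Component]:
    """Conventional load: rebuild objects, then turn indices into references."""
    records = json.loads(text)
    parts = [Component(r["name"]) for r in records]
    for part, record in zip(parts, records):
        part.connected_to = [parts[i] for i in record["links"]]
    return parts


r1, c1 = Component("R1"), Component("C1")
r1.connected_to.append(c1)
c1.connected_to.append(r1)  # circuits are full of cycles like this
restored = load(save([r1, c1]))
print(restored[0].connected_to[0].name)  # C1
```

Even in this tiny case, both directions need an extra pass over the whole design. For a large board or component library, that conversion is the cost a SASOS aims to remove.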

As dependence on computer systems increases, and given the costs incurred when they fail even in situations which are not life-critical, it is becoming more important that these systems are able to survive the failure of a component. There are existing, well-understood schemes involving such things as hardware redundancy, or alterations to software to ensure that important data or program state is stored in a safe, stable environment. Both of these schemes are costly, either in terms of extra, and often specialised, hardware or in terms of programmer time. In a parallel or distributed system, there is inherent redundancy available from the many computers that make up the system, and ideally it should be possible to use this redundancy to ensure that the system survives. (Unfortunately, in a system of networked workstations, when a workstation fails its user is left without a computer, but at least his data should survive.)

As the protection and management of data is the domain of an operating system, why shouldn't the operating system provide fault tolerance, the ability to survive failures? Given the ethos of a microkernel design, fault tolerance should be kept as simple as possible, and ideally outside the microkernel. Work at City shows that data can be preserved when a true SASOS is used as the basis of the operating system. These experiments have shown that this can be achieved at a relatively low (and fixed) cost. In essence, the scheme exploits the automatic data replication provided by the shared memory schemes that underlie a SASOS. Perhaps most important is that this can be provided in a way that is totally transparent to the programs running on the system.
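The idea that replicated data survives a node failure can be shown with a toy store that writes each page to more than one node. This sketch rests on stated assumptions: round-robin placement on consecutive nodes, and no re-replication after a failure. It is not the City scheme, which relies on the replication already performed by the shared-memory layer. As in that scheme, however, callers simply read and write and never see which copy answered.

```python
from typing import Dict, List, Set


class ReplicatedStore:
    """Keeps `copies` replicas of every page on distinct nodes (a toy model)."""

    def __init__(self, nodes: List[str], copies: int = 2) -> None:
        if not 1 <= copies <= len(nodes):
            raise ValueError("copies must be between 1 and the number of nodes")
        self.nodes = nodes
        self.copies = copies
        self.pages: Dict[str, Dict[int, bytes]] = {n: {} for n in nodes}
        self.failed: Set[str] = set()

    def write(self, page: int, value: bytes) -> None:
        # Assumed placement: `copies` consecutive nodes, starting from a
        # node chosen by the page number.
        start = page % len(self.nodes)
        for k in range(self.copies):
            self.pages[self.nodes[(start + k) % len(self.nodes)]][page] = value

    def fail(self, node: str) -> None:
        self.failed.add(node)  # the node, and its memory, become unreachable

    def read(self, page: int) -> bytes:
        # Callers never learn which replica answered.
        for node in self.nodes:
            if node not in self.failed and page in self.pages[node]:
                return self.pages[node][page]
        raise LookupError(f"page {page} lost: every replica has failed")


store = ReplicatedStore(["ws1", "ws2", "ws3"], copies=2)
store.write(0, b"design v1")  # replicas on ws1 and ws2
store.fail("ws1")
print(store.read(0))  # b'design v1'
```

With two copies, any single failure is survivable. The fixed cost is one extra write per page, which echoes the relatively low, fixed overhead reported for the City experiments.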

Keeping up with the pace of change in computer hardware can be difficult. It is obvious when new, faster processors, disks or memories come out, and with the crude performance measures available it is possible to make reasonable comparisons between them. The impact of operating systems is less well known, and new approaches to operating systems are often less publicised. However, their impact on user-perceived performance and ease of use is real. Microkernels have, justifiably, started to displace the monolithic approach. Current research indicates that SASOSs may offer further benefits, and that for certain applications they could displace the conventional style of operating system.

Further information on SASOSs can be obtained from several sources:

The Systems Architecture Research Centre (SARC) maintains a web page bibliography with the URL:

http://web.cs.city.ac.uk/bibliography/sasoslpapers.html

with more general information at:

http://web.cs.city.ac.uk/research/sarc/sarc.html

There is a mailing list for those interested in SASOSs, which can be subscribed to by e-mailing request@cs.dartmouth.edu. SARC also maintains an ftp archive of local papers (including those relating to SASOSs) at:

ftp://ftp.cs.city.ac.uk/papers

Further information about SASOS can be found at URL:

http://web.cs.city.ac.uk/archive/sasos/sasos.html

A new programme entitled Neural Computing - the Key Questions has been launched by the Engineering and Physical Sciences Research Council (EPSRC). The programme will address key research issues identified by companies wishing to use neural computing and by researchers in the field. EPSRC is providing up to £1.5m to support the programme, and a call for proposals has recently been issued, inviting applications for research grants from academic researchers in collaboration with industry. The closing date for submission of outline proposals to the programme manager was 31 October 1994; however, final proposals may be submitted up to 16 December.

In 1993 the Science and Engineering Research Council launched its Neural Computing Co-ordination Programme with the following objectives:

Following the restructuring of the Research Councils, this programme was transferred to EPSRC on 1 April 1994.

Between January and May 1994, the Neural Computing Co-ordinator, Professor David Bounds, sought the views of a wide cross-section of industry, commerce and academic researchers through a series of workshops, meetings and mailshots asking:

This period of consultation culminated in an open meeting at Aston University in June 1994. Professor Bounds submitted his co-ordinator's report to EPSRC in July 1994.

The co-ordinator's report identified a number of key questions which were widely seen as essential areas of research. Of these, five that were considered to be most relevant to the needs of industry and commerce have been selected by EPSRC to form the basis of this new programme.

The five key questions are:

Full details of these questions can be found in the call for proposals.

The UK is in a strong position to exploit neural computing research for economic advantage. The research funded by this programme will address the problems inhibiting further commercial exploitation of neural computing in the UK. Projects will need to demonstrate a level of industrial support which reflects the importance to industry of the research being addressed, and this will be one of the main criteria in the assessment of applications. It is expected that the industrial partner(s) will make a significant technical input to the project, as well as contributing a direct financial commitment either in cash or by the donation of software or equipment.

Proposals will be assessed against the following criteria:

The INMOS T9000, successor to the T800 transputer, is being relaunched at a roadshow currently touring the country.

This roadshow is being organised by INMOS, MMD and PARSYS to inject the T9000 into the academic market by providing aggressive discounts on early T9000-based systems. These offers are being held open until December 1994.

The roadshow is announcing the production versions of the T9000 now being manufactured. These run at 20 MHz, with improvements scheduled to increase this to 50 MHz over the next year. Although the computational power of the T9000 has not yet reached its full specification, its four on-chip communications link engines are all running at 100 Mbit/s.

The roadshow emphasises how these link engines are providing world-class communications power for a wealth of modern application areas, including video, Virtual Reality and ATM support.

Academics interested in finding out more about these education-discounted T9000 systems should contact INMOS, MMD or PARSYS, or send an e-mail to the PPECC Secretary.

This is a new two-day course, giving a hands-on introduction to parallel programming using C. Presentations on parallel programming concepts, techniques and systems (especially those of relevance to the C programmer) are given in the mornings. The afternoons are devoted to practical exercises using C in conjunction with a representative of one of the emerging de facto standards for message passing, e.g. PVM, P4 or MPI.

The course is designed to break the inertial barrier of getting started with parallel programming and C.

While the course is oriented to expressing parallelism in a specific language, most of the ideas and techniques transfer readily to other contexts and will stand you in good stead for the future.

Attendees will be expected to have some experience of C or C++, but complete fluency is NOT a prerequisite.

The PPECC is organising a seminar on Embedded Parallel Processing on Thursday, 23 February 1995, at Rutherford Appleton Laboratory. This event will be chaired by Prof G W Irwin, Queen's University Belfast. The seminar will provide an in-depth view of the use of parallel processing in embedded fields, including control systems, image processing and signal processing. A series of six detailed talks will be followed by an open discussion session.

On 24 May 1894, Professor Osborne Reynolds presented to The Royal Society, London, his famous paper on turbulent flow, in which he proposed decomposing the fluid velocity field into mean and fluctuating parts. This stratagem led to the appearance of double velocity correlations - or, as we now know them, Reynolds stresses - as unknowns in the averaged equations of motion (more commonly known as the Reynolds equations). With this work Reynolds established the subject of turbulence modelling. Indeed, this truly pioneering paper did much more than that, providing, for example, the first derivation of the turbulent kinetic energy equation.
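For readers who want the algebra, the standard modern statement of Reynolds' decomposition for incompressible flow is given below. It assumes constant density ρ and viscosity μ and uses summation over repeated indices. The notation is today's textbook form, not Reynolds' own. The velocity is split into a mean U_i and a fluctuation u_i'. When the continuity and momentum equations are averaged, the nonlinear term contributes the correlation of the fluctuations, and that correlation appears as an extra unknown stress:

```latex
\begin{aligned}
u_i &= U_i + u_i', \qquad \overline{u_i'} = 0, \\
\frac{\partial U_i}{\partial x_i} &= 0, \\
\rho\left(\frac{\partial U_i}{\partial t} + U_j \frac{\partial U_i}{\partial x_j}\right)
  &= -\frac{\partial P}{\partial x_i}
   + \frac{\partial}{\partial x_j}\left(\mu \frac{\partial U_i}{\partial x_j}
   - \rho\,\overline{u_i' u_j'}\right), \\
k &= \tfrac{1}{2}\,\overline{u_i' u_i'} .
\end{aligned}
```

The term -ρ times the mean of u_i'u_j' is the Reynolds stress tensor, and modelling it is the closure problem of turbulence modelling. The quantity k is the turbulent kinetic energy whose transport equation the paper first derived.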

To mark the centenary of the presentation of this seminal work, a symposium was held in Manchester on 24 May 1994. At UMIST's new conference centre, ten turbulence experts from around the world either presented new strategies in turbulence research or reviewed existing ones, while Prof J D Jackson of Manchester University set the scene with a biographical account of Reynolds himself, the first Professor of Engineering in England. An audience of some 150 heard presentations by Prof W C Reynolds and Prof P Bradshaw of Stanford University, Prof G Binder (Grenoble), Prof K Bray (Cambridge), Prof F Durst (Erlangen), Prof H HaMinh (Toulouse), Prof N Kasagi (Tokyo), Prof W Rodi (Karlsruhe), Prof U Schumann (DFVLR, Munich) and Prof J H Whitelaw (Imperial). At the conclusion of the formal papers, coaches took participants to the University of Manchester to see the opening of a new exhibition on Osborne Reynolds. Its centre-piece was the famous pipe flow apparatus in which, in 1883, you-know-who had discovered that flow became "sinuous" when a certain dimensionless group, ρVD/µ, exceeded a critical value.
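The dimensionless group ρVD/µ is what we now call the Reynolds number. The short Python sketch below evaluates it for water in a small pipe. The critical value of 2300 is an assumption: it is a commonly quoted engineering figure for pipe flow, not a number from this article, and the observed transition depends on how disturbed the inlet flow is. The fluid properties and velocities are illustrative values only.

```python
def reynolds_number(density, velocity, diameter, viscosity):
    """Re = rho * V * D / mu, the group from Reynolds' pipe-flow experiments (SI units)."""
    return density * velocity * diameter / viscosity


# Assumption: a commonly quoted engineering threshold for pipe flow,
# not a value given in the article.
RE_CRITICAL = 2300.0


def flow_regime(re, re_critical=RE_CRITICAL):
    """Classify pipe flow as laminar or 'sinuous' (transitional or turbulent)."""
    return "laminar" if re < re_critical else "sinuous"


# Water near room temperature (rho ~ 998 kg/m^3, mu ~ 1.0e-3 Pa s)
# in a 20 mm bore pipe, at two mean velocities (illustrative values).
for v in (0.1, 0.5):
    re = reynolds_number(998.0, v, 0.02, 1.0e-3)
    print(f"V = {v} m/s: Re = {re:.0f} -> {flow_regime(re)}")
```

This prints Re = 1996 (laminar) for 0.1 m/s and Re = 9980 (sinuous) for 0.5 m/s. Raising the velocity five-fold takes the same pipe from one regime to the other.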

The day ended with dinner and reminiscences at UMIST. Among those contributing to the latter was Dr A A Townsend, whose widely read textbook had helped many of those present gain their first appreciation of turbulent shear flows.

The exercise to assess the provision of CFD courses [ECN - May 1994] is now under way. The information gathered on the courses will be published as a booklet in the New Year. If you provide CFD education and have not received a questionnaire, please contact Debbie Thomas as soon as possible to ensure inclusion in this publication.

A parallel exercise to assess the requirements for CFD education has begun and questionnaires should shortly be arriving in the in-trays of CFDCC members and all relevant department heads. If you do not receive one and would like to participate please contact Debbie Thomas.

Visualization, virtual environments, balloons and bubbles, and their application to research in the built environment: these were among the subjects presented to an audience of 60+ people in the new Queen's Building at De Montfort University in Leicester on 22 September 1994 at the VCC seminar Visualization in the Built Environments. The Queen's Building itself is noteworthy for its use of passive ventilation, its minimisation of heat loss and its maximisation of daylighting. The building is ... not just a shell for teaching, but a teaching object itself. Visualization was used in its design.

CFD Applications was the topic of Dr Geoff Whittle of Arup Research and Development. His studies include the flow of air in buildings, the distribution of contaminants in air and the spread of fire and smoke. The parameters to be visualized can include velocity, temperature, pressure, turbulence parameters and contaminant concentrations. Animation is often essential. Although CFD was one of the earliest applications of visualization, the problems it presents are still unsolved.

The use of Virtual Reality (or Virtual Environments) was the subject of two speakers, which suggests a growing overlap with visualization. Consider these problems: How do you design a complex aero engine and evaluate how maintainable it is, when each engine mock-up costs £2M? How do you plan the replacement of sewers in a shopping street with minimum disturbance, and convince the shopkeepers that your plans make sense? How do you plan a supermarket layout for maximum efficiency (or are they planned to extract the most money from the customers!)? How do you simulate the spread of fire and smoke and the movement of people to the exits so that you can meet fire safety criteria? These examples come from the presentations of Andrew Connell of the National Advanced Robotics Centre Ltd at Salford and Michael Spearpoint of the Fire Research Station at Garston.

The answer is that some kind of VR system provides a useful addition to the toolkit of the engineering designer, and this is becoming a practical proposition. Although VR is often associated with headsets, the speakers described the use of high-specification PCs and, at Salford, a dual projection system. It is also worth noting that real-time display is not always needed: in the fire research work, the spread of fire and smoke and the progress of people trying to leave the building were correlated, but the speed of display was of less importance.

In contrast to VR, Kevin Lomas (from De Montfort) presented The Visualization of Buildings using RADIANCE. RADIANCE allows the accurate calculation of luminance and can model realistic (hence complex) surfaces and lighting conditions, particularly in the design of buildings. Because RADIANCE has an accurate numerical model, its results can be compared quantitatively against building requirements. It sacrifices real-time display for the accuracy of each image, which can take several hours to compute on a SPARC processor.

One of the difficulties with any of these systems is the input of a complex CAD model to the virtual environment or imaging software. The eventual answer must be a standard such as STEP, which was the subject of another talk: Dr Faroutan Parand of the BRE talked about the role of STEP in building an infrastructure for energy-related building tools. Dr Phil Jones of the Welsh School of Architecture, Cardiff, talked about the problems of measuring air movement inside buildings and comparing it with calculated behaviour. Combined with video and image manipulation, simple experimental techniques such as balloons and bubbles can now give more quantitative and reliable results.

A small exhibition by companies and research groups was combined with a buffet lunch, which worked well.

To summarise, there are complex issues in the design of buildings, where visualization has become essential. VR is an answer for some problems. Whether visualization is by a data flow visualization system, VR or by photorealistic image synthesis, importing the model data presents difficulties which have not yet been solved.

Credit is due to Professor Neil Bowman and Mrs Pamela Morton who hosted this seminar on behalf of the Visualization Community Club.

30th November 1994, Leeds University, Chair: Dr Tim David, University of Leeds.

This seminar brings together skilled practitioners in both visualization and experimentation. The meeting will be of interest to all who are considering or are already using visualization of experimental data in their research.