If you understood this somewhat cryptic headline then you are already part of the massively popular and rapidly expanding Internet Global Village of WWW (World Wide Web) of which Informatics Department, RAL has recently become a member.

WWW has been described (by John Markoff in the New York Times) as "a map to the buried treasures of the Information Age".

WWW is a networked information discovery and retrieval tool whereby anyone with Internet access can browse any information that anyone has chosen to make available about themselves, their company, their institute, their products, or indeed anything else that they think might be of interest to someone, somewhere...

The WWW world consists of documents and links, and uses hypertext as the method of presentation. Documents need not be just textual; they can also be, or include, graphics, images, movies and sound. Links can lead from all or part of a document to all or part of another document. To follow a link, just click with the mouse.

WWW was originally developed to allow information sharing within internationally dispersed teams, and the dissemination of information by support groups. Originally aimed at the High Energy Physics community, it has spread to other areas and attracted much interest in user support, resource discovery and collaborative work areas. It is currently the most advanced information system deployed on the Internet, and embraces within its data model most information in previous networked information systems.

The WWW project started at CERN in March 1989, and first became available to the world in August 1991. Today, the number of servers is estimated at hundreds of thousands, and the number of users in the millions.

The Informatics' WWW server became available on 13 June 1994. It currently contains information about Informatics Departmental structure, people and projects. As part of the Publications section we plan to include Informatics Department's specialist publications for the IT community. The ECN is amongst these of course, together with information about the various Community Clubs run from RAL.

After only two weeks of service more than 8000 pages have been accessed by over 300 sites. Many of these accesses are from the UK, but we have also had visitors from Germany, Italy, Japan, the Netherlands, the USA and Australia.

Thus the readership of the ECN will go beyond the current (mainly UK and paper-based) circulation of 3000 to a potential (world-wide electronic) readership of many thousands.

WWW browsers are available for a wide variety of machines including those running X-Windows, NeXTStep and MS-Windows.

If you have nothing else but an Internet connection, then telnet to info.cern.ch (no username or password required). This very simple interface works with any terminal but in fact gives you access to anything on the web. It starts at a special beginner's entry point. Use it to find up-to-date information on the WWW client program you need to run on your computer, with details of how to get it. You can also find pointers to all documentation, including manuals, tutorials and papers.

Much of the information about WWW given in this article is taken from an on-line seminar about WWW given by Tim Berners-Lee (CERN).

I would like to take this opportunity to introduce myself as the new editor of the Engineering Computer Newsletter. I am taking over from Dr Mike Jane, who has been the editor for the last year or so. In fact the appointment was quite a surprise for me. I have just returned from a six-month ERCIM (European Research Consortium for Informatics and Mathematics) research fellowship in Stockholm, Sweden, and wasn't quite sure what was waiting for me on my return!

In Sweden, I was researching into depth perception and Virtual Reality systems. You may guess from this that I am not your typical engineering user. In fact, I originally studied Psychology before continuing my education into Computer Science and applying this knowledge in Information Technology. My primary interests are still very much involved with the human factors issues in computing.

I see myself as a computer user not a programmer, and feel strongly that the role of a computer is that of a highly effective tool, but a tool that should be transparent to the user.

All too often, engineering users have to become computer hackers when what is needed are systems that deal in precisely the same terms as the engineer's discipline. Higher level systems such as these are effective on a number of grounds from a more gentle learning curve to a greater ease of reuse of software components.

I see one of the ECN's functions as encouraging cooperation between engineering computing users, so wheels are not being continually reinvented. This means that if you have solved a particular computing problem, it is likely that others are experiencing an identical difficulty. Please tell your colleagues via this publication.

Improvement in usability does not invalidate the need for engineering computing specialists nor communication in this area. The rate of change in machines, operating systems, interfaces, application software, computation techniques and standards is difficult to keep pace with.

This Newsletter's endeavour is to keep you informed to the best of our ability, and that in part depends on you, our readers.

The way we do this is to provide announcements of software releases, appropriate events, training courses and technical articles. You may have recently read a significant and informative article on FORTRAN90, a recent standard which may become the major language for scientific and engineering codes.

The Newsletter is also the major organ of the three UK Engineering Community Clubs: Parallel Processing in Engineering, Visualisation and Computational Fluid Dynamics. A positive response exercise for the Newsletter has just been completed, with over 3000 readers returning their cards. This procedure has allowed the distribution to be updated and so more closely targeted at current core Engineering Computing readers. We now wish to build upon this readership and reach appropriate users that were not in our original list. If you are not on our mailing list but would like to be added then please contact Rachel Miles at RAL (ryn@inf.rl.ac.uk).

There has been a significant event in the history of the ECN: it is now available in an electronic format. With the use of WWW becoming widespread, we plan to make each edition of the ECN available on the RAL server about a week before the paper version gets to readers.

Finally, if you do have any articles, diary events, news, services, adverts or job vacancies that you think would be of interest to your engineering computing colleagues, please send them to the ECN E-mail address: ecnews@inf.rl.ac.uk

Learnfortran is a self-teaching hypertext course explaining the principal features and structures of Fortran77. The course consists of 10 sessions providing notes and demonstration programs together with self-assessment questions and answers. Users can open a window to access a Unix host, enabling them to edit, compile and run example Fortran programs. Learnfortran has been developed at the University of Kent (UKC) as part of the Hypertext Campus Project currently being run there. The learning materials were written by Dr David Bateman of the Computing Laboratory at UKC and the hypertext implementation is by Vicki Simpson of the Hypertext Support Unit. The aim of the developers was to enable the students to practise programming using the self-assessment questions, while being able to see simultaneously the relevant page of notes and demonstration program.

The course has been evaluated; in October 1994, a class of 62 first-year undergraduate students were selected to trial Learnfortran. The students were divided at random into 4 groups. Group A did not attend lectures, but had unlimited access to Learnfortran, including one supervised hour a week. Group B attended lectures and had one supervised hour of Learnfortran a week, and Groups C and D attended lectures, but had no access to Learnfortran. Questionnaires were used to monitor the students' progress and experiences with Learnfortran. At the end of the course, all students were set a short pen and paper test. Results suggested that Group A were not disadvantaged by not attending lectures, and that Group B had benefited from the combination of teaching modes.

As a result of the students' comments and the lecturers' observations, work is currently in progress to extend the effectiveness of Learnfortran as a teaching package, and to release a new version.

Learnfortran is available by anonymous ftp from unix.hensa.ac.uk in the directory /pub/misc/unix/unix~ide/guide_docs/fortran. The course is written in Unix Guide. For those sites which do not already have Unix Guide, a read-only version of Guide - guide_reader - is available, as above, in the directory /misc/unix/unix~ide/guide_reader.

Information about guide_reader and Guide can be found in the file /misc/unix/unix~ide/guide_reader/README.

The Joint Information Systems Committee approved a proposal by the Visualization Group, in Informatics Department at DRAL, to review and report on the current status of Graphical User Interface Development Tools (GUIDT). The work at DRAL was to build on the evaluation of X Window System based tools, extend that work by evaluating the prominent cross-platform tools (GUI software development across multiple window systems) and to provide a complete report, putting the tools into appropriate categories. DRAL took this opportunity to define the categories as well as summarise what might be the user interface requirements of a broad range of applications. It is hoped that readers will then be able to estimate how well a given tool supports their application's requirements. Open Interface, XFaceMaker and XVT-Design were among the tools that were evaluated.

The review was conducted at DRAL between October 1993 and March 1994, and two workshops presenting the results of the review were held, at Imperial College of Science and Technology on 7 April 1994 and at the University of York on 14 April 1994. Vendor exhibitions were held in conjunction with both workshops. Both workshops were oversubscribed and, from the participants' comments, appeared to have been very well received.

We were fortunate to obtain other speakers, which allowed a comprehensive programme at these events. The programme included an introduction to various categories of GUIDTs and a summary of the review of the products that DRAL carried out under this project. One of the main requirements of this project was to evaluate cross-platform GUIDTs (variously referred to as virtual toolkits or Portable GUIDTs (PGUIDT)). This aspect naturally gave rise to the issue of the appropriateness of hardware for specific applications because PCs and popular PC software such as Visual Basic and Visual C++ are more affordable. We felt that it was important to provide information to the community about this aspect, independently of the GUIDT products themselves. Chris Cartledge, Sheffield University, was invited to provide a PC perspective and Dr Simon Dobson, DRAL, was invited to talk about the appropriateness of the underlying hardware to different applications. Under the programming methodology, Object-Oriented Programming is fast gaining popularity and hence Trudy Watson, DRAL, described the concepts underlying OOP and set that in context with UIM/X, an OSF/Motif based User Interface Management System (UIMS) that was evaluated under the JISC/DRAL GUIDT review. Dr Pat Moran, EVCS, presented the experiences of using XVT, a PGUIDT evaluated independently by DRAL, and Dr Robert Fletcher described SUIT, a public domain cross-platform GUIDT. Dr Gilbert Cockton presented the experiences of using GUIDTs in teaching and highlighted the considerable delay in commercial tools adopting results from current HCI research.

Peter Whitehead, Chairman of the Workstations Working Party, chaired the London workshop. Proceedings were made very lively by the direct participation of at least one of the vendors who decided that the opportunity to review the report earlier was not sufficient! The debate no doubt helped to drive home the results of the evaluation; in comparison, the York workshop was subdued but audience participation and the feedback discussions were more definitive. Dr Ken Heard, Head of Computing Services, Southampton University, chaired the York workshop.

A report containing the results of the DRAL review and papers from other speakers at the workshops is available free to academics while stocks last. If you are interested in getting a copy, please send your request (with postal address) to Mrs Virginia Jones, Informatics Department.

To most attending, the Neural Computing Seminar at the Institution of Civil Engineers, run as part of the IT Awareness in Engineering Initiative, was a huge success at a number of levels. Most of the speakers were judged to be of the highest calibre, being both interesting and informative. Many felt the presentations put neural networks (NNs) and neuro-fuzzy (NF) technology in perspective. Some would have liked more time after each presentation for formal discussion. However, others felt the style of event really did get people talking together from both the general engineering and the information/computing technology sides of our joint problem concern. Also, with hindsight, it would have been good to have had more discussions about NF technology.

Nevertheless, there did seem to be much sharing of ideas and several new interdisciplinary teams had begun talking about working together during the meeting. For instance, the group who came together to discuss safety critical systems have already had a further exchange of correspondence to develop their own future strategy for that area. I also notice from the first issue of EPSRC Informatics Division's new journal, known as IMPACT, that our topic leader for Safety Critical Systems at the seminar, Professor Bob Malcolm, has become EPSRC's coordinator for the topic (see contact telephone later), so the event has clearly had some impact with EPSRC already. If any others would like to work together in a similar way we are happy to act as the initial go-between and for ECN to help match people up. Let me know if you want to use this facility and we will build it into the next newsletter. The discussions on day two of this first IT Awareness in Engineering Initiative seminar indicated, most clearly, the need for EPSRC to have a significant future neural computing research programme which focuses on the specific needs of engineering end users and/or other end users. The wealth creating potential of truly applicable NN/NF applications - both software and hardware - was undoubted and enormous.

This should be made clear to potential engineering users, who must also be helped to understand how best to optimise the designs of their neural computing applications. The key here is better technology transfer, better guidance and better education for neural computing use. Any EPSRC research strategy should make these human factors and educational issues central in any future studies and developments. The more detailed discussions for the future strategy focused on four areas of concern: dedicated hardware and software; safety critical systems; adaptive on-line control; and static data analysis and pattern recognition. These are dealt with in turn below.

Those examining the hardware/software issues at the seminar were loath to make any strong recommendations on the hardware/software divide because they saw each area leap-frogging the other. At present they felt it was not helpful to prefer one development to the other. However, the group did define three areas of priority: i) architectural issues are still important, especially the engineering aspects of large systems implementations, and research here should focus not on the VLSI technology, which will develop anyway, but on systems aspects and architectural issues on whatever technology is available at the time; ii) the need for ASIC based NNs for intelligent or smart sensors which would be low power, low cost and enhance the quality of all our lives and help in areas like safety systems, biotechnology and our chemical industries - profitable areas for development include drug sniffers for airports, artificial retinas and more sophisticated hearing aids; iii) there is still room to develop fundamental and novel NN/NF circuits where the key research issues remain improved NN dependability, validation, confidence intervals, model complexity optimisation and inverse problems. The group felt this latter area would benefit from the involvement of mathematicians as well as computer scientists.

A second group looked at safety critical systems and NNs. They were particularly concerned that future NN/NF developments had high dependability. This required research into the characterisation of: safety properties; safety integrity; safer implementation; and safety assessment. The purpose of research here is to look at the characterisation of the inputs to the systems and sub-systems pertaining to NNs to see how they differ and how they should be expressed. This top-down approach, which will help the processes of standards development, should be complemented by one which originates from a more theoretical standpoint. In this bottom-up approach the idea is to understand what we can be sure about NNs in terms of their stability and continuity. Another big issue in this area of application is to understand the theoretical characterisation of domains so we can be sure that the NNs pick up the safety critical situations when they arise. Another important theme for research in this topic relates to understanding of the envelope protection afforded by NNs.

A third working seminar group identified the following industry areas where Adaptive On-Line Control based NNs (AOC NNs) could offer wealth creation in the medium term: process control (eg fermentation); manufacture and production (scheduling, optimisation etc); food preparation and inspection; pharmaceuticals; energy (discovery, production, supply and demand forecasting); environmental monitoring; vehicle control (routing); financial sector (markets, credit control and assessment); communications; management and decision support. In the longer term robotics, vehicle control (including aerospace systems) and more general forecasting would be important developments with respect to this theme.

Aspects of AOC NNs which could form the basis for academic only or collaborative academic/industry research programmes, with short to medium term wealth creating opportunities, include: integration issues (NN into real industry applications), methodologies for design and implementation, stability theory, feedback in operation, robustness, optimisation of network structures, learning theories (how to get a network to train more quickly), adaptability, learning and relearning strategies, learning algorithms, integration of NN with NF and genetic algorithms, and data coding and scaling. Important areas for blue skies research for AOC which will deliver wealth creation opportunities in the longer term are: the validation and verification of NNs; reliability; node failure decay and redundancy; loss of priority data, discovery and recovery issues; inverse networks; internal mode control; new architectures design; generalisation of learning techniques; statistical confidence; data encoding; hybrid neuro and neuro-fuzzy designs; non-linear feature analysis; and persistent excitation and network structural analysis.

The final group discussion came to no consensus, but considered key topic areas felt worthy of future study under the heading of static data analysis and pattern recognition. As with other groups, validation, in terms of confidence, guarantees and data quality, was considered a top priority for research. Another big issue to this group was complexity optimisation; by combining the outputs of several networks (committees of networks) you can clearly improve on their individual performance. There are rather interesting research issues with respect to this such as: the saliency of the inputs and input selection; sensitivity analyses; hybrid combinations and their relation to conventional methods of integration. The final area of concern to this group related to inverse problems. All at the seminar were in general agreement that the focus for all future neural computing R & D should not just be on fundamental research, but on pragmatic themes that could really be applied to real, tractable, problems. Furthermore, it was felt that many NN grant proposals were simply reinventing the wheel and that some form of clearing house was necessary for NN grants.
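The committee idea mentioned in the final group's discussion can be illustrated with a small sketch. It is not taken from the seminar: the three "networks" are stood in for by fixed lists of made-up predictions, and the function names are hypothetical. It simply averages the members' outputs and compares mean squared errors.

```python
def mean_squared_error(predictions, targets):
    """Average squared difference between predictions and targets."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


def committee_predict(member_predictions):
    """Average the members' outputs, one value per input case."""
    n_members = len(member_predictions)
    return [sum(values) / n_members for values in zip(*member_predictions)]


# Made-up data: four target values and the outputs of three imaginary networks.
targets = [1.0, 2.0, 3.0, 4.0]
member_predictions = [
    [1.5, 2.5, 2.5, 4.5],
    [0.5, 2.5, 3.5, 3.5],
    [1.0, 1.0, 3.0, 5.0],
]

committee = committee_predict(member_predictions)
member_errors = [mean_squared_error(p, targets) for p in member_predictions]
committee_error = mean_squared_error(committee, targets)

print("member errors:", member_errors)
print("committee error:", committee_error)
```

With these invented numbers the members' errors partly cancel, so the committee does better than any single member. That is not guaranteed in general; for squared error, averaging only guarantees that the committee is no worse than the average member.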

These general recommendations, together with the more detailed comments from others attending our NN meeting, have been passed on to Professor David Bounds, EPSRC Neural Computing Coordinator. I am sure they will help focus the discussions taking place at an open meeting to finalise the EPSRC strategy for neural computing for the UK, taking place at the University of Aston on 24 June 1994. Martin Prime, from DRAL, will ensure our seminar attendees' views are heard at that meeting and will report on its recommendations in the next ECN.

Finally, let me remind you of the next IT Awareness in Engineering seminar, which will be 21-22 November, again at the ICE in London. It will follow the same pattern as the previous seminar with an introductory day followed by a strategy setting day: in the latter respect we will be attempting to inform the strategies of the major themes of EPSRC's Innovative Manufacturing Initiative. The topic of the discussion is Informing Technologies to Support Engineers' Decision-making. Let me explain what I mean by the term. There is a recognised area of Information and Computing Science known as Decision Support. This relates to those IT systems, IT software and IT based protocols or heuristics that help/support general human decision making. Into this category come expert systems, design optimisation, simultaneous engineering tools, knowledge based engineering, computer supported collaborative working (CSCW), simulation, etc.

Unfortunately, many decision support systems developments do not yet actually help/support real decision making: often they tend to disable rather than enable. This seems especially to be the case in engineering. The proposed seminar therefore brings together those researchers, from both IT and general engineering, who have tried to develop working IT systems that truly support the decision making of engineers. Our seminar will start with a consideration of what we mean by engineering decision-making. However, we will then present, and demonstrate, successful attempts to support many different aspects of engineers' decision-making, especially where those attempts can be verified by practising engineers. We are also keen to report on failed attempts to develop such Engineering Decision Support (EDS) systems if something can be learned from those studies. If you are someone who has something to say, or to demonstrate, on either of these EDS aspects, or know someone who can, please contact me.

The third workshop to be run as part of the IT Awareness in Engineering Initiative will take place on the 26th and 27th of January 1995. The title of the workshop is Virtual Reality and Rapid Prototyping and it will be held at the University of Salford. This seminar will again follow the same pattern as the ones before with an introductory day followed by a strategy setting day.

Neural Computing Contacts: Professor David Bounds, Neural Computing Co-ordinator and Professor Bob Malcolm, Safety Critical Systems Co-ordinator

Some thoughts on when (and when NOT!) to use a PC

Almost all computer users today work with machines which might be classed as workstations, with a Graphical User Interface (GUI) and connected to a network. Indeed, it is no longer seen as acceptable to expect users to deal with line-orientated, terminal-style applications: a sophisticated GUI is now essential for any Politically Correct (PC) application. At the same time, the needs of economy have resulted in a trend towards IBM PC-compatible (PC) systems, which offer good price/performance advantages over UNIX-based workstations. A corollary to this move has been the tendency for users to expect PCs de rigueur rather than UNIX boxes. This is unfortunate, as there are several important cases - affecting the active researcher in particular - for which a PC may not be the best choice of platform.

Creating reasonable GUI applications needs more than just a compiler. It needs libraries of common widgets, bitmap editors, and a screen designer or (preferably) a graphical compiler in which software with a GUI is developed through a GUI. Such things are inevitably more expensive than the console-orientated systems which they replace. In choosing how to develop an application, there are several factors to be considered.

Ease of programming is important for the busy researcher who is not a computer scientist and whose real area of interest is not software. Powerful systems can reduce the complexity of applications by providing more functionality built-in, but must be flexible enough to meet the needs of different applications. Conformance to standards is essential both for ease of use by unfamiliar users and for interworking with other tools. In general the high-level systems will score highly on speed of development and standardisation of interface, but may not be sufficiently flexible to deal with all applications.

The cost difference between PC and UNIX software development environments is staggering. For the PC, systems such as Visual Basic retail for a few hundreds of pounds, and software developed using them is free from licensing fees. UNIX environments typically cost several thousands of pounds and may incur fees for every piece of software sold or given away: while X Windows is a free system, Motif is not. The costs are even greater for cross-platform GUI tools which can develop software for both PCs and UNIX boxes.

Despite the advantages, the rush to PCs is not an undiluted blessing. There are many applications in many environments for which PCs might not be the best choice. To illustrate the issues involved we shall discuss four aspects of software development - modelling processes, presenting data, accessing databases and using networks - which may affect a typical engineering application.

Engineers use software for many purposes, but possibly the most fundamental is to model some real-world process and generate simulation results. Modelling can be very computationally intensive, involving many floating-point calculations. For many applications the data sets involved will be very large indeed - for example complete computational wind tunnels.

Large data sets can pose problems on PCs except in 32-bit Windows NT applications. Workstations are better able to take advantage of innovative advances in hardware and will generally have better floating-point performance. Neither the Intel 486 nor the Pentium has particularly impressive mathematical performance, and this militates against the use of a PC for the most complex modelling tasks (although Windows NT on a DEC Alpha does not have these limitations).

Having modelled a system, there is a need to present the results in an easily-understood format using some form of visualization to distill an image from a mass of data. A good presentation and user interface will make or break an application. Making the application look more attractive is another issue: a good user interface will make an application more usable and reduce its learning curve. Conversely, a badly thought-out user interface will fatally cripple an application - even if it uses all the standard widgets.

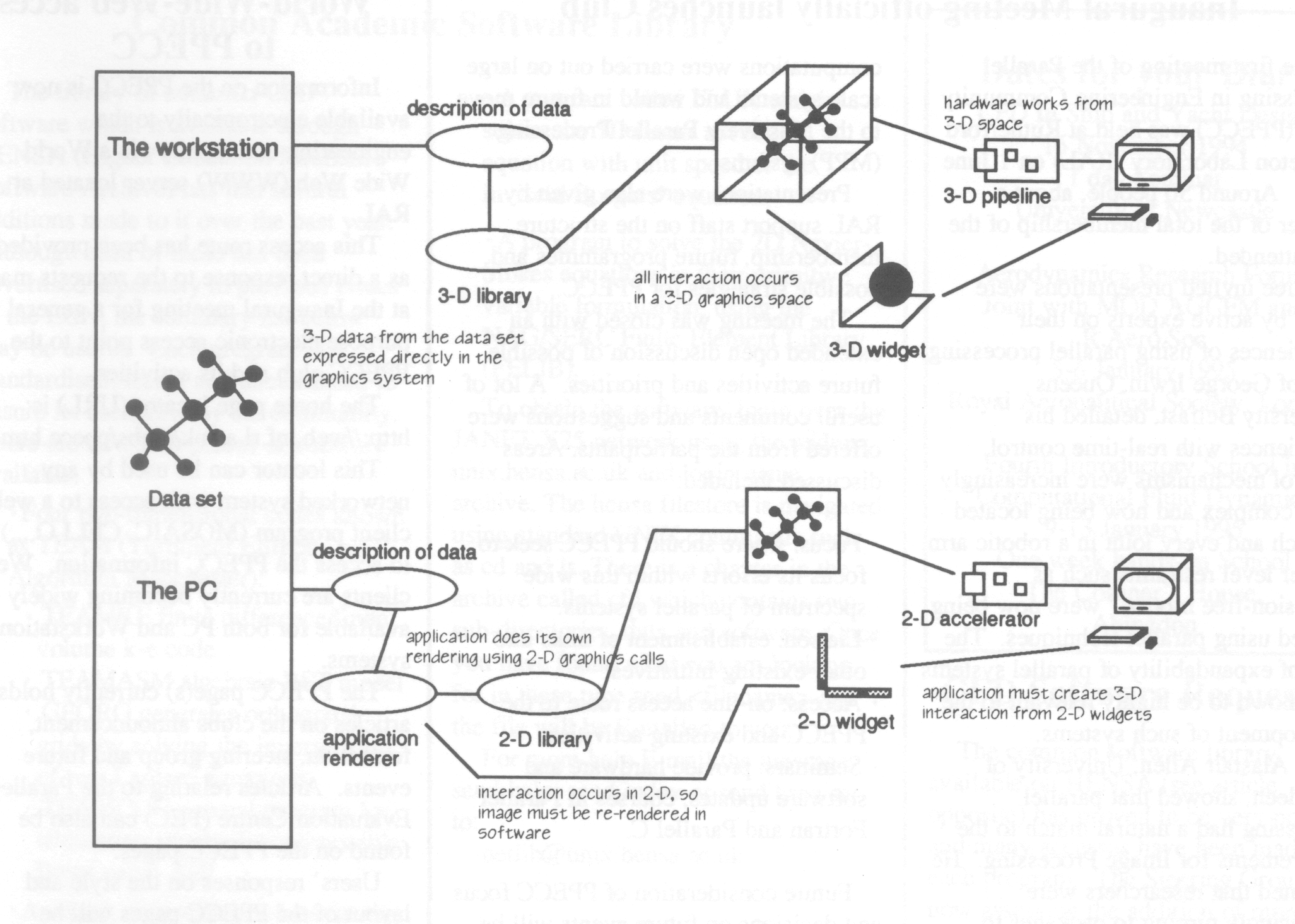

Windows is fundamentally a 2-D graphic system, and has no built-in operations for 3-D imaging. Writing a visualization application is thus a complex business, as the software must perform the translation from the 3-D data into a 2-D projection. There are no user interface objects provided to handle three dimensional images or interactions with them (figure 1). Moreover, Windows is not well-optimised for the needs of even 2-D graphics. The creation of large bitmaps, for example, is a very time-consuming affair - it can take several seconds to convert a 1024×1024 block of colour data into a bitmap ready for display.
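To give a feel for the translation from 3-D data into a 2-D projection that such an application must carry out for itself, here is a minimal sketch of a simple perspective projection. It is an illustration only: the function names, the viewer placed at the origin looking along the z axis, and the `focal_length` parameter are all assumptions made for the example, not part of any Windows interface.

```python
def project_point(x, y, z, focal_length=1.0):
    """Perspective-project a 3-D point onto the image plane z = focal_length.

    Assumes the viewer sits at the origin looking along the positive z axis.
    """
    if z <= 0:
        raise ValueError("point must lie in front of the viewer (z > 0)")
    scale = focal_length / z
    return (x * scale, y * scale)


def project_points(points, focal_length=1.0):
    """Project a sequence of (x, y, z) points to a list of (u, v) pairs."""
    return [project_point(x, y, z, focal_length) for x, y, z in points]


# Two points with the same x and y but different depths: the more distant
# one lands closer to the centre of the image.
near = project_point(1.0, 1.0, 2.0)
far = project_point(1.0, 1.0, 4.0)
print(near, far)
```

A real visualization package would also need clipping, hidden-surface removal and shading, which is where the effort described above goes.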

Equally serious is that the PC architecture has as yet no support for the sorts of high-performance graphics needed to render three dimensional images in real time. For example a Silicon Graphics workstation will typically have its 3-D rendering pipeline optimised into dedicated hardware. Windows has no similar support - a Windows graphics accelerator, whilst it will improve overall graphics performance, is simply not in the same class and addresses a different problem.

An increasing number of applications are beginning to use databases as a central part of their function. This is a recognition of the part which a well-organised database may play in the effective storage and retrieval of experimental or simulation results. Many databases come with their own application generators. These seem to offer a quick way to develop database front-ends, but all seem to share one feature in common: they only make easy what the designers have anticipated, and can be very problematic in other situations. The application generator often does not support the full power of the database.

Several PC languages have taken steps to improve this situation - notably Visual Basic, which has language and widget support for retrieving and processing data. This makes simple front-ends very easy to create. An additional bonus is the emergence of the Open Database Connectivity system (ODBC), which allows an application to bind dynamically to any database using a common interface library and language. This effectively means that generic applications can be written, independent of the eventual target database.
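The idea of writing a generic application against a common database interface can be sketched as follows. This is not ODBC itself: as a stand-in, the example uses Python's standard database interface with the built-in `sqlite3` module, and the table name `results`, its columns and both function names are hypothetical. The point is only that the query function takes whatever connection it is given and does not otherwise care which database lies behind it.

```python
import sqlite3


def fetch_results_above(connection, threshold):
    """Return (run_id, value) rows whose value exceeds the threshold.

    Written against the generic connection/cursor interface. Note that the
    '?' placeholder style is what sqlite3 uses; other drivers may differ.
    """
    cursor = connection.cursor()
    cursor.execute(
        "SELECT run_id, value FROM results WHERE value > ? ORDER BY run_id",
        (threshold,),
    )
    rows = cursor.fetchall()
    cursor.close()
    return rows


def make_demo_connection():
    """Build a throwaway in-memory database holding made-up results."""
    connection = sqlite3.connect(":memory:")
    connection.execute("CREATE TABLE results (run_id INTEGER, value REAL)")
    connection.executemany(
        "INSERT INTO results VALUES (?, ?)",
        [(1, 0.5), (2, 1.5), (3, 2.5)],
    )
    connection.commit()
    return connection


demo_rows = fetch_results_above(make_demo_connection(), 1.0)
print(demo_rows)
```

Only `make_demo_connection` knows which database is in use; swapping in a connection to a different product would, in principle, leave `fetch_results_above` unchanged apart from the placeholder style.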

It may seem a little strange to talk about network programming in a paper on graphical program development, but the use of networks for both client/server and remote access computing is increasing. Many engineering applications are performance-intensive, and their computational part must be run on the fastest hardware available to the researcher. The interactive part of the application, however, may be better run elsewhere - such as on the machine on the researcher's desk.

The underlying problem here is that no current commercial system offers good high-level support for network programming or client/server applications. Anyone needing to implement such software must resign themselves to a hard time. For the user needing simply to run an application remotely on fast hardware, X Windows offers a fairly painless solution.

Although UNIX-based workstations will always be able to take advantage of the latest advances in hardware technology, they do so at a price - for the hardware, the cost and quality of development tools, and the portability of applications. PCs have lower cost hardware and software, and Windows NT allows applications to migrate to a wide range of present and future architectures - those writing new PC applications would do well to take note. The PC (at present, anyway) is unsuited to domains which require the fastest graphics or mathematical performance.

The temptation to follow the crowd into the politically correct PC world is seductive and ever more popular. What we hope we have done is to give pause for thought for anyone with an application which might better be served by (for example) a dedicated graphics workstation.

A full version of this paper was presented at the JISC/RAL Workshop on Graphical User Interface Development Tools, 1994.

The first meeting of the Parallel Processing in Engineering Community Club (PPECC) was held at Rutherford Appleton Laboratory (RAL) on 1 June 1994. Around 50 people, about a quarter of the total membership of the CC, attended.

Three invited presentations were given by active experts on their experiences of using parallel processing.

Prof George Irwin, Queen's University Belfast, detailed his experiences with real-time control. Control mechanisms were becoming increasingly complex and were now being located on each and every joint in a robotic arm. Higher level constraints such as collision-free motion were now being tackled using parallel techniques. The ease of expandability of parallel systems was shown to be highly relevant to the development of such systems.

Dr Alastair Allen, University of Aberdeen, showed that parallel processing had a natural match to the requirements for Image Processing. He indicated that researchers were increasingly turning to packages to assist in their developments and that these packages needed to start supporting and supplying parallel components.

Dr Dale King (BAe) discussed how the drive for profitability was creating a demand for high performance computing within the aeronautical industry. Currently most parallel computations were carried out on large scale systems, and these would in future move to Massively Parallel Processing (MPP) systems.

Presentations were also given by RAL support staff on the structure, membership, future programmes and possible strategies for PPECC.

The meeting was closed with an extended open discussion of possible future activities and priorities. A lot of useful comments and suggestions were offered from the participants. Areas discussed included:

The focus of the PPECC and decisions on future events will be considered at the next meeting of the Steering Group in July 1994.

Information on the PPECC is now available electronically to the engineering community via a World Wide Web (WWW) server located at RAL.

This access route has been provided as a direct response to the requests made at the Inaugural meeting for a general purpose electronic access point to the PPECC club and its activities.

The home page locator (URL) is: http://web.inf.rl.ac.uk/clubs/ppecc.html.

This locator can be used by any networked system with access to a web client program (MOSAIC, CELLO, ...) to access the PPECC information. Web clients are currently becoming widely available for both PC and workstation systems.

The PPECC pages currently hold articles on the club's announcement, formation, steering group and future events. Articles relating to the Parallel Evaluation Centre (PEC) can also be found on the PPECC pages.

Users' responses on the style and layout of the PPECC pages will be welcomed now that this service is fully implemented.

Anyone with information they feel they would like mentioned on the PPECC pages should contact the PPECC secretary with details of their requirements.

An archive of over 145 Mbytes of parallel software is maintained at the University of Kent HENSA site.

The archive can be accessed via the JANET X.25 network using the address unix.hensa.ac.uk.

Distributable software and papers are available for downloading and viewing. Areas currently covered include the INMOS transputer, the occam language, and high performance and parallel computing.

World Wide Web (WWW) access is also available to browse the available information using the public URL: http://unix.hensa.ac.uk/parallelfmdex.html. Over 2000 users access this archive monthly.

The library of common CFD software which is available through HENSA (Higher Education National Software Archive) has had several additions made to it over the past year. Although each of these has been advertised separately in previous issues of the ECN, the summary list below may be useful. Each program is standardised, tested and documented to ensure its usability by the community. There are now four pieces of software available:

To obtain the software, log in over the JANET X.25 network using the address unix.hensa.ac.uk and the login name archive. The HENSA filestore is navigated using standard UNIX commands such as cd and ls. There is a chapter in the archive called cfd which contains two sub-directories, data and software. Once you have found what you are looking for in these, type send <filename> and the file will be E-mailed to you.

For more help E-mail the message send help, send index or send browser to: netlib@unix.hensa.ac.uk.

The common software library available on HENSA has proved to be very popular and many accesses have been made to each program. The Steering Group is now assessing the addition of more software to this library. The original request for software was made in 1991, so more could now be available in the Community.

If you have any software which you think would be of use to the Community and which you would be willing to provide in source code form to be mounted on HENSA then please contact Debbie Thomas. Your software will be fully tested and run through our software QA tools to ensure that it is portable before being made available. Any necessary enhancements to the graphics capability will be made and a revised version will be returned to you together with a list of all changes made.

The Computational Fluid Dynamics Community Club (CFDCC) held a one-day meeting entitled New Opportunities and Directions in Aeronautical CFD at RAL on Wednesday, 20 April 1994. It was attended by 72 participants, 51 of whom were from universities, 10 from the research councils and 11 from industry. The meeting was chaired by Steve Fiddes (Bristol) and began with a review and preview of the Community Club given by Professor Geoff Hammond (Bath), who was the chairman of the CFDCC from 1990 to 1994. The day's programme was split into two sessions: Advanced Turbulence and Transition Modelling, and Unsteady Flows.

The first session began with a talk on directions in turbulence modelling by Professor Brian Launder (UMIST). He believes that better numerical schemes will lead to the stage where numerical errors can be reduced to negligible levels, thereby allowing researchers to concentrate on turbulence effects. He also indicated that most commercial CFD codes have a fundamental weakness in that their models are too basic and thus require some wall correction input by the users. He concluded by saying that industrial CFD will continue to be based on averaged Navier-Stokes equations, with the increasing use of second moment closure models for complex flows.

Dr Peter Voke (Surrey) then spoke about direct and large-eddy simulations (LES) and their role in understanding turbulence. He emphasised that simulations are not a theory of turbulence, as they are numerical experiments, whereas a good second-moment closure (SMC) of a flow is a theory of that flow. However, the simulations are potential tools for constructing a theory, so our aim should be to use LES and FRS (fully resolved simulations) to construct SMCs.

Dr Neil Sandham (QMWC) spoke on Direct Numerical Simulations (DNS) of Shear Layer Transition and Turbulence. He described the methods used for the numerical simulation and presented some results. The DNS of transition at Mach 2 showed a primary instability leading to a quasi-streamwise vortex pattern, and a persistent double-horseshoe type structure.

The next talk, on the Research Council's support for aerospace, was given by Dr Nigel Wood, who is the EPSRC Aerospace Coordinator. He outlined the current support from the Electro-Mechanical Engineering Committee for aerospace research. He said that aerospace research will be part of EPSRC research and is well placed with an industry-led research agenda.

The session on unsteady flows began with Professor David Nixon (Belfast) presenting his research on the transonic buffet which is characterised by oscillating shock waves. The work is a combination of a theoretical approach to understand the phenomena and develop control laws, and experiment to test any promising control law in a wind tunnel.

In a presentation on unstructured grid methods, Professor Ken Morgan (Swansea) described adaptive meshing algorithms for transient flows. He indicated that transient unstructured mesh methods were effective for Euler flows, but there were no unstructured methods for viscous flows.

The final talk was given by Professor Bryan Richards (Glasgow) on implicit methods in transonic viscous flows. He presented the work from aero-elasticity applications of a transonic flutter done in collaboration with BAe and Bristol University.

There were two lively question and discussion sessions. A particularly topical question was what may happen when we start to put turbulence models into unsteady flows. One important issue here is that validation for such a problem would probably be very difficult. Another question was directed to the amount of DNS/LES research being carried out. It was commented that more research in this area would be beneficial but that it should not be done in isolation. It is important that all this work should have focused objectives.

Abstracts for papers are called for in all areas of visualizing experimentally derived data. Please see separate inserts in this issue for detailed topics and arrangements for these events. Attendance at these events will be charged at the normal rates for EASE seminars. Other events are being planned by the Visualization Community Club's Steering Group for early 1995 on the following topics: the use of multimedia in visualization; visualization of extremes - which includes data which has particular complexity, such as multivariate or multiparameter characteristics. We invite suggestions for future events.

Information on the Visualization Community Club is now being placed online and is available electronically via a World Wide Web (WWW) server placed at RAL.

This will hold up-to-date information about events and technical material. In the short term, we anticipate that some people will experience networking difficulties with the more demanding material. However, we believe that the ability to access this kind of resource will grow. Also, because material at different sites can be cross-linked, there is an excellent opportunity to use this technology to make visualization work in the community more widely known.

The home page locator (URL) is: http://web.inf.rl.ac.uk/vis/vcc/.

This locator can be used by any networked system with access to a web client program (MOSAIC, CELLO, ...) to access the VCC information. Web clients are currently becoming widely available for PCs, Macs and workstations.