On 1 April 1995, responsibility for the Daresbury and Rutherford Appleton Laboratories (DRAL) passed from The Engineering and Physical Sciences Research Council (EPSRC) to The Council for the Central Laboratory of the Research Councils (CCL). The name DRAL will no longer be used to describe the joint Laboratories, which have become The Central Laboratory of the Research Councils. CCL will be identified by the logo shown above.

So what has been going on backstage? As soon as the White Paper Realising our Potential had been published and it had been suggested that Daresbury Laboratory and Rutherford Appleton Laboratory would merge and that their status would be reviewed, management set about searching for a practical solution which would allow us to build on the excellence of the two laboratories. Much political activity followed until, somewhat against prevailing government policy, it was agreed that we would be an independent body sitting alongside the Research Councils within the Office of Science and Technology (OST). The features which make DRAL special had to be emphasised at every opportunity, and our friends, colleagues, clients and users all played their part in achieving the desired result by voicing their opinions on our effectiveness and quality. This has set us in good stead for an independent life, while recognising that our main business is supporting research, a business that offers little prospect of financial profit for the practitioner.

This momentous decision was promulgated late last year, giving us a few short months to bring the new body into being. Of course, we had already started to merge the activities of the two sites, particularly in the administration area, but now the challenge of turning them into a single, cost-effective, efficient and high-quality organisation began in earnest. Similar activities across the two sites have been brought together, many of them now managed on a federal basis, and this will lead to direct cost savings in the future as areas are restructured.

The Royal Charter granted to CCL sets out the objects for which the Council is established and incorporated as follows:

A Statutory Instrument transferred the appropriate goods, chattels, contracts and liabilities to CCL from EPSRC on 1 April '95 and CCL's Council met for the first time on 27 April '95.

The distinct branches of Parallel Processing and Distributed Computing have many issues in common but address different user communities. This was the conclusion of a workshop of researchers arranged by the Parallel Processing in Engineering Community Club (PPECC) to consider the question "Distributed vs Parallel: Convergence or Divergence?"

A clear consensus was that emerging operating systems based on microkernels, with multiprocessor and multithread support, provide the key to future convergence in the technology base.

The PPECC Workshop brought together 45 active researchers, from both industry and academia, including technology specialists, applications developers, and engineering and other users. A booklet of 19 position papers submitted by attendees was distributed prior to the event. (A limited number of copies are still available on request from the PPECC secretary.)

The programme consisted of four invited keynote speakers, nine selected position papers, and three subgroups to consider key aspects of the workshop theme.

The guest speakers provided stimulating presentations on the current state of research in the parallel and distributed sectors. Denis Nicole (Southampton) discussed recent developments in parallel architectures and systems. These included fast, cheap microprocessors (providing virtually free computational power) and fast dedicated routers (providing communication power). Set against these are the delays in bringing products to market: system design and software construction are now major tasks, crucial to future success but requiring significant time and effort. He observed that the distinction between distributed and parallel systems is about trust, and outlined a spectrum of trust ranging from a single operating system image to e-mail composition/encryption.

Gordon Blair (Lancaster) discussed the trends in the distributed field, including: the use of microkernels, open distributed processing, the move to ATM networking, and distributed file systems. Arguments for and against convergence with the parallel field were summarised for each topic. The challenge for microkernels lay in lightweight threads and in supporting the network. Recent developments are moving to greater use of standards and open platforms to build operating systems themselves. Chorus is a typical system.

Tom Lake (InterGlossa) discussed recent developments in languages and libraries that support both parallel and distributed programming. Many of the key ideas and philosophies in use were originally developed in the 1960s. Modern software development is now being supported by the architecture-neutral intermediate language TDF and its parallel extension, ParTDF.

Bill McColl (Oxford) discussed the Bulk Synchronous Parallel (BSP) approach to architecture-independent algorithms across both parallel and distributed systems. This programming style allows algorithms to be designed and programmed as a sequence of supersteps separated by barrier synchronisations, and has a simple but powerful cost model based on four main parameters (p, s, l, g).
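To make the cost model concrete: in the usual formulation of BSP, a superstep in which no processor performs more than w local operations and none sends or receives more than h words costs w + h·g + l, where g is the communication cost per word and l the cost of the barrier, both measured in units of local operations; dividing the total by the processor speed s converts it to time, while p enters through how the work and communication are shared out. The Python sketch below assumes that formulation (it is not taken from the talk), and the two-superstep parallel sum is a hypothetical example.

```python
import math
from dataclasses import dataclass


@dataclass
class Superstep:
    w: float  # max local operations performed by any processor
    h: float  # max words sent or received by any processor (the h-relation)


def bsp_cost(steps, g, l):
    """Total cost in units of local operations: sum of w + h*g + l per superstep."""
    return sum(st.w + st.h * g + l for st in steps)


def bsp_time(steps, g, l, s):
    """Convert the cost to seconds for processors performing s operations per second."""
    return bsp_cost(steps, g, l) / s


def parallel_sum_steps(n, p):
    """Hypothetical example: sum n numbers on p processors in two supersteps."""
    return [
        # Each processor sums its n/p values; p-1 partial sums then arrive at one processor.
        Superstep(w=math.ceil(n / p), h=p - 1),
        # That processor adds the p partial sums (p-1 additions, no communication).
        Superstep(w=p - 1, h=0),
    ]


cost = bsp_cost(parallel_sum_steps(1000, 10), g=4, l=200)  # 336 + 209 = 545
```

Increasing p shrinks the w term of the first superstep but raises h·g, which is exactly the kind of trade-off the four parameters let a designer reason about before touching a particular machine.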

The three subgroups, with Chairmen as follows, considered the current provision against requirements from three perspectives:

The first group reported that users and developers want access to well structured and sound programming design methodologies that encompass both parallel and distributed systems with smooth migration and scaling.

The second group reported that many users now face the management of large volumes of data. File systems were seen as simple repositories for storing data, providing no effective support for managing access to it. Database support was required so that better data management could be incorporated into projects more easily, with parallel/distributed servers ready to fulfil future access requirements.

The third group reported that the distinctions between the two fields could be viewed as relating to two issues: performance (parallel) and reliability (distributed). Networked PCs were seen as the volume market for both communities.

One interesting view from the workshop was that the operating system is an area where there is useful collaboration between the two communities. The Distributed Systems community has sought to build from microkernels and open operating systems (such as Chorus), while the Parallel Processing community has sought to construct communication harnesses and hardware that provide high throughput.

In concluding, the workshop considered the future support needs of both user communities as the perceived convergence takes place. The key technologies in both fields need wider dissemination, as they are becoming more widely used across both areas.

It was felt that important topics for Training and Awareness activities include: getting started, migrating between systems (including performance modelling), software availability (utilities and packages), SQL awareness and use (and support for moving to databases), and a seminar with a user focus on understanding the impact of parallel and distributed operating systems.

The participants enjoyed the bringing together of these communities and felt that further meetings and joint programmes should be encouraged.

A fuller report is in preparation and will be distributed automatically to those who attended the Workshop. Copies may also be requested by contacting the PPECC Secretary.

The announcement of a new MSc in Optimisation at the University of Hertfordshire provides as good an excuse as any for introducing the work of the Numerical Optimisation Centre (NOC) to a wider audience.

Established in 1968 at the (then) Hatfield Technical College, the Centre's mission has been the design, implementation and application of algorithms for nonlinear optimisation. During the 1970s and 80s a good deal of important research and development was done under the leadership of Laurence Dixon and Ed Hersom. In this period the first version of the OPTIMA library of Fortran subroutines was written (and the current version 8 is still in use in many places world-wide). Also during this time the NOC began to develop its consultancy expertise through a number of major projects, mostly in the aerospace industry. Particularly notable among these has been a long and fruitful collaboration with the European Space Operations Centre, Darmstadt, involving the optimisation of Ariane launch trajectories.

Since the late 1980s the work of the NOC has been somewhat broadened. In particular there has been significant activity in new areas such as automatic differentiation and interval arithmetic. While both of these have direct relevance to optimisation (the former in making Newton-like methods more attractive and the latter in providing a rigorous basis for global optimisation techniques) they have also given fresh insights into the mathematics/computer science interface which characterises numerical computation. Research staff at the NOC were early converts from Fortran 77 to Ada, appreciating among other things the benefits of operator overloading for providing a friendly interface to non-standard arithmetics. Although comparatively few numerical mathematicians have followed NOC's example in this, the Ada experience has, if nothing else, been a valuable preparation for the advent of Fortran 90!
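Operator overloading is what makes forward-mode automatic differentiation painless to use. The sketch below is written in Python rather than Ada or Fortran 90, and the `Dual` class and `derivative` helper are hypothetical names chosen for illustration: each number carries a value and a derivative, and the overloaded operators apply the chain rule as the user's unchanged function is evaluated.

```python
import math


class Dual:
    """A value together with its derivative; arithmetic applies the chain rule."""

    def __init__(self, val, der=0.0):
        self.val = val
        self.der = der

    @staticmethod
    def _lift(x):
        # Plain numbers are constants: derivative zero.
        return x if isinstance(x, Dual) else Dual(x, 0.0)

    def __add__(self, other):
        o = Dual._lift(other)
        return Dual(self.val + o.val, self.der + o.der)

    __radd__ = __add__

    def __sub__(self, other):
        o = Dual._lift(other)
        return Dual(self.val - o.val, self.der - o.der)

    def __rsub__(self, other):
        return Dual._lift(other) - self

    def __mul__(self, other):
        o = Dual._lift(other)  # product rule
        return Dual(self.val * o.val, self.der * o.val + self.val * o.der)

    __rmul__ = __mul__

    def __truediv__(self, other):
        o = Dual._lift(other)  # quotient rule
        return Dual(self.val / o.val, (self.der * o.val - self.val * o.der) / o.val ** 2)

    def __rtruediv__(self, other):
        return Dual._lift(other) / self


def sin(x):
    """Elementary functions need their own overloads, e.g. d(sin u) = cos(u) du."""
    return Dual(math.sin(x.val), math.cos(x.val) * x.der)


def derivative(f, x):
    """Seed the input with derivative 1 and read the derivative off the result."""
    return f(Dual(x, 1.0)).der


slope = derivative(lambda x: x * x * x + 2 * x, 2.0)  # 3*2**2 + 2 = 14.0
```

For a Jacobian, forward accumulation needs one such pass per input variable (seeding each in turn), whereas reverse accumulation needs one pass per output, which is why efficient implementations of both are worth having.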

Current work at the NOC includes the development of a new OPTIMA library in Fortran 90, in which automatic differentiation will be a key resource. In support of this, research is going on into efficient implementations of both the reverse and forward accumulation approaches. A recent application of these techniques has been in the calculation of Jacobian matrices in systems of stiff differential equations arising in reservoir simulation. Another project involves the use of interval arithmetic to deal with the global optimisation arising in an identification problem (inverse light-scattering) being studied by the Physics Division at the University of Hertfordshire. This same problem is also being approached from the modelling point of view by a finite element approach, which also draws on the Mathematics Division's expertise in parallel computation. As regards mainstream research in nonlinear programming, a project has just been completed on the extension of NOC's successful OPALQP algorithm to deal with sparsity in the function and constraints. In a similar vein, tests will shortly commence on a new version of the conjugate gradient algorithm which handles bounds on the variables.
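The way interval arithmetic supports global optimisation can be sketched as a simple branch-and-bound: evaluating f over a whole box gives a guaranteed enclosure of its values there, so any box whose lower bound exceeds the best value already found at a sample point cannot contain the global minimiser and is discarded. This Python sketch is illustrative only (`Interval` and `interval_minimise` are hypothetical names, not NOC code), and it omits the outward rounding that a rigorous floating-point implementation needs.

```python
class Interval:
    """Closed interval [lo, hi] with overloaded arithmetic (no outward rounding)."""

    def __init__(self, lo, hi):
        self.lo, self.hi = lo, hi

    @staticmethod
    def _lift(x):
        return x if isinstance(x, Interval) else Interval(x, x)

    def __add__(self, other):
        o = Interval._lift(other)
        return Interval(self.lo + o.lo, self.hi + o.hi)

    __radd__ = __add__

    def __sub__(self, other):
        o = Interval._lift(other)
        return Interval(self.lo - o.hi, self.hi - o.lo)

    def __rsub__(self, other):
        return Interval._lift(other) - self

    def __mul__(self, other):
        o = Interval._lift(other)
        products = (self.lo * o.lo, self.lo * o.hi, self.hi * o.lo, self.hi * o.hi)
        return Interval(min(products), max(products))

    __rmul__ = __mul__


def interval_minimise(f, lo, hi, tol=1e-4):
    """Return (lower, upper) bounds on the global minimum of f over [lo, hi].

    f must accept both floats and Intervals (here: built only from +, - and *).
    """
    upper = f((lo + hi) / 2)  # any sampled value bounds the minimum from above
    work = [Interval(lo, hi)]
    lower = float("inf")
    while work:
        box = work.pop()
        fbox = f(box)  # encloses every value of f over this box
        if fbox.lo > upper:  # box cannot contain the global minimiser
            continue
        mid = (box.lo + box.hi) / 2
        upper = min(upper, f(mid))
        if box.hi - box.lo < tol:
            lower = min(lower, fbox.lo)  # small surviving box: keep its lower bound
        else:
            work.extend([Interval(box.lo, mid), Interval(mid, box.hi)])
    return lower, upper


def f(x):
    # Two local minima: a shallow one near x = 1.13 and the global one near x = -1.30.
    return x * x * x * x - 3 * x * x + x


lower, upper = interval_minimise(f, -3.0, 3.0)
```

Both bounds come out within a few thousandths of the true minimum (about -3.514 near x = -1.30), while the shallower local minimum near x = 1.13 (about -1.07) is pruned away rather than returned, which is precisely what a purely local method cannot guarantee.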

NOC research has always been grounded as much as possible in experience of solving real problems in collaboration with other organisations, both commercial and academic. A recent example has involved the use of minimisation techniques to determine good strategies for sampling contaminated land, such as abandoned industrial sites. Current investigations include a parameter estimation problem occurring in the calibration of gas analysers and the solution of systems of nonlinear equations in the modelling of a metal forming process. Such external projects also provide us with the opportunity to share our experience of the benefits of implementing models and solutions in more up-to-date languages than Fortran 77!

The ability to include automatic differentiation and interval techniques has already been mentioned, but it is also important, for instance, that engineering and scientific users become aware that the more cumbersome aspects of sizing and passing workspace arrays no longer apply in Fortran 90. Indeed, we now see it as part of our objective to promote wider awareness and acceptance (through user-friendly implementation) of a number of recent ideas in numerical computation.

The Centre is always pleased to give advice on matters relating to non-linear optimisation algorithms and software. For this purpose, or for discussions on specific consultancy projects, please contact me.

This new course, running under the Credit Accumulation and Transfer Scheme (CATS), will be offered from September 1995 and consists of a taught component (running from September to June) leading to a Postgraduate Diploma. In order to obtain the MSc, students must also complete a project between July and late September.

Building on the expertise of the Numerical Optimisation Centre, the course involves comprehensive study of methods of Linear and Nonlinear Programming. In addition, students must choose from a number of supporting courses in aspects of Mathematical Modelling.

This course is also available in part-time mode, alongside other part-time MSc programmes in Numerical Computation, Mathematical Modelling and Mathematical Studies.

Representation of data or knowledge is a key issue in the development of all computer-based systems. In traditional software systems, data, procedures and control processes are mixed together in one program. This approach has difficulty in dealing with the complexity of large information systems in which various independent sub-systems need to operate in a cooperative and interactive manner. Object Orientation is a paradigm that overcomes the difficulties of conventional software systems by bridging the gap between a piece of data and its operations.

In an object-oriented system, objects are dynamic entities in computer memory that hold data states and group together the data pertaining to one real-world entity. An object encapsulates both state and behaviour through a set of procedures (or methods) that specify the operations it supports. Grouping similar objects into classes simplifies the association of knowledge with objects: each class keeps its implementation details private, and interactions between objects of different classes can be easily controlled and manipulated. How an object behaves internally is hidden from other objects; only their interactions and relationships are visible. This close coupling of data and operations is one of the most important features of object-oriented techniques.

Three key object-oriented concepts are abstract data types, inheritance and polymorphism. Abstract data types and inheritance enhance code extensibility and reusability by encapsulating the state (data structure) of an object with its dynamic behaviour (reasoning methods). Polymorphism allows a reasoning method to take more than one form, and allows references that can refer, over time, to instances of more than one class.
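The three concepts can be shown in a few lines. In this Python sketch the class names, materials and dimensions are hypothetical: `Component` is an abstract data type whose state is reached only through its methods, `Shaft` and `Plate` inherit its shared `mass` behaviour while each supplies its own `volume`, and `total_mass` works polymorphically on any mix of components.

```python
import math
from abc import ABC, abstractmethod


class Component(ABC):
    """Abstract data type: state is kept private and reached only through methods."""

    def __init__(self, name, density):
        self._name = name
        self._density = density  # kg/m^3

    @abstractmethod
    def volume(self):
        """Each concrete class must say how its own volume is computed."""

    def mass(self):
        # Shared behaviour, inherited unchanged by every subclass.
        return self._density * self.volume()


class Shaft(Component):
    def __init__(self, name, density, radius, length):
        super().__init__(name, density)
        self._radius, self._length = radius, length

    def volume(self):
        return math.pi * self._radius ** 2 * self._length


class Plate(Component):
    def __init__(self, name, density, width, height, thickness):
        super().__init__(name, density)
        self._dims = (width, height, thickness)

    def volume(self):
        w, h, t = self._dims
        return w * h * t


def total_mass(components):
    # Polymorphism: each c may be any Component subclass; its own volume() is used.
    return sum(c.mass() for c in components)


parts = [Shaft("drive shaft", 7850, 0.01, 1.0), Plate("mounting plate", 2700, 0.5, 0.2, 0.01)]
```

Adding a new kind of component means adding one subclass; `total_mass` and any other code written against `Component` need no change.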

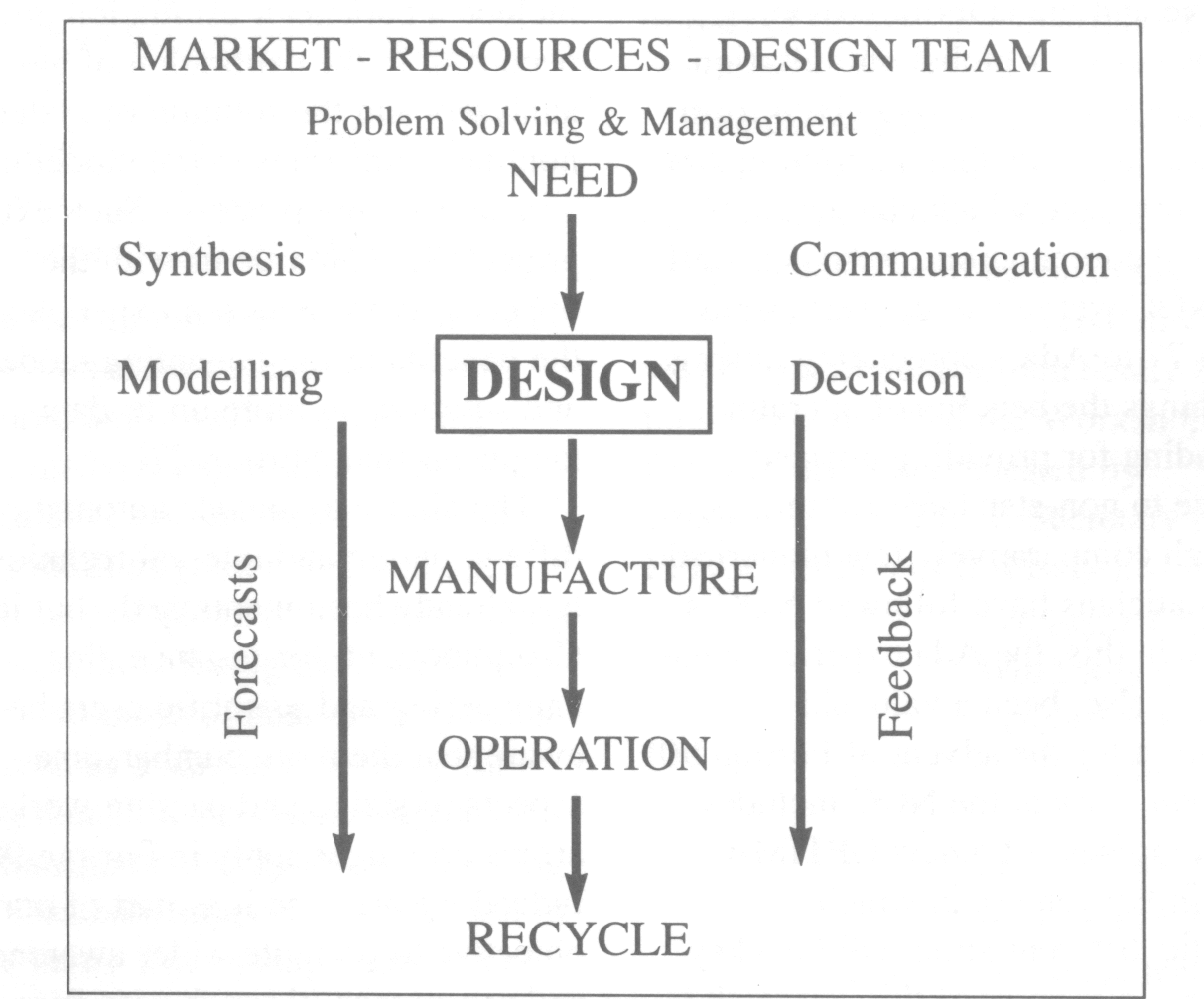

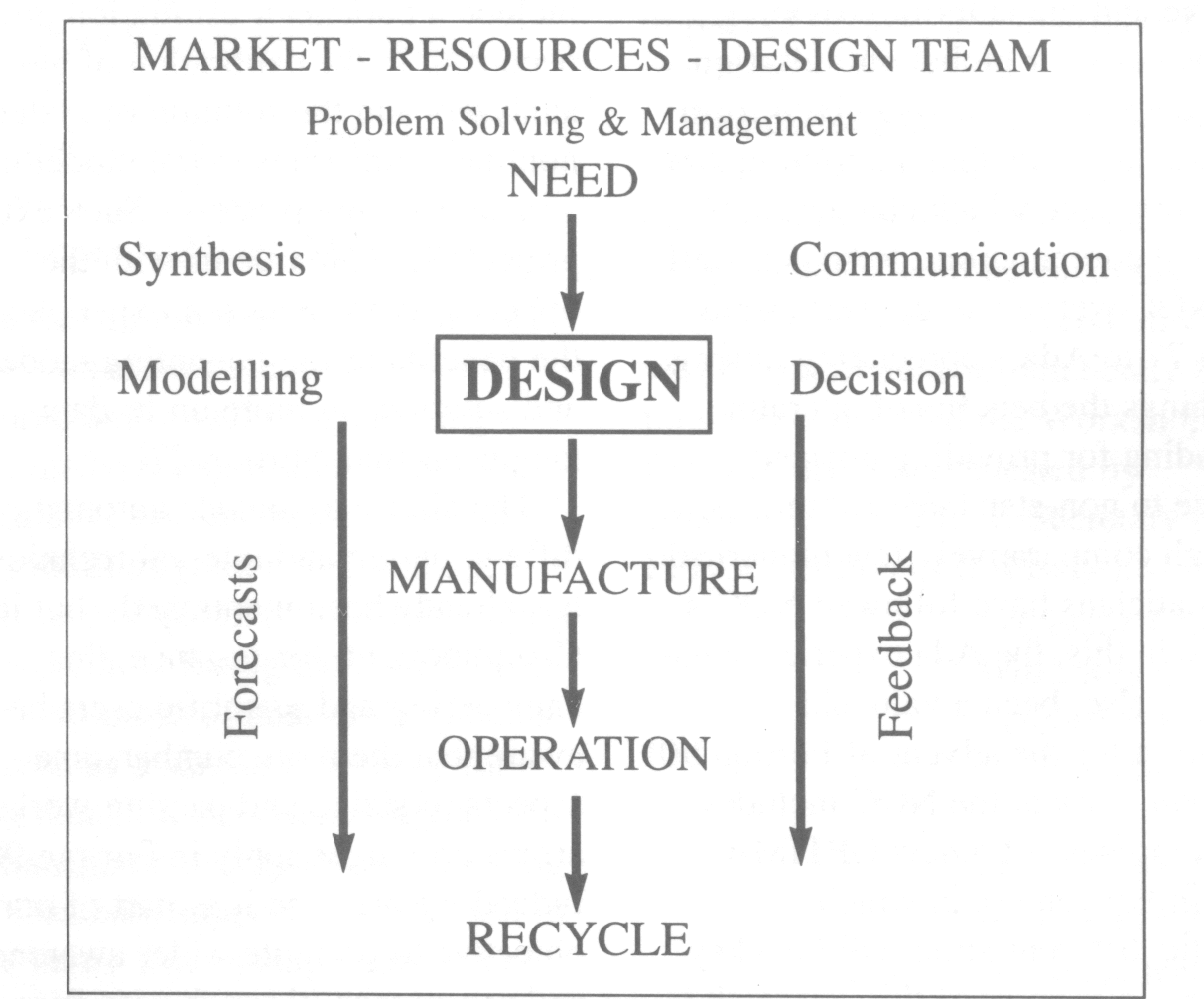

Engineering design tends to be object-oriented, constructive and incremental in that designers use basic components and simple mechanisms to construct larger and more complicated systems. A thorough understanding of basic components, their function, behaviour, and relationships in a dynamic situation forms a good basis for creating new designs. Engineering design problems are multi-dimensional and highly inter-dependent. It is rare for any part of a design to serve only one purpose, and it is frequently necessary to devise a solution which satisfies (not necessarily optimally) a whole range of requirements. Decomposition of a complex system into sub-systems, parts and components is essential in design in order to derive the overall behaviour of the system through the functional, structural and causal relationships of various subsystems. Figure 1 shows the position of design in the life phases of a product.

The computer has become an established tool for designers. The task of synthesising, analysing and evaluating design solutions in a computer-based design system is difficult, as it needs a vast amount of knowledge and information from diverse sources. Unfortunately, such knowledge and information are not readily available. This task is further complicated when various sub-systems within a complex system have been implemented using different computer languages on different platforms. Any attempt to balance design options across various software sub-systems to obtain total functionality and design optimisation involves a complicated process of data processing. Modification to a part of one software sub-system may result in unpredictable consequences and unresolved conflicts among various other software sub-systems. As a result, the design, implementation and maintenance of such complex design systems become a costly and lengthy process. A difficulty with current computer-based design support systems is matching the way in which a real-world design solution space is explored with the way in which a computer system is developed to support the design, as illustrated in Figure 2. This gap between the problem space and the solution space tends to grow as the complexity of the product increases.

Object Orientation is not simply a matter of programming. It is an approach that applies to both the design activity in the problem space and the development of design support software in the solution space. Splitting a complex system into parts (objects) and assemblies (interactions between objects) in an object-oriented way provides an easy mapping between design problem space and solution space, allowing knowledge in each domain to be better organised in such a way that effective software systems can be developed and maintained. Abstract data typing allows high level knowledge structures to be defined to reflect the issues of concurrency, management and team work in a design environment. It also permits flexible object definition that can evolve during design. The encapsulation of the properties and operations of object classes allows complex design process modelling and product modelling techniques through a more natural structuring of complexity.

Object-oriented technology is having a large impact on the way in which future design support systems are designed and implemented. It emphasises the importance of defining, structuring, exploring and reusing design objects in both design problem modelling and software support system development, thus providing a natural way of coping with complexity inherent in CAD/CAE projects. System design is achieved by:

The essential components in an object-oriented design support system are a design knowledge base and a number of inference engines that infer on objects. Such a design knowledge base will have structured design object classes supported by methods of reasoning. These object classes can be easily assembled to form the initial structure of a new design problem in which the values of some design variables are to be explored. This initial design problem structure is explored by designers making changes such as assigning variable values, adding or deleting objects, and adding or deleting constraints. Any such change is passed to the relevant objects and the system's inference engines, which carry out one or more of the following tasks: creating new objects and maintaining their relationships; propagating changes throughout the whole set of constraints to derive new values for other design objects; and creating dependency information between user decisions and derived design results.
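The change-propagation cycle described above can be sketched with a tiny constraint network. Everything here is hypothetical and far simpler than the EDC systems: each `Product` constraint states a * b = c and can solve for whichever one of its three variables is unknown, every user decision clears the derived values and re-propagates, and each derived value records the set of user decisions it depends on. There is no conflict detection.

```python
class Variable:
    """A design variable: its value, whether the user set it, and what it derives from."""

    def __init__(self, name):
        self.name = name
        self.value = None
        self.user_set = False
        self.depends_on = set()


class Product:
    """Constraint a * b == c that can solve for whichever one variable is unknown."""

    def __init__(self, a, b, c):
        self.vars = (a, b, c)

    def try_derive(self):
        a, b, c = self.vars
        unknown = [v for v in self.vars if v.value is None]
        if len(unknown) != 1:
            return False
        u = unknown[0]
        if u is c:
            u.value = a.value * b.value
        elif u is a and b.value != 0:
            u.value = c.value / b.value
        elif u is b and a.value != 0:
            u.value = c.value / a.value
        else:
            return False
        # Dependency record: every user decision behind the other two variables.
        u.depends_on = set().union(*(v.depends_on for v in self.vars if v is not u))
        return True


class Network:
    def __init__(self):
        self.variables = {}
        self.constraints = []

    def var(self, name):
        self.variables[name] = Variable(name)
        return self.variables[name]

    def add(self, constraint):
        self.constraints.append(constraint)

    def set(self, name, value):
        """Record a user decision, discard derived values, then propagate."""
        v = self.variables[name]
        v.value, v.user_set, v.depends_on = value, True, {name}
        for other in self.variables.values():
            if not other.user_set:
                other.value, other.depends_on = None, set()
        changed = True
        while changed:  # repeat until no constraint can derive anything new
            changed = False
            for c in self.constraints:
                changed = c.try_derive() or changed


net = Network()
width, thickness, area = net.var("width"), net.var("thickness"), net.var("area")
stress, force = net.var("stress"), net.var("force")
net.add(Product(width, thickness, area))  # area = width * thickness
net.add(Product(stress, area, force))     # force = stress * area
net.set("width", 0.02)
net.set("thickness", 0.01)
net.set("force", 1000.0)  # stress is now derived as force / area
net.set("width", 0.04)    # a revised decision: area and stress are re-derived
```

Because `stress.depends_on` ends up as {"width", "thickness", "force"}, the system can tell the designer which decisions a derived result rests on, which is the dependency information the text refers to.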

Two object-oriented design systems are currently being developed at the Engineering Design Centre (EDC) in Cambridge, using two of the major object-oriented programming languages (C++ and the Common Lisp Object System): an object-oriented product data model and an integrated functional modelling system.

The Product Data Model (PDM) is an object-oriented system implemented in C++ that has an object hierarchy representing engineering design entities such as artefacts, assemblies, parts, and components. This engineering design object hierarchy has links to resource objects that can be instantiated to generate data for a design application. The definitions of objects and the way in which they can be structured in the PDM also provides basic guidelines for defining engineering design objects. The PDM is intended to form the basis for a number of engineering design tools being developed to share data through a common object-oriented database. The development of PDM represents the EDC's formulation of engineering design knowledge in an object-oriented way so that it can be shared by domain-specific design application systems.
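A minimal sketch of such a hierarchy, assuming a simple composite structure: the class names follow the text, but the fields are hypothetical and parts and components are collapsed into one leaf class. An `Artefact` is an `Assembly` of sub-assemblies and parts, and each `Part` links to a shared `Material` resource object that supplies the data (here, density) needed to generate a mass for a design application.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Material:
    """Resource object: shared by many parts, supplies data on demand."""
    name: str
    density: float  # kg/m^3


@dataclass
class Part:
    name: str
    material: Material
    volume: float  # m^3

    def mass(self):
        return self.material.density * self.volume

    def parts(self):
        yield self


@dataclass
class Assembly:
    name: str
    children: list = field(default_factory=list)

    def add(self, child):
        self.children.append(child)
        return self  # allow chained add() calls

    def mass(self):
        # The same message works on parts and sub-assemblies alike.
        return sum(child.mass() for child in self.children)

    def parts(self):
        for child in self.children:
            yield from child.parts()


class Artefact(Assembly):
    """Top of the hierarchy: the product being designed."""


steel = Material("steel", 7850.0)
aluminium = Material("aluminium", 2700.0)
shaft = Assembly("shaft assembly").add(Part("shaft", steel, 1e-4)).add(Part("bearing", steel, 2e-5))
gearbox = Artefact("gearbox").add(shaft).add(Part("housing", aluminium, 5e-4))
```

A second design tool could walk the same hierarchy with `gearbox.parts()` to produce, say, a bill of materials, without knowing how the mass calculation works.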

The Integrated Functional Modelling System (IFMS) is an AI-based system supporting functional synthesis of design requirements, embodiment design of mechanical engineering products and kinematic analysis of system behaviour in an object-oriented environment. At the centre of this system is an object-oriented constraint management system consisting of three different inference engines:

The use of an object-oriented approach in the design and implementation of both systems allows extensible and reusable knowledge structures and domain-independent inference engines.

The conference took place in Darmstadt, Germany, on 10-14 April 1995. The aim was to have 800 participants. In the event nearly 1400 turned up, which caused some difficulty getting into sessions, as the main auditorium held only 800! Even so, the organisation went reasonably smoothly. I will not try to give an overview of all the papers that I attended; instead, I will try to give a view of the things that are not in the Proceedings.

There were presentations followed by some discussion:

There is a newsgroup on kiosk issues (comp.infosystems.kiosks).

The ERCIM Workshop in the afternoon was well attended. Thomas Baker of GMD had lined up a good range of speakers.

Detlef Kromker opened the conference proper. He pointed out that it was being transmitted on the Mbone, which explained the four or so helpers sitting at terminals relaying speakers' slides, etc., to Wagga Wagga, London, Moscow, Washington, Brown University and elsewhere.

Walter de Backer, DG XIII, was the keynote speaker. He repeated what had been said at the W3C launch the previous Friday. The Commission are right behind the Web and W3C.

I went to a few panel sessions that do not appear in the Proceedings. The first had a set of speakers describing what they were doing on the company side of the firewall.

Siemens (http://www.siemens.de/siemens/) have about 190 companies world-wide with a revenue of 90B Marks. They were using the Web internally for storing business processes, documentation, code, and shipping software products. They have an overall company top-down design with variation allowed at the bottom to accommodate local needs. They still believed a lot of changes were needed in areas of quality, reliability, density, security, integration with workflow, traceability of effectiveness.

They have 3000 servers and use the Web to send out their list of suppliers to all the branches. It has reduced cost from $500K to $15K.

The best talk was from Boeing. They have no pages outside the firewall but intensive use within the company. He made the point that if you wanted to see how the Web was really being used, the place to look was in big corporations inside the company. Their principles were:

They have put Mosaic browsers in the homes of all senior managers. The janitor, the traditional source of gossip in Boeing, now has his own pages! There is a commitment to putting all the aircraft information on-line either by connections to external pages or to mounting it themselves. Thus major journals like Aerospace Daily are put up by Boeing.

They currently have 10,000 users on 200 servers, with usage growing by 25% per month. Providing good support was seen as essential: PCs, when delivered internally by PC Support, came with Mosaic installed, together with hotlists, guidelines and templates.

They saw the big challenge in the immediate future as getting management to accept the current chaotic situation. They are trying out many new ideas, and it will take a while for things to settle down. They are using several different authoring tools and trying different approaches to security and distributed information management. They need experience before they fix the company's policy.

The speaker even had his number plate personalised to read Web Surfer. The major difference at Mitre was the range of word processing systems in use. They had introduced a staging area which took WordPerfect, Word and FrameMaker documents and intelligently turned them into Web pages.

The best parts of the discussion came from answers by the speaker from Boeing. The top and bottom of Boeing were committed to using the Web as a management tool of major importance in the company; the problem was the middle layer of legacy managers, who saw it as eroding their power base. Mitre had put a question on their yearly review which asked whether employees were keeping the Web pages they were responsible for up to date.

This was an invited talk from Microsoft that had the main auditorium bulging at the seams. The Microsoft Web is at www.microsoft.com with WinNews giving information on Windows95.

This was an upbeat presentation: new shell, new kernel, MS-DOS is gone, and the major features are Win32, built-in networking, plug and play, long file names and shortcuts. All APIs will interface to Win32.

This is the biggest launch in the history of software with 50,000 beta testers and a preview programme extending to 400,000 people. The final beta, Build 347, has just been shipped. The demo was with build 440. The company was still on track for an August release. They will ship in 30 different languages. All major hardware vendors are committed to the product. Over 30 major software vendors are committed to shipping within 90 days of the launch.

The system is built for network access using either X.25 or TCP/IP.

Microsoft Internet Explorer was the main announcement: a browser based on NCSA and Spyglass Mosaic. The demo was quite impressive, moving gracefully in and out of the Web browser and cutting and pasting to and from Web pages.

The remit was how to publish real commercial documents (already in SGML) on the Web. The problem is that HTML has to remain simple, as that is its strength, yet a single structured model is neither possible nor desirable for real information: you need the power of SGML's content-based, or semantic, mark-up.

Hitachi use HEADS (Hitachi Electronic Application Document System), an SGML DTD defined and used by all the major VLSI vendors. They have 4000 components and 40,000 pages of documentation. They use OmniMark to convert style-sheeted RTF to SGML, have a filter which turns the SGML into HTML for viewing, and also have a richer SGML browser that can be used with the Web to ship real SGML around.

They use the Grif SGML editor to capture product information and are working on translators down to HTML.

All their information is in SGML using their own DTD, browsing information via a filter to HTML.

The company policy is that all documentation is in DotBook, an SGML DTD agreed by a consortium. They have an SGML viewer to access the information from the Web, and they use the forms capability of HTML both to capture information and to retrieve information from the DotBook database.
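The filters to HTML described above trade semantic mark-up for presentation. A minimal Python sketch of that down-conversion, assuming well-formed input that `xml.etree.ElementTree` can parse (real SGML with omitted tags needs a proper SGML parser) and a hypothetical data-sheet DTD: known elements are mapped to HTML tags, and unknown ones become `span` elements that keep their semantic name only as a class.

```python
import xml.etree.ElementTree as ET

# Hypothetical mapping from semantic element names to presentational HTML tags.
TAG_MAP = {
    "datasheet": "div",
    "title": "h1",
    "para": "p",
    "partno": "code",
    "warning": "strong",
}


def to_html(elem):
    """Rebuild an element tree with HTML tags, keeping all text content."""
    out = ET.Element(TAG_MAP.get(elem.tag, "span"))
    if elem.tag not in TAG_MAP:
        out.set("class", elem.tag)  # unknown semantics survive only as a class name
    out.text = elem.text
    for child in elem:
        converted = to_html(child)
        converted.tail = child.tail  # text that follows the child element
        out.append(converted)
    return out


source = (
    "<datasheet><title>Gate array</title>"
    "<para>Order as <partno>HG-42</partno>. <warning>ESD sensitive.</warning></para>"
    "<para>Supply <voltage>3.3V</voltage>.</para></datasheet>"
)
html = ET.tostring(to_html(ET.fromstring(source)), encoding="unicode")
```

After conversion a `partno` is just a `code` element, so a Web search can no longer ask for "all part numbers"; that loss is why richer SGML browsers shipping the original mark-up were also on show.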

The SGI presentation announced the Virtual Reality Markup Language (VRML), which allows you to ship 3D models around the Web. Readily available browsers are being provided for all major platforms, including PCs. It is based on the Open Inventor format from SGI, with HTML-style links added.

You can ship the model and then interact with it, either with complete control or by moving along defined paths (for those who do not have an SGI machine!). As you zoom into a scene, a more detailed version of the model is downloaded. A model file is about the size of a single GIF image and about 5% of the size of the equivalent QuickTime movie.

Template, as well as SGI, are committed to supporting the viewer. You can put anchors on the model; clicking on one brings up the associated Web page. Models can be input from a range of CAD systems and formats, such as Wavefront, IGES, ProEngineer, Unigraphics and 3D Studio.
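The level-of-detail behaviour described above can be sketched as follows, assuming the range-based rule of VRML's LOD node: the distance from the viewer to the model's centre, compared against n range thresholds, selects one of n+1 versions. The `LodModel` class, file names and `fetch` callback are hypothetical; the point is that a more detailed version is only downloaded when the viewer first comes close enough to need it.

```python
import bisect
import math


def select_level(viewer, center, ranges):
    """Return 0 for the most detailed version, len(ranges) for the coarsest.

    Assumed rule: a distance below ranges[0] selects version 0, a distance
    between ranges[i-1] and ranges[i] selects version i, and so on.
    """
    distance = math.dist(viewer, center)
    return bisect.bisect_right(ranges, distance)


class LodModel:
    """Hypothetical client-side model that downloads each version only when needed."""

    def __init__(self, urls, center, ranges, fetch):
        if len(urls) != len(ranges) + 1:
            raise ValueError("need one more version than range thresholds")
        self.urls, self.center, self.ranges, self.fetch = urls, center, ranges, fetch
        self.cache = {}

    def geometry_for(self, viewer):
        url = self.urls[select_level(viewer, self.center, self.ranges)]
        if url not in self.cache:
            self.cache[url] = self.fetch(url)  # first time this level is needed: download
        return self.cache[url]


fetched = []


def fake_fetch(url):
    # Stand-in for a network fetch; records which files were requested.
    fetched.append(url)
    return "mesh from " + url


model = LodModel(["near.wrl", "mid.wrl", "far.wrl"], (0, 0, 0), [10.0, 50.0], fake_fetch)
for viewer in [(100, 0, 0), (30, 0, 0), (5, 0, 0), (30, 0, 0)]:  # zoom in, then back out
    model.geometry_for(viewer)
```

Zooming back out reuses the cached medium version, so each level is transferred at most once and a distant viewer never pays for the detailed model.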

The conference proceedings can be found at the URL http://www.igd.fhg.de/www/www95/www95.html, copies can be ordered from the URL http://www.elsevier.nl/www3/welcome.html.

Overall, it was an excellent conference and I am looking forward to next year in Paris.

Oxford, Newcastle, Belfast, Warrington, Dublin

The FPIV IT Programme is scheduled to issue its third Call for Proposals on June 15 and the DTI's ESPRIT Unit is arranging an Information Day to take place on June 27. The event is being hosted by the Council for the Central Laboratory of the Research Councils' Rutherford Appleton Laboratory and will be video-conferenced, using UKERNA's SuperJanet Network, to the Daresbury Laboratory (Warrington), Newcastle and (provisionally) Belfast. Subject to technical restrictions, the event will also be relayed to Dublin.

There will be speakers from the European Commission and the DTI; the two-way nature of video-conferencing will allow all the audiences to participate fully in the question-and-answer sessions, while experts will be on hand at each location to provide further advice and guidance.

At the time of writing, negotiations for sponsorship of the event are in progress with a major commercial organisation.

Further information will also be available on the WWW, see below. If you are not on the DTI ESPRIT Unit's mailing list, please contact the DTI ESPRIT Unit.

The next seminar in the PPECC series will seek to address the area of parallel field analysis. A large majority of all supercomputer usage is, in one form or another, for field analysis. This type of computation has therefore been an important target for the parallel machines.

The seminar is to be chaired by Dr M Sabin (Numerical Geometry Ltd, previously with FEGS) and covers a wide range of applications (structural analysis, crash simulation, fluid dynamics and electromagnetics) and a range of solution techniques (finite elements, finite differences and boundary elements). Both explicit and implicit solution of the equations will be covered, as will mesh generation, which can easily become the bottleneck for realistic problems. Both tightly coupled machines and the parallelism of multiple workstations will be addressed.

The seminar will consist of talks and presentations from current academic and industrial experts. There will be opportunities for discussion on each talk, and an extended session at the end of the event.

A full set of proceedings, compiled from the speakers' contributions, will be provided on the day of the event.

The seminar will be of particular interest to engineers who are looking for ways of using parallel computing power to speed their time-consuming analysis codes.
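For the explicit solution on multiple workstations mentioned above, the standard approach is domain decomposition: each processor owns a contiguous block of the mesh and, before every time step, exchanges one layer of boundary ("halo") values with its neighbours. This Python sketch, for the 1D heat equation with a forward-time centred-space update, simulates the p processors serially; in a real code the halo values would travel as messages (for example with PVM). The function names are hypothetical, and the decomposed run reproduces the serial result exactly because every point sees the same values, combined in the same order.

```python
def serial_run(u, r, steps):
    """Explicit FTCS update for u_t = u_xx with both end values held fixed."""
    for _ in range(steps):
        u = [u[0]] + [u[i] + r * (u[i - 1] - 2 * u[i] + u[i + 1])
                      for i in range(1, len(u) - 1)] + [u[-1]]
    return u


def parallel_run(u, r, steps, p):
    """Same scheme with the mesh split into p blocks (p <= len(u)) exchanging halos."""
    n = len(u)
    bounds = [(k * n // p, (k + 1) * n // p) for k in range(p)]  # global index ranges
    blocks = [u[a:b] for a, b in bounds]
    for _ in range(steps):
        # Halo exchange: each block receives the adjacent end value of each neighbour.
        left = [blocks[k - 1][-1] if k > 0 else None for k in range(p)]
        right = [blocks[k + 1][0] if k < p - 1 else None for k in range(p)]
        new_blocks = []
        for k, (a, _b) in enumerate(bounds):
            ext = [left[k]] + blocks[k] + [right[k]]  # owned values plus ghost cells
            new = []
            for j in range(len(blocks[k])):
                g = a + j  # global index of this point
                if g == 0 or g == n - 1:
                    new.append(ext[j + 1])  # fixed boundary value
                else:
                    new.append(ext[j + 1] + r * (ext[j] - 2 * ext[j + 1] + ext[j + 2]))
            new_blocks.append(new)
        blocks = new_blocks
    return [x for block in blocks for x in block]


u0 = [1.0] + [0.0] * 19  # hot left end, cold rod
result = parallel_run(u0, 0.4, 50, 4)  # r = 0.4 respects the FTCS stability limit r <= 0.5
```

Implicit schemes are harder to distribute because each step couples every point to every other, which is one reason the seminar treats the two cases separately.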

The next course in the PPECC series is Parallel Fortran.

Engineers and scientists would love to run their FORTRAN applications on faster machines. However, access time to supercomputers such as the CRAY is limited and expensive. A new generation of high-performance parallel architectures, based on variously interconnected microprocessors, has the potential to provide an affordable resource for compute-intensive applications on everyone's desktop. These systems include workstation networks, the latest shared-memory workstations and PCs, and dedicated networks of commodity microprocessors (e.g. Alphas, i860s and transputers). This course will enable attendees to move their FORTRAN codes onto such machines:

An important concern of the applications developer is standardisation. How can it be ensured that the work done today to run an application on a parallel system will still be valid in the future? The tutors will provide information on the standardisation efforts in progress and on the best systems to support the portability and future-proofing of source code. The practicals are based on PVM 3, an up-to-date de facto standard programming system portable between networks of UNIX workstations and dedicated parallel machines.

Delays in the publication of research papers are a constant source of irritation to authors and readers alike. Recent advances in electronic media, particularly e-mail and the World Wide Web, are being used to ease this problem in various disciplines, and now the CFD Community Club is starting up an electronic preprint service for fluid dynamics.

The service will be available to anyone with ftp, WWW and telnet access to the Internet. Contributors may submit any article or paper they wish others to read and comment upon. Submission does not prevent later publication in paper journals; in this respect the service is no different from the long-established practice of circulating paper copies of preprints. Its advantage is that it provides a rapid way of publishing new results.

If you have papers you would like to make available via this service please contact me at the address below. Each paper contributed must consist of the following:

Tools to help with the preparation of papers will also be available.

The CFD Community Club is organising a workshop on Thursday 13 and Friday 14 July 1995 for those who wish to learn how to develop quality Fortran 77 software. It is aimed primarily at the CFD community but should also interest researchers in other fields, particularly engineering. It will be held at the Rutherford Appleton Laboratory.

The first day of the workshop will cover the fundamentals of good programming practice, including testing, portability and structured programming. It will also cover the aspects of software engineering needed to understand commercial software quality assurance packages, including static and dynamic analysis and metrics. In the afternoon, three commercial vendors will demonstrate their products. The day will also include a presentation on the public domain tools available and a talk by our guest speaker, Dr John Reid, on Fortran 90.

On the second day of the workshop, participants will have the chance to try each of the vendor tools, and selected public domain software, on their own Fortran 77 programs using a set of networked Sun SPARCstations.

As numbers are limited by the availability of workstations, anyone wishing to attend should complete and return the registration form, included as an insert to this newsletter, as soon as possible.

The CFDCC maintains a library of common academic CFD software on the ftp server at HENSA. Sadly, due to increasing pressures to generate income from software, fewer academics are able to make their software freely available. However, you may have software which you are willing to make available for academic use only. This is possible by releasing the software under the terms of a restricted licence.

The CFDCC would like to place on HENSA a catalogue of CFD software available for academic use, and is seeking entries for inclusion.

Entries, which can be e-mailed to cfdcc, should be in plain text and contain the following categories of information, with up to 10 lines per category:

The recent survey of CFD course providers has been completed. A booklet has been produced giving details of CFD training courses run in the UK; it is available to any CFDCC member from Virginia Jones.

Rutherford Appleton and Daresbury Laboratories have gone through many changes of structure and name during the last few months, and these changes are now reflected on the Web. The main entry page for the new Council for the Central Laboratory of the Research Councils is at http://www.ccl.ac.uk/. The Computing and Information Systems Department of the Rutherford Appleton Laboratory is at http://www.cis.rl.ac.uk/. This page leads to information on the department's structure, staff, projects and services. It also links to the online versions of the Graphics and Visualization Newsletter and the Engineering Computing Newsletter, including back issues.

It was announced on 15 February that the European branch of W3C (the World Wide Web Consortium) is to be based at INRIA in France.

Video is a convenient, low cost medium for studying time-varying information. It can be used for presentation to an audience or for analysis in one's own research. Straightforward phenomena can be studied and presented on a desktop computer. More complex phenomena are beyond the capabilities of a desktop machine, although, with sufficient online storage, image sequences can still be created and played back under the user's control.

If local resources are not sufficient for this, video can be used. If the video is not generated in real time, the VCR must be controlled by the computer so that the images can be replayed at the correct speed. Although equipment to do this can be purchased, it is often inconvenient for the user, especially if video is only a small part of the research.

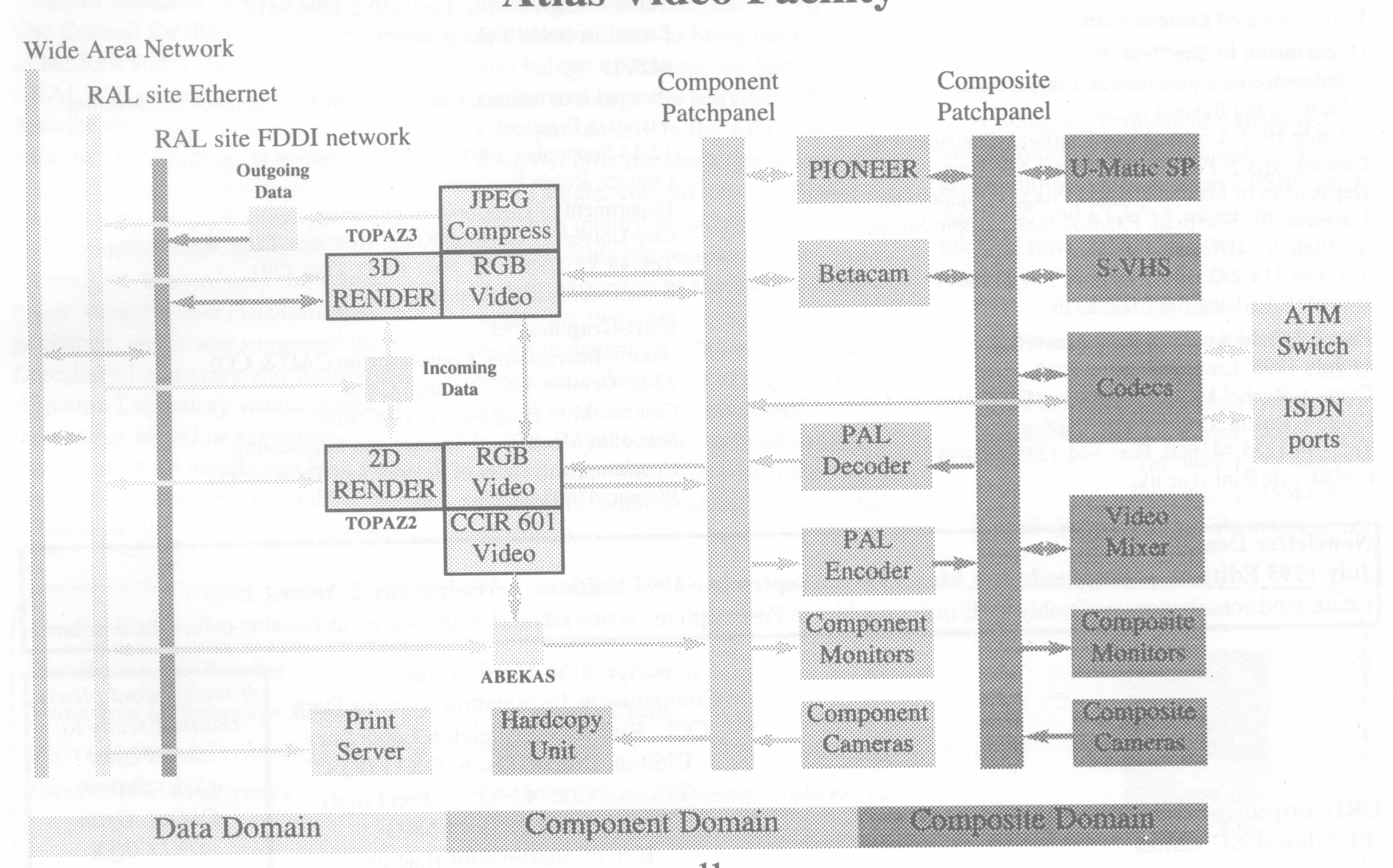

The Atlas Video Facility at RAL is designed to offer video production facilities for people needing to make videos where local production is not appropriate. It was originally set up in conjunction with the RAL Cray supercomputing service, but it can also be used independently and indeed has been used many times where no supercomputer is involved.

We believe that this facility may be particularly useful for people in the engineering research community who need to analyse or present complex data.

The most convenient way of thinking of the facility is to imagine the flow of data and images through the system:

The detailed structure of the facility is shown in the figure below.

The video facility has been used for work in science, engineering, biology, forensic science, ocean and atmosphere modelling, medicine, the social sciences, architecture and the fine arts, among other fields.

If you think you may wish to make a video using the Atlas Video Facility, please contact us early. We can discuss ways of presenting your data and arrange to make resources (for example disc space) available as necessary to support your use of the facility.

Depending on the volume of data and the network bandwidth available, we can accept data on most physical media or over the network.

Like many other services nowadays, the facility is not free. Despite the change in the status of the Rutherford Appleton Laboratory, we still hope to be able to offer favourable arrangements to certain categories of user, and these are being worked out. Details of the charging arrangements are available on request.

To discuss your use of the Atlas Video Facility, contact Chris Osland