The significant organisational changes affecting the Rutherford Appleton Laboratory (RAL) from 1 April 1995 have already been reported in the last issue of ECN (No. 56). The Council for the Central Laboratory of the Research Councils (CCLRC) is the legal entity which is now responsible for RAL, Daresbury Laboratory and Chilbolton Observatory. The formal relationship between CCLRC and each of the other Research Councils is covered by a separate Umbrella Agreement with that Council. Any activity carried out by CCLRC on behalf of another Research Council is covered by a separate Service Level Agreement (SLA), which operates under the overall Umbrella Agreement with that Research Council. In general, SLAs are expected to operate for a rolling 3-year period.

Unfortunately, in the case of the current EASE programme, the SLA with the Engineering and Physical Sciences Research Council (EPSRC) is for one year only (1995/96). This means that the funding for the present RAL-based EASE activities will end on 31 March 1996, and at the time of writing there is no certainty that any EASE activities will continue beyond this date. You will also be aware that EPSRC is structured into a series of managed programmes with no apparent provision for federal activities such as EASE. EPSRC Programme Managers have decided to carry out a questionnaire survey of their existing grant holders to help them decide whether there is an identifiable need for some EASE-like activities after 31 March 1996. The questionnaire was sent on 3 July to 3000 existing grant holders selected by the Programme Managers, with a deadline of 31 July for responses. Repeated requests from RAL to include the 3200 recipients of ECN in this survey were refused on the grounds that the primary role of any future EASE-like programme must be to support existing EPSRC grant holders. If you are not one of the grant holders to whom the questionnaire was sent, I am afraid your views will not be sought on the value to you and your research of the current EASE programme, or on the need for a continuation of all or part of it beyond 31 March 1996. All I can do in this case is to invite you to write to EPSRC (Dr P C L Smith) giving your views on both the current programme and the need for a future EASE-like programme. Should you decide to write such a letter, I would appreciate receiving a copy.

Once the questionnaire responses have been analysed, the EPSRC Programme Managers will decide if there is sufficient demand for some form of continuation of the EASE programme. If they decide that there is a case, they will agree what form it should take and what level of funding they are prepared to earmark for this. The next stage will be to go to an open, competitive tender to run this new programme. RAL will have no part in any of the discussions on the questionnaire responses and will not have any privileged position in any competitive tender situation.

You will be informed of the final decision taken by EPSRC on this important matter in a future issue of ECN.

RAL staff have been discussing with EPSRC Programme Managers what specific events should be run in the current year under the EASE Events Programme. As a result of these discussions, 3 seminars have been identified. The topics and provisional dates for these are as follows:

If you would like to be involved in any of these seminars, either as an advisor on the programme or as a speaker, please let me know as soon as possible.

The main claim to fame of neural networks is their ability to discern patterns in data. Great. How about being able to characterise the congestion pattern that is unfolding in a city, so that the traffic control system can be instructed to choose the control strategy best suited to that pattern?

It was with something like that aim in mind that research staff at the Institute for Transport Studies first approached the then SERC, back in 1991, for funds for a one-year pilot study of the applicability of neural networks in transport. Data were acquired from the SERC transport research facility, the Instrumented City of Leicester, and piped through to Nottingham University's Transportation Research Group by courtesy of Leicestershire County Council; experimentation then began on whether the flow, speed and occupancy data could be used to recognise congestion. What is meant by congestion is, of course, a non-trivial question. Like other transport terms (such as accessibility and mobility), it is conceptually easy to grasp but difficult to define, because its meaning has many dimensions. A useful definition is that congestion causes a reduction in supply: the maximum possible throughput has been diminished (eg through blocking back to a junction). An observer assessed from videos what happened on a street and determined whether or not it was congested; this provided the output indicator that the neural network aimed to predict, using as inputs up to three congestion indicators. The three indicators were the number of stops per vehicle, queue length and capacity. Each was derived at 5-minute intervals for 20 inductive loop detector sites of the SCOOT urban traffic control system in a region of Leicester. Using the back-propagation technique, the networks were found not to converge when only one measure was used, to converge better when two congestion parameters were used, and to perform best of all with three [1]. The power of neural networks to give insights into complex phenomena was thus illustrated.

Or so we thought, on that evidence. Subsequent work at Nottingham led to a further 10 congestion indicators, and the Nottingham group concluded that just one of these, a measure of travel time, was a sufficient indicator of congestion on Leicester streets. Although neural networks using various combinations of all 13 indicators were not systematically explored, that experience shows that, given the right sort of input data, simpler methodologies can suffice. Of course, this may not be known until different techniques have been tried.

But the power of neural networks to give insights into complex phenomena is nonetheless very real. Using NeuralWorks Professional II and the public domain software SOM_PAK, running on a SPARC workstation, it is extremely quick and easy to explore the effects of a wide range of modelling options and choices of input variables. This was amply illustrated by the analysis of data obtained under another SERC grant, in which Institute for Transport Studies staff had designed and developed a computer-based simulation game (known as VLADIMIR) to obtain basic data on how drivers respond to in-car route guidance information. In the game, volunteer drivers choose a route through a hypothetical network. As they arrive at each junction, they are presented with a variety of decision parameters, which may or may not prove significant. Each route choice decision was stored on disc with the corresponding parameter values, and a large data set of several thousand route choice examples was collected. Over the course of several months, different forms of multivariate model were fitted to these data, the chosen form being the multinomial logit, fitted using the BLOGIT package. This data set was judged suitable for an experiment with the neural network package, to assess how readily it could discern patterns in the data. The analysis used back-propagation networks with a training set of approximately two thousand examples and a test set of two hundred examples not seen during the training phase. The network was shown to learn, and to make predictions with an accuracy as good as or better than the best achieved with conventional statistical methods. The most remarkable finding was that this level of accuracy was achieved with a fraction of the effort required by conventional statistical techniques.
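The multinomial logit form mentioned above gives each alternative route a choice probability proportional to the exponential of its utility. The sketch below shows only that probability calculation; the utility specification, the attribute names and the coefficient values are hypothetical illustrations, not the model estimated with BLOGIT.

```python
import math


def mnl_probabilities(utilities):
    """Multinomial logit choice probabilities: P(i) = exp(V_i) / sum_j exp(V_j).

    `utilities` maps each alternative (here, a route at a junction) to its
    systematic utility V_i. Subtracting the largest utility before
    exponentiating avoids overflow and leaves the probabilities unchanged.
    """
    v_max = max(utilities.values())
    weights = {alt: math.exp(v - v_max) for alt, v in utilities.items()}
    total = sum(weights.values())
    return {alt: w / total for alt, w in weights.items()}


# Hypothetical utility: V = beta_time * travel_time_min + beta_advice * (1 if advised else 0).
# The coefficients are illustrative only, not estimates from the VLADIMIR data.
beta_time, beta_advice = -0.1, 0.8
routes = {
    "left": beta_time * 12 + beta_advice * 1,      # route recommended by guidance
    "straight": beta_time * 10 + beta_advice * 0,
    "right": beta_time * 15 + beta_advice * 0,
}
probs = mnl_probabilities(routes)
```

A back-propagation network trained on the same examples learns its own mapping from the decision parameters to the chosen route, without a utility function being specified in advance.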

From that experience has grown the conviction that neural network techniques can be a cost-effective tool for the analysis of even more complex data, obtained using the Leeds Advanced Driving Simulator, which was developed as a national research facility with the aid of EPSRC grants, the donation of a car by the Rover Group, and university funding. Under a current EPSRC grant, neural networks are being used to model driver decisions (eg gap acceptance at junctions); in due course this will be used to turn the other drivers in the simulated scene from dumb into intelligent clones. Other work is planned to see how psychometric measures are affected by the driving environment.

This use of neural networks as a modelling tool (as opposed to a classification tool) brought us up against the current controversy of statistical analysis versus neural networks (see for example Ripley [2]). The area of research that has exemplified many of the issues involved for us is short-term forecasting. Data from the City of Leicester, referred to above, provided early experience in comparing the effectiveness of neural networks with Box-Jenkins techniques, in particular the ARIMA model, for an urban area, and suggested that the two techniques had comparable performance. (In the ARIMA model, flow at a site is expressed as a linear function of flows at previous time steps for that and adjacent sites.) But it is the inter-urban context on which we subsequently focused more attention. Sensors on motorways every 500 metres or so provide information on traffic composition, speed, flow and occupancy at 1-minute intervals. Through involvement in the European Commission's DRIVE II project GERDIEN, we developed modules for forecasting flow, speed and occupancy 5, 15 and 30 minutes ahead. These were implemented on the GERDIEN trial site, a stretch of the A12 motorway near Rotterdam, by converting the trained neural networks into C code using the NeuralWare Designer Pack. Forecasts were made faster than real time (ie in less than the minute that elapsed before new data came in) for all 24 sites simultaneously. The key to achieving this performance was to develop a neural network structure that could be applied to all sites (so that only the network parameters varied from site to site), and to reduce the set of input variables to the most important ones.
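The linear model described in parentheses above can be sketched as an ordinary least-squares regression of the flow `horizon` steps ahead on lagged flows at the forecasting site and its neighbours. This is a simplified autoregressive stand-in rather than a full Box-Jenkins ARIMA model (there are no differencing or moving-average terms), and all function names are hypothetical. With 1-minute data, fitting one model per horizon (5, 15 and 30 steps) would give separate forecasters for each lead time.

```python
def solve(a, b):
    """Solve the square linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def lagged_features(sites, t, lags):
    """Intercept followed by the last `lags` values (t, t-1, ...) of every site's series."""
    row = [1.0]
    for series in sites:
        row.extend(series[t - k] for k in range(lags))
    return row


def fit_linear_forecaster(target, neighbours, lags=1, horizon=1):
    """Least-squares fit of target[t + horizon] on lagged flows at all sites."""
    sites = [target] + neighbours
    rows, ys = [], []
    for t in range(lags - 1, len(target) - horizon):
        rows.append(lagged_features(sites, t, lags))
        ys.append(target[t + horizon])
    k = len(rows[0])
    # Normal equations: (X^T X) beta = X^T y
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(rows, ys)) for i in range(k)]
    return solve(xtx, xty)


def forecast(coeffs, target, neighbours, t, lags=1):
    """Forecast the target flow `horizon` steps after time t, using data up to time t."""
    row = lagged_features([target] + neighbours, t, lags)
    return sum(c * v for c, v in zip(coeffs, row))
```

Because the coefficients are fixed, the lag structure is fixed too; that limitation is taken up again later in this article.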

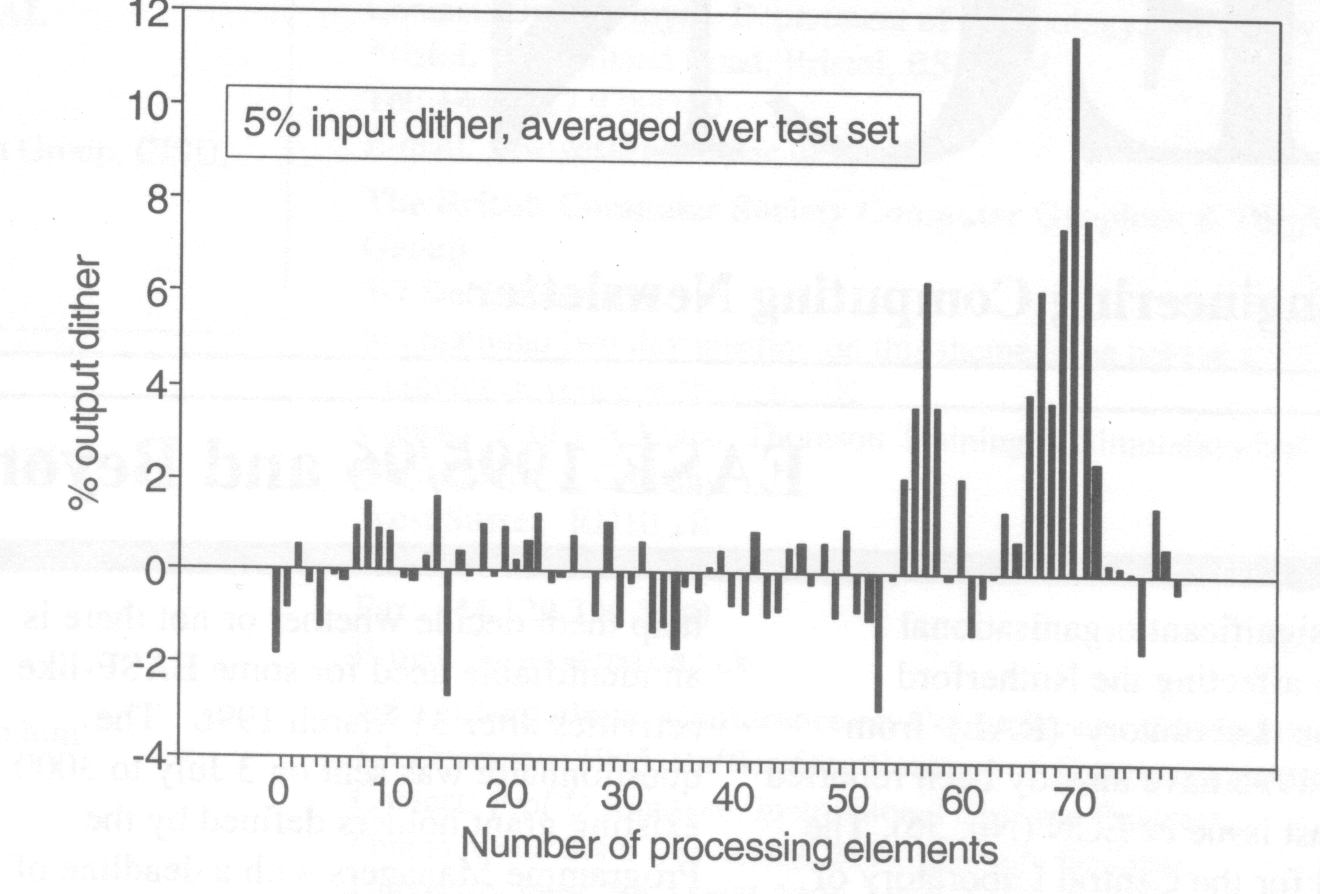

Establishing a procedure that could undertake this reductionist step was the most critical phase. Whereas statistical methods have well-established techniques for identifying which variables to discard (or include) to explain most of the variation in a dataset, no such techniques were established in the neural networks area. Fortunately, experience with the analysis of the route guidance data referred to above had already suggested an appropriate approach: vary the input data by a small amount, and see how much it affects the output. Because such sensitivity analysis had to be applied to all input variables many times, we called this technique dithering. With the aid of another EPSRC grant, the technique was further refined and the generality of its usefulness established. The ratio of change in output to change in input (the dither ratio) was used to reveal the most sensitive variables to use; an example of this is shown in Fig 1.
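The dithering procedure described above can be sketched as follows, assuming the trained network is available as a plain Python callable. The perturbation size, the use of absolute ratios and the averaging over sample points are our assumptions for illustration; the names `dither_ratios` and `rank_inputs` are hypothetical.

```python
def dither_ratios(model, samples, delta=0.01):
    """Mean absolute dither ratio |change in output| / |change in input| per input.

    model   : any callable mapping a list of input values to one output value
    samples : input vectors at which to dither (e.g. drawn from the training set)
    delta   : the small amount added to one input at a time
    """
    n_inputs = len(samples[0])
    totals = [0.0] * n_inputs
    for x in samples:
        base = model(x)
        for i in range(n_inputs):
            dithered = list(x)
            dithered[i] += delta  # perturb input i only
            totals[i] += abs(model(dithered) - base) / delta
    return [total / len(samples) for total in totals]


def rank_inputs(model, samples, names, delta=0.01):
    """Input names paired with their dither ratios, most sensitive first."""
    ratios = dither_ratios(model, samples, delta)
    return sorted(zip(names, ratios), key=lambda pair: pair[1], reverse=True)
```

Inputs at the bottom of the ranking are candidates for removal; the network would then be retrained on the reduced set and its forecasting accuracy checked.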

Whilst we had therefore overcome one of the limitations that neural networks were deemed to have by comparison with statistical techniques, no direct comparison with statistical methods could be undertaken in the GERDIEN trial. Instead, we turned our attention to data from France, for which an adaptive statistical model, called ATHENA, had already been developed by a team at INRETS, Paris. These data gave a different perspective from that of the Dutch site. Available only at 30-minute intervals, and only for the forecasting site and for sites on adjoining motorways some 30 km away, they made it much more difficult to get a satisfactory performance from the neural networks. Indeed, our first paper on the subject also reported poor performance with ARIMA-type models; but fine-tuning of the latter has since produced forecasting performance nearly as good as that of the ATHENA model. The question arose as to whether there was some structural explanation of why the ATHENA model was performing better than the alternatives. The answer appeared to lie in the fact that the first stage of the ATHENA model classified the data set into different components: first a July-August distinction, then a distinction by other time periods, in a manner suggested not by traffic engineering considerations but by a clustering algorithm. The actual forecasting model for each cluster was thereafter very simple.

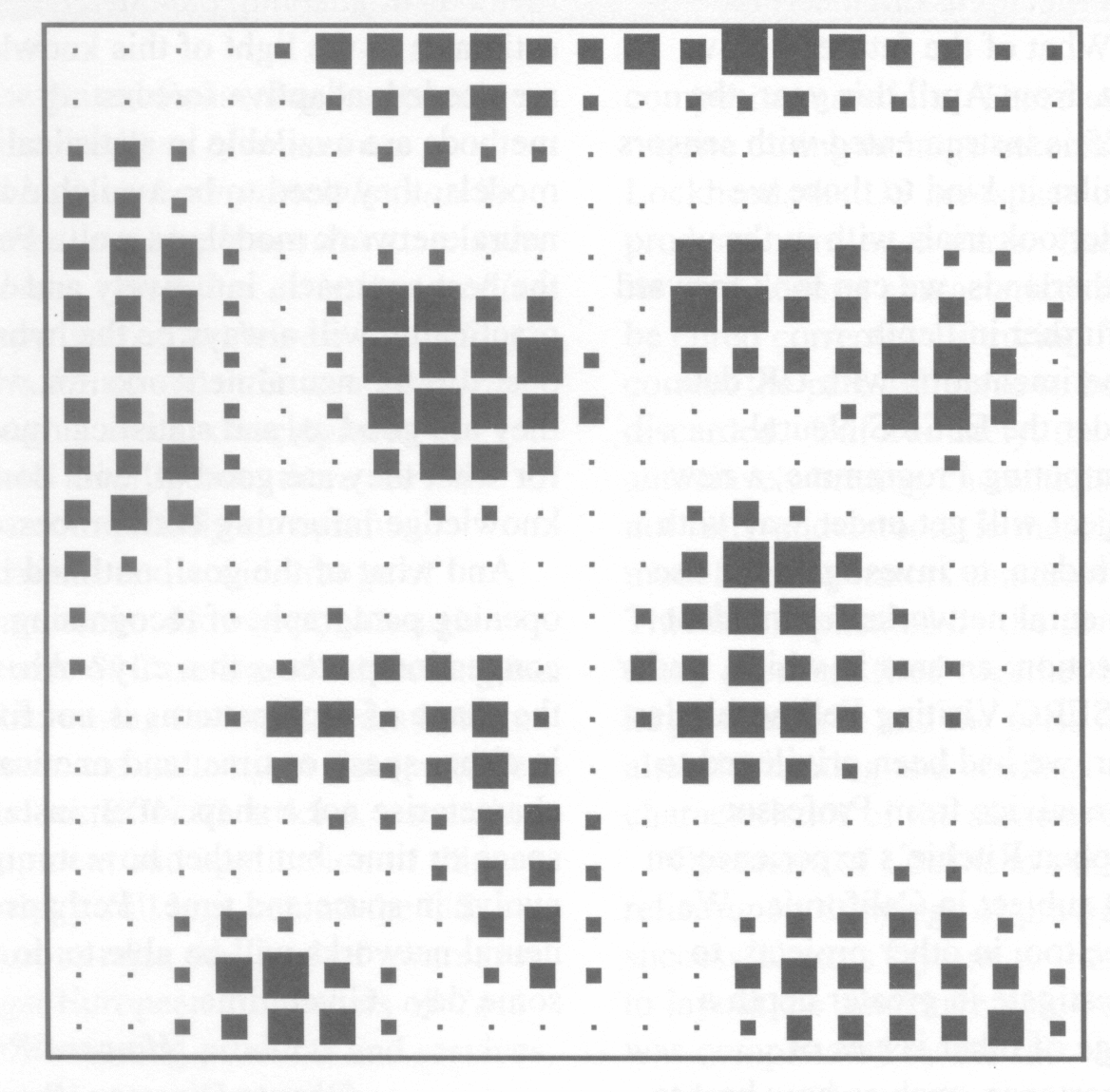

This led to the suggestion that perhaps the best approach overall might be a hybrid one: use neural networks for what they are best at, the classification stage, and statistical methods for what they are best at, the formal model of the relationships. Analyses conducted by a student on a work placement from the University of Twente have shown that the Kohonen net applied to these data produces a map (Fig 2) with strong clusters, and that ARIMA models developed for each cluster now yield an overall predictive performance better than ARIMA models alone, and nearly as good as the ATHENA model. Given that the latter was a site-specific approach, this suggests that generalisation to other situations may now be more readily feasible.
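A minimal one-dimensional Kohonen self-organising map, of the kind that could carry out the classification stage of the hybrid approach, might look like the sketch below. The linear decay schedules, Gaussian neighbourhood and random initialisation are common textbook choices assumed here, not details of the Twente analysis; a two-dimensional map such as Fig 2 would index nodes by grid position instead of by a single integer.

```python
import math
import random


def best_matching_unit(weights, x):
    """Index of the node whose weight vector is closest (squared Euclidean) to x."""
    return min(range(len(weights)),
               key=lambda j: sum((wd - xd) ** 2 for wd, xd in zip(weights[j], x)))


def train_som(data, n_nodes, epochs=50, lr0=0.5, radius0=None, seed=0):
    """Train a one-dimensional Kohonen map; returns the node weight vectors.

    data    : list of equal-length vectors (e.g. daily flow profiles)
    n_nodes : number of map nodes arranged in a line
    """
    rng = random.Random(seed)
    dim = len(data[0])
    weights = [list(rng.choice(data)) for _ in range(n_nodes)]
    radius0 = radius0 if radius0 is not None else max(1.0, n_nodes / 2)
    total_steps = epochs * len(data)
    step = 0
    for _ in range(epochs):
        order = list(data)
        rng.shuffle(order)
        for x in order:
            frac = step / total_steps
            lr = lr0 * (1 - frac)                    # learning rate decays linearly
            radius = max(0.5, radius0 * (1 - frac))  # neighbourhood shrinks
            bmu = best_matching_unit(weights, x)
            for j, w in enumerate(weights):
                # Gaussian neighbourhood measured along the map, not in data space
                h = math.exp(-((j - bmu) ** 2) / (2 * radius ** 2))
                for d in range(dim):
                    w[d] += lr * h * (x[d] - w[d])
            step += 1
    return weights
```

After training, each profile would be assigned to its best-matching node, and a separate ARIMA-type model fitted to the profiles in each cluster.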

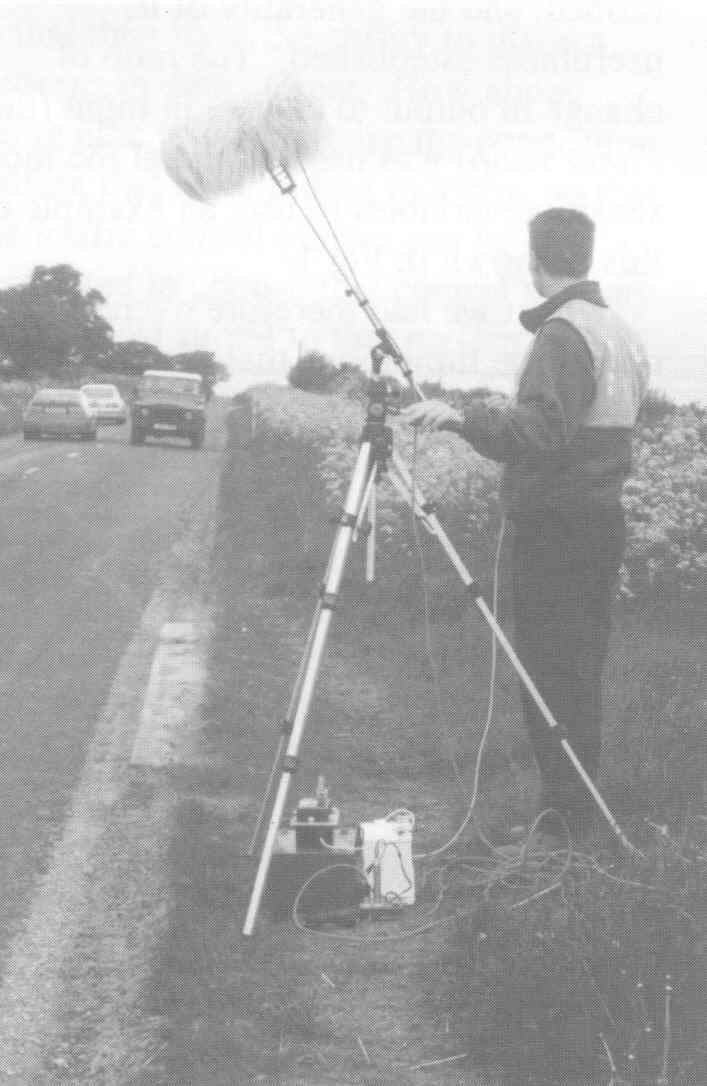

The use of Kohonen nets was not, of course, the first time that techniques other than back-propagation had been tried in our work. Usually they have not been very promising; but in another area, that of audio-monitoring, learning vector quantisation is proving particularly useful. In this study, also funded by EPSRC, the objective is to count and classify vehicles from the sounds they make, with the aim of developing a suitable sensor. Small-scale pilot trials (Fig 3) have yielded over 95% accuracy in classifying vehicles into (at present) four types. (As we went to press we learned that Dr Stephen Hadland, the research fellow employed on the audio-monitoring project, had died in a road traffic accident.) Essential to this success has been the pre-processing stage, which is very important in all application areas. In this case the pre-processing used an auditory model developed at the Speech Systems Laboratory of the Department of Psychology at the University of Leeds; the project also harnesses appropriate skills from the Department of Electronic and Electrical Engineering.
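Learning vector quantisation (the LVQ1 variant is shown) keeps a few labelled prototype vectors and moves the nearest one towards a training example when their labels agree, and away from it when they differ. The sketch assumes feature vectors have already been produced by a pre-processing stage such as the auditory model described above; the two-dimensional features, class names and learning-rate schedule are hypothetical.

```python
import math
import random


def nearest(prototypes, x):
    """Index of the prototype closest to x (Euclidean distance)."""
    return min(range(len(prototypes)), key=lambda i: math.dist(prototypes[i][0], x))


def train_lvq1(samples, labels, prototypes_per_class=1, epochs=30, lr0=0.1, seed=0):
    """Return a list of [weight_vector, class_label] prototypes trained with LVQ1."""
    rng = random.Random(seed)
    prototypes = []
    for c in sorted(set(labels)):
        # initialise each class's prototypes from randomly chosen members of that class
        members = [s for s, l in zip(samples, labels) if l == c]
        for _ in range(prototypes_per_class):
            prototypes.append([list(rng.choice(members)), c])
    data = list(zip(samples, labels))
    total_steps = epochs * len(data)
    step = 0
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            lr = lr0 * (1 - step / total_steps)  # learning rate decays linearly
            w, c = prototypes[nearest(prototypes, x)]
            sign = 1.0 if c == y else -1.0       # attract if correct, repel if wrong
            for d in range(len(w)):
                w[d] += sign * lr * (x[d] - w[d])
            step += 1
    return prototypes


def classify(prototypes, x):
    """Label of the nearest prototype."""
    return prototypes[nearest(prototypes, x)][1]
```

`math.dist` requires Python 3.8 or later.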

What of the future? Now that, since April this year, the M25 has been instrumented with sensors similar in kind to those used in our trials in the Netherlands, we can look forward to further in-depth experimentation with UK data. Under the EPSRC Neural Computing Programme, a new project will get under way with such data to investigate the use of neural networks for incident detection, an area in which, under an SERC Visiting Fellowship last year, we were privileged to benefit from Professor Stephen Ritchie's experience of the subject in California. In other projects we also hope to investigate in greater depth a range of other important issues, such as how best to allow for the effects of dead detectors, how forecasting performance degrades over time, and the pros and cons of different techniques, with a view to implementation.

What of the issue of statistics versus neural networks? It is clear that both kinds of technique require considerable tuning, and attention by experts, to get the best out of them. Overtness of model structure, often claimed as an advantage of statistical models, is not necessarily a blessing. Consider motorway traffic: if speeds halve, it will take traffic twice as long to reach a given point. So the lags built into ARIMA-type models should not be fixed, but speed-dependent! Statistical models do not necessarily reflect the realities of traffic dynamics, nor are they necessarily able to cope with all the different kinds of data available. That provides some excuse for using models, such as neural networks, that learn from the data how outputs are related to inputs, though models that do reflect the underlying processes are clearly to be preferred if they can be built. Nor is comparative performance alone necessarily the main consideration: ease of implementation and of updating parameters also come into it. Since with short-term forecasts one very soon has feedback on the adequacy of the forecast, methods of adapting parameter estimates in the light of this knowledge are needed; adaptive forecasting methods are available for statistical models, and they need to be available for neural network models as well. Perhaps the best approach, intuitively and practically, will always be the hybrid one: use neural networks for what they are good at and statistical models for what they are good at, with domain knowledge informing both processes.
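The speed-dependent lag argued for above can be made concrete: the number of data intervals it takes traffic to cover the distance between an upstream detector and the forecasting site is distance divided by speed. The helper names and the 5 km spacing in the usage note are hypothetical.

```python
def travel_time_lag(distance_km, speed_kmh, interval_min=1.0):
    """Lag, in whole data intervals, for traffic to travel distance_km at speed_kmh."""
    if speed_kmh <= 0:
        raise ValueError("speed must be positive")
    minutes = 60.0 * distance_km / speed_kmh
    # round to the nearest interval, but never use a lag shorter than one interval
    return max(1, round(minutes / interval_min))


def upstream_input(upstream_flows, t, distance_km, speed_kmh, interval_min=1.0):
    """Upstream flow that is just arriving at the forecasting site at time t."""
    return upstream_flows[t - travel_time_lag(distance_km, speed_kmh, interval_min)]
```

With 1-minute data and sites 5 km apart, a speed of 100 km/h gives a lag of 3 intervals, while 50 km/h gives 6: halving the speed doubles the lag, as described in the text.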

And what of the goal, outlined in the opening paragraph, of recognising congestion patterns in a city? Ah, but the shape of such patterns is not fixed, in either space or time; and one wants to characterise not a shape at an instant of space or time, but rather how it might evolve in space and time. Perhaps neural networks will be able to do it some day. Given time.

[1] Clark, S.D., Dougherty, M.S. and Kirby, H.R. (1993). The use of neural networks and time series models for short term traffic forecasting: a comparative study. In: Transportation Planning Methods, Proceedings, PTRC 21st Summer Annual Meeting, held in Manchester, September 1993. London, PTRC Education and Research Services Ltd.

[2] Ripley, B.D. (1994). Neural networks and related methods for classification (with discussion). J. Roy. Stat. Soc., B56(3), pp 409-456.

Dougherty, M.S., Kirby, H.R. and Boyle, R.D. (1993). The use of neural networks to recognise and predict traffic congestion. Traffic Engineering and Control, 34(6), pp 311-318.

Dougherty, M.S., Kirby, H.R. and Boyle, R.D. (1994). Using neural networks to recognise, predict and model traffic. In: Bielli, M., Ambrosino, G. and Boero, M. (eds), Artificial Intelligence Applications to Traffic Engineering. VSP, Utrecht, The Netherlands, pp 233-250.

Dougherty, M.S. and Joint, M. (1992). A behavioural model of driver route choice using neural networks. In: Ritchie, S.G. and Hendrickson, C.T. (eds), Conference Preprints, International Conference on Artificial Intelligence Applications in Transportation Engineering, San Buenaventura, California, USA, June 1992. Inst. of Transp. Studies, Irvine, California, pp 99-110.

Case-Based Reasoning has been described as one of those rare technologies whose principles can be explained in a single sentence: to solve a problem, remember a similar problem you have solved in the past and adapt the solution to solve the new problem. Although it is a recent development in Artificial Intelligence, there are now many commercially successful applications and a wide range of software tools. However, although awareness of Case Based Reasoning has been increasing in recent years in both academia and industry in Europe (see the earlier article in ECN 52), research, development and application of this technology still lag behind the US. To raise awareness amongst European companies of Case Based Reasoning products and services, and help them understand the advantages of this new technology, the European Commission has therefore established a project, CASTING, to analyse the demand for Case Based Reasoning and identify appropriate Case Based Reasoning products and solutions available in Europe today.

Case Based Reasoning technology is a unique problem-solving technique that allows Knowledge Based or Expert Systems to be developed more cost-effectively, and in a much shorter time, than many of the methods currently in use in European industry. The technology has proved its pedigree in recent years throughout the United States (for example at American Airlines, DEC, General Motors, Lockheed and Westinghouse) and at many major universities, but it is relatively new to European companies. Knowledge-Based Systems are one of the success stories of Artificial Intelligence research; Case Based Reasoning moves the frontiers of this research even further forward, enabling developers to create accurate decision support systems and automate problem-solving processes based on the analysis of previous cases and examples.

For example, one of the first commercially fielded Case Based Reasoning applications was developed by Lockheed in Palo Alto. Modern aircraft contain many components made from composite materials, which must be cured in large autoclaves. Lockheed, the US aerospace company, produces many such parts. Each part has its own heating characteristics and must be cured correctly; if it is not, the part has to be discarded. Unfortunately, the autoclave's heating characteristics are not fully understood (ie there is no model that operators can draw upon). This is complicated by the fact that many parts are cured together in a single large autoclave, and the parts interact to alter its heating and cooling characteristics.

Operators of Lockheed's autoclaves relied upon drawings of previous successful parts layouts to decide how to lay out the autoclave. However, layouts were never identical, because parts were required at different times and their designs were constantly changing. Consequently operators had to select a successful layout they thought was a close match and adapt it to the current situation.

This closely resembled the Case Based Reasoning paradigm, and when Lockheed decided to implement a Knowledge-Based System to assist the autoclave operators, they chose Case Based Reasoning. Their objectives were to:

Development of CLAVIER started in 1987, and the system has been in regular use since the autumn of 1990. CLAVIER searches a library of previously successful autoclave layouts. Each layout is described in terms of:

CLAVIER acts as a collective memory for Lockheed and, as such, provides a uniquely useful way of transferring expertise between autoclave operatives. In particular, the use of Case Based Reasoning made the initial knowledge acquisition for the system easier. Indeed, it is doubtful whether a model-based system could have been developed at all, since operatives could not say why a particular autoclave layout was successful. CLAVIER also demonstrates the ability of Case Based Reasoning systems to learn: its library has grown from 20 to several hundred successful layouts, and its performance has improved to the point where it now retrieves a successful autoclave layout 90% of the time.
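The retrieve-and-retain cycle that CLAVIER illustrates can be sketched as a small case library with weighted nearest-neighbour retrieval. The feature names, weights and similarity measure below are hypothetical, and CLAVIER's actual matching scheme is not described here; the adaptation step, which is domain-specific, is omitted.

```python
import math


def similarity(a, b, weights):
    """Weighted similarity in (0, 1]; identical feature values give 1."""
    distance = math.sqrt(sum(w * (a[f] - b[f]) ** 2 for f, w in weights.items()))
    return 1.0 / (1.0 + distance)


class CaseLibrary:
    def __init__(self, weights):
        self.weights = weights  # feature name -> relative importance (hypothetical)
        self.cases = []         # list of (problem features, solution)

    def retrieve(self, problem, k=1):
        """Return the k stored cases most similar to `problem`."""
        ranked = sorted(
            self.cases,
            key=lambda case: similarity(problem, case[0], self.weights),
            reverse=True,
        )
        return ranked[:k]

    def retain(self, problem, solution, successful):
        """Add a case only if its (adapted) solution worked, so the library learns."""
        if successful:
            self.cases.append((problem, solution))
```

Retaining only successful cases is what lets such a library grow, as CLAVIER's did, while keeping every stored layout a trustworthy starting point.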

The awareness project, CASTING, will initially conduct a market research programme among European industries so that it can develop an appropriate seminar schedule aimed at stimulating industrial demand for Case Based Reasoning technology. The seminars will be held throughout Europe, will explain Case Based Reasoning solutions and their methodologies, and will discuss the relevance of this technology for Europe. To help companies identify suitable Case Based Reasoning products and services, the project will also conduct a technical analysis of existing packages and invite Case Based Reasoning vendors to demonstrate the appropriateness of their tools and services across a wide range of application areas.

A broad range of European companies will be invited to participate in the initial analysis stage of the project. If your company would like to be included, please contact me.

The Construct IT Centre of Excellence, based at the University of Salford has become the implementation body for the Government's Information Technology Strategy for the UK Construction Industry.

At the launch of Construct IT on 24 May at Althorp Hall, the Minister of State for Construction and Planning at the Department of the Environment, the Viscount Ullswater, endorsed the Centre. The Minister said: "The strategy is to give focus to the next phase of development, to bring forward the common infrastructure necessary to support fully integrated systems and to secure the rapid, accurate and auditable flow of information related to projects ... it is proposed to implement the strategy by ... the creation of a Centre of Excellence."

He went on to say: "This implementation body has been styled the Centre of Excellence. I am particularly delighted that the team will be led by Geoff Topping of Taylor Woodrow."

Geoff set up the Centre with Government backing to address this need. He is supported by an administrative team based at Salford, and has formed a Management Board with representatives from industry, clients, professional and research institutions and the IT industry.

At the launch of Construct IT, Geoff gave the industry's view and stressed the need for industry to be well informed and to work together. He extended the points made by the Minister, saying: "The emergence of advanced technologies and the greater spirit of collaboration within the industry is creating a major new opportunity to do, not just talk about, something practical for the industry. It can, in part, be achieved by identifying and sharing best practices from our own industry and others; and we must ensure that standards and policies will work."

The launch was sponsored by BT in conjunction with the Department of the Environment and was attended by 160 leading figures from construction and consulting companies. It was set in the sumptuous surroundings of Althorp Hall, home to the Spencer family. In addition to the speeches there was a Solutions Showcase, demonstrating some of the latest available technology and showing how new and emerging technologies can become a reality.

The Construct IT Centre of Excellence is a collaborative venture between industry, clients, professional and research institutions and the IT industry. The Centre has the support of the Department of the Environment, and its contributing contractors and consultants include: Alfred McAlpine, AMEC, Balfour Beatty, Bovis, Costain, Laing, Tarmac, Taylor Woodrow, Trafalgar House, Tilbury Douglas, Building Design Partnership and Bucknall Austin.

Members from the IT industry include UNISYS and Engineering Technology and from the communications industry, BT. The supporting associations and professional institutions are: BMP, CIRIA, CIOB, Construction IT Forum, EDICON, RIBA and the RICS. Universities and research institutes include the Building Research Establishment and the Universities of Loughborough, Nottingham, Reading, Salford, Sheffield, Strathclyde and UCL.

There are many activities planned for the Centre. A series of Best Practice Seminars and reports starting in September will kick off with the results of a benchmarking exercise on the use of IT between suppliers and contractors. The second in the series will focus on the relationship with clients. A major activity will be coordinating the implementation of projects referred to in the Construct IT Report. The Centre of Excellence will be working closely with Government to ensure that these projects reach successful implementation.

A database of research projects in the field of Construction IT is being compiled for the World Wide Web and will be available in the autumn. A detailed research project into the development of an integrated project database and an integrated industry knowledge base has also just commenced.

In addition, three international conferences are planned. The first, on Construction Futures, hosted by the University of Reading in conjunction with CIB W82, will be held in Oxford in October 1995. The second will be held in Glasgow in August 1996 in conjunction with CIB W65, and the third, hosted by the University of Loughborough in conjunction with CIB W78, will be held in London.

In October a part-time Modular Masters programme in Construction IT will commence at the University of Salford, with industrially sponsored delegates. The objective of the course will be to give delegates a full working knowledge of efficient construction business processes and how relevant and cost effective information technology can be harnessed to the benefit of the Construction industry.

The administrative centre is based at the University of Salford and if you would like to find out more about the Centre or become an Associate, please contact us.

The London Stock Exchange (LSE) has nine sites in central London, with further properties throughout the country. The use of these premises varies considerably, and a high level of resilience and redundancy is required. The building services include computer systems, heating, ventilation and air conditioning; in total there are over 10,000 items of plant. Throughout the life cycle of each building, equipment is subject to alteration and replacement, so an up-to-date and accurate record must be maintained.

The construction industry has a long history of difficulties with recorded information. Hand-over documentation varies widely when a large number of companies are involved, and much of it is not in a form directly usable by the building operator, so accuracy is compromised. The statutory requirements of the Health and Safety at Work Act, the Work Equipment Regulations and the soon to be introduced Construction (Design and Management) Regulations dictate that accurate records of all systems be maintained.

This problem is not unique to the LSE: engineering industries also face major problems with information control. The magnitude of this task led to the formation of the AMDECS (Asset Management and Data Exchange for Complex Engineering Systems) Project, partially funded by the Department of Trade and Industry, with the collaboration of industrial partners facing similar problems and with technical advice and support from the Control Systems Research Centre. South Bank University is the academic partner; the industrial partners are Facet Ltd, Haden Young Ltd, Hallmark Facilities Management Ltd, Wix McLelland and the LSE.

The aim of AMDECS is to produce a set of generic tools providing a common framework for data exchange and modelling. Such a platform would facilitate the seamless exchange of information between different applications throughout the life cycle of the various items of plant.

The research and development undertaken for AMDECS has posed many challenges for software engineering. The software being developed fully conforms to STEP (ISO 10303), the standard of the International Organization for Standardization.

For this project the data model was based mainly on the information of interest to the consortium partners, although due consideration was given to compatibility with third-party domains. However, this approach to data modelling does not preclude accommodating existing sector-specific application protocols. Thus the tools developed for AMDECS support model evolution and migration while remaining generically applicable across all domains, irrespective of the type of data model or application sector. The data model is created using NESSIE, a NIAM modelling tool developed by South Bank University in a previous research project sponsored by the DTI and SERC.

Within the AMDECS framework the EXPRESS schema generated by NESSIE is passed to an SQL compiler that converts it into two sets of ANSI SQL statements. The first set is used to generate the central database, preserving all the links and relationships specified in the graphic data model. The second set is normally activated when data exchange becomes necessary: it extracts the required data from the database and outputs them to a physical file conforming to ISO 10303 Part 21.
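To illustrate the two sets of SQL statements, the sketch below derives table definitions and extraction queries from a simplified entity description, using Python's built-in `sqlite3` module for a runnable demonstration. The dictionary stands in for a parsed EXPRESS schema; the entity names, type mapping and foreign-key convention are assumptions, not the AMDECS compiler's actual rules, and EXPRESS features such as inheritance and aggregates are ignored.

```python
import sqlite3

# Hypothetical, simplified stand-in for the entities in an EXPRESS schema:
# entity -> list of (attribute, type). A type that names another entity is a reference.
SCHEMA = {
    "plant_room": [("name", "STRING")],
    "plant_item": [("tag", "STRING"), ("rating_kw", "REAL"), ("located_in", "plant_room")],
}
SQL_TYPES = {"STRING": "TEXT", "REAL": "REAL", "INTEGER": "INTEGER"}


def create_statements(schema):
    """First set: one CREATE TABLE per entity, with references kept as foreign keys."""
    statements = []
    for entity, attributes in schema.items():
        columns = ["id INTEGER PRIMARY KEY"]
        for name, typ in attributes:
            if typ in schema:
                columns.append(f"{name} INTEGER REFERENCES {typ}(id)")
            else:
                columns.append(f"{name} {SQL_TYPES[typ]}")
        statements.append(f"CREATE TABLE {entity} ({', '.join(columns)})")
    return statements


def extract_statements(schema):
    """Second set: one SELECT per entity, run only when data must be exported."""
    return {
        entity: f"SELECT id, {', '.join(name for name, _ in attributes)} FROM {entity} ORDER BY id"
        for entity, attributes in schema.items()
    }


conn = sqlite3.connect(":memory:")
for statement in create_statements(SCHEMA):
    conn.execute(statement)
conn.execute("INSERT INTO plant_room (id, name) VALUES (1, 'Plant room 3')")
conn.execute("INSERT INTO plant_item (id, tag, rating_kw, located_in) VALUES (2, 'AHU-07', 15.0, 1)")
rows = conn.execute(extract_statements(SCHEMA)["plant_item"]).fetchall()
```

The extracted rows would then be written out in Part 21 form for exchange.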

AutoCAD has been enhanced to communicate on-line with the central database, which can thus be populated, modified or purged via this CAD interface. Consistent with the requirement that the platform be generic, the CAD interface is user-configurable so that it can operate with different data models.

A gateway has been produced to import information from a STEP file into the central database. The gateway lets the user deselect items or attributes in the data model that should not be imported. Each such configuration can be stored as a process for a specific source of STEP files and recalled to import further files from the same source. This control mechanism prevents the central database from being corrupted by overwrites, a safeguard that is critical for protecting reference data such as the engineering design specification.
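How the AMDECS gateway stores its configurations is not stated, so the following is a hypothetical Python illustration of a reusable, per-source import process. It assumes the STEP file has already been parsed into simple dictionaries; the class, functions, source name and entity names are all invented for the example.

```python
from dataclasses import dataclass, field


@dataclass
class ImportProcess:
    """Hypothetical stored gateway configuration for one source of STEP files."""

    source: str
    skip_entities: set = field(default_factory=set)
    # entity name -> attribute names that must not be imported
    skip_attributes: dict = field(default_factory=dict)

    def apply(self, instances):
        """Keep only the entities and attributes this process lets through."""
        kept = []
        for inst in instances:
            entity = inst["entity"]
            if entity in self.skip_entities:
                continue  # e.g. protected reference data is never overwritten
            dropped = self.skip_attributes.get(entity, set())
            attrs = {k: v for k, v in inst["attrs"].items() if k not in dropped}
            kept.append({"entity": entity, "attrs": attrs})
        return kept


# Stored processes, keyed by source, so later files from the same source
# are filtered in exactly the same way.
PROCESSES = {}


def save_process(process):
    PROCESSES[process.source] = process


def import_from(source, instances):
    """Filter already-parsed instances with the process stored for `source`."""
    return PROCESSES[source].apply(instances)


save_process(ImportProcess(
    source="supplier-a",
    skip_entities={"design_spec"},
    skip_attributes={"pump": {"design_flow"}},
))
parsed = [
    {"entity": "pump", "attrs": {"tag": "P-101", "design_flow": 3.0, "supplier_ref": "X9"}},
    {"entity": "design_spec", "attrs": {"ref": "DS-1"}},
]
print(import_from("supplier-a", parsed))
```

Keeping the filter as stored data rather than as a one-off user choice is what lets a later file from the same supplier be imported without the design specification being overwritten by accident.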

As part of AMDECS, an additional interface has been produced to read and write STEP files for the in-house Building Maintenance Management Systems. Since most commercial database management systems can both import and export ASCII-delimited files, an ASCII translator module has been developed which translates an ASCII-delimited data file into a STEP physical file and vice versa.
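The translator is not described further, so this is a minimal sketch of the idea, assuming a delimited file whose first row names the attributes and treating every field as a string (a real translator would follow the attribute types in the schema). The entity name ASSET and all function names are hypothetical. Empty fields map to the Part 21 unset marker `$` and embedded apostrophes are doubled, so for this simple case the round trip back to delimited text reproduces the input.

```python
import csv
import io


def csv_to_step(text, entity, delimiter=","):
    """ASCII-delimited text (first row = attribute names) -> Part 21 instances.

    Every field is treated as a string; an empty field becomes '$' (unset).
    """
    reader = csv.reader(io.StringIO(text), delimiter=delimiter)
    header = next(reader)
    lines = []
    for n, row in enumerate(reader, start=1):
        args = ",".join("'" + v.replace("'", "''") + "'" if v else "$" for v in row)
        lines.append(f"#{n}={entity.upper()}({args});")
    return header, lines


def split_args(arg_text):
    """Split a flat Part 21 argument list made of strings and '$' only."""
    values, i = [], 0
    while i < len(arg_text):
        if arg_text[i] == "$":
            values.append("")
            i += 1
        elif arg_text[i] == "'":
            j, buf = i + 1, []
            while True:
                if j >= len(arg_text):
                    raise ValueError("unterminated string")
                if arg_text[j] == "'":
                    if arg_text[j + 1 : j + 2] == "'":  # doubled quote = literal '
                        buf.append("'")
                        j += 2
                        continue
                    break
                buf.append(arg_text[j])
                j += 1
            values.append("".join(buf))
            i = j + 1
        else:
            raise ValueError(f"unsupported value at position {i}")
        if i < len(arg_text):
            if arg_text[i] != ",":
                raise ValueError(f"expected ',' at position {i}")
            i += 1
    return values


def step_to_csv(lines, header, delimiter=","):
    """Part 21 instances -> ASCII-delimited text with the given header row."""
    out = io.StringIO()
    writer = csv.writer(out, delimiter=delimiter, lineterminator="\n")
    writer.writerow(header)
    for line in lines:
        args = line[line.index("(") + 1 : line.rindex(")")]
        writer.writerow(split_args(args))
    return out.getvalue()


text = "tag,location\nP-101,Plant room 2\nP-102,O'Neill wing\nP-103,\n"
header, lines = csv_to_step(text, "asset")
print("\n".join(lines))
print(step_to_csv(lines, header) == text)
```

Because Part 21 attributes are positional, the header row is kept separately here; in a schema-driven translator the attribute names would come from the EXPRESS entity definition instead.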

The LSE engineers first developed their data model using NESSIE. This model, represented as an EXPRESS schema, was passed to the SQL compiler to generate the LSE database, and the default values in the database were then modified to suit LSE's target applications. Using the translator and the gateway, information from the existing database was imported into the LSE database; supplier information can be imported in the same way as required. AutoCAD-related class information was added to one of the system tables in the LSE database to enable online user control through the AutoCAD menu-based command system, which acts as the host user interface. Using the CAD interface, objects in existing drawings can be associated with specific information in the database, or a new instance can be created in the database to describe an object. Updates to drawings arising from physical modification or expansion of the plant can also be accommodated. The integrated architecture thus offers flexible and efficient asset maintenance and data exchange for complex database applications.

The outline proposals received against the first calls in the Aerospace, Construction and Process Industries Sector Targets have been assessed for relevance against IMI criteria, and around 25% have been invited to submit full proposals. A summary of the outcome of the assessment exercise is shown in Table 1 below.

These full proposals are now in the final stages of consideration, and the first projects are expected to be under way in July.

| Sector | Number of Proposals | Value £M | Public Sector Provision £M | Industry Provision £M | Number of Academic Institutions | Number of Industrial Partners |
|---|---|---|---|---|---|---|
| Aerospace | 31 | 21.3 | 8.7 | 12.6 | 18 | 38 |
| Construction | 23 | 6.3 | 3.3 | 2.8 | 19 | 94 |
| Process Industries | 16 | 20.7 | 11.7 | 9.0 | 13 | 60 |
| Total | 70 | 48.1 | 23.7 | 24.4 | 50 | 192 |

The breakdown of outline proposals received against the first call for the Road Vehicles Sector Target is shown in Table 2.

| Sector | Number of Proposals | Value £M | Public Sector Provision £M | Industry Provision £M | Number of Academic Institutions | Number of Industrial Partners |
|---|---|---|---|---|---|---|
| Road Vehicles | 43 | 29 | 13 | 16 | 22 | 124 |

These outlines are now being assessed by the Sector Programme Manager, Chris Cernes, and those satisfying the criteria will be invited to submit full proposals.

As a result of competitive tender, Praxis plc (part of Touche Ross) has been awarded a contract to carry out a scoping study to identify and prioritise the telecommunications requirements for manufacturing within the UK. The study will include the needs of the four IMI Sector Targets which have already been launched (Aerospace, Construction, Process Industries and Road Vehicles). The results of the study will be used to determine the research areas within the telecommunications sector which should be supported.

Professor John O'Reilly from UCL will present the revised case for a telecommunications sector to the IMI Management Committee at its meeting on 17 July.

Anyone who wishes to make an input to the scoping study should contact me.

The Economic and Social Research Council (ESRC) has recently announced that, as part of its contribution to the IMI, it is commissioning a Resource Centre for Business Processes that will be a network centred on the University of Warwick. The Centre was selected through an open competition, and its formal start date was 1 April 1995.

The Business Processes Resource Centre will develop research capacity and facilitate knowledge exchange through services to researchers and business. These services are expected to include:

The Director of the Business Process Resource Centre is Dr J Daniel Park. He can be contacted by post at: Warwick Manufacturing Group, Department of Engineering, University of Warwick

With the announcement by the ESRC of the Business Processes Resource Centre, a series of IMI meetings is planned to report back to the community on IMI work related to business processes and to seek input from the community on the direction of generic work on business processes.

The first meeting will be held on Wednesday 26 July 1995 at the Queen Elizabeth II Conference Centre, Westminster. The provisional agenda includes a keynote address, reports from each of the current IMI industrial sectors (aerospace, construction, process industries and road vehicles) on their Business Process Frameworks and reports from selected UK groups outlining their approaches to business process analysis. An invitation has been sent to those people who are on the IMI database and have indicated an interest in business processes.

There has already been a second call for outline proposals for the Aerospace, Construction and Process Industries Sector Targets (the deadline for calls directed at the life-science aspects is 15 August).

General enquiries, requests for (outline proposal) forms and similar matters should be directed to me.

The PPECC Steering Group met recently to finalise the annual report and develop the programme of activities for the next year.

The Group agreed the contents of the PPECC Annual Report for 1994/95, which is scheduled for release to Club members in July. (Non-members can obtain a copy of the Annual Report by request to the PPECC secretary.)

The Steering Group is looking to increase the industrial representation within the membership and the Steering Group during the next year. A recruitment campaign will be launched to increase membership in this area. Recommendations for potential new industrial Steering Group members are welcome.

The initial plans for the next series of seminars and courses were also discussed; full details will be announced once these have been organised. Topics under consideration include parallel languages, thread-based programming, the user impact of parallel and distributed operating systems, and new coordination of UK-based parallel groups and activities.

CAPTools, a software package for computer-aided parallelisation developed by Prof M Cross and colleagues at the University of Greenwich, has just been released at Beta level for researchers to review. It can be downloaded from: http://www.gre.ac.uk/~ma09/captools.html

The Club now has a selection of reports ready for release to Club members and non-members. This set of reports includes:

Approximately 36 people attended this seminar, which took place on a beautiful sunny day at RAL. It covered both explicit and implicit schemes, with implicit schemes given more extensive coverage.

After a very uplifting introduction by the chairman, Prof Gosman, the proceedings began with two lectures outlining the latest methods for solving large systems of equations using explicit schemes. Dr Reid gave a brief general overview of frontal/multifrontal and spectral methods for solving such systems.

This was followed by Dr Sweby, who concentrated on explicit schemes specific to CFD and gave a very good overview of the TVD family of schemes, such as FCT, MUSCL and UNO.

After the customary coffee break, the morning session concluded with an excellent introduction to iterative schemes for CFD problems by Prof Van der Vorst. He concentrated on the advantages and disadvantages of what he termed the Rolls-Royce of such schemes, GMRES. The most salient points from the ensuing discussion were that in most cases iterative schemes need preconditioning to work well, and that, although they are generally less stable and robust than the explicit ones, they are extremely fast for most typical CFD problems.

After a good lunch, and having lost a few delegates to the sunshine, the programme reconvened with a presentation by Dr Babcock on factored and unfactored methods for aerospace problems. The scheme described appeared to be a special case of the more general GMRES method, differing in the algorithm used to precondition the equations. An advantage of this scheme is that it parallelises more efficiently than other iterative schemes.

Prof Van der Vorst's second lecture followed, continuing his theme of implicit schemes with a discussion of GMRES-related schemes such as CG, BiCGS, LSQR and ICGS. The lecture gave not only good background information but also excellent reviews of their convergence and stability criteria. It is perhaps regrettable that some of the graphs used were not included in the handout, as they would have been excellent reference material. However, a good list of general references was given, together with a WWW URL explaining how to obtain templates for the various schemes presented. This was a most valuable piece of information and an example that other speakers would do well to follow.
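The discussion stressed that iterative schemes usually need preconditioning. As a generic illustration, not taken from any of the talks, here is a minimal sketch of conjugate gradients (CG, one of the schemes listed above) with the simplest possible preconditioner, the matrix diagonal (Jacobi). It assumes a symmetric positive definite matrix stored as dense Python lists; the function names are ours, and production CFD codes would use sparse storage and stronger preconditioners.

```python
import math


def matvec(A, x):
    """Dense matrix-vector product (a real CFD code would use a sparse format)."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def pcg(A, b, tol=1e-10, max_iter=100):
    """Jacobi-preconditioned conjugate gradients for symmetric positive definite A.

    Returns (x, iterations). The preconditioner M = diag(A) is applied as
    z = M^-1 r; stronger choices such as incomplete factorisation are
    common in practice.
    """
    n = len(b)
    b_norm = math.sqrt(dot(b, b))
    if b_norm == 0.0:
        return [0.0] * n, 0
    inv_diag = [1.0 / A[i][i] for i in range(n)]
    x = [0.0] * n
    r = list(b)  # residual b - A x, with x starting at zero
    z = [d * ri for d, ri in zip(inv_diag, r)]
    p = list(z)
    rz = dot(r, z)
    for k in range(1, max_iter + 1):
        Ap = matvec(A, p)
        alpha = rz / dot(p, Ap)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        if math.sqrt(dot(r, r)) <= tol * b_norm:
            return x, k
        z = [d * ri for d, ri in zip(inv_diag, r)]
        rz_new = dot(r, z)
        beta = rz_new / rz
        p = [zi + beta * pi for zi, pi in zip(z, p)]
        rz = rz_new
    return x, max_iter


A = [[4.0, 1.0, 0.0], [1.0, 3.0, 1.0], [0.0, 1.0, 2.0]]
b = [1.0, 2.0, 3.0]
x, iterations = pcg(A, b)
print(x, iterations)
```

In exact arithmetic CG terminates in at most n steps for an n by n system; the preconditioner matters for the large, ill-conditioned systems typical of CFD, where it can cut the iteration count dramatically.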

Prof Toro then presented high-resolution schemes and adaptivity. He gave an excellent example of what CFD does best: generating detailed flow visualization. The video, which showed a CFD simulation of a dam breached at its centre and the subsequent movement of the liquid mass, was particularly impressive.

The programme of presentations was closed by Dr Lai, who lectured on quasi-Newton methods for problems involving viscous-inviscid coupling. Numerical schemes for this interesting problem were presented and discussed in terms of numerical stability and computational expense.

In the concluding discussion, the most significant point, raised by most of the academics present, was their apprehension about future funding for this and similar work.

The chairman closed the seminar, and the faithful quickly disappeared to avoid being caught in the legendary 4:45 Harwell traffic jam.

Before concluding this article, I would like to raise a general point about the quality and extent of seminar handouts. It seems to be general practice, certainly at the seminars I have attended, for presenters to hand out copies of their overhead slides with very little added information or comment. From industry's point of view this significantly reduces the value of the seminars, because for every industrial attendee there are at least two or three colleagues to whom the proceedings are circulated and who need more detailed information. It would therefore be of great help to industry, and a greater incentive to take part in such meetings, if more extensive descriptions of the presentations were made available.

In conclusion, despite having to deal with a relatively difficult subject, the seminar was very successful in attaining its set aims, well organised as usual and certainly well worth attending.

This one-day meeting will provide an opportunity for those working in the area of automatic generation to gather and discuss recent developments. The general sections of the proposed programme are:

The meeting will be under the chairmanship of Dr N P Weatherill of University College, Swansea.

The electronic preprint service announced in the last ECN is now running on a trial basis. To find out how to submit or retrieve a paper, e-mail cfdcc@inf.rl.ac.uk with the subject "send info", or consult the WWW page reached by following the link to the CFD Community Club from: http://www.inf.rl.ac.uk/clubs.html

A number of papers have already been submitted to the service. They can be obtained by anonymous ftp from www.inf.rl.ac.uk in the directory pub/cfdcc/95, where an index to the papers can also be found.

Information on the service and paper submission can be found in pub/cfdcc/preprint-info and pub/cfdcc/preprint-submit.

Further information can be obtained from Dr John V Ashby.

At several Visualization Community Club events, Virtual Reality (VR) has featured in presentations as an aid to visualizing a complex model or the results of a simulation, even though VR was not the primary topic of the meeting.

The Visualization Community Club plans to develop this theme further. An event on VR for Visualization is planned, and more details will be available in the next issue.

A Virtual Reality Centre is being built up at Rutherford Appleton Laboratory, under the auspices of the Visual Systems Group. This article describes the equipment that has been purchased recently.

To investigate large models and simulations, it was agreed that a powerful graphics system was required. The system at RAL currently consists of a Silicon Graphics Onyx system with Reality Engine 2 graphics and one Raster Manager subsystem. This is attached to a Multi-Channel Option, allowing up to six independent output streams from the graphics pipeline. In particular, this allows either interleaved stereo (viewed with synchronised shutter glasses) or parallel stereo (viewed with a headset or via video projectors).

In addition to the basic Onyx hardware, RAL has attached a Division VR4 headset, complete with a Polhemus position sensor and 3D mouse. Two LitePro 580 video projectors have also been purchased which, with polarising filters and glasses, will allow stereo viewing on an 8 foot by 6 foot screen. The projectors are very bright and are also in much demand at RAL for conventional (non-stereo) presentations.

As well as the standard Silicon Graphics Performer software, RAL has purchased Division's VR software suite, which, together with Performer, will assist in converting CAD models into a suitable form for the Onyx and in handling interaction between the user and the model in the virtual world.

Over the next few months, the Visual Systems Group will familiarise itself with the whole system and work on pilot projects to bring CAD models and simulation results into the VR system. Once the general method of working has become familiar, we will be glad to collaborate with engineering researchers on projects where VR can help with their problems. Anyone interested should contact Chris Osland for more information.

The Support Initiative for Multimedia Applications is a project funded under the Higher Education Funding Council's Joint Information Systems Committee. It aims to provide a single focus for information on multimedia; it will link with other projects and initiatives for UK Higher Education and dovetails with the current activities of the Advisory Group on Computer Graphics.

Subscribers receive reports prepared for the initiative as they are issued. Reports so far include: Multimedia Formats, Evaluation of Image Capture Pathways for Multimedia Applications, Running a World-Wide Web Service and several reports on video conferencing.