The picture on the front cover was produced on the Cray X-MP/48 for the UK Universities Global Atmosphere Modelling Project (UGAMP). It shows the predictions of the global atmospheric flow in a perpetual January.

As announced earlier in FLAGSHIP, RAL and IBM have entered into a joint study agreement for two years to investigate the effectiveness of a large IBM 3090 configuration for advanced scientific research.

The RAL IBM mainframe is currently being upgraded and, by the time you read this, it should be a 3090-600E with 6 IBM Vector Facilities (VFs). Each VF has a peak performance of 116 MFlops, about half the peak performance of a Cray X-MP processor, but the scalar performance is more comparable with that of a Cray processor. Our 3090 has 256 MBytes of main memory and 1 GByte of expanded memory (compared with 64 MBytes of memory and a 256 MByte SSD on the Cray). Unlike the Cray, the IBM 3090 is a virtual memory machine, so it needs no special user instructions to manage jobs larger than the physical memory. Paging to expanded memory is extremely rapid, and paging to disk will occur for exceptionally large jobs.

The IBM 3090 complements the Cray X-MP, being well suited to very large jobs. The IBM vectorising compiler is good at spotting ways to vectorise loops and will even reverse the order of nested loops if necessary. Multi-tasking on the 3090 under IBM's parallel Fortran extensions is efficient at creating and synchronising tasks.

Computing time on the 3090 is available through normal SERC Peer Review procedures.

In addition, a substantial part of the Joint Study is to be carried out as a "Strategic User Programme", in which about 10-12 major users will be allocated large amounts of time in order to carry out frontier research in their subjects. At this stage we envisage that a "Strategic User" could expect of the order of 1000 processor hours per annum for the two-year study period. Strategic Users will be expected to exploit both vector and parallel processing, and RAL and IBM staff will provide assistance with this. Such users may also be expected to participate in occasional seminars organised through IBM or otherwise. Help will also be available for the production of high quality graphical material to present the results.

The review and allocation of time to Strategic Users will be carried out by a small committee chaired by Professor P G Burke, FRS. Bids are likely to be needed by June 1989 for allocation in August 1989. The committee's criteria will include scientific merit and the potential for exploiting the features of the 3090-600. Collaborations with industrial partners will be especially welcome. Please note that only computing resources are available; the committee has no funds for staff or equipment.

Any academic or Research Council person who would like to propose a project for the Strategic User programme is invited to write to me for an application form.

A meeting held at Imperial College London on 30th and 31st March was attended by about 100 people from within the UK scientific community. It was requested by the Computer Board's Joint Policy Committee (JPC) for Advanced Research Computing and covered mainly the applications on the three conventional supercomputers at London, Manchester and RAL. Novel architectures were represented by two speakers from Edinburgh, describing the Edinburgh Concurrent Supercomputer Project and genetic sequence matching on the AMT Distributed Array Processor.

The meeting was most impressive in the range of scientific disciplines represented and in the extent to which they rely on the provision of massive computing power. The application with probably the largest computing requirement is environmental modelling, represented at this meeting by meteorology and oceanography.

In oceanography the main problem is the need to resolve rather small-scale eddies (about 10 km), which are important as dissipation mechanisms, while at the same time following long time scales because of the long thermal time constant of deep water. Modelling of flow velocity, energy transport and temperature is clearly a key element in global climatology, while salinity and temperature affect other economically important resources such as fish stocks.

The meteorologists are rather better placed in that they are already able to resolve the important length scales, but in addition to fluid dynamics they must also solve complicated equations for atmospheric chemistry, radiation transport in clouds and the thermodynamics of evaporation and precipitation.

The next stage of modelling for both the meteorologist and the oceanographer requires much more memory than the present Cray X-MP can supply (at least 20 MWords) and could easily use a large part of a single national facility. These simulations also produce prodigious quantities of intermediate data and make large demands for data storage, retrieval and graphical visualisation. Looking further forward, the coupling of oceans and atmosphere in a single simulation could exhaust the capabilities of the currently foreseeable supercomputers.

One of the more unusual applications represented was the search for geographical clustering of certain diseases (particularly some forms of cancer). The problem is simply that in any large body of random data one will find fluctuations at a few standard deviations from the mean. It is then difficult a posteriori to decide whether a particular fluctuation is significant, and if a cancer cluster is found near to a nuclear or chemical installation it is clearly of more than academic interest.

Supercomputer power makes it possible to analyse this data, looking for all possible geographic correlations and, it is hoped, extracting an unbiased collection of those which are statistically significant. Again the memory of the Cray X-MP was singled out as a drawback and some of this work is being done in parallel on the Cray 2 at the Harwell Laboratory.
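The difficulty described above, that chance fluctuations of a few standard deviations are bound to turn up somewhere in a large body of data, can be quantified with a short calculation. The sketch below is a minimal illustration only, assuming that the count in each geographical area is approximately normally distributed and that areas are independent; the number of areas is hypothetical, and the Bonferroni correction shown is one standard, conservative approach, not necessarily the method used by the groups reporting at the meeting.

```python
import math

def upper_tail(z: float) -> float:
    """P(Z > z) for a standard normal variable Z."""
    return 0.5 * math.erfc(z / math.sqrt(2))

def expected_chance_clusters(num_areas: int, z: float) -> float:
    """Expected number of areas exceeding z standard deviations purely by chance."""
    return num_areas * upper_tail(z)

def bonferroni_z(num_areas: int, alpha: float = 0.05) -> float:
    """Threshold z such that the chance of ANY area exceeding it is at most alpha."""
    target = alpha / num_areas
    lo, hi = 0.0, 40.0
    for _ in range(200):  # bisection: the tail probability falls monotonically with z
        mid = (lo + hi) / 2
        if upper_tail(mid) > target:
            lo = mid
        else:
            hi = mid
    return hi

areas = 10_000  # hypothetical number of geographical areas examined
print(f"Expected chance excesses above 3 sigma: {expected_chance_clusters(areas, 3.0):.1f}")
print(f"Threshold for a 5% chance of any false alarm: {bonferroni_z(areas):.2f} sigma")
```

With ten thousand areas, more than a dozen "3 sigma clusters" are expected from random data alone, which is why a fluctuation picked out after the event cannot be judged by its size in isolation.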

Traditionally, physicists and chemists have been large users of supercomputers, but it was important to see the extension of this into biochemistry and biophysics. Protein crystallographers have traditionally refined experimental data using a mixture of computer analysis and PhD student labour but are now able largely to automate the process using molecular mechanics models and experimental data to provide a mutually consistent solution.

Computer modelling has moved into drug design; a particularly exciting possibility is the development of bioreductive drugs. A problem in cancer treatment is the lack of specificity: chemotherapy drugs attack both healthy and cancerous cells. It should be possible to exploit the small difference in blood supply between healthy tissue and tumours, which could make a drug molecule with the right redox potential change from its oxidised to its reduced form as it moves from healthy to cancerous cells. If only the reduced form is lethal to cells, then one has a highly selective form of treatment. Supercomputers are used both in molecular stereochemistry and advanced graphics, to study the ways in which drug molecules interact with enzymes, and in quantum chemistry, to calculate the small difference in energy between the oxidised and reduced forms. The major breakthrough in this area has been the ability to simulate drug molecules in water rather than in vacuum, and redox potentials can now be calculated at least as accurately as (and rather more easily than) they can be measured.

This area of application needs both high powered desk top machines for the graphical displays and large processing power for the potential calculations. Improvements in network performance are necessary to link these together.

Again in molecular biology we find supercomputers, albeit of novel architecture, being used to match sequences of proteins or nucleic acids. The linear structure of these genetic materials is obtained by cloning and sizing the fragments but the full three dimensional structure (or folding) remains unknown. When two sequences are being compared for similarity the occasional difference or missing fragment may be relatively unimportant if it represents a small loop separating otherwise similar structures.

The representation of nucleic acids or proteins is very wasteful on a conventional supercomputer with floating point arithmetic, since only four nucleotide bases and about twenty amino acids need to be distinguished. The AMT DAP 510 is particularly suited to this work since it is an array of 1024 single-bit processors and can match its word length to the needs of the application. For this work it is many times more cost-effective than the 64-bit word Cray supercomputer.
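To make the word-length point concrete, the sketch below packs a nucleic acid sequence at two bits per base and compares two sequences with a single bitwise XOR. It is an illustration of the general idea only, written for a conventional processor; the particular 2-bit code and the function names are assumptions, not the encoding or software used on the DAP.

```python
# Hypothetical 2-bit code for the four nucleotide bases (an illustrative choice,
# not the encoding used on the DAP).
CODE = {"A": 0b00, "C": 0b01, "G": 0b10, "T": 0b11}

def pack(seq: str) -> int:
    """Pack a base sequence into one integer, 2 bits per base, first base in the lowest bits."""
    value = 0
    for i, base in enumerate(seq):
        value |= CODE[base] << (2 * i)
    return value

def mismatches(a: str, b: str) -> int:
    """Count the positions at which two equal-length sequences differ."""
    if len(a) != len(b):
        raise ValueError("sequences must have equal length")
    diff = pack(a) ^ pack(b)  # a non-zero 2-bit field marks a differing base
    return sum(1 for i in range(len(a)) if (diff >> (2 * i)) & 0b11)

seq = "GATTACA"
print(f"{len(seq)} bases: {2 * len(seq)} bits packed, {64 * len(seq)} bits as 64-bit words")
print("mismatches:", mismatches("GATTACA", "GACTATA"))
```

The same idea extends to proteins: five bits per residue are enough for the twenty amino acids (2^5 = 32). At two bits per base, a sequence of 3 billion bases would fit in about 750 MBytes.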

The future needs of genetic sequencing are enormous: there is a global project to sequence the whole of the human genome (ie all the DNA in all 24 chromosomes), which will take several years and will produce a sequence of 3 billion bases.

The traditional supercomputing areas of atomic physics, solid structure calculations and chemistry are not being left behind, and routine atomic physics calculations now give very precise energy levels and cross sections accurate to about 10%. The quality of these data gives much more confidence in the interpretation of atomic spectra as plasma diagnostics in fusion research and astrophysics. Atomic physics calculations are also playing a key role in the development of new lasers at X-ray wavelengths.

Quantum mechanical calculations of band structure in solids are now also very reliable and are becoming increasingly important in practical applications such as metallurgy, where the ductility of alloys can be predicted from their band structure, leading to cost savings for manufacturers. The currently fashionable studies of high temperature superconductors also use supercomputing to calculate the band structure of similar materials, only one of which is superconducting. Detailed differences in the band structure and the position of the Fermi surface may then give an insight into the mechanism of high temperature superconductivity.

Theoretical chemists have always had an infinite appetite for computing power and are now using the Intel iPSC/2 hypercube at Daresbury. The 32-node machine, with vector processing at each node, is several times faster than a Convex C2 on some applications and about half as fast as a Cray X-MP processor in peak vector performance. The machine is soon to be upgraded to 64 nodes and will have greatly enhanced I/O performance, with controllers at each node.

The largest scale parallel computing project in the UK is at Edinburgh (see Nick Stroud's article on page 16). One of the large projects on this machine is the calculation of Quantum Chromodynamics (QCD) on a four-dimensional space-time mesh. QCD, the study of matter at the level of quarks and gluons, is much more difficult for theorists to analyse than Quantum Electrodynamics (QED), which can be studied using perturbation theory. The computing problem for QCD is enormous, since the lattice spacing must be much smaller than the objects under study while the overall mesh must be much larger than them. With the number of mesh points scaling as N⁴, where N is the number of points along each side of the mesh, the problem is demanding in both memory and computing power.
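A rough feel for the N⁴ scaling can be had from counting storage. The sketch below assumes, for illustration, that only the gauge field is held in memory, as one 3 × 3 complex SU(3) matrix on each of the 4 links leaving every site (72 real numbers per site); quark propagators and working storage, which add substantially more, are ignored, and the lattice sizes are arbitrary examples.

```python
# Assumption: only the gauge field is stored, one 3x3 complex SU(3) matrix per link,
# 4 links per site, i.e. 4 * 3 * 3 * 2 = 72 real numbers per site.
REALS_PER_SITE = 4 * 3 * 3 * 2

def lattice_sites(n: int) -> int:
    """Number of points on an n x n x n x n space-time mesh."""
    return n ** 4

def gauge_field_words(n: int) -> int:
    """Real-number words needed to hold the gauge field alone."""
    return REALS_PER_SITE * lattice_sites(n)

for n in (8, 16, 24, 32):
    print(f"N={n:2d}: {lattice_sites(n):>9,} sites, {gauge_field_words(n) / 1e6:6.1f} Mwords")
```

Doubling N multiplies both the storage and the work per sweep by 16, which is why even modest improvements in lattice size call for a large step in machine capacity.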

Many of the international leaders in this field now have dedicated, often special purpose, hardware for Lattice Field calculations but the Edinburgh group have been able to make significant progress on the masses of certain mesons using the transputer arrays.

In astrophysics, supercomputing makes itself felt indirectly through the provision of atomic data but, more obviously and very powerfully, through the ability to simulate many-body systems subject to their mutual gravitational interactions. The methods are applicable to both star clusters and whole galaxies, but galaxies are almost totally collisionless, whereas binary collisions in globular clusters are just significant. The problem of the origin and evolution of spiral structure in galaxies is amenable to computer modelling and requires an enormous number of simulation particles to sample the configuration and velocity space of the problem. With the latest machines it has become possible to study for the first time the interaction of two galaxies in collision and the development of spiral arms and bars following the encounter.

Finally, the use of supercomputing in engineering areas has grown dramatically during the last two years and in one three month period accounted for 20% of the computing on the RAL X-MP/48.

Computational fluid dynamics has the same problem in simulating turbulent fluid flow that the environmental modelling groups have in oceanography, namely that the flow must be resolved down to very small sizes to include the eddies that are important in energy and momentum transport. Flow at a Reynolds number of 1,000,000 would require 750,000 years of computing on a Cray X-MP with 10,000,000,000,000 words of memory!
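The size of these numbers follows from the standard Kolmogorov estimate for direct numerical simulation of turbulence, sketched below. This is a textbook order-of-magnitude argument, not necessarily the calculation behind the figures quoted above; here L is the size of the largest eddies, η the Kolmogorov (smallest dissipative) length scale and Re the Reynolds number of the large-scale flow, and all constant prefactors are dropped.

```latex
\frac{\eta}{L} \sim Re^{-3/4}
\quad\Rightarrow\quad
N_{\mathrm{points}} \sim \left(\frac{L}{\eta}\right)^{3} \sim Re^{9/4},
\qquad
N_{\mathrm{steps}} \sim \frac{L}{\eta} \sim Re^{3/4},
\qquad
\mathrm{work} \sim N_{\mathrm{points}}\,N_{\mathrm{steps}} \sim Re^{3}.
```

For Re = 10^6 this gives about 10^13.5, or roughly 3 × 10^13, grid points, the same order as the memory figure quoted, and each tenfold increase in Reynolds number multiplies the work by a thousand.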

Faced with this problem there are a variety of techniques which give valuable approximate results for realistic fluid flow and one such method, the Large Eddy Simulation, has been used to predict temperature excursions in the turbulent flow of liquid sodium in a fast reactor. At the more fundamental level, spectral simulations of the particle motions in fully developed Kolmogorov turbulence give some surprising answers for the average transport coefficients of a turbulent fluid.

The computer modelling of elastic-plastic flow has been developed to the point where it provides invaluable insight into crack propagation and failure in high-tensile materials. With the current limitations on memory, it is possible to study only the rather simple (but still 3D) geometries of test specimens rather than the full structure of pressure vessels.

The afternoon session on March 31st was devoted to a fuller consideration of future needs for Advanced Research Computing. On the hardware front it is clear that conventional machines of several GFlops processing power and GBytes of memory will be available by 1990/91. At the same time, machines of better than Cray 1 performance will become available at a price level where they can be purchased by individual departments, and high powered vector workstations will approach this level of performance on the desktop.

Professor Forty, chairman of the Computer Board and of the JPC, introduced an open discussion period by addressing a problem that several of the earlier speakers had mentioned: it is often easier to obtain funding for equipment than for people, and people are going to become an increasingly scarce resource.

The pressing need was to improve the connection of workstations to central computing power, but the fundamental speed limitations of JANET would remain until there was a breakthrough in the speed of X25 switches. It was important to avoid a variety of ad hoc solutions to this problem and the JPC had already instigated a working group to report on the problems of the supercomputing "environment" in the 1990s.

The next twelve months would be important in building up the case for a new machine of whatever type. In addition to the academic case it would be important to address the problems and costs of workstation connections and also the essential nature of visualisation in understanding the results of supercomputer simulations. Links with computer suppliers, European collaborations and industrial partners would all help to make the case more convincing.

The audience discussion centred mainly on the need for a central state of the art supercomputer but several people commented on the benefits of a local service and Starlink was singled out as a model of a distributed service with good central support. The view was expressed that novel architecture machines had sometimes been placed in groups that were too small to provide the manpower needed to overcome the lack of software available on very new machines and that a concentration of this effort would be beneficial.

Those present felt that the meeting had conveyed the great breadth of application of supercomputing, the crucial role that it played in scientific research and the need for the most powerful resources if UK research is to remain internationally competitive.

Some Electronic Mail systems on JANET allow incoming mail to be sent to a mailbox that does not correspond to a userid on the computer. The mail is instead forwarded to a list of mailboxes on that or other computers. These mail systems are acting as mail distributors or mail servers; the pseudo-mailboxes are known as distribution lists. Most mail user interfaces allow you to keep a list of mailboxes that can then be used to simplify sending mail to many other people but mail servers have the advantage that the information is only stored in one place on one computer.

Mail distribution lists are best used within a closed community when many people are contributing to a discussion. If there is no discussion involved, only information dissemination, or if the community is very large, then a bulletin board (such as the NISS Bulletin Board described in the November/December issue of FORUM 88) is a better solution.

We have recently installed a version of the LISTSERV software (called LISTRAL) on the CMS system at RAL. Among its facilities, LISTRAL supports mail distribution lists; indeed LISTSERV is used by most of the mail servers on EARN and BITNET. Such lists are not restricted to JANET users: subscribers can be on any machine reachable by mail from UK.AC.RL.IB (or via the JANET-EARN gateway UK.AC.EARN-RELAY). We plan to set up peer lists for some of the EARN/BITNET lists that are most popular with JANET users. This will reduce the traffic across EARN, as only one copy of a mail file will be sent from an EARN server and will then be fanned out at RL.IB to JANET subscribers.

Distribution lists controlled by LISTRAL are set up by us and then managed by a list manager who, by mail commands to LISTRAL, can control the characteristics and membership of the list. Lists can be set up so that they have open subscription (ie anyone can subscribe by sending a mail message to LISTRAL) or closed subscription (only the list manager(s) can add or delete people from the list). They can have open distribution (anyone can send mail to the list) or closed distribution (only those already on the list can send mail).
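The four combinations of subscription and distribution policy described above can be captured in a few lines. The sketch below is a hypothetical model, not LISTRAL or LISTSERV code and not their mail-command syntax; the class, method names and addresses are invented for illustration. It also shows the fan-out step, in which one incoming message is copied to every subscriber.

```python
from dataclasses import dataclass, field

@dataclass
class DistributionList:
    """Hypothetical model of a mail distribution list's access policies."""
    name: str
    managers: set[str]
    open_subscription: bool  # True: anyone may subscribe or unsubscribe themselves
    open_distribution: bool  # True: anyone may send mail to the list
    members: set[str] = field(default_factory=set)

    def _may_change(self, requester: str, address: str) -> bool:
        # Managers may always add or delete people; with open subscription,
        # users may also change their own membership.
        return requester in self.managers or (self.open_subscription and requester == address)

    def subscribe(self, requester: str, address: str) -> bool:
        if self._may_change(requester, address):
            self.members.add(address)
            return True
        return False

    def unsubscribe(self, requester: str, address: str) -> bool:
        if self._may_change(requester, address):
            self.members.discard(address)
            return True
        return False

    def can_send(self, sender: str) -> bool:
        """Open distribution accepts anyone; closed distribution accepts only current members."""
        return self.open_distribution or sender in self.members

    def fan_out(self, sender: str) -> list[str]:
        """Mailboxes a single incoming message is copied to (empty if refused)."""
        return sorted(self.members) if self.can_send(sender) else []

# A closed-subscription, closed-distribution list with hypothetical addresses.
lst = DistributionList("EXAMPLE-L", managers={"manager@site-a"},
                       open_subscription=False, open_distribution=False)
print(lst.subscribe("alice@site-b", "alice@site-b"))    # False: closed subscription
print(lst.subscribe("manager@site-a", "alice@site-b"))  # True: the manager adds her
print(lst.fan_out("bob@site-c"))    # []: non-members cannot post
print(lst.fan_out("alice@site-b"))  # ['alice@site-b']
```

Keeping the membership in one place, as a mail server does, means every sender's message reaches the same up-to-date list, rather than each user maintaining a private copy.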

We plan to offer a mail distribution service to the SERC-funded community. A typical list will have 10-100 subscribers and be on a topic relevant to the work of SERC; we do not want lists aimed at everyone on JANET who uses a PC or teaches undergraduates. If you would like a distribution list set up, please contact me (0235-446574 or JCG@UK.AC.RL.IB) to discuss your needs.

I am very sorry to have to report that Muriel Herbert is leaving the Laboratory. She has worked at the Atlas Centre for over twenty years in a variety of jobs, the most recent being that of Documentation Officer, ordering, packing and sending your manuals. Recently she has also helped with the preparation of articles for the computing newsletters. I am sure you will all join me in wishing Muriel well as a lady of leisure.

Please continue to send all requests for manuals to the id on the back cover - and please be patient while we re-allocate her work.

During the six years in which the Edinburgh Regional Computing Centre was running two ICL DAPs, Edinburgh University became one of the leading centres for parallel computing, with worldwide recognition in such key scientific fields as physics and molecular biology. In anticipation of the decommissioning of the DAPs, a proposal was submitted in 1986 to the Department of Trade and Industry, the Computer Board and SERC to establish a large transputer array facility, the Edinburgh Concurrent Supercomputer (ECS), in collaboration with Meiko Limited of Bristol.

The ECS Project is built around a Meiko Computing Surface, which is based on the transputer. This is the computer on a chip: the Inmos chip which, for the first time, puts all the components of a computer (processor, memory and communication channels) on to a single chip. The result is not only a computer smaller than a matchbox with the power and performance of a top-end VAX, but also a component specifically designed as a building block for massively parallel computers. The system provides a multi-user resource by partitioning the T800s into variable-sized domains, each of which is configured electronically under software control. So far the machine has 400 T800 transputers delivering a peak performance of around 0.5 GFlops, with some 1.7 GBytes of memory. Support totalling £3.5 million has already been raised from the Department of Trade and Industry, SERC, the Computer Board, industry (whose commitment is now over £1.5 million) and Edinburgh University. The machine is networked (CALL UK.AC.ED.SUPER on JANET) as a national facility, with more than 200 registered users.

Applications under development come from a wide range of disciplines, and include: neural networks; fluid flow simulation; quantum physics; molecular biology; graphics; scene visualisation; medical imaging; weather simulation; chemical engineering; communications simulation; and parallel programming tools. A complete list of projects is published each year in conjunction with the annual seminar which reviews the status of the Project. Further details can be obtained from us:

The ECS Project,

As the years have gone by, the graphics facilities provided and supported by RAL Central Computing Department have evolved. To coincide with the demise of some old packages and systems (MUGWUMP, SMOG, etc.), we are providing this review of the facilities, bringing together information from many previous FORUMs and ARCLights.

Graphics Group wants to provide you with the most appropriate facilities for your work. The range of graphics devices and systems these days is enormous. Month by month, new facilities are developed that supersede earlier "best buys". This makes it dangerous to have a "preferred terminal" list since any such list is rapidly (or even immediately) out-of-date.

To deal with this situation, in 1984 Graphics Group adopted a policy for the central computers of the time which stressed continuity of graphics interfaces rather than specific devices. This has been updated as the ISO standard CGM (Computer Graphics Metafile) came into being and UNIRAS was purchased. In a nutshell, this policy is now as follows:

It is as a result of this policy that we are now removing from CMS the earlier systems of SMOG, MUGWUMP and GINO-F, since support of these packages would reduce the effort we could devote to the preferred packages. We would have had to embark on a substantial development effort to get these packages working under CMS 5.5 and the number of remaining users was very small.

The packages supported by Graphics Group on each of the machines we support are:

In general all these systems are available from us on:

For the status of UNIRAS on each system, see David Greenaway's article. On the IBM under MVS/370, RAL-GKS is at version 0.11 rather than 1.11 and the UNIRAS interactive utilities are not installed. The UNIRAS interactive utilities are not available for the Cray.

We are currently evaluating the requirement for GKS-3D and/or PHIGS and would welcome any comments about your requirements for packages providing such 3D functionality. On the Cray (and Silicon Graphics IRIS) we have been evaluating 3D modelling and animation systems and have packages available for experimentation.

We have developed a large suite of modules for the reading, writing and interpreting of Computer Graphics Metafiles (CGMs) which can be made available to users who want to generate CGMs very efficiently. These are written in 'C' and are used in the Iris workstation and the Video Output Facility.

Although graphics hardware continues to drop in price, a number of devices are just too expensive for wide-spread installation and we provide them as central facilities.

Foremost amongst these is the Versatec 42" electrostatic colour plotter. This produces a complete, 256-colour, B0 drawing (1 metre wide and 1.4 metres long) in about 10 minutes, irrespective of the complexity of the picture being drawn. Access is available from RAL-GKS and NAG; work is required (and scheduled) to provide access from UNIRAS.

Both Xerox 4050 laser printers at RAL are equipped with full (monochrome!) graphics capability and the one in the Atlas Centre has been interfaced to the HARDCOPY system for some time. Further work is required to provide graphics output on the other (in R1) but this work is now scheduled. Access, as for the Versatec, is available from RAL-GKS and NAG, but not from UNIRAS at present.

Access to these devices is provided by the HARDCOPY system which runs under CMS on the IBM mainframe, but which is available to any computer that can transfer files to the IBM. HARDCOPY takes files in CGM or GKS-metafile format, translates them to the code required for each different device and sends the file to it for you. Graphical output is also possible on the IBM 4250 electro-erosion printers, although these are rarely used for pure graphics.

This system is currently undergoing 'field trials'; for further information see page 21.

Since the departure of the FR80 there has been no high volume film output facility at the Atlas Centre. Much of the film function of the FR80 is expected to be satisfied by the Video Output Facility. A low volume (Dunn) camera remains at the Atlas Centre for those who wish to produce 35mm slides. It is attached to a Sigma 5688 which can be connected to the IBM 3090 or any computer on JANET.

Two thermal transfer printers are installed in the Atlas Centre; the older one is connected to a Sigma 6160 and supports only 8 colours. The other, very recently installed, is currently connected to the Iris 3130 workstation and the VAXStation 2000 (and the video system) and supports 4096 colours. We intend to add connections to a Macintosh, IBM PS/2, Sun and IBM 6150 PC/RT.

We realize that these "hands-on" slide and foil systems do not adequately address the requirements for these media, and we are looking at the provision of spooled facilities for both.

There is only space to provide a quick overview of the methods of working in an article this size. Let's start with the most obvious way, at your own terminal.

Interactive graphics is possible on all the machines we support, although on the Cray you will have to be registered to work interactively and on the IBM interactive working is provided under CMS, not MVS. Each of the packages can be told to send output to your terminal.

In GKS (and hence anything which, like NAG, uses it) you can open more than one graphics stream, so you can see the pictures on your screen as they are produced and can save them in a metafile as well.

For long or large programs the more usual way of working is to produce a metafile and view it at your convenience. This has the advantage that you can look at earlier pictures without having to rerun your program. Also, any transmission errors do not wreck the one possibility of seeing your results. All the supported packages allow you to produce metafiles and to view them later; not all of them produce the same format. Roy Platon described the various formats in FLAGSHIP 2. The following table shows which programs can read and/or write each format.

| Program | CGM | RAL GKSM | UNIRAS GKSM | UNIPICT | UNIRAST |
|---|---|---|---|---|---|
| RAL-GKS | RW | RW | u.d. | | |
| GKSMVIEW | RW | RW | u.d. | | |
| HARDCOPY | R | R | u.d. | | |
| UNIRAS V5.4 except GIMAGE and CADRAS/C | W | RW | W | | |
| GIMAGE & CADRAS/C | W | | | | |
| UNIRAS V6.1 | RW | W | RW | W |

Note:

Those of you who use PROFS (in particular) may have encountered IBM's own graphics system, GDDM. While this system and the Interactive Chart Utility (ICU) provide useful facilities within the IBM environment, they are not currently integrated with the rest of the facilities described above. We recommend that you use ICU only when there is no requirement to exchange the pictures generated with non-PROFS users. Remember that you cannot print ICU pictures on the Versatec colour plotter or the Xerox laser printers.

Over the last nine months we have been updating the RAL GKS Guide and a completely reissued version - corresponding to GKS level 7.4 - is being put together. NAG and UNIRAS have their own, comprehensive manuals. The online Help files on the IBM have been the subject of a major overhaul in the last six months. If you require any documentation of the graphics facilities, please contact the Documentation Officer, MANUALS@UK.AC.RL.IB or 0235-44-5272.

The aim of this article has been to provide the most general overview of all the graphics facilities. Cash and effort limit the extent to which they may match your needs. Graphics Group are continually developing and installing new facilities in response to users' comments and are increasingly working with individual users and projects to assist them with their graphics requirements. If you want advice, do contact me on 0235-44-6565.

The Graphics News pages in the previous issues of Flagship have included an overview of the UNIRAS graphics system and its SERC infrastructure. In this issue we outline the progress on each of our implementations. The main groupings in the UNIRAS system are the libraries, the interactive utilities and UNIGKS.

All interactive utilities and subroutine libraries are installed and working on RL.VE. UNIGKS is also up and working. Copies are being distributed to managers of other SERC machines, on request. Daresbury has taken this implementation and has installed it.

Under CMS on the IBM, all interactive utilities and subroutine libraries are installed and working for coax-connected display terminals. Hardcopy output on the IBM 4250 has been established, and routing to the Xerox 4050 and the Versatec 9242 will follow shortly. There is a serious problem with output to PAD-connected displays, and this is being investigated. UNIRAS will not be released on CMS until this problem has been resolved.

UNIRAS is not installed under MVS/370 at present.

On the Cray, the subroutine libraries are installed and working, except that output from the CADRAS (RASPAK/C) package is not possible; UNIRAS are investigating this. Output can be disposed to the VAX and the IBM front-end machines, and user documentation for this is being prepared. UNIRAS did not supply the interactive utilities or UNIGKS for the Cray. ULCC have requested UNIGKS, and UNIRAS have now said that they can deliver it. If ULCC install it, we may do so as well for compatibility.

We have received three versions - SUN OS3 for the SUN3, SUN OS4 for the SUN3 (copied to Daresbury who have installed it) and SUN OS4 for the SUN4. Informatics Department have all these versions, but they may wait for UNIRAS version 6 rather than supporting 5.4 at all. None of the SUN tapes had UNIGKS, but it has been ordered at Daresbury's request, for their version.

We have received UNIRAS (without UNIGKS and the interactive utilities) on 5.25 inch diskettes for the IBM PC/AT and clones, and on 3.5 inch diskettes for the PS/2. To run, it requires 640 kbytes of memory, 10-12 MBytes of free space on a Winchester disk, a maths coprocessor, and version 2.1 of the Ryan-McFarland Fortran compiler.

We have received the RT implementation but have not yet installed it on any machine.

All interactive utilities and subroutine libraries in UNIRAS are installed on RL.NA, the ND 560/CXA at RAL. Testing has been limited in scope, but output to Tektronix 4010-type and 4105-type terminals has been successful, as has plotting on a ROLAND flat-bed plotter (an HPGL-type device). Work is continuing on other drivers. One problem is the sheer size of the packages and the load this imposes on the machine, and various ideas are being tried to cope with this. Rather than installing UNIGKS, we are attempting to install RAL-GKS, because of the availability of its device drivers and the prospects for future support; work on this is in progress.

First user trials of the RAL Video Output Facility are now taking place. We are looking for users with applications suitable for video and will produce short "test strips" from their programs or metafiles. This trial period will allow us to produce general guidelines for future users of the facility and will allow you to have early use of this powerful presentation medium.

The RAL Video Output Facility was described in Arclight 5 and Forum 87 (Sept/Oct). This was at the time the system was designed, before all the orders had been placed. All the equipment was installed by April 1988 and software for it has been developed over the last year. A general interpreter for Computer Graphics Metafiles (CGMs) has now been written and runs in the video system's computer. The program has a number of options: each CGM picture may be written to one or more frames on the videotape; the starting point on the videotape can be specified; and a subset of pictures from the CGM can be processed. Together, these allow CGMs to be combined onto videotape in animation sequences.
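The three interpreter options (frames per picture, starting point on the tape, and a subset of pictures) combine into a simple mapping from pictures to tape frames. The sketch below is a hypothetical illustration, not the RAL interpreter: it assumes every selected picture receives the same number of frames and that frames are numbered consecutively; the 25 frames per second used for the running time is the UK (PAL) television rate.

```python
from typing import Iterable, Optional

PAL_FRAMES_PER_SECOND = 25  # UK television standard

def frame_schedule(num_pictures: int, frames_per_picture: int, start_frame: int,
                   subset: Optional[Iterable[int]] = None) -> list[tuple[int, int, int]]:
    """Return (picture, first_frame, last_frame) for each picture to be recorded.

    Pictures are numbered from 1 in the order they appear in the CGM; subset,
    if given, selects which pictures to record and in what order.
    """
    if frames_per_picture < 1:
        raise ValueError("each picture needs at least one frame")
    pictures = list(subset) if subset is not None else list(range(1, num_pictures + 1))
    schedule = []
    frame = start_frame
    for picture in pictures:
        if not 1 <= picture <= num_pictures:
            raise ValueError(f"picture {picture} is not in the metafile")
        schedule.append((picture, frame, frame + frames_per_picture - 1))
        frame += frames_per_picture
    return schedule

def running_time_seconds(schedule: list[tuple[int, int, int]]) -> float:
    """Playing time of the recorded sequence at the PAL frame rate."""
    frames = sum(last - first + 1 for _, first, last in schedule)
    return frames / PAL_FRAMES_PER_SECOND

# Record pictures 3 to 5 of a 10-picture metafile, 2 frames each, starting at frame 100.
plan = frame_schedule(10, 2, 100, subset=[3, 4, 5])
print(plan)
print(running_time_seconds(plan), "seconds")
```

Writing each picture to several frames trades smoothness for length: at 2 frames per picture a sequence plays at 12.5 pictures per second, so a metafile of a few hundred pictures already gives a usable test strip.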

During this trial period, the CGM files will be transferred from wherever they exist to the video system "by hand". The production system will use a VAX 3600 as the "front-end" to the video system and files sent to a pseudo-user on this VAX will automatically be transferred to the video system and processed. The RAL GKS and CGM systems will be extended with ESCAPE codes to specify the options described above and so will allow the video system to be fully automatic.

We are now actively soliciting more "guinea pig" users of the video system; they will have the opportunity to see their output on videotape and we will be checking out the operation of the system. Together we will be building up experience of the things that work best on videotape. If you have an application you would like to test, do contact me on 0235-446565 (CDO@UK.AC.RL.IB) and we will proceed from there.

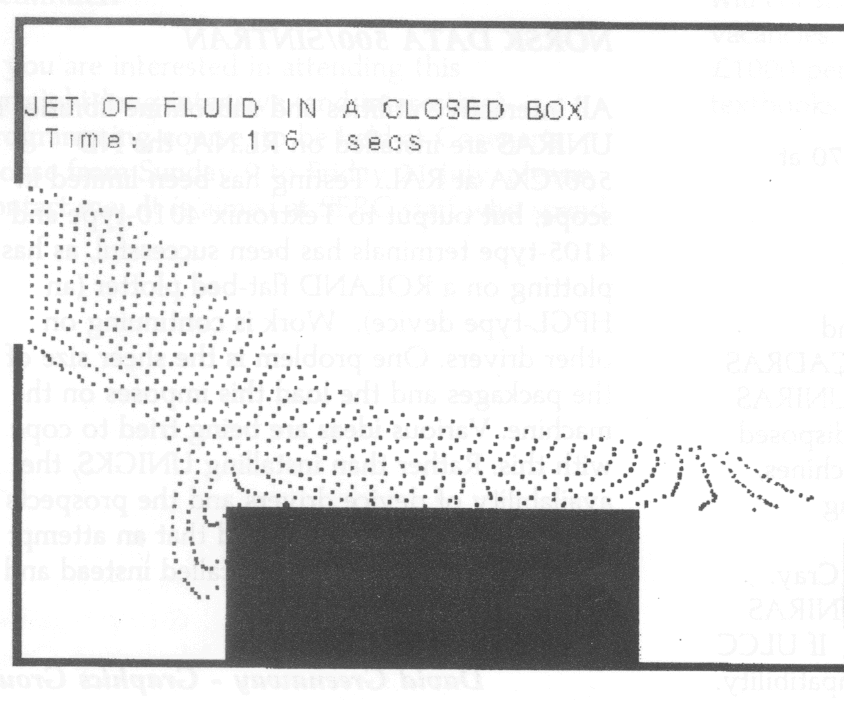

This picture, one frame from a short animation sequence, was produced by the RAL Video Output Facility. It shows the behaviour of a fluid jet in a container with inlet and outlet holes. The Computational Fluid Dynamics program was written by Siebe Jorna, of the Department of Mathematical Sciences, St. Andrews University. Originally developed on an Amstrad PC, the program was run on the Silicon Graphics Iris 3130 at RAL, which produced a Computer Graphics Metafile (CGM). This CGM was sent to the video facility and recorded onto U-Matic tape.