The picture on the front cover is of Atlas the Titan supporting the World. The word atlas is used for a book of maps because a representation of Atlas supporting the heavens was used as a frontispiece to early atlases.

Our picture was not computer generated, but was scanned by an IBM 3117 to provide the reduced version here.

SUP'EUR is a user group which has been formed to provide a forum where European IBM supercomputer users and support staff can exchange their experiences and problems. In this it has similar aims to a group in the US called SUPER! (Supercomputing for University People in Education and Research).

Membership of SUP'EUR is open to any European academic or research institution (including computer centres serving academia or research) with an IBM 3090 computer capable of exploiting vector or parallel processing (i.e. with at least two CPUs or one vector facility). Current membership of SUP'EUR consists of sixteen centres in nine European countries. Close collaboration with SUPER! is ensured by the participation of the Cornell National Supercomputer Centre. The user group is closely linked with the IBM European Academic Supercomputer Initiative and, as part of that initiative, high-speed network links are being established between many of the centres.

The specific mandate of SUP'EUR is to assist supercomputer users in academia and research to make the best use of their hardware and software resources. This goal should be achieved both by encouraging the exchange of relevant information and by pressuring the computer manufacturer to respond positively to common user needs. Typical activities foreseen are exchanges of:

With this last aim in mind, SUP'EUR is setting up links with other bodies with overlapping aims such as SEAS, the European IBM User Group, and SUPER!.

SUP'EUR has met twice in Montpellier in Southern France, in November 1988 and again in March 1989, and is planning to hold a two day conference in Geneva on 21-22 September 1989. This conference is open to all and it is hoped that new SUP'EUR members will be attracted. Before the conference there will be a half day of introductory tutorials on vector and parallel computing and following the conference a half day meeting of SUP'EUR will be held. Further details can be obtained from me.

SUP'EUR recognises that all centres running and users using IBM supercomputers face similar problems and that these are similar to the problems faced by users of other supercomputers. Sharing experiences will save duplication of effort.

The Atlas Cray Users Meeting held at RAL on 25 May 1989 was relatively poorly attended with only about fifteen users present. At least in part this must have been due to an agenda which had no burning issues to discuss but we suspect also that there has been an excess of meetings in the last two months.

After a short welcome by the chairman Professor Pert from York, Roger Evans gave a general introduction. Thanks were offered to those who had contributed to the Cray Science Report which had been generally well received and had given a good impression of the quality of science being carried out on the X-MP/48. The use of the machine continues to increase and in particular file space on the Masstor M860 had been in short supply. A squeeze had been applied on users with large amounts of file space that had been unused for six months or more and this had given enough breathing space while extra file store is being acquired. It is hoped that an upgrade of the M860 from 110GB to 165GB will be possible in a few months and on a slightly longer time scale it is hoped to install a Terabyte automatic cartridge tape store.

With the increased workload, turnaround of large memory jobs has deteriorated. There is little that can be done to improve this apart from purchasing a 16 MWord memory, but users may find a modest improvement in turnaround as well as a reduced job charge if they can make effective use of multitasking.

David Rigby described forthcoming changes in Cray software. COS 1.17 is about to be introduced; this is Cray's last release of COS and all future development will concentrate on UNICOS, although sites running COS will still be supported. COS 1.17 should appear identical to the user although it offers greater control of the machine resources by operators and systems staff. In particular, problems on the DD49 disks can be fixed much sooner since data can be forcibly moved around; with this feature Cray have expressed willingness to reduce Preventive Maintenance times by two hours per fortnight.

UNICOS is being actively evaluated and UNICOS version 5.0 has just arrived at the Atlas Centre. This release is the one that is planned for a user service in 1990 if it proves suitable.

Tim Pett gave a summary of recent developments on the IBM 3090 service particularly where they impact the Cray service. CMS 5.5 has recently been introduced and brings the IBM operating system up to date and more importantly to a point where all parts are now properly supported by IBM. The change has been accompanied by a cleanup of old software and some graphics software has been withdrawn.

The 3090 is about to receive its final upgrade to a 600E with 6 vector facilities as part of the SERC/IBM Joint Study Agreement. About one third of this machine is set aside for "strategic users" who will be expected to make optimal use of the vector and parallel processing of the machine but will in return each have of the order of 1000 hours per year of machine time. Bids to participate in the strategic user programme were invited in the June issue of FLAGSHIP.

Mike Waters described the upgrade of the VAX front end to a cluster configuration of an 8250 "supercomputer gateway" with a VAX BI interface to the Cray capable of transferring data at 2 MBytes per second. The user end of the cluster is a Micro VAX 3600 which is about three times more powerful than the older Micro VAX II and should be able to cope with expected increases in the VAX front end demand. The new configuration has about 2 GByte of disk space and for the first time it has been possible to provide daily and weekly scratch areas for temporary files.

Roy Platon gave an introduction to the new UNIRAS software which has been purchased nationally by the CHEST committee for all UK academic users. The software is capable of producing high quality colour presentation graphics and has particularly good routines for producing the three dimensional cut-away graphs that are used in geography and geology. These could obviously be used in other disciplines where the form of visualisation was appropriate.

Currently there are some inconsistencies in the implementation on the Cray which require support from UNIRAS to resolve. However disposing UNIPICT files to the VAX is possible; they can then be transferred to other VAXes on JANET for local viewing. The next release of UNIRAS promises support for X-windows and interactive working across networks.

An additional agenda item was a presentation by John Smallbone of Intercept Systems of the FORGE software optimising tools. These have been available on a SUN workstation at RAL for about a year but have not been widely used since users do not nowadays expect to have to travel to use a computer! Alan Mayhook of RAL has just completed a port of the FORGE system to run under VM/CMS and this is now available to anyone who has IBM full screen terminal emulation. FORGE works with a knowledge of the factors that hinder vectorisation under the various CFT compilers and will rewrite parts of a user's code using additional input from the user to resolve possible ambiguities. Loops can be split, inner and outer loops switched to improve vector length, and loops can be pulled into subroutines. The current version is officially a beta test but users are welcome to try it provided they feed back any problems to Alan Mayhook.

After lunch Roger Evans gave a brief presentation of the working group set up by the Atlas Centre Supercomputer Committee to consider the use of supercomputers in the 1990s. The group had started from the present position of batch jobs submitted from text only terminals and felt that this was a severely outmoded form of working. Computer users are increasingly finding that they have a reasonable computing resource on their desk in terms of workstations or PCs and will expect that these can be connected in a more or less transparent way to other computers either locally or at national centres.

There are many problems for the interworking of computers from different suppliers but the general move towards UNIX like operating systems will provide a starting platform. In the short term it would be desirable to recommend standardisation on UNIX as an operating system, TCP/IP for networking and X-windows for graphical data interchange. Looking further ahead ISO/OSI standards will become generally supported and three dimensional extensions to X-windows are expected.

The current JANET network does not have adequate bandwidth to support graphical interactive working and there is an urgent need to improve the end to end bandwidth as well as the capacity of the JANET trunk lines. The network executive are planning to install faster lines to several universities over the next 12 months and with the current limitations on the X.25 switches it will be possible to provide dedicated lines to users with particularly large requirements. With this increased data rate to users it is possible that the supercomputers themselves will then be the bottlenecks.

Funding these upgrades is a major problem since a national network of the desired capacity is very expensive but should be regarded as a national resource for all academics and not just the supercomputer users. In particular the graphic arts, medical and geographic sciences could have large requirements for visual data exchange once it becomes generally available. The provision of workstations for individual users is also difficult since they are typically too expensive for departmental funding and too small for research council grant awards. Nevertheless the provision of an up to date working environment must be seen as an important problem if the science is to remain internationally competitive.

There was general agreement that improvements in the working environment were desirable but the funding difficulties were reiterated; in particular there was a fear that the current separation of "computer time only" grants would be lost.

Users who had made a first step on the workstation ladder by using PC compatible machines as terminal emulators expressed the need for better means of file transfer between the PC and various mainframes. The Atlas Centre was urged to support Kermit on the IBM although in reply it was stated that the MOS terminal emulator could perform file transfer.

Users were generally enthusiastic about FORGE but expressed concern about using their scarce resource of CMS accounting units. While no specific guarantees could be given it was promised that users should always have enough CMS resource to be able to work efficiently.

There were some problems with access to the IBM front end over JANET which Atlas staff have promised to investigate. In particular Queens University Belfast have difficulty in getting full screen access and there is a general problem in interrupting screen output.

Some users have a need to dispose Cray files to another ID and this is not currently allowed. It was pointed out that this restriction causes a duplication of files and that lifting it could help to reduce the overall filestore requirements. The lack of security against accidental overwriting of tapes was also mentioned; this will be solved when the new Tape Management System is introduced.

The next user meeting will be a major meeting to discuss the introduction of UNICOS as the only Cray operating system. The meeting will be in October/November and will again be at RAL although this interrupts the promised sequence of meetings on and off the RAL site.

The SERC and the Computer Board jointly organised a meeting for users of the three National Centres and this was held in London on 16th May 1989. The aim of the meeting was to promote liaison between the three national supercomputing centres and their users.

The meeting was opened by Paul Thompson, Head of User Support and Marketing Group at the Rutherford Appleton Laboratory Atlas Centre.

This meeting was, in some respects, a continuation of the regional meetings that had been organised on previous occasions by the SERC and Computer Board. With the establishment of the three national supercomputer centres, there was now a far higher level of cooperation between them in the provision of services. A number of national centre working groups exist dealing with matters such as the common procedures for applying for computing resources, and the definition and implementation of common user interfaces. Some groups had been set up by the Kemp Working Group on the National Centres Common User Interface, others directly by the Centres. Although user committees and groups existed associated with one of the Centres, and user representatives participated in some of the national centre groups, there was not yet a national group for users of the three services. It was felt that this meeting could provide a forum for users and computer centre representatives to discuss matters of common interest. Invitations had been sent to computing centre directors and to grant holders (Class 1 project leaders). It was hoped that this meeting would provide input to the national centres on user views of current services and on future requirements.

The programme was designed to provide a brief update on the developments which were taking place at the three centres and in the networking infrastructure, and give news from the Computer Board.

Dr Brian Davies, Head of Central Computing Department, RAL, spoke about recent developments at the Atlas Centre.

The equipment configuration was briefly described, covering the Cray X-MP/48, IBM 3090, VAX interface to the X-MP, Masstor M860, graphics, workstations and network connections.

Workload on the X-MP service has steadily increased and the forward demand profile shows that the machine capacity has been fully allocated for most of 1989. It is expected that, while some grants are coming to an end and other new grants are being made, the demand will continue to exceed the capacity available. Usage patterns, however, do show some differences from those expected from the demand profile, but overall all available resource is being taken up. A report on the scientific results from the Joint Research Councils Cray X-MP/48 was published in January.

Work on the evaluation of the operating system UNICOS continues, and a user service is planned with UNICOS version 5.0 in 1990 provided this version proves suitable. Demand for filestore, particularly on the Masstor M860, has continued to increase. It is hoped to upgrade the M860 and to install an automated cartridge store later this year.

The equipment providing the VAX/VMS common user interface service to the X-MP has been upgraded to match that at ULCC, namely a cluster configuration of a VAX 8250 supercomputer gateway and a Micro VAX 3600. The 3600 is about three times more powerful than the old Micro VAX II which previously provided the service.

The IBM 3090 is to be upgraded to a 600E with six vector facilities as part of the SERC/IBM Joint Study Agreement. Part of the resources will be allocated for "strategic users" who will be expected to make optimal use of the vector and parallel processing features of the system and be allocated about 1000 CPU hours per annum for the work.

Other topics covered included graphics services including video output, and future needs.

The Atlas Centre Supercomputing Committee established a working group to consider the use of supercomputers in the 1990s. The chairman of the group was Dr Brian Davies and members included Dr Richard Field, Professor Frank Sumner and Dr Bob Cooper.

Today computer users often have on their desk reasonable computing resources in the form of workstations and PCs and are expecting to be able to connect these systems in a more or less transparent way to computers located at local and national centres. The working group identified the problems that need to be addressed to provide a suitable environment for the use of supercomputers from remotely located workstations, and in particular the enhancements that would be needed in networking arrangements and graphical data interchange.

Dr Richard Field, Director of University of London Computer Centre spoke about recent developments at ULCC, which included the installation of a Cray X-MP/28, StorageTek 4400 automated cartridge store, and VAX equipment, and progress towards the common user interfaces for access to the supercomputer services.

As a result of the procurement exercise for an interim replacement of the Cray-1S computers at ULCC, with the primary aim to reduce costs associated with running the equipment, a Cray X-MP/28 was installed at ULCC in January 1989 and brought into user service on 6th February. The X-MP offers an improved throughput of work compared to the Cray-1Ss, the ability to process jobs requiring larger memory, and a closer compatibility with the X-MP at the Atlas Centre, although the ULCC configuration does not include a solid state device (SSD) or on-line tape handling. It also provides a platform for the development of a service on UNICOS, alongside the COS production service. It is expected that an initial UNICOS service will be available during 1990.

The VMS-based access to the COS service (VAX/VMS common user interface) was introduced in October 1988 using the VAX-cluster configuration described above. About 100 users are registered for this service. Work is continuing on the implementation of the IBM system VM/XA SP2, and the associated CMS, to form the VM/CMS-based access to the COS service (VM/XA CMS common user interface). The initial service is expected to be available during the summer.

With the ever-increasing demand for filespace both from the MVS and COS services users, a case was made in 1988 for funding for a new automatic tape facility to replace the Masstor M860 archive. The new tape facilities are based on "3480"-type 18 track tape cartridges ("square tape"). The equipment chosen is the Automated Cartridge System 4400 manufactured by StorageTek of Louisville, Colorado, USA. The largest component of the system, the Library Storage Module (LSM) contains a vision-assisted robot capable of moving to any point on the internal surface of the 12-sided structure to collect one of the 6000 tape cartridges. Each cartridge can hold 200 megabytes of data, so that the LSM represents about 1200 gigabytes of storage compared with the 110 gigabytes of the Masstor M860 archive. The system will be used to introduce an hierarchical storage system, on the MVS service and on the COS service, in such a way that to the user a dataset will logically appear to be on user discs, i.e. the transfer to and from tape will appear almost transparent to users. On the MVS service the IBM product DFHSM (Data Facilities Hierarchical Storage Manager) will manage the new cartridge system, and on the COS service the COS archiving software will handle the automatic dataset migration and recall. Archiving facilities will also be provided from VM/CMS, but the transfers will not be as transparent as for the MVS and COS services.
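The capacity figures quoted above can be checked with a short calculation. What follows is only a minimal sketch in Python, assuming decimal units (1 gigabyte taken as 1000 megabytes); the function name is illustrative and not part of any system described here.

```python
def library_capacity_gb(cartridges: int, mb_per_cartridge: int) -> float:
    """Total capacity in gigabytes, assuming 1 GB = 1000 MB."""
    return cartridges * mb_per_cartridge / 1000


# Figures quoted in the text for the Library Storage Module.
lsm_gb = library_capacity_gb(6000, 200)

# Capacity quoted in the text for the existing Masstor M860 archive.
m860_gb = 110

# How many times larger the new store is than the old one.
ratio = lsm_gb / m860_gb

print(f"LSM capacity: {lsm_gb:.0f} GB, roughly {ratio:.1f} times the M860")
```

On these assumptions a single LSM holds roughly eleven times as much data as the present archive.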

Other topics briefly covered included the introduction of MVS/XA which allows larger region sizes on the MVS service for batch jobs, the installation of an IBM 3745 Communications Controller, the plans for installing a new switch for the London network, and the initial plans to introduce workstation support and to extend graphics services.

Professor Frank Sumner, Director of Manchester Computing Centre spoke about developments at MCC.

A change of name for the Centre had recently occurred. At the beginning of April the name Manchester Computing Centre came into use, to replace University of Manchester Regional Computer Centre. The new name is more appropriate in describing the Centre and its activities.

A procurement exercise for the interim replacement of the CDC Cyber 205 at Manchester had taken place at the same time as that at London, with the same aim to reduce running costs. As a result, the Cyber 205 was replaced by an Amdahl VP1200. This machine is from the same range as that chosen for the local Manchester Computation Intensive Facility, the VP1100.

The equipment configuration at MCC currently includes the two VP systems, Amdahl 5890/300, VAX for the common user interface, Masstor M860 filestore, and network connections.

The VP1200 offers a national supercomputing service. It has a peak theoretical speed of 571 megaflops, compared with 286 for the VP1100, and cycle times of the scalar and vector units of 14 and 7 nanoseconds respectively. The scalar unit is about as powerful as one of the processors in the Amdahl 5890. The VP1200 is estimated to be three times as powerful in throughput terms as the Cyber 205. The operating system is MVS/XA, with extensions to support vector processing added by specific Amdahl software, VP/XA. The VP1100 and VP1200 are intended for batch processing production jobs, although certain housekeeping tasks and some program development tasks can be carried out interactively.
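The relationship between the quoted peak speeds and the vector cycle time can be illustrated with a little arithmetic. The sketch below is in Python and assumes that the 7 nanosecond vector cycle applies to both VP models, which the text does not state explicitly; the function name is purely illustrative.

```python
def results_per_cycle(peak_mflops: float, cycle_ns: float) -> float:
    """Floating point results per clock cycle implied by a peak rate."""
    cycles_per_second = 1e9 / cycle_ns
    return peak_mflops * 1e6 / cycles_per_second


# Peak figures and vector cycle time as quoted in the text.
# Assumption: the same 7 ns vector cycle is used for both machines.
vp1200 = results_per_cycle(571, 7)
vp1100 = results_per_cycle(286, 7)

print(f"VP1200: about {vp1200:.1f} results per vector cycle")
print(f"VP1100: about {vp1100:.1f} results per vector cycle")
```

Under that assumption the peak figures correspond to about four and about two floating point results per vector cycle respectively. Peak rates of this kind are theoretical maxima and say little about the speed of real codes.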

The Amdahl 5890/300 supports the VM/CMS screen-based front-end environment for access to the VPs and will also support the common user interface facilities. The VP systems themselves provide a screen based terminal environment for job submission and viewing of job output. The interface is TSO and ISPF/PDF.

In addition to the excellent tools for optimisation and vectorisation of programs, a range of applications software is already implemented for the user service and more packages are in various stages of acquisition and installation.

Statistics for the first 19 weeks of 1989 on CPU usage, total and vector, show that overall about 29% of the CPU usage is vector. It is expected that this proportion will increase as users gain more experience in optimising codes for the VP.

As at both other national centres, a VMS-based service for access to the VP is available. Take-up of this service is at present fairly low.

It is hoped to enhance the Masstor M860 in the near future and so provide a larger filestore for users.

After lunch Dr Ted Herbert, Secretary to the Computer Board, gave a talk summarising recent developments in Computer Board support for national centres. The Board was concerned about the proportion of its total grant absorbed by the two national centres at London and Manchester. The Board had therefore invited Mr Miller and Dr Booth to establish a working group to review the operations of the national centres and to report back to the Board.

After consultation with the national centres, the group had been given terms of reference relating to: the definition of services; the recommendation of appropriate levels of funding; the comparison of resource levels; the identification of local service components; the apportionment of responsibility among funding bodies; the definition of common three-year plans; the establishment of performance monitoring and audit procedures; the identification of special financial support; and the preparation of an interim report by March 1989.

The working party had put forward three major actions as a result of its deliberations: the need for a sub-committee to allow more extensive consideration of estimates and plans submitted by the national centres; the need to debate and advise the efficiency review on the question of apportionment of costs vis a vis sources of funding; and the need to implement performance monitoring and audit procedures.

The allocation of resources on the front-end Amdahl systems was also a matter that needed to be examined. The initial split at ULCC had been 10% of the front end for access to the supercomputer, 30% for local London use and 60% for national use. At Manchester the proportions were respectively 20%, 40% and 40%. It appeared that in practice the front-ends supported largely class 3 local work and it had to be established to what extent these facilities were necessary as part of the provision of national services.

An increasing source of concern was the question of the extraterritorial constraints imposed by some American suppliers. This had to be resisted since they were nothing less than a basic infringement of United Kingdom sovereignty. It would be necessary to consider with the CCTA and DTI how to develop a coordinated UK response. A successful agreement had been negotiated over the use of supercomputers at the national centres but increasingly the American authorities were seeking to extend proposed provisions to machines of lower power and indeed to the use of operating systems and applications software.

The Common User Interface Group under Dr Peter Kemp had now completed its work. Common access paths to each of the three national centres had been established on VAX front-ends. Common VM/CMS services were approaching fruition, although delays had occurred at ULCC and MCC due to late delivery of some of the software. The applicability of MVS at ULCC was being considered.

Overall, the Computer Board was anxious to develop close liaison with the UFC, the PCFC and the research councils and to maintain an appropriate balance of support for local installations, national centres and networks, taking into account the funding provided by the other Higher Education bodies.

The last presentation was by Dr Bob Cooper, Director of Networking and Head of the Joint Network Team.

A very wide range of activities and developments are being undertaken at present and in the time available it was only possible to give a brief description of a cross section of these.

In the area of network performance upgrades three components were discussed. The first was enhancements to JANET (named JANET II) whereby 2 megabit per second links were to be installed at a suggested rate of about seven to ten Megastreams a year. This programme of work was planned to begin immediately. The second was a need to improve local area networks on campuses, and a five year programme to install 100 megabit per second high performance backbone LANs was planned to begin in 1990. The third was the development of a Super JANET. The need for very high bandwidth connections was recognised to satisfy the requirements for the supercomputer environment for the 1990s and also the demands from other parts of the community for visualisation and graphics applications which require the interchange of large volumes of visual data. The establishment of Super JANET to provide a 140 megabit per second wide area network would be very expensive but should be targeted for the future.
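To give a feel for what these line speeds mean for visual data exchange, the sketch below estimates idealised transfer times in Python. The 1 megabyte image size is a hypothetical figure chosen only for illustration, and protocol overheads and line sharing are ignored, so real transfers would be slower.

```python
def transfer_seconds(size_megabytes: float, link_megabits_per_s: float) -> float:
    """Idealised transfer time: 8 bits per byte, no protocol overhead."""
    return size_megabytes * 8 / link_megabits_per_s


IMAGE_MB = 1.0  # hypothetical image size, not a figure from the meeting

# Link speeds as quoted in the text, in megabits per second.
links = {
    "JANET II link": 2.0,
    "campus backbone LAN": 100.0,
    "Super JANET": 140.0,
}

for name, speed in links.items():
    seconds = transfer_seconds(IMAGE_MB, speed)
    print(f"{name:20s} {speed:6.0f} Mbit/s  {seconds:7.3f} s per image")
```

On these assumptions a single image takes about four seconds over a 2 megabit per second link but well under a tenth of a second at the higher speeds, which is the difference between occasional picture transfer and interactive graphical working.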

The UK academic community's transition from existing, mainly UK-specific, Coloured Book protocols to open system services and protocols based on ISO standards is expected to be on-going for a number of years to come. The open systems networking environment that has developed in the UK academic community is complex and some 130 sites are interconnected via the wide area JANET X.25 (1980) switching service. There are gateways to PSS for national and international X.25 access. A number of projects are already in place dealing with the very many different aspects of achieving the transition whilst maintaining continuity of service between equipment using the old protocol set and equipment using the new protocol set, via appropriate converters.

The Name Registration Scheme (NRS) central database contains information on available services and associated routing information. It is already widely used within the community. Projects associated with the NRS include the establishment of name servers and the development and expansion of directory services.

Electronic mail services are being used by an increasing number of people within the academic and other communities. International e-mail services are widely used by academics throughout the world in collaborative projects. E-mail services will continue to be improved so that, for example, address information that a user needs to provide will become simplified. A JANET X.400 mail gateway between IXI, the projected common European network, and the USA is being established.

Developments in Europe were also described. For example, in the development of IXI the switches at Amsterdam and Bern will have a trunk connection between them of 64 kilobits per second initially, and during the pilot phase be upgraded to 2 megabits per second. There was a need to ensure that the facilities offered through EARN would continue to be available within the IXI environment so that users could rely upon a continuity of service.

A discussion session followed these presentations and covered topics such as the availability and user perception of UNIX based systems on workstations to supercomputers; the continuing need for centralised large scale computation facilities; the impact of workstation provision and performance on such demand; and specialisation of national centres in the provision of complementary services. Comments on the meeting and suggested topics for future meetings are welcomed and should be communicated to the national centres through the normal channels. Information about the services from a particular national centre is published in the centre's newsletter and on networking in Network News published by the JNT and Network Executive.

On the first of September Paul Thompson will be moving to a new post at RAL: heading a group which will bring into service for the Laboratory a large suite of financial management software packages obtained from Management Sciences America (MSA). In his new role he will become a member of the RAL Administration Department.

Paul has been a member of RAL Central Computing Department and its predecessors for over twenty years and there can be few users of the Department's facilities and services who will not have experienced and benefitted from his energetic and down to earth approach to computing issues. He came into computing via bubble chamber work, computer operations and then into user support, and for the past five years he has been Head of User Support and Marketing.

I would like to take this opportunity to thank him on behalf of Central Computing Department for his innumerable contributions to the Department's work and particularly his determination to make sure that the views and interests of users remain high on our list of priorities.

His colleagues in CCD wish him well in his new post, which promises to have at least as many challenges as the one he is leaving.

Gaussian 86 is a connected system of programs for performing semi empirical and ab initio molecular orbital (MO) calculations.

Gaussian 86 has been designed with the needs of the user in mind. Thus, all of the standard input is free-format and mnemonic. Reasonable defaults for input data have been provided and the output is intended to be self-explanatory. Mechanisms are available for sophisticated users to override defaults or interface their own code to the Gaussian system.

The capabilities of the Gaussian 86 system include:

Gaussian 86 is available on the Atlas Cray. The program is called GAUS86 so a simple COS JOB like

    GAUS86
    /EOF
    data cards

will read the data from $IN.

We have a few copies of the (very thick) Gaussian 86 manual so users not familiar with its use should contact the Documentation Officer (MANUALS@RL) to obtain a copy.

Since the hardware upgrade in May, we have had six central processing units (CPUs) on the IBM 3090, each with a vector facility. This now provides the opportunity to execute a single job across multiple processors, potentially reducing a program's elapsed time by a factor of up to six if the computationally intensive parts of the code can be divided up to execute in parallel.
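The factor of six is an upper limit that is reached only if all of the work can be divided. A rough estimate for other cases can be obtained from the standard Amdahl's law formula, sketched here in Python. The parallel fractions used are hypothetical examples rather than measurements, and the formula ignores the overheads of creating and synchronising tasks.

```python
def speedup(parallel_fraction: float, processors: int) -> float:
    """Amdahl's law: ideal speedup when only part of a job runs in parallel."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / processors)


# Hypothetical fractions of the elapsed time that can be run in parallel.
for fraction in (0.5, 0.9, 0.99, 1.0):
    print(f"{fraction:4.0%} parallel on 6 CPUs: speedup {speedup(fraction, 6):.2f}")
```

For example, a code in which 90% of the elapsed time can be spread over six processors would run at best about four times faster, not six, so it is worth finding out how much of a program is genuinely parallel before investing effort in converting it.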

We have IBM's Parallel FORTRAN to enable you to write and compile programs able to exploit this facility. The Vector Facilities and Parallel FORTRAN may be used together to gain the maximum performance from a code.

IBM's Parallel FORTRAN is based on VS FORTRAN Version 2 release 1.1 (the CMS FORTVS2 command invokes VS FORTRAN Version 2 release 3.0), but includes extra language constructs, subroutine calls, compiler directives and compiler options for parallel processing.

To execute a FORTRAN job using parallel processors, the job must be divided into multiple tasks. Each task is executed on a FORTRAN processor whenever it has work to do. The number of FORTRAN processors is defined at run time; normally, this would be a number between one and six. Each of the FORTRAN processors is mapped onto a virtual CPU in your virtual machine. If you request more than one FORTRAN processor, a virtual CPU is used for each FORTRAN processor and the original CPU is used by CMS. The control program (CP) schedules each virtual CPU onto the real CPUs.

When developing a program to execute using multiple processors, you have the choice of creating and managing tasks implicitly using the autoparallel option of the compiler or explicitly using the parallel language extensions and library services. Here is an outline of the facilities provided by Parallel FORTRAN.

The compiler option PARALLEL used with the sub-option AUTOMATIC requests the compiler to analyse DO loops and generate parallel code if there are no computational dependencies between iterations and if it considers that parallel code will be more efficient than serial code. The use of automatic parallel code generation is simple and keeps your FORTRAN code portable. There are also compiler directives provided to supply the compiler with more information.

The following language extensions are provided to create tasks explicitly and to assign work, in the form of subroutines, to tasks.

The SCHEDULE and DISPATCH statements assign a named subroutine to a previously originated task for asynchronous execution. Arguments may be passed to the subroutine and specified COMMON areas can be shared or copied between tasks.
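The pattern of handing a named subroutine to a task for asynchronous execution can be sketched in Python as an analogy. This is not Parallel FORTRAN syntax: the thread pool stands in for the originated tasks, `submit` plays the role of assigning a subroutine with its arguments, and the names `partial_sum` and `parallel_sum` are hypothetical. Python threads do not give a real speed-up for arithmetic of this kind, so the sketch shows only the structure of the program.

```python
from concurrent.futures import ThreadPoolExecutor


def partial_sum(data, start, stop):
    """The 'subroutine' given to a task: sum one slice of the data."""
    return sum(data[start:stop])


def parallel_sum(data, n_workers=6):
    """Split the data into chunks and hand each chunk to a worker."""
    # Ceiling division; max() avoids a zero chunk size for empty input.
    chunk = max(1, (len(data) + n_workers - 1) // n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        # Each submit() returns at once; the work proceeds asynchronously.
        futures = [
            pool.submit(partial_sum, data, lo, min(lo + chunk, len(data)))
            for lo in range(0, len(data), chunk)
        ]
        # Collect the results once every piece of work has finished.
        return sum(future.result() for future in futures)


print(parallel_sum(list(range(1, 101))))
```

The shared `data` list corresponds loosely to a COMMON area shared between tasks; because each worker only reads it, no further synchronisation is needed in this sketch.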

Alternatively, parallel loops may be explicitly defined. Each iteration of the DO loop must be computationally independent of the other iterations and hence be capable of being executed in parallel with the other iterations.
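One informal way to check whether the iterations of a loop are computationally independent is to ask whether the answer changes when they are run in a different order. The Python sketch below, with hypothetical function names and data, runs two loops forwards and backwards: the first writes each element from its own input only, while the second needs the value produced by the previous iteration and so could not safely be made a parallel loop as written.

```python
def scale(b, order):
    """Independent iterations: a[i] depends only on b[i]."""
    a = [0.0] * len(b)
    for i in order:
        a[i] = 2.0 * b[i]
    return a


def running_total(b, order):
    """Dependent iterations: a[i] needs a[i-1] from the previous iteration."""
    a = [0.0] * len(b)
    for i in order:
        previous = a[i - 1] if i > 0 else 0.0
        a[i] = previous + b[i]
    return a


b = [1.0, 2.0, 3.0, 4.0]
forward = list(range(len(b)))
backward = list(reversed(forward))

# Same answer in either order: safe to execute iterations in parallel.
print(scale(b, forward) == scale(b, backward))
# Different answers: the iterations carry a dependence.
print(running_total(b, forward) == running_total(b, backward))
```

Passing this reordering test is suggestive rather than conclusive, but failing it, as the second loop does, shows that the loop must be restructured before its iterations can run side by side.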

Can you remember the original Atlas I? (Do you really admit to being that old?) Did you run programs on this machine, with its card and tape readers and punches? Then read on...

The decision was taken to buy a Large Atlas computer on which a broad-based computing laboratory could be set up. This laboratory (the Atlas Computer Laboratory) was built and staffed during the following three years and the Atlas I (built by Ferranti, later taken over by ICT - who became ICL in 1967) was delivered in May 1964. A regular computing service was opened in October 1964 - almost twenty-five years ago.

We want to make the next issue of FLAGSHIP a special Silver Anniversary issue to mark this event. I will be delving into my box of memorabilia to find gems of historical interest to pass on - and I would love to hear your stories of the Atlas I computer. If you were one of the early users and have an amusing or interesting story - or perhaps a horror story - please contact me soon!

It is very interesting to note the changes in computing over this period. To whet your appetite, here is part of the specification of the Atlas I:

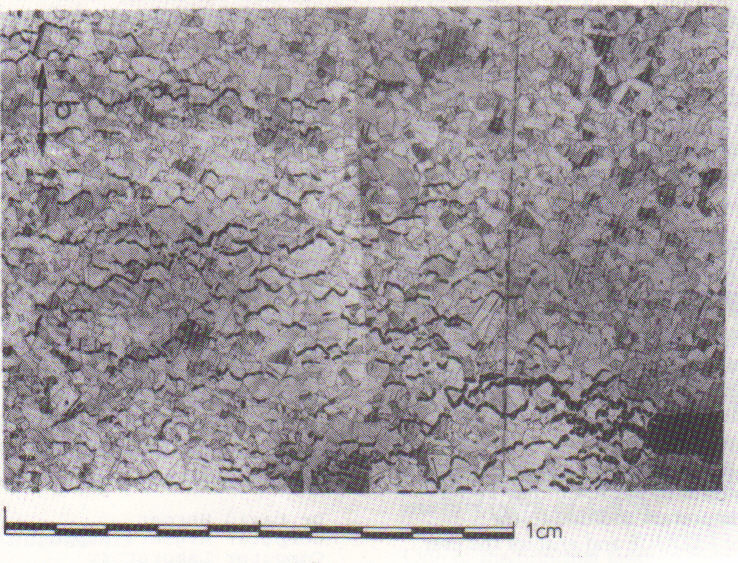

The design and manufacture group within the Department of Mechanical Engineering at the University of Sheffield is working on the modelling of the deformation and damage growth behaviour of high performance engineering materials and components. The components of interest are to be found in high temperature plant and machinery such as gas and steam turbines, heat exchangers, boilers and pressure vessels, nuclear reactor components, and aerospace engines and vehicles. The components are subjected to combined thermal and mechanical loadings at temperatures which can be in excess of one half of the absolute melting temperature of the material. These conditions produce time-dependent deformation and degradation in the material. Damage forms through the nucleation, growth and coalescence of microscopic defects over a long period of time, and results in ultimate failure of the component. An example of an evolved damage field is shown in the micrograph of Figure 1, where a plate containing a narrow slit has been subjected to a remote uniform tension σ.

Professor David Hayhurst heads a research group which has been modelling the development of such damage fields and a new branch of mechanics has been established known as Continuum Damage Mechanics (CDM). The techniques of CDM have been well developed for high temperature metals and their alloys, but groups around the world are now utilising these techniques to study the behaviour of engineering components fabricated in high performance materials.

The design methodology usually employed for this class of materials and components is now summarised. Basic laboratory tests are carried out on samples of materials under the relevant conditions of temperature and stress. The data produced are used to create appropriate computer data bases which can be accessed by designers. Once the domain of operating conditions for the engineering component in question is known, the materials models which reflect the physics of the deformation and damage formation processes can be selected. These models, or constitutive equations, can then be fitted to appropriate data collected from the computer data base. Very often, in an attempt to simplify the design task, engineers make use of phenomenological models which are usually based on either one or two state variables. If this route is followed, it is essential to verify that there is a one-to-one relationship between the physically based models and the state variable representations. The constitutive equations are then used to interpolate and to extrapolate the deformation and damage growth behaviour of the materials. They are usually used together with computational techniques, of varying levels of complexity, to carry out analyses of component behaviour in detailed design.
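To give a flavour of a one-state-variable model, the sketch below integrates a creep damage law of the Kachanov-Rabotnov type at constant stress. This form is an assumption chosen for illustration and is not necessarily the set of equations used at Sheffield: the strain rate is A (σ/(1-ω))^n and the damage rate is B σ^χ / (1-ω)^φ, where ω runs from 0 (undamaged) towards 1 (failure). All constants are arbitrary, dimensionless placeholders rather than fitted material data.

```python
def integrate_creep_damage(sigma, A=1.0, n=3.0, B=1.0, chi=3.0, phi=3.0,
                           omega_stop=0.9, dt=1.0e-5):
    """Explicit Euler integration of strain and damage at constant stress.

    Returns (time, strain, damage) when the damage first reaches omega_stop.
    Constants are illustrative placeholders, not material data.
    """
    t = strain = omega = 0.0
    while omega < omega_stop:
        strain_rate = A * (sigma / (1.0 - omega)) ** n
        omega_rate = B * sigma ** chi / (1.0 - omega) ** phi
        strain += dt * strain_rate
        omega += dt * omega_rate
        t += dt
    return t, strain, omega


def analytic_time_to_damage(sigma, omega, B=1.0, chi=3.0, phi=3.0):
    """Closed-form time to reach damage omega, from integrating the damage law."""
    return (1.0 - (1.0 - omega) ** (phi + 1.0)) / ((phi + 1.0) * B * sigma ** chi)


t_num, strain, omega = integrate_creep_damage(sigma=1.0)
t_exact = analytic_time_to_damage(1.0, 0.9)
print(f"numerical time {t_num:.5f}, closed form {t_exact:.5f}")
```

The damage rate grows without limit as ω approaches 1, so a fixed time step that is adequate early in life becomes too coarse near failure. Even this small model therefore hints at why the full component calculations call for careful numerical treatment.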

The level of computational difficulty is dependent upon the geometry of the component and the applied loading and thermal boundary conditions. Usually both of these change with time, and the computational problem often involves the solution of a boundary value problem or an initial value problem or a combination of both. Classical techniques cannot be applied to these problems directly, since they require modification to fit within the domain of Continuum Damage Mechanics. These problems are often both geometrically and materially non-linear, with the stiffness of the governing equations changing from modest amounts to severe levels. The overall requirement of high plant-system thermal efficiency generates the need for the design engineer to model and simulate the performance of the component, and in order to make professional judgements there is a need to access information on the change of scalar and tensor fields in time or in load space. This places stress upon the need to provide high quality, user-friendly graphics and animation packages.

The aim of the Sheffield work is to move towards a situation where the behaviour of engineering components, which limit the overall performance, can be predicted using computers from simple laboratory test data; that is the route followed by most design codes and procedures. Such prediction techniques can be used in design to satisfy the design requirements optimally, instead of following an expensive prototype development route, and to shorten lead times greatly.

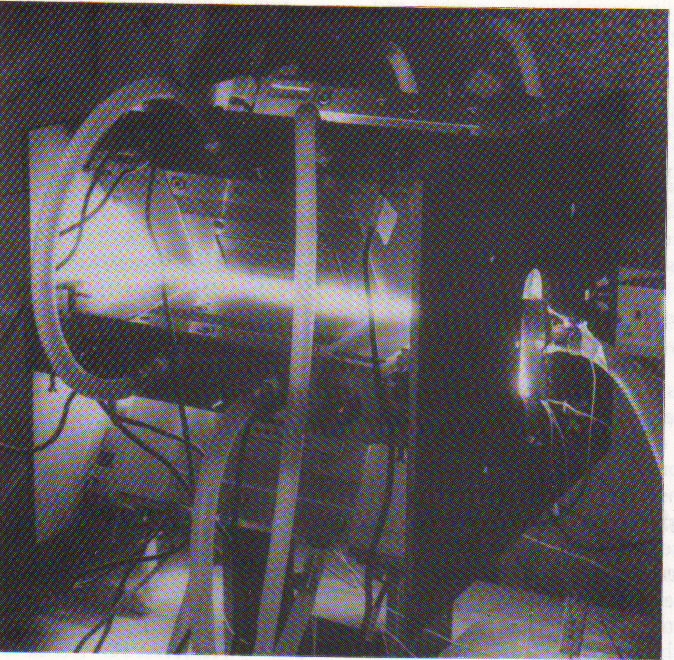

The effectiveness of the computational modelling work carried out at Sheffield is judged against the results of laboratory tests carried out on model components under realistic service conditions. The difference between the measured and the computed performance can be assessed and used, if necessary, to develop improved models and computational techniques. An example of this work is shown in Figure 2, where a high performance component is subjected to a history of thermal shock. The component is heated by a bank of six high-response quartz lamps while regions of the component are chilled using high pressure water cooling.

The computing requirements of this research are large storage capacity coupled with high processing speed. These demands can seldom be met by local computing facilities. The research described here has been made possible by access to the facilities at the Rutherford Appleton Laboratory. The computational work has been carried out by remote batch processing over relatively slow national communications networks. Further improvements in computational speed, in communications, and in graphics and animation facilities will make the results of the research available in the form of tools and aids for use in the design of critical engineering components and processes involving high performance materials.

The forward scenario is one where advanced computing power, in the form of vector and parallel processing, is providing the ability to solve economically design problems which were hitherto insoluble. This in turn will require material models which are mathematically simple yet accurately reflect the governing physics of the processes of deformation and degeneration. The next generation of engineering materials will be manufactured using unconventional techniques and will have highly non-linear behaviour. Both the manufacturing route of materials and the design of components will rely increasingly on computational modelling to enable the achievement of optimum design for manufacture.

The work at Sheffield is aimed at providing the designer with computational-numerical tools which will enable analysis and simulation of component performance and, in addition, will allow sufficient human interaction to permit the achievement of optimal design.