Traditionally, at this time of year, our computing newsletters have been slim issues because so many people have been away on holiday. This year is different, thanks to the effort and enthusiasm of Jacky Hutchinson and all the people who sent her their reminiscences.

Although I missed Atlas 1 arriving, I was one of its operators - and cannot resist joining in the nostalgia. I remember days spent fetching and loading armfuls of Ampex tapes which weighed seven pounds each; they were attached by plastic buckles to leader tapes on the decks. If there were a power failure the decks would stretch the tapes, unless we were fast enough opening the tape deck doors. I learnt how to read at a glance the monochrome TV monitor which showed the Control Console - but could not understand the real thing in full colour!

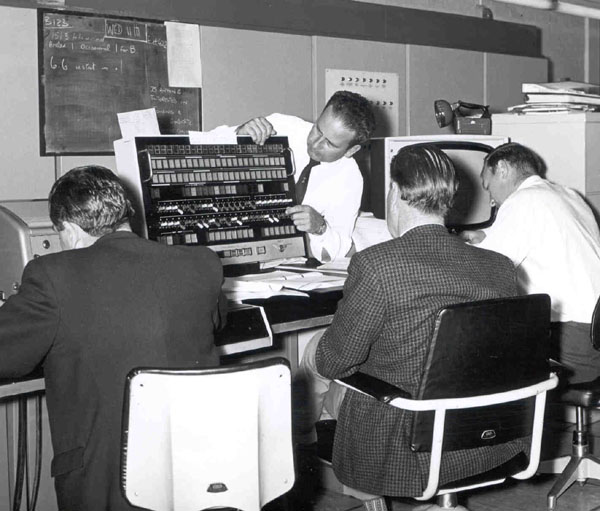

There were so many people around the machine room then; up to eight operators and four engineers per shift, with extra staff during day shift and the punch room girls outside the glass partition - it was like nineteenth century harvesting, compared with the solitary tractor of today.

In the May 1989 issue of FLAGSHIP I invited bids against the Strategic User element of the Study Agreement. From a substantial field of applications those listed below were accepted at a selection meeting chaired by Professor P G Burke on July 13.

| Name | Affiliation | Project | Total |
|---|---|---|---|
| R D Amos, N C Handy | Chemical Laboratory Cambridge | Continued optimisation of Quantum Chemistry Code CADPAC | 500+ |
| I M Barbour | Physics & Astronomy Glasgow | Extend existing studies to larger lattices | 2000 |
| K Morgan | Aeronautics Imperial College | Solution of compressible high speed flows using unstructured mesh techniques | 1900 |
| M J Seaton | Physics & Astronomy Imperial College | Calculating accurate opacities for stellar envelopes | 275 |
| W G Richards | Physical Chemistry Oxford | Design peptide inhibitors of enzymes which have range of medical possibilities | 2000 |
| A D Gosman | Mechanical Eng Imperial College | Large scale computational fluid dynamics | 2000 |
| B A Bilby | Mech Process Eng Sheffield | Fundamental understanding of failure processes | 1725 |
| R Catlow | Royal Institution | Realistic modelling studies of oxide and zeolite heterogeneous catalysts | 2400 |
| J C Hunt | Applied Maths Cambridge | Pdf of 2-particle separation & Delta.-P(A,t) | 1000 |
| I H Hillier | Chemistry Manchester | Use of accurate molecular modelling methods to study systems with reference to drug design | 1800 |
| D Hayhurst | Mechanical Eng Sheffield | Design/analysis techniques, developed, use of models material performance under high temperature complex stress conditions | 2710 |
| J L Finney | Crystallography Birkbeck | Perform computer simulation calculations of aqueous systems | 1300 |
| T S Fuller-Rowell | Physics & Astronomy UCL | Numerical model of stratosphere mesosphere and thermosphere | 1320 |
| M F Guest | Daresbury | Develop extend GAMESS program to exploit IBM Architectural features | 100 |
| J Gerratt | Theoretical Chem Bristol | Determination of many excited electronic states of molecules and ions and interactions between them, using spin-coupled VB method | 468 |

Forge is an optimisation tool designed to help you improve the performance of Fortran programs to be run on the Cray. It was originally written for a UNIX environment, but it has now been ported to CMS and is available to anyone accessing the RAL CMS system from a 3270 or 3270 emulator in full screen mode.

Forge does not aim to produce the best performance possible. To do that you usually have to make substantial modifications to the logic of the program. That takes a considerable amount of time and effort, and carries the risk of introducing serious errors into the program. Often this is not necessary. In many cases small changes can be made to critical parts of the program which result in enough of the code becoming vectorisable to give a significant increase in performance. The purpose of Forge is to identify these situations, and to automate the process of changing the code so as to avoid the errors that are usually introduced by manual modifications. Its objective therefore is to produce a significant improvement in performance within a short period of time and with relatively little effort.

The first thing you have to do is to tell Forge which processor your program will run on and which compiler will be used to compile it. These two factors affect vectorisation and Forge needs this information when deciding if the code can be vectorised. Secondly, you need to identify which parts of the code need restructuring. Only heavily used parts are worth considering. Forge can insert subroutine calls into your code at strategic places to find out how often subroutines and DO loops are entered, and how long they take to execute. You can then run the program using realistic data and find out how it performs in practice; you may need to use more than one set of data to cover all aspects of the program. This timing step is optional: Forge will process the program if this data is not available, but it is useful to you to know how the program performs, and it is better to give Forge as much information as possible.

Now you have collected all the necessary information you can tell Forge which routine to process. Forge will parse the routine in a similar manner to the compiler and ask which loop to restructure. It will then analyse the loop to determine which statements will prevent the loop from being vectorised. While doing so it will prompt you for additional information which is useful in the analysis but which cannot be deduced from the code. Thus Forge bases its actions on your knowledge of the program and its performance in real cases as well as on the code. Forge can then suggest changes which will improve performance. Its primary strategy is to split DO loops into sections, separating parts which can be vectorised from the parts which cannot, but there are other things it can do as well. Where there are nested DO loops it may suggest inverting the order of the loops in order to increase the length of vectors. If a loop contains temporary scalars these may be converted to vectors to ensure they are evaluated simultaneously. Another possible action Forge can take where there is a subroutine call within a loop is to move the loop inside the subroutine, which will reduce overheads and provide new opportunities for vectorisation.
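Loop splitting, the primary strategy mentioned above, can be illustrated as follows. This is a minimal sketch in Python rather than Fortran, with hypothetical function names; it is not output from Forge. The original loop mixes a running sum, which depends on the previous iteration, with work that is independent from one iteration to the next. After splitting, the second loop has no dependence between iterations and is the part a vectorising compiler could handle.

```python
def fused(a, b, c):
    """Original form: one loop doing both kinds of work."""
    n = len(a)
    total = [0.0] * n
    scaled = [0.0] * n
    running = 0.0
    for i in range(n):
        running = running + a[i]        # needs the previous iteration
        total[i] = running
        scaled[i] = b[i] * c[i] + 1.0   # independent of other iterations
    return total, scaled


def split(a, b, c):
    """Restructured form: the same work as two separate loops."""
    n = len(a)

    # Loop 1: the recurrence, which has to run in order.
    total = [0.0] * n
    running = 0.0
    for i in range(n):
        running = running + a[i]
        total[i] = running

    # Loop 2: no iteration depends on another, so it could be vectorised.
    scaled = [b[i] * c[i] + 1.0 for i in range(n)]
    return total, scaled
```

Both forms perform the same arithmetic in the same order, so they return identical results; only the grouping of the statements changes.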

This process is entirely under your control. Forge only suggests actions that you might take. It will never change any code unless you say so. Whenever it changes a routine it always keeps the old version so you can go back a step if any changes are not successful. If you wish, Forge will also keep an audit trail of all the decisions made when changing a routine. This gives you the ability to go back and review what you did, and to change to a different tack if necessary.

Forge can be used from any type of 3270. It operates in full screen mode and splits the screen into windows to display its information. Therefore the more lines your screen supports the better. You access Forge by the CMS command GIME FORGE. This will create a link to the Forge disk, which contains all the Forge files and also a printable version of a Forge Users' Guide.

Forge operates by asking you questions, or asking you to select from a menu of possible actions. From the main menu you can choose a number of functions instead of restructuring code. One option is to browse through a database of information on Cray processors and the general theory of vectorisation. Another option is to perform file maintenance operations such as deleting unused files, preparing code for collecting timing data, collecting together modified files for test runs, and so on. A third tells you how to use Forge, although this hasn't yet been updated to include details of the CMS version. When you select the option of restructuring code you are guided through the process by the questions Forge asks. Online help is available in many situations in case you are in doubt. In general the interface is quite friendly, and the best way to learn about Forge is to read the Users' Guide and then try it out.

The following article has been reprinted, with permission, from CERN Computer Newsletter No 194.

All the LEP collaborations have finally discovered what many other physicists have known for some time: that RNDM is not good enough to use for a serious Monte Carlo. This was brought home most strikingly when one group found the same simulated events appearing twice, and another prepared a paper showing that the probability of getting the same events again is not at all negligible, and proposed ways to get around it.

In fact, the situation is potentially much more serious than even what these collaborations have discovered. It is obvious that getting the same events more than once is not good, but there are more subtle effects which are much harder to predict and potentially just as bad for any Monte Carlo calculation where one expects to get quantitatively correct results. It is now clear that, whereas the method of RNDM was acceptable in the days when one only needed a few tens of thousands of random numbers per calculation, it is inadequate for many of the applications now being performed.

The good news is that we no longer have to use RNDM. Much better generators are now known which are almost as fast and in addition portable and have other highly desirable features not available in RNDM, as described below.

The most serious bad properties of RNDM are listed here. The new generators proposed below solve all these problems.

For this reason it has been proposed that the standard form for a random number generator should be a subroutine which returns a vector of random numbers at each call:

    REAL VEC(LENGTH)
    CALL RANxxx(VEC, LENGTH)

or, if you only want one at a time, simply:

    CALL RANxxx(RANUM, 1)

Tests on several popular computers show that a subroutine call is not slower than a function call when one number is wanted, and is faster when several are needed at one time.

Two new algorithms have been proposed which solve, in different ways, all of the above problems: one is due to G. Marsaglia and A. Zaman of Florida State University in Tallahassee and the other is given by P. L'Ecuyer of McGill University in Montreal. A general discussion of the current situation in pseudorandom number generation, with descriptions and listings of these basic algorithms, is given in CERN report DD/88/22, by F. James, to be published in Computer Physics Communications.

One important feature of these algorithms is that they can produce independent sequences which can be used simultaneously. This has been implemented by having two styles of calling sequence for the new generators. Subroutine RANMAR (the Marsaglia algorithm) is called with parameters VEC and LENGTH as above whereas subroutines RMMAR (also the Marsaglia algorithm) and RANECU (the L'Ecuyer algorithm) have a third argument ISEQ which specifies which of several possible sequences of pseudorandom numbers one wishes used. This can be very useful for simulation of complex events. For instance, imagine a big detector composed of several different independent elements. For every element of the detector, a different ISEQ can be used (these do not need to be different initially). The different configurations of one element can then be tested without perturbing the random number sequence, and so the response, of the rest of the detector. A FORTRAN common block of sequences can be an elegant implementation of this technique. Moreover, in case of parallel processing, only one copy of the routine would be needed, provided that every task calls the routine with a different ISEQ (this could be the task number).
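The detector example can be sketched as below. This is an illustration in Python, not the library code: the class `SequenceBank` and its methods are hypothetical, and Python's standard generator is used as a stand-in, so the sequences here are independent only in the sense of having separate state, without the non-overlap guarantee of the algorithms described above. Unlike the library routines, the sketch raises an error if a sequence is used before it has been initialised.

```python
import random


class SequenceBank:
    """Hypothetical stand-in for a generator called with an ISEQ argument."""

    def __init__(self):
        self._streams = {}

    def init_sequence(self, iseq, seed):
        """Initialise sequence `iseq`; each sequence keeps its own state."""
        self._streams[iseq] = random.Random(seed)

    def fill(self, length, iseq):
        """Return `length` uniform numbers drawn from sequence `iseq`."""
        if iseq not in self._streams:
            raise KeyError("sequence %r has not been initialised" % (iseq,))
        rng = self._streams[iseq]
        return [rng.random() for _ in range(length)]


def simulate(tracker_draws):
    """Two detector elements, each drawing from its own sequence."""
    bank = SequenceBank()
    bank.init_sequence(1, seed=1001)   # sequence 1: tracker (assumed name)
    bank.init_sequence(2, seed=2002)   # sequence 2: calorimeter (assumed name)
    bank.fill(tracker_draws, iseq=1)   # tracker configuration under test
    return bank.fill(5, iseq=2)        # calorimeter response
```

Changing `tracker_draws` changes how many numbers the tracker consumes, but `simulate` returns the same calorimeter numbers every time, which is the property the text describes.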

In both RMMAR and RANECU each of the independent sequences has to be initialized by the user, otherwise the result is unpredictable. By default the routine contains buffer space to handle only one sequence. If more are needed, a bigger buffer should be allocated in the main program by defining the appropriate common block with the appropriate size.

For V113 RANMAR and RMMAR one seed is needed to initialize a random number sequence, but it is a one way initialization. Each of the 900,000,000 starting seeds gives rise to an independent sequence which will not overlap a sequence started with a different seed, but the seed cannot be output and used to restart the sequence at a given point. In order to restart the generation, the number of random numbers generated is recorded by the generator. The sequence is restarted by either generating this many random numbers or saving and restoring a vector of 103 words. The number of generations is stored in two integer variables as the period is bigger than the maximum number which can be represented by a 32 bit integer.
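The restart-by-counting scheme can be sketched as follows. This is a Python illustration under stated assumptions, not the library routine: the class `CountedGenerator`, the variable names `ntot` and `ntot2`, and the choice of 10^9 as the point at which the first counter carries into the second are all hypothetical, and Python's standard generator stands in for the Marsaglia algorithm.

```python
import random

BILLION = 10**9  # assumed carry point between the two counter words


class CountedGenerator:
    """Stand-in generator that records how many numbers it has produced."""

    def __init__(self, seed):
        self.seed = seed
        self._rng = random.Random(seed)  # stand-in for the real algorithm
        self.ntot = 0    # numbers generated so far, modulo 10**9
        self.ntot2 = 0   # completed blocks of 10**9 numbers

    def fill(self, length):
        """Return `length` uniform numbers and update the two-word count."""
        out = [self._rng.random() for _ in range(length)]
        self.ntot += length
        self.ntot2 += self.ntot // BILLION   # carry into the second word
        self.ntot %= BILLION
        return out

    def checkpoint(self):
        """Everything needed to resume later: the seed and the count."""
        return (self.seed, self.ntot, self.ntot2)

    @classmethod
    def restart(cls, seed, ntot, ntot2):
        """Resume by regenerating and discarding the recorded count."""
        gen = cls(seed)
        remaining = ntot2 * BILLION + ntot
        while remaining > 0:
            step = min(remaining, 4096)
            gen.fill(step)
            remaining -= step
        return gen
```

Restarting by regeneration costs time proportional to the count, which is why saving and restoring the generator's state vector is the alternative offered for long runs.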

For V114 RANECU several independent sequences can be defined and used. Two integer seeds are used to initialize each sequence. Not all pairs of integer numbers define a random sequence which is independent from others. Sections of the same random sequence can be defined as independent sequences. The period of the generator is 2^60 (approx 10^18). A generation has been performed in order to provide the seeds to start different sections of the same random number sequence. A routine is provided to return the seeds to start any of the generated sections. There are 100 possible seed pairs and they are all 10^9 numbers apart. This means that a sequence started from one of the seed pairs, after 10^9 numbers, will start generating the next one. Note that a sequence is of the same order of magnitude as the full period of RNDM. Longer sequences will be generated and the corresponding seeds made available to users in the near future. Please note that the seeds of these independent sequences may change in this process of revision, while the numbers generated by the default sequence will not change.
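A generator driven by two integer seeds can be sketched as a combination of two multiplicative congruential generators. The Python below is an illustration only and is not the library routine: the multipliers and moduli are believed to be those of L'Ecuyer's published two-component generator and should be checked against the listing in the report cited above, the default seeds are arbitrary placeholders, and the class and method names are hypothetical.

```python
# Assumed constants for the two component generators (see lead-in).
M1, A1 = 2147483563, 40014
M2, A2 = 2147483399, 40692


class CombinedLcg:
    """Two multiplicative congruential generators combined by subtraction."""

    def __init__(self, seed1=12345, seed2=67890):  # placeholder seeds
        self.set_seeds(seed1, seed2)

    def set_seeds(self, seed1, seed2):
        """Start (or restart) the sequence from a pair of integer seeds."""
        if not (1 <= seed1 < M1 and 1 <= seed2 < M2):
            raise ValueError("seeds must lie in [1, M1-1] and [1, M2-1]")
        self.s1, self.s2 = seed1, seed2

    def get_seeds(self):
        """Return the current seed pair, enough to resume from this point."""
        return self.s1, self.s2

    def fill(self, length):
        """Return `length` uniform numbers strictly between 0 and 1."""
        out = []
        for _ in range(length):
            self.s1 = (A1 * self.s1) % M1
            self.s2 = (A2 * self.s2) % M2
            z = self.s1 - self.s2
            if z < 1:
                z += M1 - 1          # wrap the difference into [1, M1-1]
            out.append(z / M1)
        return out
```

Because the whole state is the seed pair, saving `get_seeds()` and passing it to `set_seeds()` later resumes the sequence exactly where it stopped, which is what makes it practical to publish seed pairs marking the start of separate sections of one long sequence.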

The appropriate short writeup, available from the Program Library Office, should be consulted for more detailed instructions on the use of these routines.

Several important packages in the CERN Program Library (GEANT, LUND, ISAJET,...) are being modified to use the new generators. In particular, the next version of GEANT (3.13), scheduled to be announced with the next CNL, is being tested with RANECU.

The above routines will be introduced into the library in addition to the existing random number generators, notably RNDM. The code of RNDM will not be changed, so that old programs do not start giving different results because the random number sequence has changed. This protects users from unexpected or unwanted changes and makes them responsible for any improvements in their code by converting calls to RNDM into calls to one of the new generators. Users are, however, reminded that RNDM is not adequate for many modern applications and are urged to change to one of the new generators. The program Q902 FLOP is a library item which can be used for this kind of conversion, which, in the simplest cases, can even be handled by an editor.

Well! This exercise has certainly brought back memories for some of you! I feel a bit of a fraud - having arrived at the Atlas Computer Laboratory only 18 years ago. However, it was before the Atlas 1 disappeared so I do feel that I was part of the Atlas era, if only for the latter part of it.

My thanks to everyone who contacted me and particularly to those who found time to put pen to paper and write an article. These I have simply reproduced without any editing - to tell their own story.

Quite a long time ago I was visiting one of the big American National Laboratories; the man I had spent the day with took me home to dinner and as we were driving through a small town he waved his hand towards a group of houses and said, in a very solemn and serious voice, "Ah, if only those walls could talk, what tales they could tell! Why, some of those buildings must be almost thirty years old." Well, the idea of the Atlas Laboratory started just about thirty years ago, of the Atlas computer rather more; that's a human generation, but far, far longer for computers. Many things relevant to computers were different, too: no SERC, for example (not even SRC), and no Computer Board. My own arrival on this Berkshire (as it was then) plateau was even longer ago, in 1948, to set up a computational and mathematical support group in the recently-created AERE Harwell - before there were any computers, at least for sale: who can imagine such a time?

It seems likely to me that many of the people now working in the Laboratory don't know how it came about, so I think the story is worth telling. I wrote about it in the collection of papers that the Harwell Theoretical Physics Division put together in 1979 to celebrate the 25th anniversary of the UK Atomic Energy Authority; most of what I wrote there, though, was about the pre-computer days at Harwell.

The computer industry began to get going in earnest in 1955, with the IBM 650, a thermionic valve, magnetic drum machine; by about 1959 its importance was evident, although I doubt if anyone foresaw just how important. America was already dominant and many people here were becoming concerned that Britain, where a lot of pioneering work had been done, would be left hopelessly behind. I and several UKAEA colleagues, mainly K W Morton (now Professor at Oxford), E H Cooke-Yarborough of the Electronics Division and John Corner of Aldermaston, talked this over quite a lot and came to the conclusion that there was a need for an advanced, large scale computer project: we were not entirely uninfluenced in our thinking by the news that IBM had embarked on its Stretch computer design, a machine, apparently, of fabulous power. We put this to Sir John Cockcroft, then Director of Harwell, who responded very enthusiastically and arranged two 2-day meetings at Harwell in which all the leading computer people in Britain took part and at which the idea was discussed with great seriousness. In the course of these discussions the Manchester University group - Professors F C (Freddy) Williams and Tom Kilburn - revealed their plans for a very fast, sophisticated machine, in many ways very different from but in the same class as Stretch. The outcome was agreement that there was a serious need for an advanced machine project and that if one could be supported it should be based on the Manchester concept.

A lot of activity followed, but I don't need to go into the details here. The essence is that the Ferranti company, who had built and sold machines based on previous Manchester designs, indicated their interest in taking up this new design; and Cockcroft gave me the task of making a case to be put to the UKAEA, for a machine to be ordered from Ferranti and installed at Harwell: this was to be the Atlas - Ferranti had always given its machines mythological names, like Pegasus, Perseus, Mercury: rather charming, but perhaps not all that well suited to to-day's related series. I spent most of 1960 on this, getting a lot of help from a lot of people in the AEA, Cooke-Yarborough especially; the case was put to the Authority in early 1961 by Sir William (later Lord) Penney, Cockcroft then having left to become the first Master of the new Churchill College at Cambridge; and was accepted. There was then a very interesting turn of events. This machine was going to cost a great deal of money, about £3.5 millions - £30M or so today? - so it had to be approved by the Treasury and also by the Minister for Science (there was one then - Lord Hailsham). The case submitted to and accepted by the AEA was, in essence, this:

However, the authorisation that came out of the Minister's office was:

I have never learned just what led to this change; certainly a very important factor must have been that NIRNS had been set up with the right - the duty, in fact - to spend public money providing services free of charge to universities, whilst neither the UKAEA nor Government bodies had that right. But it was a decision of supreme importance for the future laboratory, and, as things turned out, a very fortunate one.

An immediate consequence of the Minister's decision was that new accommodation would have to be created for the machine, and a new organisation to run it. The NIRNS Board, chaired by Lord Bridges, took over the project and agreed to build a new laboratory, to be called the Atlas Computer Laboratory, on the Chilton site. In the autumn of 1961 I was given the job of setting up the laboratory and the organisation; the machine had been ordered from Ferranti in the summer of that year, was delivered to the brand new, specially designed building in April 1964 - in 19 truck loads - and began to provide a service in the autumn.

That's the story I set out to tell; I will say just a few words more. One can look with amusement at Atlas's specification - about half a megabyte of memory and half a MIP raw speed: something you can now buy off any shelf in Tottenham Court Road, pay for with a credit card and take home in the back of your car. But this is saying that technology has changed by more orders of magnitude over the last 30 years than anyone could have imagined; Atlas was a very exciting adventure at the time. Perhaps it's in order to comment here that it's proved very fortunate that this very modest amount of information processing (a term unknown in 1960) power did require so many cubic metres of accommodation, tonnes of metal and kilowatt-hours of energy - the building has since then sheltered unbelievable amounts of processing power without turning a hair. And lastly - I'm delighted to see the Laboratory so flourishing; it was created to provide a service, a very sophisticated service, to its users and so long as it has the users' needs at heart it will continue to flourish.

I joined AERE Harwell in 1958, shortly after the Ferranti Mercury had arrived, but it was already clear that more powerful computing was needed to do all the scientific and engineering work surrounding the early reactor development. We used the IBM 704 at Aldermaston, which was upgraded to a 709, moved to Risley, and upgraded again to a 7090. We developed ingenious systems for handling the decks of cards at a distance, including a machine that transmitted card images over telephone lines and an early facsimile machine for getting summary print-outs back. Imagine debugging a program with a two-day turn-round! We introduced Fortran to the Authority, and thence to the UK during this period.

Negotiation with Ferranti about a new computer for Harwell involved a number of technical issues - deciding the functions to be provided as extracodes (wired-in subroutines), estimating performance trade-offs with the swinging tape scheme (there were no disks in those days, and the drums were used to make the virtual store), and (with difficulty) drawing the boundary between the supervisor and the Programming Support Environment we were writing (which I called Hartran, since it was mainly to be used with Fortran programs). In parallel the Rutherford Laboratory (then called NIRNS) was also negotiating with Ferranti, but for a different computer, the Orion. (I never discovered why no-one noticed the enormous duplication of effort this implied.) A parallel team at Rutherford produced the Fortran compiler and support environment for the Orion.

As I understood the situation at the time, Harwell found that the power of the Atlas was greater than the predicted needs of the establishment, so soundings were made to find who else should use it. Principal among the candidates were the universities, particularly in view of the rapid expansion already imminent. However, at this point an administrative problem arose. Under the terms of the Atomic Energy Act, if the UKAEA owned the computer and other institutions were to use it, they then would have to pay the AEA. Since university funding was in prospect, this implied a transfer of cash from the SRC to the universities, for onward transmission to the AEA. The alternative administrative arrangement (proposed, it was rumoured, by Lord Hailsham), was for the SRC to own the computer, so that universities could have access to it without cash transfer; however, this meant that Harwell would have to pay the SRC for its computing done on the Atlas.

Needless to say, there was now intensive negotiation between AERE and the SRC to decide the charging formula, but the effect was that Harwell did relatively little work on the Atlas, keeping its powder dry for another two years when it got its own IBM 360. This was after the Mercury had been scrapped, and we installed at first an IBM 1401 (to do job preparation, using magnetic tapes for communication to the computers elsewhere, including the IBM 7030 (Stretch) at Aldermaston), then a small 360 (model 30), while we were negotiating with IBM for the main machine. This had originally been planned to be model 67, with IBM's Time Sharing System (TSS), but we found that its performance was not adequate, so opted for the model 65, with the ordinary operating system OS/360, on top of which I designed our HUW multi-access system. But that is another story!

I have found a paper from that period (1962) in which the four Atlas/Titan installation representatives discussed their plans for Automatic Programming Languages. There was Ferranti providing Mercury Autocode and Nebula, Cambridge preparing CPL1 (subsequently planned to be CPL2 but actually becoming BCPL, from which C developed), London doing CHLF3 Mercury Autocode and Lunacode, Manchester doing Atlas Autocode - and NIRNS/Harwell doing FORTRAN. Looking back, I don't think it was a bad decision.

It was an exciting time (and frustrating too). I led the team building the Atlas Fortran compiler from scratch, writing it in Fortran and developing it on the 7090. The main team consisted of Barbara Stokoe, Bart Fossey and Paul Bryant (Harwell), with Brian Chapman from Ferranti doing the machine specific code generation. We had others in for particular parts, including Bob Hopgood (can't remember which part for - sorry Bob!) and Arthur (Ferranti) who wrote the loader. Just to set the date in context, one thing I remember vividly was listening to Barbara's portable radio to hear the first ever space flight: the Mercury mission when a rocket went into a sub-orbital trajectory with an astronaut on board. In some ways we were going into space too!

I worked at AWRE Aldermaston from 1959 to 1962 and at the Culham Laboratory at Abingdon from 1962 to 1968, so I witnessed much of the Atlas saga from nearby.

When I joined AWRE they had an IBM 709. Shortly thereafter it was replaced by a 7090, an incredibly reliable machine, which itself was replaced by Stretch, the IBM 7030, in April 1962.

This for me personally was the start of the Atlas story. Stretch, a physically huge machine, was delivered on schedule. As I recall it was operational a week later and in service in approximately a month. Ferranti soon learnt to laugh at Stretch. Financially it was not a success for IBM. Watson was rumoured to have said "the quickest way for IBM to go bust is for Stretch to be a success", and I guess Stretch is one of the reasons why IBM withdrew from the market for super-computers, but from the users' point of view it was a terrific machine. Aided by Alec Glennie's Fortran compilers and run by established computer professionals at AWRE it provided Culham with a splendid batch service using a thrice daily taxi courier.

But Aldermaston were not permitted to buy Stretch. Lord Hailsham decreed "Thou shalt buy British", although nobody would admit officially to the fact. Thus Aldermaston was sentenced to rent Stretch until a suitable British alternative (i.e. Atlas) was available.

As the time for delivery approached (and passed), Ferranti's mirth became subdued and eventually disappeared. Finally when asked which was the superior, Atlas or Stretch, the reply came forth: "It all depends on the programmer."

In fact the Atlas delivered to Harwell in 1964 was an inferior beast with a troublesome two-level store. The original drums spun so fast they shed the oxide layer and slower higher-density drums were substituted. This made a mess of any timings, of course. Not surprisingly the new service had its own special problems, and Culham stuck with AWRE until their own KDF9 was delivered in 1965.

Aldermaston did eventually take delivery of their own Atlas. That Atlas had an all core memory (i.e. one level, no drum), yet it took literally years to commission. Indeed it took so long to get into service, permission was finally granted to purchase the aged Stretch, by which time the purchase price was peanuts, comparatively speaking of course.

I view the Atlas saga as a very sad and significant episode in the history of British computing. For me Atlas represents the moment when the British computer industry finally backed off and admitted second best to the Americans.

There are some of us still around for whom the computer per se was a quantum leap from the slide rule and mechanical calculator. (Do I hear music in the background?) There were computational needs at RAL (or NIRNS => RHEL => RL => R&AL => RAL...) before Atlas. Atlas 1 was just another step in the continuing improvement in speed of processing, or, for the more cynical user, another blip in the saga of "things are working well, it's time for a change".

Much of Nimrod was designed using the Ferranti Mercury at Harwell: programming was done in Mercury Autocode on 5-track paper tape and if one had a bug in the program the compiler diagnostics were comprehensive, but concise and to the point - NO GO. Back to the punch room, splicing tapes and reeling up yards of it from the floor hoping that no one would stand on it. But it was bliss compared to those mechanical calculators!

Then came the Ferranti Orion, actually on site, at the end of R1. This was real progress - and 7-track paper tape. But it was almost transitory - there was talk about a new machine called Atlas, time sharing, n jobs at once, bigger, faster, the ultimate in computing. So the building appeared across the road and filled with Atlas 1. Rutherford (at whatever stage it was then) Laboratory hired time on the new machine and we had to learn HARTRAN (HARwell forTRAN).

Yet a while and IBM System 360/75 took the place of Orion, on site at the end of R1, and the Atlas complex was left to go its own way. This was a period of Multi Variable Tasks, trays of cards, stacks of lineprinter paper and 2-hour turnround. Then interactive terminals started to appear, the 360/75 became 360/195 and talk was in Mbytes.

The incorporation of the Atlas Centre into RAL is recent history and the many changes since that date have become translucent to the user. Perhaps someone 25 years hence will ask, "Do you remember the Cray X-MP?"

Atlas 1 did get a whole new building!

I joined Atlas as a Shift Supervisor in April 1965, after having been a visiting External User for 9/10 months (10 = E000002).

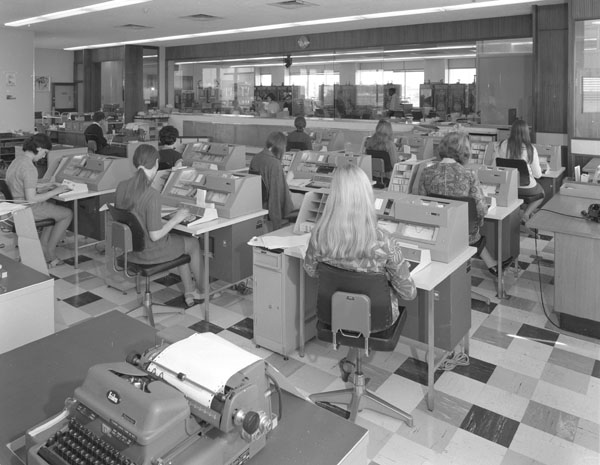

The CPU and the associated electronics were on the Ground Floor, including the Control Console, while all the peripherals were on the floor above. This First Floor Operations Area was separated from the Data Preparation Area and Visitors Data Prep. machines by a glass partition, one panel of which was slid back to be the Job Reception Counter.

One of our prime requirements was to schedule the work so that as high a percentage of the CPU time as possible was spent in User calculations. To do this it was necessary to keep an eye on the Control Console. This was made possible by the installation of a CCTV camera downstairs, monitoring the console, which was displayed on a Monitor in the centre of the Operation Area.

I am sure that the visitors thought we were completely mad when they looked at us running around the Operations Area, stopping every now and then in the middle of the room to look at our feet. The CCTV system had broken down and there happened to be a small opening in the floor almost immediately above the console, and that is how we were keeping tabs on the job mix.

We had a special category of job, the Express Job, to help a maximum of four visiting users develop their jobs quickly. They were guaranteed four runs during the shift, the maximum time for any run being three minutes. We had one user who, perhaps because he had trouble with the English language, thought that the rules meant that he could have twelve minutes of CPU time. He submitted twenty-four thirty-second jobs. He was a little put out when he received the first four jobs within half an hour and then nothing during his visits over the next two hours. When he finally asked what had happened to his other twenty jobs, one of my operators had to spend a long time explaining the rules. If I remember rightly, he still managed to get half of them before the end of the shift, but he didn't try it again.

We've still got one of the Atlas lineprinters. When Atlas was broken up, we bought it from you to attach to our PDP8/I. We also had another one (for spares) and a couple of card readers. I believe we paid £50. We never did use the card readers; they just occupied space for a while. We haven't used the printer for years, but it has never been thrown out, and I believe it still works. Would you like it back?

My memories of Atlas are of morning drives over the downs from Reading in Jorgen Christoffersen's ancient Citroen van. We used Atlas frequently during 1965-1968 to simulate the UV high resolution spectra of substituted benzenes.

The Algol 60 programs could be prepared, debugged and compiled on the Reading University Elliott 803 computer but could not be run. So, twice a week we chugged off to Chilton with programs and data on 5-track 803 paper tape, which the Atlas Algol compiler could understand after a pre-processing stage.

I recall that we prepared the mysterious JCL tape at Chilton on the paper tape standard there so our jobs were submitted as 3 or 4 separate labelled (1, 2, 3...) tapes. One computed spectrum was split into 4 or 5 jobs each taking many minutes, to overcome problems of machine failure, and every job required the large program tape - the program must have been compiled each time. So, a lot of time was spent in preparing jobs by copying the program tape. Tears caused by the tape readers and by rewinding and mis-punches during copying were frequent. Output could be produced on 803 paper tape which we took back to Reading for summation, smoothing and plotting. One final memory - Atlas provided the opportunity to see from time to time former friends and colleagues at other Universities who had come for a day or two, or scribble messages to them on their listings waiting to be posted. A fore-runner to email.

Those who never knew the good old days will never appreciate the enormous advance when eight-hole tape replaced the narrow five-hole tape, will never know the joy of seeing the middle of a roll of paper tape fall to the floor followed by a tangled streamer. At the Atlas Laboratory itself there was the ultimate of mod. cons. for tape experts, the envy of all backwoodsmen, the thermal tape splicer.

In those early days we had to send our precious tapes from the backwoods (Edinburgh in my case) to Atlas and then wait for a week for the feared diagnostic. Back came the tape one day with a diagnostic which I could not comprehend; my tape would clatter through the mechanical reader and in the short space of two hours I would have the program listing. I could see no conceivable error, so back to Atlas it went. The next week it returned with the same diagnostic statement, which evidently had to be taken seriously. The listing still showed no error, so I decided to make a copy of the tape and compare with the original. The copier reading head, just like at Atlas, was optical, and although it was rather unreliable it did produce on this occasion a perfect copy; or so it seemed. However, on comparison with the original using a mechanical reader there turned out to be one extra hole in the 100-yard copy. All that effort for one extra erroneous hole - that's what we had to contend with! Corresponding to the extra hole on the copy was a grease spot on the original tape, an optical hole but not a mechanical one. All that was needed to achieve a working program was a month of graft and the brief rub of a pencil.

I worked in the Support Office and in those days of batch processing (no networks, no interactive processing) jobs that failed used to come into the Support Office. We would telephone the user and explain the error message, and try to decipher what the problem was. If it was simple (a tape splice or a re-punched card, for example) we would make the change and re-submit the job. Otherwise the job would be returned to the user by post.

Many users would visit the Lab (to get an acceptable turn-round) and each week the Support Office would get a list of users who were known to be visiting, along with those who had booked cells - tiny offices containing a desk, chair and telephone. Users who hadn't booked a cell would congregate in the Think Room.

One of the problems Algol users had was deciphering the different dialects of Algol available on the Atlas. In the table of contents of The Atlas Algol System by F R A Hopgood, published in 1969, there is a list of 15 dialects:

Apart from the different I/O packages to contend with, each dialect had the reserved Algol commands distinguished in a different way. And then there were the terminators - who remembers ***Z (paper tape) and 7/8 in column 1, with a Z in column 80 (cards)? And to confuse the user still more, there was the problem of how to cobble together a job using different paper tape widths - again taken from the Atlas book:

If the Job Description has to be changed at the Laboratory, then it is usual to punch it on the most compatible device. .... In the case of dialects punched on 8-hole paper tape, it is usual to punch the Job Description in Ferranti 7-hole tape code and splice it onto the front of the 8-hole tape program. As it is not possible to splice 7-hole tape to 8-hole tape, a flexowriter is provided at the Laboratory generating Ferranti 7-hole code tapes onto 8-hole wide tape with the eighth track empty..... In this case the input should be marked as 7-hole and not 8-hole on the Job Slip.

Don't ever complain about Fortran again....

The following was taken from the Atlas Computer Laboratory - Some Aspects of Current Operation and Research, 1966 - from Jack Howlett's Introduction.

In a typical week we run 2,500 jobs, input 800,000 cards and 30 miles of paper tape, print 1.8 million lines of output, punch 50,000 cards and handle 1,200 reels of magnetic tape.

From the Atlas Computer Laboratory User's Handbook (undated, but I guess late 1964) comes some information on the speed of the Atlas.

Time for various subroutines:

It is interesting to see how things have changed in what is really quite a short time. I wonder what changes will take place in the next 25 years - what could the next step be after the communal harvest and the tractor?