The picture on the front cover is a computer simulation of the Active Magnetospheric Particle Tracer Explorers (AMPTE) man-made comet, courtesy of Ross Bollens of the University of California, Los Angeles. The research used two IBM 3090 supercomputers on different continents: SERC's Rutherford Appleton Laboratory in the United Kingdom and NSF's Cornell National Supercomputer Facility in the United States collaborated in providing exceptional supercomputing resources that enabled the researcher to develop a fully three-dimensional space plasma computer simulation.

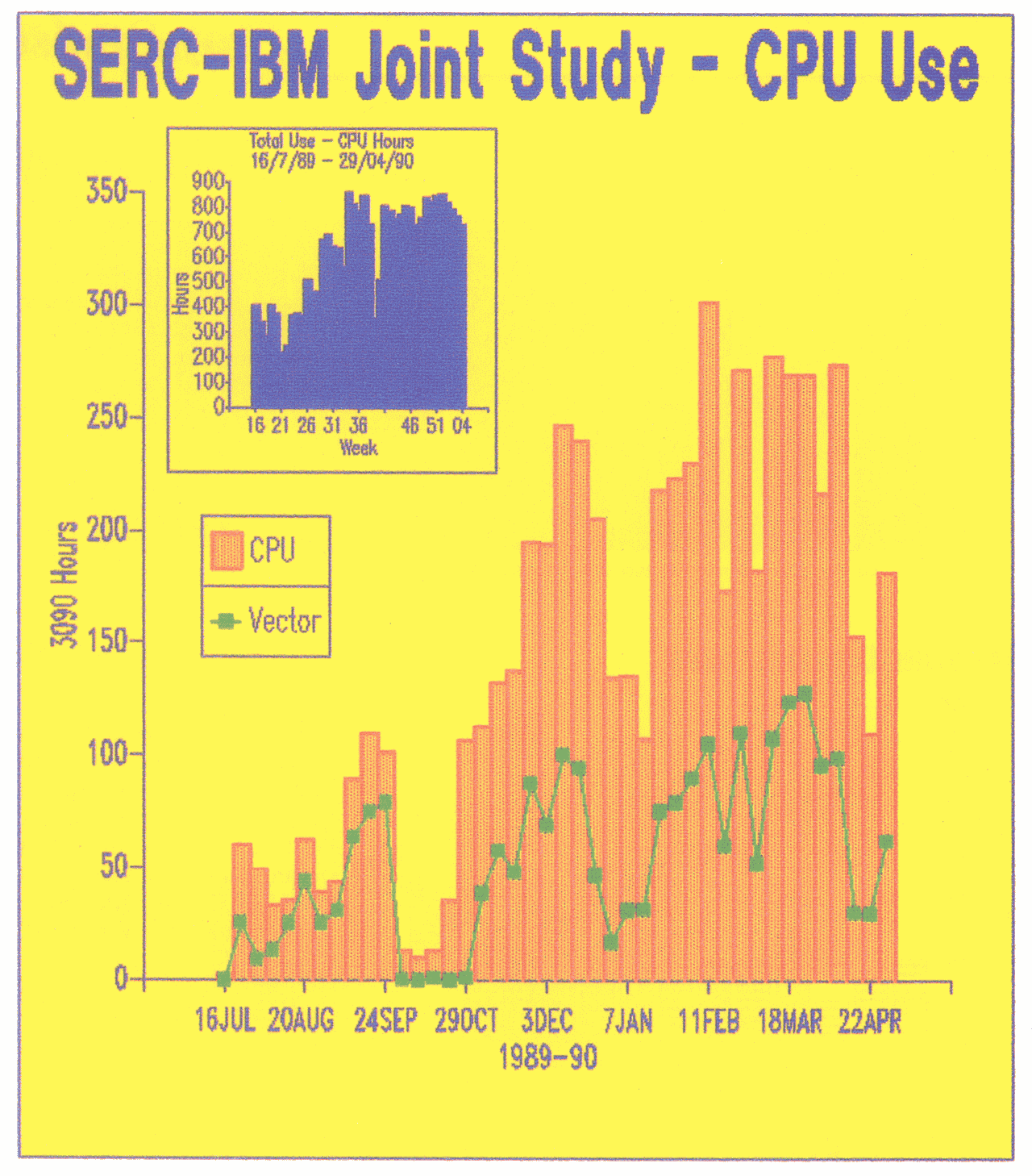

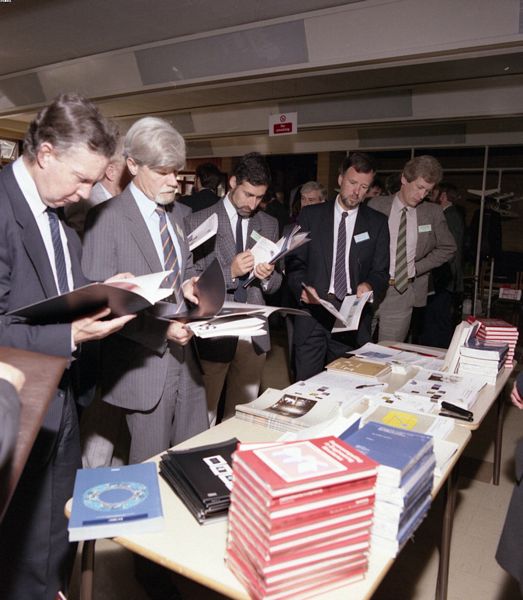

On Thursday 17 May RAL hosted a seminar to mark the successful completion of the first year of the joint SERC-IBM study on supercomputing and its applications to problems in science and engineering. A very successful day's activities were attended by some 100 representatives from academic institutes and industrial companies and sixty staff from IBM and RAL.

The proceedings were opened by the RAL Director, Dr Paul Williams, who noted that there had been a long history of collaboration between RAL and IBM, with an IBM mainframe on site for more than twenty-four years. The opening address was given by the Right Honourable the Lord Tombs of Brailes, Chairman of Rolls-Royce plc.

Lord Tombs, who was celebrating his birthday that day, spoke about the importance of industrial links with large computer manufacturers such as IBM, as well as the links between academia and industry. He pointed out the great benefits of supercomputers in the simulation of aircraft engines, a problem which he considered much more difficult than simulating the weather or the economy, because it was not possible to make approximations and an accurate understanding of the technology was needed. Nevertheless, supercomputers allowed very large-scale models to be generated, which were needed for three-dimensional flow analysis and stress calculations. This produced enormous cost savings when compared with simulations using real hardware models.

Professor Bill Mitchell, Chairman of SERC, spoke on the topic of supercomputers in academic research. He commented on how a combination of Government and industrial support had put the UK in a good position over the last two years in the field of research with supercomputers. In this connection he expressed his gratitude to IBM for providing the upgrade of the IBM 3090 from a 200E to a 600E with 6 vector facilities. He noted that SERC has a large body of expertise which is available for application to industry's problems and cited this joint SERC-IBM project as a good example. He hoped that this partnership would continue in the future. He saw computer simulation as an essential part of the method of research in science and engineering, complementing both experiments (real ones!) and analytic theory. While it was an excellent way of saving experimental time, he cautioned that contact with actual experiments was needed from time to time to avoid the danger of straying too far from reality. Good examples of this were the work done by Professors Bilby and Hayhurst on failure analysis and materials science and the way in which they had related it to experimental work being carried out by the University of Sheffield and Rolls-Royce.

Dr Brian Davies, Associate Director for Central Computing at RAL, gave the background to the SERC-IBM study agreement. He began with an overview of the history of the Atlas Centre, its past and present computing hardware and its network links based on the Joint Academic Network, JANET, which provides access to users at all the universities and most of the polytechnics and Research Council establishments in the UK. He reiterated the aims of the study agreement and described how the fifteen "strategic" users had been chosen by a panel chaired by Professor Phil Burke from a list of thirty applicants. He stressed that the criteria for the choice had been the potential for taking advantage of the vector, parallel and large memory features of the 3090 and the representation of a wide variety of scientific and engineering applications.

The last speaker of the morning was Mr Chris Conway, Southern Regional Director of IBM UK Ltd. He said how delighted he was for IBM to be a partner in this exercise and pleased to support the academic community at large. IBM's involvement with science, especially in research and development, went back a long way both through collaboration with the scientific community and through achievements in IBM's own laboratories.

As examples of the former he quoted the Alvey project work on image recognition at Sheffield and Edinburgh, collaboration with Cranfield on portable testing tools for robotics, the production of the new edition of the Oxford Dictionary and molecular modelling for drug design. He finished his talk with a slide presentation overviewing IBM's own scientific research, which had led to several Nobel prizes. The subject areas included Josephson junction technology, high temperature superconductivity, Benoit Mandelbrot's fractal scaling of nature, William Latham's computer sculptures and the scanning tunnelling microscope, which allowed individual atoms to be moved across a surface and positioned accurately. He admitted, however, that no practical application had yet been found for this last development!

As the morning session took everyone by surprise by finishing early the audience was treated to two short computer generated videos. The first had been compiled by Chris Osland from a number of sequences generated on the Atlas Centre's video system including the modelling of Antarctic and global atmospheric currents. The second was a spectacular sequence from the award winning work by William Latham on computer sculpture generated with the Winchester Solid Modelling package (WINSOM).

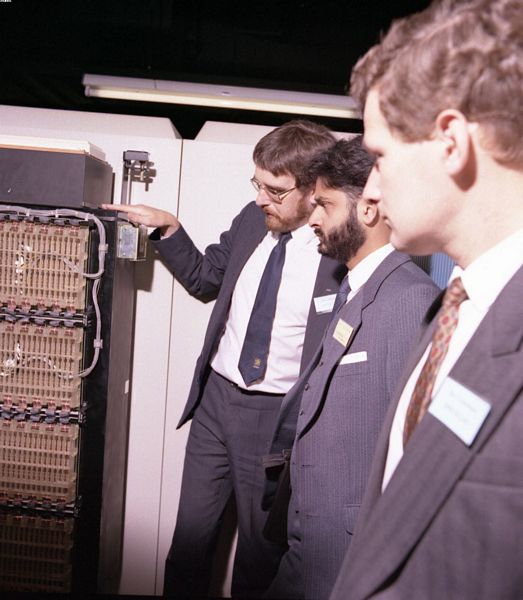

An excellent buffet lunch was enjoyed by all, and during the lunch break many of the visitors took advantage of guided tours of the Atlas Centre where they were able to see the inner workings of the 3090.

The afternoon was taken up by presentations from four of the strategic users on their scientific results from the first year of the study programme.

This work involved the study of air flow in hypersonic aircraft design, particularly with respect to flow past the engine cowl lip. The object had been to develop general purpose codes to model fluid flows past complex geometries in the transient and steady states. The finite-element technique was used with structures of 2-D and 3-D triangular meshes. Use of the 3090 had enabled the computer simulation to be completed in 27 hours and total aircraft simulation in 4 days.

Professor Morgan is working closely with NASA on the simulation of the shuttle orbiter separation from its booster rocket. So far this has been done in 2-D and will be extended to 3-D in future.

Dr Richards' work was aimed at preventing the dreadful side effects of anti-cancer drugs which kill normal cells as well as tumour cells. The strategy was to design compounds which would attack tumour cells selectively, based on a difference in blood supply to normal and cancerous tissues leading to the latter being less well supplied with oxygen. The technique for designing these bioreductive anti-cancer drugs made use of statistical mechanical and quantum chemical calculations which were totally dependent on the supercomputing facilities of the 3090.

Some related work involved the computer simulation of enzymes which convert chemicals into the building blocks of DNA and the WINSOM package was used for visualisation. This enabled molecular structures to be produced which would otherwise be very difficult to achieve experimentally. Dr Richards showed some slides of the simulation of small molecules binding into larger ones. Apparently one of these had been on the lunch menu!

The aim of this work was to improve the understanding of the failure processes in tough materials used in critical engineering applications such as aircraft, engines, gears, oil-rigs, pipelines etc. It involved large-scale finite element computations which simulated the damage ahead of the tip of a growing crack and determined the validity of measurements made with specimen materials when transferred to actual structures. A locally written stress analysis program, TOMECH, was used for these computations. Work has begun on detailed 3-D studies of a theory that the progressive development of damage is due to the growth of voids near crack tips during the process of ductile fracture. This work is aimed at reducing the heavy cost in human and economic terms that is all too often seen as the result of catastrophic structural failures.

Dr Bollens' work involved a 3-D computer simulation to model the theoretical explanation for the unexpected results from the AMPTE artificial comet. There were no existing theories to explain why the initial deflection of the ion clouds released by one of the AMPTE satellites was in the transverse direction with respect to the solar wind rather than the ambient flow direction. A theory which explained this phenomenon in terms of a rocket effect was developed by UCLA and RAL. A full 3-D computer model simulating the theory was produced and found to give excellent agreement with the experimental data.

This model could only be run on the 3090 because it required 998 Mbytes of storage and made heavy use of IBM's parallel Fortran compiler to reduce the run time from 175 to 75 hours. Dr Bollens showed some very impressive slides of the artificial comet from various aspects showing the direction of the ion cloud, an unexpected hole in the top of the comet and a sequence of views of an imaginary observer moving right through the centre of the comet.
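As a rough worked example of what the quoted run times imply, the sketch below computes the speedup from 175 to 75 hours. It then assumes, which the article does not state, that the parallel run used all six processors of the 3090/600E, and derives the parallel efficiency and the parallel fraction that Amdahl's law would imply under that assumption. The function names are illustrative only.

```python
def speedup(serial_hours, parallel_hours):
    """Ratio of the original run time to the reduced run time."""
    return serial_hours / parallel_hours


def amdahl_parallel_fraction(s, n_procs):
    """Invert Amdahl's law, s = 1 / ((1 - p) + p / n), to obtain p."""
    return (1.0 - 1.0 / s) / (1.0 - 1.0 / n_procs)


SERIAL_HOURS = 175.0      # run time quoted in the article
PARALLEL_HOURS = 75.0     # run time quoted in the article
ASSUMED_PROCESSORS = 6    # assumption: every processor of the 600E was used

s = speedup(SERIAL_HOURS, PARALLEL_HOURS)
efficiency = s / ASSUMED_PROCESSORS
p = amdahl_parallel_fraction(s, ASSUMED_PROCESSORS)

print(f"speedup            = {s:.2f}")
print(f"efficiency         = {efficiency:.2f}")
print(f"parallel fraction  = {p:.2f}")
```

If fewer processors were in fact used, the efficiency would be correspondingly higher; only the speedup itself follows directly from the figures given.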

The day was completed by Dr Roger Evans, Head of RAL's Advanced Research Computing Unit, whose theme was the Challenge of Supercomputing. He noted the versatility of the IBM 3090, which provided large-scale memory as well as enormous computer power. He stressed the importance of projects like the SERC-IBM study programme for scientific research and for the leverage that it provided on funding for scientific research from the Department of Education and Science. He said that in future the aim should be to achieve the highest quality research by working with industry to exploit the design and development capabilities of supercomputers. However, he stressed the importance of providing the most appropriate computer power to those who need it, whether it be workstations such as the RS/6000 or supercomputers like the Chen SS-1.

The Harwell Numerical Analysis Group has been supporting users of the Harwell Central Computers for some 25 years and has become known as a centre of excellence for research in numerical analysis. The principal mechanism for this support has been provision of general-purpose Fortran subroutines, supported by theoretical analysis. Currently, the most active areas are in the exploitation of sparsity and in optimization. The four principal members of the group are moving to the Atlas Centre and hope to continue this work in support of users of SERC supercomputers. We feel able to help in most areas in large-scale computation; our particular expertise lies in solving large linear and nonlinear problems.

The four, with their principal activities, are:-

John, Nick, and Jennifer moved on April 2nd, and Iain will move at the beginning of June (he is in the middle of a contract for Harwell).

The group's software is written in Fortran and is collected into a library called the Harwell Subroutine Library. This is marketed by Harwell and is mounted on the Atlas supercomputers. An agreement has been reached with Harwell for the group to continue as consultants to maintain and support the library, while Harwell continues to market it. A subset of the library is also marketed by NAG as the Harwell Sparse Matrix Library.

The particular strength of the group lies in software for sparse matrices and for optimization. The following subroutines are of special note:

John Reid is a member of IFIP (International Federation for Information Processing) WG 2.5 (Working Group on Numerical Software) and was formerly its secretary and then chairman. This has very limited membership and meets once a year to exchange ideas. It arranges working conferences that have a particularly international flavour about every third year. He is also a member of the Fortran standardisation committee X3J3 and was formerly its secretary. The new standard, Fortran 90, is about to be finalized and John plans to withdraw from this work. The motivation for involvement was the huge investment in Fortran code that the Atomic Energy Authority has made (including the subroutine library itself) and the need to protect this investment.

Iain Duff has a part-time appointment as leader of the Parallel Algorithms Team at the European Centre for Supercomputing in Toulouse (CERFACS), which is involved in a variety of research topics, including parallel methods of optimization, partitioning and tearing in an MIMD environment, and parallel iterative methods. He is a visiting Professor of Mathematics at Strathclyde University and serves on the Technical Options Group of the Joint Policy Committee on National Facilities for Advanced Research Computing. He is one of the Editors of the IMA Journal on Numerical Analysis.

Nick Gould has a very productive collaborative venture on large-scale optimization with Andy Conn of the University of Waterloo and Philippe Toint of the University of Namur. They have established the theoretical framework that they need and are now constructing a large-scale package to implement their ideas.

Both Nick Gould and Jennifer Scott have won Fox prizes for numerical analysis. Nick won a first prize for his paper "On the accurate determination of search directions for simple differentiable penalty functions" and Jennifer won a second prize for her paper "A convergence recipe for discrete methods for generalized Volterra equations".

The Group ran a joint series of research seminars with Oxford University, with two talks at Harwell each term, and occasional talks at Harwell during Oxford vacations. We plan to hold these seminars at the Atlas Centre and to do our best to choose speakers of interest to a wider audience in the SERC community.

We are all looking forward to playing an active role in the work of the Atlas Centre. Currently, we are acclimatizing ourselves to a different IBM operating system and to the Sun workstations that have kindly been donated to us by Amoco to aid our research.

The User Support and Marketing Group is no more! After many years during which it has provided an excellent service to the community, it has been dissolved to make way for two new groups, both in the Computer Services Division of the Central Computing Department.

Dr Tim Pett, previously Head of Systems Group in the Software and Development Division, has transferred to Computer Services Division to head the Marketing Services Group, and Dr John Gordon has been appointed Head of the Applications and User Support Group. Members of the old User Support and Marketing Group have been integrated into the new structure.

Reorganisation is a topic guaranteed to generate lively debate with strong sentiments for and against. However, it is a normal development and growth pattern of any healthy organisation, being a response to changes in the environment, technology, customer requirements etc. The organisation must keep pace with such changes if it is to remain alive and dynamic. Computing has probably seen more rapid and more far-reaching change than almost any other discipline and the Atlas Centre has been at the forefront of many of these changes. As a result of this, perhaps the Atlas Centre has had more than its fair share of reorganisation and restructuring!

Looking at a little history, computing has been prominent at Rutherford for many years. In the early eighties, central computing was supported by a single, large division. This underwent a major reorganisation in 1984 when a significant fraction split off to form the Informatics Division. The then Central Computing Division was left with three major groups, one of which was the User Support and Marketing Group. This group had the responsibility of providing support to what was already an extensive user community. In addition to programming support, the group allocated computer resources and charged the community for use of those resources, hence the marketing component. In 1987, there was a further major reorganisation in the laboratory and the Central Computing Division became a full department with two internal divisions. The User Support and Marketing Group survived these changes and continued to provide basically the same service in the new Computer Services Division. The group and its members are of course well known to most readers of FLAGSHIP and I am sure that their efforts and the services they provide are widely acknowledged and appreciated.

There are two essential reasons. First, there have been very significant steps forward in technology in the last few years. In particular the department has seen the introduction of the Cray X-MP supercomputer and the expansion of the IBM mainframe into the supercomputer field through the addition of further central and vector processors. These were major technological steps that placed increased demands on the User Support group. In order to exploit fully the potential of these new machines, the User Support group had to gain expertise in vectorisation and parallel programming techniques and develop the necessary training courses to pass on this expertise to the community. There are other exciting steps forward in technology still around the corner. Visualisation is an area that is advancing at a remarkable rate, largely through the availability of faster, more powerful workstations. The close coupling of such workstations with supercomputers, along with the development of new visualisation techniques, will not only be an exciting development in itself, but one which will generate more demand for interactive access to the supercomputers. At a more basic but equally important level, there will be a need to develop general algorithms that can very efficiently exploit the multiprocessor and vector capabilities of the machines. We want to involve the User Support group in these activities.

The Applications and User Support Group under the leadership of John Gordon has been set up with the intention of achieving this objective. It is made up of the Scientific Support section of the old User Support and Marketing Group together with a new Applications section. The new section will have specific responsibility for the provision of applications, initially in the areas of workstations and parallel algorithms. The two sections will work closely together and share common problems - not the least of which is the continuing provision of support to the community.

The second key reason for change in the user support and marketing environment is the Department's intention to expand its marketing interests through the sale of computer resources and Rutherford expertise to external customers. These should include a significant number from British industry. Britain is still lagging behind its major competitors in the exploitation of supercomputers in its major industries. We would like to play a part in correcting this imbalance by providing access to our facilities and, if necessary, consultancy at both scientific and technical levels. The charges made for these services will be competitive. The revenue raised will be used for the purchase of additional equipment and facilities such as memory and disks. The amount of CPU time made available to external customers will be limited to around ten per cent of the total deliverable. At this level the additional resources purchased should raise the overall efficiency of the system enough for us to accommodate the external customers whilst still delivering the same or possibly even a better level of service to our existing users.

The resource management, training and documentation sections of the old User Support and Marketing Group have combined under the leadership of Tim Pett to form the nucleus of the new Marketing Services Group. In addition to the provision of these services, the group will be responsible for publicising and marketing the Atlas Centre's computing services to commercial customers.

The current restructuring of the User Support environment is modest compared with some of the restructuring that has taken place in the past, but it is a fundamental and important change and one that should benefit us all. Tim and John will provide you with more news on their groups in future editions of FLAGSHIP. For the moment I wish them every success and I am sure that all of you will add your own encouragement and support.

As you will all have found out by now the migration from COS to UNICOS has gone more slowly and more painfully than we had hoped. The move got off to a bad start with some hardware problems in the first week of UNICOS. This caused lots of users to try experimenting with different syntax when the cause was something totally out of their control.

During the first couple of weeks of the UNICOS service, User Support Group were "snowed under" with well over one hundred calls per day. Thanks are due to all users who were patient and tenacious in struggling through these initial experiences. Thanks also to all in User Support who worked hard and sorted out upper/lower case problems for the n'th time!

We have had to run the parallel COS and UNICOS services for much longer than we would have liked and this has exposed difficulties such as the smaller amount of disk space available for each operating system and problems of job continuity over the "guest / native" transition. It would probably have been easier on the UNICOS side if we had been able to drop COS quickly but there were delays in getting all the applications packages running under UNICOS.

At last the end seems to be in sight, and provided the new applications packages run satisfactorily under UNICOS, we can now plan for the withdrawal of COS. By the time this appears in print it is possible that the COS service will have been terminated, but, as we have said before, it is still possible to move old COS datasets to UNICOS using the security copies. This becomes more difficult for our data management staff as time goes by and there may well be delays of a few days in recovering old data.

Our thanks go to all users who have stayed with us through this difficult time and our apologies for the service disruption. I believe it will prove to be worthwhile, particularly since new users are increasingly familiar with, and sometimes demand, UNIX as their preferred operating system.

As part of the Joint Study Contract with IBM (UK) for the use of the IBM 3090/600E, a course on High Performance Numerical Computing was held at The Cosener's House, Abingdon from April 6-9. Normally there is difficulty in financing these courses since few academics are able to find the costs of four days residential accommodation and lecture fees, and we in SERC can only obtain funding through the grants line. However on this occasion, thanks to the generosity of IBM, we were able to offer the course to forty participants free of charge. The course was heavily oversubscribed and preference was given to those currently using the Atlas Centre supercomputing facilities and to those able to pass on the knowledge gained from the course to others in their institutes.

The course aimed to describe the opportunities for high performance computing that are available on modern multi-processor shared memory vector supercomputers. This began with a short description of how supercomputing hardware has developed and how the quest for hardware speed has placed some restrictions on the structure of users' programs.

The course described in detail the constructs that will and will not vectorise on the IBM 3090 vector feature and how the compiler can be helped to produce better code with compiler directives. There was a liberal provision of examples and Jonathan Wheeler gave an introduction to running Fortran under VM/CMS on the RAL 3090/600E. We were pleased to see that some participants produced better performance improvements than the model answers.
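Whether a loop will vectorise usually comes down to whether its iterations depend on one another. The sketch below illustrates that idea in Python rather than the Fortran used on the course, and it makes no attempt to reproduce the actual rules of the IBM compiler. It shows that an element-wise loop gives the same answer whatever order its iterations run in, so many of them could be processed at once, whereas a recurrence does not. The function names are hypothetical.

```python
def elementwise_add(b, c, order):
    """a[i] = b[i] + c[i]: no iteration reads what another iteration writes."""
    a = [0.0] * len(b)
    for i in order:
        a[i] = b[i] + c[i]
    return a


def running_sum(b, order):
    """a[i] = a[i-1] + b[i]: each iteration needs the previous result."""
    a = [0.0] * len(b)
    for i in order:
        previous = a[i - 1] if i > 0 else 0.0
        a[i] = previous + b[i]
    return a


b = [1.0, 2.0, 3.0, 4.0]
c = [10.0, 20.0, 30.0, 40.0]
forward = list(range(len(b)))
backward = forward[::-1]

# Independent iterations: the order of execution does not matter.
independent = elementwise_add(b, c, forward) == elementwise_add(b, c, backward)

# Loop-carried dependence: changing the order changes the answer.
order_sensitive = running_sum(b, forward) != running_sum(b, backward)

print(independent, order_sensitive)
```

The first kind of loop is the natural candidate for vector hardware; the second needs restructuring, or a different algorithm, before it can benefit.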

As well as vector computing, which is now well established, there were lectures on parallel processing on shared memory machines and in particular the paradigms that must be adopted for correct access to shared data and shared resources. We were indebted to Vic Saunders from Daresbury Laboratory and John Hague from IBM for sharing their experiences on parallel computing.
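The need for disciplined access to shared data can be sketched with a small example. The code below is an illustration only: Python threads stand in for the processors of a shared memory machine, and a lock plays the part of whatever synchronisation construct the parallel language provides. It is not the IBM Parallel Fortran material taught on the course, and the class and function names are hypothetical.

```python
import threading


class SharedCounter:
    """A value updated by several threads, protected by a lock."""

    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def add(self, amount):
        # Only one thread at a time may perform the read-modify-write.
        with self._lock:
            self.value += amount


def worker(counter, n_updates):
    for _ in range(n_updates):
        counter.add(1)


def run(n_threads=4, n_updates=10000):
    counter = SharedCounter()
    threads = [
        threading.Thread(target=worker, args=(counter, n_updates))
        for _ in range(n_threads)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value


total = run()
print(total)
```

With the lock in place the total always equals the number of threads times the number of updates; without it, updates from different threads could interleave and some could be lost.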

The final part of the course was a lecture by Phil Carr of Bath University on the essence of good software writing, producing code that is usable not only now, but also by the next generation of research students, and reminding us all that codes MUST be tested before they are used on real problems, and tested again every time they are modified. Let us hope that the signs of agreement from the audience were actually translated into action when everyone returned home.

Brian Davies closed the course with a challenging look into the future of high performance computing where the major limitations will be funding rather than technology and Teraflops of performance will be available to those who have large enough cheque books to pay for it.

In the midst of all these computing lectures, John Reid and Stephen Wilson of the Advanced Research Computing Unit at RAL gave lectures on specific areas where algorithms rather than code must be tuned for maximum performance.

Thanks go to Ben Ralston of IBM for supplying much of the course material and providing endless (and sometimes amusing!) quotations during his lectures. Mary Shepherd and Linda Miles were indispensable to the organisation and we must also thank Telecoms Group for setting up over twenty terminals at The Cosener's House.

All present agreed the course was a success and filled a very important need now that so many universities have access to vector and parallel computing. We hope to give essentially the same course again in October this year and will try to broaden it to include industrial participation.

In one of the fastest video productions yet achieved on the Atlas Video Facility, a three minute video was made on April 20 and 21, just in time to be shown at the European Geophysical Society meeting in Copenhagen the next week. The sequence showed a number of simulations of how gases released at various locations in a model stratosphere subsequently move and mix. These simulations were produced by UGAMP (UK Universities' Global Atmospheric Modelling Project) on the ULCC and Atlas Cray computers.

UGAMP is a major UK initiative involving principal investigators at five universities (Cambridge, Edinburgh, Imperial College, Oxford and Reading) and at RAL; computing takes place at all three UK supercomputer centres but principally at ULCC and RAL. These universities have developed considerable experience in the numerical modelling of many aspects of the troposphere, stratosphere and mesosphere, spanning from ground level to 80 km. The aim is to bring all this experience together to model key aspects of our climatic and chemical environment.

Naturally the subject of the Antarctic ozone hole is one which commands immediate attention. Its discovery led to the Montreal conference in September 1987, at which most of the major industrial nations pledged to reduce the release of CFCs (chlorofluorocarbons) by modest amounts. New evidence reported just after the conference confirmed that these gases were responsible for most of the springtime damage to the ozone layer over Antarctica and that far more drastic CFC reductions would be needed.

The UGAMP project is part of the UK effort towards understanding the implications of this and other global change phenomena. It uses a hierarchy of models. At the top is a global model based on the ECMWF operational meteorological model. It includes many physical effects but compromises on vertical and horizontal resolution. Further down the hierarchy, one model has been used to simulate at very high horizontal resolution the way gases released into the Northern and Southern hemispheres move around and mingle with their surroundings. Its resolution exceeds that of today's space-based remote sensors.

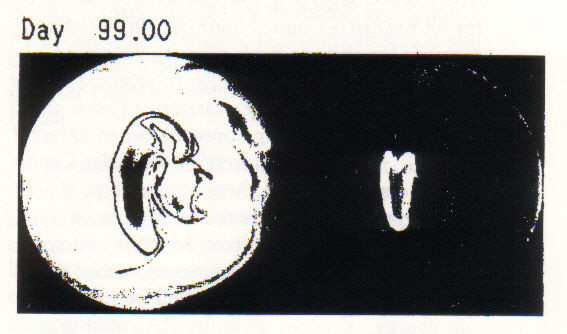

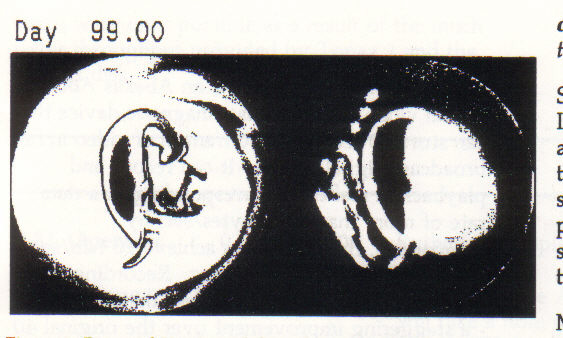

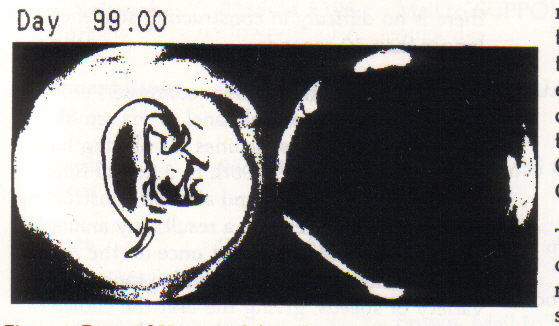

The video sequences produced at Atlas show 100 days' evolution of this model; at each timestep (a quarter day), two views are displayed. Both show the same system, but they present different aspects of it. On the left is the potential vorticity, a measure of the inherent cyclonicity, or spin, of a material air mass. The picture on the right shows how a passive chemical tracer moves and disperses. Three different video sequences were produced: in the first, the chemical tracer is released at the pole (centre of the picture); in the second, midway between the pole and the Equator; and in the third, at the Equator (edge of the picture).

The first pair of pictures (figure 1) shows the pattern after 99 days of the simulation with the tracer released at the pole. The area covered by appreciable levels of tracer has increased but virtually all of it is trapped in the vicinity of the pole. This system is called the stratospheric polar vortex.

When the tracer is released midway between the pole and the Equator (figure 2) it rapidly spreads throughout the mid latitudes. Remarkably, however, only tiny amounts penetrate into the polar vortex region.

For the tracer released at the Equator (figure 3) most remains in a band around the Equator although some disperses into mid latitudes.

The remarkable isolation of the polar vortex is believed to be a key factor in the formation of the Antarctic ozone hole. The video was extremely useful at the conference, demonstrating not only the vortex isolation effect but also the necessity of using models of unprecedented resolution to represent such effects correctly.

The task of producing the video sequences started the previous week when Simon Cooper of DAMTP at Cambridge contacted the Atlas Centre to ask whether he could make some videos of UGAMP output. We replied that we would be delighted and discussed how an existing UGAMP display program could be adapted to produce output suitable for the video system. In the time available it was decided to produce a Computer Graphics Metafile (CGM) in Clear Text Encoding (see FRAM - the making of the video in FLAGSHIP 7 for the same technique being used for FRAM).

Simon came to Atlas at 4:15 on Friday afternoon. In an hour or so we had his program running on a Sun SPARCStation and by about 9 pm were in the middle of a run producing a first video test sequence (about 8 seconds of video). At this point he mentioned that he had another 3 long sequences to do and that the finished video, with titles, had to be in Cambridge by Saturday night!

Mercifully, all the new hardware for the video system (see Video System Enhanced in this issue) was by now installed, working and had software written for it, so we worked through much of the night and all the following morning and finished the job. Each of the sequences (release at pole, release at mid latitudes and release at the Equator) was recorded in turn on the Abekas video disk and then transferred onto two U-Matic video tapes at several speeds. Final editing, making up one U-Matic and two VHS copies, was done on Saturday afternoon (also on the Atlas Video Facility). Simon then returned to Cambridge and the tape was taken to Copenhagen.

The model used for these video sequences marks only the beginning of a long term effort towards more realistic global modelling; this will make severe demands on both human ingenuity and computer power for years to come.

I would like to extend my sincere thanks to Simon Cooper of DAMTP in Cambridge for his hard work in reprogramming the UGAMP display programs to produce CGM output and to Dr Michael McIntyre FRS (also of DAMTP) for his great assistance with the scientific background to this article. Simon has now moved to the US and Dr McIntyre would be delighted to hear from any skilled programmer interested in joining the UGAMP Group in Cambridge to keep up the momentum in this challenging area. May I also thank Nick Gould of Atlas's Numerical Analysis Group for his loan of the SPARCStation for the weekend. Further reading on the UGAMP project may be found in James and Hoskins, Supercomputer, vol 7, pp 104-113, 1990.

The Atlas Video Facility, already responsible for the output seen at several shows and in a BBC Open University Oceanography program, has recently been considerably enhanced. In its new configuration, it is not only very much faster but can also provide users with sequences at a variety of playback speeds (forwards or backwards). It has been busy from the day it was upgraded, producing new FRAM sequences, demonstrating the latest work from the UGAMP project and contributing to the SERC-IBM Study Agreement meeting, the NERC 25th Anniversary video and the RAL Open Day video.

The original video system consisted of a number of components that processed the graphics metafile or image file:

All the equipment involved in this process, except the 68010-based Topaz system, is still part of the new system, but two new components have revolutionized the speed and method of making videos.

A new Topaz system, based on a 68030 running at 33 MHz, has replaced the original and is dramatically faster in every aspect of its work, including reading graphics data from its disk; some simple sequences produced by the PHIGS system on a Sun have been rendered at 2 or 3 pictures per second, a speed inconceivable on the original Topaz computer.

The more radical change is that the pictures produced by the Topaz system are no longer recorded onto tape but onto disk: an Abekas A60 digital video disk. This is a magnetic device that can store 30 seconds (750 frames) of video in full broadcast digital format. It can record and play back in real time, corresponding to a data rate of more than 25 Mbytes/sec; by comparison, the Cray disks achieve 10 Mbytes/sec and the IBM disks 4.5 Mbytes/sec. Recording each frame onto the Abekas takes less than a second - a staggering improvement over the original 40 seconds.
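The figures quoted above can be cross-checked with a little arithmetic. The sketch below uses only numbers taken from this article (750 frames, 30 seconds, 25 Mbytes/sec as a lower bound, and under 1 second versus 40 seconds to record a frame); the function and constant names are illustrative and are not part of any Atlas software.

```python
# Back-of-envelope check of the Abekas A60 figures quoted in the article.
# All names are illustrative; only the numeric inputs come from the text.

DISK_FRAMES = 750        # disk capacity in frames
DISK_SECONDS = 30        # disk capacity in seconds of video
DATA_RATE_MBYTES = 25    # quoted lower bound on the real-time rate, Mbytes/sec


def frames_per_second(frames: float, seconds: float) -> float:
    """Frame rate implied by a capacity given in both frames and seconds."""
    return frames / seconds


def mbytes_per_frame(rate_mbytes_per_sec: float, fps: float) -> float:
    """Storage per frame implied by a real-time data rate."""
    return rate_mbytes_per_sec / fps


def fill_time_minutes(frames: float, seconds_per_frame: float) -> float:
    """Time to record a full disk's worth of frames, one frame at a time."""
    return frames * seconds_per_frame / 60


fps = frames_per_second(DISK_FRAMES, DISK_SECONDS)
print(f"frame rate: {fps:g} frames/sec")
print(f"implied frame size: at least {mbytes_per_frame(DATA_RATE_MBYTES, fps):g} Mbyte")
print(f"750 frames at 1 s/frame:  {fill_time_minutes(DISK_FRAMES, 1):g} minutes")
print(f"750 frames at 40 s/frame: {fill_time_minutes(DISK_FRAMES, 40):g} minutes")
```

On these figures, recording 30 seconds of video (ignoring rendering time) drops from more than eight hours at 40 seconds per frame to at most about twelve and a half minutes.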

Once the video sequence is complete (or the disk is full) the Topaz tells the animation controller to record from the disk to video tape. Since the Lyon Lamb can achieve frame-accurate edits, there is no difficulty in constructing sequences longer than 30 seconds.
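The way a long sequence is built up from disk-sized pieces can be sketched as follows. This is a minimal illustration, assuming the 750-frame disk capacity quoted in this article; `plan_segments` is a hypothetical helper, not the Topaz or Lyon Lamb control software.

```python
from typing import List, Tuple

# Illustrative only: split a long animation into segments that each fit on
# the video disk, so that each can be recorded to tape in turn.
DISK_FRAMES = 750  # Abekas capacity quoted in the article (30 s of video)


def plan_segments(total_frames: int,
                  disk_frames: int = DISK_FRAMES) -> List[Tuple[int, int]]:
    """Return (first_frame, last_frame) pairs, each no longer than the disk."""
    if total_frames < 0 or disk_frames <= 0:
        raise ValueError("frame counts must be non-negative, disk size positive")
    return [
        (start, min(start + disk_frames, total_frames) - 1)
        for start in range(0, total_frames, disk_frames)
    ]


# A hypothetical 70-second sequence at 25 frames/sec is 1750 frames.
for first, last in plan_segments(1750):
    print(f"render frames {first}-{last} to disk, then record to tape")
```

Because each tape edit is frame-accurate, the segments join on tape without gaps or repeated frames.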

The Abekas disk has its own controller, accessible from a control panel or via remote links, which provides facilities for playing back at any speed from 1/1000th to 32x real time, forwards or backwards and also for constructing loops and sequences. As a result, any animation sequence may be stored just once on the Abekas disk and then dropped onto video tape at a variety of speeds, giving the user the choice of different viewing times without redoing the animation.
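To give a feel for the range of viewing times this allows, the sketch below works out how long a full 30-second disk would run on tape at a few playback speeds. It assumes the speed setting acts as a simple multiplier on real time; the function name and the intermediate speeds chosen are illustrative.

```python
from fractions import Fraction

# Illustrative only: viewing time of a stored sequence at a given playback
# speed, assuming the speed is a plain multiplier on real time.


def playback_seconds(stored_seconds, speed) -> Fraction:
    """Duration on tape of `stored_seconds` of video played at `speed` x real time."""
    speed = Fraction(speed)
    if speed <= 0:
        raise ValueError("speed must be positive (direction is handled separately)")
    return Fraction(stored_seconds) / speed


speeds = [
    ("1/1000th", Fraction(1, 1000)),
    ("half speed", Fraction(1, 2)),
    ("real time", 1),
    ("32x", 32),
]
for label, speed in speeds:
    seconds = playback_seconds(30, speed)
    print(f"{label:>10}: {float(seconds):g} seconds on tape")
```

At the slowest setting a full disk would take 30,000 seconds (over eight hours) to play through, while at 32x it lasts just under one second.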

A final and most significant advantage of the Abekas disk is that its contents can be dumped to a magnetic tape and so complete video sequences can be archived. At present we are doing this through the Topaz computer, but hope to attach an Exabyte drive directly to the Abekas in the future. This will allow video sequences to be reproduced, at full master quality, without keeping the original input file or reprocessing it.

The first sequences produced on the new system were used at the Oceanology conference in Brighton in March this year; these showed a simulated flight around the Triple Junction area in the Indian Ocean seabed. The same sequence was used by Granada Television for a new program on the environment. Most recently the UGAMP video sequences (see article in this issue) were only possible as a result of the much faster throughput provided by Topaz 2 and the Abekas disk.

For more information on the Atlas Video Facility, please contact me on RAL ext 6565.