Front cover drawn by Nicola Amos, the daughter of Trevor Amos (Operations Manager).

As reported in FLAGSHIP 27, we have installed a small "farm" of DEC Alpha workstations to form the core of our initial General Purpose Unix Service. The first phase of this service is now ready for use and you are encouraged to try it. This article describes the configuration and the future development of this service.

The hardware consists of five DEC 3000 model 400 workstations connected together by FDDI and also connected into the RAL site FDDI network.

Each CPU of this farm has about twice the power of one IBM 3090 processor. One of the machines will provide the main interactive service. This machine has 128 Mbytes of memory and 26 Gbytes of directly connected disk. The other four machines each have 64 Mbytes of memory and 2 Gbytes of local disk. One gigabyte of this local disk will be available as scratch space on each machine. It is planned to increase the memory available on some of the back-end machines to enable larger problems to be tackled. Batch work will run under the control of NQS across all five machines. All the machines will share a common user home file system local to the farm, and via FDDI they will have access to the Atlas Central Unix Filestore and to the 7 Terabytes of tape data in the Atlas Data Store.
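As a rough check on the figures above, the Python sketch below totals the farm's resources. It takes the per-machine numbers from this article and assumes each DEC 3000 model 400 has a single CPU. Because the article does not say how much of the interactive machine's 26 Gbytes is scratch, it counts none there. `Node` and `farm_totals` are illustrative names only.

```python
from dataclasses import dataclass


@dataclass
class Node:
    """One workstation in the farm (figures from the article)."""
    role: str
    memory_mb: int
    local_disk_gb: int
    scratch_gb: int  # assumption: 0 where the article gives no figure


# One interactive machine plus four back-end machines.
FARM = [Node("interactive", 128, 26, 0)] + [
    Node("back-end", 64, 2, 1) for _ in range(4)
]


def farm_totals(nodes, cpu_vs_3090=2.0):
    """Sum resources; cpu_vs_3090 is the article's 'about twice' estimate."""
    return {
        "memory_mb": sum(n.memory_mb for n in nodes),
        "local_disk_gb": sum(n.local_disk_gb for n in nodes),
        "scratch_gb": sum(n.scratch_gb for n in nodes),
        # Assumes one CPU per workstation.
        "ibm3090_equivalents": cpu_vs_3090 * len(nodes),
    }


print(farm_totals(FARM))
```

This gives 384 Mbytes of memory, 34 Gbytes of local disk, 4 Gbytes of back-end scratch space and roughly ten IBM 3090 processors' worth of CPU. The last figure is only as good as the "about twice" estimate.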

These machines will run DEC's version of OSF/1, the operating system from the Open Software Foundation. This is a 64-bit Unix operating system; one consequence is that file systems are no longer limited to a maximum of 2 Gbytes. At the time of writing, the following third-party software is installed: the NAG Fortran subroutine library; a wide selection of CERN software; the NAG graphics library; RAL-GKS; RAL-CGM; and a wide range of public domain utilities such as elm, xmosaic, tin and emacs. This list will no doubt have grown by the time this article is published. OSF/1 includes X11R5 with the usual X utilities that one would expect. There is also a range of DECwindows equivalents, familiar to users of Ultrix and VMS: the Session Manager, BookReader, Mail viewer, DECterm and others. Other software will be installed to meet the needs of the user community; if there are packages that you, as a potential user, feel should be available on a general purpose Unix system, please let us know.

This farm will also serve as a test bed for the development of message passing parallel programs; as a consequence the farm has been isolated by a router from the rest of the Atlas Centre FDDI network.

We have chosen the farm structure for a central Unix service because of its flexibility. Extra machines or disks can be added at a small incremental cost, or other larger machines can be added to the farm. Another advantage is the greater total network bandwidth for uses such as remote tape access.

During the initial phase, which will probably last until the end of 1993, this service will be available to authorised users without CPU rationing, and we shall not be accounting its use. During this period there may be more scheduled development sessions than usual while we fine-tune the configuration, establish operating procedures and install extra software, but the system is already mature enough to support useful scientific work. Accounting and rationing will also be developed during this period, with a view to putting this service on a par with other Atlas Centre services in 1994.

All existing users of Atlas Centre services are eligible for a userid, which can be requested from User Support (us@ib.rl.ac.uk). Non-users, whether SERC staff or academics eligible for SERC support, who wish to use the service now should contact me or Margaret Curtis in the Atlas Centre: John.Gordon@rl.ac.uk or Margaret.Curtis@rl.ac.uk. In 1994, users will be able to transfer existing IBM grants to this farm, and we expect that similar arrangements will be made for Cray users. New grants requesting time on this farm can be submitted to the February 1994 grant round. Subject areas that receive block allocations of time on Atlas services will be entitled to the same share of these new services. All formal applications for time on this service should be made using form AL54. Pump-priming applications should be sent to Dr B W Davies at RAL; grant applications should be sent to the relevant Research Council.

During September the Atlas Centre Y-MP was upgraded with the addition of a 256 Mword SSD (Solid-state Storage Device).
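For scale, the sketch below converts the SSD size into bytes. It assumes a 64-bit (8-byte) Cray word and takes 1 Mword as 2^20 words. Under those assumptions the 256 Mword SSD holds 2 Gbytes. The function names are hypothetical.

```python
# Assumptions: a Cray Y-MP word is 64 bits (8 bytes), and 1 Mword is
# taken as 2**20 words.
WORD_BYTES = 8
WORDS_PER_MWORD = 2**20


def mwords_to_bytes(mwords: int) -> int:
    """Convert a size in Mwords to bytes."""
    return mwords * WORDS_PER_MWORD * WORD_BYTES


def mwords_to_gbytes(mwords: int) -> float:
    """Convert a size in Mwords to Gbytes (taking 1 Gbyte = 2**30 bytes)."""
    return mwords_to_bytes(mwords) / 2**30


print(mwords_to_bytes(256), mwords_to_gbytes(256))  # 2147483648 2.0
```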

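Use of the SSD will be divided three ways: as ldcache space, moved out of main memory; as swap space for interactive and small batch jobs; and as Secondary Data Segments (SDS) for user jobs.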

Of the three categories, only the last is available as a resource for user jobs (requested through an additional QSUB directive), and by default your udb (user database) entry will not permit access to it. If you wish to find out more about using the SSD, or would like to try some of the above options, contact User Support by e-mail at US@ib.rl.ac.uk.

The Cray User Meeting on Friday 10 September was very timely, in that the new 256 Mword SSD had been delivered a few days earlier.

After Professor Catlow's opening remarks, Roger Evans gave a brief summary of the last six months' operations. The Y-MP has been extremely reliable, so that currently the major causes of service interruptions are the planned breaks due to air conditioning maintenance, routine preventive maintenance and system development. Within the scheduled time, the Y-MP service has averaged the exceptionally good result of 99.6% availability.

Cray had conducted a performance analysis of the Y-MP during June and found that it was generally running very well. Users whose jobs were found to be performing I/O inefficiently will be offered help; others, who appeared to recompile their programs for every run rather than saving binary copies of the code, will be offered advice on utilities such as the Unix make command.
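The saving that make offers comes from a simple rule: rebuild a target only when it is missing or older than the files it depends on. The Python sketch below applies that rule to a single source file and binary. It illustrates the idea and does not replace make. The function name and file names are hypothetical.

```python
import os


def needs_rebuild(source: str, binary: str) -> bool:
    """Return True if `binary` is missing or older than `source`.

    This is the basic timestamp rule make applies to a target and one
    prerequisite; real makefiles handle many files and dependencies.
    """
    if not os.path.exists(binary):
        return True  # never built
    # Rebuild only if the source has been modified since the last build.
    return os.path.getmtime(source) > os.path.getmtime(binary)
```

A job script could call the compiler only when `needs_rebuild("prog.f", "prog")` is true, and otherwise run the saved binary directly.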

The frequency of system accounting has been cut from every two hours to twice a day, reducing overheads. It has been suggested that accounting for disk space by file ownership is more efficient than accounting by home directory.
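Accounting by file ownership charges each file to its owner wherever the file sits. Accounting by home directory instead charges everything under a home directory to that directory's user. The Python sketch below totals file sizes by numeric owner (uid) under a directory tree. It is a hypothetical illustration of the idea, not the Atlas Centre's accounting software, and it does not show which method is cheaper to run.

```python
import os
from collections import defaultdict


def usage_by_owner(root: str) -> dict[int, int]:
    """Total file sizes (bytes) under `root`, keyed by owning numeric uid."""
    totals: dict[int, int] = defaultdict(int)
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            # lstat: a symbolic link is charged for the link itself,
            # not for the file it points to.
            st = os.lstat(os.path.join(dirpath, name))
            totals[st.st_uid] += st.st_size
    return dict(totals)
```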

Ways of using the new SSD were described briefly; for more details, see the separate article in this issue. The initial setup would move the ldcache space from main memory to the SSD, to ease contention for main memory, and would also use the SSD as swap space for interactive and small batch jobs, to improve response. Secondary Data Segments (SDS) offer users better I/O performance and the ability to declare SDS-resident Fortran arrays. SDS will come into service shortly, as experience is gained in scheduling it.

Chris Plant described some application software changes in the last six months:

Gaussian 90 had been replaced by Gaussian 92 and Abaqus 4.9 by Abaqus 5.2, and IMSL-PL was being tested. Unichem and MPGS were due for imminent updates to v2.0 and v5.0 respectively. In the area of Computational Fluid Dynamics, Fluent, NEKTON, Star-CD and Feat are available on the Y-MP to all academic holders of workstation licences.

The latest Cray Scientific Library, libsci v8.0, is on test and may be accessed by adding -l /lib/craylib/libsci.a to the cf77 command line.

Chris also drew attention to the fact that RAL documentation is now held on line in a directory tree under the environment variable $DOC. Documentation is physically on UNIXFE but may be accessed transparently from either UNIXFE or the Y-MP. For more details, see the separate article in this issue.

John Gordon presented detailed statistics on the job turnaround in different classes. The class e6 had relatively poor turnaround and an option for a new large memory job class with a smaller time limit was put forward.

The topic of front end machines and different routes for job submission was raised as a discussion point for the afternoon. The multiplicity of front ends and job submission routes caused an overhead that could be reduced, if the user community wished, given the apparent pervasiveness of Unix and TCP/IP networking. John described the new option of job submission using the TCP/IP ftp command. The client end software for ftp is widely available and Cray have modified the Y-MP end to accept job submission into the NQS batch queues from an ftp process. This is less convenient than remote NQS job submission because there is no automatic return of job output, but it has the advantage of being freely available to a very wide community.

Stephen Wilson gave a presentation on some new benchmarks in Quantum Chemistry. The benchmarks were concerned with accuracy rather than speed. It has recently become possible to show that the widely used basis set methods for self-consistent field calculations do converge to the exact solution, provided that the basis set is refined in a systematic way. The conclusion is important because exact solutions can only be calculated for atoms and diatomic molecules, whereas the basis set method is used for arbitrary molecules.

Brian Davies reported briefly on recent happenings at the SERC supercomputer management level.

The ABRC Sub-committee on Supercomputing had received the report it had commissioned from Coopers and Lybrand on options for introducing charging for the use of supercomputers. The report was generally in favour of charging, but it also recommended that options for the future structure of the national supercomputing service should be considered, irrespective of charging. The company had been asked to produce a further report on structural options, to be completed during the autumn.

The procurement of a new massively parallel supercomputer had proceeded to benchmarking, with a decision on the supplier expected in late October. In parallel, there had been an Operational Requirement on the running of the service; RAL and Daresbury Laboratory would be bidding jointly to run the new service.

In the afternoon open discussion it was decided to introduce a new queue for jobs of up to 30 Mwords of memory and 600 seconds CPU time to improve the distribution of turnaround times.
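The new queue's limits can be expressed as a simple eligibility check. In the sketch below the constants come from the decision above. The function name is hypothetical, and the byte figure assumes an 8-byte word with 1 Mword = 2^20 words. The sketch does not reproduce the actual NQS queue configuration.

```python
# Limits of the new queue agreed at the meeting.
NEW_QUEUE_MAX_MWORDS = 30
NEW_QUEUE_MAX_CPU_SECONDS = 600

# Assumption: 8-byte words and 1 Mword = 2**20 words.
NEW_QUEUE_MAX_BYTES = NEW_QUEUE_MAX_MWORDS * 2**20 * 8  # 240 Mbytes


def fits_new_queue(memory_mwords: float, cpu_seconds: float) -> bool:
    """True if a job's requested memory and CPU time are within both limits."""
    return (memory_mwords <= NEW_QUEUE_MAX_MWORDS
            and cpu_seconds <= NEW_QUEUE_MAX_CPU_SECONDS)


print(fits_new_queue(25, 500))   # True
print(fits_new_queue(25, 1200))  # False: too much CPU time
```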

The proposed SSD configuration was agreed; users would be informed of any changes that were made to tune performance so that good or bad consequences could be fed back to RAL staff.

The forward look and the possibility of upgrades to the Y-MP were discussed. The possible upgrades to the Y-MP, namely more disk space and an additional I/O cluster, were considered expensive, and in view of the lengthy timescales involved in bids for upgrades, it was thought more appropriate to bid for a new vector supercomputer in due course.

The meeting noted with enthusiasm the offer made by Digital in June 1993, in which a Cray Y-MP/EL was listed at £75K. The meeting thought that such a machine would make an invaluable improvement to the Unix front end facilities, offering binary compatibility with the Y-MP8I and the possibility of doing compilation, testing and debugging on the front end. If a Y-MP/EL with more disk space than the Digital offer could be obtained for about £100K, the meeting would strongly recommend that funding should be sought for its purchase.

Professor Catlow informed the meeting that SERC's Science and Materials Board was considering ways in which more effective use could be made of the national supercomputing centres by forming a modest number of consortia, each with larger allocations of CPU time. The consortia would function in much the same way as the Collaborative Computational Projects, with effort being provided to produce efficient code for communal use.

A clone of CERN's Central Simulation Facility (CSF) is being commissioned at RAL as a joint project between the Central Computing and Particle Physics Departments.

CSF uses a farm of Hewlett-Packard RISC workstations, with a Unix operating system, to provide an environment for CPU-intensive work such as event simulation. The RAL CSF is a facility for high energy physicists within the UK, and a trial service has been announced recently.

The RAL service will run on six Hewlett-Packard HP9000 model 735 RISC workstations connected via FDDI. Each machine has 48 Megabytes of memory and one Gigabyte of internal disk. Additional disk space for home area and scratch space is mounted via NFS. One machine will serve as an interactive front-end for the preparation and submission of jobs via NQS to the five back-end machines. Tape access will have the same user interface as at CERN, but will use the RAL Virtual Tape Protocol to provide access to data in the Atlas data store. This approach ensures that users have the same environment at RAL as that implemented at CERN and that the RAL CSF service is fully integrated into the central computing services.

Each workstation can deliver scalar performance greater than that of all the IBM 3090/400E processors combined. Farms of RISC workstations provide an effective way of meeting the increasing demand for raw CPU time.

For many years the UK academic community has suffered from e-mail addresses with different ordering from the rest of the world. At last we are getting into step.

When the JANET network was set up, a strategic decision was taken that network addresses should be big-endian: the most significant part of the host name (UK) should come first, and successive elements should follow in decreasing order of significance from left to right, e.g. UK.AC.RL.V2. This method had advantages, but there was one overriding disadvantage: the Internet had adopted the exact opposite (little-endian) method, in which the last element signifies the highest level of addressing (e.g. TSO.DESY.DE), and this had spread to the rest of the world, leaving the UK isolated.

I have often jokingly reacted to criticism of the UK's position by saying that we drive on the "wrong" side of the road too, but whereas the UK is only one of many countries that drive on the left, in e-mail addressing we stand alone.

In theory the different approaches should not pose a problem. E-mail gateways should perform full name reversal, so that e-mail reaching the UK from abroad arrives with all the addresses in UK order; similarly, our mail should reach overseas recipients with their name ordering. In practice, though, gateways have not always reversed all addresses correctly, and e-mail addresses find their way abroad in published journals, on letter headings, business cards and so on. This adds to the confusion when people use the address as given, only to find that it cannot reach the recipient. This has been a problem both for people in the UK mailing abroad and for the rest of the world trying to reach the UK. It has led to a perception, only slowly being reversed, that the UK is electronically isolated, and it has also reinforced stereotypes about "English eccentricity".

With the introduction of JIPS, many UK e-mail users are now effectively connected directly to the Internet and so can use "world order" (little-endian) names directly. This split in the academic community has highlighted the problems of having a different naming order. The response is a plan by the JNT to allow users to specify e-mail addresses in the same order as the rest of the world. This change is planned for early 1994. Many UK universities have already pre-empted this decision by supporting world order naming in all their internal mailers and recommending that their users quote their e-mail addresses in world order (e.g. fred@vax.OXFORD.AC.UK). Translation to UK order is handled by site mail hubs, which order the addresses according to the destination.
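The reversal that gateways and site mail hubs are expected to perform is mechanical. Split the domain part of an address at the dots, reverse the order of the components, and leave the local part before the @ alone. The Python sketch below shows only that core step (function names hypothetical). A real gateway must also find and rewrite every address in the message headers, which is where errors tend to creep in.

```python
def reverse_domain(domain: str) -> str:
    """Reverse the dot-separated components of a domain name.

    The same operation converts UK order to world order and back,
    because reversing twice gives the original name.
    """
    return ".".join(reversed(domain.split(".")))


def reverse_address(address: str) -> str:
    """Reverse the domain part of local@domain, leaving the local part alone."""
    local, at, domain = address.rpartition("@")
    return f"{local}{at}{reverse_domain(domain)}"


print(reverse_domain("UK.AC.RL.V2"))           # V2.RL.AC.UK
print(reverse_address("j.c.gordon@uk.ac.rl"))  # j.c.gordon@rl.ac.uk
```

Dots in the local part (as in j.c.gordon) are untouched, because only the text after the last @ is reversed.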

Central Computing Department has decided to join them and is recommending that the whole of RAL make the changeover by the end of 1993. All RAL users are now recommended to give their e-mail addresses in world order, which should now be treated as the canonical form of e-mail address on the RAL site. RAL central services will change their software so that mail presented to the user is also shown in world order. There is already a site mail hub in operation to ensure that mail leaving the RAL site will be in the correct order for the receiving site. Because so many systems at RAL are affected, they may not all make the change at the same time: some are already working in world order, and others may take a considerable time to make the change. Each system will inform its users when the change affects them, and all systems will continue to interwork during the changeover. Any RAL systems administrators who want to follow our lead and would like advice on this change can contact me in the first instance.
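A site mail hub of the kind described above can hold addresses in canonical world order and convert them only when the receiving site still expects UK order. The sketch below illustrates that decision. The set of UK-order sites, the site name `oldsite.ac.uk`, and the rule that a domain starting with `uk` is in UK order are all assumptions for illustration, not RAL's hub configuration.

```python
# Hypothetical: destination sites (in world order) that still expect
# UK-order addresses. A real hub would read this from its configuration.
UK_ORDER_SITES = {"oldsite.ac.uk"}


def reverse_domain(domain: str) -> str:
    """Reverse the dot-separated components of a domain name."""
    return ".".join(reversed(domain.split(".")))


def to_world_order(domain: str) -> str:
    """Return a domain in world order.

    Heuristic (an assumption): a name that starts with 'uk' but does not
    end with it is taken to be in UK order and is reversed.
    """
    labels = domain.lower().split(".")
    if labels[0] == "uk" and labels[-1] != "uk":
        return reverse_domain(domain)
    return domain


def address_for_destination(address: str, dest_site: str) -> str:
    """Rewrite an address into the order the destination site expects."""
    local, at, domain = address.rpartition("@")
    canonical = to_world_order(domain)  # world order is the canonical form
    if to_world_order(dest_site).lower() in UK_ORDER_SITES:
        canonical = reverse_domain(canonical)  # convert for a UK-order site
    return f"{local}{at}{canonical}"


print(address_for_destination("j.c.gordon@rl.ac.uk", "oldsite.ac.uk"))
# j.c.gordon@uk.ac.rl
print(address_for_destination("j.c.gordon@uk.ac.rl", "tso.desy.de"))
# j.c.gordon@rl.ac.uk
```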

Users elsewhere in the UK who wish to address e-mail to RAL should continue to use the form recommended by their local system managers. So, if your site is still using UK order, mail to j.c.gordon@uk.ac.rl will still reach me, even though I advertise my mail address as j.c.gordon@rl.ac.uk.

During the next six months you should apply the same care when using UK e-mail addresses as you have always done with foreign ones. It is hoped that by early next year everyone in the UK will be able to address everyone else using the same naming convention as the rest of the world. Then, perhaps, we can enjoy a seamless connectivity with colleagues across the world.

(The Best Thing Since Sliced Bread)

As the global network continues to grow, finding the piece of news, information or software you require becomes a daunting prospect. There are nearly 2,000,000 machines available worldwide and most offer some information.

Navigating through the wealth of available material is not an easy task. In the past, a number of attempts have been made to make sense of this mass of information; FTP, Archie and WAIS have all helped, but there has never been a single mechanism by which all this material could be accessed... until now.

World Wide Web (WWW) is a concerted attempt to combine all the existing information services worldwide and present them in a friendly, easy-to-use way.

WWW originated at CERN, in collaboration with the National Center for Supercomputing Applications (NCSA) in Illinois. Its usage has been growing rapidly, doubling every four months or so, and it now seems set to become the pre-eminent information access system.

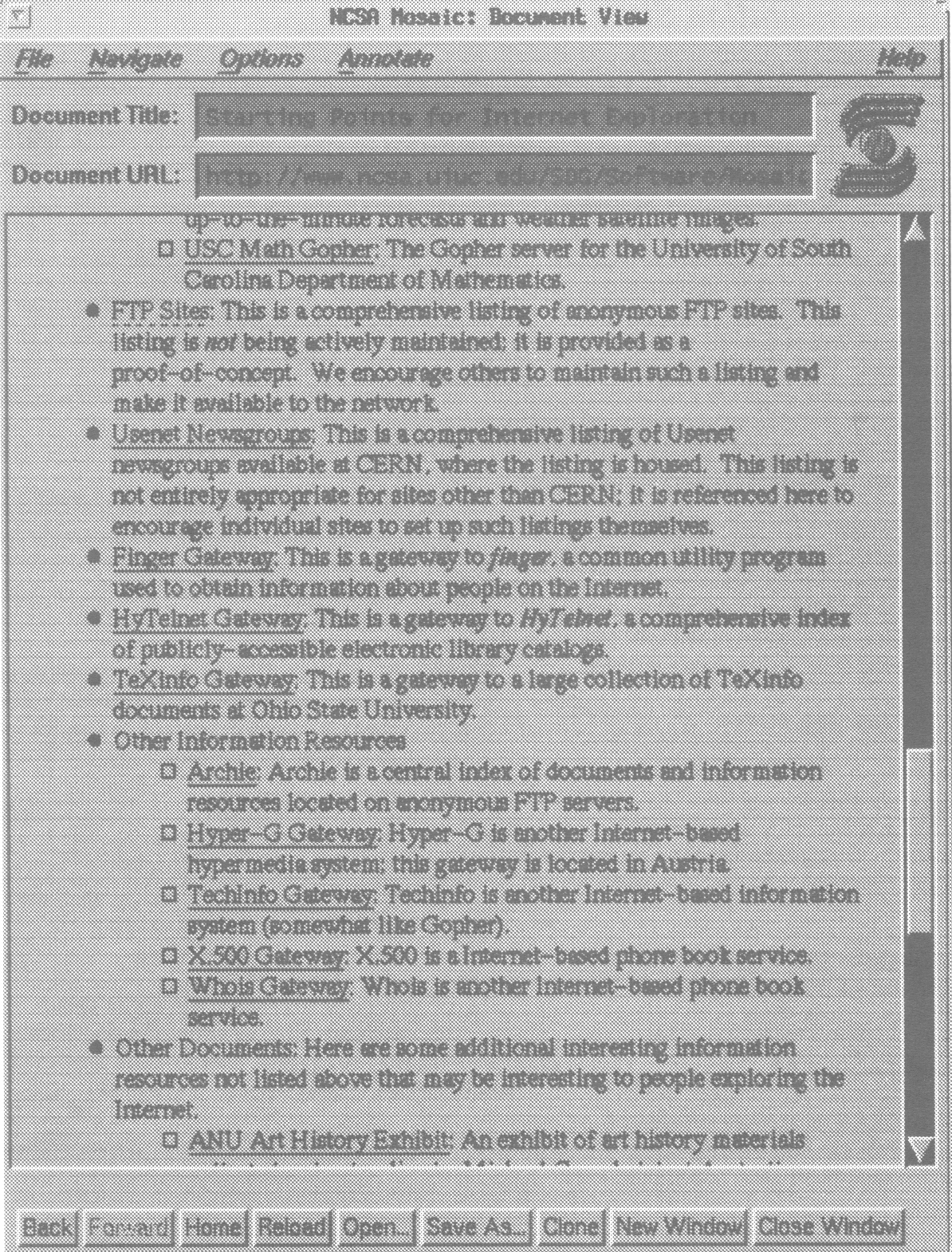

WWW is both powerful and easy to use. It is based on a hypertext system that allows documents to be constructed of text, images, sound or even animation. Parts of each document can be located anywhere on the network; simply select the next item you wish to look at, and your browser will fetch it and display it to suit your current terminal. A range of browsers is available for most platforms.

The hypertext language that WWW uses is called HyperText Markup Language (HTML). It was derived from the Standard Generalized Markup Language (SGML), with which it has many features in common.

What makes HTML special is that any part of a document can be marked by the author as a link. A link acts as a pointer, which could lead to another part of the text, an index or a definition, an image stored locally as a GIF file, or another HTML document stored on a machine anywhere in the world. It could even point to another information source: a GOPHER server, an anonymous FTP archive, Usenet news or a WAIS server.
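One way to see the idea of a link is to pull the links out of an HTML fragment and look at the kind of source each one points to. The Python sketch below uses the standard library's `html.parser` and `urllib.parse`. The scheme-to-source table follows the list above and is a simplification. The example URLs other than the RAL home page are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit

# Simplified mapping from URL scheme to the kind of source a link reaches.
SOURCE_KINDS = {
    "http": "WWW (HTML) server",
    "gopher": "Gopher server",
    "ftp": "anonymous FTP archive",
    "news": "Usenet news",
    "wais": "WAIS server",
    "": "relative link (resolved against the current document)",
}


class LinkCollector(HTMLParser):
    """Collect the href targets of <a> elements in an HTML document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def link_kind(url: str) -> str:
    """Classify a link by its URL scheme."""
    return SOURCE_KINDS.get(urlsplit(url).scheme.lower(), "other")


page = ('<p>See the <a href="http://www.rl.ac.uk/rutherford.html">RAL home page</a>, '
        '<a href="gopher://gopher.example.org/">a Gopher menu</a> or '
        '<a href="news:comp.infosystems.www">a newsgroup</a>.</p>')
collector = LinkCollector()
collector.feed(page)
for url in collector.links:
    print(url, "->", link_kind(url))
```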

WWW was specifically designed to provide access to existing network information systems, so your WWW browser can provide unprecedented access to information across the world. Unlike many other systems, however, WWW is not structured or hierarchical: as its name suggests, it is a web, a complex mesh of information. Far from making navigation difficult, the vast number of interlinks means that users can quickly reach the information they require.

For those wishing to provide information, WWW offers an easy means of integrating existing databases and news systems, using simple shell scripts to generate HTML documents automatically whenever someone accesses a particular page. In this way, your site can offer up-to-date information dynamically with little administrative overhead. RAL has used WWW in this way to create an information service that offers news and current information on a range of topics.
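The article describes shell scripts. As a Python illustration of the same idea, the sketch below builds an HTML page from a list of news items each time it is called. It escapes the text so that characters such as `&` and `<` display correctly. How the web server runs such a script on each access is not shown, and the function name and item format are hypothetical.

```python
import html
from datetime import date


def news_page(title: str, items: list[tuple[str, str]], today: date) -> str:
    """Build an HTML page listing (headline, url) news items.

    Intended to be run on every request so the page is always current.
    """
    safe_title = html.escape(title)
    lines = [
        f"<title>{safe_title}</title>",
        f"<h1>{safe_title}</h1>",
        f"<p>Generated on {today.isoformat()}</p>",
        "<ul>",
    ]
    for headline, url in items:
        # Escape text and attribute values so '&', '<' and quotes are safe.
        lines.append(
            f'<li><a href="{html.escape(url, quote=True)}">'
            f"{html.escape(headline)}</a></li>"
        )
    lines.append("</ul>")
    return "\n".join(lines)


print(news_page("RAL News", [("Y-MP SSD installed", "ssd.html")], date(1993, 10, 1)))
```

Because the page is rebuilt from the current item list on every call, adding an item to the list is all that is needed to update the published page.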

The address of the RAL service's home page is http://www.rl.ac.uk/rutherford.html. This is also the default page for browsers on UNIXFE, the IBM 3090 and the OSF/1 service.