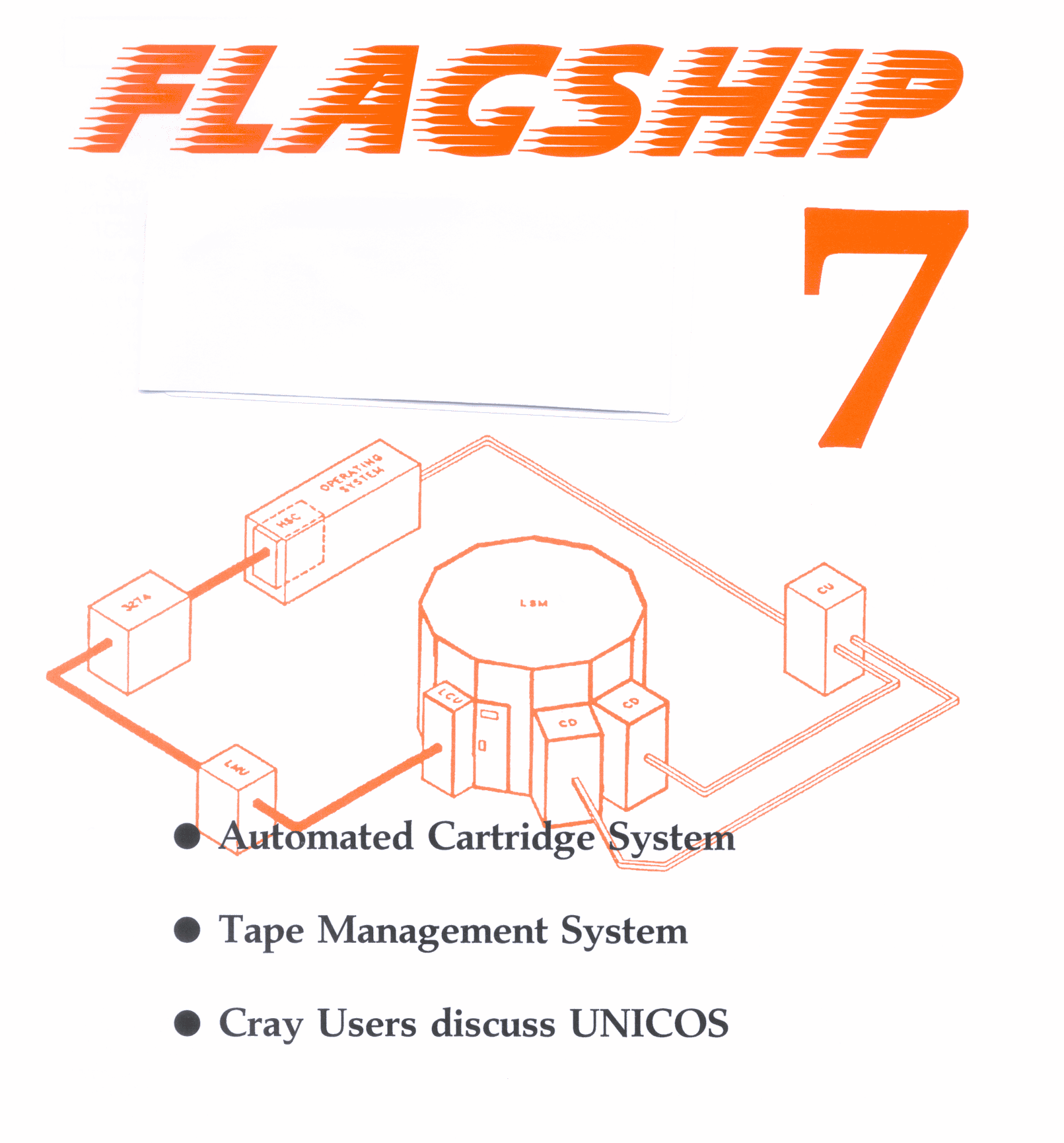

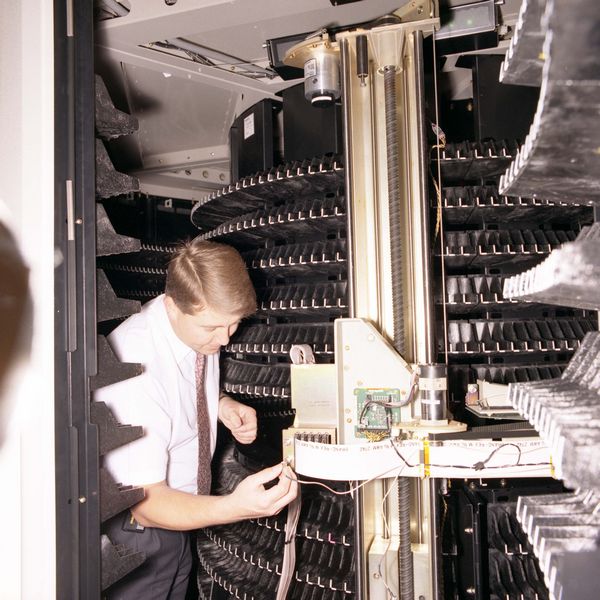

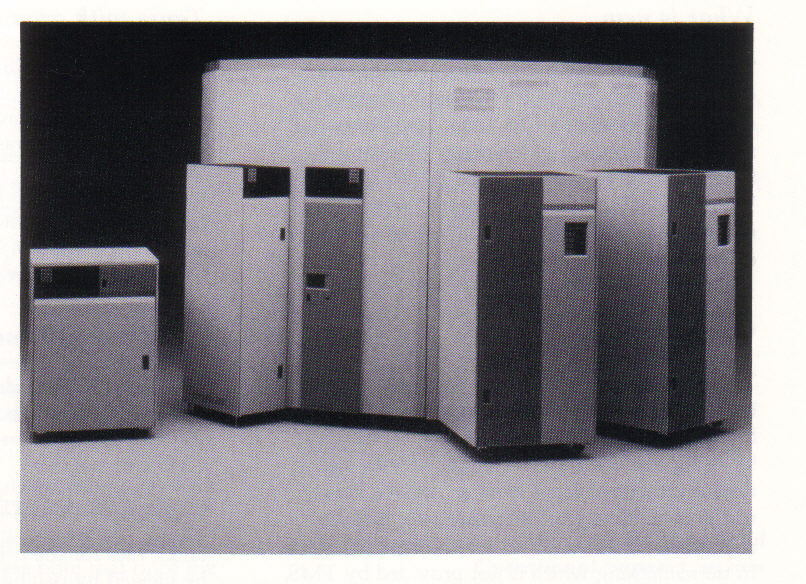

The StorageTek Automated Cartridge System (see article in FLAGSHIP 6) was duly delivered to the Atlas Centre on Wednesday 22 November 1989. The pictures show the ACS at various stages of its installation in the upper machine room. By the following Tuesday the ACS was completely assembled and ready to be put through its paces.

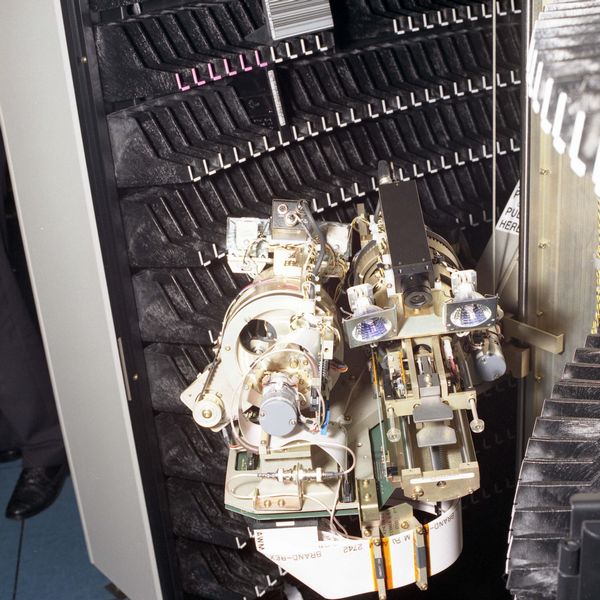

The largest element of the device is the Library Storage Module (LSM), an annulus-shaped box 8 feet high and 11 feet in diameter. A single door gives access into the ACS; the door also contains the Cartridge Access Port (CAP) for entering and ejecting tapes. The inner face of the external wall and the internal wall are both covered with racking, which can hold up to 5,500 IBM 3480 cartridge tapes. Each tape can hold up to 200 Megabytes of data, giving the ACS a total capacity of just over 1 Terabyte. The robot is centrally pivoted and rotates around the space between the inner and outer walls. It has two cameras to read the bar-coded labels on the cartridges and two hands for picking cartridges from the racks, one of each for each wall. Cartridges to be entered into the ACS are placed in the CAP, which can hold up to twenty-one at a time, and the robot moves them from the CAP to appropriate slots in the racks. Cartridges are ejected by the robot fetching them from the racks and placing them in the CAP, so in normal operation there is never any need to open the door and enter the ACS. The first four pictures show StorageTek personnel installing the Atlas ACS.
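As a quick check of the capacity figures above, here is a minimal Python sketch. It assumes decimal units (1 MB = 10^6 bytes, 1 TB = 10^12 bytes) and a completely full rack; the function names are illustrative only. It also works out how many CAP loads it would take to fill an empty LSM.

```python
import math

SLOTS_PER_LSM = 5_500      # cartridge slots in the racking
MB_PER_CARTRIDGE = 200     # IBM 3480 cartridge capacity
CAP_SIZE = 21              # cartridges the Cartridge Access Port holds at once

def lsm_capacity_tb(slots: int = SLOTS_PER_LSM, mb_each: int = MB_PER_CARTRIDGE) -> float:
    """Total capacity in terabytes (decimal) of a fully loaded LSM."""
    return slots * mb_each / 1_000_000   # MB -> TB

def cap_loads_to_fill(slots: int = SLOTS_PER_LSM, cap: int = CAP_SIZE) -> int:
    """Number of CAP batches needed to enter a full complement of cartridges."""
    return math.ceil(slots / cap)

print(lsm_capacity_tb())     # 1.1
print(cap_loads_to_fill())   # 262
```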

The main function of the ACS is to mount cartridges into the drives, which are bolted onto two of the external walls. The number of drives can vary, but the configuration at Atlas has two drives with four transports in each. The robot is capable of mounting and dismounting well over 100 cartridges per hour into these drives.

The movements of the robot are controlled by a Library Management Unit which is attached to the IBM 3090 where host software running under VM/XA controls the operation of the ACS. The cartridge drives are also connected to the 3090 and to the Cray. Interfaces have been written by CCD staff to all three of our operating systems, VM and MVS on the 3090 and COS (later UNICOS) on the Cray. Thus requests for cartridge tape mounts from any of these systems are handled automatically by the ACS without any human intervention. Of course, humans are still needed for putting cartridges into and out of the ACS and for sticking labels on to them!

After some initial teething problems the ACS has been performing very well and ran over the whole of the Christmas period, much of it unattended, without any problems. It is already being used for taking dumps of the disks belonging to all three systems. In future it will be used for migration of CMS minidisks and UNICOS datasets and for handling users' data tapes.

If it transpires that extra capacity is needed, the ACS can be expanded up to 16 LSMs! In a multi-LSM configuration, each LSM is attached to its neighbour by a pass-through port which allows the robots to pass cartridges to each other. However, it is unlikely that such a storage capacity will ever be needed, and in any case there is only room for about five LSMs in the Atlas Centre machine room.
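If every LSM in a larger configuration were fully loaded with the same 200 Megabyte cartridges, capacity would scale linearly with the number of LSMs. The sketch below puts numbers on the five-LSM and sixteen-LSM cases; the helper name is hypothetical and decimal units are assumed.

```python
CARTRIDGES_PER_LSM = 5_500
MB_PER_CARTRIDGE = 200
MAX_LSMS = 16

def acs_capacity_tb(n_lsms: int) -> float:
    """Capacity in TB (decimal) of an ACS with n fully loaded LSMs."""
    if not 1 <= n_lsms <= MAX_LSMS:
        raise ValueError(f"an ACS has between 1 and {MAX_LSMS} LSMs")
    return n_lsms * CARTRIDGES_PER_LSM * MB_PER_CARTRIDGE / 1_000_000

for n in (1, 5, MAX_LSMS):
    print(n, round(acs_capacity_tb(n), 1))
# 1 1.1
# 5 5.5
# 16 17.6
```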

Our most recent Cray Users' Meeting, held at the Atlas Centre on 30 November 1989, attracted a slightly higher turnout than usual with its invitation to come along and debate our planned transition to the UNICOS operating system in 1990. It was noticeable that many of the new faces were UNIX enthusiasts, already using UNIX on local computers or on workstations.

The meeting, chaired by Professor Pert from York, began with a summary of developments over the last six months presented by Tim Pett. The current Cray operating system COS had been upgraded to version 1.17 which Cray have said will be the last release of COS apart from bug fixes. The station software running under the IBM VM operating system had also been upgraded to 5.0, both these changes being mainly to maintain currency of the software.

The systems team developing the UNICOS operating system have progressed to version 5.0.12 running under GOS, and this will be the version which we plan to use for the user migration early in 1990. Along with the introduction of UNICOS, work has been done on communications into UNICOS based on the industry-standard communications protocol TCP/IP, and this is available from VM/CMS, the VAX/VMS front end, and other UNIX machines connected to the Rutherford Laboratory site Ethernet. TCP/IP provides interactive terminal access to UNICOS and can provide the underlying structure for more sophisticated protocols such as X-windows and NFS.

The most significant hardware change at the Atlas Centre has been the delivery (just a few days before the meeting) of a StorageTek STK 4400 robotic cartridge tape handler. This will shortly provide a filestore service to augment the Masstor M860 device, which is essentially full. The STK 4400 is an enclosed tape silo, 3.5 metres across and 2.5 metres high, weighing two tons when fully loaded with 5500 tape cartridges. The tape cartridges are the IBM 3480 standard, currently with a capacity of 200 MByte each. This gives the silo a capacity of 1.1 Terabyte compared with the 110 GByte in the M860. It is expected that higher-density tapes will become available during the life of the system, so upgrades by a factor of two or four are likely over a few years. The robot retrieves tapes with an average delay of 11 seconds for insertion into one of eight tape transports. The search time along the tape is typically longer than on the M860, so we will continue to use the M860 for some data because of its slightly better access time.

The comments of users concerning the different user interface at each of the three national computing centres have been taken on board by a working party trying to define a Common User Interface. The password systems and SETPWD/AUTHLINK commands are now harmonised for controlling access by users and others wishing to link to their files. XPRINT is the standard command to print files and GETFILE/PUTFILE for file transfer to other systems. COPYTAPE/DUMPTAPE provide uniform interfaces to simple magnetic tape management. The target to implement these is 31 December 1989.

Chris Osland then described the work done so far using the video system developed at RAL. Based on a small UNIX machine with frame store and video recorder interfaces, it is capable of displaying a frame or sequence of frames defined in the CGM (Computer Graphics Metafile) format. CGM is supported by all of the RAL/CCD computers and by most machines at universities. Chris showed a variety of quite complicated colour pictures that had been generated from CGM datasets from several high-level graphics packages and at several sites in the UK.

A variety of animated sequences were shown which have been produced for several trial users including simple computational fluid dynamic models and the NERC collaboration studying the Antarctic Ocean (FRAM). Other significant users have been the Open University for their teaching packages and the IBM scientific centre at Winchester.

The debate on the introduction of UNICOS was introduced by Roger Evans who began by presenting the expected counter-arguments: basically the Cray service has run perfectly well for three years so why change it, and UNIX is for computer scientists isn't it?

However, the stability of the COS service has to be weighed against the fact that Cray's first line of software development is UNICOS, and software typically becomes available on UNICOS significantly before it is available on COS. The autotasking version of the CFT77 compiler was available on UNICOS for nearly one year before the COS version. COS is essentially a 1960s (!) batch operating system with little terminal support and no facilities for direct connection to wide area networks. For those who are used to it COS JCL is straightforward, but it is unique, whereas UNICOS scripts are essentially the same as those users will use on other UNIX machines. COS is locked in to the 24-bit addressing of the Cray-1, and all Cray machines with more than 16 MWords of memory require UNICOS to access all available memory.

UNIX is increasingly the industry standard for scientific computing, was endorsed by the working party that examined the supercomputing environment for the 1990s and is almost certainly the operating system for the next generation of supercomputers. It is what is used on most workstations and most mini-supercomputers. UNIX has good standards for networking (TCP/IP) which in principle can be extended across JANET to give full-feature connections from a local workstation directly into the Cray.

The disadvantages of moving to UNICOS are the traumas associated with the change. Rewriting JCL for most people will be only a few hours work but some will need substantial help from staff at the Atlas Centre. Everyone will have to recompile code and libraries and some parts of FORTRAN programs have to be changed (some I/O statements and calls to COS from within FORTRAN). The COS and UNICOS filestores are incompatible and file conversion will either be done by RAL staff or users will be given simple utilities to read COS datasets from within UNICOS and then save them in UNICOS.

The decision to go to UNICOS formally belongs to the Atlas Centre Supercomputer Committee (ACSC) chaired by Professor Brian Hoskins from Reading. Professor Pert will represent the views from the Users Meeting and users can also contact committee members directly.

With approval from the ACSC the intention is to make the move to UNICOS during February-April 1990 with the aid of the loan of memory and DD49 disks by courtesy of Cray UK. Restrictions on total file space mean that users will be given individually a two week slot within this period to transfer data from COS to UNICOS and support outside this time slot will be limited.

Users who provide software packages for others will be offered the opportunity of early support on UNICOS to help them port their software before other users need it.

After lunch the meeting debated the UNICOS move and was broadly in favour of the change. Reservations were expressed by those who relied on software packages which were provided by other groups over which they had no control, but the Atlas Centre will exert as much influence as possible to ensure that current packages continue to be available. Others wished to be reassured that appropriate levels of support would be available to help them learn a new operating system fairly quickly. The general feeling was that UNICOS had been talked about for a long time and its time had finally come.

Professor Pert will convey these feelings to the ACSC with the recommendation that, subject to adequate support being given during the changeover period, the conversion to UNICOS should go ahead as soon as possible.

When we moved to CMS 5.5 in 1989 it became possible to address more than 16 Mbytes of virtual storage. In order to do this, your machine needs to be running in XA-mode. This will be the default for all virtual machines eventually, but for now we will stay in 370-mode (see the CMS 5.5 article in the January 1989 issue of FLAGSHIP).

Batch machines will now run in XA mode if a storage size of more than 16 Megabytes is requested. We can also authorise users to be able to increase their virtual machine size to more than 16 Mb and tell them how to set their machine to run in XA-mode. Large user machines should only be used for program development; long jobs should be run in batch.

There are two new options, AMODE and RMODE, for the LOAD, GENMOD and LKED commands which determine whether or not your program can use storage above the 16 Mb line.

AMODE determines the addressing mode. It may have values of 24 (24-bit addressing), 31 (31-bit addressing) or ANY (either 24- or 31-bit addressing). Using 24-bit addressing, it is only possible to address up to $2^{24}-1$ (about 16 million) bytes of storage. Using 31-bit addressing, however, it is logically possible to address up to $2^{31}-1$ (about 2,000 million) bytes of storage but, in practice, this is limited to 999 Mbytes by the operating system and the amount of real storage available to the processor.
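The limits above can be made concrete with a small sketch. It assumes that the "999 Mbytes" ceiling means 999 × 2^20 bytes; the names are illustrative, not part of CMS. AMODE ANY is not modelled separately because, at run time, such a program executes as either 24-bit or 31-bit.

```python
# Address limits under the two CMS addressing modes.
# Assumption: "Mbyte" in the 999 Mbyte figure means 2**20 bytes.
ADDRESS_LIMIT = {
    24: 2**24 - 1,   # 16,777,215: top of storage below the "16 Mb line"
    31: 2**31 - 1,   # 2,147,483,647: logical 31-bit limit
}
PRACTICAL_31BIT_LIMIT = 999 * 2**20   # ceiling imposed by the operating system

def addressable(address: int, amode: int) -> bool:
    """True if a byte address can be reached by a program in this AMODE."""
    if amode not in ADDRESS_LIMIT:
        raise ValueError("AMODE must be 24 or 31 (ANY runs as one or the other)")
    limit = ADDRESS_LIMIT[amode]
    if amode == 31:
        # In practice 31-bit programs are capped at 999 Mbytes.
        limit = min(limit, PRACTICAL_31BIT_LIMIT - 1)
    return 0 <= address <= limit

sixteen_mb = 2**24                    # first byte above the 16 Mb line
print(f"{ADDRESS_LIMIT[24]:,}")       # 16,777,215
print(addressable(sixteen_mb, 24))    # False
print(addressable(sixteen_mb, 31))    # True
```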

RMODE determines the residency mode. This refers to where a program resides when CMS loads it. It may have values of 24 or ANY. RMODE 24 indicates that CMS loads the program below the 16 Mb line whereas RMODE ANY indicates that CMS loads the program above the 16 Mb line if sufficient storage is available.

If you use ANY for both RMODE and AMODE, whether your program uses 24 or 31 bit addressing and resides above or below the 16 Mb line will depend on whether it is run in a 370 or an XA-mode virtual machine.
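The interaction between AMODE, RMODE and the virtual machine mode can be summarised as a small decision function. This is a sketch under stated assumptions, not a description of the CMS loader: it assumes that AMODE ANY runs with 31-bit addressing in an XA-mode machine, that AMODE 24 cannot be combined with RMODE ANY (as in MVS/XA), and that a 370-mode machine offers neither 31-bit addressing nor storage above 16 Mb. The function and type names are hypothetical.

```python
from typing import NamedTuple

class Placement(NamedTuple):
    addressing_bits: int      # 24 or 31
    above_16mb_line: bool     # True if the program is loaded above the line

def placement(amode: str, rmode: str, machine: str,
              room_above_line: bool = True) -> Placement:
    """Sketch of how AMODE/RMODE interact with the virtual machine mode.

    Assumptions (not from the article): AMODE ANY runs 31-bit in an XA-mode
    machine; AMODE 24 cannot be combined with RMODE ANY; a 370-mode machine
    offers neither 31-bit addressing nor storage above 16 Mb.
    """
    if amode not in ("24", "31", "ANY") or rmode not in ("24", "ANY"):
        raise ValueError("unknown AMODE or RMODE value")
    if amode == "24" and rmode == "ANY":
        raise ValueError("AMODE 24 with RMODE ANY is not a valid combination")
    if machine == "370":
        if amode == "31":
            raise ValueError("31-bit addressing needs an XA-mode virtual machine")
        return Placement(24, False)
    if machine != "XA":
        raise ValueError("machine must be '370' or 'XA'")
    bits = 24 if amode == "24" else 31
    # RMODE ANY goes above the line only if enough storage is free there.
    return Placement(bits, rmode == "ANY" and room_above_line)

print(placement("ANY", "ANY", "370"))  # Placement(addressing_bits=24, above_16mb_line=False)
print(placement("ANY", "ANY", "XA"))   # Placement(addressing_bits=31, above_16mb_line=True)
```

The same AMODE ANY / RMODE ANY module therefore behaves differently depending only on the machine it runs in, which is the point made in the paragraph above.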

The VS FORTRAN compilers at level Version 1 release 2 and later (we are now using Version 2 release 3) assign AMODE and RMODE values of ANY and, therefore, these programs may be run in either 370 or XA-mode.

Executable programs to run above the 16 Mb line must be created in LOAD mode (ie. using a TXTLIB of VSF2FORT and a LOADLIB of VSF2LOAD). This is because several of the FORTRAN I/O library routines must reside below the 16 Mb line. If you use LINK mode (ie. using a TXTLIB of VSF2LINK), these routines will become part of your module and hence the whole module will have to be loaded below 16 Mb.

If you use dynamic common blocks, they will be loaded above the 16 Mb line if you have specified 31-bit addressing mode. All program units referring to the dynamic commons must also be in 31-bit addressing mode.

If you have existing TEXT files or TXTLIBS, they will have been assigned values of AMODE ANY and RMODE ANY if you used VS FORTRAN Version 1 release 2 or later to compile them. They can, therefore, run in 24-bit or 31-bit addressing mode and may reside above or below the 16 Mb line.

Programs larger than 16 Mb stored as non-relocatable modules will only run in a virtual machine of the same size as the one in which they were generated. This is very inconvenient, so load modules should be created by using the RLDSAVE option for LOAD before creating the module with GENMOD. Load modules for such big programs occupy a lot of disk space, so you might consider the extra overhead of LOADing your program each time to save disk space. The use of Dynamic Common will also reduce the size of load modules when stored on disk.

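In summary, to exploit the 31-bit addressability of CMS 5.5 from a FORTRAN program you must:

1. run in an XA-mode virtual machine with more than 16 Mb of storage (batch machines do this automatically when more than 16 Megabytes is requested; interactive users need to be authorised);
2. compile with VS FORTRAN Version 1 release 2 or later (currently FORTVS2), so that your TEXT files are AMODE ANY and RMODE ANY;
3. create the executable program in LOAD mode, using TXTLIB VSF2FORT and LOADLIB VSF2LOAD rather than VSF2LINK.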

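A few things might prevent your program from running above 16 Mb:

1. the program was built in LINK mode (TXTLIB VSF2LINK), so the FORTRAN I/O library routines that must reside below 16 Mb have become part of the module;
2. some of the FORTRAN was compiled with a compiler earlier than VS FORTRAN Version 1 release 2, so it does not carry AMODE ANY and RMODE ANY;
3. the program includes an assembler routine that must run in 24-bit mode or reside below 16 Mb.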

If you attempt to load such a program above the 16 Mb line, the loader will complain and attempt to restart the loading below 16 Mb. With a big program this will fail.

The first cause is easily cured by changing to VSF2FORT TXTLIB and VSF2LOAD LOADLIB. The second needs re-compilation of the FORTRAN with the latest compiler FORTVS2. The third problem needs changes to be made to the assembler program. If you need help with this or the program is in someone else's library then contact PAO for help.
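The three causes and their cures described above can be written down as a simple checklist. This is a hypothetical helper for illustration only: `FortranBuild` and `cures` are not part of any CMS tool, they just record the answers to the questions the paragraph raises.

```python
from dataclasses import dataclass

@dataclass
class FortranBuild:
    """Hypothetical record of how a FORTRAN program was built (illustration only)."""
    txtlib: str                  # "VSF2FORT" for LOAD mode, "VSF2LINK" for LINK mode
    pre_v1r2_text: bool          # any TEXT compiled before VS FORTRAN V1R2?
    below_line_assembler: bool   # any assembler routine that must stay below 16 Mb?

def cures(build: FortranBuild) -> list[str]:
    """Return the remedies suggested in the article for each problem found."""
    remedies = []
    if build.txtlib.upper() == "VSF2LINK":
        remedies.append("use TXTLIB VSF2FORT and LOADLIB VSF2LOAD (LOAD mode)")
    if build.pre_v1r2_text:
        remedies.append("recompile with FORTVS2")
    if build.below_line_assembler:
        remedies.append("change the assembler routine (contact PAO)")
    return remedies

print(cures(FortranBuild("VSF2LINK", pre_v1r2_text=True, below_line_assembler=False)))
```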

Once you increase your virtual machine size above 4 Mb, you should note that some program products will not run in large virtual machines. At present, these include PROFS (4 Mb limit); GDDM, ICU, IVU, IMD (6 Mb limit); QMF, DW370, DCF, and APL.

If you think that running programs in XA-mode would be useful for your work, please contact Scientific User Support (US@UK.AC.RL.IB) - we are waiting to hear from you!

What have 3000 hours of Cray time, 24 hours of FTP time, 8 hours of Sun time, 2 hours on the RAL video system and 11.5 seconds in common? Answer: Each was a factor in making the first FRAM video.

This is the story of how a number of videos were made for the FRAM (Fine Resolution Antarctic Model) project. It is slightly unusual in that Graphics Group did much of the programming in the interests of time, but it illustrates one approach to the production of videos.

The FRAM model runs on the Cray X/MP-48 at the Atlas Centre and produces prodigious quantities of output data. It simulates the behaviour of the oceans in the region from about 24° South to the Antarctic. This is divided into 720 half-degree longitudinal slices (E-W) and 220 quarter-degree latitudinal slices (N-S). The model evaluates a number of physical parameters (functions), all but one of them at 32 different depths. The exception is the stream function, which is only defined at sea level. Each function (at each depth) is a 720 × 220 array of real values, each encoded into 2 bytes, and therefore occupies about 320 kilobytes. All the output data for the model requires about 160 such arrays, or about 50 megabytes.
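The storage figures follow directly from the grid dimensions. A minimal sketch, assuming decimal kilobytes and megabytes and taking the article's approximate count of 160 arrays per snapshot:

```python
LON_POINTS = 720        # half-degree slices E-W
LAT_POINTS = 220        # quarter-degree slices N-S
BYTES_PER_VALUE = 2     # each real value is encoded into 2 bytes
ARRAYS_PER_DUMP = 160   # approximate number of arrays in one model snapshot

array_bytes = LON_POINTS * LAT_POINTS * BYTES_PER_VALUE   # one function at one depth
dump_bytes = array_bytes * ARRAYS_PER_DUMP                # all output for one instant

print(LON_POINTS * 0.5, LAT_POINTS * 0.25)   # 360.0 55.0  (degrees covered)
print(f"{array_bytes:,} bytes per array, {dump_bytes / 1e6:.1f} MB per snapshot")
# 316,800 bytes per array, 50.7 MB per snapshot
```

The 55 degrees of latitude, starting from about 24° South, take the grid down to roughly 79° South.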

However, all this data just describes the state of the oceans at one instant: FRAM is modelling their behaviour over 20 or 25 years. While the model works internally with a small timestep (half an hour), output may be obtained every day. Most functions, particularly those at lower depths, change sufficiently slowly that every ten days is sufficient, but the stream function varies very rapidly.

The FRAM model runs fairly continuously on the Cray, simulating about 100 days each week. The FRAM scientists regard the first six (simulated) years as the "running up period", during which the model achieves a dynamic stability from an initial, and artificial, static state. The model completed these six years in December 1989. In September 1989, when the model had nearly completed four years, FRAM requested a video of the results so far.

The available data at this stage was the stream function, stored at ten-day intervals: 144 timesteps in all. Since video outside the USA and Japan runs at 25 frames per second, putting each timestep onto one video frame would produce a sequence of less than 6 seconds; it was decided to repeat each timestep for an extra frame, producing a more respectable 11.5-second sequence.
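The running times quoted above can be checked with a few lines of Python. The sketch assumes 25 frames per second (the PAL/SECAM rate) and the 144 ten-day timesteps described in the paragraph; the function name is illustrative.

```python
FPS = 25          # frames per second outside the USA and Japan
TIMESTEPS = 144   # stream-function snapshots stored at ten-day intervals

def sequence_seconds(timesteps: int, frames_per_step: int, fps: int = FPS) -> float:
    """Running time of a sequence that shows each timestep for a fixed number of frames."""
    return timesteps * frames_per_step / fps

print(sequence_seconds(TIMESTEPS, 1))   # 5.76  (one frame per timestep)
print(sequence_seconds(TIMESTEPS, 2))   # 11.52 (each timestep repeated once)
print(round(TIMESTEPS * 10 / 365, 2))   # 3.95  (simulated years covered)
```

The last line is consistent with the model having "nearly completed four years" when the video was requested.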

Because of the lack of time for development of the video, a very simple strategy was adopted. A "Clear Text" CGM (Computer Graphics Metafile) was produced by adding "fprintf" statements to an existing display program written by FRAM. This metafile was large (about 900 Kilobytes) but could be converted to "Character" encoding and thereby reduced to 120 Kilobytes using the RAL-CGM software. Both the display program and RAL-CGM could be run on a Sun workstation. The FRAM data files are produced on the Cray and disposed to the Vax frontend. The processing chain and time required per file were therefore:

This could have meant 144 steps of 3+120+60+12+70 seconds (about 4.5 minutes each), but the various steps were overlapped and the total time was around 9 hours rather than 12.
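A simple pipeline model shows why overlapping the steps helped. This is a sketch under stated assumptions: each of the five per-file times quoted above is treated as a separate stage that can run at the same time as the others (on different machines), and no other overheads are counted. Run strictly one after another, the stages add up to the serial figure; with perfect overlap, the slowest (120-second) stage would set the pace and give a lower bound. The 9 hours actually achieved lies between the two.

```python
STAGE_SECONDS = [3, 120, 60, 12, 70]   # per-file times quoted in the article
FILES = 144

per_file = sum(STAGE_SECONDS)          # 265 s, about 4.4 minutes
serial = FILES * per_file              # every stage run back to back for every file
# Ideal pipeline: once the first file is through, one file finishes
# every max(STAGE_SECONDS) seconds.
pipelined = per_file + (FILES - 1) * max(STAGE_SECONDS)

print(per_file, round(serial / 3600, 1), round(pipelined / 3600, 1))  # 265 10.6 4.8
```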

The result, a video sequence lasting less than 12 seconds, was shown at a FRAM meeting in Southampton on September 29. Immediately after the meeting, the BBC Open University Production Unit - who were about to film the FRAM data display for their Oceanography course - asked whether they could include this sequence in the film as well. Happily agreeing to this led to considering improvements that could be made and finally to a number of sequences being included, as described in FLAGSHIP 6.

Having made the first video and, as part of the preparation for the BBC filming at the Atlas Centre, having adapted the display program so that it runs on the Silicon Graphics IRIS 3130, a number of further projects have been initiated.

1. The initial viewing of the first video, at Southampton, provided visual confirmation that, for rapidly changing functions like the stream function, data should be stored for each day rather than every 10 days. Unless this was done, short-lived phenomena, such as temporary eddies, would be simulated by the model but never seen. A new video is being made a factor of five slower than the original one, each video frame now corresponding to a single day (the original had two video frames for each 10 days).

2. Many individual areas of interest, such as the Agulhas currents off the coast of South Africa, occupy only a small area of the screen when the full 720 x 220 display is used. A "zoom" facility was developed in time for the BBC filming and is being generalized.

3. For teaching purposes - and maybe for some scientific purposes as well - it is convenient to have a polar "bird's eye" view instead of the rectangular (pseudo-Mercator) projection used to date by FRAM. This, and a smooth animation between polar and rectangular projections, was developed for the BBC film. The second picture shows an accelerated version of this.
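The factor of five in item 1 above follows from comparing frames per simulated day. A minimal sketch using exact fractions, assuming the 25 frames per second European video rate used earlier:

```python
from fractions import Fraction

FPS = 25
old_rate = Fraction(2, 10)   # first video: two frames for every ten simulated days
new_rate = Fraction(1, 1)    # new video: one frame per simulated day

print(new_rate / old_rate)              # 5    (times slower)
print(float(365 * new_rate / FPS))      # 14.6 (seconds of video per simulated year)
```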

We are now busy tuning the different types of display and rerunning from day 0 to the latest available (well into the ninth year) using these additional forms of presentation. Members of the FRAM project are requesting zoomed displays of different areas on video as an investigative tool. All data produced by the FRAM model is now being stored on Exabyte tapes by the Vax frontend and so can be analysed retrospectively if required.

I would like to thank David Stevens of the University of East Anglia, Peter Killworth and Jeff Blundell of the University of Oxford, David Webb of IOS Wormley and Bob Day of RAL Informatics Department for their assistance in various aspects of this exercise.

The 1000th SERC PROFS User is Bob Hilborne of Swindon Office; he is registered to use PROFS and is eagerly awaiting his connection to the system by EDPU. By the time of the presentation the number of registered users had risen to 1031, demonstrating the fastest rate of growth since the start of the project.

To mark this milestone in the development of the PROFS service throughout SERC, Bob is seen here receiving a bottle of champagne from Keith Jeffery, who leads Information Management Group at the Atlas Centre. Some members of the PROFS team joined the celebration: Kay Lloyd and Jill Winchcombe from Swindon Office and Jed Brown (who leads the Office Systems Section within Information Management Group) and Rob Williams (who suggested the celebration and works in Jed's section).