In January we will issue the first edition of a new type of newsletter covering the activities, facilities and services in the Computing and Information Systems Department at CCLRC. The newsletter, which will be known as ATLAS, will be aimed at a wide external readership and will include news items and articles by users of our facilities and by our own staff. In general the material will be presented at a non-technical level. One of the key features of the newsletter will be references to the rapidly expanding amounts of detailed technical and background information that we provide on the World Wide Web, and as a means of drawing attention to new material we will issue a regular electronic ATLAS News Supplement at the URL:

http://www.cis.rl.ac.uk/publications/ATLAS.latest/index.html

News items and other material which are relevant primarily to staff of CCLRC will be covered through separate, largely electronic, channels.

A facsimile of the cover of ATLAS appears on the front of this final issue of FLAGSHIP.

The design has evolved from the Atlas Centre logo and the colourways of CCLRC. The addition of the longitude and latitude lines to the globe visually explains the newsletter's name and also represents the interchange of information and technology around the world.

Accordingly, as this is the final edition of Flagship, I would like to take this opportunity to thank Ros Hallowell for the excellent work she has done as Editor ever since the first edition appeared in January 1989 and particularly for the way in which she has managed with great skill and patience to extract articles from busy people and to meet the demanding deadlines for publication.

The editor of ATLAS will be Martin Prime, who will introduce himself in the first edition.

This final edition of FLAGSHIP contains a positive response questionnaire and another one will be contained in the first issue of ATLAS. Can I ask you to spare a small amount of time to complete one of these please; a comprehensive set of returns will help us greatly in preparing future issues of ATLAS.

Unlike the corresponding meeting of over a year ago there was no conflict with strikes on BR or the London Underground, and a good number of users turned up at the Royal Institution to hear about the state of play with the Atlas supercomputing service.

After a brief welcome from the chairman, Prof Catlow of the Royal Institution, Roger Evans presented a Progress Report, apologising for a mass of statistics and promising to concentrate on the issues affecting users. The raw statistics for the availability of the Cray Y-MP8 service are still impressive with an availability within scheduled time of 99.77% and a mean time to failure of several weeks.

The service perceived by the user may not always reflect these numbers since for some time the Cray Service has suffered from trying to squeeze a quart into a pint pot. Surprisingly it has been possible to squeeze a little extra work through the machine and the quarterly figures for the last 18 months show that with the continuing build up of work, throughput has increased by a few percent. The penalty for this is of course the increased time spent queuing for machine resources, and the queues for large memory and long running jobs have now reached unacceptable levels of three to five days.

Other difficulties with the service have in the past concentrated on the continuing growth of user file store without any increase in the real disk space. This was highlighted at the last meeting with severe pressure on the Cray Data Migration software and misuse of the /tmp file system leading to lost batch jobs when /tmp fills up suddenly. The pressure on dm has been addressed through the provision of 36 GByte of SCSI disk to provide a migration route for disk files of up to 1 MByte. Files within this limit should be recalled with a minimum of delay since there is no waiting for tape mounts.

Another 45 GByte of SCSI disk has been allocated to relieve the pressure on /tmp through an NFS mounted file system known as nfstmp. It is difficult to police allocations on /nfstmp and currently the strategy is to allocate space on /nfstmp in large chunks to what are perceived to be the most needy users. Any users feeling that their needs have not been recognised are invited to contact the Atlas Centre! There are still some teething problems with the NFS link and the /nfstmp files are not suitable for heavy I/O usage since there is a definite cpu overhead to their use.

On the issue of front end services, it is intended to close the VMS front end before the next User Meeting. The elderly MicroVAX is expensive to maintain, use is small and VMS is not seen as a strategic operating system for the supercomputing service. The reaction of the user community is sought to moving the Unix front end function from the RS6000 (unixfe) to an OSF/1 (Digital Unix) machine. The service on the RS6000 has suffered in the past from file store limitations within AIX and although these are removed in the latest AIX releases it is felt that a better service and improved compatibility with other scientific services at Atlas could be provided under OSF/1.

Users are reminded of the closure of the VM/CMS service at the end of September and should now have alternative homes for their old CMS files and any remaining functions such as electronic mail still on the 3090.

After the closure of VM/CMS there will be some changes in the Cray accounting but these should affect only those groups currently using the accounting system to exercise close control of the weekly usage by sub-projects within their grant. Anyone concerned about possible changes should contact Margaret Curtis for further information and watch out for information in News files.

There will be another trial of running the Atlas computers without operator cover outside the normal working day. This commences on 2 October and all the reliability issues identified in the previous trial should be fixed before this trial begins.

John Gordon gave a presentation of the important new EPSRC Superscalar Computing Service (for more information see Flagship 38). Although this service is not available to all Cray users it is sufficiently important (the new machine is as powerful as the Y-MP8 at a much more affordable price) that it merits a full description at the Atlas Cray Users Meeting.

The new machine was procured after a full EU tender action specifying a multi-processor shared memory machine with single node performance of at least 250 Mflop/s. The competition was won by a Digital 8460 with six Alpha 21164 (also known as EV5) processors giving a peak performance of 3.6 Gflop/s, 2 GByte of main memory and 150 GByte of disk space. The EV5 processor can carry out two integer and two floating point operations each cycle of its 300 MHz clock and with a three level cache structure this gives quite a distinct application characteristic compared with the vector processing, flat memory architecture of the Cray Y-MP.
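The quoted peak figure can be checked from the numbers in the paragraph above. The short Python sketch below is an illustration only: it assumes that peak performance is simply processors × floating point operations per cycle × clock rate, and the function name is made up for the example.

```python
def peak_flops(processors, flops_per_cycle, clock_hz):
    """Theoretical peak in flop/s, assuming every floating point
    unit completes one operation on every clock cycle."""
    return processors * flops_per_cycle * clock_hz


# Figures quoted in the text: 300 MHz clock, two floating point
# operations per cycle, six EV5 processors.
CLOCK_HZ = 300_000_000

single_processor = peak_flops(1, 2, CLOCK_HZ)
whole_machine = peak_flops(6, 2, CLOCK_HZ)

print(single_processor / 1e6, "Mflop/s per processor")
print(whole_machine / 1e9, "Gflop/s for six processors")
```

On these assumptions each processor peaks at 600 Mflop/s and the six together at 3.6 Gflop/s, which agrees with the figure quoted. Peak figures of this kind say nothing about sustained performance on real codes.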

On code that vectorises well the 8460/EV5 can match the Cray performance on small problems that fit in the first level cache. On larger problems the performance falls well below the Y-MP. With code that does not vectorise, the EV5 can outperform the Y-MP by a factor of 8 or more on small problems before again tailing off when all the caches become too small for the problem in hand. On real applications there is a considerable spread, from six times faster than the Y-MP to five times slower. For most Cray users achieving a performance of 50-100 Mflop/s on the Y-MP the EV5 chip should give around 80% of the Y-MP single processor performance; those Cray users running at slower Mflop rates should find the EV5 gives better performance than the Y-MP, up to 6 times better on one particularly scalar code.

The scope of applications software on the new service is currently being considered and user input is very much required. A full range of compilers (F77, F90, C, C++, Pascal) will be available under the DEC Campus arrangement and there is the dxml optimised scientific library. AMBER will be on the machine, having been used during the field test and AVS and the NAG numerical library will be mounted. Gaussian 94, and Abaqus (including explicit and post) are available at modest additional cost and will be included if there is any interest. Any engineers interested in Dyna-3d are particularly asked to contact us.

Use of the Superscalar machine is restricted to EPSRC funded users for whom there are three routes:

Existing Cray users wishing to transfer grants to the new machine should discuss this with Alison Wall who can advise on how to approach the Programme Managers.

Chris Plant introduced some miscellaneous items including new application software: Abaqus is now at version 5.4 including standard and explicit, Gaussian 94 had been built the day before the meeting, GAMESS 5.1 is available and Cray's new documentation guide Craydoc is accessible through the command cdoc.

Some of the Cray documentation is now available on the World Wide Web (WWW) and more will follow soon. If user reaction is favourable then the WWW may become the primary reference for new documentation. Look at http://www.cis.rl.ac.uk/services/cray/ and please send us your comments.

A Silicon Graphics Indy (address unichem.rl.ac.uk) has been purchased to cope with the demand for workstation front end packages: initially Unichem users without local SGI machines had used Chris' own workstation but with the growth of Unichem use and the introduction of pre and post processors for Abaqus, Flow3D, Phoenics, Star-CD and Amber/leap there was clearly a need for a separate machine. The Indy currently runs NQS to submit batch work to the Y-MP but may move to Cray's own NQE software if the licence terms are favourable.

Chris also reminded everyone of the changes in tape management caused by the withdrawal of the IBM service: tapes need to have a real owner whereas in the past they could be owned by system; tapes will be managed through the 'datastore' command, not through TMS. The IBM tape robot should soon be upgraded to IBM's NTP (New Tape Product) which has 10 GByte tape volumes, 9 MByte/s transfer speed and will give a capacity of 30 TByte within the robot. This should eliminate delays caused by old data currently migrating to DAT tape and having to be manually mounted.
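As a rough check on the tape robot figures above, the Python sketch below works out how many volumes the quoted capacity implies and how long one full volume would take to read at the quoted transfer speed. It assumes decimal units (1 GByte = 10^9 bytes) and a sustained transfer at the full rated speed, neither of which is stated in the text.

```python
# Decimal units are an assumption; the text does not say which is meant.
MBYTE = 10**6
GBYTE = 10**9
TBYTE = 10**12

volume_bytes = 10 * GBYTE      # capacity of one NTP tape volume
robot_bytes = 30 * TBYTE       # total capacity within the robot
transfer_rate = 9 * MBYTE      # bytes per second

# Number of volumes needed to reach the quoted robot capacity.
volumes_needed = robot_bytes // volume_bytes

# Time to stream one completely full volume at the rated speed.
minutes_per_volume = volume_bytes / transfer_rate / 60

print(volumes_needed, "volumes")
print(round(minutes_per_volume, 1), "minutes to read a full volume")
```

On these assumptions the robot would hold about 3000 volumes, and reading a whole volume would take a little under 20 minutes, excluding mount and positioning time.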

From the audience, Lois Steenman-Clark of UGAMP stressed the need to provide tools such as the ds command which presented a Unix style interface rather than the old VM style interface of the datastore command.

The scientific presentation of the day was from Professor Jonathan Tennyson of UCL, with the intriguing title Getting into Hot Water - big problems with small molecules.

Professor Tennyson introduced his talk, describing calculations of the opacity of water molecules at temperatures up to 5000K, with a newspaper cutting carrying the headline Damp Patches on the Sun. Compared with the typical solar surface temperature of 6000K, sunspots have local temperatures down to 3000K and some infra-red absorption lines in sunspots have been identified with water molecules on the basis of comparison with laboratory spectra. Lines from diatomic molecules such as OH are well identified whereas for polyatomic molecules the theoretical data is much more sparse. Polyatomic molecules are important contributors to the opacity of cool stars while at lower temperatures the opacity of water is very important in the modelling of the earth's atmosphere.

Modelling of water begins with a description of the potential surfaces in which the nuclei move. Ab initio calculations are still not accurate enough for this and it is necessary to augment them with experimental data. There follow several computational steps to define energy eigenvalues for the ro-vibrational motion, dipole matrix elements and finally to sort up to 2 × 10^7 transitions in order to calculate spectra.

Calculation of the energy values and matrix elements for the largest rotational quantum numbers, J=30, requires up to 80 + 160 hours of Y-MP time, and the memory usage is large enough to cause real problems with elapsed time. The major issue for the work has however been the need for up to 18 GByte of intermediate disk space and it is only since the introduction of the nfstmp that this work can be seriously attempted.

The quality of data calculated so far is vastly better than existing data: working up from lower values of J, 6 × 10^6 transitions have been identified compared with a total of 3 × 10^4 from previous laboratory work. The aim is to extend this work to much higher rotational quantum numbers and to obtain around 2 × 10^7 transitions with spectroscopic accuracy, meaning that the lines can be clearly matched with the observed spectra.

Rob Whetnall of the High Performance Computing Group in EPSRC (the managing agent for the national HPC programme) gave a short talk on the future policy for HPC in the UK. In 1994 the policy for HPC seemed clear with the announcement of closure dates for the services at ULCC and MCC, a new Cray T3D installed at Edinburgh, and an expected capital provision of £10M for a major new machine in Summer 1996.

The situation at present is much less clear: the Director General of the Research Councils (DGRC) has withheld £5M of the capital provision which makes procurement of a major machine impossible before Spring/Summer 1997. The responsibility for HPC has been devolved to the Research Councils but the transfer of funding is still under review (on the World Wide Web see http://www.epsrc.ac.uk/strategy/strategy_development.html). The key points at present are that the need for access to the highest performance machines is recognised but that the previously top sliced funds should be devolved to individual Research Councils who will still work through EPSRC as a managing agent. The ABHPC is likely to have a stronger Steering Committee role and the strategy will be tied to the requirements of individual Research Councils.

The level of future capital provision depends on decisions from the DGRC and from individual Research Councils; it is likely that these will not be known until the PES settlement is made public in January/February 1996 and no new machine is likely until at least 12 months after that.

In the meantime it is crucial that existing services remain effective. ABHPC has approved upgrades to the T3D at Edinburgh and a sum of money could be made available to relieve the pressure on the Y-MP8 at RAL. Discussion of this would follow Dr Davies' talk.

In response Prof Catlow noted the increased importance of ensuring that Research Councils and individual Project Managers were made aware of the importance of HPC provision in their subject area.

Brian Davies introduced the subject of possible upgrades at the Atlas Centre by noting that upgrades to the Y-MP itself were very limited and very expensive and the serious options to relieve pressure on the Y-MP were to procure a new machine, the difficult decision being whether such machine should be binary compatible with the Y-MP or not.

The Cray J90 range falls into the price range that is under consideration and a minimum useful machine would probably be 16 processors and 2 GByte of memory. This would have a peak performance of 3.2 Gflop/s and would approximately double the throughput at the Atlas Centre. The advantages of a binary compatible machine are obvious: there is almost no disruption to the user community and the scientific productivity of the machine begins on the day it is installed.

Non-compatible superscalar or vector parallel machines would require more effort on the part of users to transfer their codes. The boost to users' productivity might therefore be less instant though in most cases it could still be quick. Depending on the choice of machine, the support load could be somewhat greater. Also, it is possible that alternative technologies could offer more performance for a given sum of money, though this depends on the outcome of negotiations with suppliers and these had not yet begun.

The decision would ideally be influenced by the longer term strategy as to the future role of vector-parallel and superscalar machines but this might not be known at the time decisions on the RAL procurement were needed.

After lunch Professor Catlow introduced a round table discussion. The turnaround of the largest queue (e7) was a concern to everyone and RAL staff will investigate what can be done to improve this. This will however make turnaround somewhat worse for the other queues but no-one would volunteer which queues should suffer the most!

There were detailed concerns about file store management and particularly about the worst case behaviour of data migration. RAL will look at the possibility of using SCSI disks to augment the home file system but they were pessimistic as to whether this can be achieved at an acceptable cpu overhead.

Users were willing to see the demise of the VMS front end which had been talked about for some time, but wanted assurances that a new (Unix) front end would be available first.

In looking at the new EPSRC superscalar service several users were excited about the possibilities but wanted more than 150 GByte total file store.

Anyone wanting more information about the discussion on new machines or wishing to inject their own views on the relative importance of cost and Cray compatibility is invited to contact Brian Davies or Roger Evans since it is not felt appropriate to make this information completely public until further discussions have taken place with EPSRC and with the suppliers.

We expect a user service to be in place by the end of October on the Digital 8400 superscalar computer (Columbus) which has been purchased to enhance the scalar service provided at CCLRC for EPSRC users.

The Digital 8400 has not been installed as quickly as we envisaged when we reported on its purchase in FLAGSHIP 38, but the delays have been due to protracted contractual negotiations and not to any problem with hardware availability. We now expect the hardware to have been delivered by the time you read this.

Among the software we expect to have installed are:

Other software will be made available on user request.

In addition to the arrangements for new EPSRC research grants to use this machine which were described in FLAGSHIP 38, EPSRC are also considering allowing limited use to researchers who already have EPSRC peer-reviewed awards (not necessarily for computing) and to EPSRC-funded research students through their supervisors. To obtain access through this channel, contact Dr Alison Wall.

Anyone who is eligible for EPSRC research grant support can apply for access to the new machine. Pump-priming time of 20 CPU hours over six months can be obtained by filling in the form AL54, obtainable as a Level 2 PostScript file by Anonymous FTP from unixfe.rl.ac.uk in pub/doc/supercomputing/al54.ps or from Gill Davies at the Atlas Centre.

The completed form should be returned to Margaret Curtis at the Atlas Centre. A full grant allocation on the 8400 should be requested using the EPSRC form EPS(RP) together with the AL54, sent to the relevant EPSRC programme manager.

Any meeting with such a provocative title was sure to attract a good audience and the meeting room at University College London (UCL) was packed with a mixture of computer scientists and users of High Performance Computing (HPC) for the meeting organised by Prof Peter Welch of the University of Kent under the auspices of SELHPC (the London and South East consortium for education and training in HPC).

In his opening remarks Prof Welch addressed what he saw as the key points for the day: given the apparently poor fraction of peak performance achieved on the latest parallel computers, is there a crisis? Does efficiency matter given the fact that machines are steadily faster and cheaper? Are people disappointed? Is the problem in hardware or software or in our models of parallelism? Can we do better a) now and b) on the next generation of machines?

The first talk by Prof David May, the architect of the Transputer, and recently moved to the University of Bristol, was sure to introduce many controversial points and we were not disappointed. David described the mood of optimism from around 1990 when massively parallel computers were expected to find a breadth of application, be cheap, and produce new results. Many computer science issues were at that time not resolved and by 1995 we observe that much of the computing need is satisfied by single processor machines and small symmetrical multi-processor (SMP) computers. Provocatively David said that he expected this to continue for some time yet.

In an invited talk presented in 1989, he had commented on the need for standard languages and libraries to popularise parallel computing; the same need existed today! The industry had pursued the simple metrics of Mflop/s and Mbyte/s communications bandwidth while ignoring issues of latency and context switching time which had been balanced in the original Transputer design. Almost all the current parallel computers are based on commodity microprocessors without hardware assistance for communications or context switching. With the resulting imbalance it is not possible to context switch during communications delays and efficiency is severely compromised.

It is not obvious that the volume microprocessor market with its emphasis on cheap memory parts will allow these deficiencies to be rectified but the major new growth area of multi-media servers requires parallel systems with well bounded communications delays and may well be the opportunity for balanced systems to be designed again. The large scientific massively parallel computer has limited market potential and the desktop machine is where volume and profits can be achieved. Today this is where the small SMP machines have been very successful.

In relating the experiences of end users, Chris Jones of BAE Warton described a finite difference electromagnetic simulation used to model lightning strike on military aeroplanes. The code achieved high efficiencies on a Cray Y-MP and on a Parsytec (transputer based) parallel computer but gave only 12% efficiency on a Cray T3D.

Ian Turton from Leeds is a computational geographer trying to popularise HPC in the geography community. He had been seduced by the promise of a 38 Gflop Cray T3D and his disappointment in achieving only 7-8 Gflop/s was exceeded by his surprise at being told that this was the best anyone had achieved on that machine. Ian felt that the uncertainty over the future of HPC and more particularly concerns regarding the Cray specific optimisations needed to achieve good performance meant that he could not honestly recommend it to other geographers.
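The scale of the shortfall described above can be expressed as a fraction of peak. The Python sketch below uses only the figures quoted in the paragraph (38 Gflop/s peak, 7-8 Gflop/s achieved); the function name is invented for the illustration.

```python
def fraction_of_peak(achieved_gflops, peak_gflops):
    """Achieved performance as a fraction of the theoretical peak."""
    return achieved_gflops / peak_gflops


PEAK = 38.0  # Gflop/s, the peak figure quoted for the Cray T3D

low = fraction_of_peak(7.0, PEAK)
high = fraction_of_peak(8.0, PEAK)

print(f"{low:.0%} to {high:.0%} of peak")
```

This gives roughly a fifth of peak.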

Chris Booth from DRA Malvern described detailed event driven simulations of Army logistic support. Originally driven by the problem of moving 9000 vehicles for each Brigade to Germany in the event of war, this scale of simulation requires the power of parallel computing. Experience with parallelising C++ code had not been encouraging, giving very poor load balance. Lower latency and faster context switching times would help as would a global address space.

Other speakers addressed the issues of parallelisation tools and of language limitations. None of the current languages appear ideal for expressing parallelism in a way that can lead to efficient code. Has the time come to abandon the old baggage and start from scratch again? In a second presentation Peter Welch described the different approaches to parallelism of the computer scientist and the application user. The former thinks parallelism is fun and the latter just wants performance and should have the parallelism hidden from him. Today's parallel computers were described as immature with too much machine specific knowledge needed for good performance. The lessons of the Transputer had been forgotten and latency and context switch times had not moved apace with floating point performance and communications bandwidth. A machine designed from scratch with the correct balance would be easier to program and be closer to being a general purpose machine.

There followed some parallel subgroups and a closing plenary discussion. The general view was that there was disappointment with MPP performance, machines having been oversold not only by the vendor but also by local enthusiasts. Despite this, there exists a range of problems that can only be solved on the large MPP machines. If there was a crisis it was that many MPP vendors had gone out of business, more might find the market unprofitable and the remaining suppliers might exploit a monopoly situation where prices would rise again to the detriment of the end users.

It was alarming to see the range of information and dis-information that had given rise to the idea of a crisis. Clearly many people had expected greater things of machines such as the Cray T3D but its limitations are clearly understood by those around the machine and are in the memory characteristics of each node rather than any part of the parallel architecture. Inter processor bandwidth and latency of the T3D are perfectly adequate for most applications although the need to use Cray specific constructs to achieve good performance is less than ideal.

Many people are also disappointed that parallel computing has not gained greater acceptance but this ignores the phenomenal growth in the power of desktop machines to the point where a few processors in SMP configuration provide as much power as most users need. For the few who are the most demanding, larger scale parallelism must be addressed and the rewards in the range of science that can be tackled are great enough that some machine specific optimisation is acceptable.

The reasons for disappointment with the latest generation of MPPs are that despite a range of architectures from different suppliers none of them are as well balanced as the old transputer or the vector-parallel machines such as the Cray Y-MP that they have superseded. Users who were used to achieving 50% of peak performance suddenly found that it is impossible to do better than 20-25% of peak, and the order of magnitude performance improvement suddenly becomes a factor of three or so.

Within the UK, the crisis in HPC must surely be that the level of funding forces us to choose between a single top-end machine that is only applicable to a few subject areas and several midrange machines that cause us as a nation to lose touch with the highest performance hardware.

It would be nice to think that some enterprising manufacturer would come up with a 300 Mflop/s chip with balanced communications and context switch times so that the next generation of parallel language would find an efficient home on which to run and bring us a single architecture and programming model that scaled from laptop computers to teraflops, but the scale of investment needed is enormous. (The closing comments at the meeting referred to an announcement that Sandia Laboratory in the USA is to host an Intel computer of 1.8 Tflop/s comprising 9000 Intel P6 processors - perhaps this dream is closer than we think!)

Experience with HPC has been mixed. Some get good performance, but many feel that their expectations have yet to be realised. Among specialists there is disappointment that parallel machines with the right characteristics have yet to be built, eg fast context switch and low latency. Of these two, fast context switch is the more essential: given that, there are techniques by which the effects of latency can be hidden.
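One way to see why context switch time matters so much is a simple textbook-style model of multithreading, sketched below in Python. This model is not taken from the workshop; it is an illustrative assumption in which each processor runs several processes in turn, each process computes for a fixed time and then waits for a remote communication, and every switch between processes has a fixed cost. All names and numbers are hypothetical.

```python
def utilisation(processes, run_time, latency, switch_time):
    """Fraction of time a processor spends on useful computation.

    Toy model (an assumption, not a measurement): each process computes
    for run_time, then waits latency for a remote communication, and the
    processor pays switch_time to move on to the next ready process.
    """
    # With few processes the communication latency is only partly
    # covered, so useful work grows linearly with the process count.
    linear = processes * run_time / (run_time + switch_time + latency)
    # With enough processes the latency is fully hidden and the only
    # remaining loss is the cost of the context switches themselves.
    saturated = run_time / (run_time + switch_time)
    return min(linear, saturated)


RUN, LATENCY = 10, 40  # arbitrary time units chosen for illustration

for switch in (20, 1):  # slow and fast context switch
    for n in (1, 5):
        u = utilisation(n, RUN, LATENCY, switch)
        print(f"switch={switch:2d} processes={n} utilisation={u:.2f}")
```

In this model, adding processes hides the latency only up to the ceiling set by the switch cost, so a slow context switch limits utilisation however many processes are available, while a fast one allows the same latency to be almost completely hidden.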

As pointed out by David May, it is not enough to bolt fast communications (ie high bandwidth) onto fast individual nodes. Parallel machine designers, and parallel programmers, need constantly to remember that the aim is to keep ALL the processors busy doing useful compute (rather than optimising single node performance per se).

Challenges remain at several levels:

At root, the problem is one of expression and mapping, from applications to (parallel) programs to target architecture. The first (applications) are given, the second and third can be designed to suit - and should be, if the compiler and system software are to have a chance! Finally, and more provocatively, it is no use any more to think of matching the application (or algorithm) to the architecture. Too much variety is doomed in a rapidly changing industry.

Note: A full report of the Workshop has been prepared by the Chairman, Prof Peter Welch, and is available at web address http://www.hensa.ac.uk/parallel/groups/selhpc/crisis/.

During the summer, the Director General of Research Councils (DGRC) has been considering the funding arrangements for high performance computing. A report reviewing arrangements for HPC was prepared by the Advisory Body for High Performance Computing (ABHPC), supported by the Facilities and High Performance Computing Group of EPSRC, and submitted to the DGRC at the end of June. This relied heavily on information gathered from researchers, programme managers and service providers during the strategy study earlier this year. The review report made a very strong case for the continuation of a strategic national high performance computing programme, and was supplemented by information on scientific achievements from current systems in the form of A Review of Supercomputing 1991-1994 (covering the Convex, Cray Y-MP8 and Fujitsu vector services, and the Intel iPSC 860 and CM200 parallel systems), and a draft report on the first year of science on the Cray T3D.

The full report remains a confidential submission to the DGRC, but the key findings and recommendations are available on the EPSRC WWW service at URL: http://www.epsrc.ac.uk/hpc/ under strategy.

The DGRC and Research Councils' Chief Executive Officers have now agreed the following:

The Facilities and HPC Group of EPSRC will be working with the other Research Councils to update the material gathered as part of the strategy development earlier in the year, and to present a strong case for major new investment to supplement and replace existing HPC facilities. There is now no possibility of a procurement being started before early 1996, which effectively means that there will be no major new services before early 1997.

Information on further developments will be published on the WWW at URL http://www.epsrc.ac.uk/hpc/ and via the newsgroup uk.org.epsrc.hpc.news. Comments and discussion are welcome on the newsgroup uk.org.epsrc.hpc.discussion.

Several factors have influenced our decision to modify the accounting and control systems for the Central Computers, notably the introduction of more Unix-based platforms (such as the OSF Farm and the Superscalar machine) and the need to move all the databases and associated control and reporting software off the IBM 3090.

The context within which we have designed our new databases includes the following points:

This context has resulted in us having to limit the functionality of the new control software in the following ways.

A grant will, by default, be given ONE sub-project which will, on most platforms, map to a Unix Group. Control will be on the TOTAL allocation for the grant. (Those familiar with Unix will appreciate that enabling and disabling Unix Groups is a non-trivial business as it has file-protection implications.)

Large projects with a real need to split allocations up can arrange to have more than one sub-project per grant but setting up and administering these will have to be handled in conjunction with the Resource Management staff. Current sub-projects will be taken over.

Control of CRAY disk space will not be implemented as part of the replacement ACCT functions. Equivalent functionality will be provided on the Cray itself.

A World Wide Web (WWW) interface will be available to provide the equivalent of the ACCT commands. We plan to make similar commands available also on the Cray. A replacement version of OATS for users to obtain reports of the level of use of the services will also be available via the WWW.

We are taking a first step towards getting the technical information that was customarily presented in Flagship available on the World Wide Web (WWW). We have placed the Atlas Data Store and Central Computer Statistics which you have been used to finding at the back of Flagship on the WWW. The information will be updated bi-monthly.

The statistics can be found at URL http://www.rl.ac.uk/cisd/services/stats/flagship.html

Milton Park in Oxfordshire is probably the first business park in Britain to give its tenants free access to the Internet.

A unique partnership between Rutherford Appleton Laboratory's (RAL) Computing and Information Systems Department (CISD), Lansdown Estates Group (who own and manage the Park) and Aligrafix Ltd (a Park tenant) has resulted in Milton Park having its own World Wide Web (WWW) site. Lansdown Estates Group has arranged for free access to the Internet from a public computer terminal for all the Park tenants. They have provided a PC in their Innovation Centre at 68 Milton Park which is connected via a modem and dial-up telephone line to RAL. RAL provides the on-going connection to the Internet.

Aligrafix, who are specialist designers, particularly of on-line information, have produced a set of WWW pages for the Park which are stored on their own WWW server but can be viewed by anyone with Internet access (the address is http://www.miltonpark.co.uk/).

The pages include general information about the Park, a directory of all the companies, and a home page for each company. The latter contains information about the company such as details of the company's services, contact names and addresses.

Also on the WWW, as part of Milton Park's site, are a list of job vacancies among the 130 companies on the Park (who between them employ over 3500 people), information on how to get to the Park, and details of the facilities available there.

This facility was launched at a press conference on 14 September at the Innovation Centre by local MP, Mr Robert Jackson, who pushed the button causing Milton Park's home page to be displayed publicly for the first time. Mr Gavin Davidson, Lansdown's Managing Director, told the audience that he believed access to the Internet was important for the input and output it offered the Park tenants. The input was the information gathering that was now possible from sources world wide and the output was the ability of companies to advertise themselves and their services. "We believe the Internet is developing into a very useful business tool with great potential. We want to make full use of it and we feel it is important that our tenant companies are given an opportunity to get involved," he said.

Dr Paul Williams, Chief Executive Officer of CCLRC, referred to the Government's White Paper and the new mission of RAL to collaborate with industry. He saw the collaboration with Milton Park companies as a very good example of this. He also took the opportunity to mention the CCLRC Associates Group scheme which enables companies to learn about the achievements of the Rutherford Appleton and Daresbury Laboratories and to benefit from their expertise.

Mr Andy McLeod of Aligrafix gave an on-line demonstration of the Milton Park Web pages, including a bulletin board, and other facilities available via WWW. He finished with a search for Milton Park and discovered a luxurious hotel of the same name in Australia, which caused some amusement.

After a buffet lunch provided by Lansdown there was an opportunity for attendees to try their hand at Surfing the Web.

Lansdown Estates Group, who are financing the new facility, have ensured that Milton Park is one of the first business parks to put details of their commercial properties to let on the Internet. Potential business tenants, who could be accessing this information from anywhere in the world, can view the list of units to let and e-mail Lansdown's Lettings Manager, Hugh Richards, to request further information. The facility in the Innovation Centre will be available to all the tenants throughout the working day and also provides email as well as WWW access.

The Rutherford Appleton Laboratory (RAL) has set up an Internet Club to provide the opportunity for local science-based and high-tech businesses to explore the potential benefits of the Internet and the World Wide Web (WWW). In the first instance this will be restricted to one or two small, diverse groups of 10-12 companies who will be provided with the necessary support to set up Internet links. Because of the interest shown by companies based at Milton Park, Oxfordshire's largest Business Park, which is just 3 miles from RAL, the first group to be set up contains mostly, but not exclusively, companies based at Milton. In future there may be one or more other Club groupings depending on the level of interest from companies located elsewhere. Initially, at least, each group will operate independently, although it may well be appropriate to have an occasional open meeting involving all the groups.

Each group will meet at regular intervals with experts from RAL's Computing and Information Systems Department (CISD) to discuss experiences, new ideas and any problems encountered. It is hoped that the diversity of each group will ensure that a wide range of applications are explored and their commercial value assessed. In addition to businesses we would also welcome participation from public sector organisations.

At Milton Park, Lansdown Estates, as well as joining the Club themselves, have agreed to fund a public access facility in the Innovation Centre, providing a dial-up connection to RAL, to give companies on Milton Park the opportunity to try out the Internet/WWW before reaching a decision to have their own connection (see separate article in this issue). In advising companies on the appropriate hardware and software we will make every effort to minimise costs while maintaining a high-quality, friendly user interface.

Member benefits include:

To date, eight companies have joined the Club's first group, including six from Milton Park. They have been provided with SLIP software to enable dial-up from a PC to CISD's DECserver, a Web browser and a mailbox on CISD's POP server accessed from a local e-mail client. It is expected that the first meeting of this group will be held in November.

While the World Wide Web (WWW) has grown at a prodigious rate, one recurring criticism has been the poor performance when graphics are included in pages. A contributory factor to this is that all graphics have been stored, and therefore transmitted, as image files, which are inefficient for graphics that were originally line drawings such as most CAD output, maps, organisational diagrams and the like. With support from the Advisory Group on Computer Graphics (AGOCG), RAL-CGM, one of the more widely used Computer Graphics Metafile (CGM) interpreters, has now been extended so that it can act as a viewer for Web browsers such as Mosaic and Netscape, adding geometric graphics to WWW's capabilities.

The CGM (International Standard 8632) is the standard format for the storage and exchange of 2D graphics, and is widely supported by packages that generate 2D graphics. CGM provides a compact form for 2D graphics, reducing storage costs and transmission times; it supports a wide range of functions and is an integral component of CALS, thus ensuring it has US government support.

Work is in hand with the help of Anne Mumford, the AGOCG Graphics Coordinator, and Alan Francis of Page Description to register CGM as a MIME data type; this will allow CGMs to be used in applications (such as e-mail) that allow MIME extensions. Rutherford Appleton Laboratory (RAL) has raised the issue of registering CGM as an approved file type for WWW with W3C, the World Wide Web Consortium, and this is proceeding.

RAL-CGM, developed at RAL, is a comprehensive interpreter for all forms of Computer Graphics Metafile (IS 8632). It can interpret all the standard encodings, producing output on-screen (Windows, X-Windows, various other devices) or in a file (all CGM encodings and PostScript).

RAL-CGM has now been extended so that it can act as an external viewer under the Mosaic and Netscape Web browsers, and the code is available, without charge, from: ftp://ftp.cc.rl.ac.uk/pub/graphics/ralcgm
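To illustrate what a registered MIME type makes possible, the following minimal sketch maps a file extension to a content type and then to an external viewer, which is broadly how a browser decides to hand a file to a helper program. The type name image/cgm, the file names and the bare ralcgm invocation are assumptions made for illustration only; the registration described above was still in progress, and the real viewer options are documented with the software.

```python
import mimetypes

# Assumption: "image/cgm" stands in for the content type of CGM files;
# the article only says that registration is in progress.
CGM_TYPE = "image/cgm"

# Private lookup table so the example does not depend on system MIME files.
types = mimetypes.MimeTypes()
types.add_type(CGM_TYPE, ".cgm")

# Hypothetical table of external viewers, keyed by content type.
# "ralcgm" is the viewer named in the article; the bare invocation is a guess.
viewers = {CGM_TYPE: ["ralcgm"]}


def viewer_command(filename):
    """Return the argv a browser might use to display `filename`, or None."""
    content_type, _encoding = types.guess_type(filename)
    helper = viewers.get(content_type)
    return helper + [filename] if helper else None


print(viewer_command("ripples.cgm"))
```

A file whose extension has no entry in the table simply yields no viewer, which corresponds to a browser that has not been configured for that type.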

RAL are continuing to develop RAL-CGM, both as a stand-alone system and as a Web viewer, towards the more recent version of the CGM standard which adds many new elements and enhanced capability.

The closure of the IBM 3090 VM service has been advertised in previous issues. Many of the user services closed on 2 October 1995, and their users therefore have no further access to the VM service. Some service functions will continue to use the system until it is finally switched off in December.

Some users will wish to retrieve program and data files from VM minidisks after the end of the user service. As services were closed to users, the contents of each minidisk were copied into separate files in the Atlas Datastore. A new command called vmdisk has been installed on all Atlas Centre production Unix systems; it accesses this copy of the minidisk and can be used to copy CMS files to disk storage. The syntax of the command is:

vmdisk <vmuser> <minidisk> [options]

where:

If no options are supplied, the VM minidisk directory is listed. If -o is not specified, the -f value is used and the file is placed in the current directory. The default translation is ASCII with newlines added. Full details of the command may be found in the on-line man or HELP documentation.

The Datastore copies of VM minidisks are managed in the same way as Datastore virtual tapes and access to minidisk copies uses the same control mechanism.

Note: To read files from a minidisk owned by another userid, a suitable Datastore rule must be created. Users who need further assistance should contact the Applications and User Support Group either by sending an e-mail message to us@rl.ac.uk or by using the new World Wide Web User Query page advertised elsewhere. The section on 'Access control for virtual tapes in the Datastore' in the document 'Tape data in the Atlas Datastore' gives further details. This document can be accessed as file

$DOC/general/datastore.ps (PostScript) or $DOC/general/datastore.txt (plain text)

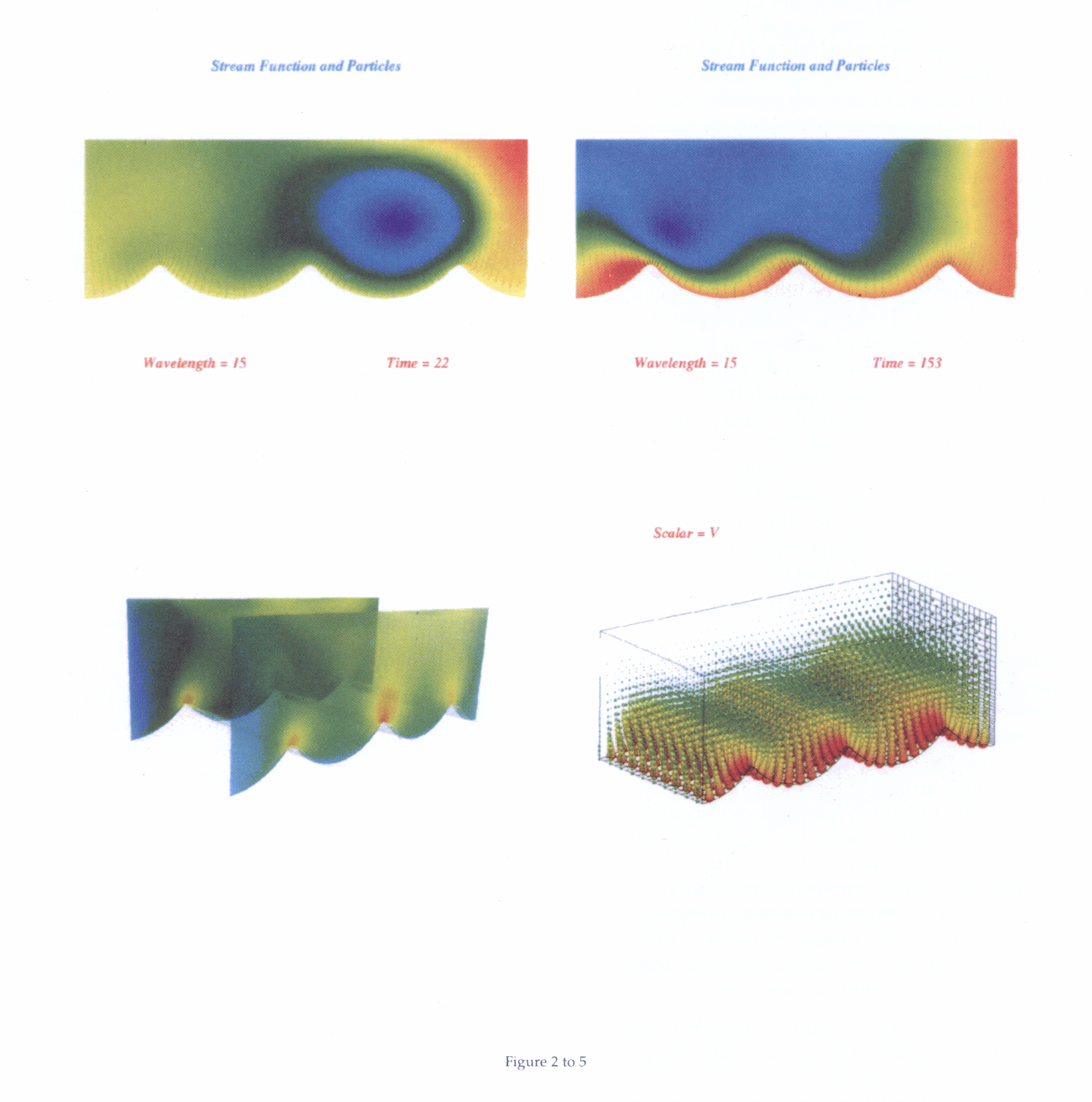

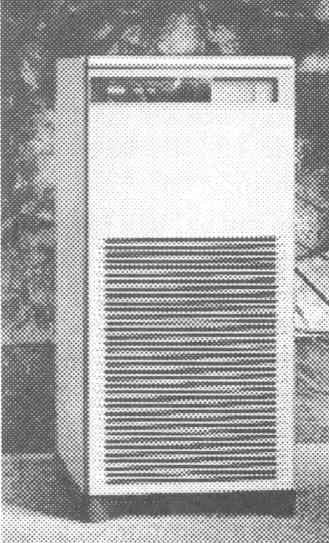

Two programmes of work at the Computing and Information Systems Department (CISD) are being drawn together to provide powerful facilities that CCLRC can hopefully exploit in a wide range of areas in the future. The first, on the numerical simulation of 3D turbulent flow, is based on an on-going programme in Computational Fluid Dynamics (CFD) at CISD and will exploit the new super scalar computing services. The second revolves around a programme on visualisation and the development of a virtual reality facility. The link between the two is the notoriously difficult area of the visualisation of highly turbulent unsteady flows. Currently the visualisation part of the programme can provide animated displays of the flow on video. Over the next few months this will be modified to provide stereoscopic viewing of the flow from an external view point. Finally it is intended to follow the flow in a fully Virtual Reality (VR) environment. It is anticipated that the visualisation of unsteady flows in a VR environment will have wide ranging applications.

In order to provide finely resolved data on unsteady 3D flows we have chosen a problem on turbulent oscillatory flows which forms a joint CISD/University/MAFF project. In particular, we are interested in the boundary layer interaction between the water and a rippled sedimentary layer forming in a river or sea bed.

Turbulent oscillatory boundary layers arise in a number of applications of particular interest. For example, the action of water waves can generate such boundary layers at the sea bed. In many situations the resulting bed stress is sufficient to cause the erosion of large quantities of marine sediment. An understanding of these boundary layers is therefore important for predictions of suspended sediment transport in coastal and estuarine environments where significant wave action is found.

Predictions relevant to coastal defence, navigation, waste disposal, fisheries, oil and gas extraction, power generation (from waves and tides), search and rescue operations, and the response to oil spills and other pollution incidents all fall within the boundaries of the present programme. In addition, there is long term concern about the impacts of climatic change and sea level rise on coastal defence. The recognition of the need to manage coastal and shelf seas requires a major extension of our existing capability to predict the complex interactions that take place between these processes.

The accurate modelling of near shore flows is important for the purpose of coastal protection and prediction of sediment transport. A knowledge of the effects of bed roughness on such flows is essential. Bed forms frequently occur over sandy beds in the near shore region and significantly affect flow resistance. Because of their importance a number of studies, both theoretical (computational) and experimental, have been devoted to investigating the flow over such bed forms.

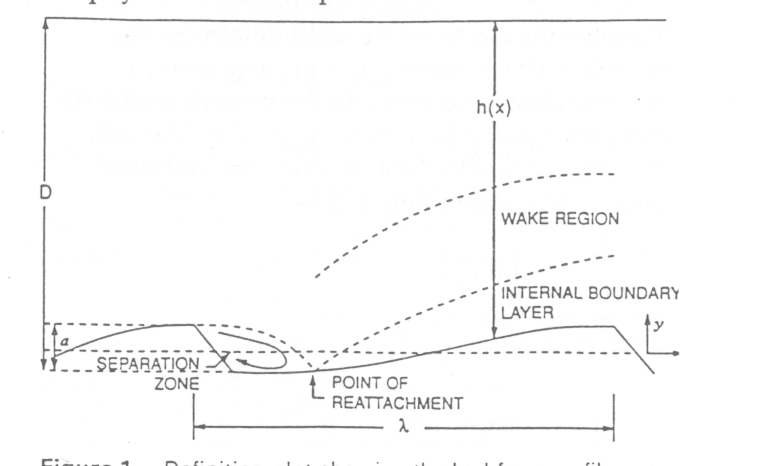

The fluid dynamics of turbulent flow over a rippled bed is not straightforward, but the flow can be regarded as consisting of a number of different spatial regimes which interact (Fig 1). A simple but illuminating description can be given, where the flow is divided into distinct regions in which simple models can be applied, then combined. Flow over the crest produces separation and a re-circulation region in the lee, together with wake-like flow downstream of, and above, the re-circulation zone. This wake interacts with the internal boundary layer that forms as the separated region re-attaches. Further, surface curvature is important, and introduces effects not found in flows over flat surfaces. Thus, these flows represent a severe test of how accurately turbulence models can capture the physics of complex flows.

Numerical calculation of the flow structure and the suspended sediment concentration over a rippled bed under surface wave motion is conducted using a Differential Second Moment (DSM) closure turbulence model in two and three dimensions. The computational procedure for the governing equations is based on the fully conservative, structured finite volume framework, within which the volumes are non-orthogonal and collocated such that all flow variables are stored at one and the same set of nodes. Particular emphasis is placed on solving a flow field with phase lag in space under wave motion, which is a feature lacking in oscillatory flow calculations. The bottom boundary condition for the calculation of suspended sediment transport is given by a condition in which the sediment flux is taken to be a function of the local and instantaneous bed stress. The other boundary conditions are based on the traditional wall function approach, with the important difference that they take into account all stages of the flow regime (hydraulically smooth, transitional or fully rough). The velocity components at the top boundary are given by the solution of second-order Stokes wave theory. Characteristic phenomena such as vortex formation and vortex lift-off over a rippled sand bed are simulated.

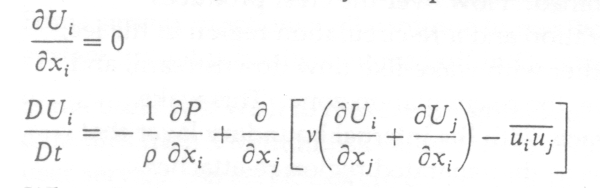

The governing equations for the flow are the time-averaged Navier-Stokes equations, which, for a constant density fluid, may be expressed as:

where Ui is the mean velocity component in the i-direction, P is the mean pressure, the last term is the Reynolds-averaged stress correlation, and ρ and ν are the fluid density and kinematic viscosity, respectively.
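The equations themselves are not reproduced in this text. As a hedged reconstruction rather than the authors' exact expression, the standard Reynolds-averaged continuity and momentum equations for a constant density fluid, written with the symbols defined above and with $\overline{u_i u_j}$ assumed as the notation for the Reynolds stress correlation, are:

$$
\frac{\partial U_i}{\partial x_i} = 0, \qquad
\frac{\partial U_i}{\partial t} + U_j \frac{\partial U_i}{\partial x_j}
  = -\frac{1}{\rho}\frac{\partial P}{\partial x_i}
  + \nu \frac{\partial^2 U_i}{\partial x_j \partial x_j}
  - \frac{\partial \overline{u_i u_j}}{\partial x_j}
$$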

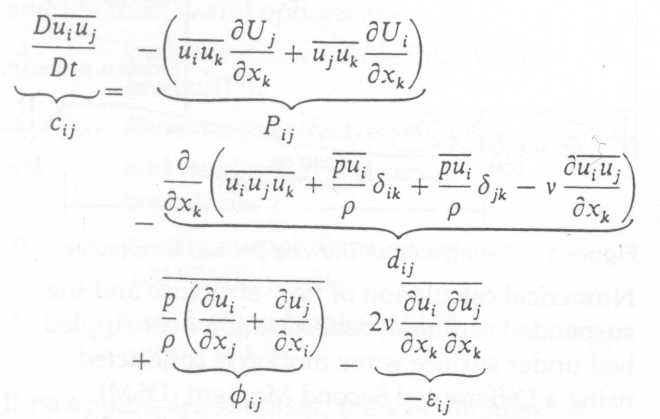

To solve the equation we must determine the turbulent shear stress a priori using a suitable closure scheme. In the present model the exact transport equation for turbulent shear stress is derived from the original Navier-Stokes equations and their time-averaged counterparts as:

An inspection of this equation shows that it represents a balance between stress convection Cij, production Pij, redistribution φij (by interaction between pressure and strain fluctuations), diffusion dij and viscous dissipation εij.
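The transport equation is not reproduced in this text. In symbolic form, using the term names listed above, the balance can be sketched as follows. The sign convention and the explicit forms of the convection and production terms are the standard ones for second-moment closures and are offered as an assumption about the authors' notation, with $\overline{u_i u_j}$ again denoting the Reynolds stress correlation:

$$
C_{ij} = P_{ij} + \phi_{ij} + d_{ij} - \varepsilon_{ij}, \qquad
C_{ij} = \frac{\partial \overline{u_i u_j}}{\partial t}
       + U_k \frac{\partial \overline{u_i u_j}}{\partial x_k}, \qquad
P_{ij} = -\left( \overline{u_i u_k}\frac{\partial U_j}{\partial x_k}
       + \overline{u_j u_k}\frac{\partial U_i}{\partial x_k} \right)
$$

In this form convection and production involve only the mean velocities and the stresses themselves, so they need no modelling; the redistribution, diffusion and dissipation terms are the ones that require a closure model.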

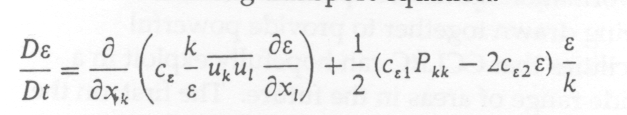

The closure model adopted here for these processes is based on the original Gibson-Launder proposal, whilst the dissipation rate is calculated from the following transport equation.

The computational procedure for the governing equations is based on the fully conservative, structured finite volume framework, within which the volumes are non-orthogonal and collocated such that all flow variables are stored at one and the same set of nodes. To ease the task of discretisation and to enhance the conservative property of the scheme, a Cartesian decomposition of the velocity field is used. The solution algorithm is iterative in nature, approaching the steady solutions with the aid of a pressure-correction scheme. Convection is approximated either with a higher order upstream weighted scheme, QUICK, or with the TVD-type MUSCL scheme. The latter is applied to the transport equations governing turbulence properties because the QUICK scheme has a tendency to provoke oscillations in regions of steep property variations.
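The difference between the two convection schemes can be seen in a small one-dimensional sketch. It is an illustration on a uniform grid with flow from left to right, not the finite volume code described above; the minmod limiter used for the MUSCL scheme is an assumption, since the article does not say which limiter is employed.

```python
def quick_face(phi_u, phi_c, phi_d):
    """QUICK face value between cells C and D on a uniform grid.

    Flow runs from C towards D; U is the cell upstream of C.
    """
    return 0.375 * phi_d + 0.75 * phi_c - 0.125 * phi_u


def minmod(a, b):
    """Return the smaller-magnitude slope, or zero at a local extremum."""
    if a * b <= 0.0:
        return 0.0
    return a if abs(a) < abs(b) else b


def muscl_face(phi_u, phi_c, phi_d):
    """MUSCL face value with a minmod-limited slope (limiter is an assumption)."""
    slope = minmod(phi_c - phi_u, phi_d - phi_c)
    return phi_c + 0.5 * slope


def face_values(scheme, profile):
    """Apply `scheme` to every interior cell of a 1D profile, flow left to right."""
    return [scheme(profile[i - 1], profile[i], profile[i + 1])
            for i in range(1, len(profile) - 1)]


# A steep front in a property that is physically bounded by 0 and 1.
step = [1.0, 1.0, 1.0, 0.0, 0.0, 0.0]
quick_faces = face_values(quick_face, step)
muscl_faces = face_values(muscl_face, step)
print("QUICK:", quick_faces)  # [1.0, 0.625, -0.125, 0.0]
print("MUSCL:", muscl_faces)  # [1.0, 1.0, 0.0, 0.0]
```

Just behind the front QUICK returns a face value of -0.125, outside the physical bounds, which is the kind of oscillation referred to above. The limited scheme stays within 0 and 1, at the price of falling back to first-order upwinding at the front itself. On smooth, linearly varying data both functions return the same face value.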

We have carried out preliminary studies on the Digital Turbolaser at the Atlas Centre. A fairly coarse mesh (20 × 30 × 82) has required around 75 iterations (for good convergence) per time step. Each time step takes approximately 17 minutes, so a reasonable simulation of around 180 time steps required some 50 hours of computational time.

However, a more realistic simulation will require a much finer computational mesh (say 3 to 4 times finer in each spatial direction). Assuming that convergence is equally efficient on this finer mesh and allowing for a similar number of time steps, a full run could take in excess of 500 hours. Since we are only using a single processor this will translate exactly to the Digital 8400 super scalar.
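The run times quoted above can be checked with a little arithmetic. The sketch below reproduces the coarse-mesh total and then scales it on two assumptions that are not stated in the article: that the cost of a time step grows in proportion to the number of mesh cells, and that the refinement is applied in all three directions.

```python
minutes_per_step = 17      # quoted cost of one time step on the coarse mesh
time_steps = 180           # quoted length of a reasonable simulation

coarse_hours = minutes_per_step * time_steps / 60


def fine_mesh_hours(refinement, dimensions=3):
    """Estimated run time if cost grows in proportion to the number of cells.

    Assumption: same iterations per step and same number of time steps,
    so the only change is the cell count, refinement ** dimensions.
    """
    return coarse_hours * refinement ** dimensions


print(f"coarse mesh: {coarse_hours:.0f} hours")   # coarse mesh: 51 hours
for factor in (3, 4):
    # prints 1377 hours for 3x and 3264 hours for 4x
    print(f"{factor}x finer: {fine_mesh_hours(factor):.0f} hours")
```

On these assumptions the fine-mesh estimate comes out well above the 500 hours quoted, so that figure is best read as a lower bound.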

The visualisation problems discussed here form a part of a larger programme on visualisation and VR, but by the very nature of the unsteady motion it is probably the most complex system to be investigated. In this presentation we shall limit ourselves to the discussion of CFD systems.

The characteristics of turbulent oscillatory flows are quite different from wall turbulence which is steady in the mean. In the accelerating phase, turbulence is triggered by shear instability near the wall but is suppressed and cannot develop. However, with the beginning of flow deceleration, turbulence grows explosively and violently and is maintained by a bursting type of motion. The turbulent-energy production becomes exceedingly high in the decelerating phase, but the turbulence is reduced to a very low level at the end of the decelerating phase and in the accelerating stage of flow reversal. The behaviour is thus more complex than for steady flows.

This can be seen in the 2D simulation of Fig 2 and Fig 3. There appears to be little difficulty in the visualisation of 2D flows. We can display a number of independent variables in turn and get the general feeling that we understand what is going on. Colour rendering and the introduction of particle tracers enhance this feeling, and time dependent motion is handled by creating a time sequence video, often with animation. This facility is potentially available at RAL for users of the computational facilities; the video can either be generated at RAL or the mpeg files exported so that the graphics can be displayed and manipulated on the remote user's machine.

The representation of 3D flows is much more difficult, but the remarks made above on the generation of video for time dependent flows apply equally well here. The real problem is choosing the parameters and viewing aspect that will reveal the properties that one wants to see. For example, arrows to represent 3D flows are notoriously difficult to interpret and iso-surfaces have only a limited use in displaying unsteady motion. We have adopted two approaches, neither of which is completely satisfactory. The first, Fig 4, is based on highlighting one plane, the remainder of the fluid being transparent. This plane can then be scanned in a spanwise direction a certain number of times for each time step.

There appears to be an optimum ratio between the spanwise motion and the time stepping to give a reasonable impression of the flow, although this is inherently very subjective. The second approach is to adopt a bubble representation of the flow, Fig 5, to mimic the time dependence of the flow. This approach again has strengths and weaknesses.

Although there is at RAL a fully developed video system capable of providing broadcast quality videos with animation and sound, the video results described here are produced on a local Silicon Graphics Indigo equipped with a frame grab and hold video card. These research quality videos can be produced in real time or immediately following a numerical simulation and form a routine part of the output facilities offered to users of the HPC facilities.

The next step in the visualisation programme is to provide stereoscopic viewing of the flow. Stereoscopic frame images can be produced on the AVS system and these images will be viewed through a pair of Crystal Eyes glasses (the stereo images being produced on the screen of a Silicon Graphics Crimson workstation). The eye point for the viewing will be external to the flow and this view point can be rotated in space. Massless tracer particles can be introduced into the flow, although at present this is confined to an individual time step since this facility on AVS is for steady-state motion only.

This part of the programme will lead on automatically to the visualisation of the flows using the virtual reality centre being set up at RAL. Currently the heart of this system is a Silicon Graphics Onyx station with head-up display and two camera projection system.

At this stage the sedimentary sand particle transport part of the CFD code will be utilised. The view point will be one of the sand test particles and this will be used to follow the flow from an internal view point. The output will be projected via two projectors onto a large screen. Different aspects of the flow can be investigated by choosing different test particles to follow the relevant part of the motion.

Although this part of the programme is being driven by the investigation of fluid motion it is intended that the facility be available to a much wider audience. Already, interest has been expressed from other fields as wide apart as space physics and the cooling flow in micro channels.