The front cover shows the IBM 3494 Tape Library Dataserver.

Mainframes and manually mounted tapes are dying, while distributed computing and managed data storage are developing rapidly. During 1993 there was a dramatic increase in the number and power of computer systems running applications that read and write data traditionally held on tape in the Atlas tape library and processed by central mainframes. The Atlas Unix services (excluding the Cray) and VMS services alone now offer over five times the scientific processing power of the IBM 3090 VM system, and a significant number of machines outside the Atlas Centre also access this data.

In order to provide a framework for giving these network-attached systems convenient and flexible access to large amounts of data, we developed the Atlas Data Store concept and the VTP (Virtual Tape Protocol), which have been described in FLAGSHIPs 23 and 13.

It became clear that our existing data handling hardware based around two StorageTek tape "silos" and the IBM 3090 system would not be able to handle the increased capacity requirements, and especially not the increased data throughput requirements, of the ever-increasing number of new systems requiring access to the data store.

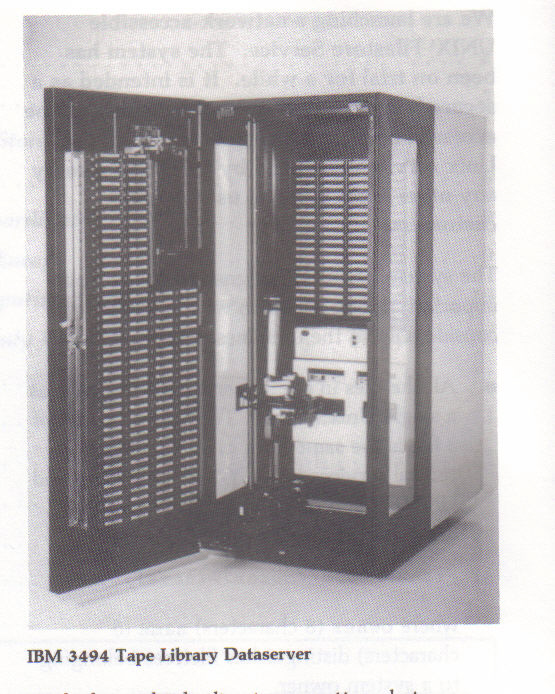

To address these problems and to provide a framework for future expansion, we have placed an order with IBM for an automatic tape cartridge robot system (an IBM 3494 Tape Library Dataserver), to be installed in February 1994.

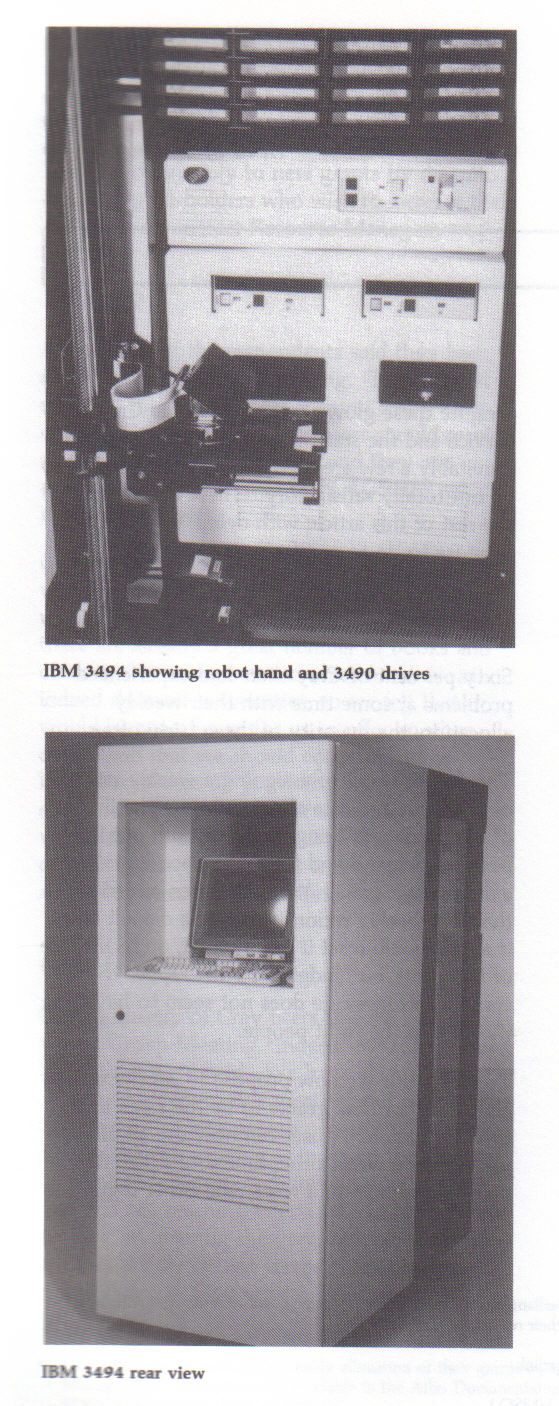

This is a linear tape robot system, unlike the annular one in our existing StorageTek equipment. A lightweight hand runs between two facing walls of tape cartridge shelving. Among the cartridge shelves are mouths of tape drives, so the hand with its horizontal and vertical motions can put any cartridge into any drive.

With the multiple direct connections between the IBM 3490C drives in this system and our existing IBM RS/6000-360 network interface processors, we expect to provide a much improved data service to all the new and existing Unix and VMS systems, without any incompatible changes to our existing application and user interfaces to the data store.

We expect this new hardware to go into service in the spring. By the autumn of 1994 it should be delivering aggregate data rates exceeding 10 Mbytes/sec to client systems across the network, from over 2 Tbytes of online data.

A key part of the plan is that this hardware will enable us to take advantage in a timely fashion of future developments in magnetic tape technology, in order to give even higher data rates to even more data.

The Atlas Data Store currently holds about 10 Tbytes of data, which includes the data in the old-style Tape Library at Atlas. All data used in the last six months or so is held in magnetic tape robots (soon to include the IBM 3494) and so is accessible without human intervention at any time with a delay of a couple of minutes or so, depending on the size of the file. Data not used for longer than six months or so is held on manually mounted (4mm DAT - Digital Audio Tape) media from which it is reloaded automatically when requested. A copy of all data is maintained daily in fire-resistant safes in a remote building.

We plan that by the end of 1995 the Atlas Data Store should have over 10 Tbytes of data online in automatic tape systems (with a larger pool of older data stored on manual media) and be able to sustain aggregate data rates of 20-30 Mbytes/sec.

Data integrity is very important in such a storage service. In order to guard against loss of a piece of data, all data is stored duplicated in two separate buildings; older data in fact has three copies. In order to guard against undetected corruption by faulty hardware (or software), redundancy check data is stored with the data from every file. This is enough to detect all single-bit errors and most multibit ones, as well as to detect that the data, although correct, is out of place or from the wrong file. From time to time such events actually have occurred, so it is just as well this checking is done.
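As a rough illustration of the checking described above, the following Python sketch stores a check value alongside each block of a file. The article does not say which redundancy check the Atlas Data Store uses. The CRC-32 from Python's `zlib`, the function names `block_check`, `seal_block` and `verify_block`, and the idea of folding a file identifier and block number into the check are all assumptions made for this example.

```python
import zlib


def block_check(file_id: str, block_no: int, data: bytes) -> int:
    """Return a CRC-32 covering the file identity, block position and data.

    Including the (hypothetical) file_id and block_no means that data which
    is intact but belongs to another file, or to another position in the
    same file, will normally fail the check as well.
    """
    header = f"{file_id}:{block_no}:".encode("utf-8")
    # zlib.crc32 accepts a starting value, so the header and the data are
    # checked as if they were one contiguous byte string.
    return zlib.crc32(data, zlib.crc32(header))


def seal_block(file_id: str, block_no: int, data: bytes) -> tuple[bytes, int]:
    """Pair a block with the check value to be stored next to it."""
    return data, block_check(file_id, block_no, data)


def verify_block(file_id: str, block_no: int, data: bytes, stored_check: int) -> bool:
    """Recompute the check on read and compare it with the stored value."""
    return block_check(file_id, block_no, data) == stored_check
```

A CRC of this kind detects every single-bit error and most multibit ones, which matches the behaviour described in the text. A production system might well use a different or stronger check.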

Data can be read and written from any machine with IP network connectivity to RAL, including the Atlas services (Cray Y-MP8, IBM VM/CMS, DEC VMS, DEC Unix farm). The application interface includes both a callable subroutine library and a utility to copy data to/from a local file (or pipe for Unix systems). So, existing client applications can usually use data in the Atlas Data Store without changes, while more demanding applications can make more sophisticated use of the facilities via the callable interface.

There have been several changes in the hardware configuration of the Atlas Data Store and its network servers in order to improve the performance of the system, and there are more such changes planned.

Versions of these Atlas Data Store client libraries and utilities are available for most flavours of Unix and VMS systems. For further information and for copies of the client software, please ring the Service Line or contact me at djr@rl.ac.uk.

We are launching a network-accessible UNIX Filestore Service. The system has been on trial for a while. It is intended as a secure home for disk-style data which can be accessed conveniently by users on all the Unix services managed by CCD and also by any other Unix systems used by our customers.

/rutherford/'<owner>-<name>'/...

where owner (8 characters) identifies the system owner and name (6 characters) distinguishes the filetrees belonging to that owner.

This constant naming convention across all systems means that shell scripts and other applications using files in this store can run unchanged both on central services (such as the Cray) and on private workstations.
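A minimal Python sketch of how a script might build such a pathname once and then use it unchanged on any system. The helper name `filetree_path`, the example owner and name values, the reading of the stated lengths as upper limits, and the assumption that the quote marks in the pattern are not literal characters are all made for illustration. The article gives only the general form of the path.

```python
from pathlib import PurePosixPath

OWNER_MAX = 8  # assumed upper limit on the owner part
NAME_MAX = 6   # assumed upper limit on the name part


def filetree_path(owner: str, name: str, *parts: str) -> PurePosixPath:
    """Build /rutherford/<owner>-<name>/... from its components."""
    if not 0 < len(owner) <= OWNER_MAX:
        raise ValueError(f"owner must be 1 to {OWNER_MAX} characters")
    if not 0 < len(name) <= NAME_MAX:
        raise ValueError(f"name must be 1 to {NAME_MAX} characters")
    return PurePosixPath("/rutherford", f"{owner}-{name}", *parts)


# Hypothetical owner, filetree name and file, for illustration only.
print(filetree_path("physics", "run01", "data", "events.dat"))
```

Because the path does not depend on the machine, the same call gives the same result on a central service and on a private workstation.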

To meet these requirements, we have implemented this server system on IBM RS/6000 computers with Seagate Elite-3 disks (at present 54 Gbytes) and FDDI connections. The basic storage unit is an AIX logical volume containing a single filetree, which has excellent integrity and resilience characteristics. The 'export list' of clients which can access this filetree is maintained separately per filetree. Space is allocated when a filetree is created; the filetree is guaranteed to be expandable to that size (irrespective of activity in other filetrees) but not beyond (without redefining the filetree). Arranging things in this way means that one user's filetree is very well insulated from other filetrees - problems are contained, filetrees can be backed up and restored independently etc.

For further information about the Filestore Service, to request new filetrees for your use, or for details of how to configure your own Unix systems to use this store with the standard pathnames, please ring the Service Line or contact me at djr@rl.ac.uk.

The post of National Supercomputing Facilities Information Coordinator is a new position created by the Supercomputing Management Committee of SERC. The National Supercomputing Facilities:

are managed by SERC on behalf of all the Research Councils. The management structure will change with the establishment of the new Research Councils in April 1994, but some form of Supercomputing Management Committee will continue!

I am involved with the management of supercomputing resources, the processing of grant applications for supercomputing time, and the preparation of reports on scientific results from the supercomputers.

Although I am based at the Atlas Centre, RAL, I also work regularly at Manchester Computer Centre and the University of London Computer Centre.

I hope to be able to act as a source of information on the National Supercomputing Facilities for supercomputer users (and potential users), the Supercomputing Management Committee (and its various Panels and Working Groups) and the Research Council staff.

The Science and Engineering Research Council, acting as agent for the Office of Science and Technology to provide High Performance Computing for all research councils, has chosen to purchase a Cray T3D supercomputer.

The system offers over five times the power of any existing UK academic facility. It will provide world-class high performance computing facilities to a small number of UK researchers who require the highest level of computational resources, in areas where high performance computing is an essential and integral component of their research programme.

The system to be purchased by SERC will have:

The system will run:

Extensive evaluation and benchmarking showed the T3D to have good performance across a wide range of scientific areas.

It will be installed in April 1994, and come into service during the summer.

An SERC press notice stated:

After a separate tendering exercise, Edinburgh Parallel Computing Centre (EPCC) at the University of Edinburgh has been chosen to provide a national service based on the new system. EPCC has an international reputation in parallel computing, and has put together an attractive package which will provide significant additional capacity at no cost to the academic community. The extra capacity will be shared between academic work and industrial exploitation based on EPCC's consultancy services. This is in line with the aims of the Government White Paper Realising our Potential: A Strategy for Science, Engineering and Technology.

The new system is being provided as part of a continuing programme of investment in national high performance computing facilities, and will be available to users from all the research councils' communities.

A small number of early users, for the period May 1994 to April 1995, will be selected from amongst those already holding large Research Council peer-reviewed supercomputing grants. It is hoped that a full peer-review mechanism will be in place by the end of 1994 for projects to start in April 1995.