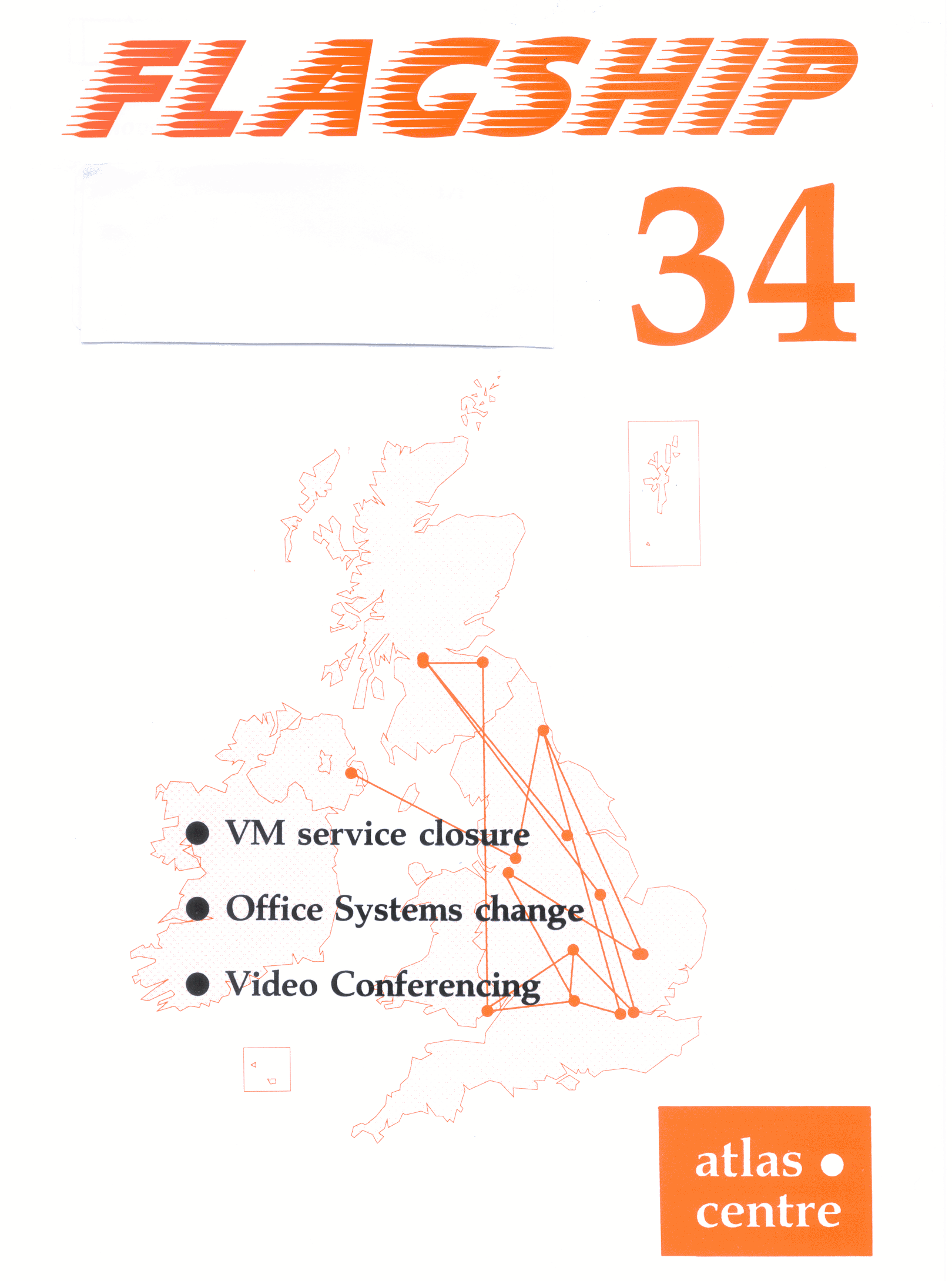

The cover shows a map of the UK marking the 14 sites on the ATM network, which provides 2 Mbit/sec communication for video conferencing.

The IBM 3090 is expected to be finally switched off towards the end of 1995. The precise date has yet to be decided and depends on the timescale for completing the transfer of office systems work from the IBM to other facilities, much of which is due to take place in the first half of 1995 (see the next article). Thereafter the IBM will remain in use only for as long as it takes to ensure the transfer is complete, and this is unlikely to extend beyond the end of 1995.

Most of the scientific workload from the IBM has already transferred to the new Unix and VMS services that have been described in recent issues of FLAGSHIP. Those science users who remain on the IBM should now make plans to complete their moves to the new services by the summer of 1995 so as to be ahead of the eventual closure date.

User Support will provide help and advice on migration and would be interested to hear if you have a requirement that might be better met by some other form of scalar service.

Early in 1983 the IBM Professional Office System, PROFS, was brought into service to provide office system facilities for RAL staff. Now known as OfficeVision/VM (OV/VM), it currently serves 900 registered users at RAL and 500 at Swindon Office. Although OV/VM provides an excellent service for a number of important office functions, it is now looking increasingly dated and cannot meet the current requirement to provide a graphical user interface to high-function applications within a LAN-based environment.

An alternative office system based on distributed servers and supporting principally PC and Mac clients is now being constructed, and this will become available for use by Daresbury Rutherford Appleton Laboratory (DRAL) staff during 1995. OV/VM users are expected to migrate to the new office system during the first half of 1995, and it is intended that the use of OV/VM by DRAL staff will cease by July 1995. Swindon Office are embarking on a similar migration on a broadly similar timescale.

Despite the railway signalmen's strike, the Atlas Cray User Meeting at the Royal Institution in London was well attended, with those from farther away being even more in evidence than usual.

Professor Richard Catlow of the Royal Institution gave the welcome and chairman's address in the famous Royal Institution Lecture Theatre, and Roger Evans presented a short Progress Report. The Y-MP8 has continued to give an excellent, reliable service with no hardware problems other than those triggered by external events such as power failures. Unicos has also been exceptionally reliable, the only incidents of any note being administrative file systems filling up. The continuing message is that the Y-MP8 is most reliable when left alone, and Cray have continued their agreement to take only eight of the twelve possible maintenance sessions in twelve months.

Job turnaround is acceptable. It fluctuates from queue to queue as particular users go through periods of increased activity, but trying to adjust the relative queue priorities on a dynamic basis was felt to be chasing a moving target and probably not worthwhile.

Roger Evans presented a paper on future policy for front-end access to the Y-MP8. It had been agreed at the previous User Meeting that VM/RQS would be discontinued at the end of 1994, since the software was unreliable and support costs were high. The VM/CMS service would remain for somewhat longer, and Cray users could continue to submit batch jobs via FTP. Those continuing to use VM/CMS should be aware that there is continuing pressure to reduce costs, and if funding is reduced it may be necessary to withdraw the VM/CMS service at short notice.

It is intended that the VAX/VMS front-end vmsfe and the Unix front-end unixfe continue in their present forms. The vmsfe service will run at minimum cost, with hardware and software frozen at current levels, but the batch job software on unixfe will probably change to Cray's NQE product for better compatibility with Unicos batch job submission.

In looking to the future, it would seem a better use of resources to replace the "front-end" philosophy with an "appropriate architecture" policy. It remains true that there are some things that Cray Y-MPs do not do well, such as running programs with large dynamic memory allocations (e.g. interactive graphics and symbolic algebra) or running scientific work with little vector content. It was proposed that the Atlas users should support a policy of eventually replacing "front-end" machines with a powerful superscalar computer that would provide a valuable scientific computing resource and complement the vector processing of the Y-MP.

John Gordon continued the theme of interactive use of supercomputers, noting that most users now submit Y-MP batch jobs from interactive sessions. John proposed a set of developments that could make the Y-MP more attractive as an interactive service. The current range of shells, namely Bourne, Korn (POSIX-compliant in Unicos 8.0) and C, could be extended to include shells such as tcsh and zsh if there were sufficient demand. With a greater variety of shells available it would be necessary to ensure consistent settings of the interactive environment between different shells; a set of scripts developed by the Particle Physics community would be used to control this.

Editors are always a matter of personal preference, and the meeting was asked to suggest new editors to provide, for instance, compatibility with the VM or VMS editors. Complicated shell scripts can be managed more easily with command processing languages such as REXX, Perl or Tcl/Tk, and user feedback was requested. Access to the Internet from the Y-MP was obviously important, and the Y-MP would be set up with elm and pine for e-mail and the tin program to interface to Usenet News. Graphical access to the World Wide Web was felt to be too much of an overhead for the Y-MP, and users were asked to provide for this from local machines.

John Gordon continued with a short presentation on the use of magnetic tapes and the Atlas Data Store. At the previous Atlas User Meeting it had been agreed that user magnetic tapes would be withdrawn by September 1994, but this had been delayed because the Virtual Tape Protocol (vtp) had not achieved high enough performance until recently. Users agreed that vtp performance was now adequate and that the tpmnt command could be removed at the end of October. In 1995 vtp will gain access to new high-density, high-speed tape drives when the IBM 3494 tape drives are upgraded.

Chris Plant described some of the new features of Unicos 8.0, which it is hoped to introduce in November 1994. The kernel has increased multi-threading for greater efficiency, and a default value of NCPUS=4 is more appropriate on a busy machine. Users who want to use more processors must remember to set NCPUS=8 themselves. Unicos and NQS have increased POSIX compliance, and NQS produces significantly more logging information. It will be possible to see all queued batch jobs and not just a user's own jobs, a long-standing request. NQS will once again support the qsub -A option to set the account (this was supported some time ago as a local modification but was withdrawn in favour of newacct to reduce support costs).
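For anyone unfamiliar with the variable, the fragment below sketches how a batch job script might ask for all eight Y-MP8 processors once the default drops to four. It is a minimal illustration only, assuming NCPUS is picked up from the environment by multitasked programs at run time; the account and script names in the comments are hypothetical placeholders.

```shell
#!/bin/sh
# Sketch of the start of a Unicos 8.0 batch job script.
# The 8.0 default is NCPUS=4; a job that wants all eight
# Y-MP8 processors must ask for them explicitly.
NCPUS=8
export NCPUS    # exported so that programs started by the job inherit it

# The account can once again be given at submission time, e.g.
#   qsub -A myaccount job.sh
# ("myaccount" and "job.sh" are hypothetical names.)
echo "This job will request up to $NCPUS CPUs"
```

Exporting the variable, rather than merely setting it, matters because only exported variables are passed on to the programs the script runs.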

On-line documentation for Unicos 8.0 is much improved, but the important new product Craydoc does not run on Unicos itself; it runs on a "front-end" machine! Craydoc is hypertext-based, so that links to related subjects are easily followed. It is hoped to introduce Craydoc on unixfe very shortly.

Some new application software had been procured since the last User Meeting: FLOW3D from AEA Harwell has at last appeared after protracted contractual negotiations, and Cray's Unichem package at version 2.3 can now be accessed from any X11-compatible Unix workstation, whereas previously it had required Silicon Graphics workstations at the server end.

Brian Davies prefaced this presentation by noting that the SMC and the ABRC sub-committee had been disbanded some months earlier and that the new EPSRC Advisory Body on High Performance Computing had not then held its first meeting. During this interim period it had been announced that there would be a review of the future needs for vector supercomputing facilities, and there had also been an announcement that the Convex supercomputing service at ULCC would not continue beyond the end of July 1996. The question of whether or not charging would be introduced for use of national high performance computing facilities had been left unresolved at the demise of the old committees and was being considered by the OST.

In these changing circumstances it was not yet clear how plans for the future would be developed, but nevertheless it would be useful to start to think about the kind of facilities the users of the Atlas Centre would like to have available, say two or three years from now. As regards large machines, Brian thought there were probably three types of option: an MPP that in some senses might by then be "better than a T3D"; a new and more powerful shared-memory vector supercomputer; or a large multiprocessing superscalar facility, which might be either shared-memory or distributed-memory. A superscalar facility, being based on the technology used in workstations, would need a large "headroom" in aggregate performance, memory or disk space compared with desktop machines to warrant its provision as a national service. These options, which were not necessarily mutually exclusive, would need to be accompanied by commensurate data storage and networking capabilities.

Brian asked the users to start thinking about this and to make any initial comments in the afternoon's discussion section.

The User Presentation of the day was from Dr Peter Voke, of the University of Surrey, who described some detailed Computational Fluid Dynamics work using the Large Eddy Simulation (LES) method. Many of the state-of-the-art problems in CFD are related to the treatment of fluid turbulence, and there have been two distinct historical approaches. The traditional approach of classical fluid dynamicists such as Reynolds and Kolmogorov is to regard turbulence as a statistical process, modelled in the manner of thermodynamics through the expectation values of various quantities. A more modern approach, typified by the computational modellers and first developed by Lorenz and Orszag, is to regard turbulence as essentially deterministic and computable given enough computing power. The numerical methods developed are very important, since a turbulent fluid is fractal in phase space and with present computing power only the simplest geometries can be computed by direct integration of the Navier-Stokes equations.

LES has developed as a bridge between these two approaches: the large-scale and most important eddies are resolved and computed, while statistical models are used to close the set of equations and model the sub-grid processes. The intention is that the sub-grid processes should be on such a small scale that they are describable by universal statistics. As an indication of the scale of simulation possible, it is hoped to model a single turbine blade using the LES method on the Edinburgh T3D.

Peter showed a video of an LES of the flow of coolant in a fast reactor. The coolant strikes a flat plate, and the pattern of turbulent eddies formed is important in predicting the thermal stresses on the walls. Experimental measurements of the correlation of temperature fluctuations had shown long-scale correlations that could not be explained using older CFD methods but had been successfully explained by LES.

After a buffet lunch in the Long Library, the afternoon session began with a short presentation on publicity issues from Alison Wall, who is part of the High Performance Computing Group at EPSRC; see HPC News elsewhere in this issue of FLAGSHIP for more details.

The general round table discussion began with operational issues relating to job throughput and turnaround, and all present were happy with the status quo. On the issue of interactive use of Unicos, users expressed a wish for MicroEMACS and a Digital EDT-like editor to be made available. The users present were happy for the tpmnt command, used to access magnetic tapes, to be withdrawn soon, as long as anyone with real problems would be given special consideration.

The issue of data retention was raised by Atlas staff since, as well as keeping secure copies of all current data, the Atlas Centre also currently keeps copies of old and deleted data. There is a small trickle of requests to restore non-current versions of files, sometimes many months old. It was agreed that, for efficiency reasons, data which was no longer current (i.e. the file had been changed or deleted) and was more than six months old would not be retained.
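The agreed rule is simple, but it is worth spelling out that current data is always kept and only superseded or deleted versions are subject to the six-month limit. The sketch below encodes the rule as a small shell function. The function name retention_decision is hypothetical; "six months" is taken as 183 days, and age is assumed to be measured from when a version stopped being current, since the meeting did not specify either detail.

```shell
#!/bin/sh
# Sketch of the agreed retention rule: a version that is no longer
# current (changed or deleted) AND more than six months old is not
# retained; everything else is kept.
# Assumptions: six months = 183 days; age is counted in whole days
# from when the version stopped being current.
RETENTION_DAYS=183

# retention_decision STATUS AGE_DAYS
#   STATUS is "current" or "noncurrent"; prints "keep" or "discard".
retention_decision() {
    if [ "$1" = "noncurrent" ] && [ "$2" -gt "$RETENTION_DAYS" ]; then
        echo discard
    else
        echo keep
    fi
}

retention_decision current 400      # prints keep: current data is always kept
retention_decision noncurrent 30    # prints keep: recent old version, still restorable
retention_decision noncurrent 200   # prints discard: old superseded version
```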

Users were generally keen to promote the concept of a powerful superscalar machine sitting alongside the Cray Y-MP, and pointed out the benefits of using the superscalar machine for data analysis and visualization. There is a residual need for a front-end machine, and such a superscalar computer should be closely integrated into the vector supercomputer service.

On the issue of future directions for the Atlas Centre, a strong feeling existed that new machines should be procured with a view to compatibility and migration from existing services, whether the Y-MP or the T3D. Benchmarking any new machine would be extremely important and should be raised at the next User Meeting. It was also considered important that the new Advisory Body arrangements should contain effective ways for users' views to be expressed.

Professor Catlow closed the meeting with a remarkably prescient quotation from 1870 by John Tyndall, then director of the Royal Institution:

An intellect the same in kind as our own would, if only sufficiently expanded, be able to follow the whole process from beginning to end. It would see every molecule placed in its position by the specific attractions and repulsions exerted between it and other molecules, and the whole process and its consummation being an instance of the play of molecular force.

It is hoped to introduce UNICOS 8.0 in November 1994. There are various changes that have been made in the new release that could mean that user code will have to be changed. For a more detailed account, please see the news file on the subject (a PostScript version is in /usr/local/doc/unicos/unicos80.ps) or the docview document unicos80.ro.

NQS will use the explain catalogue system for any error messages. It now sends back an additional file that contains information from the master NQS daemon that pertains to the job. This log can be used to track any problems the job may have had. This feature can be switched off from the job.

The X Window System will be at Release 5 (X11R5), rather than Release 4 as on the UNICOS 7.0 system. This should be upwardly compatible. The Cray tools now all use the same "CrayLook" interface (similar to Motif). Docview now has an X interface.

There are changes to the I/O, C and Fortran libraries and additions to the data conversion routines. The C compiler now does ANSI function prototyping, which may cause some codes that compiled under 7.0 to fail to compile now. The Fortran compiler is stricter at language checking which, again, could cause problems.

The UNICOS 8.0 release is POSIX compliant. This means that there are many changes to the commands that may cause scripts to stop working. The default shell at 8.0 will be the POSIX-defined shell, similar to the Korn shell at 7.0. To change to the Korn shell before the move to 8.0, issue the command:

chsh user-id /bin/ksh
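After issuing chsh it is worth checking that the change has taken effect; logging in again and typing echo $SHELL is the simplest check. The sketch below instead reads the login-shell field (the seventh field) of a user's passwd entry. It assumes the account is listed in the local /etc/passwd file, and the function name login_shell is hypothetical.

```shell
#!/bin/sh
# Print the login shell recorded for a user in a passwd-format file.
#   $1: user name
#   $2: file to read (defaults to /etc/passwd)
# Fields are colon-separated; field 7 is the login shell.
login_shell() {
    awk -F: -v u="$1" '$1 == u { print $7; exit }' "${2:-/etc/passwd}"
}

# Example: is the current user's login shell already the Korn shell?
if [ "$(login_shell "$(id -un)")" = "/bin/ksh" ]; then
    echo "Login shell is already /bin/ksh"
else
    echo "Login shell is not /bin/ksh"
fi
```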

The changes to the operating system internals should be transparent to users: the kernel is now multi-threaded, which should reduce system overheads and improve job turnaround.

Video conferencing is rapidly becoming an accepted part of business life, spreading in a way reminiscent of the take-up of fax machines. Corporate systems (linking meeting rooms) and personal systems (linking desktops) are together helping to improve communications between people and save debilitating travel. RAL and DL have embraced both types of system and several groups have already used video conferencing systems at the laboratories for committee and project meetings. This article explains the systems, their different capabilities and how the meeting room systems can be used by DRAL staff.

The most obvious distinction between systems is whether you go to the facility (meeting room style) or the equipment is in your normal workplace (desktop style). There is a half-way house, where all the equipment is on a trolley which is moved to where you are going to use it (roll-around style).

While meeting room systems can be ten times the cost of desktop ones, the usage of a meeting room system (number of people × number of hours) is likely to be more than ten times higher than that of a desktop system, so both systems have their benefits.
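The cost comparison above can be put as cost per person-hour. As a worked illustration, write $C$ and $U$ for the cost and usage (in person-hours) of a desktop system, and take the meeting room system to cost $10C$ with usage $kU$, where the text suggests $k > 10$:

$$\frac{10C}{kU} = \frac{10}{k}\cdot\frac{C}{U} < \frac{C}{U} \qquad \text{for } k > 10$$

On these assumptions the meeting room system works out cheaper per person-hour, while the desktop system offers a lower outlay and the convenience of staying at your own workplace.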

Naturally, you need a connection to the other site (or sites). There are three main networks for the long-haul part of the connection:

Private Circuit Network: this includes systems like Kilostream, Megastream and the SuperJANET networks;

Public Circuit Network: this effectively means ISDN;

Packet Network: this effectively means the Internet.

In the past, long-duration calls (such as are common with video conferencing) would have been considered uneconomic, but a standard (2 x 128 kbit) ISDN link to the US now costs about £100 per hour, which makes such usage very attractive compared with transatlantic flights.

With a circuit-based system, the flow of information is effectively guaranteed, so the sound and picture should never break up. By contrast, a packet-based system may provide a good picture one minute and a very poor one the next because of other users on the network. Audio, which needs less bandwidth, may be less affected than video but can still be interrupted by heavy network traffic.

Most of the networks will be familiar to readers of FLAGSHIP, but the ATM network may be less so. It is a 140 Mbit/sec network joining 14 UK academic sites: Belfast, Birmingham, Cambridge, Cardiff (linking on to the Welsh Network), DL, Edinburgh, Glasgow, London Imperial, London UCL (linking on to Livenet 2), Leeds, Manchester, Newcastle, Nottingham and RAL. The network consists of 14 ATM switches connected as shown in the first diagram.

DRAL have several requirements for a video conferencing system, which cannot all be addressed by any one solution.

1. There is a strong requirement for a RAL-DL link that provides general liaison between the two laboratories.

2. Many projects now depend on (international) video conferencing for project control.

3. There are frequent occasions when a seminar at RAL or DL can usefully be relayed to the other laboratory.

This does not exhaust the list of requirements (or even the configurations that have already been provided) but provides an indication of the main requirements.

The major work so far has been towards the meeting room style of working, since the RAL-DL liaison function was regarded as a particularly urgent requirement. For desktop working, progress has been made in several departments at RAL and DL on access to the MBone (Multicast backBONE) over the Internet, and also on desktop video using PCs and Macs.

The rest of this article describes the progress towards meeting room style working over ATM and ISDN.

For maximum flexibility, several rooms have been provided with links back to a central video conferencing control centre, where the codec (CODer/DECoder) and network interface equipment is housed. At RAL the Atlas Colloquium (R27), UKERNA boardroom (R31) and Atlas Video Facility are connected, with the main RAL Lecture Theatre (R22) also accessible and other rooms being considered (in R1 and R71). At DL the main Lecture Theatre has been connected, and plans to connect a permanent studio in the NSF tower have been approved.

As extra systems (such as ISDN) become operational, and as new rooms get connected, this structure allows all the rooms to make use of all the systems.

CCD is currently acquiring suitable audio-visual equipment for the Colloquium and recommending similar equipment for the other RAL rooms. DL have already acquired some of the equipment required for their room.

The 14 sites on the ATM network (which provides 2 Mbit/sec communication for video conferencing) are all connected to Multipoint Control Units (MCUs) located at London, Cardiff and Edinburgh, as shown in the second diagram. These units extend the systems from merely point-to-point communication to multisite conferences. Fully interactive meetings with up to six sites have been run, as well as a large number of two site meetings. For multisite meetings, picture switching is automatic, according to the site currently speaking (this works better than you might guess!).
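The switching algorithm used by the MCUs is not described here. As a hedged illustration of the idea only, the simplest rule is to broadcast the picture from the site with the highest current audio level. The function name select_speaker and the "SITE LEVEL" input format are hypothetical, and a practical MCU would probably also need a threshold and a minimum hold time so that the picture does not flicker between sites; those refinements are omitted.

```shell
#!/bin/sh
# Minimal sketch of voice-activated picture switching.
# Input: one line per site, "SITE LEVEL", where LEVEL is the site's
# current audio level on any numeric scale.
# Output: the site to show, i.e. the loudest one (first wins on a tie).
select_speaker() {
    awk 'NR == 1 || $2 > max { max = $2; site = $1 } END { print site }'
}

# Hypothetical audio levels for a three-site meeting; prints DL.
printf 'RAL 12\nDL 47\nEdinburgh 30\n' | select_speaker
```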

DRAL staff can organize meetings using the RAL and DL sites and any of the other sites on the ATM network. To use the system, you need to contact your ATM network site person; at RAL this is Chris Osland (ext 5733, e-mail VIDEOCON@ib.rl.ac.uk); at DL this is Network Support (ext 3379 or bleep 200, e-mail nets@dl.ac.uk). They will need to know which sites you are going to link to and which rooms (or what capacity rooms) are needed at each site.

Thanks to Scott Currie, University of Edinburgh Computing Services, for the original ATM Network diagram.

For several years we have been running courses in the Atlas Centre on computing topics. Office systems courses have been held in a rather small and cramped room in R27 and other courses in the Colloquium room. Although the latter is much more spacious, it is used for many other purposes and running a course has involved a lot of work in moving PCs and other equipment in and out each time, not to mention all the cabling that had to be done.

Now all that has changed. The old computer room in R30 has been converted into a permanent Training Room. It already had air-conditioning which has been retained to provide a comfortable temperature and airy atmosphere. It has a false floor which allows most of the cables to run under the floor instead of trailing across it. So it was an ideal room to use for training purposes. We have had floor boxes installed to provide power points and network connections. The photographs were taken during the first C programming course in the new room.

As well as the computing equipment, which includes PCs and Sun workstations, we have provided flip charts, a whiteboard, an overhead projector and a projection screen. We are currently using an LCD panel for projection from a PC, but are considering the purchase of an SVGA projector when these become available later this year.

All computing courses will now be held in this room. We will be providing training on all the products which form part of DRAL's new office system as well as continuing with the other popular courses including the introductory and advanced courses on Unix and C programming. In fact, we are willing to arrange courses on any computing topics for which there is sufficient demand. It can be more cost effective to run a course on site, rather than attending a public course, for as few as three people. Details of courses currently scheduled and others which are planned are publicised in FLAGSHIP and also electronically via the news systems. The schedule for the R30 Training Room is maintained in the OV/VM diary RALTRAIN.